The previous blog post, Synchronization and Communication between UCOS Tasks, introduced the inter-task synchronization mechanisms (semaphores, mutexes, message mailboxes and message queues) and how inter-task communication is implemented. This post looks at how RT-Thread implements inter-thread synchronization and communication object management, focusing on the similarities to and differences from UCOS.

1. IPC Object Management

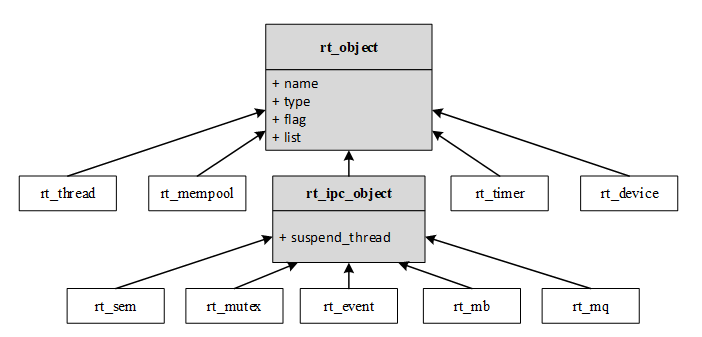

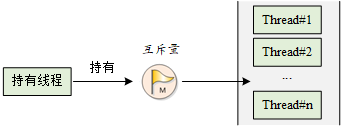

Reviewing the derivation and inheritance of kernel objects:

1.1 IPC Object Control Block

Earlier posts described the objects that inherit directly from the base object rt_object: the timer object rt_timer, the memory pool object rt_mempool and the thread object rt_thread. This post covers synchronization and communication between threads. The inter-thread synchronization objects (rt_semaphore, rt_mutex, rt_event) and the inter-thread communication objects (rt_mailbox, rt_messagequeue) all inherit directly from rt_ipc_object, which in turn inherits from the base object rt_object. Before introducing the synchronization and communication objects, we first look at the derived IPC object rt_ipc_object, whose data structure is as follows:

// rt-thread-4.0.1\include\rtdef.h

/**

* Base structure of IPC object

*/

struct rt_ipc_object

{

struct rt_object parent; /**< inherit from rt_object */

rt_list_t suspend_thread; /**< threads pended on this resource */

};

/**

* IPC flags and control command definitions

*/

#define RT_IPC_FLAG_FIFO 0x00 /**< FIFOed IPC. @ref IPC. */

#define RT_IPC_FLAG_PRIO 0x01 /**< PRIOed IPC. @ref IPC. */

#define RT_IPC_CMD_UNKNOWN 0x00 /**< unknown IPC command */

#define RT_IPC_CMD_RESET 0x01 /**< reset IPC object */

#define RT_WAITING_FOREVER -1 /**< Block forever until get resource. */

#define RT_WAITING_NO 0 /**< Non-block. */

rt_ipc_object inherits from the base object rt_object, and rt_ipc_object.parent.flag identifies how the concrete IPC object handles its waiting threads. Based on the macro definitions above, it can be summarized as follows:

| Flag bit | Value 0 | Value 1 | Meaning |
|---|---|---|---|
| bit0 | RT_IPC_FLAG_FIFO: waiting threads are served first in, first out | RT_IPC_FLAG_PRIO: waiting threads are served by priority; the highest-priority waiter is served first | IPC wait mode |

The other members that rt_ipc_object inherits from rt_object take values that depend on the concrete object type; they are described together with each object type below.
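To make the inheritance chain concrete, here is a minimal sketch of how C "inheritance by embedding" works. The structs obj, ipc_obj and sem and the CLASS_SEMAPHORE value are hypothetical, trimmed-down stand-ins for rt_object, rt_ipc_object and rt_semaphore, not the real definitions. Because each parent is the first member of its child, a pointer to the derived object can be converted to a pointer to its base, which lets generic object code handle every IPC type.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Illustrative type code only; not necessarily RT-Thread's enum value. */
enum { CLASS_SEMAPHORE = 2 };

/* Hypothetical stand-in for rt_object: fields every kernel object shares. */
struct obj {
    char name[8];
    unsigned char type;
};

/* Hypothetical stand-in for rt_ipc_object: base object first, then the
 * IPC-specific part (a counter instead of the suspend_thread list). */
struct ipc_obj {
    struct obj parent;
    int suspended;
};

/* Hypothetical stand-in for rt_semaphore. */
struct sem {
    struct ipc_obj parent;
    unsigned short value;
};

/* Generic code only sees the base type. */
static unsigned char object_type(const struct obj *o)
{
    return o->type;
}

static void sem_init(struct sem *s, const char *name, unsigned short value)
{
    assert(s != NULL && name != NULL);
    /* copy the name, always leaving a terminating '\0' */
    strncpy(s->parent.parent.name, name, sizeof s->parent.parent.name - 1);
    s->parent.parent.name[sizeof s->parent.parent.name - 1] = '\0';
    s->parent.parent.type = CLASS_SEMAPHORE;
    s->parent.suspended = 0;
    s->value = value;
}
```

In this sketch, casting `&s` to `struct obj *` yields the address of `s.parent.parent`, so a helper like object_type works on a semaphore without knowing its concrete type.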

The only private member of rt_ipc_object is rt_ipc_object.suspend_thread. The threads suspended while waiting on the object are linked into this list, which forms the object's wait queue. The order of the list depends on the flag above: if RT_IPC_FLAG_FIFO is set, suspended threads are queued first in, first out; if RT_IPC_FLAG_PRIO is set, they are sorted by priority.

1.2 IPC Object Interface Functions

Because the derived object rt_ipc_object has only one private member, suspend_thread, its operations are mainly list operations such as initializing the list and inserting or removing nodes. The IPC object initialization function and thread suspend function are implemented as follows:

// rt-thread-4.0.1\src\ipc.c

/**

* This function will initialize an IPC object

*

* @param ipc the IPC object

*

* @return the operation status, RT_EOK on successful

*/

rt_inline rt_err_t rt_ipc_object_init(struct rt_ipc_object *ipc)

{

/* init ipc object */

rt_list_init(&(ipc->suspend_thread));

return RT_EOK;

}

/**

* This function will suspend a thread to a specified list. IPC object or some

* double-queue object (mailbox etc.) contains this kind of list.

*

* @param list the IPC suspended thread list

* @param thread the thread object to be suspended

* @param flag the IPC object flag,

* which shall be RT_IPC_FLAG_FIFO/RT_IPC_FLAG_PRIO.

*

* @return the operation status, RT_EOK on successful

*/

rt_inline rt_err_t rt_ipc_list_suspend(rt_list_t *list,

struct rt_thread *thread,

rt_uint8_t flag)

{

/* suspend thread */

rt_thread_suspend(thread);

switch (flag)

{

case RT_IPC_FLAG_FIFO:

rt_list_insert_before(list, &(thread->tlist));

break;

case RT_IPC_FLAG_PRIO:

{

struct rt_list_node *n;

struct rt_thread *sthread;

/* find a suitable position */

for (n = list->next; n != list; n = n->next)

{

sthread = rt_list_entry(n, struct rt_thread, tlist);

/* find out */

if (thread->current_priority < sthread->current_priority)

{

/* insert this thread before the sthread */

rt_list_insert_before(&(sthread->tlist), &(thread->tlist));

break;

}

}

/*

* not found a suitable position,

* append to the end of suspend_thread list

*/

if (n == list)

rt_list_insert_before(list, &(thread->tlist));

}

break;

}

return RT_EOK;

}

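The flag parameter of rt_ipc_list_suspend is exactly the IPC wait mode described above. With RT_IPC_FLAG_FIFO, the thread is simply appended to the tail of the list, which is simple and fast. With RT_IPC_FLAG_PRIO, the list is scanned and the thread is inserted before the first waiter with a lower priority (a numerically larger current_priority), so the highest-priority waiter is always at the head and threads of equal priority keep their arrival order; this costs a scan proportional to the number of waiters but gives better real-time behavior. Users can choose the flag value according to their needs.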
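The two ordering policies can be checked outside the kernel. The sketch below is a hypothetical, stand-alone model: struct list_node and struct fake_thread stand in for rt_list_t and rt_thread, and wait_list_suspend reproduces only the insertion logic of rt_ipc_list_suspend (the call to rt_thread_suspend is left out). As in RT-Thread, a smaller current_priority number means a higher priority.

```c
#include <assert.h>
#include <stddef.h>

/* Same values as RT_IPC_FLAG_FIFO / RT_IPC_FLAG_PRIO. */
enum { FLAG_FIFO = 0x00, FLAG_PRIO = 0x01 };

/* Hypothetical stand-in for rt_list_t: circular doubly linked list node. */
struct list_node {
    struct list_node *next, *prev;
};

/* Hypothetical stand-in for rt_thread (only the fields we need). */
struct fake_thread {
    int id;
    unsigned char current_priority;  /* smaller number = higher priority */
    struct list_node tlist;          /* links the thread into a wait list */
};

static void list_init(struct list_node *l) { l->next = l->prev = l; }

/* Insert n just before l; with l == list head this appends at the tail. */
static void list_insert_before(struct list_node *l, struct list_node *n)
{
    l->prev->next = n;
    n->prev = l->prev;
    l->prev = n;
    n->next = l;
}

/* Recover the thread from its embedded list node (like rt_list_entry). */
static struct fake_thread *thread_of(struct list_node *n)
{
    return (struct fake_thread *)((char *)n - offsetof(struct fake_thread, tlist));
}

/* Ordering logic of rt_ipc_list_suspend, without actually suspending. */
static void wait_list_suspend(struct list_node *list, struct fake_thread *t, int flag)
{
    assert(flag == FLAG_FIFO || flag == FLAG_PRIO);
    if (flag == FLAG_PRIO) {
        for (struct list_node *n = list->next; n != list; n = n->next) {
            if (t->current_priority < thread_of(n)->current_priority) {
                list_insert_before(n, &t->tlist);  /* ahead of first lower-priority waiter */
                return;
            }
        }
    }
    list_insert_before(list, &t->tlist);           /* FIFO, or no lower-priority waiter */
}

/* Copy waiter ids in list order into out[]; returns how many were copied. */
static int wait_list_ids(struct list_node *list, int *out, int max)
{
    int k = 0;
    for (struct list_node *n = list->next; n != list && k < max; n = n->next)
        out[k++] = thread_of(n)->id;
    return k;
}
```

Suspending threads with priorities 20, 10, 20, 5 under FLAG_PRIO yields the order 5, 10, 20, 20 (the two priority-20 threads stay in arrival order), while FLAG_FIFO keeps pure arrival order regardless of priority.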

When an IPC object is deleted or detached, all threads suspended on it must be woken. So besides rt_ipc_list_resume, which wakes only the first thread in the list, ipc.c also provides rt_ipc_list_resume_all to wake every suspended thread. The implementation is as follows:

// rt-thread-4.0.1\src\ipc.c

/**

* This function will resume the first thread in the list of a IPC object:

* - remove the thread from suspend queue of IPC object

* - put the thread into system ready queue

*

* @param list the thread list

*

* @return the operation status, RT_EOK on successful

*/

rt_inline rt_err_t rt_ipc_list_resume(rt_list_t *list)

{

struct rt_thread *thread;

/* get thread entry */

thread = rt_list_entry(list->next, struct rt_thread, tlist);

RT_DEBUG_LOG(RT_DEBUG_IPC, ("resume thread:%s\n", thread->name));

/* resume it */

rt_thread_resume(thread);

return RT_EOK;

}

/**

* This function will resume all suspended threads in a list, including

* suspend list of IPC object and private list of mailbox etc.

*

* @param list of the threads to resume

*

* @return the operation status, RT_EOK on successful

*/

rt_inline rt_err_t rt_ipc_list_resume_all(rt_list_t *list)

{

struct rt_thread *thread;

register rt_ubase_t temp;

/* wakeup all suspend threads */

while (!rt_list_isempty(list))

{

/* disable interrupt */

temp = rt_hw_interrupt_disable();

/* get next suspend thread */

thread = rt_list_entry(list->next, struct rt_thread, tlist);

/* set error code to RT_ERROR */

thread->error = -RT_ERROR;

/*

* resume thread

* In rt_thread_resume function, it will remove current thread from

* suspend list

*/

rt_thread_resume(thread);

/* enable interrupt */

rt_hw_interrupt_enable(temp);

}

return RT_EOK;

}

2. Inter-Thread Synchronization Object Management

Synchronization means executing in a predetermined order. Thread synchronization controls the execution order of threads through specific mechanisms (such as mutexes, event objects and critical sections); in other words, synchronization establishes the ordering relationships between threads. Without synchronization, threads would run in an unpredictable order relative to one another.

There are many ways to synchronize threads; the core idea is that only one thread (or one class of threads) may run inside a critical section at a time. There are several ways to enter and exit a critical section:

- Call rt_hw_interrupt_disable() to enter the critical section and rt_hw_interrupt_enable() to exit it;

- Call rt_enter_critical() to enter the critical section and rt_exit_critical() to exit it. This locks the scheduler so that no thread switch occurs, while interrupts remain enabled.
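The scheduler-lock approach is nestable: a counter records how deeply the scheduler is locked, and switching resumes only when the outermost exit brings it back to zero. The sketch below models that idea with hypothetical names (sched_lock_nest, enter_critical, exit_critical, schedule); it illustrates the concept rather than reproducing RT-Thread's implementation, and it does not touch real interrupts.

```c
#include <assert.h>

/* Hypothetical model of a nestable scheduler lock; not RT-Thread source. */
static int sched_lock_nest;  /* > 0 while thread switching is locked */
static int switches;         /* how many times the scheduler actually ran */

/* Stand-in for the scheduler: does nothing while the lock is held. */
static void schedule(void)
{
    if (sched_lock_nest == 0)
        switches++;
}

static void enter_critical(void)
{
    sched_lock_nest++;
}

static void exit_critical(void)
{
    assert(sched_lock_nest > 0);        /* an unbalanced exit is a bug */
    if (--sched_lock_nest == 0)
        schedule();                     /* outermost exit: allow a switch */
}
```

Two nested enter_critical calls followed by one exit_critical still leave the scheduler locked; only the second exit lets schedule run.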

2.1 Semaphore Object Management

A semaphore is a lightweight kernel object used to solve synchronization problems between threads. Threads acquire and release it to achieve synchronization or mutual exclusion.

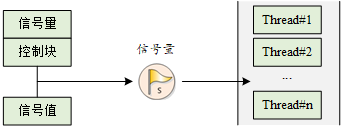

As the diagram shows, each semaphore object has a semaphore value and a thread wait queue. The value is the number of available instances (resources) of the semaphore: a value of 5 means five instances are available. When the value is zero, a thread that requests the semaphore is suspended on the semaphore's wait queue until an instance becomes available.
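The take/release behavior described above can be modeled in a few lines. This is a hypothetical single-threaded sketch: struct sim_sem, the SIM_* codes and the waiters counter (standing in for the suspend list) are our own names. A timeout of 0 is treated like RT_WAITING_NO; any other timeout is represented simply by counting the caller as suspended. When a thread is waiting, release hands the instance straight to it instead of raising the value. The assert on release is our own guard for the 16-bit value.

```c
#include <assert.h>

/* Hypothetical return codes for this sketch only. */
enum { SIM_OK = 0, SIM_SUSPENDED = 1, SIM_TIMEOUT = -2 };

/* Hypothetical single-threaded model of a counting semaphore. */
struct sim_sem {
    unsigned short value;  /* free instances, like rt_semaphore.value */
    int waiters;           /* stands in for the suspend_thread list */
};

static int sim_sem_take(struct sim_sem *s, int timeout)
{
    if (s->value > 0) {    /* an instance is available: take it */
        s->value--;
        return SIM_OK;
    }
    if (timeout == 0)      /* like RT_WAITING_NO: give up immediately */
        return SIM_TIMEOUT;
    s->waiters++;          /* otherwise the caller would be suspended */
    return SIM_SUSPENDED;
}

static void sim_sem_release(struct sim_sem *s)
{
    if (s->waiters > 0) {
        s->waiters--;      /* hand the instance straight to a waiter */
    } else {
        assert(s->value < 0xFFFFu);  /* our guard: 16-bit value */
        s->value++;        /* nobody waiting: one more free instance */
    }
}
```

Starting from a value of 2, two takes succeed, a third non-blocking take times out, and a blocking take is counted as suspended; the next release wakes that waiter and leaves the value at 0.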

- Semaphore Control Block

In RT-Thread, the semaphore control block is the data structure the operating system uses to manage a semaphore, represented by struct rt_semaphore. The type rt_sem_t is the semaphore handle; in C it is a pointer to the semaphore control block. The semaphore control block structure is defined as follows:

// rt-thread-4.0.1\include\rtdef.h

/**

* Semaphore structure

*/

struct rt_semaphore

{

struct rt_ipc_object parent; /**< inherit from ipc_object */

rt_uint16_t value; /**< value of semaphore. */

rt_uint16_t reserved; /**< reserved field */

};

typedef struct rt_semaphore *rt_sem_t;

The rt_semaphore object is derived from rt_ipc_object. Its rt_semaphore.parent.parent.type is RT_Object_Class_Semaphore, and rt_semaphore.parent.parent.list is its node in the semaphore list, which links all initialized/created semaphores. (Unlike timers or thread objects, IPC objects are not divided into several states, so maintaining a single list per IPC object type is enough.)

Of the two private members of rt_semaphore, reserved is unused, so effectively there is only one: rt_semaphore.value. Because value is an rt_uint16_t, the maximum semaphore value is 65535, even though the value parameter of rt_sem_init/rt_sem_create is an rt_uint32_t.

- Semaphore Interface Functions

The semaphore control block holds the important parameters of a semaphore and links its various states together. The semaphore-related interfaces are shown in the following figure. Semaphore operations include creating/initializing, acquiring, releasing and deleting/detaching a semaphore.

First, the semaphore constructor/destructor prototypes, again split into static objects (rt_sem_init/rt_sem_detach) and dynamic objects (rt_sem_create/rt_sem_delete):

// rt-thread-4.0.1\src\ipc.c

/**

* This function will initialize a semaphore and put it under control of

* resource management.

*

* @param sem the semaphore object

* @param name the name of semaphore

* @param value the init value of semaphore

* @param flag the flag of semaphore

*

* @return the operation status, RT_EOK on successful

*/

rt_err_t rt_sem_init(rt_sem_t sem,

const char *name,

rt_uint32_t value,

rt_uint8_t flag);

/**

* This function will detach a semaphore from resource management

*

* @param sem the semaphore object

*

* @return the operation status, RT_EOK on successful

*

* @see rt_sem_delete

*/

rt_err_t rt_sem_detach(rt_sem_t sem);

/**

* This function will create a semaphore from system resource

*

* @param name the name of semaphore

* @param value the init value of semaphore

* @param flag the flag of semaphore

*

* @return the created semaphore, RT_NULL on error happen

*

* @see rt_sem_init

*/

rt_sem_t rt_sem_create(const char *name, rt_uint32_t value, rt_uint8_t flag);

/**

* This function will delete a semaphore object and release the memory

*

* @param sem the semaphore object

*

* @return the error code

*

* @see rt_sem_detach

*/

rt_err_t rt_sem_delete(rt_sem_t sem);

Next, the prototypes of the semaphore acquire function rt_sem_take, its non-blocking variant rt_sem_trytake, and the release function rt_sem_release:

// rt-thread-4.0.1\src\ipc.c

/**

* This function will take a semaphore, if the semaphore is unavailable, the

* thread shall wait for a specified time.

*

* @param sem the semaphore object

* @param time the waiting time

*

* @return the error code

*/

rt_err_t rt_sem_take(rt_sem_t sem, rt_int32_t time);

/**

* This function will try to take a semaphore and immediately return

*

* @param sem the semaphore object

*

* @return the error code

*/

rt_err_t rt_sem_trytake(rt_sem_t sem);

/**

* This function will release a semaphore, if there are threads suspended on

* semaphore, it will be waked up.

*

* @param sem the semaphore object

*

* @return the error code

*/

rt_err_t rt_sem_release(rt_sem_t sem);

Finally, the semaphore control function rt_sem_control. Two command macros were defined together with the IPC object above, but the only command IPC objects actually support is RT_IPC_CMD_RESET; RT_IPC_CMD_UNKNOWN appears to be reserved. rt_sem_control therefore serves to reset the semaphore value. Its prototype is as follows:

// rt-thread-4.0.1\src\ipc.c

/**

* This function can get or set some extra attributions of a semaphore object.

*

* @param sem the semaphore object

* @param cmd the execution command

* @param arg the execution argument

*

* @return the error code

*/

rt_err_t rt_sem_control(rt_sem_t sem, int cmd, void *arg);
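A reset has to deal with threads already blocked on the semaphore. The sketch below is a minimal model built on an assumption: that the reset first wakes every suspended thread with an error, the way rt_ipc_list_resume_all sets -RT_ERROR in section 1.2, and then stores the new value, which the caller passes through the void * argument. struct sim_sem, sim_sem_control and the SIM_* codes are hypothetical names; CMD_UNKNOWN and CMD_RESET copy the RT_IPC_CMD_* values.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Same values as RT_IPC_CMD_UNKNOWN / RT_IPC_CMD_RESET. */
enum { CMD_UNKNOWN = 0x00, CMD_RESET = 0x01 };
/* Hypothetical status codes for this sketch. */
enum { SIM_EOK = 0, SIM_ERROR = 1 };

#define MAX_WAITERS 8

/* Hypothetical semaphore model that records the status each waiter sees. */
struct sim_sem {
    uint16_t value;
    int waiters;                    /* threads currently suspended */
    int woken_error[MAX_WAITERS];   /* status returned to each woken thread */
};

/* Model of a RESET command: wake all waiters with an error, then store
 * the new value carried in the pointer-sized argument. */
static int sim_sem_control(struct sim_sem *s, int cmd, void *arg)
{
    if (cmd != CMD_RESET)
        return -SIM_ERROR;
    assert(s->waiters <= MAX_WAITERS);
    for (int i = 0; i < s->waiters; i++)
        s->woken_error[i] = -SIM_ERROR;
    s->waiters = 0;
    s->value = (uint16_t)(uintptr_t)arg;
    return SIM_EOK;
}
```

Passing the count through `(void *)(uintptr_t)3` mirrors how an integer rides in the generic arg parameter; any command other than RESET is rejected.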

The semaphore is a very flexible synchronization mechanism that fits many situations, and it can be used conveniently both between threads and between an interrupt and a thread. However, mutual exclusion between an interrupt and a thread should not be implemented with a semaphore used as a lock, because an interrupt service routine cannot block; disable and re-enable interrupts instead.

Resource counting is generally a mixed form of inter-thread synchronization: the semaphore counts how many resources are available, but an individual resource may still be accessed by several threads, so access to and processing of that single resource must additionally be protected by lock-style mutual exclusion.

2.2 Mutex Object Management

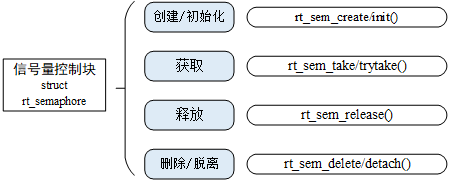

A mutex, also called a mutual-exclusion semaphore, is a special binary semaphore. It differs from a semaphore in that the thread holding a mutex owns it: a mutex supports recursive acquisition and counteracts thread priority inversion, and it can be released only by the thread holding it, whereas a semaphore can be released by any thread.

A mutex has only two states, unlocked and locked. When a thread takes it, the mutex becomes locked and the thread gains ownership; when the thread releases it, the mutex is unlocked and the thread loses ownership. While a thread holds a mutex, no other thread can unlock or take it, but the holding thread can take it again without being suspended, as shown in the figure below. This differs sharply from an ordinary binary semaphore: once a binary semaphore has been taken there is no instance left, so a thread that tries to take it recursively suspends itself and eventually deadlocks.

Another potential problem when using semaphores is thread priority inversion, which was discussed in the blog post Synchronization and Communication between UCOS Tasks. As in UCOS, RT-Thread uses the priority inheritance algorithm to solve it. After taking a mutex, release it as soon as possible, and do not change the priority of the holding thread while it holds the mutex.

- Mutex Control Block

In RT-Thread, the mutex control block is the data structure the operating system uses to manage a mutex, represented by struct rt_mutex. The type rt_mutex_t is the mutex handle; in C it is a pointer to the mutex control block. The mutex control block structure is defined as follows:

// rt-thread-4.0.1\include\rtdef.h

/**

* Mutual exclusion (mutex) structure

*/

struct rt_mutex

{

struct rt_ipc_object parent; /**< inherit from ipc_object */

rt_uint16_t value; /**< value of mutex */

rt_uint8_t original_priority; /**< priority of last thread hold the mutex */

rt_uint8_t hold; /**< numbers of thread hold the mutex */

struct rt_thread *owner; /**< current owner of mutex */

};

typedef struct rt_mutex *rt_mutex_t;

The rt_mutex object is derived from rt_ipc_object. Its rt_mutex.parent.parent.type is RT_Object_Class_Mutex, and rt_mutex.parent.parent.list is its node in the mutex object list; all mutexes are organized into this list.

rt_mutex has more private members than a semaphore. rt_mutex.value is the mutex value, normally 0 or 1; rt_mutex.original_priority is the holding thread's original priority, which the priority inheritance algorithm uses to restore it; rt_mutex.hold counts how many times the holding thread has taken the mutex, which is what allows the owner to take it in a nested fashion.

rt_mutex.owner points to the thread that currently holds the mutex.
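To see how these private members work together, here is a hypothetical single-threaded model. struct sim_mutex reuses the field names of struct rt_mutex, but the functions, the single waiter slot (a simplification of the suspend list) and the M_* codes are our own; blocking is represented by a return code. It shows recursive taking through hold, release restricted to the owner, and priority inheritance through original_priority.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical status codes for this sketch. */
enum { M_OK = 0, M_SUSPENDED = 1, M_ERROR = -1 };

struct sim_thread {
    const char *name;
    unsigned char current_priority;   /* smaller number = higher priority */
};

/* Field names follow struct rt_mutex; one waiter slot for brevity. */
struct sim_mutex {
    unsigned short value;             /* 1 = unlocked, 0 = locked */
    unsigned char original_priority;  /* owner's priority before inheritance */
    unsigned char hold;               /* how many times the owner has taken it */
    struct sim_thread *owner;
    struct sim_thread *waiter;
};

static void sim_mutex_init(struct sim_mutex *m)
{
    m->value = 1;
    m->original_priority = 0xFF;
    m->hold = 0;
    m->owner = NULL;
    m->waiter = NULL;
}

static int sim_mutex_take(struct sim_mutex *m, struct sim_thread *t)
{
    if (m->owner == t) {              /* recursive take: just nest deeper */
        m->hold++;
        return M_OK;
    }
    if (m->value > 0) {               /* unlocked: t becomes the owner */
        m->value = 0;
        m->owner = t;
        m->original_priority = t->current_priority;
        m->hold = 1;
        return M_OK;
    }
    /* held by another thread: lend t's priority to the owner if higher */
    if (t->current_priority < m->owner->current_priority)
        m->owner->current_priority = t->current_priority;
    m->waiter = t;                    /* t would now be suspended */
    return M_SUSPENDED;
}

static int sim_mutex_release(struct sim_mutex *m, struct sim_thread *t)
{
    if (m->owner != t)                /* only the owner may release */
        return M_ERROR;
    assert(m->hold > 0);
    if (--m->hold > 0)                /* still nested: keep ownership */
        return M_OK;
    t->current_priority = m->original_priority;  /* undo inheritance */
    if (m->waiter != NULL) {          /* pass ownership to the waiter */
        m->owner = m->waiter;
        m->waiter = NULL;
        m->original_priority = m->owner->current_priority;
        m->hold = 1;
    } else {                          /* nobody waiting: unlock */
        m->owner = NULL;
        m->original_priority = 0xFF;
        m->value = 1;
    }
    return M_OK;
}
```

For example, a priority-20 thread that takes the mutex twice is temporarily raised to priority 5 when a priority-5 thread blocks on it, drops back to 20 only after its second release, and at that point ownership passes to the waiter.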

- Mutex Interface Functions

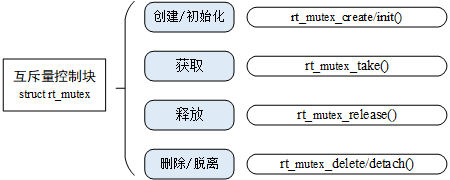

The mutex control block holds the important parameters of a mutex and plays a key role in implementing its functionality. The mutex-related interfaces are shown in the figure. Mutex operations include creating/initializing, acquiring, releasing and deleting/detaching a mutex.

First, the mutex constructor/destructor prototypes:

// rt-thread-4.0.1\src\ipc.c

/**

* This function will initialize a mutex and put it under control of resource

* management.

*

* @param mutex the mutex object

* @param name the name of mutex

* @param flag the flag of mutex

*

* @return the operation status, RT_EOK on successful

*/

rt_err_t rt_mutex_init(rt_mutex_t mutex, const char *name, rt_uint8_t flag);

/**

* This function will detach a mutex from resource management

*

* @param mutex the mutex object

*

* @return the operation status, RT_EOK on successful

*

* @see rt_mutex_delete

*/

rt_err_t rt_mutex_detach(rt_mutex_t mutex);

/**

* This function will create a mutex from system resource

*

* @param name the name of mutex

* @param flag the flag of mutex

*

* @return the created mutex, RT_NULL on error happen

*

* @see rt_mutex_init

*/

rt_mutex_t rt_mutex_create(const char *name, rt_uint8_t flag);

/**

* This function will delete a mutex object and release the memory

*

* @param mutex the mutex object

*

* @return the error code

*

* @see rt_mutex_detach

*/

rt_err_t rt_mutex_delete(rt_mutex_t mutex);

Next, the prototypes of the mutex acquire function rt_mutex_take and release function rt_mutex_release:

// rt-thread-4.0.1\src\ipc.c

/**

* This function will take a mutex, if the mutex is unavailable, the

* thread shall wait for a specified time.

*

* @param mutex the mutex object

* @param time the waiting time

*

* @return the error code

*/

rt_err_t rt_mutex_take(rt_mutex_t mutex, rt_int32_t time);

/**

* This function will release a mutex, if there are threads suspended on mutex,

* it will be waked up.

*

* @param mutex the mutex object

*

* @return the error code

*/

rt_err_t rt_mutex_release(rt_mutex_t mutex);

Because a mutex is normally taken and released by the same thread and its value is always initialized to 1, the mutex constructors take no initial-value parameter, and a control command to reset the value would be meaningless; the mutex therefore implements no control command.

Mutexes have a fairly narrow use: a mutex is a kind of semaphore that exists in the form of a lock. A mutex is always unlocked when initialized and becomes locked as soon as a thread takes it. Remember that mutexes cannot be used in interrupt service routines (interrupt service routines achieve mutual exclusion by disabling interrupts). Mutexes are better suited to:

- Cases where a thread takes the same lock several times; a mutex avoids the deadlock that such recursive taking would cause.

- Cases where synchronization among multiple threads could cause priority inversion.

2.3 Event Set Object Management

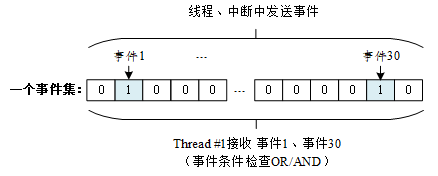

An event set is mainly used for synchronization between threads. Unlike a semaphore, it supports one-to-many and many-to-many synchronization: a thread can be related to several events so that it is woken by any one of them, or only after several of them have all arrived, before continuing its processing; likewise, multiple threads can synchronize on multiple events. Such a collection of events is represented by a 32-bit unsigned integer in which each bit stands for one event. A thread combines one or more events through logical AND or logical OR to form an event combination. OR synchronization of events, also called independent synchronization, means the thread synchronizes with any one of the events; AND synchronization, also called associated synchronization, means the thread synchronizes with all of the specified events.

The event set defined by RT-Thread has the following characteristics:

- Events are associated only with threads, and events are independent of one another: each thread can have 32 event flags, recorded in a 32-bit unsigned integer with one bit per event;

- Events are used only for synchronization and do not transfer data;

- Events are not queued: sending the same event to a thread several times before the thread has read it has the same effect as sending it once.

In RT-Thread, each thread has an event information flag that can take three attributes: RT_EVENT_FLAG_AND (logical AND), RT_EVENT_FLAG_OR (logical OR) and RT_EVENT_FLAG_CLEAR (clear flag). When a thread waits for event synchronization, its 32 event flags and this event information flag together determine whether the events received so far satisfy the synchronization condition.

As shown in the figure above, bits 1 and 30 of thread #1's event flags are set. If the event information flag is set to logical AND, thread #1 is woken only after both event 1 and event 30 have occurred. If it is set to logical OR, the occurrence of either event 1 or event 30 wakes thread #1. If the clear flag is also set, events 1 and 30 are automatically cleared to zero when thread #1 wakes; otherwise the event flags remain set (1).
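The AND/OR/CLEAR rules can be written as a small predicate. event_try_recv below is a hypothetical helper, not an RT-Thread function; the EVENT_FLAG_* macros copy the RT_EVENT_FLAG_* values from rtdef.h, and bits 1 and 30 match the example just described.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Same values as RT_EVENT_FLAG_AND / _OR / _CLEAR in rtdef.h. */
#define EVENT_FLAG_AND   0x01
#define EVENT_FLAG_OR    0x02
#define EVENT_FLAG_CLEAR 0x04

/* Hypothetical helper: returns 1 if *set satisfies a wait for `wanted`
 * under `option`. On success the matching bits go to *recved (if not NULL)
 * and, when CLEAR is requested, the wanted bits are cleared from *set. */
static int event_try_recv(uint32_t *set, uint32_t wanted, uint8_t option,
                          uint32_t *recved)
{
    int ok;

    assert(option & (EVENT_FLAG_AND | EVENT_FLAG_OR));
    if (option & EVENT_FLAG_AND)
        ok = (*set & wanted) == wanted;  /* every wanted event occurred */
    else
        ok = (*set & wanted) != 0;       /* at least one wanted event occurred */
    if (!ok)
        return 0;                        /* a real receiver would wait here */
    if (recved != NULL)
        *recved = *set & wanted;
    if (option & EVENT_FLAG_CLEAR)
        *set &= ~wanted;
    return 1;
}
```

With only bit 1 set, an AND wait on bits 1 and 30 fails while an OR wait succeeds and leaves bit 1 set; once bit 30 also arrives, an AND | CLEAR wait succeeds and empties the set.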

- Event Set Control Block

In RT-Thread, the event set control block is the data structure the operating system uses to manage events, represented by struct rt_event. The type rt_event_t is the event set handle; in C it is a pointer to the event set control block. The event set control block structure is defined as follows:

// rt-thread-4.0.1\include\rtdef.h

/*

* event structure

*/

struct rt_event

{

struct rt_ipc_object parent; /**< inherit from ipc_object */

rt_uint32_t set; /**< event set */

};

typedef struct rt_event *rt_event_t;

/**

* flag defintions in event

*/

#define RT_EVENT_FLAG_AND 0x01 /**< logic and */

#define RT_EVENT_FLAG_OR 0x02 /**< logic or */

#define RT_EVENT_FLAG_CLEAR 0x04 /**< clear flag */

/**

* Thread structure

*/

struct rt_thread

{

......

#if defined(RT_USING_EVENT)

/* thread event */

rt_uint32_t event_set;

rt_uint8_t event_info;

#endif

......

};

typedef struct rt_thread *rt_thread_t;

The rt_event object is derived from rt_ipc_object. Its rt_event.parent.parent.type is RT_Object_Class_Event, and rt_event.parent.parent.list is its node in the event set object list; all event set objects are organized into this list.

rt_event also has only one private member, rt_event.set, a 32-bit event set in which each bit identifies one event and the bit's value indicates whether that event has occurred.

Because an event set supports one-to-many and many-to-many synchronization, the event set object alone is not enough; thread objects must also cooperate in managing the events they wait for. As mentioned earlier under thread management, the two members rt_thread.event_set and rt_thread.event_info, enabled by the conditional macro RT_USING_EVENT, are the key to synchronizing thread objects with event sets. rt_thread.event_set is the set of events the thread is waiting for, and rt_thread.event_info is the logical combination applied to those events; its values follow the flag definitions above: RT_EVENT_FLAG_AND/OR/CLEAR.

- Event Set Interface Functions

First, the event set constructor/destructor prototypes:

// rt-thread-4.0.1\src\ipc.c

/**

* This function will initialize an event and put it under control of resource

* management.

*

* @param event the event object

* @param name the name of event

* @param flag the flag of event

*

* @return the operation status, RT_EOK on successful

*/

rt_err_t rt_event_init(rt_event_t event, const char *name, rt_uint8_t flag);

/**

* This function will detach an event object from resource management

*

* @param event the event object

*

* @return the operation status, RT_EOK on successful

*/

rt_err_t rt_event_detach(rt_event_t event);

/**

* This function will create an event object from system resource

*

* @param name the name of event

* @param flag the flag of event

*

* @return the created event, RT_NULL on error happen

*/

rt_event_t rt_event_create(const char *name, rt_uint8_t flag);

/**

* This function will delete an event object and release the memory

*

* @param event the event object

*

* @return the error code

*/

rt_err_t rt_event_delete(rt_event_t event);

Next, the prototypes of the event send function rt_event_send and receive function rt_event_recv:

// rt-thread-4.0.1\src\ipc.c

/**

* This function will send an event to the event object, if there are threads

* suspended on event object, it will be waked up.

*

* @param event the event object

* @param set the event set

*

* @return the error code

*/

rt_err_t rt_event_send(rt_event_t event, rt_uint32_t set);

/**

* This function will receive an event from event object, if the event is

* unavailable, the thread shall wait for a specified time.

*

* @param event the fast event object

* @param set the interested event set

* @param option the receive option, either RT_EVENT_FLAG_AND or

* RT_EVENT_FLAG_OR should be set.

* @param timeout the waiting time

* @param recved the received event, if you don't care, RT_NULL can be set.

*

* @return the error code

*/

rt_err_t rt_event_recv(rt_event_t event,

rt_uint32_t set,

rt_uint8_t option,

rt_int32_t timeout,

rt_uint32_t *recved);

The receive function rt_event_recv first determines the required event combination from the requested event set and the logical option, then checks the event set object to see whether that combination has already been sent. If it has, the function returns immediately. If not, the requested set and option are saved in the current thread's event_set and event_info members respectively, and the current thread is suspended to wait.

The send function rt_event_send first merges the sent events into the event set object, then traverses the list of suspended threads, checks each thread's awaited event combination against the set, and wakes every thread whose combination has been satisfied.
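The send-side traversal, and the fact that events are not queued, can be sketched with an array standing in for the suspend list. struct waiter and event_send are hypothetical names; event_set and event_info mirror the thread members described earlier. Deferring the clear until after the scan, so every waiter is tested against the same snapshot, is a choice made for this sketch and may differ from the kernel's exact ordering.

```c
#include <assert.h>
#include <stdint.h>

/* Same values as RT_EVENT_FLAG_AND / _OR / _CLEAR. */
#define EVENT_FLAG_AND   0x01
#define EVENT_FLAG_OR    0x02
#define EVENT_FLAG_CLEAR 0x04

/* Hypothetical record of one suspended receiver; event_set and event_info
 * play the roles of rt_thread.event_set and rt_thread.event_info. */
struct waiter {
    uint32_t event_set;   /* events this thread is waiting for */
    uint8_t  event_info;  /* AND or OR, optionally with CLEAR */
    int      woken;       /* 1 once the thread has been resumed */
    uint32_t recved;      /* events delivered on wake-up */
};

/* Sketch of the send path: merge the new bits, then wake every waiter whose
 * condition now holds. Clearing is deferred until after the scan. */
static void event_send(uint32_t *set, uint32_t bits, struct waiter *w, int n)
{
    uint32_t need_clear = 0;

    *set |= bits;                          /* OR-merge: repeats are not counted */
    for (int i = 0; i < n; i++) {
        if (w[i].woken)
            continue;                      /* no longer on the wait list */
        assert(w[i].event_info & (EVENT_FLAG_AND | EVENT_FLAG_OR));
        uint32_t hit = *set & w[i].event_set;
        int ok = (w[i].event_info & EVENT_FLAG_AND) ? hit == w[i].event_set
                                                    : hit != 0;
        if (!ok)
            continue;
        w[i].woken = 1;
        w[i].recved = hit;
        if (w[i].event_info & EVENT_FLAG_CLEAR)
            need_clear |= w[i].event_set;
    }
    *set &= ~need_clear;
}
```

Sending event 1 twice leaves the set exactly as sending it once would; when event 30 follows, a waiter using AND | CLEAR on events 1 and 30 is woken and both bits are cleared, while an OR waiter on event 25 keeps waiting.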

The event set control function simply resets the event set: it sets the object's rt_event.set back to 0, the same value it is given when the event set object is constructed. The prototype of rt_event_control is as follows:

// rt-thread-4.0.1\src\ipc.c

/**

* This function can get or set some extra attributions of an event object.

*

* @param event the event object

* @param cmd the execution command

* @param arg the execution argument

*

* @return the error code

*/

rt_err_t rt_event_control(rt_event_t event, int cmd, void *arg);

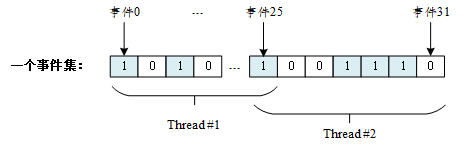

Event sets fit many situations and can, to some extent, replace semaphores for inter-thread synchronization. A thread or interrupt service routine sends an event to the event set object, and the waiting thread is woken to handle it. Unlike a semaphore, however, sending an event is not cumulative until the event is cleared, whereas releasing a semaphore is. Another feature of events is that a receiving thread can wait for several events, so multiple events can correspond to one or more threads, and depending on its wait parameters the thread can choose OR-triggering or AND-triggering. Semaphores lack this: they recognize only a single kind of release and cannot wait for several kinds at once. The following figure shows a schematic of multi-event reception:

An event set contains 32 events, and a given thread waits for and receives only the events it is interested in. One thread may wait for several events (threads #1 and #2 each wait for multiple events, combined with "and" or "or" logic), or several threads may wait for the same event (event 25). When the events of interest occur, the threads are woken and carry out their subsequent processing.

3. Inter-Thread Communication Object Management

In bare-metal programming, global variables are often used for communication between functions. For example, some functions change the value of a global variable as a result of some operation, and another function reads that variable and acts on its value, achieving communication and cooperation. RT-Thread provides more tools for passing information between threads, such as mailboxes, message queues and signals.

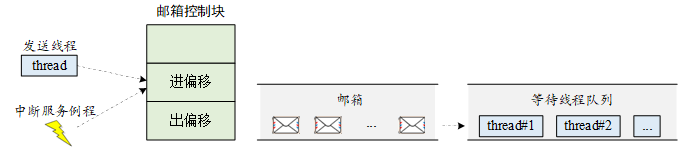

3.1 Mailbox Object Management

The RT-Thread mailbox is used for inter-thread communication and is characterized by low overhead and high efficiency. Each mail in the mailbox holds exactly one rt_ubase_t, a fixed 4 bytes on a 32-bit processor, where a pointer is also 4 bytes, so one mail can hold exactly one pointer. A mailbox is also known as a message exchange. As shown in the figure below, threads or interrupt service routines send 4-byte mails to the mailbox, and one or more threads can receive and process them.

Sending mail without blocking can be used safely from interrupt service routines, which makes the mailbox an effective way for threads, interrupt service routines and timers to send messages to threads. Receiving mail, on the other hand, may block, depending on whether the mailbox holds mail and on the timeout given when receiving: if the mailbox is empty and the timeout is nonzero, the receive operation blocks. In that case mail can only be received by threads.

When a thread sends mail to the mailbox and the mailbox is not full, the mail is copied into it. If the mailbox is full, the sending thread can set a timeout and choose either to suspend and wait or to return -RT_EFULL immediately. If it suspends, it is woken to continue sending once mail has been received from the mailbox and space has been freed.

When a thread receives mail from an empty mailbox, it can choose to wait until new mail arrives or to set a timeout. If the timeout expires and the mailbox still has no mail, the waiting thread is woken and returns -RT_ETIMEOUT. If the mailbox holds mail, the receiving thread copies the 4-byte mail from the mailbox into its receive buffer.

- Mailbox Control Block

In RT-Thread, the mailbox control block is the data structure the operating system uses to manage a mailbox; it is represented by struct rt_mailbox. The type rt_mailbox_t denotes a mailbox handle, which in C is a pointer to the mailbox control block. The mailbox control block is defined as follows:

// rt-thread-4.0.1\include\rtdef.h

/**

* mailbox structure

*/

struct rt_mailbox

{

struct rt_ipc_object parent; /**< inherit from ipc_object */

rt_ubase_t *msg_pool; /**< start address of message buffer */

rt_uint16_t size; /**< size of message pool */

rt_uint16_t entry; /**< index of messages in msg_pool */

rt_uint16_t in_offset; /**< input offset of the message buffer */

rt_uint16_t out_offset; /**< output offset of the message buffer */

rt_list_t suspend_sender_thread; /**< sender thread suspended on this mailbox */

};

typedef struct rt_mailbox *rt_mailbox_t;

The rt_mailbox object is derived from rt_ipc_object. The value of rt_mailbox.parent.parent.type is RT_Object_Class_MailBox, and rt_mailbox.parent.parent.list is the list node of the mailbox object; all mailboxes are organized into a doubly linked list.

rt_mailbox.msg_pool points to the start address of the mailbox buffer, rt_mailbox.size is the capacity of the buffer in mails, and rt_mailbox.entry is the number of mails currently in the mailbox. rt_mailbox.in_offset and rt_mailbox.out_offset are the write and read positions in the buffer. Since they are offsets from the buffer start address, they can be treated as indexes into the buffer's mail array.

The last private member of the rt_mailbox object, rt_mailbox.suspend_sender_thread, is the wait queue of suspended sending threads. As introduced earlier, the IPC object rt_ipc_object already has a private member, rt_ipc_object.suspend_thread, which is the wait queue of threads suspended on the IPC object. Why does the mailbox object add a second wait queue? In inter-thread synchronization and communication, a thread usually suspends while waiting to acquire an IPC object, and rt_ipc_object.suspend_thread holds the threads waiting to acquire or receive that object. A mailbox, however, has two kinds of waiting threads: threads waiting to receive mail when the mailbox is empty, and threads waiting to send mail when the mailbox is full. The IPC object already provides a member for the list of threads waiting to receive mail, so rt_mailbox adds a private member for the list of threads waiting to send mail.
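The offset bookkeeping described above can be illustrated with a plain-C ring buffer. This is a minimal sketch, not the kernel's code: the demo_mailbox type, the demo_mb_* functions and the DEMO_E* codes are hypothetical, and thread suspension, timeouts and interrupt locking are left out entirely. It only shows how entry, in_offset and out_offset move and wrap around in a buffer of size word-sized slots.

```c
#include <stdint.h>

/* Hypothetical result codes standing in for RT_EOK / -RT_EFULL / -RT_EEMPTY. */
#define DEMO_EOK     0
#define DEMO_EFULL  (-1)
#define DEMO_EEMPTY (-2)

/* Hypothetical mirror of the rt_mailbox bookkeeping fields (IPC parts omitted). */
struct demo_mailbox {
    uintptr_t *msg_pool;  /* array of word-sized mail slots */
    uint16_t size;        /* capacity, in mails */
    uint16_t entry;       /* number of mails currently stored */
    uint16_t in_offset;   /* index of the next slot to write */
    uint16_t out_offset;  /* index of the next slot to read */
};

void demo_mb_init(struct demo_mailbox *mb, uintptr_t *pool, uint16_t size)
{
    mb->msg_pool = pool;
    mb->size = size;
    mb->entry = 0;
    mb->in_offset = 0;
    mb->out_offset = 0;
}

/* Non-blocking send: copy one word into the ring, or report "full". */
int demo_mb_send(struct demo_mailbox *mb, uintptr_t value)
{
    if (mb->entry == mb->size)
        return DEMO_EFULL;
    mb->msg_pool[mb->in_offset] = value;
    if (++mb->in_offset >= mb->size)  /* wrap around at the end of the array */
        mb->in_offset = 0;
    mb->entry++;
    return DEMO_EOK;
}

/* Non-blocking receive: copy the oldest word out, or report "empty". */
int demo_mb_recv(struct demo_mailbox *mb, uintptr_t *value)
{
    if (mb->entry == 0)
        return DEMO_EEMPTY;
    *value = mb->msg_pool[mb->out_offset];
    if (++mb->out_offset >= mb->size)
        mb->out_offset = 0;
    mb->entry--;
    return DEMO_EOK;
}
```

With a two-slot mailbox, a third send fails until one mail has been received; after that, in_offset has wrapped back to the start of the array, while mails still come out in the order they went in.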

- Mailbox interface function

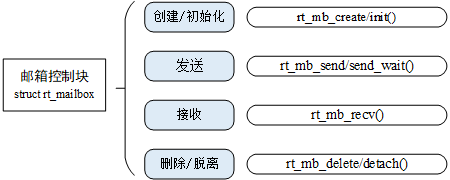

The mailbox control block is a structure that holds the key parameters of a mailbox and plays an important role in implementing mailbox functions. The mailbox interfaces are shown in the figure above. Mailbox operations include creating/initializing a mailbox, sending mail, receiving mail, and deleting/detaching a mailbox.

First, look at the prototypes of the mailbox object's constructors and destructors:

// rt-thread-4.0.1\src\ipc.c

/**

* This function will initialize a mailbox and put it under control of resource

* management.

*

* @param mb the mailbox object

* @param name the name of mailbox

* @param msgpool the begin address of buffer to save received mail

* @param size the size of mailbox

* @param flag the flag of mailbox

*

* @return the operation status, RT_EOK on successful

*/

rt_err_t rt_mb_init(rt_mailbox_t mb,

const char *name,

void *msgpool,

rt_size_t size,

rt_uint8_t flag);

/**

* This function will detach a mailbox from resource management

*

* @param mb the mailbox object

*

* @return the operation status, RT_EOK on successful

*/

rt_err_t rt_mb_detach(rt_mailbox_t mb);

/**

* This function will create a mailbox object from system resource

*

* @param name the name of mailbox

* @param size the size of mailbox

* @param flag the flag of mailbox

*

* @return the created mailbox, RT_NULL on error happen

*/

rt_mailbox_t rt_mb_create(const char *name, rt_size_t size, rt_uint8_t flag);

/**

* This function will delete a mailbox object and release the memory

*

* @param mb the mailbox object

*

* @return the error code

*/

rt_err_t rt_mb_delete(rt_mailbox_t mb);

Next, look at the prototypes of the mailbox send function rt_mb_send and receive function rt_mb_recv. Because the mailbox object has a separate wait queue for suspended sending threads, it also provides a blocking send function, rt_mb_send_wait. The prototypes are as follows:

// rt-thread-4.0.1\src\ipc.c

/**

* This function will send a mail to mailbox object. If the mailbox is full,

* current thread will be suspended until timeout.

*

* @param mb the mailbox object

* @param value the mail

* @param timeout the waiting time

*

* @return the error code

*/

rt_err_t rt_mb_send_wait(rt_mailbox_t mb,

rt_ubase_t value,

rt_int32_t timeout);

/**

* This function will send a mail to mailbox object, if there are threads

* suspended on mailbox object, it will be waked up. This function will return

* immediately, if you want blocking send, use rt_mb_send_wait instead.

*

* @param mb the mailbox object

* @param value the mail

*

* @return the error code

*/

rt_err_t rt_mb_send(rt_mailbox_t mb, rt_ubase_t value);

/**

* This function will receive a mail from mailbox object, if there is no mail

* in mailbox object, the thread shall wait for a specified time.

*

* @param mb the mailbox object

* @param value the received mail will be saved in

* @param timeout the waiting time

*

* @return the error code

*/

rt_err_t rt_mb_recv(rt_mailbox_t mb, rt_ubase_t *value, rt_int32_t timeout);

The mailbox control function rt_mb_control provides a mailbox reset command. Resetting wakes up the suspended threads and clears the mail count and the in/out offsets. The function prototype is as follows:

// rt-thread-4.0.1\src\ipc.c

/**

* This function can get or set some extra attributions of a mailbox object.

*

* @param mb the mailbox object

* @param cmd the execution command

* @param arg the execution argument

*

* @return the error code

*/

rt_err_t rt_mb_control(rt_mailbox_t mb, int cmd, void *arg);

The mailbox is a simple, low-overhead and efficient way to pass messages between threads. In RT-Thread, each send delivers one 4-byte mail, and the mailbox can buffer a certain number of mails (the number is set by the capacity specified when the mailbox is created or initialized). Since a mail holds at most 4 bytes, the mailbox can carry messages of up to 4 bytes directly. Because 4 bytes on a 32-bit system hold exactly one pointer, a large message can be passed between threads by sending a pointer to its buffer as the mail; in other words, the mailbox can also pass pointers.
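The pointer-passing idea can be sketched in standalone C. In this sketch, which is an assumption rather than RT-Thread code, the mail value is a uintptr_t (playing the role of rt_ubase_t), malloc/free stand in for whatever allocator or memory pool the application uses, and demo_pack_mail/demo_unpack_mail are hypothetical helpers. Only the address travels through the mailbox, so the buffer must stay valid until the receiver has finished with it.

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical message that is too big to fit into a single mail. */
struct demo_big_msg {
    char text[64];
    int len;
};

/* Sender side: allocate and fill a buffer, then turn its address into a
 * word-sized mail value (uintptr_t can round-trip an object pointer). */
uintptr_t demo_pack_mail(const char *text)
{
    struct demo_big_msg *msg = malloc(sizeof *msg);
    if (msg == NULL)
        return 0;
    strncpy(msg->text, text, sizeof msg->text - 1);
    msg->text[sizeof msg->text - 1] = '\0';
    msg->len = (int)strlen(msg->text);
    return (uintptr_t)msg;
}

/* Receiver side: turn the mail back into a pointer, copy the text out and
 * free the buffer, since ownership travelled with the mail.
 * out_size must be at least 1. */
int demo_unpack_mail(uintptr_t mail, char *out, size_t out_size)
{
    struct demo_big_msg *msg = (struct demo_big_msg *)mail;
    int len;
    if (msg == NULL)
        return -1;
    strncpy(out, msg->text, out_size - 1);
    out[out_size - 1] = '\0';
    len = msg->len;
    free(msg);
    return len;
}
```

Here the receiver frees the buffer; other ownership schemes work as well, as long as sender and receiver agree on who releases the memory.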

3.2 Message Queue Object Management

The message queue is another common inter-thread communication mechanism and an extension of the mailbox. It can be used in many situations, such as exchanging messages between threads or receiving variable-length data from a serial port.

A message queue can receive variable-length messages (up to a configured maximum size) from threads or interrupt service routines and buffer them in its own memory. Other threads can read messages from the queue; when the queue is empty, a reading thread can suspend, and it is woken up to receive and process the message when a new one arrives. The message queue is an asynchronous communication mechanism.

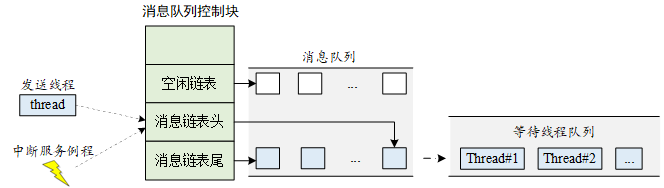

As shown in the figure, threads or interrupt service routines can put one or more messages into a message queue, and one or more threads can take messages out of it. When several messages have been sent to a queue, the message that entered first is normally handed to a thread first; that is, the queue follows the first-in, first-out (FIFO) principle.

An RT-Thread message queue object consists of several parts. When a message queue is created, a message queue control block is allocated for it, recording the queue name, memory buffer, message size and queue length. Each message queue object also contains multiple message boxes, each able to store one message. The first and last message boxes in the queue are called the message list head and message list tail, corresponding to msg_queue_head and msg_queue_tail in the control block. Some message boxes may be empty; these form a free list headed by msg_queue_free. The total number of message boxes in a message queue is the queue length, which can be specified when the queue is created.

- Message queue control block

In RT-Thread, the message queue control block is the data structure the operating system uses to manage a message queue; it is represented by struct rt_messagequeue. The type rt_mq_t denotes a message queue handle, which in C is a pointer to the message queue control block. The control block is defined as follows:

// rt-thread-4.0.1\include\rtdef.h

/**

* message queue structure

*/

struct rt_messagequeue

{

struct rt_ipc_object parent; /**< inherit from ipc_object */

void *msg_pool; /**< start address of message queue */

rt_uint16_t msg_size; /**< message size of each message */

rt_uint16_t max_msgs; /**< max number of messages */

rt_uint16_t entry; /**< index of messages in the queue */

void *msg_queue_head; /**< list head */

void *msg_queue_tail; /**< list tail */

void *msg_queue_free; /**< pointer indicated the free node of queue */

};

typedef struct rt_messagequeue *rt_mq_t;

struct rt_mq_message

{

struct rt_mq_message *next;

};

The rt_messagequeue object is derived from rt_ipc_object. The value of rt_messagequeue.parent.parent.type is RT_Object_Class_MessageQueue, and rt_messagequeue.parent.parent.list is the list node of the message queue object; all message queues are organized into a doubly linked list.

The rt_messagequeue object also has many private members. rt_messagequeue.msg_pool points to the start address of the message queue buffer; rt_messagequeue.msg_size is the maximum length of each message (unlike mail in a mailbox, messages in a message queue need not all have the same length, but each can be at most msg_size bytes); rt_messagequeue.max_msgs is the maximum number of messages the queue can hold; and rt_messagequeue.entry is the number of messages currently in the queue.

rt_mq_message is the message header: the messages in the queue are chained into a singly linked list through their headers. Because mail in a mailbox has a fixed length, a mailbox can manage its mails as an array; messages in a message queue do not have a fixed length, so each message gets a header and the messages are managed as a linked list. rt_messagequeue.msg_queue_head and rt_messagequeue.msg_queue_tail mark the first and last nodes of the message list, which makes it easy to traverse the list without running past its end. After initialization, the unused (blank) message boxes are also chained into a list, and rt_messagequeue.msg_queue_free points to its first node.

- Message Queue Interface Function

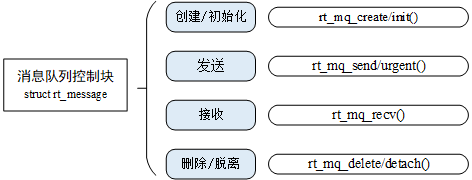

The message queue control block is a structure that holds the key parameters of a message queue and plays an important role in implementing message queue functions. The message queue interfaces are shown in the figure above. Message queue operations are: creating a message queue, sending messages, receiving messages, and deleting the message queue.

First, look at the prototypes of the message queue constructors and destructors:

// rt-thread-4.0.1\src\ipc.c

/**

* This function will initialize a message queue and put it under control of

* resource management.

*

* @param mq the message object

* @param name the name of message queue

* @param msgpool the beginning address of buffer to save messages

* @param msg_size the maximum size of message

* @param pool_size the size of buffer to save messages

* @param flag the flag of message queue

*

* @return the operation status, RT_EOK on successful

*/

rt_err_t rt_mq_init(rt_mq_t mq,

const char *name,

void *msgpool,

rt_size_t msg_size,

rt_size_t pool_size,

rt_uint8_t flag);

/**

* This function will detach a message queue object from resource management

*

* @param mq the message queue object

*

* @return the operation status, RT_EOK on successful

*/

rt_err_t rt_mq_detach(rt_mq_t mq);

/**

* This function will create a message queue object from system resource

*

* @param name the name of message queue

* @param msg_size the size of message

* @param max_msgs the maximum number of message in queue

* @param flag the flag of message queue

*

* @return the created message queue, RT_NULL on error happen

*/

rt_mq_t rt_mq_create(const char *name,

rt_size_t msg_size,

rt_size_t max_msgs,

rt_uint8_t flag);

/**

* This function will delete a message queue object and release the memory

*

* @param mq the message queue object

*

* @return the error code

*/

rt_err_t rt_mq_delete(rt_mq_t mq);

Besides the rt_messagequeue.msg_size bytes of payload, each message in the queue also occupies sizeof(struct rt_mq_message) bytes for its header. Therefore, when a message queue is initialized as a static object, the number of messages is computed as mq->max_msgs = pool_size / (mq->msg_size + sizeof(struct rt_mq_message)). When a message queue is created as a dynamic object, the buffer is allocated from the parameters passed in as mq->msg_pool = RT_KERNEL_MALLOC((mq->msg_size + sizeof(struct rt_mq_message)) * mq->max_msgs).

Next, look at the prototypes of the send function rt_mq_send and the receive function rt_mq_recv. Because a message queue is FIFO, an urgent message that must be delivered as soon as possible to meet real-time requirements would otherwise wait behind earlier messages. For such messages, the message queue provides LIFO handling: an urgent message is inserted at the head of the queue, so it is taken out first on the next receive. The function for sending urgent messages is rt_mq_urgent. The prototypes are as follows:
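A quick worked check of the two formulas, written as small C helpers. DEMO_ALIGN and DEMO_ALIGN_SIZE are hypothetical stand-ins: the kernel appears to round msg_size up with RT_ALIGN(msg_size, RT_ALIGN_SIZE) before applying these formulas, and this sketch assumes RT_ALIGN_SIZE is 4. The header type only copies the shape of struct rt_mq_message.

```c
#include <stddef.h>

/* Hypothetical stand-in for RT_ALIGN_SIZE (assumed to be 4 here). */
#define DEMO_ALIGN_SIZE 4
/* Round n up to a multiple of a (a must be a power of two), like RT_ALIGN. */
#define DEMO_ALIGN(n, a) (((n) + (a) - 1) & ~((size_t)(a) - 1))

/* Same shape as struct rt_mq_message: just a next pointer. */
struct demo_mq_message {
    struct demo_mq_message *next;
};

/* Static object (rt_mq_init style): how many messages fit in a given pool? */
size_t demo_mq_max_msgs(size_t pool_size, size_t msg_size)
{
    size_t aligned = DEMO_ALIGN(msg_size, DEMO_ALIGN_SIZE);
    return pool_size / (aligned + sizeof(struct demo_mq_message));
}

/* Dynamic object (rt_mq_create style): how many bytes must be allocated? */
size_t demo_mq_pool_bytes(size_t msg_size, size_t max_msgs)
{
    size_t aligned = DEMO_ALIGN(msg_size, DEMO_ALIGN_SIZE);
    return (aligned + sizeof(struct demo_mq_message)) * max_msgs;
}
```

For example, on a 32-bit target where the header is 4 bytes, a msg_size of 10 is rounded to 12, each message box takes 12 + 4 = 16 bytes, and a 200-byte pool therefore holds 200 / 16 = 12 messages (integer division).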

// rt-thread-4.0.1\src\ipc.c

/**

* This function will send a message to message queue object, if there are

* threads suspended on message queue object, it will be waked up.

*

* @param mq the message queue object

* @param buffer the message

* @param size the size of buffer

*

* @return the error code

*/

rt_err_t rt_mq_send(rt_mq_t mq, void *buffer, rt_size_t size);

/**

* This function will send an urgent message to message queue object, which

* means the message will be inserted to the head of message queue. If there

* are threads suspended on message queue object, it will be waked up.

*

* @param mq the message queue object

* @param buffer the message

* @param size the size of buffer

*

* @return the error code

*/

rt_err_t rt_mq_urgent(rt_mq_t mq, void *buffer, rt_size_t size);

/**

* This function will receive a message from message queue object, if there is

* no message in message queue object, the thread shall wait for a specified

* time.

*

* @param mq the message queue object

* @param buffer the received message will be saved in

* @param size the size of buffer

* @param timeout the waiting time

*

* @return the error code

*/

rt_err_t rt_mq_recv(rt_mq_t mq,

void *buffer,

rt_size_t size,

rt_int32_t timeout);
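The difference between rt_mq_send (append at the tail, FIFO) and rt_mq_urgent (insert at the head, LIFO) can be seen in a small single-threaded sketch. Everything here is hypothetical (the demo_* names and DEMO_E* codes), and waking suspended receivers, timeouts and locking are left out. As a further assumption of the sketch, msg_size is rounded up to pointer size so that every header stays aligned. Each message box is a header followed by its payload, unused boxes sit on a free list, and receiving always takes the head node, copies at most msg_size bytes and returns the box to the free list.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical result codes. */
#define DEMO_EOK     0
#define DEMO_EFULL  (-1)
#define DEMO_EEMPTY (-2)
#define DEMO_ERROR  (-3)

/* Header in front of every payload, same shape as struct rt_mq_message. */
struct demo_msg {
    struct demo_msg *next;
};

/* Hypothetical queue state: list head/tail plus the free list. */
struct demo_mq {
    size_t msg_size;             /* payload capacity of each message box */
    struct demo_msg *head;       /* oldest message, received first */
    struct demo_msg *tail;       /* newest message */
    struct demo_msg *free_list;  /* unused message boxes */
};

/* The payload starts right after the header. */
static void *demo_payload(struct demo_msg *m) { return (void *)(m + 1); }

void demo_mq_init(struct demo_mq *mq, void *pool, size_t pool_size, size_t msg_size)
{
    size_t slot, count, i;
    msg_size = (msg_size + sizeof(void *) - 1) / sizeof(void *) * sizeof(void *);
    slot = sizeof(struct demo_msg) + msg_size;
    count = pool_size / slot;
    mq->msg_size = msg_size;
    mq->head = mq->tail = mq->free_list = NULL;
    for (i = 0; i < count; i++) {  /* push every box onto the free list */
        struct demo_msg *m = (struct demo_msg *)((uint8_t *)pool + i * slot);
        m->next = mq->free_list;
        mq->free_list = m;
    }
}

/* Pop a free box and copy the payload in; NULL when the queue is full. */
static struct demo_msg *demo_fill(struct demo_mq *mq, const void *buf, size_t size)
{
    struct demo_msg *m = mq->free_list;
    if (m == NULL)
        return NULL;
    mq->free_list = m->next;
    memcpy(demo_payload(m), buf, size);
    m->next = NULL;
    return m;
}

/* Normal send: append at the tail (FIFO). */
int demo_mq_send(struct demo_mq *mq, const void *buf, size_t size)
{
    struct demo_msg *m;
    if (size > mq->msg_size)
        return DEMO_ERROR;
    if ((m = demo_fill(mq, buf, size)) == NULL)
        return DEMO_EFULL;
    if (mq->tail != NULL)
        mq->tail->next = m;
    mq->tail = m;
    if (mq->head == NULL)
        mq->head = m;
    return DEMO_EOK;
}

/* Urgent send: insert at the head (LIFO), so it is received next. */
int demo_mq_urgent(struct demo_mq *mq, const void *buf, size_t size)
{
    struct demo_msg *m;
    if (size > mq->msg_size)
        return DEMO_ERROR;
    if ((m = demo_fill(mq, buf, size)) == NULL)
        return DEMO_EFULL;
    m->next = mq->head;
    mq->head = m;
    if (mq->tail == NULL)
        mq->tail = m;
    return DEMO_EOK;
}

/* Receive: take the head, copy out at most msg_size bytes, recycle the box. */
int demo_mq_recv(struct demo_mq *mq, void *buf, size_t size)
{
    struct demo_msg *m = mq->head;
    if (m == NULL)
        return DEMO_EEMPTY;
    mq->head = m->next;
    if (mq->head == NULL)
        mq->tail = NULL;
    memcpy(buf, demo_payload(m), size > mq->msg_size ? mq->msg_size : size);
    m->next = mq->free_list;
    mq->free_list = m;
    return DEMO_EOK;
}
```

If 1 and 2 are sent normally and 3 is then sent as urgent, a receiver reads 3, 1, 2: the urgent message jumps the queue while the others keep their FIFO order.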

The message queue control function rt_mq_control provides a message queue reset command. Resetting wakes up the suspended threads and returns all messages in the queue to the free list. The function prototype is as follows:

// rt-thread-4.0.1\src\ipc.c

/**

* This function can get or set some extra attributions of a message queue

* object.

*

* @param mq the message queue object

* @param cmd the execution command

* @param arg the execution argument

*

* @return the error code

*/

rt_err_t rt_mq_control(rt_mq_t mq, int cmd, void *arg);

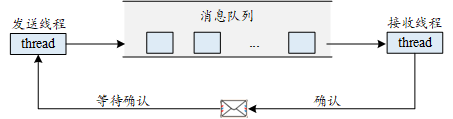

Message queues can be used wherever messages of varying length need to be sent, including message exchange between threads and messages sent from interrupt service routines to threads (interrupt service routines cannot receive messages). The use of message queues is described below in two parts: sending messages and synchronizing messages.

- Sending messages: The obvious difference between message queues and mailboxes is that message length is not limited to 4 bytes; in addition, message queues provide an interface for sending urgent messages. If a message queue is created with a maximum message length of 4 bytes, it degenerates into a mailbox. A message queue copies the message content itself, so it is equivalent to a mailbox plus a memory pool: to deliver messages longer than 4 bytes with a mailbox, the user must allocate and maintain memory pool space, whereas a message queue spares the user from dynamically allocating memory for messages and from worrying about releasing that memory afterwards;

- Synchronizing messages: In system design we often need to send a synchronous message. Different implementations can be chosen depending on the situation; between two threads, a [message queue + semaphore or mailbox] combination can be used. The sending thread sends the message to the message queue and, after sending it, expects an acknowledgement from the receiving thread. The working principle is shown in the schematic diagram.

Using a mailbox as the acknowledgement flag lets the receiving thread report status values back to the sending thread, whereas a semaphore used as the acknowledgement flag can only tell the sending thread one thing: that the message has been received.

3.3 Signal Object Management

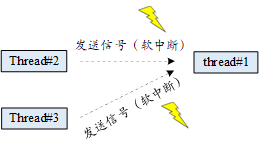

A signal (also called a soft interrupt signal) simulates the interrupt mechanism in software. In principle, a thread receiving a signal is similar to a processor receiving an interrupt request.

In RT-Thread, signals are used for asynchronous communication. The POSIX standard defines the sigset_t type for signal sets, but different systems may define sigset_t differently. RT-Thread defines it as unsigned long, named rt_sigset_t, for use by applications. The signals available to applications are SIGUSR1 and SIGUSR2.

Signals are essentially soft interrupts, used to notify a thread of asynchronous events and to notify and handle exceptions between threads. A thread does not need to perform any operation to wait for a signal; in fact, it does not know when a signal will arrive. A thread sends a signal to another thread by calling rt_thread_kill().

A thread that receives a signal can handle each signal in one of three ways:

- Like an interrupt handler: the thread specifies a handler function for each signal it needs to process.

- Ignore the signal: it is not processed, as if it had never occurred.

- Keep the system's default handling for the signal.

As shown in the figure, suppose Thread 1 needs to handle a signal. Thread 1 first installs the signal and unmasks it, setting up a handler for the signal at the same time. Other threads can then send the signal to Thread 1, triggering Thread 1 to handle it.

When the signal is delivered to Thread 1, if Thread 1 is suspended, its state is changed to ready so that it can handle the signal. If it is running, a new stack frame is created on top of its current thread stack to handle the signal. Note that this increases the amount of thread stack used.
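The sequence above (install, unmask, send, handle) and the three handling methods can be modelled with a few bit operations. This is a hypothetical single-threaded sketch, not RT-Thread's signal.c: demo_thread, the demo_* functions and DEMO_SIG_IGN/DEMO_SIG_DFL are made up for illustration, the "default action" just increments a counter, and a set bit in sig_mask means "masked" in this sketch (the kernel's own bit convention may differ). Sending only marks a signal pending; a pending signal is delivered once it is unmasked, much like a pending interrupt line that has been enabled.

```c
#include <stdint.h>

#define DEMO_SIG_MAX 32

typedef void (*demo_sighandler_t)(int signo);

/* Hypothetical stand-ins for SIG_DFL / SIG_IGN. */
#define DEMO_SIG_DFL ((demo_sighandler_t)0)
#define DEMO_SIG_IGN ((demo_sighandler_t)1)

/* Hypothetical per-thread signal state, loosely modelled on rt_thread's sig_* members. */
struct demo_thread {
    uint32_t sig_pending;                        /* bit n set: signal n is pending */
    uint32_t sig_mask;                           /* bit n set: signal n is masked (sketch convention) */
    demo_sighandler_t sig_vectors[DEMO_SIG_MAX]; /* handler table, like an interrupt vector table */
    int default_hits;                            /* counts default actions (demo only) */
};

static uint32_t demo_bit(int signo) { return (uint32_t)1 << signo; }

void demo_thread_init(struct demo_thread *t)
{
    int i;
    t->sig_pending = 0;
    t->sig_mask = 0xFFFFFFFFu;  /* everything masked until the thread unmasks it */
    t->default_hits = 0;
    for (i = 0; i < DEMO_SIG_MAX; i++)
        t->sig_vectors[i] = DEMO_SIG_DFL;
}

/* Install a handler and return the previous one. */
demo_sighandler_t demo_signal_install(struct demo_thread *t, int signo, demo_sighandler_t handler)
{
    demo_sighandler_t old = t->sig_vectors[signo];
    t->sig_vectors[signo] = handler;
    return old;
}

void demo_signal_mask(struct demo_thread *t, int signo)   { t->sig_mask |= demo_bit(signo); }
void demo_signal_unmask(struct demo_thread *t, int signo) { t->sig_mask &= ~demo_bit(signo); }

/* "Kill" only records the signal as pending; delivery happens later. */
void demo_thread_kill(struct demo_thread *t, int signo) { t->sig_pending |= demo_bit(signo); }

/* Deliver every signal that is both pending and unmasked; return how many were delivered. */
int demo_thread_handle_sig(struct demo_thread *t)
{
    uint32_t ready = t->sig_pending & ~t->sig_mask;
    int signo, delivered = 0;

    for (signo = 0; signo < DEMO_SIG_MAX; signo++) {
        demo_sighandler_t h;
        if ((ready & demo_bit(signo)) == 0)
            continue;
        t->sig_pending &= ~demo_bit(signo);  /* consume the pending bit */
        h = t->sig_vectors[signo];
        if (h == DEMO_SIG_DFL)
            t->default_hits++;               /* stand-in for the system default action */
        else if (h != DEMO_SIG_IGN)
            h(signo);                        /* user-defined handler */
        /* DEMO_SIG_IGN: the signal is consumed and nothing else happens */
        delivered++;
    }
    return delivered;
}

/* Example user handler used in the usage check. */
int demo_handled_count = 0;
void demo_count_handler(int signo) { (void)signo; demo_handled_count++; }
```

A signal sent while still masked stays pending and is delivered on the first dispatch after it is unmasked, which mirrors the requirement that Thread 1 unmask the signal before it can react to it.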

- Signal Data Structure

Unlike the previous five kernel objects, signals have no dedicated object structure: they do not inherit from the IPC object and have no control block of their own. Signal management relies mainly on several members of the thread object rt_thread. First, look at the signal-related members of rt_thread:

// rt-thread-4.0.1\include\rtdef.h

/**

* @addtogroup Signal

*/

#ifdef RT_USING_SIGNALS

#include <libc/libc_signal.h>

typedef unsigned long rt_sigset_t;

typedef void (*rt_sighandler_t)(int signo);

typedef siginfo_t rt_siginfo_t;

#define RT_SIG_MAX 32

#endif

/**

* Thread structure

*/

struct rt_thread

{

......

#if defined(RT_USING_SIGNALS)

rt_sigset_t sig_pending; /**< the pending signals */

rt_sigset_t sig_mask; /**< the mask bits of signal */

#ifndef RT_USING_SMP

void *sig_ret; /**< the return stack pointer from signal */

#endif

rt_sighandler_t *sig_vectors; /**< vectors of signal handler */

void *si_list; /**< the signal infor list */

#endif

......

};

typedef struct rt_thread *rt_thread_t;

#define RT_THREAD_STAT_SIGNAL 0x10 /**< task hold signals */

#define RT_THREAD_STAT_SIGNAL_READY (RT_THREAD_STAT_SIGNAL | RT_THREAD_READY)

#define RT_THREAD_STAT_SIGNAL_WAIT 0x20 /**< task is waiting for signals */

#define RT_THREAD_STAT_SIGNAL_PENDING 0x40 /**< signals is held and it has not been procressed */

#define RT_THREAD_STAT_SIGNAL_MASK 0xf0

When the conditional macro RT_USING_SIGNALS is enabled, rt_thread gains the signal members shown above (sig_ret exists only on non-SMP builds, i.e. without symmetric multiprocessing). rt_thread.sig_pending holds the pending signals, which can be understood by analogy with PendSV introduced in the earlier article on interrupts.

rt_thread.sig_mask holds the signal mask bits, analogous to the interrupt mask registers (PRIMASK, FAULTMASK, BASEPRI); rt_thread.sig_vectors stores the addresses of the signal (soft interrupt) handlers, analogous to the interrupt vector table; and rt_thread.si_list points to the signal information list, which stores the addresses of signal information structures. What information does a signal carry? Look at the source of the signal information structure below:

// rt-thread-4.0.1\include\libc\libc_signal.h

struct siginfo

{

rt_uint16_t si_signo;

rt_uint16_t si_code;

union sigval si_value;

};

typedef struct siginfo siginfo_t;

union sigval

{

int sival_int; /* Integer signal value */

void *sival_ptr; /* Pointer signal value */

};

#define SI_USER 0x01 /* Signal sent by kill(). */

#define SI_QUEUE 0x02 /* Signal sent by sigqueue(). */

#define SI_TIMER 0x03 /* Signal generated by expiration of a

timer set by timer_settime(). */

......

/* #define SIGUSR1 25 */

/* #define SIGUSR2 26 */

#define SIGRTMIN 27

#define SIGRTMAX 31

#define NSIG 32

// rt-thread-4.0.1\src\signal.c

struct siginfo_node

{

siginfo_t si;

struct rt_slist_node list;

};

The signal information structure siginfo has three members. siginfo.si_signo is the signal number, analogous to an interrupt number; the signals user applications can use are SIGUSR1 and SIGUSR2 (for the ARMGCC/ARMCLANG compilers, the definitions of these two signal numbers are commented out in this header, and the RT-Thread developers' intent here is unclear). siginfo.si_code is the signal code type, and SI_USER is the code type available to user applications. siginfo.si_value is the signal value, which can be an integer or a pointer. Whether to use si_code (SI_USER) or si_value depends on the need.

To organize siginfo structures into a list, siginfo_node wraps each one in a node of a singly linked list. The thread object member rt_thread.si_list introduced earlier points to siginfo_node.

If a thread object uses signals, rt_thread.stat gets four additional states to assist signal management: RT_THREAD_STAT_SIGNAL, the thread holds signals; RT_THREAD_STAT_SIGNAL_READY, the thread holds signals and is ready; RT_THREAD_STAT_SIGNAL_WAIT, the thread is waiting for signals; and RT_THREAD_STAT_SIGNAL_PENDING, the thread holds signals that have not yet been processed.
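The siginfo_node chain described above can be sketched as an ordinary singly linked list. The demo_* types copy the shape of siginfo and siginfo_node, but the helpers are hypothetical, and appending at the tail is this sketch's own choice rather than a claim about how rt_thread_kill inserts nodes. demo_si_take removes the first node carrying a given signal number, which is the kind of lookup needed to hand signal information back to a waiting thread.

```c
#include <stddef.h>
#include <stdint.h>

union demo_sigval {
    int sival_int;    /* integer signal value */
    void *sival_ptr;  /* pointer signal value */
};

/* Same fields as struct siginfo. */
struct demo_siginfo {
    uint16_t si_signo;
    uint16_t si_code;
    union demo_sigval si_value;
};

/* Singly linked node, same idea as struct siginfo_node. */
struct demo_siginfo_node {
    struct demo_siginfo si;
    struct demo_siginfo_node *next;
};

/* Append at the tail so signals are read back in arrival order. */
void demo_si_append(struct demo_siginfo_node **list, struct demo_siginfo_node *node)
{
    node->next = NULL;
    while (*list != NULL)
        list = &(*list)->next;
    *list = node;
}

/* Unlink and return the first node carrying signo, or NULL if there is none. */
struct demo_siginfo_node *demo_si_take(struct demo_siginfo_node **list, int signo)
{
    while (*list != NULL) {
        struct demo_siginfo_node *n = *list;
        if (n->si.si_signo == signo) {
            *list = n->next;
            n->next = NULL;
            return n;
        }
        list = &n->next;
    }
    return NULL;
}
```

Walking the list through a pointer-to-pointer lets the same code unlink the first node and an inner node without a special case for the list head.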

- Signal Object Interface Function

Since signals do not inherit from rt_ipc_object or rt_object, their interface functions differ from those of the previous five objects. First, look at the signal system initialization function:

// rt-thread-4.0.1\src\signal.c

int rt_system_signal_init(void)

{

_rt_siginfo_pool = rt_mp_create("signal", RT_SIG_INFO_MAX, sizeof(struct siginfo_node));

if (_rt_siginfo_pool == RT_NULL)

{

LOG_E("create memory pool for signal info failed.");

RT_ASSERT(0);

}

return 0;

}

void rt_thread_alloc_sig(rt_thread_t tid);

void rt_thread_free_sig(rt_thread_t tid);

void rt_thread_handle_sig(rt_bool_t clean_state);

// rt-thread-4.0.1\src\components.c

int rtthread_startup(void)

{

......

#ifdef RT_USING_SIGNALS

/* signal system initialization */

rt_system_signal_init();

#endif

......

}

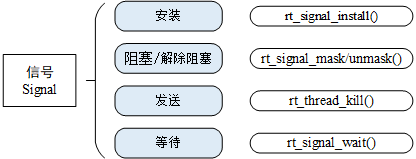

The signal system initialization function rt_system_signal_init is called from the system startup function rtthread_startup, and it creates the memory pool for signal information. Allocating, releasing and handling signals are done by other system interface functions, so applications do not need to call them directly. Next, look at the signal interfaces commonly used by applications: installing a signal, masking a signal, unmasking a signal, sending a signal, and waiting for a signal.

Installing a signal: a thread that wants to handle a signal must first install it. Installing a signal establishes the mapping between the signal number and the thread's action for that signal (the signal handler), that is, which signal the thread handles and what it does when that signal is delivered to it. This function also calls the signal allocation function rt_thread_alloc_sig mentioned above. Its prototype is as follows:

// rt-thread-4.0.1\src\signal.c

/**

* This function can establish the mapping between signal number and signal handler.

*

* @param signo: signal number, Only SIGUSR1 and SIGUSR2 are open to the user.

* @param handler: signal handler, Three processing methods: user-defined processing function,

* SIG_IGN(ignore a signal),SIG_DFL(call _signal_default_handler());

*

* @return The handler value before the signal is installed or SIG_ERR.

*/

rt_sighandler_t rt_signal_install(int signo, rt_sighandler_t handler);

Masking/unmasking a signal: just as interrupts can be masked and unmasked, signals (software-simulated interrupts) have interface functions for masking and unmasking. The prototypes are as follows:

// rt-thread-4.0.1\src\signal.c

/**

* This function can mask the specified signal.

* @param signo: signal number, Only SIGUSR1 and SIGUSR2 are open to the user.

*/

void rt_signal_mask(int signo);

/**

* This function can unmask the specified signal.

* @param signo: signal number, Only SIGUSR1 and SIGUSR2 are open to the user.

*/

void rt_signal_unmask(int signo);

Sending/waiting for a signal: when exception handling is required, a signal can be sent to a thread that has set up a handler for it. rt_thread_kill() sends a signal to any thread. rt_signal_wait waits for a signal in the given set; if none has arrived, the thread suspends until the signal arrives or the specified timeout expires. If the signal arrives, its information is stored in si. The prototypes of these two functions are as follows:

// rt-thread-4.0.1\src\signal.c

/**

* This function can send a signal to the specified thread.

*

* @param tid: thread receiving signal;

* @param sig: signal number to be sent;

*

* @return RT_EOK or -RT_EINVAL.

*/

int rt_thread_kill(rt_thread_t tid, int sig);

/**

* This function will wait for a signal set.

*

* @param set: specify the set of signals to wait for;

* @param si: pointer to store waiting for signal information;

* @param timeout: specified waiting time;

*

* @return RT_EOK or -RT_ETIMEOUT or -RT_EINVAL.

*/

int rt_signal_wait(const rt_sigset_t *set, rt_siginfo_t *si, rt_int32_t timeout);