1. rt_system_signal_init source

```c
int rt_system_signal_init(void)
{
    _rt_siginfo_pool = rt_mp_create("signal", RT_SIG_INFO_MAX, sizeof(struct siginfo_node));
    if (_rt_siginfo_pool == RT_NULL)
    {
        dbg_log(DBG_ERROR, "create memory pool for signal info failed.\n");
        RT_ASSERT(0);
    }

    return 0;
}
```

2. rt_system_signal_init analysis

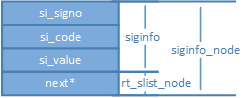

1. siginfo_node structure overview

2. rt_mp_create analysis

0, Source Code

```c
rt_mp_t rt_mp_create(const char *name,        // "signal"
                     rt_size_t   block_count, // RT_SIG_INFO_MAX
                     rt_size_t   block_size)  // sizeof(struct siginfo_node)
{
    rt_uint8_t *block_ptr;
    struct rt_mempool *mp;
    register rt_size_t offset;

    RT_DEBUG_NOT_IN_INTERRUPT;

    /* allocate object */
    mp = (struct rt_mempool *)rt_object_allocate(RT_Object_Class_MemPool, name);
    /* allocate object failed */
    if (mp == RT_NULL)
        return RT_NULL;

    /* initialize memory pool */
    block_size     = RT_ALIGN(block_size, RT_ALIGN_SIZE);
    mp->block_size = block_size;
    mp->size       = (block_size + sizeof(rt_uint8_t *)) * block_count;

    /* allocate memory */
    mp->start_address = rt_malloc((block_size + sizeof(rt_uint8_t *)) *
                                  block_count);
    if (mp->start_address == RT_NULL)
    {
        /* no memory, delete memory pool object */
        rt_object_delete(&(mp->parent));

        return RT_NULL;
    }

    mp->block_total_count = block_count;
    mp->block_free_count  = mp->block_total_count;

    /* initialize suspended thread list */
    rt_list_init(&(mp->suspend_thread));
    mp->suspend_thread_count = 0;

    /* initialize free block list */
    block_ptr = (rt_uint8_t *)mp->start_address;
    for (offset = 0; offset < mp->block_total_count; offset ++)
    {
        *(rt_uint8_t **)(block_ptr + offset * (block_size + sizeof(rt_uint8_t *)))
            = block_ptr + (offset + 1) * (block_size + sizeof(rt_uint8_t *));
    }

    *(rt_uint8_t **)(block_ptr + (offset - 1) * (block_size + sizeof(rt_uint8_t *)))
        = RT_NULL;

    mp->block_list = block_ptr;

    return mp;
}
RTM_EXPORT(rt_mp_create);
```

1. rt_mempool structure overview

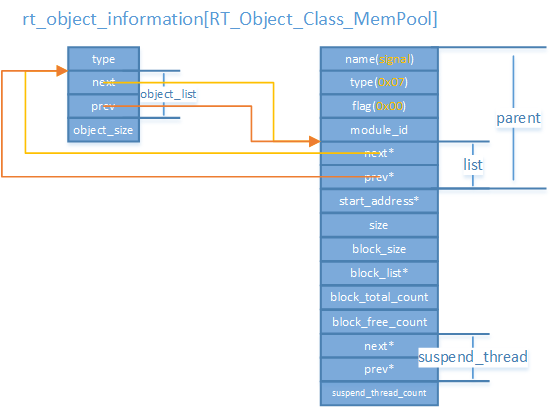

2,rt_object_allocate

```c
// type - RT_Object_Class_MemPool
// name - "signal"
rt_object_t rt_object_allocate(enum rt_object_class_type type, const char *name)
{
    struct rt_object *object;
    register rt_base_t temp;
    struct rt_object_information *information;
#ifdef RT_USING_MODULE
    struct rt_dlmodule *module = dlmodule_self();
#endif

    RT_DEBUG_NOT_IN_INTERRUPT;

    /* get object information */
    information = rt_object_get_information(type);
    RT_ASSERT(information != RT_NULL);

    object = (struct rt_object *)RT_KERNEL_MALLOC(information->object_size);
    if (object == RT_NULL)
    {
        /* no memory can be allocated */
        return RT_NULL;
    }

    /* clean memory data of object */
    rt_memset(object, 0x0, information->object_size);

    /* initialize object's parameters */

    /* set object type */
    object->type = type;

    /* set object flag */
    object->flag = 0;

    /* copy name */
    rt_strncpy(object->name, name, RT_NAME_MAX);

    RT_OBJECT_HOOK_CALL(rt_object_attach_hook, (object));

    /* lock interrupt */
    temp = rt_hw_interrupt_disable();

#ifdef RT_USING_MODULE
    if (module)
    {
        rt_list_insert_after(&(module->object_list), &(object->list));
        object->module_id = (void *)module;
    }
    else
#endif
    {
        /* insert object into information object list */
        rt_list_insert_after(&(information->object_list), &(object->list));
    }

    /* unlock interrupt */
    rt_hw_interrupt_enable(temp);

    /* return object */
    return object;
}
```

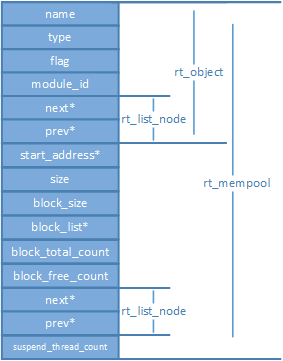

1,rt_object_get_information

```c
information = rt_object_get_information(type);
```

which, for this call, is equivalent to

```c
information = rt_object_get_information(RT_Object_Class_MemPool);
```

2,RT_KERNEL_MALLOC

```c
#define RT_KERNEL_MALLOC(sz) rt_malloc(sz)

void *rt_malloc(rt_size_t size)
{
    rt_size_t ptr, ptr2;
    struct heap_mem *mem, *mem2;

    RT_DEBUG_NOT_IN_INTERRUPT;

    if (size == 0)
        return RT_NULL;

    // RT_ALIGN rounds size up to a multiple of RT_ALIGN_SIZE
    if (size != RT_ALIGN(size, RT_ALIGN_SIZE))
        RT_DEBUG_LOG(RT_DEBUG_MEM, ("malloc size %d, but align to %d\n",
                                    size, RT_ALIGN(size, RT_ALIGN_SIZE)));
    else
        RT_DEBUG_LOG(RT_DEBUG_MEM, ("malloc size %d\n", size));

    /* alignment size */
    size = RT_ALIGN(size, RT_ALIGN_SIZE);

    // mem_size_aligned is the usable heap size computed by rt_system_heap_init
    if (size > mem_size_aligned)
    {
        RT_DEBUG_LOG(RT_DEBUG_MEM, ("no memory\n"));

        return RT_NULL;
    }

    // enforce the minimum allocation size
    /* every data block must be at least MIN_SIZE_ALIGNED long */
    if (size < MIN_SIZE_ALIGNED)
        size = MIN_SIZE_ALIGNED;

    /* take memory semaphore */
    rt_sem_take(&heap_sem, RT_WAITING_FOREVER);

    // ptr is the byte offset of a block header from heap_ptr; the search starts
    // at lfree, the lowest free block (equal to heap_ptr right after init)
    for (ptr = (rt_uint8_t *)lfree - heap_ptr;
         ptr < mem_size_aligned - size;
         ptr = ((struct heap_mem *)&heap_ptr[ptr])->next)
    {
        mem = (struct heap_mem *)&heap_ptr[ptr];

        // mem->next - (ptr + SIZEOF_STRUCT_MEM) is this block's user data size;
        // the block qualifies if it is free and that size is at least size
        if ((!mem->used) && (mem->next - (ptr + SIZEOF_STRUCT_MEM)) >= size)
        {
            /* mem is not used and at least perfect fit is possible:
             * mem->next - (ptr + SIZEOF_STRUCT_MEM) gives us the 'user data size' of mem */

            // split only if the leftover can hold another header plus MIN_SIZE_ALIGNED bytes
            if (mem->next - (ptr + SIZEOF_STRUCT_MEM) >=
                (size + SIZEOF_STRUCT_MEM + MIN_SIZE_ALIGNED))
            {
                /* (in addition to the above, we test if another struct heap_mem (SIZEOF_STRUCT_MEM) containing
                 * at least MIN_SIZE_ALIGNED of data also fits in the 'user data space' of 'mem')
                 * -> split large block, create empty remainder,
                 * remainder must be large enough to contain MIN_SIZE_ALIGNED data: if
                 * mem->next - (ptr + (2*SIZEOF_STRUCT_MEM)) == size,
                 * struct heap_mem would fit in but no data between mem2 and mem2->next
                 * @todo we could leave out MIN_SIZE_ALIGNED. We would create an empty
                 *       region that couldn't hold data, but when mem->next gets freed,
                 *       the 2 regions would be combined, resulting in more free memory
                 */
                ptr2 = ptr + SIZEOF_STRUCT_MEM + size;

                /* create mem2 struct */
                mem2       = (struct heap_mem *)&heap_ptr[ptr2];
                mem2->magic = HEAP_MAGIC;
                mem2->used = 0;
                mem2->next = mem->next;
                mem2->prev = ptr;
#ifdef RT_USING_MEMTRACE
                rt_mem_setname(mem2, "   ");
#endif

                /* and insert it between mem and mem->next */
                mem->next = ptr2;
                mem->used = 1;

                if (mem2->next != mem_size_aligned + SIZEOF_STRUCT_MEM)
                {
                    ((struct heap_mem *)&heap_ptr[mem2->next])->prev = ptr2;
                }
#ifdef RT_MEM_STATS
                used_mem += (size + SIZEOF_STRUCT_MEM);
                if (max_mem < used_mem)
                    max_mem = used_mem;
#endif
            }
            else
            {
                /* (a mem2 struct does no fit into the user data space of mem and mem->next will always
                 * be used at this point: if not we have 2 unused structs in a row, plug_holes should have
                 * take care of this).
                 * -> near fit or excact fit: do not split, no mem2 creation
                 * also can't move mem->next directly behind mem, since mem->next
                 * will always be used at this point!
                 */
                mem->used = 1;
#ifdef RT_MEM_STATS
                used_mem += mem->next - ((rt_uint8_t *)mem - heap_ptr);
                if (max_mem < used_mem)
                    max_mem = used_mem;
#endif
            }
            /* set memory block magic */
            mem->magic = HEAP_MAGIC;
#ifdef RT_USING_MEMTRACE
            if (rt_thread_self())
                rt_mem_setname(mem, rt_thread_self()->name);
            else
                rt_mem_setname(mem, "NONE");
#endif

            if (mem == lfree)
            {
                /* Find next free block after mem and update lowest free pointer */
                while (lfree->used && lfree != heap_end)
                    lfree = (struct heap_mem *)&heap_ptr[lfree->next];

                RT_ASSERT(((lfree == heap_end) || (!lfree->used)));
            }

            rt_sem_release(&heap_sem);
            RT_ASSERT((rt_uint32_t)mem + SIZEOF_STRUCT_MEM + size <= (rt_uint32_t)heap_end);
            RT_ASSERT((rt_uint32_t)((rt_uint8_t *)mem + SIZEOF_STRUCT_MEM) % RT_ALIGN_SIZE == 0);
            RT_ASSERT((((rt_uint32_t)mem) & (RT_ALIGN_SIZE - 1)) == 0);

            RT_DEBUG_LOG(RT_DEBUG_MEM,
                         ("allocate memory at 0x%x, size: %d\n",
                          (rt_uint32_t)((rt_uint8_t *)mem + SIZEOF_STRUCT_MEM),
                          (rt_uint32_t)(mem->next - ((rt_uint8_t *)mem - heap_ptr))));

            RT_OBJECT_HOOK_CALL(rt_malloc_hook,
                                (((void *)((rt_uint8_t *)mem + SIZEOF_STRUCT_MEM)), size));

            /* return the memory data except mem struct */
            return (rt_uint8_t *)mem + SIZEOF_STRUCT_MEM;
        }
    }

    rt_sem_release(&heap_sem);

    return RT_NULL;
}
```

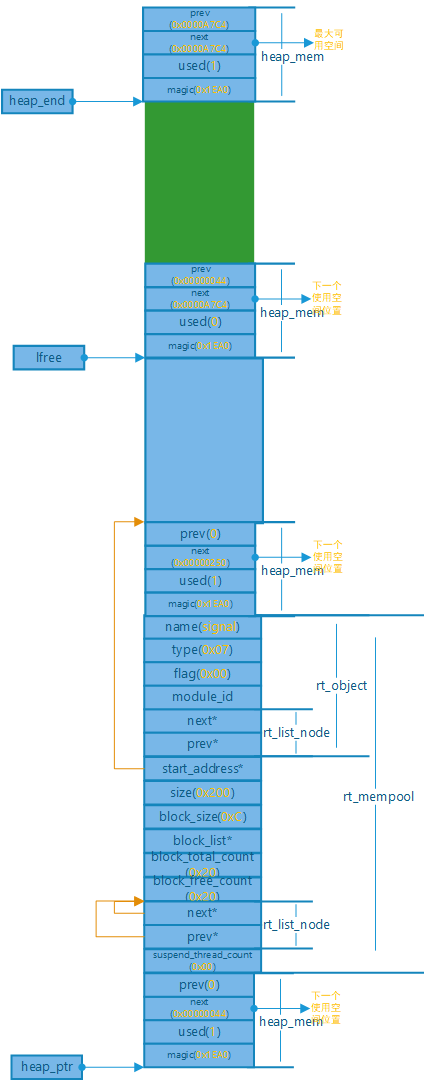

Role: allocate sizeof(struct rt_mempool) bytes of space on the heap.

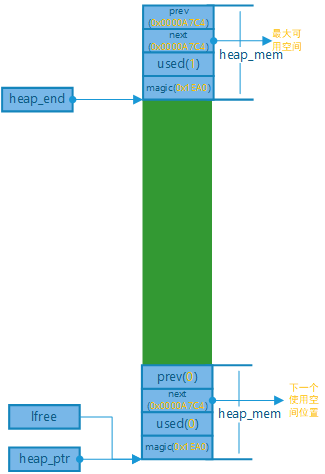

0, Heap space after rt_system_heap_init initialization

1,RT_ALIGN

```c
/**
 * @ingroup BasicDef
 *
 * @def RT_ALIGN(size, align)
 * Return the most contiguous size aligned at specified width. RT_ALIGN(13, 4)
 * would return 16.
 */
#define RT_ALIGN(size, align) (((size) + (align) - 1) & ~((align) - 1))
```

Rounds size up to the nearest multiple of align. The bit mask only gives the right answer when align is a power of two.
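To see the rounding concretely, here is a small standalone sketch that copies the RT_ALIGN definition above and compares it with a plain division-based round-up. The helper align_up_div is hypothetical and exists only for comparison; the last check shows why align must be a power of two for the mask trick to work.

```c
#include <stdint.h>

/* Same definition as RT_ALIGN above: round size up to a multiple of align.
 * Correct only when align is a power of two. */
#define RT_ALIGN(size, align) (((size) + (align) - 1) & ~((align) - 1))

/* Hypothetical helper for comparison: ceil(size / align) * align,
 * which works for any non-zero align. */
static uint32_t align_up_div(uint32_t size, uint32_t align)
{
    return ((size + align - 1) / align) * align;
}
```

For example, RT_ALIGN(13, 4) gives 16, matching the doc comment. With align = 3 the mask version gives 5 for RT_ALIGN(5, 3), while the division version gives 6.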

2,mem_size_aligned

Total usable heap size.

mem_size_aligned is initialized in rt_system_heap_init, which is called from rt_hw_board_init.

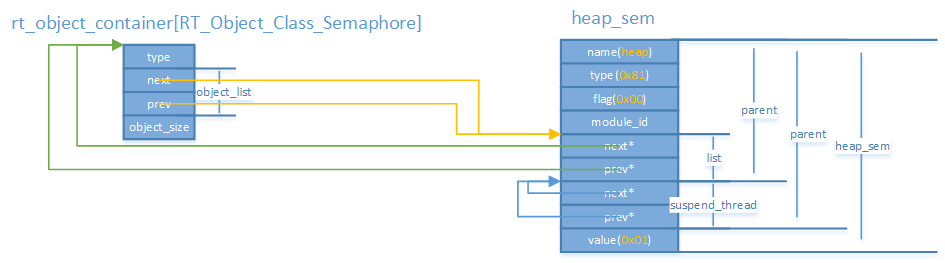

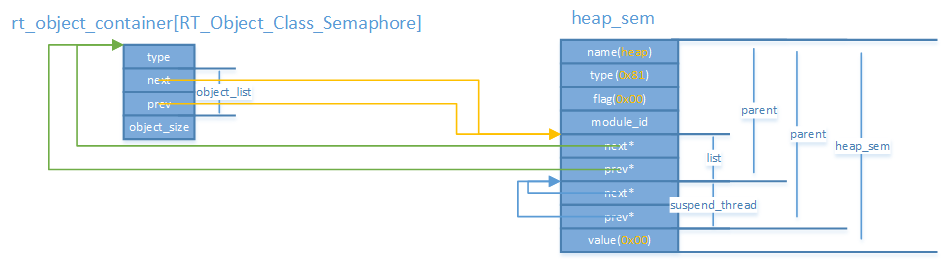

3,heap_sem

heap_sem is also initialized in rt_system_heap_init, which is called from rt_hw_board_init.

4,rt_sem_take

Takes the heap semaphore, which serializes access to the heap.

```c
rt_err_t rt_sem_take(rt_sem_t sem, rt_int32_t time)
{
    register rt_base_t temp;
    struct rt_thread *thread;

    /* parameter check */
    RT_ASSERT(sem != RT_NULL);
    RT_ASSERT(rt_object_get_type(&sem->parent.parent) == RT_Object_Class_Semaphore);

    RT_OBJECT_HOOK_CALL(rt_object_trytake_hook, (&(sem->parent.parent)));

    /* disable interrupt */
    temp = rt_hw_interrupt_disable();

    RT_DEBUG_LOG(RT_DEBUG_IPC, ("thread %s take sem:%s, which value is: %d\n",
                                rt_thread_self()->name,
                                ((struct rt_object *)sem)->name,
                                sem->value));

    if (sem->value > 0)
    {
        /* semaphore is available */
        sem->value --;

        /* enable interrupt */
        rt_hw_interrupt_enable(temp);
    }
    else
    {
        /* no waiting, return with timeout */
        if (time == 0)
        {
            rt_hw_interrupt_enable(temp);

            return -RT_ETIMEOUT;
        }
        else
        {
            /* current context checking */
            RT_DEBUG_IN_THREAD_CONTEXT;

            /* semaphore is unavailable, push to suspend list */
            /* get current thread */
            thread = rt_thread_self();

            /* reset thread error number */
            thread->error = RT_EOK;

            RT_DEBUG_LOG(RT_DEBUG_IPC, ("sem take: suspend thread - %s\n",
                                        thread->name));

            /* suspend thread */
            rt_ipc_list_suspend(&(sem->parent.suspend_thread),
                                thread,
                                sem->parent.parent.flag);

            /* has waiting time, start thread timer */
            if (time > 0)
            {
                RT_DEBUG_LOG(RT_DEBUG_IPC, ("set thread:%s to timer list\n",
                                            thread->name));

                /* reset the timeout of thread timer and start it */
                rt_timer_control(&(thread->thread_timer),
                                 RT_TIMER_CTRL_SET_TIME,
                                 &time);
                rt_timer_start(&(thread->thread_timer));
            }

            /* enable interrupt */
            rt_hw_interrupt_enable(temp);

            /* do schedule */
            rt_schedule();

            if (thread->error != RT_EOK)
            {
                return thread->error;
            }
        }
    }

    RT_OBJECT_HOOK_CALL(rt_object_take_hook, (&(sem->parent.parent)));

    return RT_EOK;
}
RTM_EXPORT(rt_sem_take);
```

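Because heap_sem is available here (its value is greater than 0), only the sem->value > 0 branch runs: sem->value is decremented and the function returns RT_EOK without suspending the caller.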

5,heap_ptr

```c
static rt_uint8_t *heap_ptr;
```

heap_ptr is initialized in rt_system_heap_init, which is called from rt_hw_board_init. It is set to the start of the heap.

6,lfree

```c
static struct heap_mem *lfree;
```

lfree is also initialized in rt_system_heap_init. It points to the lowest free block in the heap.

Note: right after rt_system_heap_init runs, heap_ptr and lfree point to the same address.

7,size

Here size equals sizeof(struct rt_mempool), the object_size that rt_object_allocate passes in.

8,SIZEOF_STRUCT_MEM

```c
#define SIZEOF_STRUCT_MEM    RT_ALIGN(sizeof(struct heap_mem), RT_ALIGN_SIZE)
```

9,used_mem,max_mem

```c
static rt_size_t used_mem, max_mem;
```

used_mem: number of heap bytes currently in use

max_mem: peak heap usage

10. Allocate memory space and initialize

```c
for (ptr = (rt_uint8_t *)lfree - heap_ptr;
     ptr < mem_size_aligned - size;
     ptr = ((struct heap_mem *)&heap_ptr[ptr])->next)
```

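ptr = (rt_uint8_t *)lfree - heap_ptr: ptr starts at the byte offset of lfree, the lowest free block, measured from the start of the heap.

ptr < mem_size_aligned - size: stops the search once no block starting at ptr could still hold size bytes.

ptr = ((struct heap_mem *)&heap_ptr[ptr])->next: advances to the header of the next block.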

```c
mem = (struct heap_mem *)&heap_ptr[ptr];
```

heap_ptr is an rt_uint8_t pointer and ptr is a byte offset, so &heap_ptr[ptr] is the address of the block header at that offset. On the first iteration this is the first free block.

```c
if ((!mem->used) && (mem->next - (ptr + SIZEOF_STRUCT_MEM)) >= size)
```

mem->used records whether the block is in use: 0 means free, 1 means used.

rt_system_heap_init marks the sentinel block at the end of the heap (heap_end) as used. Right after initialization, the first block's mem->next therefore holds the offset of that last block.

mem->next - (ptr + SIZEOF_STRUCT_MEM) >= size: the block's user data area, which runs from the end of its header to the next header, is large enough for size bytes.

```c
if (mem->next - (ptr + SIZEOF_STRUCT_MEM) >= (size + SIZEOF_STRUCT_MEM + MIN_SIZE_ALIGNED))
```

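Beyond the header and the size bytes requested, this checks whether the leftover space can hold another header (SIZEOF_STRUCT_MEM) plus at least MIN_SIZE_ALIGNED bytes of data. If it can, the block is split. Otherwise the whole block is handed out unsplit.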

```c
ptr2 = ptr + SIZEOF_STRUCT_MEM + size;

/* create mem2 struct */
mem2 = (struct heap_mem *)&heap_ptr[ptr2];
mem2->magic = HEAP_MAGIC;
mem2->used = 0;
mem2->next = mem->next;
mem2->prev = ptr;
```

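ptr2: offset of the new free block's header, placed immediately after the allocated region.

mem2: header of the remaining free space. It is marked unused and linked between mem and the block that used to follow mem.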

```c
mem->next = ptr2;
mem->used = 1;

used_mem += (size + SIZEOF_STRUCT_MEM);
if (max_mem < used_mem)
    max_mem = used_mem;

mem->magic = HEAP_MAGIC;
```

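These lines link mem to the new free block mem2, mark mem as used, update the usage statistics (only compiled in when RT_MEM_STATS is defined), and stamp the header with HEAP_MAGIC.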
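The first-fit search, the split rule, and the lfree update can be tried in isolation with the simplified sketch below. It is not RT-Thread code. The header layout (struct hdr), the helper names (heap_init, heap_malloc, at), and the sizes (4-byte alignment, 12-byte minimum, 256-byte heap) are all hypothetical. It omits the semaphore, magic values, and statistics, and keeps only the offset-linked headers, the used end sentinel, and the same split condition as rt_malloc.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical parameters for the sketch, not RT-Thread's configuration. */
#define SKETCH_ALIGN_SIZE 4u
#define SKETCH_ALIGN(sz, a) (((sz) + (a) - 1) & ~((size_t)(a) - 1))
#define MIN_SIZE   12u
#define HEAP_BYTES 256u

/* Simplified block header: neighbours are stored as byte offsets. */
struct hdr {
    uint32_t next;   /* offset of the next header */
    uint32_t prev;   /* offset of the previous header */
    uint32_t used;   /* 1 = allocated (or end sentinel), 0 = free */
};
#define HDR SKETCH_ALIGN(sizeof(struct hdr), SKETCH_ALIGN_SIZE)

static uint32_t heap_words[HEAP_BYTES / sizeof(uint32_t)]; /* 4-byte aligned */
static uint8_t *const heap = (uint8_t *)heap_words;
static size_t mem_size;   /* plays the role of mem_size_aligned */
static size_t lfree;      /* offset of the lowest free block */

static struct hdr *at(size_t off) { return (struct hdr *)&heap[off]; }

/* One big free block followed by a permanently "used" end sentinel. */
static void heap_init(void)
{
    size_t end = HEAP_BYTES - HDR;
    mem_size = HEAP_BYTES - 2 * HDR;
    at(0)->next = (uint32_t)end;
    at(0)->prev = 0;
    at(0)->used = 0;
    at(end)->next = at(end)->prev = (uint32_t)end;
    at(end)->used = 1;
    lfree = 0;
}

/* First fit starting at lfree; split when the leftover can hold a header
 * plus MIN_SIZE bytes, the same condition rt_malloc uses. */
static void *heap_malloc(size_t size)
{
    size_t ptr;

    if (size == 0)
        return NULL;
    size = SKETCH_ALIGN(size, SKETCH_ALIGN_SIZE);
    if (size > mem_size)
        return NULL;
    if (size < MIN_SIZE)
        size = MIN_SIZE;

    for (ptr = lfree; ptr < mem_size - size; ptr = at(ptr)->next) {
        struct hdr *mem = at(ptr);
        if (mem->used || mem->next - (ptr + HDR) < size)
            continue;                        /* in use or too small */

        if (mem->next - (ptr + HDR) >= size + HDR + MIN_SIZE) {
            size_t ptr2 = ptr + HDR + size;  /* tail becomes a new free block */
            struct hdr *mem2 = at(ptr2);
            mem2->used = 0;
            mem2->next = mem->next;
            mem2->prev = (uint32_t)ptr;
            mem->next = (uint32_t)ptr2;
            if (mem2->next != mem_size + HDR)
                at(mem2->next)->prev = (uint32_t)ptr2;
        }
        mem->used = 1;

        if (ptr == lfree) {
            /* Advance lfree past used blocks, stopping at the sentinel. */
            while (at(lfree)->used && lfree != mem_size + HDR)
                lfree = at(lfree)->next;
        }
        return &heap[ptr + HDR];             /* user data follows the header */
    }
    return NULL;
}
```

After heap_init, a 20-byte request is served from offset 0 and returns the address just past its header. The remainder becomes a free block at offset HDR + 20, and lfree moves there. A 1-byte request is then bumped up to MIN_SIZE and carved from that block.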

11. Update lfree pointer

```c
if (mem == lfree)
{
    /* Find next free block after mem and update lowest free pointer */
    while (lfree->used && lfree != heap_end)
        lfree = (struct heap_mem *)&heap_ptr[lfree->next];

    RT_ASSERT(((lfree == heap_end) || (!lfree->used)));
}
```

12,rt_sem_release

```c
rt_err_t rt_sem_release(rt_sem_t sem)
{
    register rt_base_t temp;
    register rt_bool_t need_schedule;

    /* parameter check */
    RT_ASSERT(sem != RT_NULL);
    RT_ASSERT(rt_object_get_type(&sem->parent.parent) == RT_Object_Class_Semaphore);

    RT_OBJECT_HOOK_CALL(rt_object_put_hook, (&(sem->parent.parent)));

    need_schedule = RT_FALSE;

    /* disable interrupt */
    temp = rt_hw_interrupt_disable();

    RT_DEBUG_LOG(RT_DEBUG_IPC, ("thread %s releases sem:%s, which value is: %d\n",
                                rt_thread_self()->name,
                                ((struct rt_object *)sem)->name,
                                sem->value));

    if (!rt_list_isempty(&sem->parent.suspend_thread))
    {
        /* resume the suspended thread */
        rt_ipc_list_resume(&(sem->parent.suspend_thread));
        need_schedule = RT_TRUE;
    }
    else
        sem->value ++; /* increase value */

    /* enable interrupt */
    rt_hw_interrupt_enable(temp);

    /* resume a thread, re-schedule */
    if (need_schedule == RT_TRUE)
        rt_schedule();

    return RT_EOK;
}
RTM_EXPORT(rt_sem_release);
```

rt_sem_release first checks whether any thread is suspended on the semaphore.

No thread is waiting on heap_sem here, so the only effect is that heap_sem.value is incremented by 1.
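The counting behaviour of the take/release pair can be modelled without a scheduler. The sketch below is hypothetical: struct sem_model, the MODEL_* codes, and the waiters counter (which stands in for the suspend_thread list) are illustrative names, not RT-Thread API. It covers only the branches that do not suspend a thread. The initial value of 1 for the heap semaphore is an assumption made for the example.

```c
#include <stdint.h>

/* Hypothetical model of the counting logic only: no interrupt masking,
 * suspend lists, timers, or scheduler. */
struct sem_model {
    uint16_t value;   /* like sem->value: number of available units */
    int waiters;      /* stands in for the length of suspend_thread */
};

enum { MODEL_OK = 0, MODEL_TIMEOUT = -1, MODEL_WOULD_BLOCK = -2 };

/* Mirrors the branches of rt_sem_take that do not suspend the caller. */
static int model_take(struct sem_model *s, int32_t time)
{
    if (s->value > 0) {
        s->value--;               /* semaphore available */
        return MODEL_OK;
    }
    if (time == 0)
        return MODEL_TIMEOUT;     /* caller asked not to wait */
    return MODEL_WOULD_BLOCK;     /* rt_sem_take would suspend here */
}

/* Mirrors rt_sem_release: resume one waiter if any, otherwise count up. */
static void model_release(struct sem_model *s)
{
    if (s->waiters > 0)
        s->waiters--;             /* the resumed thread consumes the unit */
    else
        s->value++;
}
```

Starting from a value of 1, a take drops it to 0 and the matching release restores it to 1, which is the net effect rt_malloc has on heap_sem. When a thread is waiting, a release resumes it and leaves value unchanged.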

13. Return the usable address, which starts just past the block header

```c
return (rt_uint8_t *)mem + SIZEOF_STRUCT_MEM;
```

14. RT_KERNEL_MALLOC execution results

The heap usage after RT_KERNEL_MALLOC executes is as follows:

3,rt_memset

```c
void *rt_memset(void *s, int c, rt_ubase_t count)
{
#ifdef RT_USING_TINY_SIZE
    char *xs = (char *)s;

    while (count--)
        *xs++ = c;

    return s;
#else
#define LBLOCKSIZE      (sizeof(rt_int32_t))
#define UNALIGNED(X)    ((rt_int32_t)X & (LBLOCKSIZE - 1))
#define TOO_SMALL(LEN)  ((LEN) < LBLOCKSIZE)

    int i;
    char *m = (char *)s;
    rt_uint32_t buffer;
    rt_uint32_t *aligned_addr;
    rt_uint32_t d = c & 0xff;

    if (!TOO_SMALL(count) && !UNALIGNED(s))
    {
        /* If we get this far, we know that n is large and m is word-aligned. */
        aligned_addr = (rt_uint32_t *)s;

        /* Store D into each char sized location in BUFFER so that
         * we can set large blocks quickly.
         */
        if (LBLOCKSIZE == 4)
        {
            buffer = (d << 8) | d;
            buffer |= (buffer << 16);
        }
        else
        {
            buffer = 0;
            for (i = 0; i < LBLOCKSIZE; i ++)
                buffer = (buffer << 8) | d;
        }

        while (count >= LBLOCKSIZE * 4)
        {
            *aligned_addr++ = buffer;
            *aligned_addr++ = buffer;
            *aligned_addr++ = buffer;
            *aligned_addr++ = buffer;
            count -= 4 * LBLOCKSIZE;
        }

        while (count >= LBLOCKSIZE)
        {
            *aligned_addr++ = buffer;
            count -= LBLOCKSIZE;
        }

        /* Pick up the remainder with a bytewise loop. */
        m = (char *)aligned_addr;
    }

    while (count--)
    {
        *m++ = (char)d;
    }

    return s;

#undef LBLOCKSIZE
#undef UNALIGNED
#undef TOO_SMALL
#endif
}
RTM_EXPORT(rt_memset);
```

Clears the newly allocated rt_mempool object, setting every byte to 0.

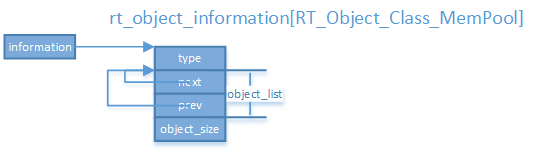
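The word-at-a-time path in rt_memset relies on copying the fill byte into every byte of a 32-bit word. The sketch below isolates that idea. The names splat_byte and fill_sketch are hypothetical. Unlike rt_memset, the sketch skips the 4x unrolled loop and stores each word through memcpy, which sidesteps strict-aliasing concerns on a hosted compiler. Like rt_memset, it takes the word path only when the start address is already 4-byte aligned, and it finishes any tail bytewise.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Replicate the low byte of c into all four bytes of a word,
 * as rt_memset does when LBLOCKSIZE == 4. */
static uint32_t splat_byte(int c)
{
    uint32_t d = (uint32_t)c & 0xffu;
    uint32_t buffer = (d << 8) | d;   /* 0x0000dddd */
    buffer |= buffer << 16;           /* 0xdddddddd */
    return buffer;
}

/* Word-at-a-time fill sketch: words while aligned and large enough,
 * then a bytewise tail. */
static void *fill_sketch(void *s, int c, size_t count)
{
    unsigned char *m = s;
    uint32_t buffer = splat_byte(c);

    if (count >= sizeof buffer && ((uintptr_t)m & (sizeof buffer - 1)) == 0) {
        while (count >= sizeof buffer) {
            memcpy(m, &buffer, sizeof buffer);   /* one 4-byte store */
            m += sizeof buffer;
            count -= sizeof buffer;
        }
    }
    while (count--)
        *m++ = (unsigned char)c;
    return s;
}
```

For example, splat_byte(0xAB) yields 0xABABABAB, and filling 15 bytes of a word-aligned buffer writes three whole words followed by three single bytes.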

4. Initialize the rt_object fields of the allocated space

```c
/* set object type */
object->type = type;

/* set object flag */
object->flag = 0;

/* copy name */
rt_strncpy(object->name, name, RT_NAME_MAX);
```

5,rt_list_insert_after

Inserts the newly allocated object into the object list of rt_object_information[RT_Object_Class_MemPool].

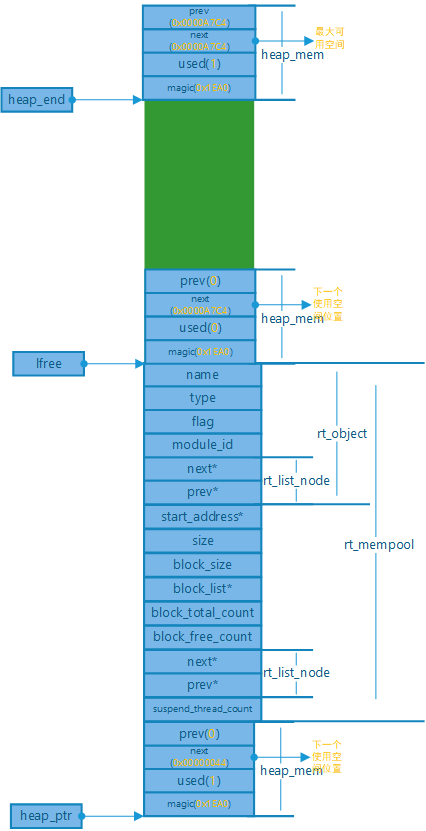
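rt_list_insert_after works on a circular doubly linked list whose head links back to itself when empty. The sketch below models that structure with a hypothetical struct list_node and hypothetical helper names. The pointer updates follow the usual insert-after pattern that rt_list_insert_after uses. Because each new object is inserted right after the head, the most recently allocated object is the first one reached from head->next.

```c
#include <stddef.h>

/* Minimal circular doubly linked list, modelled on rt_list_t. */
struct list_node {
    struct list_node *next;
    struct list_node *prev;
};

/* An empty list is a head that points to itself in both directions. */
static void list_init(struct list_node *l)
{
    l->next = l->prev = l;
}

/* Insert n immediately after l. */
static void list_insert_after(struct list_node *l, struct list_node *n)
{
    l->next->prev = n;   /* old successor now points back to n */
    n->next = l->next;
    l->next = n;
    n->prev = l;
}
```

After inserting a and then b after the same head, the forward order is head → b → a → head.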

3,mp

mp is the address of the object returned by rt_object_allocate.

4. Initialize data (part 1)

```c
/* initialize memory pool */
block_size     = RT_ALIGN(block_size, RT_ALIGN_SIZE);
mp->block_size = block_size;
mp->size       = (block_size + sizeof(rt_uint8_t *)) * block_count;
```

5,rt_malloc

```c
mp->start_address = rt_malloc((block_size + sizeof(rt_uint8_t *)) *
                              block_count);
```

block_size: 0x0000000C (12 bytes)

block_count: 0x00000020 (32 blocks)
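With these values the requested size can be worked out directly. This assumes a 32-bit target where sizeof(rt_uint8_t *) is 4 and RT_ALIGN_SIZE is 4, so RT_ALIGN(12, 4) leaves block_size unchanged. Each block carries a 4-byte link field in front of its 12-byte payload:

$$
\texttt{mp->size} = (\texttt{block\_size} + \texttt{sizeof(rt\_uint8\_t *)}) \times \texttt{block\_count} = (12 + 4) \times 32 = 512 \text{ bytes}
$$

The same 512 bytes is the size argument passed to rt_malloc for mp->start_address.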

6. Initialize data (part 2)

```c
mp->block_total_count = block_count;
mp->block_free_count  = mp->block_total_count;

/* initialize suspended thread list */
rt_list_init(&(mp->suspend_thread));
mp->suspend_thread_count = 0;

/* initialize free block list */
block_ptr = (rt_uint8_t *)mp->start_address;
for (offset = 0; offset < mp->block_total_count; offset ++)
{
    *(rt_uint8_t **)(block_ptr + offset * (block_size + sizeof(rt_uint8_t *)))
        = block_ptr + (offset + 1) * (block_size + sizeof(rt_uint8_t *));
}

*(rt_uint8_t **)(block_ptr + (offset - 1) * (block_size + sizeof(rt_uint8_t *)))
    = RT_NULL;

mp->block_list = block_ptr;
```

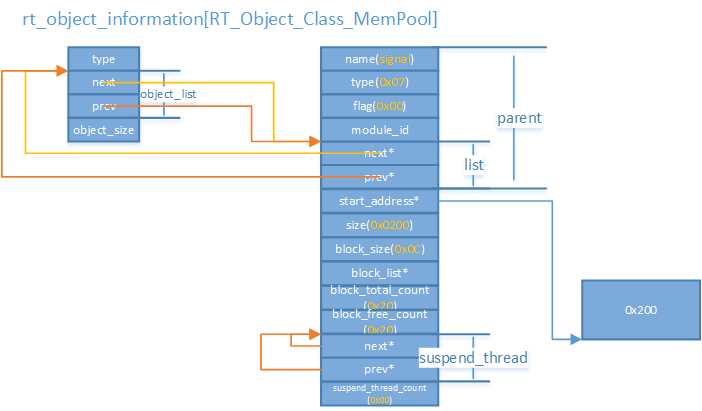
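The free-list construction above stores a "next block" pointer in the first bytes of every block, so no separate bookkeeping array is needed. The standalone sketch below rebuilds that list over a static buffer. The names build_free_list, count_free, and pool_take are hypothetical. pool_take is a simplified pop for illustration, not RT-Thread's rt_mp_alloc. Sizes are also illustrative: BLOCK_SIZE is 16 instead of 12 so that every link field stays pointer-aligned on 64-bit hosts as well.

```c
#include <stddef.h>
#include <stdint.h>

#define BLOCK_SIZE  16u  /* illustrative; a multiple of sizeof(void *) */
#define BLOCK_COUNT 4u
#define STRIDE (BLOCK_SIZE + sizeof(uint8_t *))   /* link field + payload */

/* Backing storage, declared as pointers so it is pointer-aligned. */
static uint8_t *storage[STRIDE * BLOCK_COUNT / sizeof(uint8_t *)];

/* Thread every block onto a singly linked free list, as rt_mp_create does.
 * Assumes count > 0. */
static uint8_t *build_free_list(uint8_t *start, size_t block_size, size_t count)
{
    size_t stride = block_size + sizeof(uint8_t *);
    size_t offset;

    for (offset = 0; offset < count; offset++)
        *(uint8_t **)(start + offset * stride) = start + (offset + 1) * stride;

    /* The last block's link is overwritten with NULL to end the list. */
    *(uint8_t **)(start + (offset - 1) * stride) = NULL;
    return start;   /* corresponds to mp->block_list */
}

/* Count free blocks by following the embedded links. */
static size_t count_free(uint8_t *head)
{
    size_t n = 0;
    for (; head != NULL; head = *(uint8_t **)head)
        n++;
    return n;
}

/* Hypothetical pop: unlink the first free block and return its payload. */
static void *pool_take(uint8_t **list)
{
    uint8_t *block = *list;
    if (block == NULL)
        return NULL;
    *list = *(uint8_t **)block;          /* unlink */
    return block + sizeof(uint8_t *);    /* payload follows the link field */
}
```

After build_free_list, the list head is the start of the buffer, each link points STRIDE bytes further on, and walking the list visits BLOCK_COUNT blocks. Taking one block advances the head to the second block.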

3. rt_mp_create execution result

When rt_mp_create finishes, the heap allocation is as follows:

The rt_object_information[RT_Object_Class_MemPool] object list is as follows: