Installation of ScrapySplash

ScrapySplash is a tool in Scrapy that supports JavaScript rendering. This section describes how it is installed.

The installation of ScrapySplash is divided into two parts. One is the installation of Splash service. The installation mode is through Docker. After installation, a Splash service will be started. We can load JavaScript pages through its interface. The other is the installation of the Python Library of ScrapySplash, after which Splash services can be used in ScrapySplash.

1. Related links

- GitHub: https://github.com/scrapy-plu...

- PyPi: https://pypi.python.org/pypi/...

- Instructions: https://github.com/scrapy-plu...

- Splash official documents: http://splash.readthedocs.io

2. Install Splash

ScrapySplash uses Splash's HTTP API for page rendering, so we need to install Splash to provide rendering services. The installation is through Docker installation. Make sure that Docker is installed correctly before that.

The installation commands are as follows:

docker run -p 8050:8050 scrapinghub/splash

Similar output will occur after installation:

2017-07-03 08:53:28+0000 [-] Log opened. 2017-07-03 08:53:28.447291 [-] Splash version: 3.0 2017-07-03 08:53:28.452698 [-] Qt 5.9.1, PyQt 5.9, WebKit 602.1, sip 4.19.3, Twisted 16.1.1, Lua 5.2 2017-07-03 08:53:28.453120 [-] Python 3.5.2 (default, Nov 17 2016, 17:05:23) [GCC 5.4.0 20160609] 2017-07-03 08:53:28.453676 [-] Open files limit: 1048576 2017-07-03 08:53:28.454258 [-] Can't bump open files limit 2017-07-03 08:53:28.571306 [-] Xvfb is started: ['Xvfb', ':1599197258', '-screen', '0', '1024x768x24', '-nolisten', 'tcp'] QStandardPaths: XDG_RUNTIME_DIR not set, defaulting to '/tmp/runtime-root' 2017-07-03 08:53:29.041973 [-] proxy profiles support is enabled, proxy profiles path: /etc/splash/proxy-profiles 2017-07-03 08:53:29.315445 [-] verbosity=1 2017-07-03 08:53:29.315629 [-] slots=50 2017-07-03 08:53:29.315712 [-] argument_cache_max_entries=500 2017-07-03 08:53:29.316564 [-] Web UI: enabled, Lua: enabled (sandbox: enabled) 2017-07-03 08:53:29.317614 [-] Site starting on 8050 2017-07-03 08:53:29.317801 [-] Starting factory <twisted.web.server.Site object at 0x7ffaa4a98cf8> Python Resource sharing qun 784758214 ,Installation packages are included. PDF,Learning videos, here is Python The gathering place of learners, zero foundation and advanced level are all welcomed.

This proves that Splash is already running on port 8050.

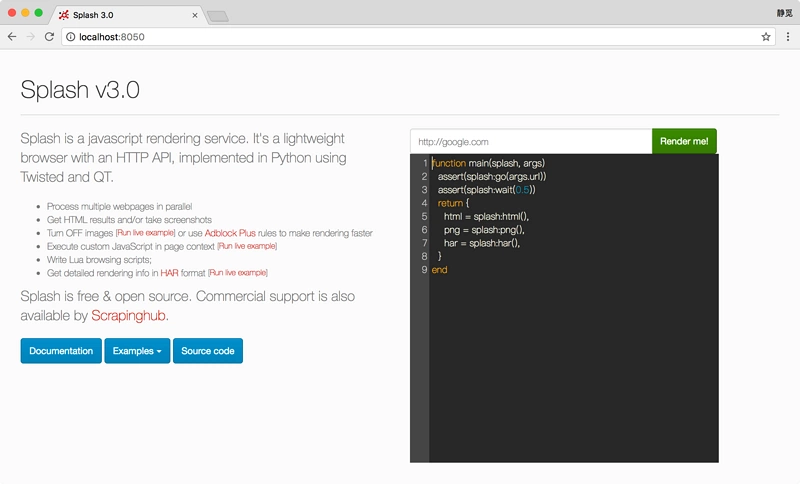

Then we open: http://localhost Splash's home page can be seen at 8050, as shown in Figure 1-81:

Figure 1-81 Running Page

Of course, Splash can also be installed directly on the remote server. We can run Splash in a daemon mode on the server. The commands are as follows:

docker run -d -p 8050:8050 scrapinghub/splash

Here is an additional - d parameter, which represents running the Docker container as a guardian, so that the Splash service will not be terminated after the remote server connection is interrupted.

3. Installation of ScrapySplash

After successfully installing Splash, let's install its Python library again. The installation commands are as follows:

pip3 install scrapy-splash

After the command is run, the library will be installed successfully. We will introduce its detailed usage later.

Installation of ScrapyRedis

Scrapy Redis is an extension module of Scrapy Distributed. With it, we can easily build Scrapy Distributed Crawler. This section describes how Scrapy Redis is installed.

1. Related links

- GitHub: https://github.com/rmax/scrap...

- PyPi: https://pypi.python.org/pypi/...

- Official documents: http://scrapy-redis.readthedo...

2. Pip Installation

Pip installation is recommended with the following commands:

pip3 install scrapy-redis

3. Test Installation

After the installation is complete, you can test it at the Python command line.

$ python3 >>> import scrapy_redis Python Resource sharing qun 784758214 ,Installation packages are included. PDF,Learning videos, here is Python The gathering place of learners, zero foundation and advanced level are all welcomed.

If no error is reported, the library is proven to be installed.