Rate limiting in Spring Boot

Basic rate-limiting algorithms

Token bucket and leaky bucket

The leaky bucket algorithm is usually implemented with a queue. An incoming request is put into the queue if the queue is not full. A processor then takes requests from the head of the queue at a fixed rate and handles them. When requests arrive faster than they are drained, the queue fills up and new requests are discarded.
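A minimal sketch of this queue-based design in plain Java follows. The class and method names are illustrative assumptions, not taken from any library. A bounded `ArrayBlockingQueue` plays the role of the bucket, and `offer` discards requests when it is full. A single scheduled thread takes one request from the head of the queue per period.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Hypothetical minimal leaky bucket: a bounded queue drained at a fixed rate.
final class LeakyBucket {
    private final BlockingQueue<Runnable> queue;

    LeakyBucket(int capacity) {
        this.queue = new ArrayBlockingQueue<>(capacity);
    }

    // Called for each incoming request; returns false (request discarded) when the bucket is full.
    boolean offer(Runnable request) {
        return queue.offer(request);
    }

    // Takes one request from the head of the queue and processes it; returns false if the queue was empty.
    boolean leakOne() {
        Runnable request = queue.poll();
        if (request == null) {
            return false;
        }
        request.run();
        return true;
    }

    // Starts the processor: one leakOne() call every periodMillis, i.e. a constant outflow rate.
    ScheduledExecutorService start(long periodMillis) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        scheduler.scheduleAtFixedRate(() -> {
            try {
                leakOne();
            } catch (RuntimeException e) {
                // An exception escaping here would cancel the periodic task, so it is swallowed;
                // a real service would log it.
            }
        }, periodMillis, periodMillis, TimeUnit.MILLISECONDS);
        return scheduler;
    }
}
```

For example, `new LeakyBucket(100).start(50)` would process at most 20 requests per second and buffer up to 100 waiting ones. The returned executor should be shut down when the application stops.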

The token bucket algorithm uses a bucket of fixed capacity that stores tokens. Tokens are added to the bucket at a fixed rate. Once the bucket holds its maximum number of tokens, newly added tokens are discarded. Every arriving request must obtain a token. If one is available, the request is processed immediately and the token is removed from the bucket. If not, the request is throttled: it is either discarded or made to wait in a buffer.
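The token bucket can be sketched in a few lines. This is a simplified single-node illustration, and the class name `TokenBucket` and its methods are hypothetical. It assumes the bucket starts full and that whole tokens are added at a fixed interval. The caller passes in the current time (for example `System.nanoTime()`) so that the refill logic is easy to follow and test. The `permits` argument of `tryAcquire` lets one request take several tokens at once.

```java
// Hypothetical minimal token bucket; the current time is passed in explicitly.
final class TokenBucket {
    private final long capacity;      // maximum number of stored tokens
    private final long nanosPerToken; // one token is added every nanosPerToken nanoseconds
    private long tokens;
    private long lastRefillNanos;

    TokenBucket(long capacity, long tokensPerSecond, long nowNanos) {
        if (capacity <= 0 || tokensPerSecond <= 0 || tokensPerSecond > 1_000_000_000L) {
            throw new IllegalArgumentException("invalid capacity or rate");
        }
        this.capacity = capacity;
        this.nanosPerToken = 1_000_000_000L / tokensPerSecond;
        this.tokens = capacity; // assumption: the bucket starts full
        this.lastRefillNanos = nowNanos;
    }

    // Takes `permits` tokens if enough are available; otherwise the request is throttled.
    synchronized boolean tryAcquire(long permits, long nowNanos) {
        refill(nowNanos);
        if (tokens < permits) {
            return false; // caller may drop the request or buffer it
        }
        tokens -= permits;
        return true;
    }

    // Adds one token per elapsed interval; tokens beyond capacity are discarded.
    private void refill(long nowNanos) {
        long newTokens = (nowNanos - lastRefillNanos) / nanosPerToken;
        if (newTokens > 0) {
            tokens = Math.min(capacity, tokens + newTokens);
            lastRefillNanos += newTokens * nanosPerToken; // keep the unused fraction of an interval
        }
    }
}
```

With `new TokenBucket(10, 5, System.nanoTime())`, up to 10 requests can pass in one burst, after which throughput settles at 5 per second.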

Comparison between token bucket and leaky bucket

- The token bucket adds tokens at a fixed rate, and whether a request is processed depends on whether enough tokens remain; once the token count reaches zero, new requests are rejected. The leaky bucket releases requests at a constant rate while the inflow rate is arbitrary; once the queued requests reach the bucket's capacity, new requests are rejected.

- The token bucket limits the average inflow rate and lets bursts through as long as tokens are available, and a single request may take several tokens at once (for example 3 or 4). The leaky bucket enforces a constant outflow rate: if the rate is 1, exactly 1 request leaves per interval, never 1 this time and 2 the next, which smooths out bursts in the inflow.

- The token bucket tolerates a degree of burstiness, whereas the main purpose of the leaky bucket is to smooth the outflow rate.
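The difference can be made concrete with a small discrete-time simulation. This is an illustrative sketch, and the one-tick-per-second model and the names `BucketComparison`, `tokenBucket` and `leakyBucket` are assumptions, not part of any library. Both buckets have capacity 3 and a rate of 1 per second, and the token bucket starts full. Five requests arrive at the same instant. Each result entry is the second at which a request is processed, or -1 if it is rejected.

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Deque;

// Hypothetical discrete-time comparison; time is in whole seconds and arrivals must be sorted.
final class BucketComparison {

    // Token bucket: starts full and gains one token per elapsed second, capped at capacity.
    static int[] tokenBucket(int[] arrivals, int capacity) {
        int[] processedAt = new int[arrivals.length];
        int tokens = capacity;
        int lastTime = 0;
        for (int i = 0; i < arrivals.length; i++) {
            int t = arrivals[i];
            tokens = Math.min(capacity, tokens + (t - lastTime)); // refill for the elapsed seconds
            lastTime = t;
            if (tokens > 0) {
                tokens--;            // take a token and process immediately
                processedAt[i] = t;
            } else {
                processedAt[i] = -1; // no token: rejected
            }
        }
        return processedAt;
    }

    // Leaky bucket: a queue of the given capacity; one request leaves at each tick t = 1, 2, 3, ...
    static int[] leakyBucket(int[] arrivals, int capacity) {
        int[] processedAt = new int[arrivals.length];
        Deque<Integer> queue = new ArrayDeque<>(); // indices of waiting requests
        int nextTick = 1;
        for (int i = 0; i < arrivals.length; i++) {
            int t = arrivals[i];
            while (nextTick <= t) { // ticks that happened before this arrival
                Integer head = queue.poll();
                if (head != null) {
                    processedAt[head] = nextTick;
                }
                nextTick++;
            }
            if (queue.size() < capacity) {
                queue.add(i);
            } else {
                processedAt[i] = -1; // bucket full: rejected
            }
        }
        while (!queue.isEmpty()) { // drain what is left at the constant rate
            processedAt[queue.poll()] = nextTick++;
        }
        return processedAt;
    }

    public static void main(String[] args) {
        int[] burst = {0, 0, 0, 0, 0}; // five requests arrive at t = 0
        System.out.println("token bucket: " + Arrays.toString(tokenBucket(burst, 3))); // [0, 0, 0, -1, -1]
        System.out.println("leaky bucket: " + Arrays.toString(leakyBucket(burst, 3))); // [1, 2, 3, -1, -1]
    }
}
```

The token bucket serves three requests at once (the burst). The leaky bucket releases the same three queued requests one per second. In both cases the two excess requests are rejected.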

Guava RateLimiter

1. Dependency

```xml
<dependency>
    <groupId>com.google.guava</groupId>
    <artifactId>guava</artifactId>
    <version>28.1-jre</version>
    <optional>true</optional>
</dependency>
```

2. Sample code

```java
import com.google.common.cache.CacheBuilder;
import com.google.common.cache.CacheLoader;
import com.google.common.cache.LoadingCache;
import com.google.common.util.concurrent.RateLimiter;
import java.util.concurrent.TimeUnit;
import javax.servlet.http.HttpServletRequest; // jakarta.servlet.* on Spring Boot 3
import javax.servlet.http.HttpServletResponse;
import lombok.extern.slf4j.Slf4j;
import org.springframework.stereotype.Component;
import org.springframework.web.servlet.HandlerInterceptor;

@Slf4j
@Component
public class RequestInterceptor implements HandlerInterceptor {

    // One token bucket per key; limiters unused for an hour are evicted from the cache
    private static final LoadingCache<String, RateLimiter> cachesRateLimiter = CacheBuilder.newBuilder()
            .maximumSize(1000) // maximum number of cached limiters
            /*
             * expireAfterWrite: an entry is removed a fixed time after it was created or last replaced;
             *   the next access loads it again.
             * expireAfterAccess: an entry is removed once it has not been read or written for the given time;
             *   the next access loads it again.
             * refreshAfterWrite: once the given time has passed since the last write, the next access
             *   triggers a reload. Unlike expiry, the entry is not removed, and the old value is still
             *   returned while the new one is being loaded.
             */
            .expireAfterAccess(1, TimeUnit.HOURS)
            .build(new CacheLoader<String, RateLimiter>() {
                @Override
                public RateLimiter load(String key) {
                    // Each new key gets its own limiter: 2 permits per second
                    return RateLimiter.create(2);
                }
            });

    @Override
    public boolean preHandle(HttpServletRequest request, HttpServletResponse response, Object handler)
            throws Exception {
        log.info("request path[{}] uri[{}]", request.getServletPath(), request.getRequestURI());
        try {
            // Key that selects the token bucket (the original demo used the fixed string "hello")
            String key = request.getRequestURI();
            RateLimiter rateLimiter = cachesRateLimiter.get(key);
            if (!rateLimiter.tryAcquire()) {
                log.warn("too many requests: {}", key);
                response.setStatus(429); // 429 Too Many Requests
                response.getWriter().write("too many requests.");
                return false;
            }
        } catch (Exception e) {
            // Exceptions thrown in an interceptor are not caught by the global exception handler,
            // so forward them to /error instead
            request.setAttribute("exception", e);
            request.getRequestDispatcher("/error").forward(request, response);
            return false; // the response has been handled; do not invoke the controller
        }
        return true;
    }
}
```
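The sample does not show the interceptor being registered. Spring MVC only invokes a `HandlerInterceptor` after it has been added through `WebMvcConfigurer.addInterceptors`. The following is a minimal sketch, assuming `RequestInterceptor` is picked up as a Spring bean. The class name `WebConfig` is hypothetical. Excluding `/error` is a suggested choice so that forwarded error requests are not themselves rate limited.

```java
import org.springframework.context.annotation.Configuration;
import org.springframework.web.servlet.config.annotation.InterceptorRegistry;
import org.springframework.web.servlet.config.annotation.WebMvcConfigurer;

// Hypothetical configuration class that registers the rate-limiting interceptor.
@Configuration
public class WebConfig implements WebMvcConfigurer {

    private final RequestInterceptor requestInterceptor;

    public WebConfig(RequestInterceptor requestInterceptor) {
        this.requestInterceptor = requestInterceptor;
    }

    @Override
    public void addInterceptors(InterceptorRegistry registry) {
        // Apply the limiter to every path except the error page
        registry.addInterceptor(requestInterceptor)
                .addPathPatterns("/**")
                .excludePathPatterns("/error");
    }
}
```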

3. Test

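```java
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping(value = "user")
public class UserController {

    @GetMapping
    public Result test2() { // Result is the project's own response wrapper class
        System.out.println("1111");
        return new Result(true, 200, "");
    }
}
```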

http://localhost:8080/user/

If your project has no `Result` class, return a plain `String` instead.

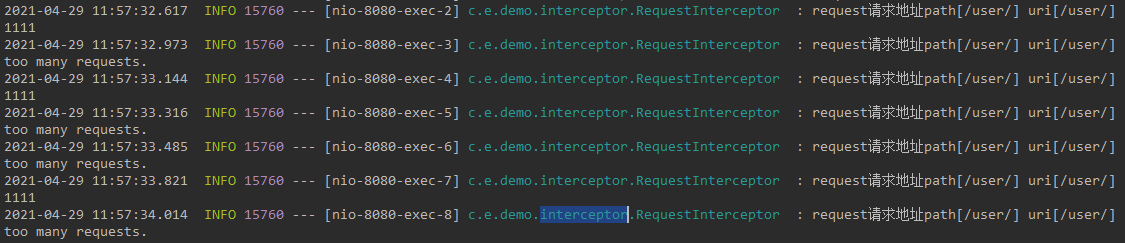

4. Test results

Other notes

Creating a RateLimiter

RateLimiter provides two factory methods:

Smooth bursty limiting: a steady rate, with unused permits saved for short bursts

```java
RateLimiter r = RateLimiter.create(5); // 5 permits per second; when idle, up to one second's worth (5 permits) can be saved for a burst
```

Smooth warm-up limiting: the rate ramps up gradually after a cold start

```java
RateLimiter r = RateLimiter.create(2, 3, TimeUnit.SECONDS); // 2 permits per second, reached gradually over a 3-second warm-up; permits are issued more slowly at first
```

Limitations

RateLimiter only limits traffic within a single JVM. To rate-limit across a cluster, use a shared store such as Redis, or a dedicated middleware such as Sentinel, which Alibaba open-sourced.
