Map interface: stored in the form of key value pairs. Key value pairs exist. Key values cannot be repeated, and value can be repeated.

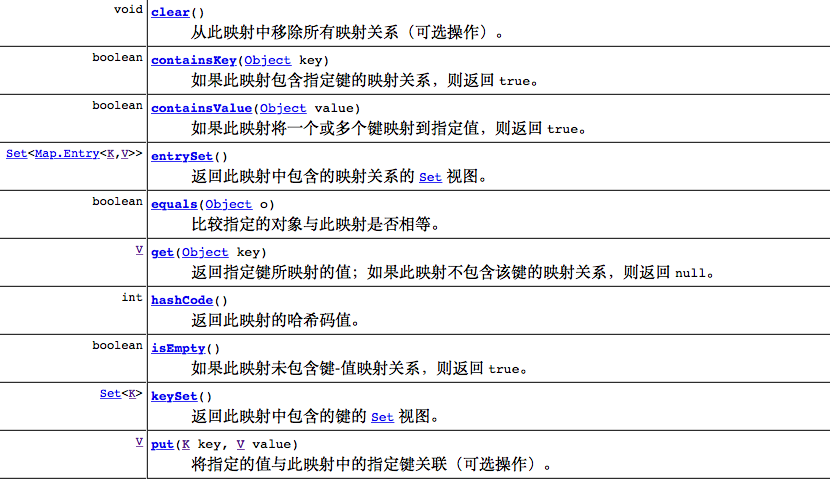

Methods under Map interface:

Interpretation of common methods in the collection:

// V put(K key, V value) adds a key value pair to the collection

hashMap.put("A","a");

System.out.println(hashMap.size());

//void clear() clears the collection elements

// hashMap.clear();

//boolean containsKey(Object key) determines whether the specified key exists in the collection. If the key exists, it returns true and if not, it returns false

boolean key = hashMap.containsKey("A");

// System.out.println(key);

//boolean containsValue(Object value) determines whether the specified value exists in the collection. Value returns true if it exists and false if it does not exist

hashMap.containsValue("zhangsna");

// System.out.println(hashMap.size());

//V get(Object key) get value through key

String s = hashMap.get("A");Traversal form of collection under Map interface:

//Traversing key value pairs through entrySet

Iterator <Map.Entry <String, String>> iterator = hashMap.entrySet().iterator();

while (iterator.hasNext()) {

Map.Entry <String, String> entry = iterator.next();

System.out.println("key:"+entry.getKey()+",value:"+entry.getValue()+ " ");

}

//Traverse keys through keySet

Iterator <String> iterator1 = hashMap.keySet().iterator();

while (iterator1.hasNext()) {

String key1 = iterator1.next();

System.out.print(key1+" ");

}

//Traverse the values through values()

Iterator <String> iterator2 = hashMap.values().iterator();

while (iterator2.hasNext()) {

String value = iterator2.next();

System.out.print(value+" ");

}Introduction to HashMap

characteristic:

1. The stored data is in the form of key value pairs. The key cannot be repeated and the value value can be repeated

2. Both key and value can be null

3. The order of internal elements cannot be guaranteed

4. The underlying data structure is a hash table

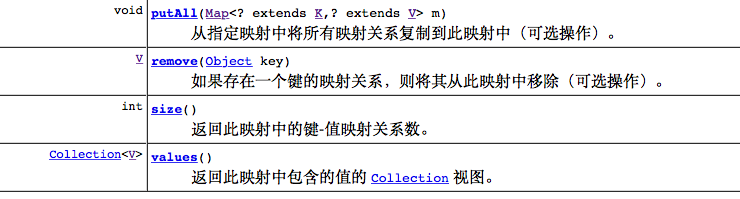

Hash table: a data structure in which a key is mapped to a specific value through a hash function

Hash conflict: hash function f (x), f (m) = f (n), M is not equal to n

Hash function: direct hash, modulo...

Hash conflict: chain address method, detection method (linear detection, random detection)...

Chain address method: as shown in the figure:

Research on HashMap source code

Inheritance relationship

public class HashMap<K,V>

extends AbstractMap<K,V>

implements Map<K,V>, Cloneable, Serializable HashMap inherits from AbstractMap. This abstract class implements common methods of Map interface to facilitate subclass reuse

Implement the class Map interface and have all the methods provided in the Map

Implement clonable and Serializable interfaces

Constructor

//The HashMap object is instantiated by the initial capacity and load factor

public HashMap(int initialCapacity, float loadFactor) {

//Check the validity of parameters

if (initialCapacity < 0)

throw new IllegalArgumentException("Illegal initial capacity: " +

initialCapacity);

if (initialCapacity > MAXIMUM_CAPACITY)

initialCapacity = MAXIMUM_CAPACITY;

if (loadFactor <= 0 || Float.isNaN(loadFactor))

throw new IllegalArgumentException("Illegal load factor: " +

loadFactor);

this.loadFactor = loadFactor;

threshold = initialCapacity;

init();

}

//The HashMap is instantiated by the initial capacity, and the load factor is the default value of 0.75

public HashMap(int initialCapacity) {

this(initialCapacity, DEFAULT_LOAD_FACTOR);

}

//Instantiate the default initial capacity 16 through parameterless construction, and the default loading factor is 0.75

public HashMap() {

this(DEFAULT_INITIAL_CAPACITY, DEFAULT_LOAD_FACTOR);

}

//Constructing HashMap instances from map collection instances

public HashMap(Map<? extends K, ? extends V> m) {

this(Math.max((int) (m.size() / DEFAULT_LOAD_FACTOR) + 1,DEFAULT_INITIAL_CAPACITY),

DEFAULT_LOAD_FACTOR);

//Initialize the table and threshold parameters and instantiate the hash table

inflateTable(threshold);

//Store the key value pairs in the map combination into the HashMap

putAllForCreate(m);

}Properties and default values

//Default initial capacity: 16

static final int DEFAULT_INITIAL_CAPACITY = 1 << 4; // aka 16

//Maximum capacity

static final int MAXIMUM_CAPACITY = 1 << 30;

//Default load factor: 0.75

static final float DEFAULT_LOAD_FACTOR = 0.75f;

//Default empty table

static final Entry<?,?>[] EMPTY_TABLE = {};

//The table property for storing data is an array of entry type

transient Entry<K,V>[] table = (Entry<K,V>[]) EMPTY_TABLE;

//Number of stored key value pairs

transient int size;

//Capacity expansion threshold: judge threshold =table.length()*loadFactor during capacity expansion

int threshold;

//Loading factor

final float loadFactor;

//version control

transient int modCount;

//key hash correlation

static final int ALTERNATIVE_HASHING_THRESHOLD_DEFAULT = Integer.MAX_VALUE;

//key hash correlation

transient int hashSeed = 0;Underlying data structure

//Data storage location

transient Entry<K,V>[] table = (Entry<K,V>[]) EMPTY_TABLE;

static class Entry<K,V> implements Map.Entry<K,V> {

final K key;

V value;

Entry<K,V> next;

int hash;

}It can be seen from the above that the data stored at the bottom of HashMap is in the form of data plus linked list, that is, hash table structure

Capacity expansion mechanism

Capacity expansion opportunity: when size > threshold, capacity expansion will be performed

common method

Insert element: put(key,value)

public V put(K key, V value) {

if (table == EMPTY_TABLE) {

//When the table array is empty, the initialization table data will be entered. When the put operation is called for the first time, the initialization table data will be entered

inflateTable(threshold);

}

//key is null. Store the data in slot 0

if (key == null)

return putForNullKey(value);

//Processing when key is not null

//Find the location of the corresponding storage card slot through the key

int hash = hash(key);

int i = indexFor(hash, table.length);

for (Entry<K,V> e = table[i]; e != null; e = e.next) {

//Find the data linked list of card slot i through position i, and traverse from the beginning to find out whether the key exists

//The judgment conditions are hash and key, and the equal value is updated

Object k;

if (e.hash == hash && ((k = e.key) == key || key.equals(k))) {

V oldValue = e.value;

e.value = value;

e.recordAccess(this);

return oldValue;

}

}

//The key does not exist in position i, so you need to add an entry node to store data

modCount++;

addEntry(hash, key, value, i);

return null;

}

private V putForNullKey(V value) {

//If the key is null, the hash position will be No. 0. You need to traverse the linked list of No. 0 card slot and judge whether the key is null

//Update the value in the entry and return it to the old value

//If it does not exist, create an entry entity to store the put data

for (Entry<K,V> e = table[0]; e != null; e = e.next) {

if (e.key == null) {

V oldValue = e.value;

e.value = value;

e.recordAccess(this);

return oldValue;

}

}

modCount++;

addEntry(0, null, value, 0);

return null;

}

//Find the corresponding card slot through the key hash

final int hash(Object k) {

int h = hashSeed;

if (0 != h && k instanceof String) {

return sun.misc.Hashing.stringHash32((String) k);

}

h ^= k.hashCode();

// This function ensures that hashCodes that differ only by

// constant multiples at each bit position have a bounded

// number of collisions (approximately 8 at default load factor).

h ^= (h >>> 20) ^ (h >>> 12);

return h ^ (h >>> 7) ^ (h >>> 4);

}

adopt key The hash value of was found at a location in the hash table

static int indexFor(int h, int length) {

// assert Integer.bitCount(length) == 1 : "length must be a non-zero power of 2";

The capacity is 16

0001 0000 16

0000 1111 15

1010 1001

-------------

0000 1001 9

return h & (length-1);

}

void addEntry(int hash, K key, V value, int bucketIndex) {

//When the stored data size is greater than the capacity expansion threshold, capacity expansion is performed

if ((size >= threshold) && (null != table[bucketIndex])) {

//2X expansion of HashMap

resize(2 * table.length);

hash = (null != key) ? hash(key) : 0;

//Get the position of the new card slot that the current key should be inserted into

bucketIndex = indexFor(hash, table.length);

}

createEntry(hash, key, value, bucketIndex);

}

//Capacity expansion

void resize(int newCapacity) {

Entry[] oldTable = table;

int oldCapacity = oldTable.length;

if (oldCapacity == MAXIMUM_CAPACITY) {

threshold = Integer.MAX_VALUE;

return;

}

//The newly created array is twice as large as the original one

Entry[] newTable = new Entry[newCapacity];

//Re hash each entry entity in the original map to the new map

transfer(newTable, initHashSeedAsNeeded(newCapacity));

table = newTable;

//threshold = table.length*loadFactor

threshold = (int)Math.min(newCapacity * loadFactor, MAXIMUM_CAPACITY + 1);

}

//Insert data using header interpolation

void createEntry(int hash, K key, V value, int bucketIndex) {

Entry<K,V> e = table[bucketIndex];

table[bucketIndex] = new Entry<>(hash, key, value, e);

size++;

}Operation steps:

1. First, judge whether the key is null and store it in slot 0 of the hash table for special processing

2. Whether the data with null key exists, traverse the linked list of the position of card slot 0. If it exists (= =), update value and return

3. If it does not exist, create a new node (addentry)

4. The key is not null. Use the key to calculate the hash value and find the card slot position (hash,indexFor) in the hash table

5. Obtain the linked list in the corresponding card slot, traverse to find out whether the key exists. If it exists (hash & & equals), update the value and return

6. key does not exist in the linked list. Create a new node (addEntry)

7. Consider whether to expand the capacity (size > threshold). If the capacity needs to be expanded, double the original size, and then re hash the data in the original hash table to the new hash table, and update the card slot position of the currently inserted node

8. Insert the new entry node into the given card slot position with head plug

Get element: get(key)

public V get(Object key) {

//If the key is null, go directly to the slot 0 to get the result

if (key == null)

return getForNullKey();

//key is not null

Entry<K,V> entry = getEntry(key);

return null == entry ? null : entry.getValue();

}

private V getForNullKey() {

//If the map is empty, null is returned

if (size == 0) {

return null;

}

//At the position of card slot 0, traverse the linked list to find out whether the key is null. If it exists, find value in the entry and return it. Otherwise, return null

for (Entry<K,V> e = table[0]; e != null; e = e.next) {

if (e.key == null)

return e.value;

}

return null;

}

adopt key Find corresponding entry example

final Entry<K,V> getEntry(Object key) {

if (size == 0) {

return null;

}

//Find the card slot position corresponding to the key through the hash of the key

int hash = (key == null) ? 0 : hash(key);

for (Entry<K,V> e = table[indexFor(hash, table.length)];e != null;e = e.next) {

Object k;

if (e.hash == hash &&((k = e.key) == key || (key != null && key.equals(k))))

return e;

}

return null;

}Query process:

1. If the key is null, traverse the linked list query at the 0 card slot. If the key exists, return the value of entry; otherwise, return null

2. If the key is not null, hash the key, find the corresponding card slot, traverse the linked list, judge whether the key exists (hash,key.equals), and return the value of entry; otherwise, return null

Delete element: remove(key)

Delete the entry entity through the key

public V remove(Object key) {

Entry<K,V> e = removeEntryForKey(key);

return (e == null ? null : e.value);

}

final Entry<K,V> removeEntryForKey(Object key) {

//If the collection is empty, null is returned directly

if (size == 0) {

return null;

}

//Find the corresponding card slot through the key hash (the key is null and the card slot is 0)

int hash = (key == null) ? 0 : hash(key);

int i = indexFor(hash, table.length);

Entry<K,V> prev = table[i];

Entry<K,V> e = prev;

//Deleting a node is to solve the problem of deleting a one-way linked list: solution: give two pointers, one before and one after, and the front pointer represents the node to be deleted,

, //Connect the node with the next of the previous node pointer through the latter pointer

while (e != null) {

Entry<K,V> next = e.next;

Object k;

if (e.hash == hash &&((k = e.key) == key || (key != null && key.equals(k)))) {

modCount++;

size--;

//If the head node is deleted, the latter node will be used as the head node of the current card slot

if (prev == e)

table[i] = next;

else

//The latter pointer is prex to connect the node with the next of the previous node pointer

prev.next = next;

e.recordRemoval(this);

return e;

}

prev = e;

e = next;

}

return e;

}Delete process:

1. Find the card slot position through the key hash (the key is null in slot 0)

2. Traverse and search the linked list corresponding to the card slot. Given two pointers, the former pointer finds the node to be deleted, and the latter pointer establishes the relationship with the next node

Summarize the characteristics of HashMap

1. Data structure: hash table (array + linked list)

2. Default array size: 16

3. Capacity expansion mechanism: the size is twice the length of the original array

4. Expansion factor: 0.75f

5. Expansion condition: 0.75 * array length

6. Thread safe: this collection is thread unsafe

7. The type of stored data is key value pair (key and value)

8. Both key and value can be null

9. The stored data is out of order

10. key cannot be repeated, value can be repeated

11. If the key is the same, the value will be overwritten

Application scenario

Do data statistics

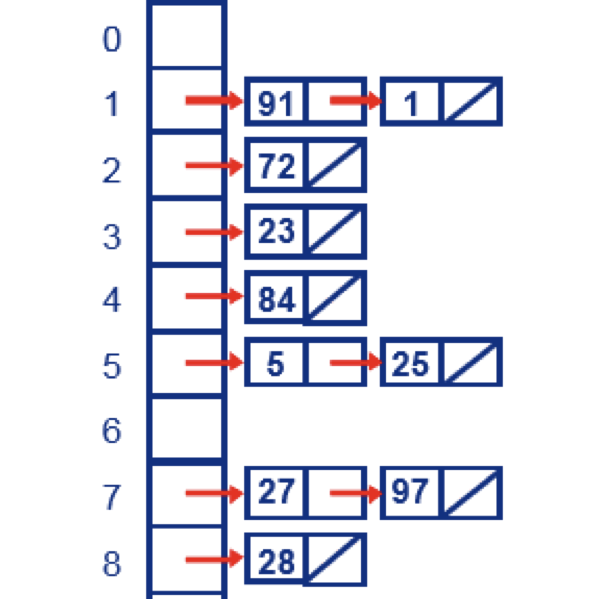

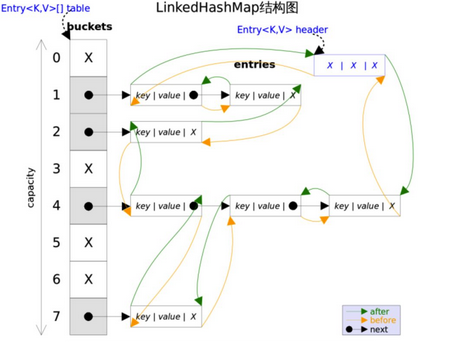

LinkedHashMap introduction

According to the collection framework diagram, LinkedHashMap belongs to the implementation subclass of HashMap

public class LinkedHashMap<K,V> extends HashMap<K,V> implements Map<K,V>

Basic features:

- 1. key cannot be repeated and value can be repeated

- 2. LinkedHashMap is insert ordered / access ordered accessOrder:true access ordered false: insert ordered

- 3. The underlying data structure is a hash table

- 4. Both key and value can be null

How is LinkedHashMap organized?

data structure

public class LinkedHashMap<K,V> extends HashMap<K,V> implements Map<K,V>

The inheritance relationship through LinkedHashMap is inherited from HashMap. If the properties and methods of HashMap are inherited, the table property and entry type will be inherited, and its internal structure is also a hash table structure.

There are also attributes inside

//Head node

private transient Entry<K,V> header;

//accessOrder controls whether to insert or access in order. The default is false, that is, insert in order. True: access in order

private final boolean accessOrder;

class Entry<K,V> extends HashMap.Entry<K,V> {

Entry<K,V> before, after;

}The entry class in LinkedHashMap inherits from HashMap.Entry, that is, it has attributes such as key\value\hash\next. In addition, two new attributes are before and after of entry type.

The implementation of LInkedHashMap is hash table + bidirectional linked list

Store data through hash table structure

Based on the hash table, the nodes of the two-way linked list are connected in the order of insertion or access

Insert data procedure: put

//Parent class HashMap implementation

public V put(K key, V value) {

if (table == EMPTY_TABLE) {

//If the table is empty, initialize the hash table according to the default 16 size

inflateTable(threshold);

}

if (key == null)

return putForNullKey(value);

int hash = hash(key);

int i = indexFor(hash, table.length);

for (Entry<K,V> e = table[i]; e != null; e = e.next) {

Object k;

if (e.hash == hash && ((k = e.key) == key || key.equals(k))) {

V oldValue = e.value;

e.value = value;

e.recordAccess(this);

return oldValue;

}

}

modCount++;

addEntry(hash, key, value, i);

return null;

}

//Implementation of linkedhashmap in entry

void recordAccess(HashMap<K,V> m) {

LinkedHashMap<K,V> lm = (LinkedHashMap<K,V>)m;

if (lm.accessOrder) {

lm.modCount++;

remove();

addBefore(lm.header);

}

}

//If the current access is ordered, delete the current node from the two-way linked list, and then insert it into the before processing of the head

//Implementation in linkedHashmap

void addEntry(int hash, K key, V value, int bucketIndex) {

super.addEntry(hash, key, value, bucketIndex);

// Remove eldest entry if instructed

Entry<K,V> eldest = header.after;

if (removeEldestEntry(eldest)) {

removeEntryForKey(eldest.key);

}

}

//addentry in HashMap

void addEntry(int hash, K key, V value, int bucketIndex) {

if ((size >= threshold) && (null != table[bucketIndex])) {

resize(2 * table.length);

hash = (null != key) ? hash(key) : 0;

bucketIndex = indexFor(hash, table.length);

}

createEntry(hash, key, value, bucketIndex);

}

//createEntry in linkedHashMap

void createEntry(int hash, K key, V value, int bucketIndex) {

HashMap.Entry<K,V> old = table[bucketIndex];

Entry<K,V> e = new Entry<>(hash, key, value, old);

table[bucketIndex] = e;

//Handle the two-way linked list and insert it into the before position of the header

e.addBefore(header);

size++;

}Differences between the implementation of LinkedHashMap insertion and that of HashMap

1. When inserting and the key exists, if the access is orderly, insert the node from the two-way linked list into the last position

2. When the key does not exist and the entry node is considered to be added, after the hash table is inserted, the two-way linked list needs to be maintained, that is, the tail of the two-way linked list needs to be inserted

Application scenario:

Statistics, according to the access order

HashTable

Inheritance relationship:

HashTable inheritance relationship:

public class Hashtable<K,V>

extends Dictionary<K,V>

implements Map<K,V>, Cloneable, java.io.SerializableInheritance relationship of HashMap:

public class HashMap<K,V>

extends AbstractMap<K,V>

implements Map<K,V>, Cloneable, SerializableDictionary implementation:

public abstract class Dictionary<K,V> {

public Dictionary() {

}

abstract public int size();

abstract public boolean isEmpty();

abstract public Enumeration<K> keys(); //Gets the collection of keys

abstract public Enumeration<V> elements(); //Gets a collection of values

abstract public V put(K key, V value);

abstract public V remove(Object key);

}AbstractMap class:

//Implement some of these methods

Similarities and differences between HashTable and HashMap

* * Similarities: * 1,The underlying data organization is a hash table( JDK 1.7) * 2,key-value Key value alignment, key It can't be repeated, value It can be repeated * 3,HashMap and Hashtable Are inserted unordered * * difference: * 1,HashTable Inherited from Dictionary Class, which is provided earlier map Parent class, now recommended AbstractMap class * 2,HashTable The default initial value for is 11 * 3,HashTable Is thread safe(By adding synchronized crux) * 4,HashTable in key and value Can't be null * 5,HashTable Middle pair key Hash procedure and HashMap It's different * 6,HashTable The capacity of is expanded according to the size of 2 times plus 1((oldCapacity << 1) + 1) */

TreeMap collection

Basic features:

treeMap features

1. Keys are sorted by size. By default, key s are sorted from small to large

2. key cannot be null, value can be null

3. key cannot be repeated and value can be repeated

How does TreeMap achieve key ordering?

The underlying data structure of TreeMap is red black tree

TreeMap inheritance relationship

public class TreeMap<K,V>

extends AbstractMap<K,V>

implements NavigableMap<K,V>, Cloneable, java.io.Serializabletreemap implements the NavigableMap interface, supports a series of navigation methods, and returns the key set with order

The NavigableMap interface is declared as follows:

public interface NavigableMap<K,V> extends SortedMap<K,V>

NavigableMap interface inherits from SortedMap interface. SortedMap interface has sorting function and Comparator class Comparator.

Comparator class description:

Comparison between Comparable and Comparator

Both comparators are interfaces.

Methods provided in the Comparable interface:

public interface Comparable<T> {

public int compareTo(T o);

}This interface provides a compareTo method,

The return values are - 1, 0 and 1 respectively

It is used as a comparator inside the class. Once the comparison attribute is determined, it cannot be changed

Comparator interface:

public interface Comparator<T> {

int compare(T o1, T o2);

}The compare method is provided in the interface,

The returned result of this method is greater than 0, equal to 0 and less than 0

Class, which is used by the user-defined implementation comparison process

Usage scenario: sort the data and select TreeMap

weakHashMap

There is no special data structure in weakHashMap, which is mainly to optimize the JVM. The JVM can more intelligently recycle useless objects during garbage collection

Weakhashmap is mainly applicable to caching scenarios. When a key object is recycled by the garbage collector, the value object of the response will be deleted from the map. Weakhashmap can save memory space and cache some unnecessary data

The difference between weakhashmap and other maps is that the key is a weak reference type, and the key in other maps is a strong reference type,

In Java, there are four types of references: strong, soft, weak, and phantom

reference type

Strong reference

Strong reference is a common reference type. Objects created in the form of new are strong reference objects

Object o = new Object();

Objects with strong references are not cleaned up by GC at any time

As long as the strong reference exists, GC will never recycle the referenced object. The reference associated with the object created through the keyword new is a strong reference. As long as the strong reference also points to an object, it means that the object still exists. This will not be touched by the garbage collector, that is, when the memory is insufficient, the JVM throws an OOM (OutOfMemoryError) Exceptions also do not recycle strongly referenced objects.

Soft Reference, if Reference and virtual Reference all exist in the java.lang.ref package, and the parent class is the Reference class

Reference is the constructor of the abstract parent class

//referent is the object that the reference points to

Reference(T referent) {

this(referent, null);

}

//ReferenceQueue can be understood as a Queue. Before GC, the referenced object is placed in the Queue to facilitate the cleaning of the referenced object itself

Reference(T referent, ReferenceQueue<? super T> queue) {

this.referent = referent;

this.queue = (queue == null) ? ReferenceQueue.NULL : queue;

}For example:

Object o = new Obeject(); ReferenceQueue queue = new ReferenceQueue(); WeakReference wk =new WeakReference(o,queue );

At this time, wk is a weak reference and points to the o object. o will be cleaned up by GC at a certain time, but the work of wk object depends on the Queue object. When wk appears in the Queue, it indicates that the object it points to is invalid and can be cleaned up safely.

Soft reference: SoftReference

When a GC operation occurs and the memory is sufficient, the object used by the soft reference will not be recycled. When the memory is insufficient, the object used by the soft reference will be recycled when the GC operation is triggered.

ReferenceQueue<A> queue = new ReferenceQueue<A>();

SoftReference<A> w = new SoftReference<A>(new A(), queue);

System.out.println(w.get());

System.out.println(w.isEnqueued());

System.out.println(queue.poll());

/**

* main.java.com.tulun.WeakHashMapGY02$A@1e3ac11b

* false

* null

*/

/**

* Key points:

* //The memory required here is limited and not enough. Therefore, GC is triggered to recycle soft reference objects

*

*/

byte[] array = new byte[7*1024*1024+500*1024];

System.out.println(array[0]);

System.gc();

try {

Thread.sleep(2000);

} catch (InterruptedException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

System.out.println(w.get());

System.out.println(w.isEnqueued());

System.out.println(queue.poll());Weak reference: WeakReference

When a GC operation occurs, even if the memory space is sufficient, the GC operation will reclaim the object acted by the weak reference.

ReferenceQueue<A> queue = new ReferenceQueue<A>();

WeakReference<A> w = new WeakReference<A>(new A(), queue);

System.out.println(w.get());

System.out.println(w.isEnqueued());

System.out.println(queue.poll());

// main.java.com.tulun.WeakHashMapGY02$A@20724356

// false

// null

System.gc();

System.out.println("---------------------");

System.out.println(w.get());

System.out.println(w.isEnqueued());

System.out.println(queue.poll());Virtual reference: phantom reference

Virtual reference is the weakest reference relationship. Whether an object has a virtual reference will not affect the object lifetime, nor can it be used to obtain an object instance through virtual reference,

A virtual reference exists to determine whether an object is recycled

ReferenceQueue<A> queue = new ReferenceQueue<A>();

PhantomReference<A> ptr = new PhantomReference<A>(new A(), queue);

System.gc(); //Start GC here and recycle the A object. The queue receives A notification that the object has been recycled

if(ptr.isEnqueued()){

System.out.println("ptr.isEnqueued()");

}else{

System.out.println("not ptr.isEnqueued()");

}