Sharing a round of Go crawlers

Let's review what we covered last time about sending email with Golang


- What email is

- What the mail protocols are

- How to send email using Golang

- How to send email with plain text, HTML content, attachments, etc

- How to send email with CC and BCC recipients

- How to improve the performance of sending mail

If you want to see how to send mail with Golang, please check the article How to send mail using Golang

You may remember that we previously shared a short article about crawling dynamic web data with Golang: Golang+chromedp+goquery simple crawling dynamic data Go theme month

If anyone is interested, we can study the chromedp framework in detail another time

Today, let's share how to use Go to crawl static data from web pages

What are static web pages and dynamic web pages

What is static web data?

- A static web page contains no program code, only HTML (HyperText Markup Language). Its file suffix is generally .html, .htm, .xml, etc

- Another feature of static web pages is that users can open them directly. No matter who opens the page, or when, the content stays the same: the HTML code is fixed, so the rendered result is fixed

By the way, what is a dynamic web page?

A dynamic web page is a page produced with web programming technology

In addition to HTML tags, the files of a dynamic web page also contain program code with specific functions

This code is mainly used for interaction between the browser and the server. The server can generate the page content dynamically according to each client request, which is very flexible

In other words, although the page code of a dynamic web page has not changed, the displayed content can change with time, with the environment, and with changes in the database
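To make the difference concrete, here is a minimal sketch in Go. The two handlers, the visit counter, and the helper render are all made up for illustration: staticHandler always returns the same fixed HTML, while dynamicHandler generates the HTML on each request.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"sync/atomic"
)

// staticPage is fixed HTML: every visitor receives exactly these bytes.
const staticPage = `<html><body><h1>Hello</h1></body></html>`

// visits counts the requests served by the dynamic page.
var visits int64

// staticHandler serves the same fixed content on every request.
func staticHandler(w http.ResponseWriter, r *http.Request) {
	fmt.Fprint(w, staticPage)
}

// dynamicHandler builds the content per request, so it changes over time.
func dynamicHandler(w http.ResponseWriter, r *http.Request) {
	n := atomic.AddInt64(&visits, 1)
	fmt.Fprintf(w, "<html><body><h1>Visit number %d</h1></body></html>", n)
}

// render calls a handler in-process and returns the response body.
func render(h http.HandlerFunc) string {
	rec := httptest.NewRecorder()
	h(rec, httptest.NewRequest(http.MethodGet, "/", nil))
	return rec.Body.String()
}

func main() {
	// Two requests to the static page return identical bodies.
	fmt.Println(render(staticHandler) == render(staticHandler))
	// Two requests to the dynamic page return different bodies.
	fmt.Println(render(dynamicHandler) == render(dynamicHandler))
}
```

Real dynamic pages usually depend on a database or on JavaScript running in the browser rather than on a simple counter, but the idea is the same: the response is computed when the request arrives.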

Using Go to crawl static web page data

Let's crawl data from a static web page. For example, we crawl the static data on the website below and extract the account and password information shown on the page

http://www.ucbug.com/jiaocheng/63149.html?_t=1582307696

Steps for crawling this website:

- Specify the website that needs to be crawled

- Get the data through HTTP GET

- Convert the byte array to a string

- Use regular expressions to match the content we expect (this is very important. In fact, when crawling static web pages, most of the time goes into processing and filtering the data)

- Filter the data, de-duplicate it, and so on (this step varies according to individual needs and the target website)

Let's write a demo that crawls the account and password information from the website above. The information we want on the website looks like this. This is for learning only and must not be used for anything harmful

package main

import (
	"io/ioutil"
	"log"
	"net/http"
	"regexp"
)

const (
	// Regular expression to match the Xunlei (XL) account and password
	reAccount = `(account number|Xunlei account)(;|: )[0-9:]+(| )password:[0-9a-zA-Z]+`
)

// GetAccountAndPwd crawls the page at url and logs every account/password match
func GetAccountAndPwd(url string) {
	// Get site data
	resp, err := http.Get(url)
	if err != nil {
		log.Fatal("http.Get error : ", err)
	}
	defer resp.Body.Close()

	// The data content is read as bytes
	dataBytes, err := ioutil.ReadAll(resp.Body)
	if err != nil {
		log.Fatal("ioutil.ReadAll error : ", err)
	}

	// Convert byte array to string
	str := string(dataBytes)

	// Filter XL account and password
	re := regexp.MustCompile(reAccount)

	// The second argument is the maximum number of matches; -1 means all matches
	results := re.FindAllStringSubmatch(str, -1)

	// Output results (result[0] is the full match)
	for _, result := range results {
		log.Println(result[0])
	}
}

func main() {
	// Simply set the log flags
	log.SetFlags(log.Lshortfile | log.LstdFlags)

	// Pass in the website address and start crawling data
	GetAccountAndPwd("http://www.ucbug.com/jiaocheng/63149.html?_t=1582307696")
}

The result of running the above code is as follows:

2021/06/xx xx:05:25 main.go:46: Account No.: 357451317 password: 110120 a
2021/06/xx xx:05:25 main.go:46: Account No.: 907812219 password: 810303
2021/06/xx xx:05:25 main.go:46: Account No.: 797169897 password: zxcvbnm132
2021/06/xx xx:05:25 main.go:46: Xunlei Account No.: 792253782:1 Password: 283999
2021/06/xx xx:05:25 main.go:46: Xunlei Account No.: 147643189:2 Password: 344867
2021/06/xx xx:05:25 main.go:46: Xunlei Account No.: 147643189:1 Password: 267297

As you can see, both the entries that start with "Account No.:" and the ones that start with "Xunlei Account No.:" have been crawled. In fact, crawling the content of static web pages is not difficult. Most of the time is spent on regular expression matching and data processing

Following the steps above, crawling a web page comes down to:

- Visit the website: http.Get(url)

- Read the data content: ioutil.ReadAll

- Convert the data to a string

- Set the regular expression rule: regexp.MustCompile(reAccount)

- Start filtering the data, optionally limiting the number of matches: re.FindAllStringSubmatch(str, -1)
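If you would like to try these steps without hitting a real website, here is a minimal sketch that runs them against a local test server. The page content, the pattern, and the helper names fetch and extract are all invented for this example, and it assumes Go 1.16 or later for io.ReadAll. Unlike the demo, it returns errors instead of calling log.Fatal and checks the HTTP status code.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"regexp"
)

// fetch performs an HTTP GET and returns the response body as a string.
func fetch(url string) (string, error) {
	resp, err := http.Get(url)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return "", fmt.Errorf("unexpected status: %s", resp.Status)
	}
	data, err := io.ReadAll(resp.Body)
	if err != nil {
		return "", err
	}
	return string(data), nil
}

// extract returns up to n matches of pattern in text (n = -1 means all).
func extract(text, pattern string, n int) []string {
	return regexp.MustCompile(pattern).FindAllString(text, n)
}

func main() {
	// Hypothetical page content, made up for this sketch.
	page := `<p>account: 1001 password: abc123</p><p>account: 1002 password: xyz789</p>`
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, page)
	}))
	defer srv.Close()

	body, err := fetch(srv.URL)
	if err != nil {
		fmt.Println("fetch error:", err)
		return
	}
	for _, m := range extract(body, `account: [0-9]+ password: [0-9a-zA-Z]+`, -1) {
		fmt.Println(m)
	}
}
```

FindAllString is used here because only the full match is needed; FindAllStringSubmatch, as in the demo, additionally returns the capture groups.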

Of course, real work is never this simple.

For example, the data on a website may not be uniformly formatted, may contain many miscellaneous special characters, and may follow no pattern. The data may even be dynamic, so it cannot be obtained with a simple HTTP GET

However, all of these problems can be solved. Different problems call for different approaches and different data processing. I believe that anyone who runs into them will be able to work them out. When facing a problem, we must have the determination to solve it

Crawl pictures

After the example above, let's try crawling image data from a web page, for example by searching for Shiba Inu (柴犬) pictures on Baidu image search

It's such a page

Let's copy the URL from the address bar and crawl its data

https://image.baidu.com/search/index?tn=baiduimage&ps=1&ct=201326592&lm=-1&cl=2&nc=1&ie=utf-8&word=%E6%9F%B4%E7%8A%AC

Since there are many pictures, we limit the matching to two pictures

Let's look at the demo

- Along the way, the logic that gets the URL's data and converts it into a string is extracted into a small function, getStr

- GetPic does the regular expression matching. You can set the number of matches. Here we set it to 2

package main

import (
	"io/ioutil"
	"log"
	"net/http"
	"regexp"
)

const (
	// Regular expression to match the Xunlei (XL) account and password
	reAccount = `(account number|Xunlei account)(;|: )[0-9:]+(| )password:[0-9a-zA-Z]+`

	// Regular expression to match picture links
	rePic = `https?://[^"]+?(\.((jpg)|(png)|(jpeg)|(gif)|(bmp)))`
)

// getStr gets the web page data and converts it into a string
func getStr(url string) string {
	resp, err := http.Get(url)
	if err != nil {
		log.Fatal("http.Get error : ", err)
	}
	defer resp.Body.Close()

	// The data content is read as bytes
	dataBytes, err := ioutil.ReadAll(resp.Body)
	if err != nil {
		log.Fatal("ioutil.ReadAll error : ", err)
	}

	// Convert byte array to string
	str := string(dataBytes)
	return str
}

// GetAccountAndPwd logs up to n account/password matches found on the page
func GetAccountAndPwd(url string, n int) {
	str := getStr(url)

	// Filter XL account and password
	re := regexp.MustCompile(reAccount)

	// n is the maximum number of matches; -1 means all matches
	results := re.FindAllStringSubmatch(str, n)

	// Output results
	for _, result := range results {
		log.Println(result[0])
	}
}

// GetPic logs up to n picture links found on the page
func GetPic(url string, n int) {
	str := getStr(url)

	// Filter pictures
	re := regexp.MustCompile(rePic)

	// n is the maximum number of matches; -1 means all matches
	results := re.FindAllStringSubmatch(str, n)

	// Output results
	for _, result := range results {
		log.Println(result[0])
	}
}

func main() {
	// Simply set the log flags
	log.SetFlags(log.Lshortfile | log.LstdFlags)

	//GetAccountAndPwd("http://www.ucbug.com/jiaocheng/63149.html?_t=1582307696", -1)
	GetPic("https://image.baidu.com/search/index?tn=baiduimage&ps=1&ct=201326592&lm=-1&cl=2&nc=1&ie=utf-8&word=%E6%9F%B4%E7%8A%AC", 2)
}

Run the above code and the results are as follows (no de-duplication is done):

2021/06/xx xx:06:39 main.go:63: https://ss1.bdstatic.com/70cFuXSh_Q1YnxGkpoWK1HF6hhy/it/u=4246005838,1103140037&fm=26&gp=0.jpg
2021/06/xx xx:06:39 main.go:63: https://ss1.bdstatic.com/70cFuXSh_Q1YnxGkpoWK1HF6hhy/it/u=4246005838,1103140037&fm=26&gp=0.jpg
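Both lines of the output are the same link, because no de-duplication was done. One possible way to remove duplicates is sketched below. The helper dedupe and the example.com links are made up for illustration and are not part of the demo.

```go
package main

import "fmt"

// dedupe returns items with duplicates removed, keeping first-seen order.
func dedupe(items []string) []string {
	seen := make(map[string]struct{}, len(items))
	out := make([]string, 0, len(items))
	for _, it := range items {
		if _, ok := seen[it]; ok {
			continue // already emitted this link
		}
		seen[it] = struct{}{}
		out = append(out, it)
	}
	return out
}

func main() {
	// Placeholder links, not real crawl results.
	links := []string{
		"https://example.com/a.jpg",
		"https://example.com/a.jpg",
		"https://example.com/b.png",
	}
	fmt.Println(dedupe(links))
}
```

Note that the match limit n is applied before any de-duplication, so a limit of 2 can return the same link twice. To get n distinct links, one option is to match with -1, de-duplicate, and then keep the first n.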

Sure enough, this is what we asked for. But what we crawled and printed are only picture links, which certainly does not meet the needs of a real crawler. We have to download the pictures we crawl so that we can actually use them

For the convenience of demonstration, we add a small file download function to the code above and download just the first picture

package main

import (

"fmt"

"io/ioutil"

"log"

"net/http"

"regexp"

"strings"

"time"

)

const (

// Regular expression to match the picture

rePic = `https?://[^"]+?(\.((jpg)|(png)|(jpeg)|(gif)|(bmp)))`

)

// Get web page data and convert the data into a string

func getStr(url string) string {

resp, err := http.Get(url)

if err != nil {

log.Fatal("http.Get error : ", err)

}

defer resp.Body.Close()

// The data content to be read is bytes

dataBytes, err := ioutil.ReadAll(resp.Body)

if err != nil {

log.Fatal("ioutil.ReadAll error : ", err)

}

// Convert byte array to string

str := string(dataBytes)

return str

}

// Get picture data

func GetPic(url string, n int) {

str := getStr(url)

// Filter pictures

re := regexp.MustCompile(rePic)

// How many times do you match? - 1 is all by default

results := re.FindAllStringSubmatch(str, n)

// Output results

for _, result := range results {

// Get the specific picture name

fileName := GetFilename(result[0])

// Download pictures

DownloadPic(result[0], fileName)

}

}

// Gets the name of the file

func GetFilename(url string) (filename string) {

// Found the index of the last =

lastIndex := strings.LastIndex(url, "=")

// Get the string after /, which is the source file name

filename = url[lastIndex+1:]

// Put the time stamp before the original name and spell a new name

prefix := fmt.Sprintf("%d",time.Now().Unix())

filename = prefix + "_" + filename

return filename

}

func DownloadPic(url string, filename string) {

resp, err := http.Get(url)

if err != nil {

log.Fatal("http.Get error : ", err)

}

defer resp.Body.Close()

bytes, err := ioutil.ReadAll(resp.Body)

if err != nil {

log.Fatal("ioutil.ReadAll error : ", err)

}

// File storage path

filename = "./" + filename

// Write files and set file permissions

err = ioutil.WriteFile(filename, bytes, 0666)

if err != nil {

log.Fatal("wirte failed !!", err)

} else {

log.Println("ioutil.WriteFile successfully , filename = ", filename)

}

}

func main() {

// Simply set l og parameters

log.SetFlags(log.Lshortfile | log.LstdFlags)

GetPic("https://image.baidu.com/search/index?tn=baiduimage&ps=1&ct=201326592&lm=-1&cl=2&nc=1&ie=utf-8&word=%E6%9F%B4%E7%8A%AC", 1)

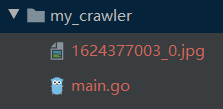

}In the above code, we added two functions to assist us in modifying the picture name and downloading the picture

- Get the name of the file and rename it with the timestamp, GetFilename

- Download the specific picture to the current directory, DownloadPic

Run the above code and you can see the following effects

2021/06/xx xx:50:04 main.go:91: ioutil.WriteFile successfully , filename = ./1624377003_0.jpg

In the current directory, a picture named 1624377003_0.jpg has been successfully downloaded

The following is the image of the specific picture
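DownloadPic reads the whole picture into memory before writing it to disk. For larger files, one alternative is to stream the response body straight into the file with io.Copy. The sketch below is not the article's implementation: saveTo and runDemo are hypothetical helpers, the "image" is a few fake bytes served by a local test server, and os.MkdirTemp assumes Go 1.16 or later.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"os"
	"path/filepath"
)

// saveTo streams the body of url into the file at path and
// returns the number of bytes written.
func saveTo(url, path string) (int64, error) {
	resp, err := http.Get(url)
	if err != nil {
		return 0, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return 0, fmt.Errorf("unexpected status: %s", resp.Status)
	}
	f, err := os.Create(path)
	if err != nil {
		return 0, err
	}
	// Copy in chunks instead of loading the whole body into memory.
	n, err := io.Copy(f, resp.Body)
	if cerr := f.Close(); err == nil {
		err = cerr
	}
	return n, err
}

// runDemo serves fake image bytes locally and saves them to a temp directory.
func runDemo() (int64, error) {
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, "fake image bytes")
	}))
	defer srv.Close()

	dir, err := os.MkdirTemp("", "pics")
	if err != nil {
		return 0, err
	}
	defer os.RemoveAll(dir)

	return saveTo(srv.URL, filepath.Join(dir, "0.jpg"))
}

func main() {
	n, err := runDemo()
	fmt.Println("bytes written:", n, "error:", err)
}
```

Returning the error instead of calling log.Fatal also means that one failed download does not end the whole program, which matters once several pictures are downloaded in a row.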

Some readers will say that downloading pictures in a single goroutine is too slow. Can we download faster by using several goroutines at once to crawl our little Shiba Inu?

Do you still remember the Go channel and sync package we covered earlier, in Sharing of GO channel and sync package? This is a good chance to practice them. This small feature is relatively simple, so let's just talk through the general idea. If you are interested, you can implement it yourself:

- Read the data of the website above and convert it into a string

- Use regular expression matching to find the corresponding series of picture links

- Put each picture link into a buffered channel, with the buffer set to 100 for the time being

- Start three goroutines that read from the channel concurrently and download the pictures locally. For how to build the file name, refer to the code above
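The idea above could be sketched roughly as follows. This is only one possible shape, not a finished crawler: downloadAll is a hypothetical helper, the links are placeholders, and the download step is passed in as a function so that the sketch runs without touching the network.

```go
package main

import (
	"fmt"
	"sync"
)

// downloadAll feeds links into a buffered channel and lets `workers`
// goroutines drain it, calling download for each link.
// It returns how many downloads succeeded.
func downloadAll(links []string, workers int, download func(link string) error) int {
	ch := make(chan string, 100) // buffer of 100, as suggested above
	var (
		wg sync.WaitGroup
		mu sync.Mutex
		ok int
	)

	// Start the workers first, so the producer never blocks forever
	// even when there are more links than the buffer can hold.
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for link := range ch {
				if err := download(link); err != nil {
					continue // skip failed downloads
				}
				mu.Lock()
				ok++
				mu.Unlock()
			}
		}()
	}

	for _, l := range links {
		ch <- l
	}
	close(ch) // lets each worker's range loop end
	wg.Wait()
	return ok
}

func main() {
	// Placeholder links, not real crawl results.
	links := []string{
		"https://example.com/1.jpg",
		"https://example.com/2.jpg",
		"https://example.com/3.jpg",
		"https://example.com/4.jpg",
	}
	// Stand-in for a real download function.
	fake := func(link string) error {
		fmt.Println("downloading", link)
		return nil
	}
	fmt.Println("succeeded:", downloadAll(links, 3, fake))
}
```

To plug in the real download, DownloadPic would need to return an error instead of calling log.Fatal, since log.Fatal exits the whole program and would stop the other goroutines as well.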

How about it, friends? If you are interested, give it a try. If you have ideas about crawling dynamic data, we can discuss them and make progress together

Summary

- A brief description of static and dynamic web pages

- Using Go to crawl simple data from static web pages

- Using Go to crawl pictures on web pages

- Crawling resources on web pages concurrently

If you found this useful, feel free to like, follow, and bookmark

Friends, your support and encouragement are what keep me sharing and improving the quality

Well, that's all for this time

Technology is open, and our mindset should be open too. Embrace change, face the sun, and keep moving forward.

I'm Nezha, the Little Devil boy. See you next time~