1, Introduction to recommendation system

The recommendation system is mainly based on the analysis and processing of the user's historical behavior data to find the content that the user may be interested in, so as to actively recommend the content that the user may be interested in to the user;

From the perspective of the long tail theory of goods, the recommendation system finds users' personalized needs by exploring users' behavior, so as to accurately recommend long tail goods to users who need it, and help users find goods they are interested in but difficult to find.

The recommendation system uses neighborhood based algorithms, one is user based collaborative filtering algorithm, the other is item based collaborative filtering algorithm;

2, Dataset preparation

We use the MovieLens dataset provided by GroupLens

These files contain 1,000,209 anonymous ratings of approximately 3,900 movies made by 6,040 MovieLens users who joined MovieLens in 2000.

Use code to load the scoring file ml-1m / ratings dat

def get_ratings():

ratings = pd.read_csv('ml-1m/ratings.dat',

sep='::',

names=['UserID', 'MovieID', 'Rating', 'Timestamp'],

nrows=100000

)

return ratings

We can view the data of the first five rows

rating = get_ratings() print(rating.head()) UserID MovieID Rating Timestamp 0 1 1193 5 978300760 1 1 661 3 978302109 2 1 914 3 978301968 3 1 3408 4 978300275 4 1 2355 5 978824291

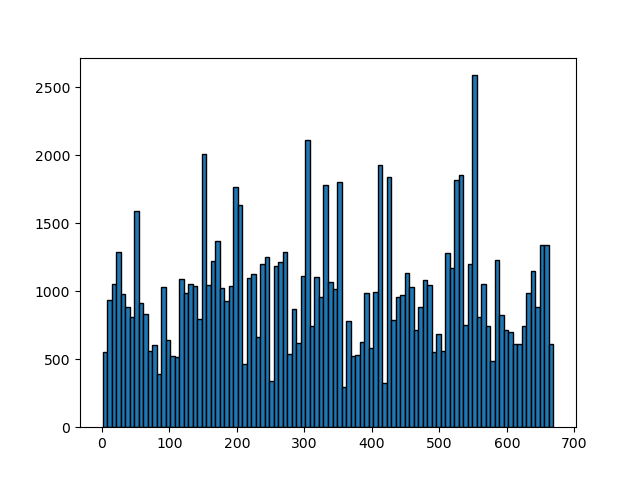

Find out the number of movies users are interested in

rating = get_ratings() plt.hist(rating['UserID'], bins=100, edgecolor='black') plt.show()

3, User based collaborative filtering algorithm

The user based collaborative filtering algorithm is the oldest algorithm in the recommendation system. The algorithm first needs to find users with similar interests to the current user, and then recommend the found items that the user is interested in but are not in the current user's interest list to the current user;

The user based collaborative filtering algorithm is mainly divided into two steps

- Find a set of users with similar interests to the current user;

The algorithm calculates the user interest similarity based on the user's positive feedback behavior on the history of items; We give users u and v, so that N(u) represents the collection of items that user u has had positive feedback, and N(v) represents the collection of items that user v has had positive feedback; We can calculate the similarity of users u and v through cosine similarity:

w_{uv} = \frac{N(u) \cap N(v)}{\sqrt {N(u) \cup N(v)}}

For example, user U has positive feedback records on a, b and c, and user V has positive feedback records on a and c;

U a b c V a c

We can calculate the interest similarity of U and V by using cosine similarity

w U V = ∣ { a , b , c } ∩ { a , c } ∣ ∣ { a , b , c } ∣ ∣ { a , c } ∣ = 2 6 w_{UV}=\frac{|\{a,b,c\} \cap \{a,c\}|}{\sqrt {|\{a,b,c\}| |\{a,c\}|}} = \frac{2}{\sqrt{6}} wUV=∣{a,b,c}∣∣{a,c}∣ ∣{a,b,c}∩{a,c}∣=6 2

We use the following user_similarity to calculate user similarity

def user_similarity(ratings):

matrix = []

rating_groups = ratings.groupby('UserID')

for u_id, u_ratings in rating_groups:

row = []

matrix.append(row)

u_movieIds = u_ratings['MovieID'].values

for v_id, v_ratings in rating_groups:

v_movieIds = v_ratings['MovieID'].values

u_v_movieIds = np.intersect1d(u_movieIds, v_movieIds)

similarity = len(u_v_movieIds)/math.sqrt(len(u_movieIds) * len(v_movieIds))

row.append(similarity)

result = pd.DataFrame(matrix, columns= rating_groups.groups.keys(), index=rating_groups.groups.keys())

return result

rating = get_ratings()

similarity_matrix = user_similarity(rating)

print(similarity_matrix.head(10))

1 2 3 ... 667 668 669

1 1.000000 0.084657 0.115406 ... 0.010504 0.068680 0.076194

2 0.084657 1.000000 0.147945 ... 0.087529 0.161416 0.048839

3 0.115406 0.147945 1.000000 ... 0.085666 0.070014 0.077674

4 0.119898 0.153704 0.152783 ... 0.083438 0.036370 0.000000

5 0.097618 0.125142 0.059708 ... 0.119562 0.142134 0.059131

6 0.163017 0.114939 0.099710 ... 0.063529 0.000000 0.032915

7 0.049341 0.284641 0.150899 ... 0.164817 0.179605 0.099627

8 0.116508 0.201633 0.083139 ... 0.090808 0.113092 0.023525

9 0.200125 0.162482 0.122407 ... 0.118842 0.178069 0.053877

10 0.240081 0.215441 0.216773 ... 0.126021 0.083229 0.096951

[10 rows x 669 columns]

In the above code, all users are directly traversed to calculate the similarity. In many cases, due to the large number of items or the few interests and concerns of each user, a large number of users do not have the so-called Union; We can first reverse the data structure to product users, then calculate the total number of products of interest to different users and the intersection of products of interest between related users, and finally calculate the cosine similarity;

import math

import numpy as np

import pandas as pd

def change_user_ratings(rating):

grouped = rating.groupby('MovieID')

result = {}

for movieId,m_rating in grouped:

result[movieId] = m_rating['UserID'].values

df = pd.DataFrame({

'MovieID': result.keys(),

'UserIDs': result.values()

})

return df.set_index(df.columns.values[0])

def cal_count(product_users):

user_counts = {}

rel_user_counts = {}

for movieId, row in product_users.iterrows():

userIds = row['UserIDs']

for uid in userIds:

if uid not in user_counts:

user_counts[uid] = 0

user_counts[uid] += 1

for vid in userIds:

if (uid, vid) not in rel_user_counts:

rel_user_counts[(uid, vid)] = 0

rel_user_counts[(uid, vid)] += 1

user_counts = pd.DataFrame({'UserID': user_counts.keys(), 'Movie_Count': user_counts.values()})

rel_user_counts = pd.DataFrame({'Rel_UserID':rel_user_counts.keys(), 'Movie_Count':rel_user_counts.values()})

return user_counts.set_index(user_counts.columns.values[0]), rel_user_counts.set_index(rel_user_counts.columns.values[0])

def cosin_similarity(user_counts, rel_user_counts):

result = []

for u, u_row in user_counts.iterrows():

row = []

result.append(row)

u_count = u_row['Movie_Count']

for v, v_row in user_counts.iterrows():

v_count = v_row['Movie_Count']

if rel_user_counts.index.isin([(u,v)]).any():

count = rel_user_counts.at[(u,v), 'Movie_Count']

row.append(count/math.sqrt(u_count * v_count))

else:

row.append(0)

return pd.DataFrame(result, index=user_counts.index.values, columns=user_counts.index.values)

def user_similarity(ratings):

rating_users = change_user_ratings(ratings)

user_counts, rel_user_counts = cal_count(rating_users)

s = cosin_similarity(user_counts, rel_user_counts)

return s

ratings = get_ratings()

similarity_matrix = user_similarity(ratings)

print(similarity_matrix.head(10))

1 2 3 ... 667 668 669

1 1.000000 0.084657 0.115406 ... 0.010504 0.068680 0.076194

2 0.084657 1.000000 0.147945 ... 0.087529 0.161416 0.048839

3 0.115406 0.147945 1.000000 ... 0.085666 0.070014 0.077674

4 0.119898 0.153704 0.152783 ... 0.083438 0.036370 0.000000

5 0.097618 0.125142 0.059708 ... 0.119562 0.142134 0.059131

6 0.163017 0.114939 0.099710 ... 0.063529 0.000000 0.032915

7 0.049341 0.284641 0.150899 ... 0.164817 0.179605 0.099627

8 0.116508 0.201633 0.083139 ... 0.090808 0.113092 0.023525

9 0.200125 0.162482 0.122407 ... 0.118842 0.178069 0.053877

10 0.240081 0.215441 0.216773 ... 0.126021 0.083229 0.096951

[10 rows x 669 columns]

- Find the items that the user is interested in and the current user has not heard of and recommend them to him;

Through the above calculation, we get the matrix of user similarity. Next, we need to find the K users with the most similar interests to the target users, and then recommend the items that only these K users like to the target users; Here, we need to further measure the interest of target users in specific products

In this formula, S(u,K) contains k users whose interests are closest to those of user u, N(i) is the set of users who have acted on item i, Wuv is the interest similarity between user u and user v, and rvi represents user v's interest in item i. because the implicit feedback data of single behavior is used, all rvi=1.

p

(

u

,

i

)

=

∑

v

∈

s

(

u

,

K

)

∩

N

(

i

)

w

u

v

r

v

i

p(u,i) = \sum_{v \in s(u,K) \cap N(i)} w_{uv} r_{vi}

p(u,i)=v∈s(u,K)∩N(i)∑wuvrvi

We use the following recommended method to implement this recommendation

def recommend(ratings,u, matrix, k):

result = pd.Series(dtype='float64');

grouped = dict(list(ratings.groupby('UserID')))

u_ratings = grouped[u][['MovieID','Rating']]

row = matrix.loc[u].sort_values(ascending=False)

for v in row.index:

if u != v:

similarity = row.loc[v]

v_ratings = grouped[v][['MovieID','Rating']]

diff = pd.concat([v_ratings, u_ratings, u_ratings]).drop_duplicates(subset=pd.Index(['MovieID']), keep=False)

for movieId, s_rating in diff.set_index('MovieID').iterrows():

like = similarity * (s_rating['Rating']/5)

s_movieId = str(movieId)

if movieId in result:

result[s_movieId] += like

else:

result[s_movieId] = like

return result.sort_values(ascending=False).head(k)

We calculate the first three items recommended to user A and they are most interested in; From the calculation results, it can be seen that goods c and e;

ratings = get_ratings() similarity_matrix = user_similarity(ratings) recommend_movies = recommend(ratings, 1, similarity_matrix, 10) print(recommend_movies.head(10)) 2049 0.240081 3292 0.212965 1067 0.204131 2559 0.193922 3620 0.168068 963 0.168068 2179 0.165928 2211 0.165928 1817 0.165928 2227 0.165928 dtype: float64

Note: due to rating According to the actual situation of data, each user and each movie will have a corresponding score, so the second algorithm does not have any performance advantages and needs to be selected according to the actual situation of their own data;