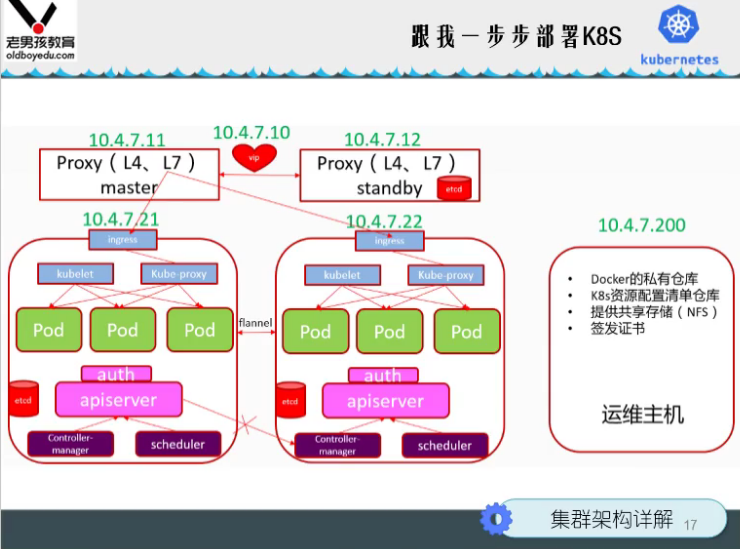

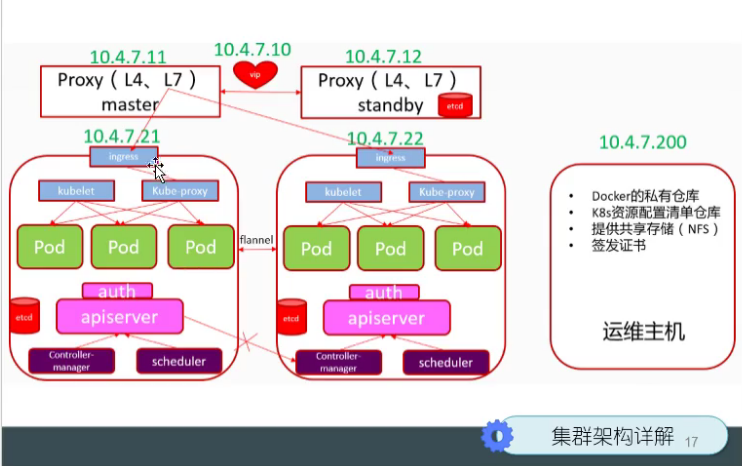

k8s environment preparation

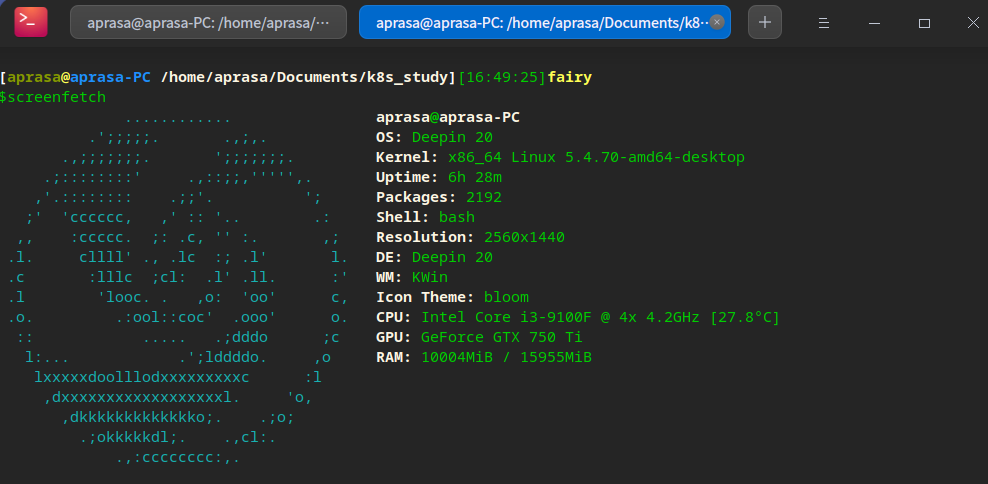

Prepare a linux computer

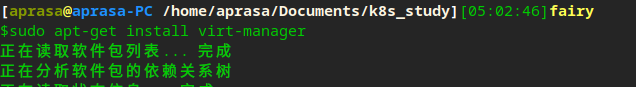

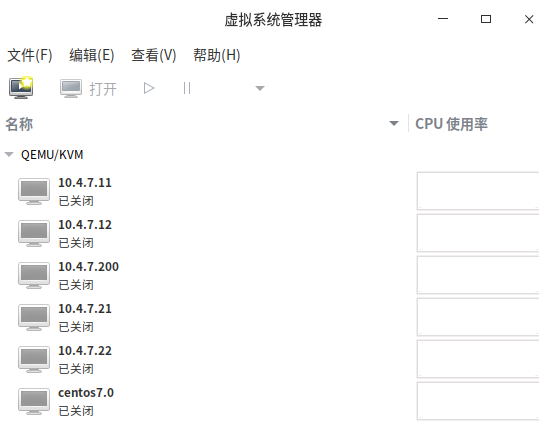

Install kvm and initialize k8s cluster nodes

sudo apt-get install virt-manager

Install os

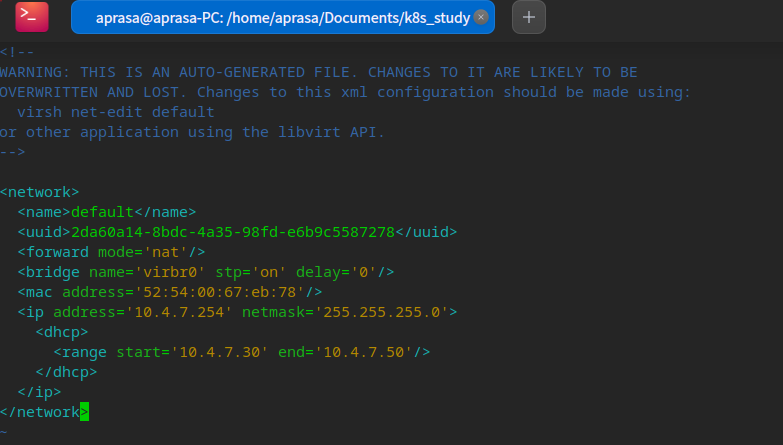

Configure kvm network segment

sudo vi /etc/libvirt/qemu/networks/default.xml

# After configuration, restart the network

sudo systemctl restart network-manager

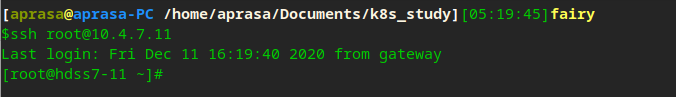

Set up passwordless ssh login

# Generate a local key

ssh-keygen -t rsa -C "cat@test.com"

# Enable passwordless login

ssh-copy-id root@10.4.7.11

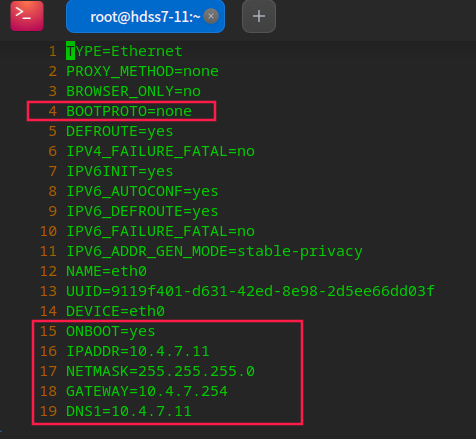

Configure basic information of each node

# Configure hostname

hostnamectl set-hostname hdss7-11.host.com

# Configure network information

vi /etc/sysconfig/network-scripts/ifcfg-eth0

systemctl restart network

Install the base package for the Kube node

# Configure epel source

yum install epel-release -y

# Install basic packages

yum install wget net-tools telnet tree nmap sysstat lrzsz dos2unix bind-utils -y

Disable selinux and the firewall

# Disable selinux enforcement

setenforce 0

# Stop the firewall

systemctl stop firewalld

# Disable the firewall at boot

systemctl disable firewalld
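`setenforce 0` only switches SELinux to permissive mode until the next reboot. The following is a minimal sketch of making the setting persistent, assuming the standard `/etc/selinux/config` location and GNU `sed`. The function name `persist_selinux_disabled` is hypothetical and not part of the original steps.

```shell
#!/bin/sh
# Sketch: rewrite the SELINUX= line so the setting survives a reboot.
# The file path is a parameter so the function can be tried on a copy first.
persist_selinux_disabled() {
    config_file="$1"
    # Replace whatever mode is configured (enforcing/permissive) with disabled.
    # The SELINUXTYPE= line is left alone because it does not match "^SELINUX=".
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$config_file"
}

# Usage on a node (takes effect after the next reboot):
#   persist_selinux_disabled /etc/selinux/config
```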

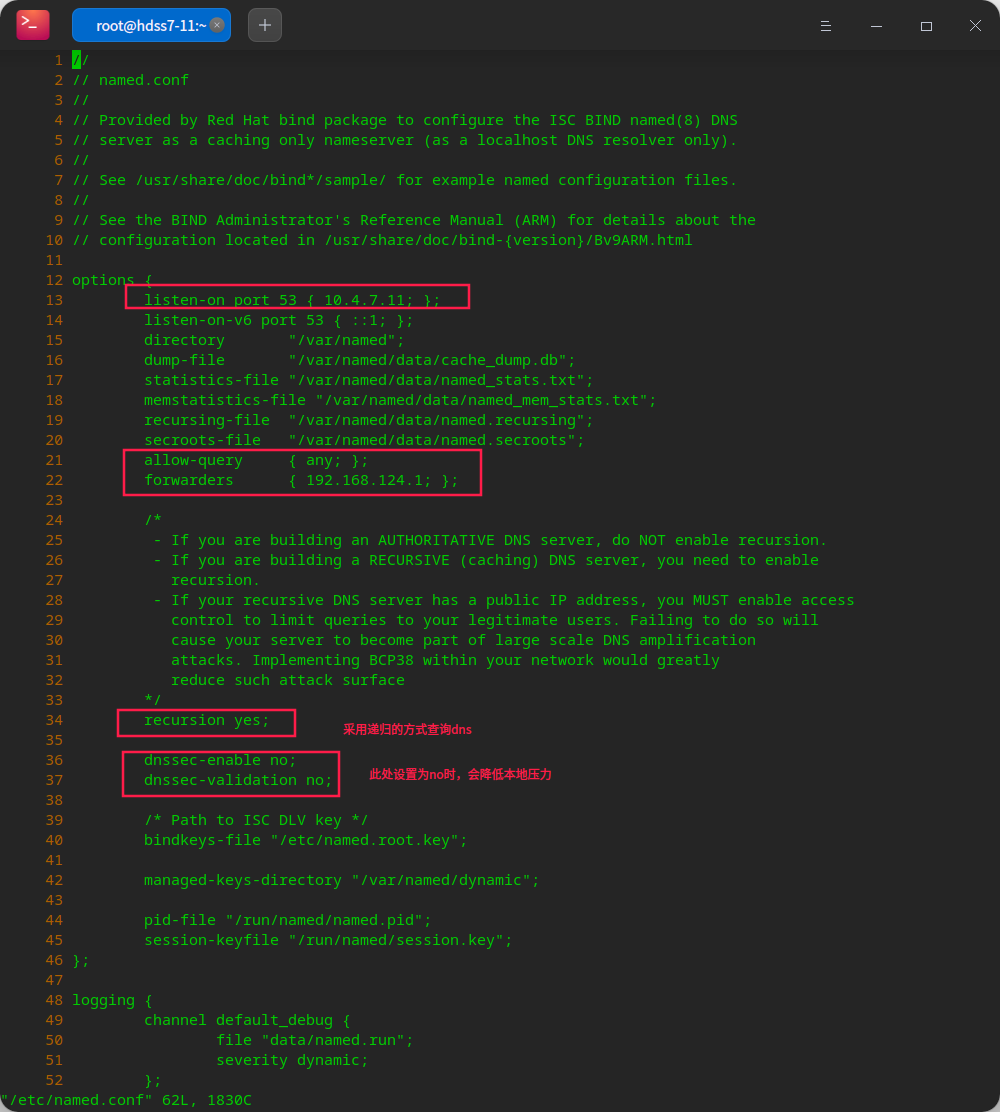

Configure dns server

Install bind

yum install bind -y

Configure dns file

vi /etc/named.conf

# Check whether the configuration reports an error

named-checkconf

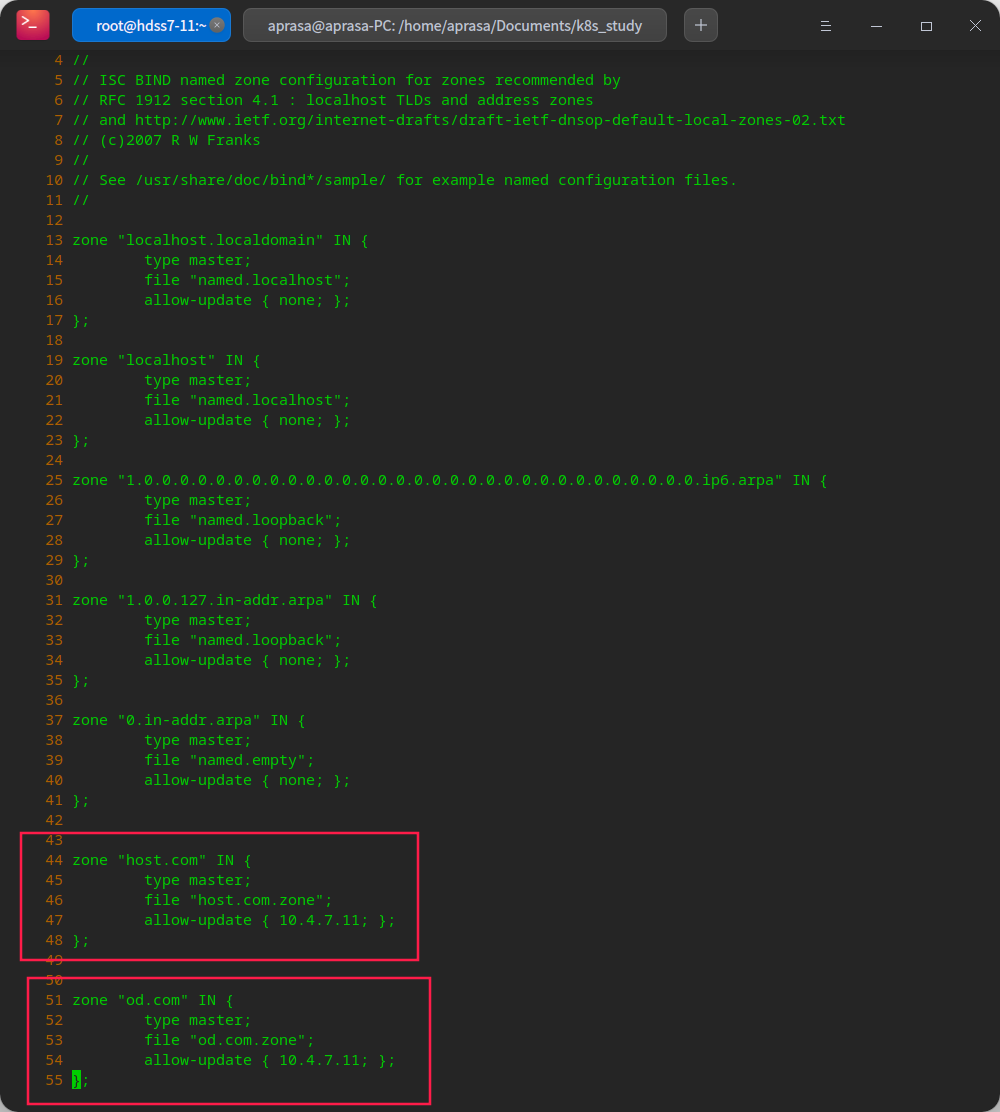

Configure dns domain

vi /etc/named.rfc1912.zones

# Add your own zone at the end

zone "host.com" IN {

type master;

file "host.com.zone";

allow-update { 10.4.7.11; };

};

zone "od.com" IN {

type master;

file "od.com.zone";

allow-update { 10.4.7.11; };

};

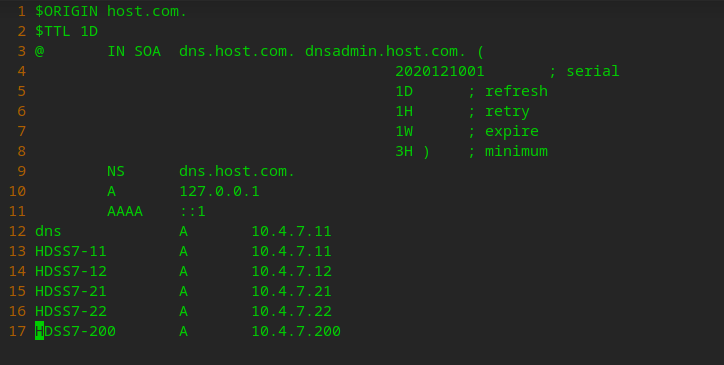

Configure dns database

vi /var/named/host.com.zone

$ORIGIN host.com.

$TTL 1D

@ IN SOA dns.host.com. dnsadmin.host.com. (

2020121001 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

NS dns.host.com.

A 127.0.0.1

AAAA ::1

dns A 10.4.7.11

HDSS7-11 A 10.4.7.11

HDSS7-12 A 10.4.7.12

HDSS7-21 A 10.4.7.21

HDSS7-22 A 10.4.7.22

HDSS7-200 A 10.4.7.200

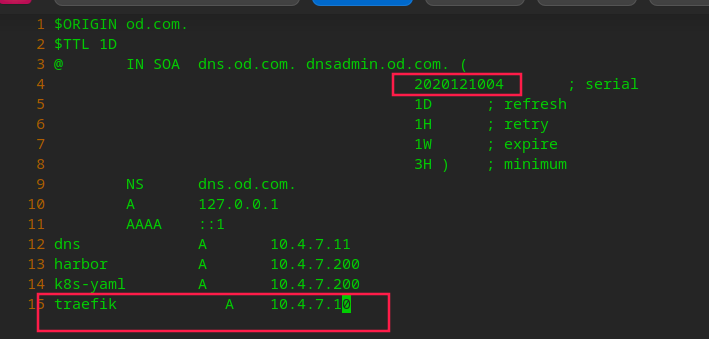

vi /var/named/od.com.zone

$ORIGIN od.com.

$TTL 1D

@ IN SOA dns.od.com. dnsadmin.od.com. (

2020121001 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

NS dns.od.com.

A 127.0.0.1

AAAA ::1

dns A 10.4.7.11

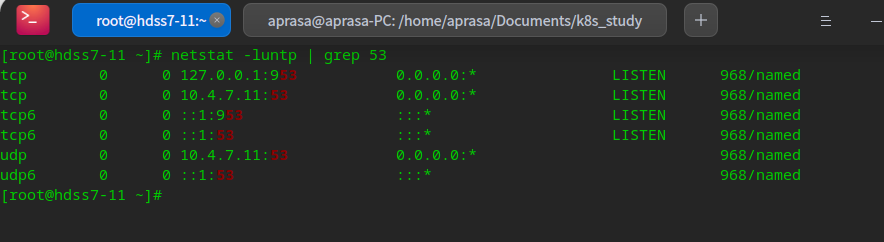

Check the configuration file and start dns

named-checkconf

systemctl start named

Test whether dns works

# Check whether port 53 is listening

netstat -luntp | grep 53

# Query an A record against the 10.4.7.11 server, with short output

dig -t A hdss7-200.host.com @10.4.7.11 +short

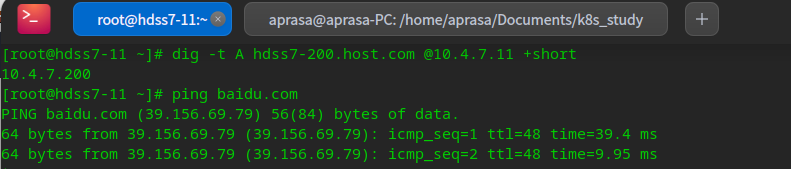

Configure certificate authority

Install cfssl

# Download the binaries

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/bin/cfssl-json

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/bin/cfssl-certinfo

# Add executable permissions

chmod +x /usr/bin/cfssl*

Create certificate directory

cd /opt/

mkdir certs

cd certs/

Generate ca certificate

# Create the CA CSR JSON file

vi ca-csr.json

{

"CN": "OldboyEdu",

"hosts":[

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "od",

"OU": "ops"

}

],

"ca": {

"expiry": "438000h"

}

}

# Generate the certificate

cfssl gencert -initca ca-csr.json | cfssl-json -bare ca
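The `expiry` value of `438000h` in the CA request is easier to reason about in years. A small sketch of the arithmetic, assuming 365-day years; the helper name `hours_to_years` is made up for illustration.

```shell
#!/bin/sh
# Convert a cfssl expiry given in hours into whole years (365-day years).
hours_to_years() {
    hours="${1%h}"                  # strip a trailing "h": 438000h -> 438000
    echo $(( hours / 24 / 365 ))    # hours -> days -> years
}

hours_to_years 438000h              # prints 50
```

So the CA and every certificate signed with the `438000h` profiles are valid for about 50 years, which suits a lab cluster; a shorter lifetime is usually preferable elsewhere.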

Prepare docker environment

Prepare the environment on three machines: hdss7-200, hdss7-21 and hdss7-22

# On hdss7-200,hdss7-21,hdss7-22

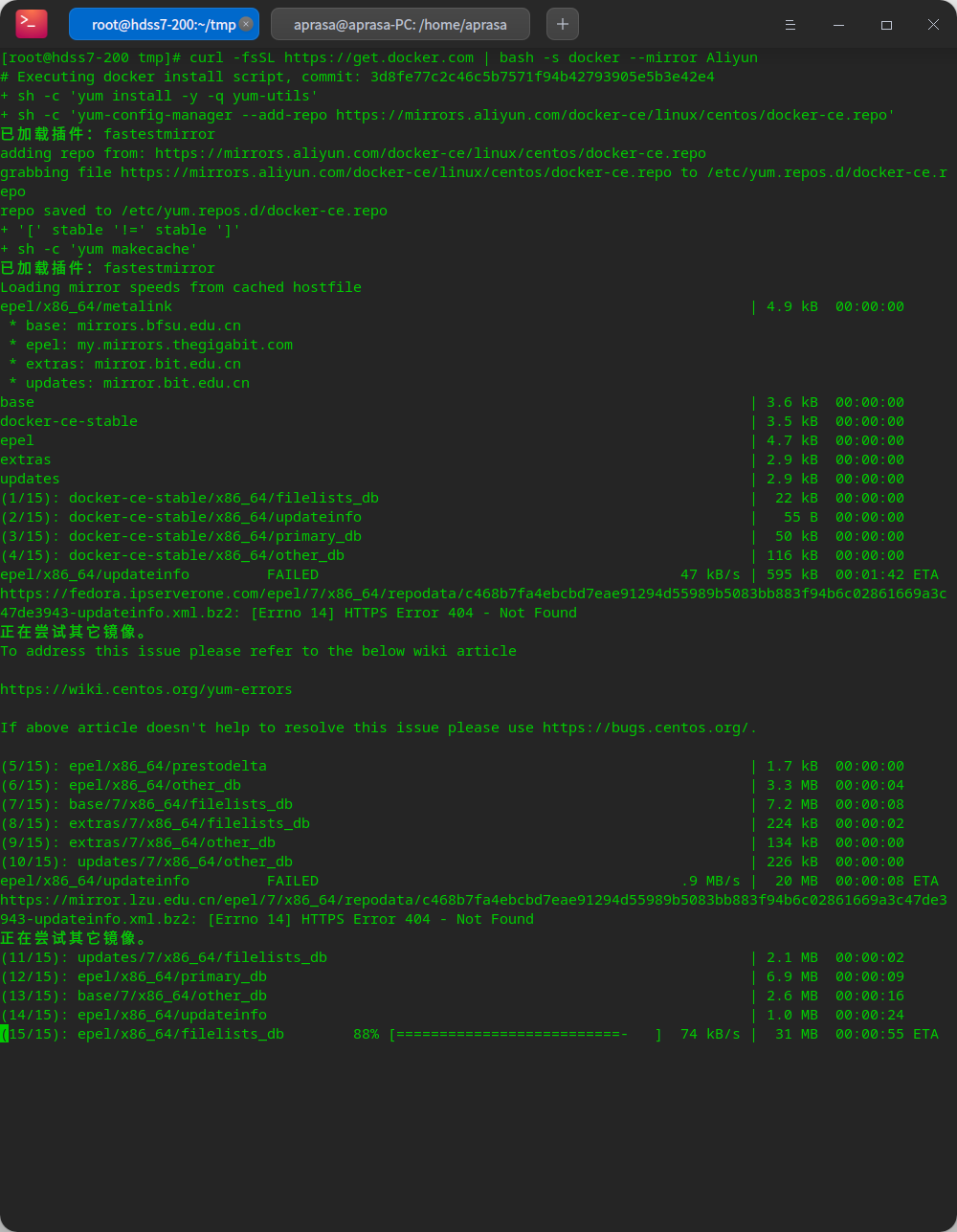

Install docker CE using an online script

curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun

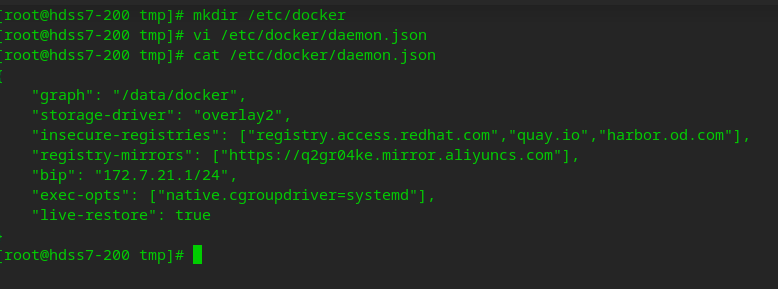

Configure docker

# Create the configuration file

mkdir /etc/docker

vi /etc/docker/daemon.json

{

"graph": "/data/docker",

"storage-driver": "overlay2",

"insecure-registries": ["registry.access.redhat.com","quay.io","harbor.od.com"],

"registry-mirrors": ["https://q2gr04ke.mirror.aliyuncs.com"],

"bip": "172.7.21.1/24",

"exec-opts": ["native.cgroupdriver=systemd"],

"live-restore": true

}

# Create the data directory

mkdir -p /data/docker
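The `bip` value above, `172.7.21.1/24`, appears to be tailored to hdss7-21, while the same file is created on three machines. The sketch below assumes a convention that is inferred here rather than stated in the text: the third octet of `bip` mirrors the last octet of the host's IP, so each host gets its own /24 inside 172.7.0.0/16. The function name `bip_for_host` is hypothetical.

```shell
#!/bin/sh
# Derive a per-host docker bridge address from the host IP.
# Assumption: 10.4.7.N maps to 172.7.N.1/24.
bip_for_host() {
    host_ip="$1"
    last_octet="${host_ip##*.}"     # 10.4.7.21 -> 21
    echo "172.7.${last_octet}.1/24"
}

for ip in 10.4.7.21 10.4.7.22 10.4.7.200; do
    echo "$ip -> $(bip_for_host "$ip")"
done
```

If that convention holds, hdss7-22 would use `172.7.22.1/24` and hdss7-200 would use `172.7.200.1/24` in their own daemon.json.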

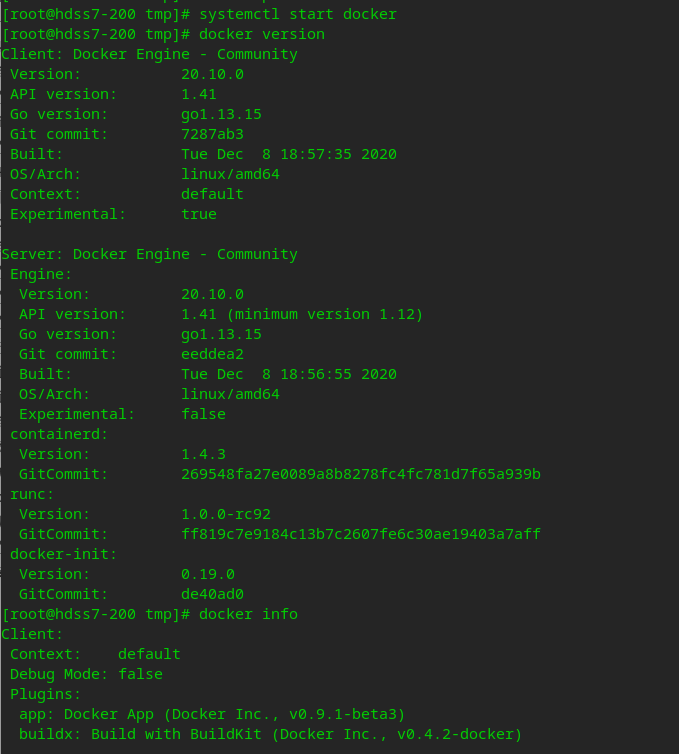

Start docker

systemctl start docker

# View docker information

docker version

docker info

Prepare the private docker registry harbor

# Official address: https://github.com/goharbor/harbor

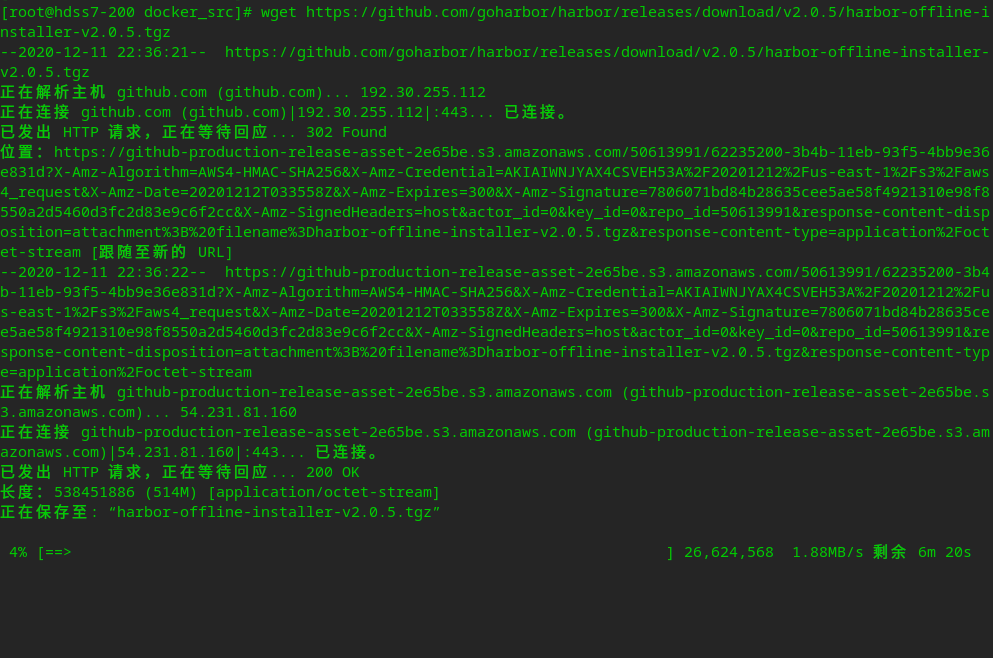

Download the harbor installer

cd /opt/

mkdir docker_src

cd docker_src/

wget https://github.com/goharbor/harbor/releases/download/v2.0.5/harbor-offline-installer-v2.0.5.tgz

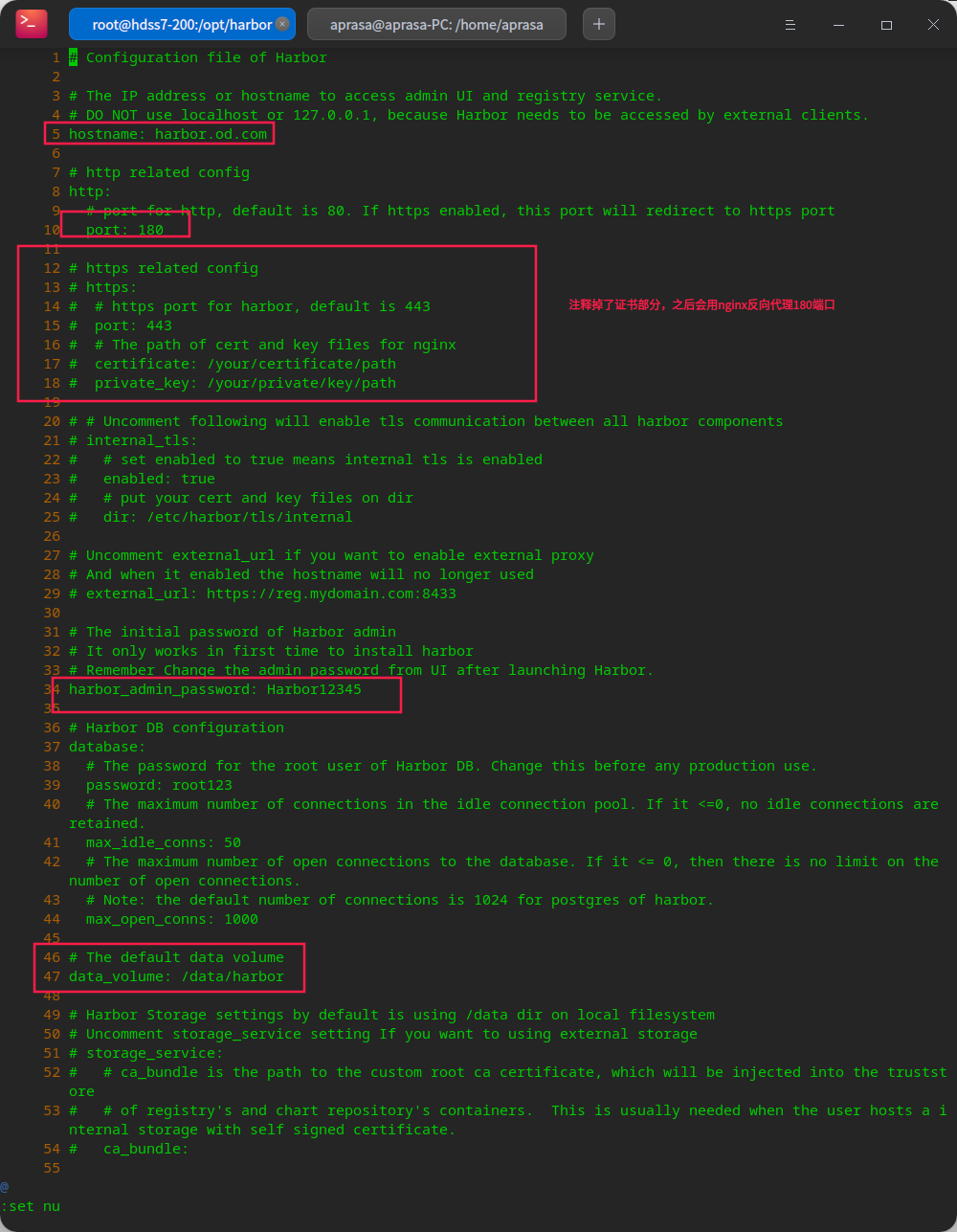

Configure harbor

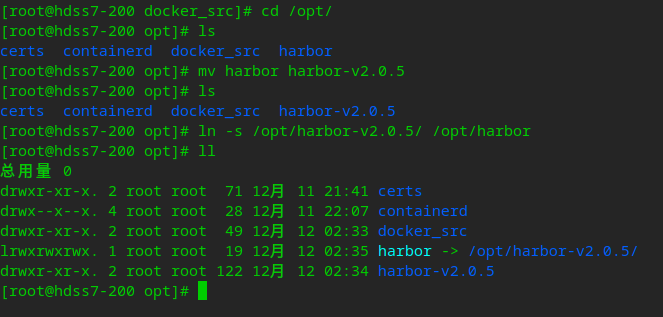

# Extract to the opt directory

tar xf harbor-offline-installer-v2.0.5.tgz -C /opt/

# Mark the version number

cd /opt/

mv harbor harbor-v2.0.5

# Create a symlink to simplify future upgrades

ln -s /opt/harbor-v2.0.5/ /opt/harbor

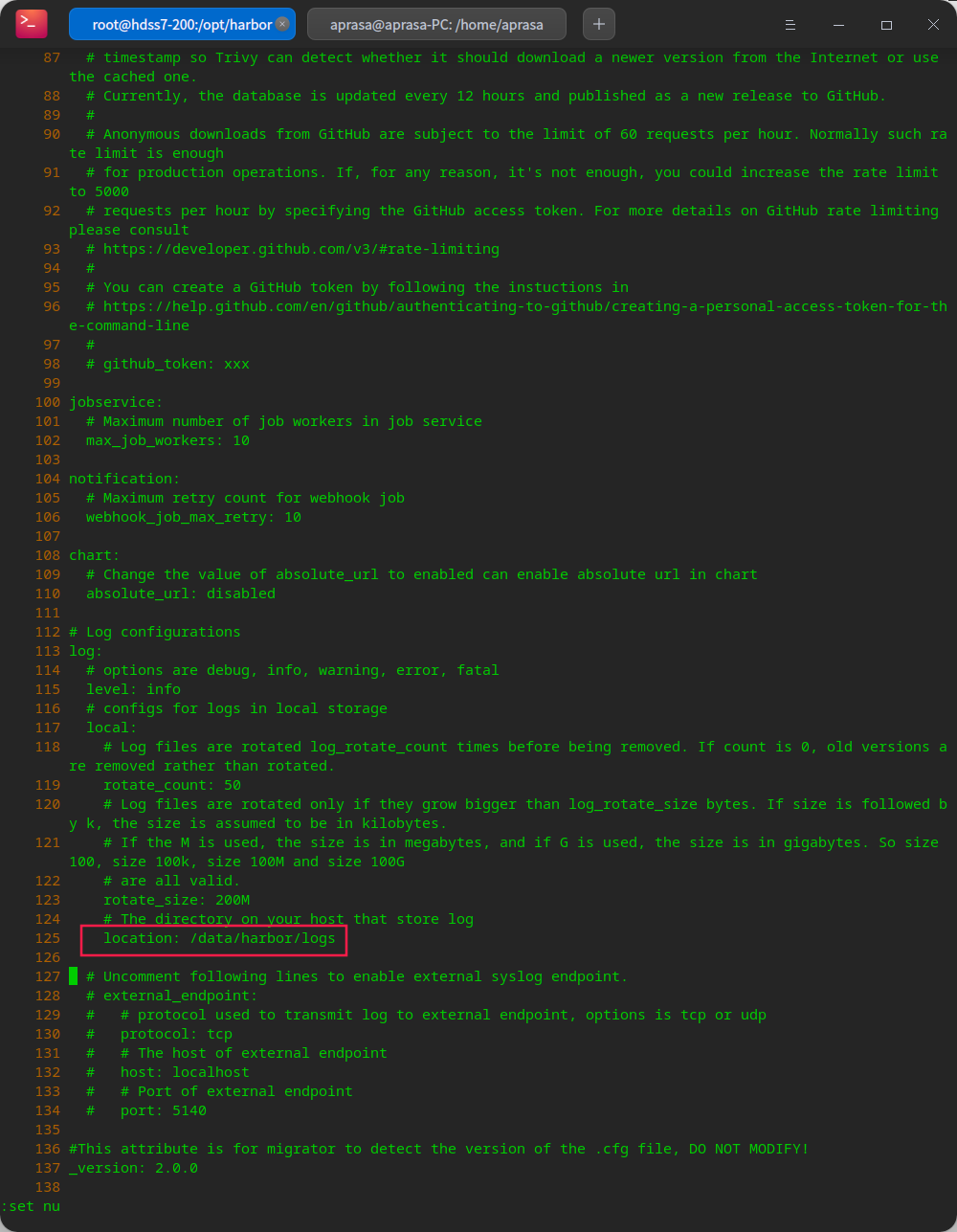

# Create the configuration file

cp harbor.yml.tmpl harbor.yml

# Change the configuration

vi harbor.yml

# Create the data path

mkdir -p /data/harbor

# Create the log path

mkdir -p /data/harbor/logs

Run harbor

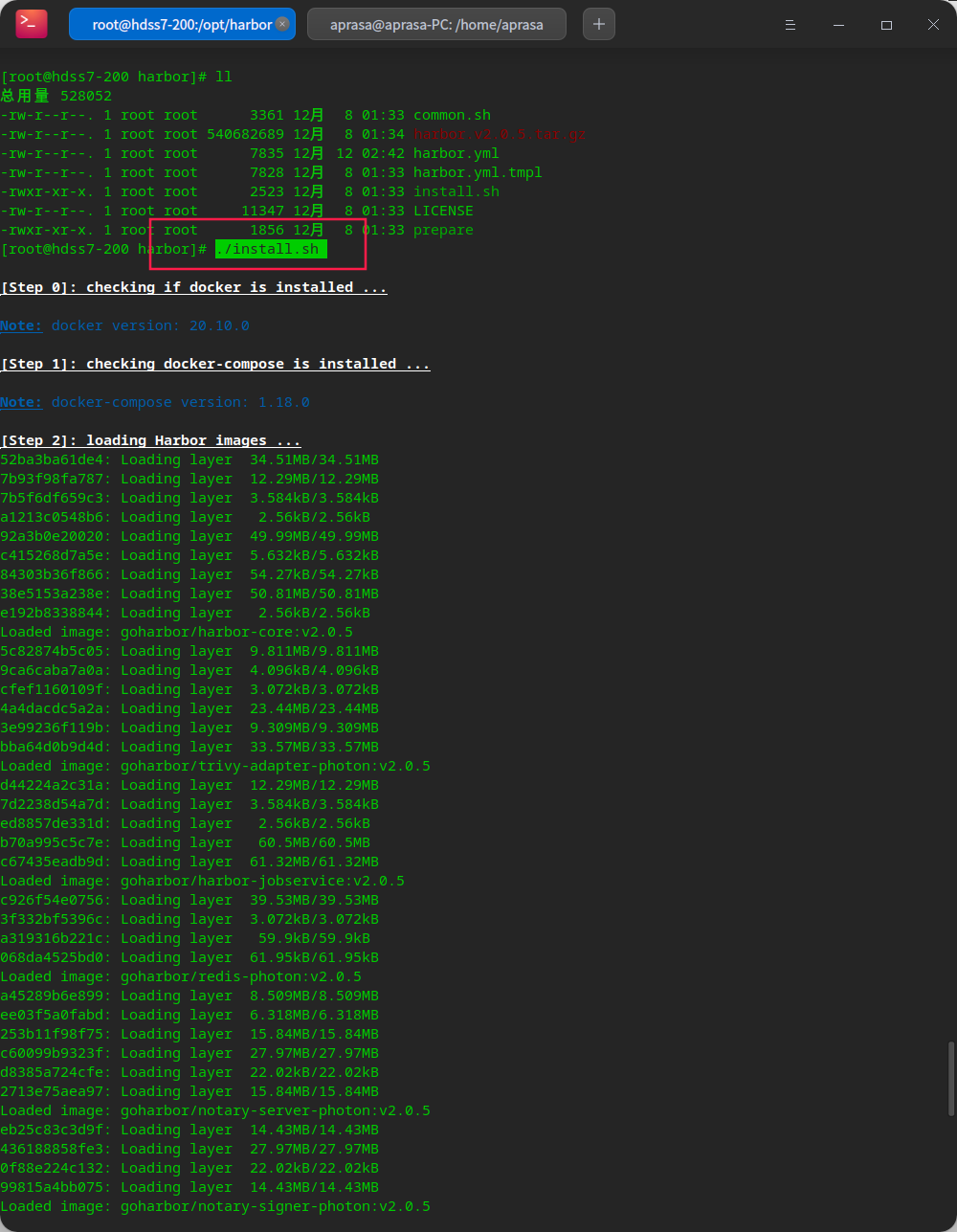

# Since harbor runs in docker, it relies on docker-compose for single-host orchestration

yum install docker-compose -y

# Run the installation script

./install.sh

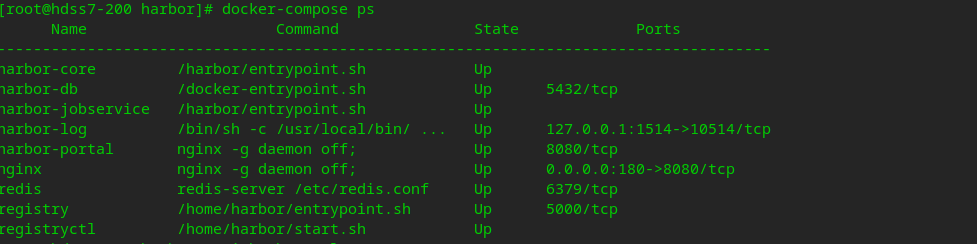

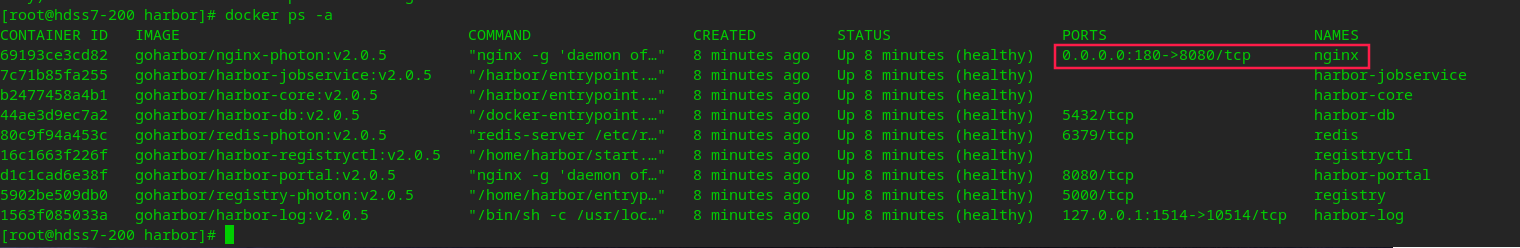

# Use docker-compose to view the containers started by the single-host orchestration

docker-compose ps

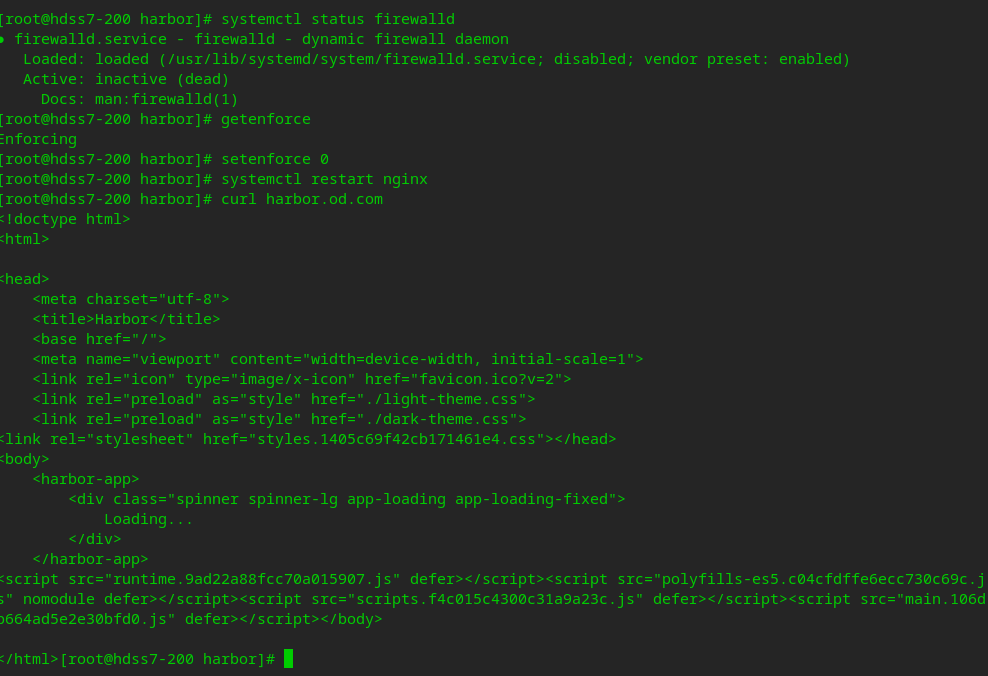

Use nginx as a reverse proxy for harbor

# Install nginx

yum install nginx -y

# Configure the nginx file

vi /etc/nginx/conf.d/harbor.od.com.conf

Note: client_max_body_size 1000m; is needed because harbor image layers vary in size, and a push may report an error if it is not configured.

server {

listen 80;

server_name harbor.od.com;

client_max_body_size 1000m;

location / {

proxy_pass http://127.0.0.1:180;

}

}

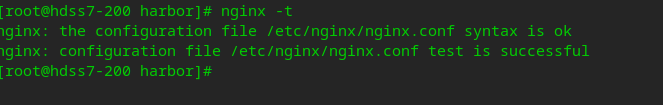

# Check whether the configuration is correct

nginx -t

# Enable nginx at boot

systemctl enable nginx

# Start the nginx service

systemctl start nginx

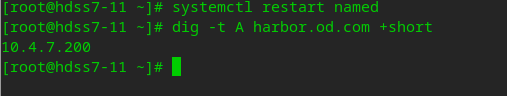

Add harbor domain name to dns server

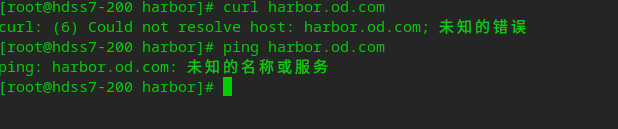

# The domain name is found to be unreachable

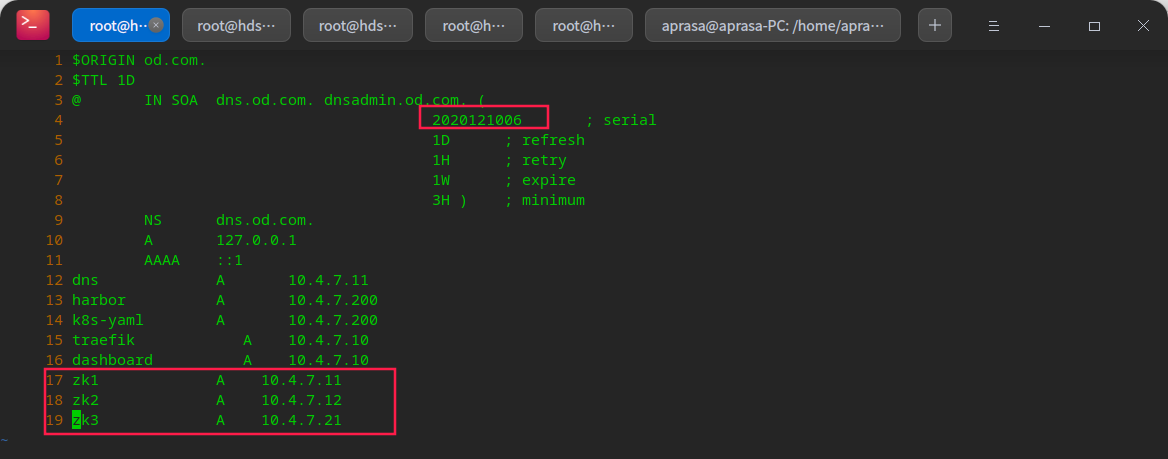

# Add the domain name on hdss7-11

vi /var/named/od.com.zone

# Restart the named service

systemctl restart named
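When a zone file is edited it is common practice to roll the SOA serial forward as well, so that any secondary server notices the change. The sketch below computes the next serial in the `YYYYMMDDNN` style used by `2020121001`. The helper name `next_serial` is hypothetical, and the record mentioned in the comment assumes harbor runs on hdss7-200, which the screenshots rather than the text confirm.

```shell
#!/bin/sh
# Compute the next zone serial in YYYYMMDDNN form.
#   $1 = current serial, $2 = today's date as YYYYMMDD
next_serial() {
    current="$1"
    today="$2"
    if [ "${current%??}" = "$today" ]; then
        # Same day: bump the two-digit revision counter.
        echo $(( current + 1 ))
    else
        # New day: start again at revision 01.
        echo "${today}01"
    fi
}

next_serial 2020121001 20201210     # prints 2020121002
next_serial 2020121001 20201211     # prints 2020121101

# The record to append to od.com.zone would then look like (assumed address):
#   harbor A 10.4.7.200
```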

As you can see, once dns is configured, curl can reach the domain

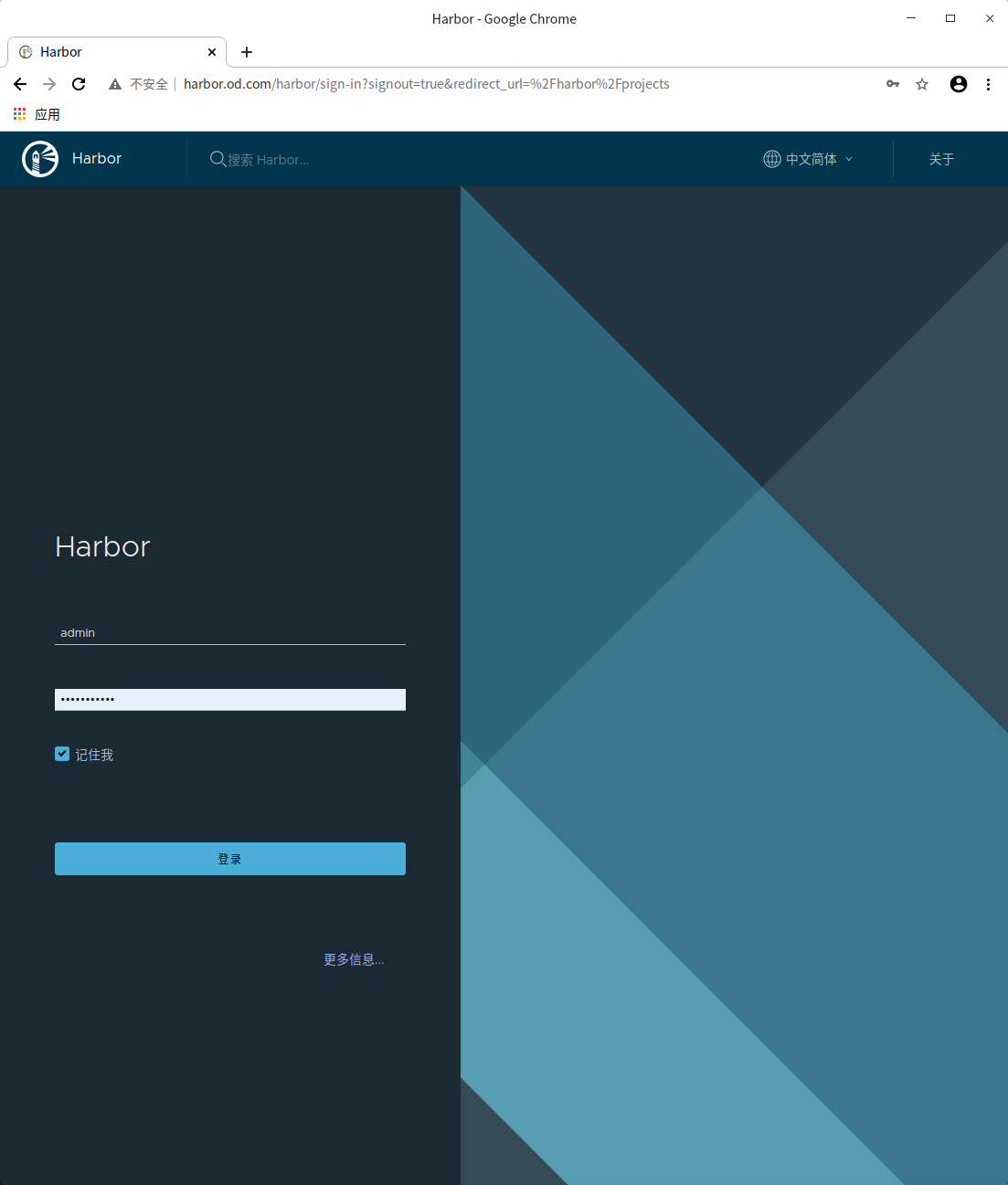

Visit harbor via browser

http://harbor.od.com/

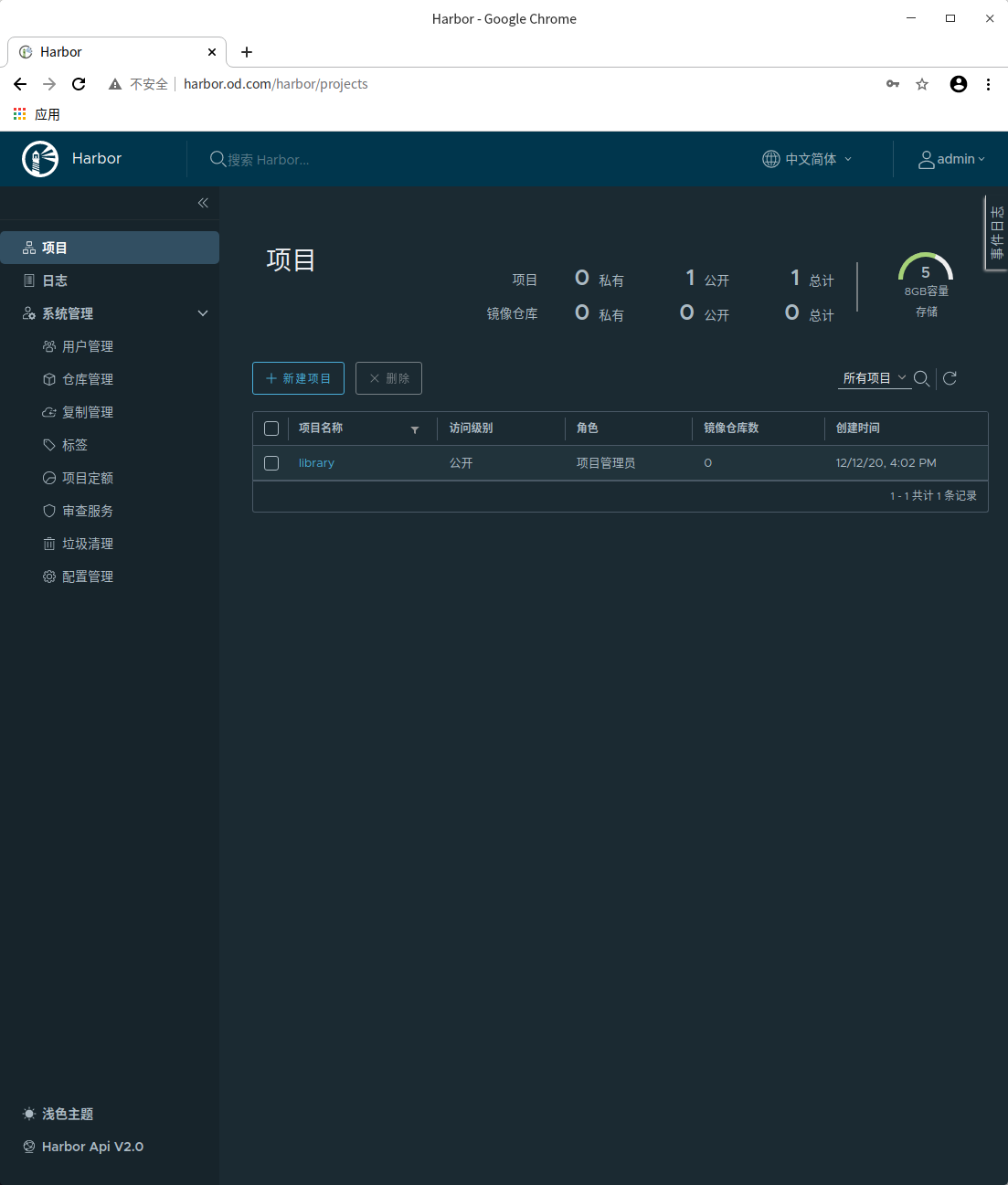

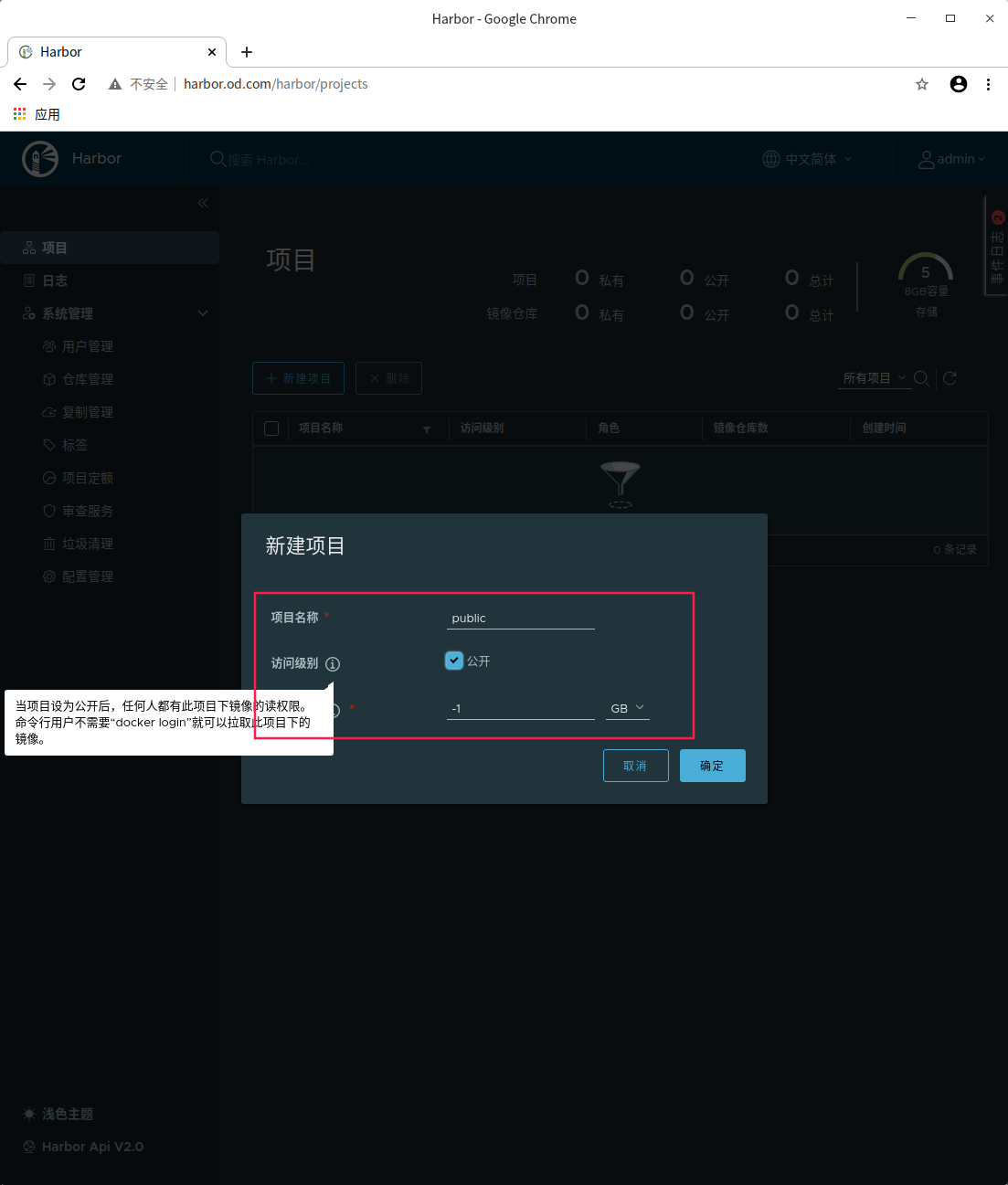

Create a new public project

Test the self-built public harbor project

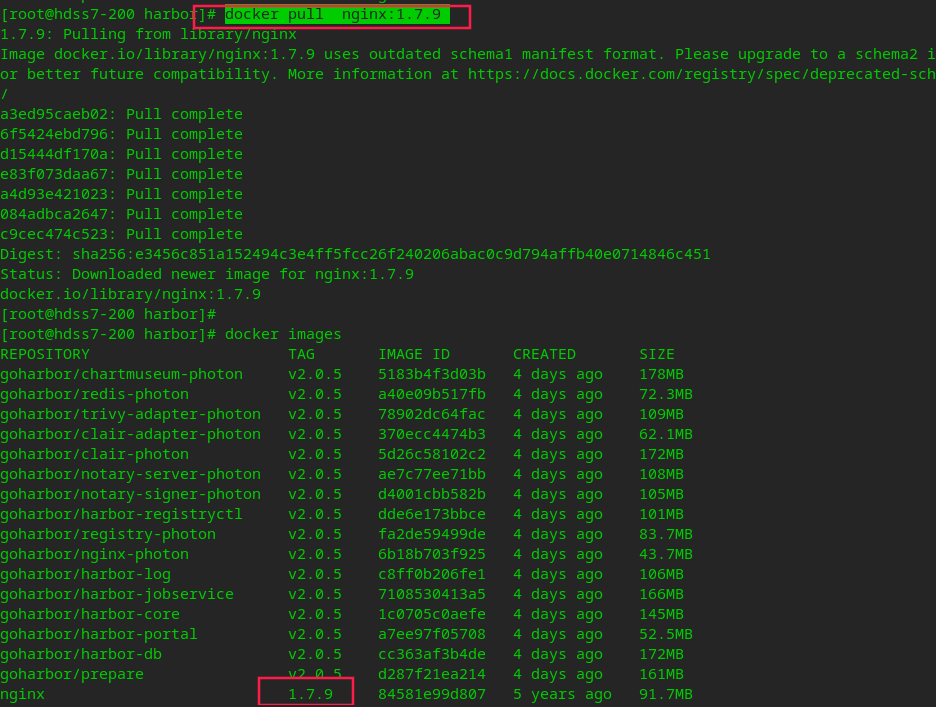

# Pull an nginx image from the public network docker pull nginx:1.7.9

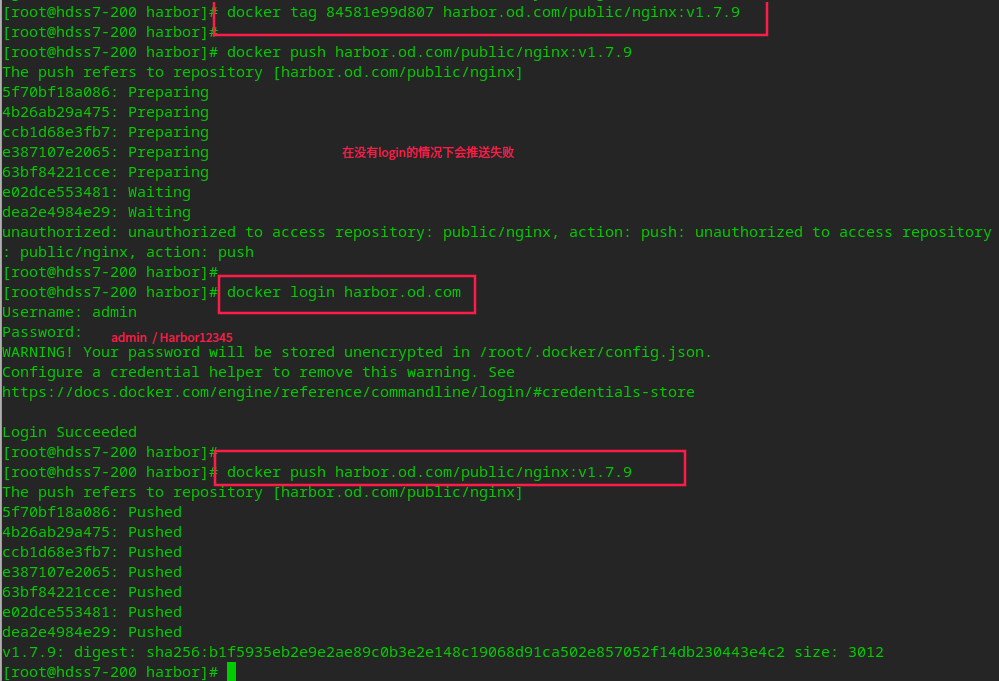

# Hit tag docker tag 84581e99d807 harbor.od.com/public/nginx:v1.7.9 # Push to local harbor warehouse docker login harbor.od.com docker push harbor.od.com/public/nginx:v1.7.9

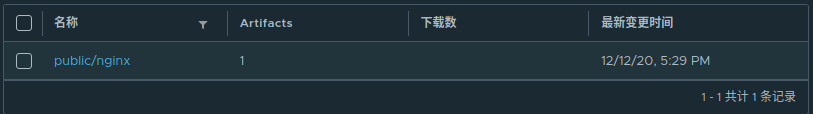

You can see the successful push on the harbor portal

Install the Master node

Deploy kube master node service

Deploy etcd node

Cluster planning

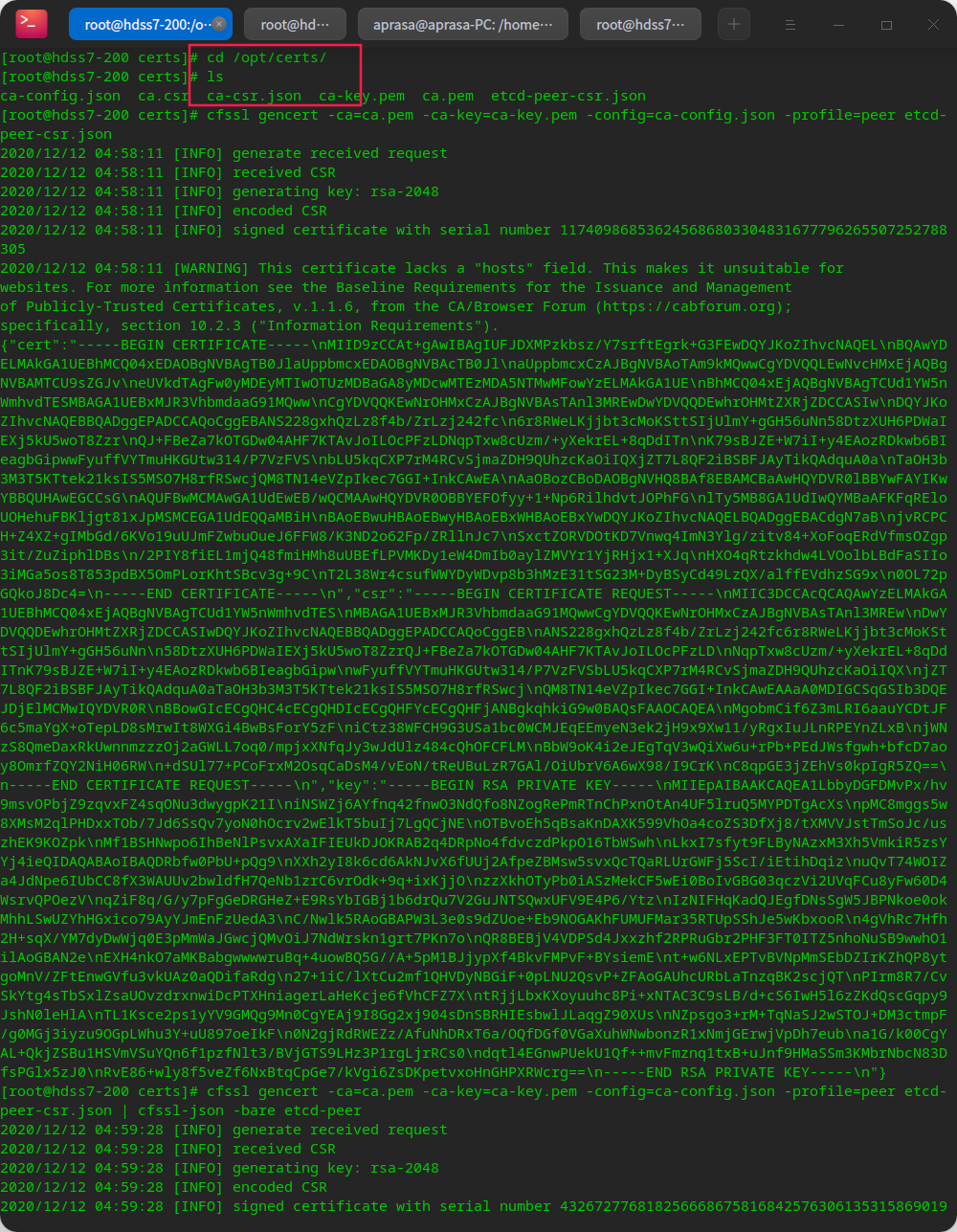

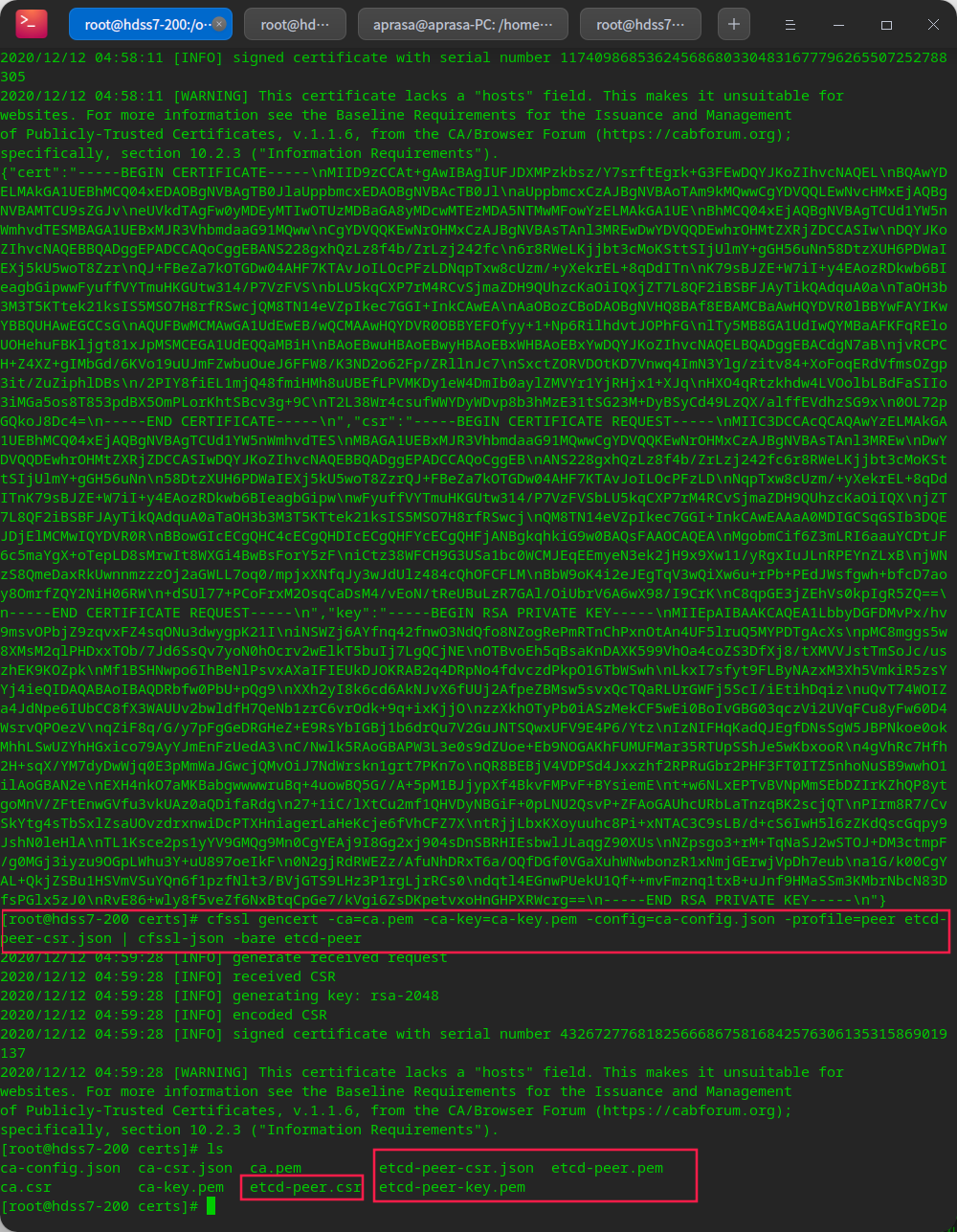

Create etcd server certificate

# Create the ca configuration on the hdss7-200 machine

vi /opt/certs/ca-config.json

{

"signing": {

"default": {

"expiry": "438000h"

},

"profiles": {

"server": {

"expiry": "438000h",

"usages": [

"signing",

"key encipherment",

"server auth"

]

},

"client": {

"expiry": "438000h",

"usages": [

"signing",

"key encipherment",

"client auth"

]

},

"peer": {

"expiry": "438000h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

# Create the etcd self-signed certificate request

vi /opt/certs/etcd-peer-csr.json

hosts also lists 10.4.7.11 as a spare: if one of the other three nodes goes down, 10.4.7.11 can be brought in as a standby without reissuing the certificate

{

"CN": "k8s-etcd",

"hosts": [

"10.4.7.11",

"10.4.7.12",

"10.4.7.21",

"10.4.7.22"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "GuangZhou",

"L": "GuangZhou",

"O": "k8s",

"OU": "yw"

}

]

}

# Issue the certificate

cd /opt/certs/

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json | cfssl-json -bare etcd-peer

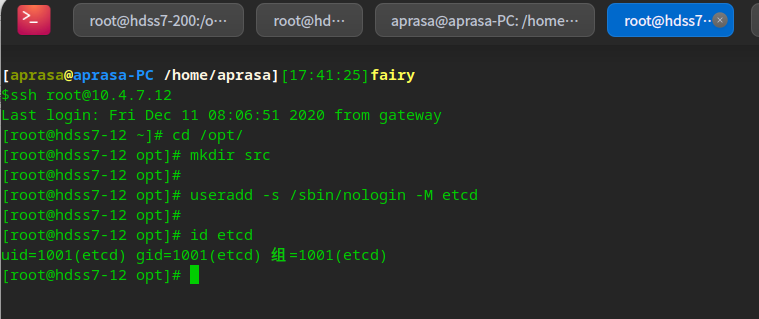

Create etcd user

useradd -s /sbin/nologin -M etcd

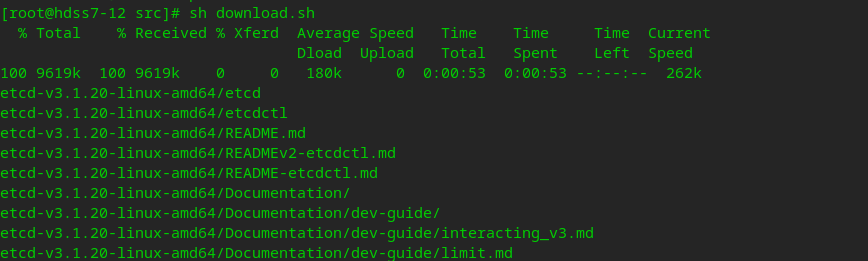

Download etcd software

cd /opt/src/

# Following the instructions at https://github.com/etcd-io/etcd/releases/tag/v3.1.20, save the download script locally, then execute it

sh download.sh

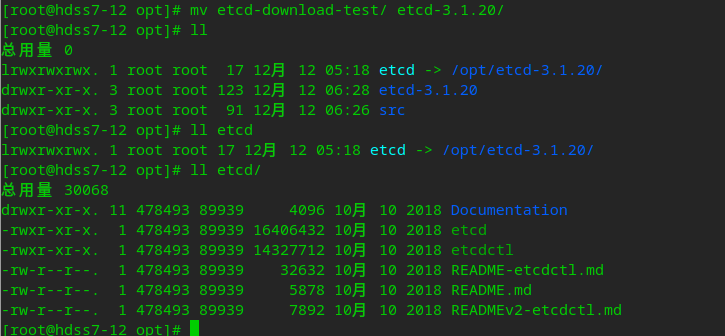

Deploy etcd

mv etcd-download-test/ etcd-3.1.20/

ln -s /opt/etcd-3.1.20/ /opt/etcd

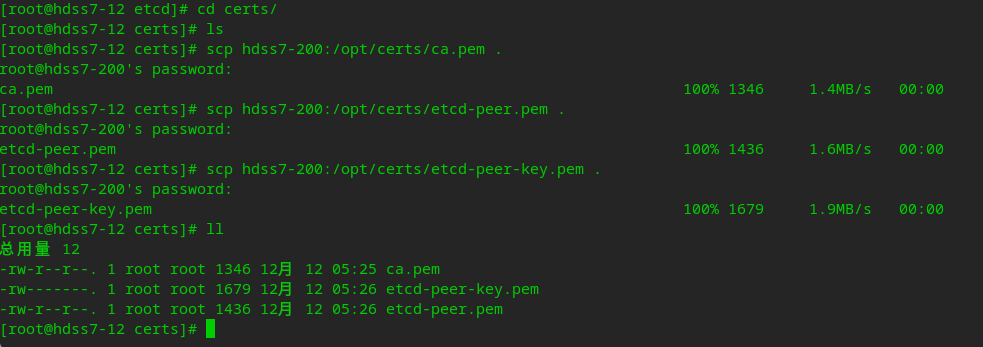

# Create related directories

mkdir -p /opt/etcd/certs /data/etcd /data/logs/etcd-server

# Copy the certificates to the current system

cd /opt/etcd/certs

scp hdss7-200:/opt/certs/ca.pem .

scp hdss7-200:/opt/certs/etcd-peer.pem .

scp hdss7-200:/opt/certs/etcd-peer-key.pem .

# The permission of the private key must be 600
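The note about the private key permission can be enforced and checked explicitly. A minimal sketch, assuming GNU `stat` (as found on CentOS); the function name `secure_private_key` is hypothetical.

```shell
#!/bin/sh
# Restrict a private key to its owner and print the resulting octal mode.
secure_private_key() {
    key_file="$1"
    chmod 600 "$key_file"
    stat -c '%a' "$key_file"        # expected to print 600
}

# Usage on an etcd node:
#   secure_private_key /opt/etcd/certs/etcd-peer-key.pem
```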

Create etcd startup script

# Create the startup script

vi /opt/etcd/etcd-server-startup.sh

#!/bin/sh

/opt/etcd/etcd --name etcd-server-7-12 \

--data-dir /data/etcd/etcd-server \

--listen-peer-urls https://10.4.7.12:2380 \

--listen-client-urls https://10.4.7.12:2379,http://127.0.0.1:2379 \

--quota-backend-bytes 8000000000 \

--initial-advertise-peer-urls https://10.4.7.12:2380 \

--advertise-client-urls https://10.4.7.12:2379,http://127.0.0.1:2379 \

--initial-cluster etcd-server-7-12=https://10.4.7.12:2380,etcd-server-7-21=https://10.4.7.21:2380,etcd-server-7-22=https://10.4.7.22:2380 \

--ca-file /opt/etcd/certs/ca.pem \

--cert-file /opt/etcd/certs/etcd-peer.pem \

--key-file /opt/etcd/certs/etcd-peer-key.pem \

--client-cert-auth \

--trusted-ca-file /opt/etcd/certs/ca.pem \

--peer-ca-file /opt/etcd/certs/ca.pem \

--peer-cert-file /opt/etcd/certs/etcd-peer.pem \

--peer-key-file /opt/etcd/certs/etcd-peer-key.pem \

--peer-client-cert-auth \

--peer-trusted-ca-file /opt/etcd/certs/ca.pem \

--log-output stdout

# Give the script execution permission

chmod +x /opt/etcd/etcd-server-startup.sh

# Change owner and group

chown -R etcd:etcd /opt/etcd-3.1.20/

chown -R etcd:etcd /data/etcd/

chown -R etcd:etcd /data/logs/etcd-server/
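The startup script above is written for 10.4.7.12; on the other two members the `--name` value and the listen/advertise addresses change, while `--initial-cluster` stays identical everywhere. The sketch below derives both strings from the member IPs, following the naming pattern visible in the script. The function names are hypothetical.

```shell
#!/bin/sh
# Member name for an IP: 10.4.7.21 -> etcd-server-7-21
etcd_name_for_ip() {
    addr="$1"
    rest="${addr#*.*.}"             # drop the first two octets: 7.21
    echo "etcd-server-${rest%%.*}-${rest#*.}"
}

# Build the --initial-cluster value from a list of member IPs.
initial_cluster() {
    result=""
    for member_ip in "$@"; do
        entry="$(etcd_name_for_ip "$member_ip")=https://${member_ip}:2380"
        result="${result:+${result},}${entry}"
    done
    echo "$result"
}

etcd_name_for_ip 10.4.7.21
initial_cluster 10.4.7.12 10.4.7.21 10.4.7.22
```

The second call reproduces the `--initial-cluster` string used in the script, which gives a quick way to check a hand-edited copy on each node.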

Run etcd in the background through Supervisor

# Install the software that manages background processes

yum install supervisor -y

systemctl start supervisord

systemctl enable supervisord

# Create a startup file for supervisor

vi /etc/supervisord.d/etcd-server.ini

[program:etcd-server-7-12]

command=/opt/etcd/etcd-server-startup.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/etcd ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=etcd ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/etcd-server/etcd.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

killasgroup=true

stopasgroup=true

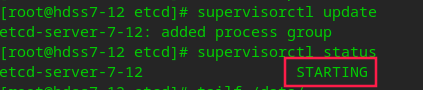

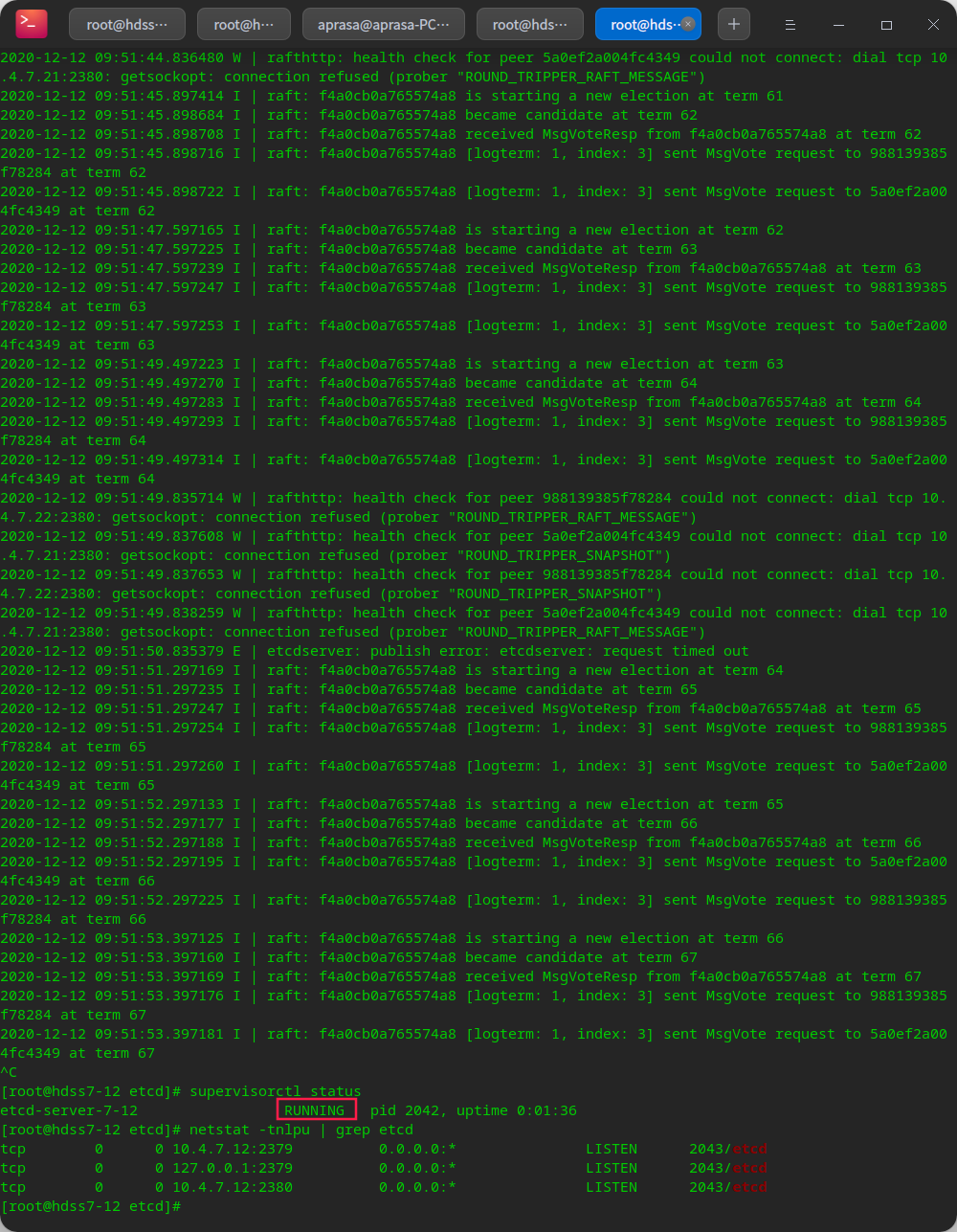

# Load the new configuration and view the background tasks

supervisorctl update

supervisorctl status

You can view the log with tail /data/logs/etcd-server/etcd.stdout.log; etcd comes up after a short while.

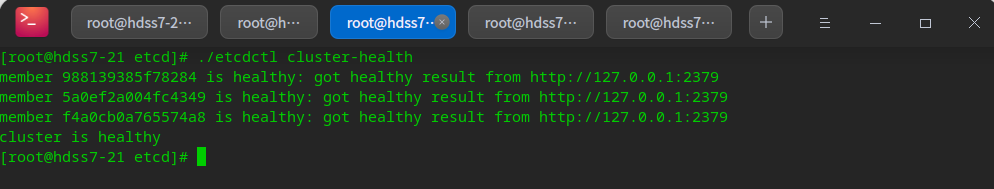

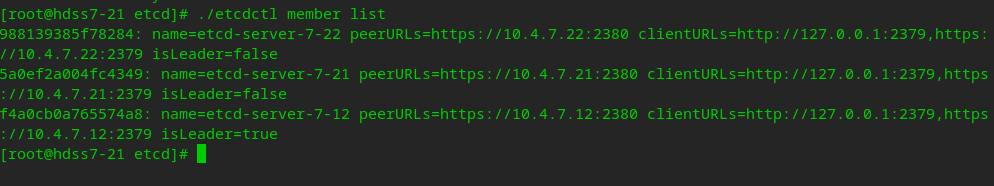

After the three machines have been configured in turn, check the cluster

./etcdctl cluster-health

./etcdctl member list

Deploy apiserver

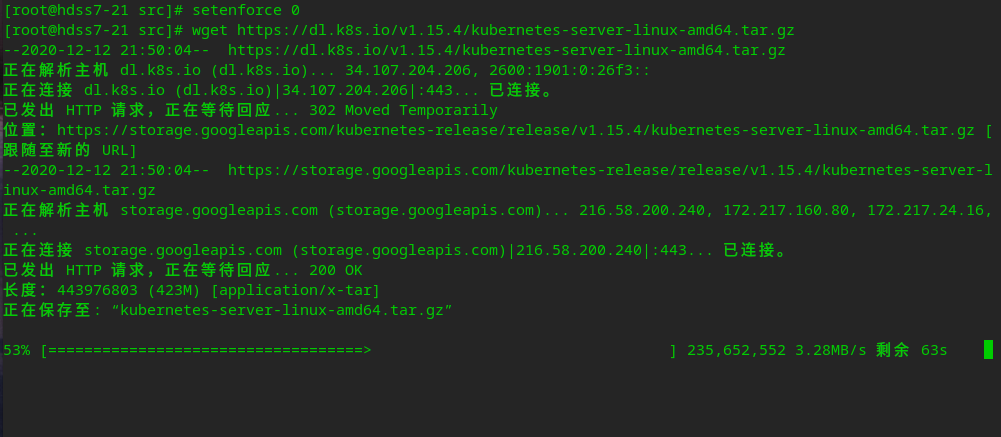

Download apiserver software

# Download link found in the official GitHub changelog: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.15.md#downloads-for-v1154

wget https://dl.k8s.io/v1.15.4/kubernetes-server-linux-amd64.tar.gz

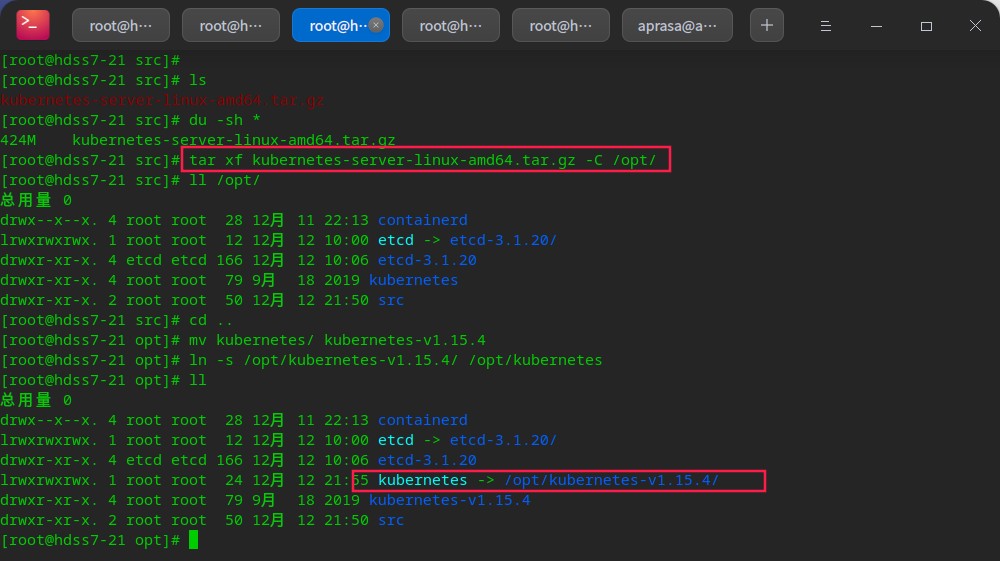

Deploy apiserver

# Unzip and deploy to the specified directory tar xf kubernetes-server-linux-amd64.tar.gz -C /opt/ mv kubernetes/ kubernetes-v1.15.4 ln -s /opt/kubernetes-v1.15.4/ /opt/kubernetes

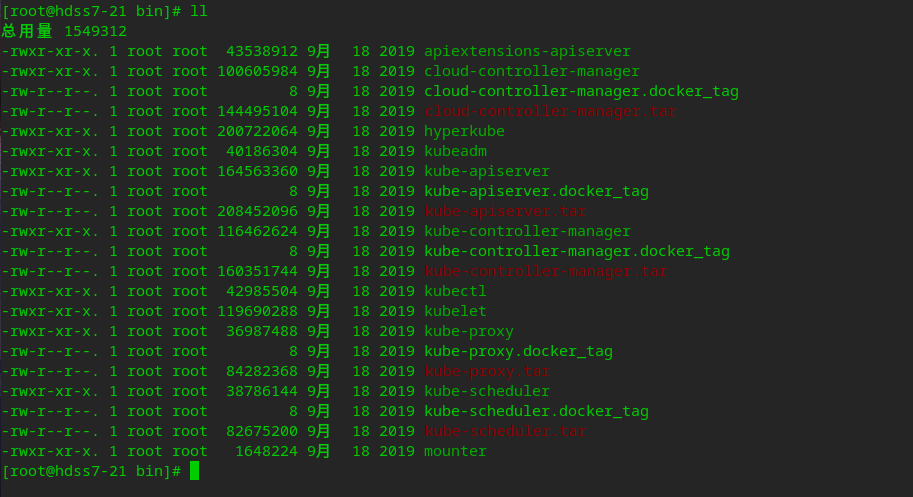

/Under opt/kubernetes/server/bin tar and docker_tag is a docker image. Since it is not deployed in kubedm, files related to docker can be deleted

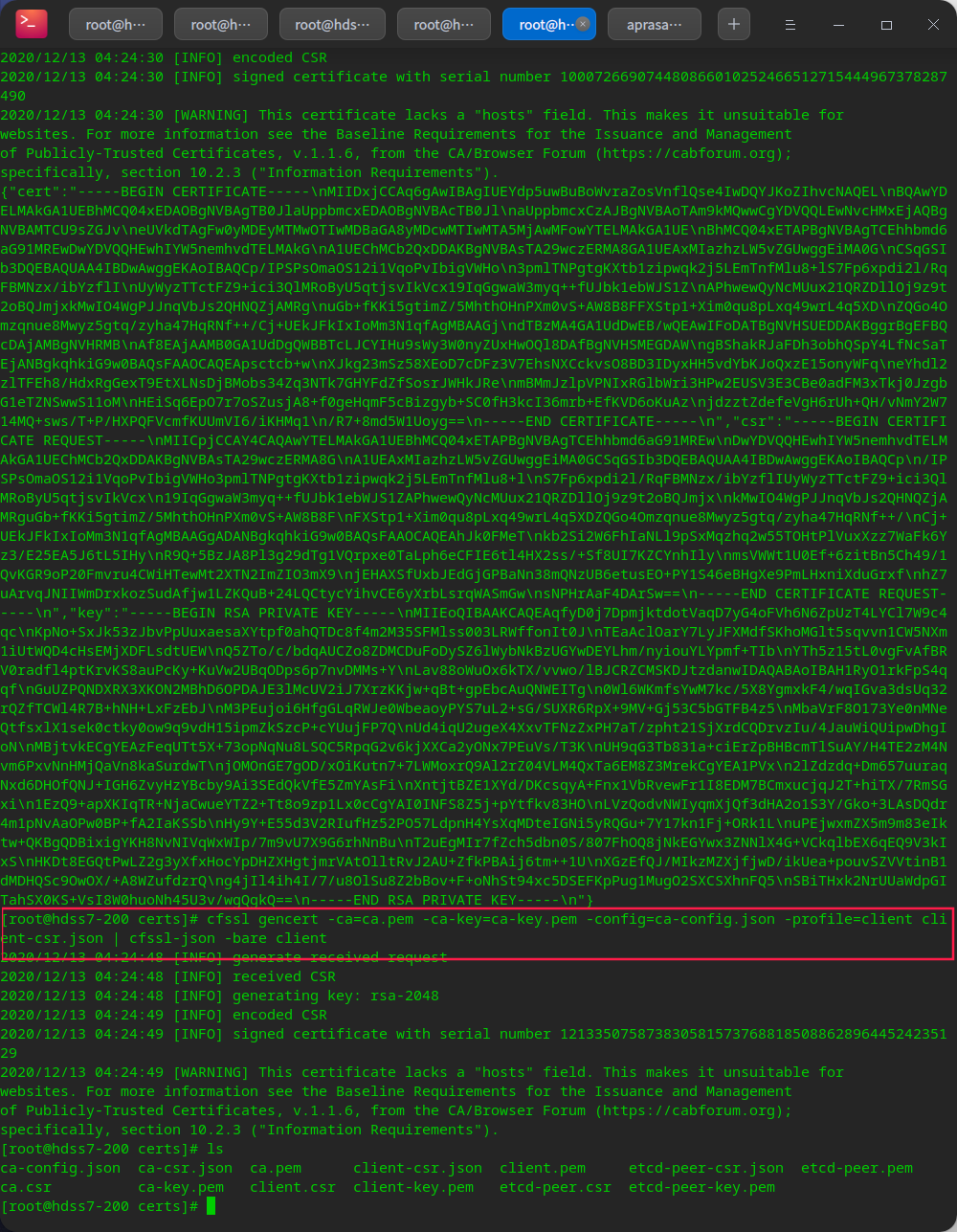

Issue client certificate

Issue certificate on hdss7-200 machine

# Certificate for communication between apiserver (client) and etcd (server)

vi /opt/certs/client-csr.json

{

"CN": "k8s-node",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Hangzhou",

"L": "Hangzhou",

"O": "od",

"OU": "ops"

}

]

}

cd /opt/certs

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client-csr.json | cfssl-json -bare client

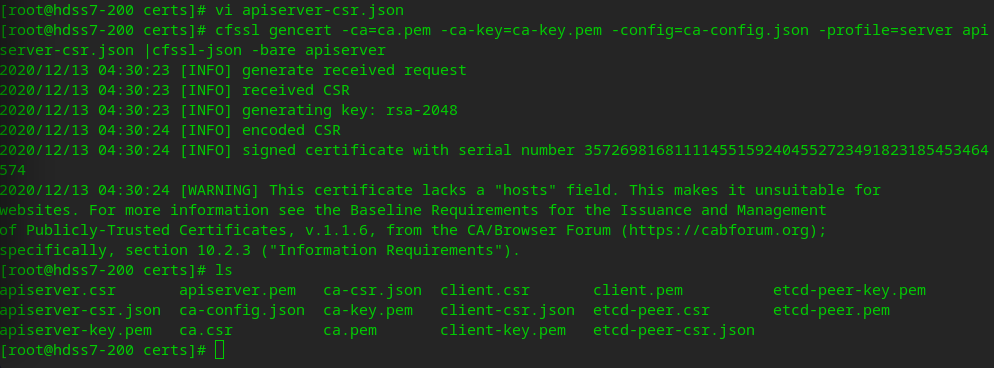

Issue apiserver certificate

vi apiserver-csr.json

{

"CN": "k8s-apiserver",

"hosts": [

"127.0.0.1",

"192.168.0.1",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local",

"10.4.7.10",

"10.4.7.21",

"10.4.7.22",

"10.4.7.23"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "od",

"OU": "ops"

}

]

}

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server apiserver-csr.json |cfssl-json -bare apiserver
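The `192.168.0.1` entry in `hosts` is there because the built-in `kubernetes` Service takes the first address of the service range, which this guide sets to `192.168.0.0/16` in the apiserver startup script. A small sketch of that derivation, assuming the network address ends in an octet below 255; the function name `first_service_ip` is hypothetical.

```shell
#!/bin/sh
# First usable address of a service CIDR, e.g. 192.168.0.0/16 -> 192.168.0.1
first_service_ip() {
    cidr="$1"
    network="${cidr%/*}"            # 192.168.0.0
    prefix="${network%.*}"          # 192.168.0
    last="${network##*.}"           # 0
    echo "${prefix}.$(( last + 1 ))"
}

first_service_ip 192.168.0.0/16     # prints 192.168.0.1
```

If the service range is changed, the certificate needs the corresponding first address in `hosts`, otherwise in-cluster clients may reject the apiserver certificate.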

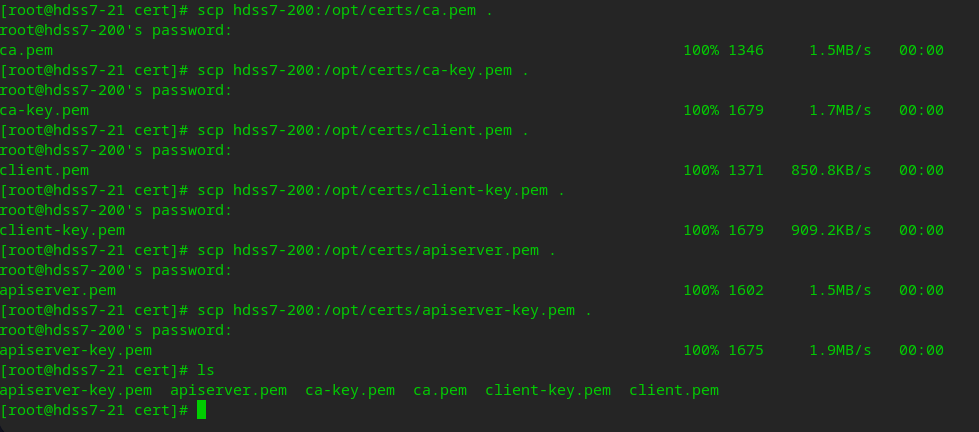

Copy the certificate to apiserver

cd /opt/kubernetes/server/bin

mkdir cert

cd cert/

scp hdss7-200:/opt/certs/ca.pem .

scp hdss7-200:/opt/certs/ca-key.pem .

scp hdss7-200:/opt/certs/client.pem .

scp hdss7-200:/opt/certs/client-key.pem .

scp hdss7-200:/opt/certs/apiserver.pem .

scp hdss7-200:/opt/certs/apiserver-key.pem .

Create apiserver startup profile

cd /opt/kubernetes/server/bin

mkdir conf

cd conf/

vi audit.yaml

apiVersion: audit.k8s.io/v1beta1 # This is required.

kind: Policy

# Don't generate audit events for all requests in RequestReceived stage.

omitStages:

  - "RequestReceived"

rules:

  # Log pod changes at RequestResponse level

  - level: RequestResponse

    resources:

    - group: ""

      # Resource "pods" doesn't match requests to any subresource of pods,

      # which is consistent with the RBAC policy.

      resources: ["pods"]

  # Log "pods/log", "pods/status" at Metadata level

  - level: Metadata

    resources:

    - group: ""

      resources: ["pods/log", "pods/status"]

  # Don't log requests to a configmap called "controller-leader"

  - level: None

    resources:

    - group: ""

      resources: ["configmaps"]

      resourceNames: ["controller-leader"]

  # Don't log watch requests by the "system:kube-proxy" on endpoints or services

  - level: None

    users: ["system:kube-proxy"]

    verbs: ["watch"]

    resources:

    - group: "" # core API group

      resources: ["endpoints", "services"]

  # Don't log authenticated requests to certain non-resource URL paths.

  - level: None

    userGroups: ["system:authenticated"]

    nonResourceURLs:

    - "/api*" # Wildcard matching.

    - "/version"

  # Log the request body of configmap changes in kube-system.

  - level: Request

    resources:

    - group: "" # core API group

      resources: ["configmaps"]

    # This rule only applies to resources in the "kube-system" namespace.

    # The empty string "" can be used to select non-namespaced resources.

    namespaces: ["kube-system"]

  # Log configmap and secret changes in all other namespaces at the Metadata level.

  - level: Metadata

    resources:

    - group: "" # core API group

      resources: ["secrets", "configmaps"]

  # Log all other resources in core and extensions at the Request level.

  - level: Request

    resources:

    - group: "" # core API group

    - group: "extensions" # Version of group should NOT be included.

  # A catch-all rule to log all other requests at the Metadata level.

  - level: Metadata

    # Long-running requests like watches that fall under this rule will not

    # generate an audit event in RequestReceived.

    omitStages:

      - "RequestReceived"

Create apiserver startup script

cd /opt/kubernetes/server/bin

vi kube-apiserver.sh

#!/bin/bash

./kube-apiserver \

--apiserver-count 2 \

--audit-log-path /data/logs/kubernetes/kube-apiserver/audit-log \

--audit-policy-file ./conf/audit.yaml \

--authorization-mode RBAC \

--client-ca-file ./cert/ca.pem \

--requestheader-client-ca-file ./cert/ca.pem \

--enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota \

--etcd-cafile ./cert/ca.pem \

--etcd-certfile ./cert/client.pem \

--etcd-keyfile ./cert/client-key.pem \

--etcd-servers https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \

--service-account-key-file ./cert/ca-key.pem \

--service-cluster-ip-range 192.168.0.0/16 \

--service-node-port-range 3000-29999 \

--target-ram-mb=1024 \

--kubelet-client-certificate ./cert/client.pem \

--kubelet-client-key ./cert/client-key.pem \

--log-dir /data/logs/kubernetes/kube-apiserver \

--tls-cert-file ./cert/apiserver.pem \

--tls-private-key-file ./cert/apiserver-key.pem \

--v 2

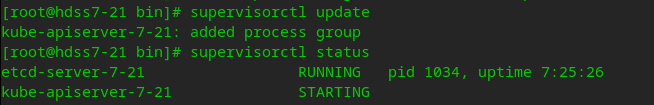

Start apiserver in the background through Supervisor

chmod +x kube-apiserver.sh

mkdir -p /data/logs/kubernetes/kube-apiserver

vi /etc/supervisord.d/kube-apiserver.ini

[program:kube-apiserver-7-21]

command=/opt/kubernetes/server/bin/kube-apiserver.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false

supervisorctl update

# View status

supervisorctl status

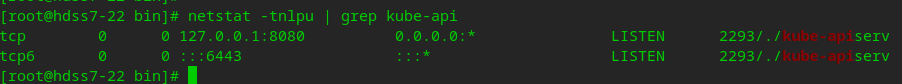

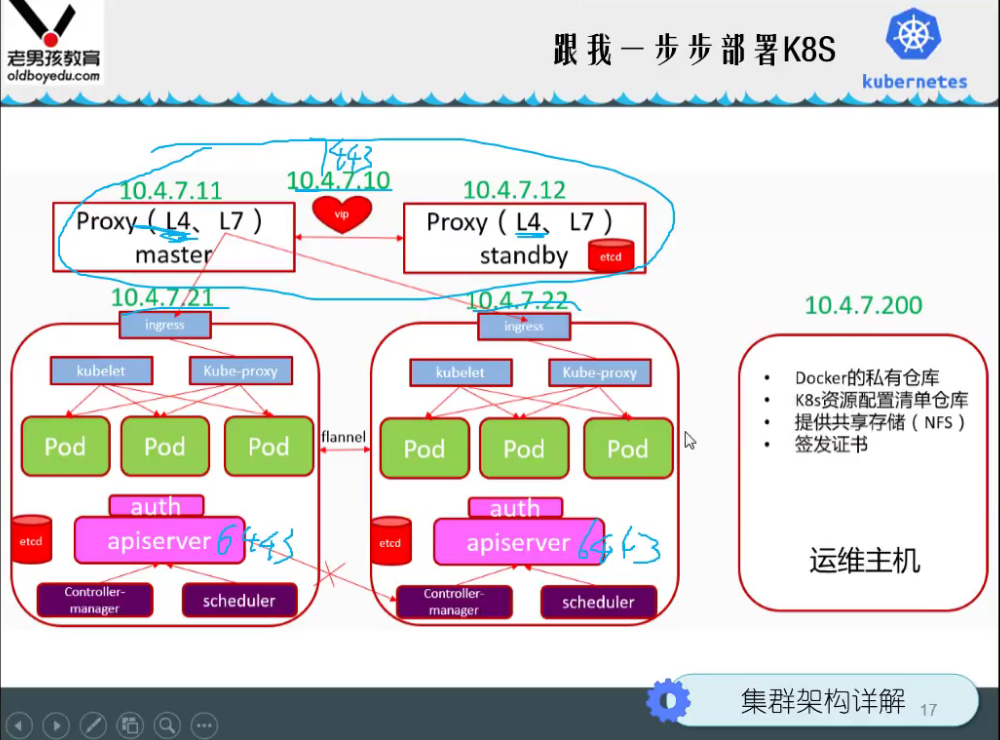

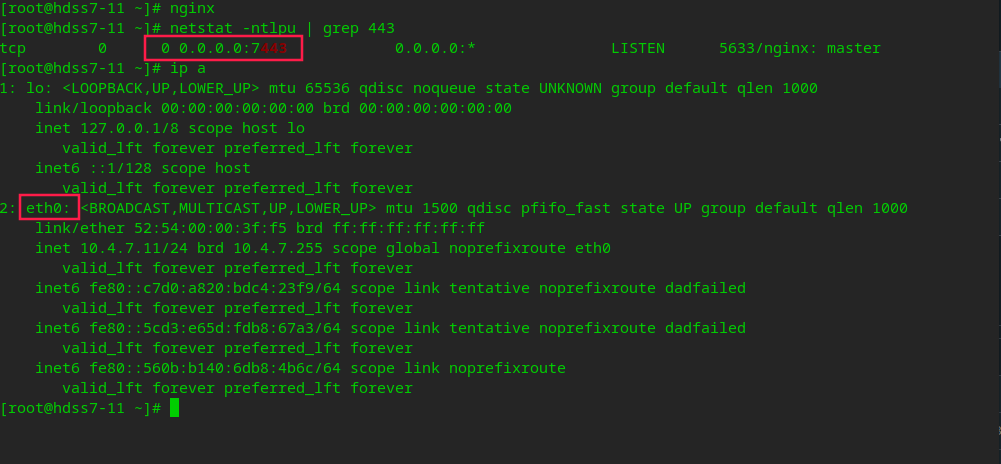

Install nginx as a layer-4 reverse proxy

# View the ports occupied by apiserver

netstat -tnlpu | grep kube-api

Install nginx

# Install nginx on 7-11 and 7-12

yum install nginx -y

Configure the layer-4 reverse proxy

# Note: append this at the end of the file. Do not put it inside the http block, which is the layer-7 configuration. It maps port 6443 to 7443

# stream is the layer-4 configuration

vi /etc/nginx/nginx.conf

stream {

upstream kube-apiserver {

server 10.4.7.21:6443 max_fails=3 fail_timeout=30s;

server 10.4.7.22:6443 max_fails=3 fail_timeout=30s;

}

server {

listen 7443;

proxy_connect_timeout 2s;

proxy_timeout 900s;

proxy_pass kube-apiserver;

}

}

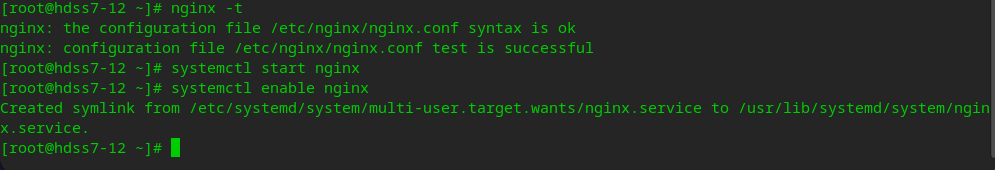

Start nginx

# Check the nginx configuration

nginx -t

# Start nginx

systemctl start nginx

systemctl enable nginx

Install keepalived and run vip

Install keepalived

yum install keepalived -y

Configure keepalived listening script

vi /etc/keepalived/check_port.sh

#!/bin/bash

CHK_PORT=$1

if [ -n "$CHK_PORT" ];then

PORT_PROCESS=`ss -lnt|grep $CHK_PORT|wc -l`

if [ $PORT_PROCESS -eq 0 ];then

echo "Port $CHK_PORT Is Not Used,End."

exit 1

fi

else

echo "Check Port Cant Be Empty!"

fi

chmod +x /etc/keepalived/check_port.sh

keepalived master

# Keep a backup of the default configuration

mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.template

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id 10.4.7.11

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 251

priority 100

advert_int 1

mcast_src_ip 10.4.7.11

nopreempt

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

10.4.7.10

}

}

keepalived backup

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id 10.4.7.12

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 251

priority 90

advert_int 1

mcast_src_ip 10.4.7.12

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

10.4.7.10

}

}

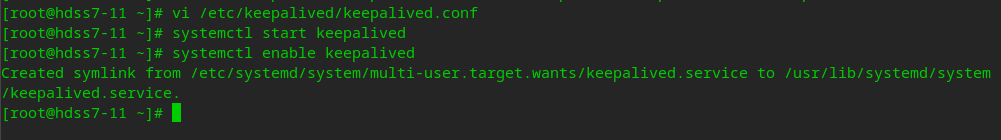

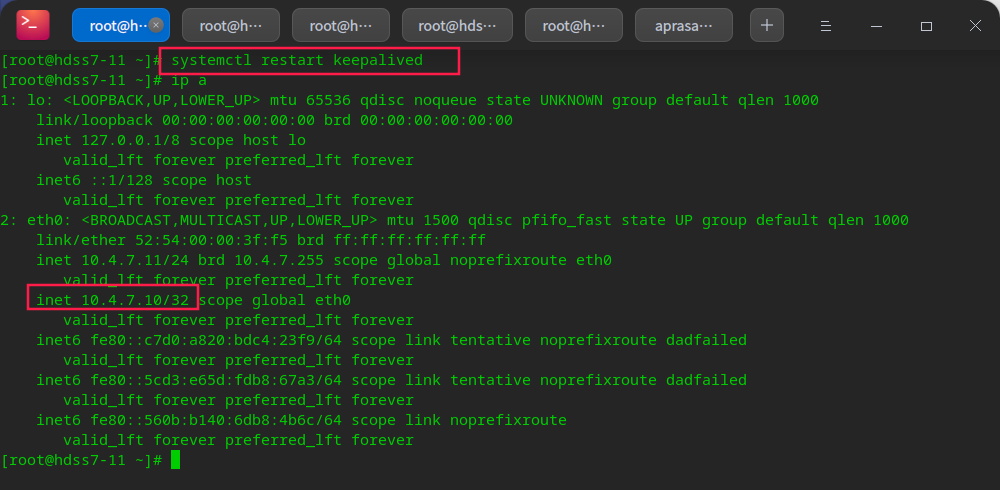

Start keepalived

systemctl start keepalived

systemctl enable keepalived

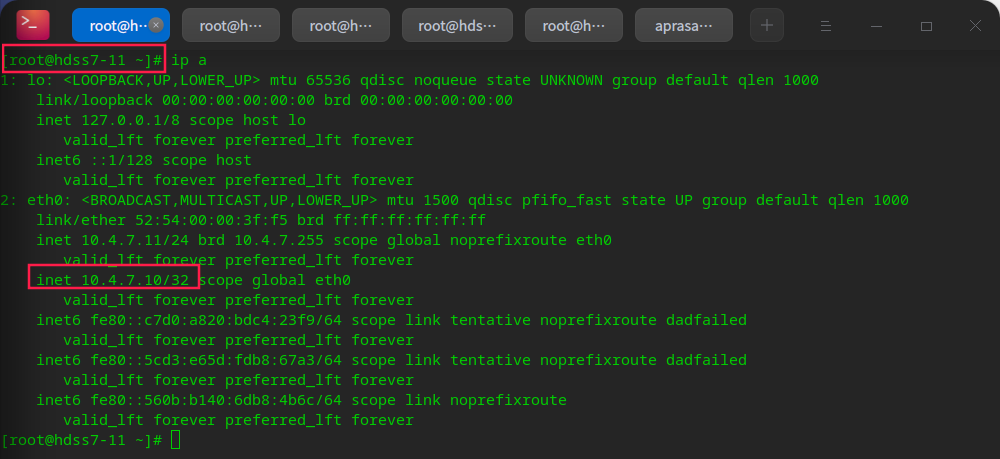

You can see that the vip is already on the primary node

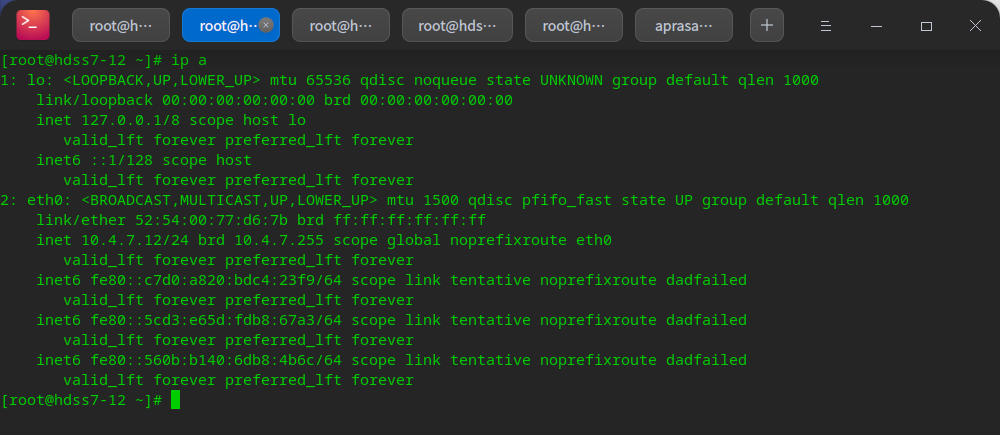

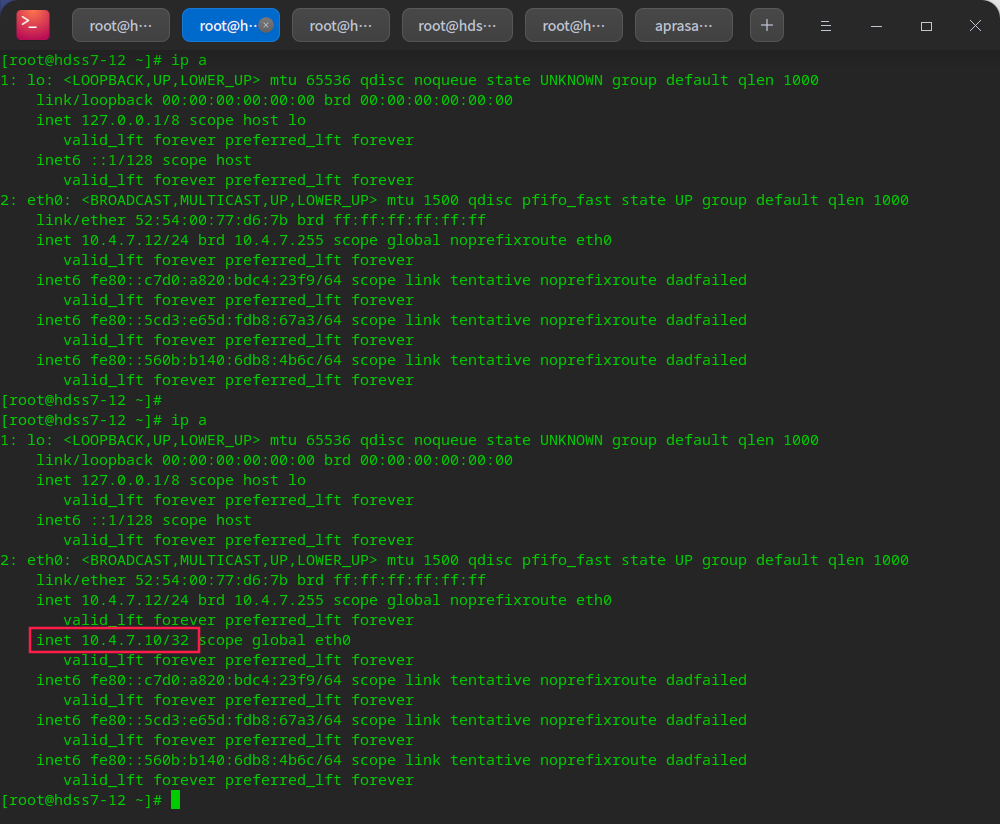

It is not present on the standby node

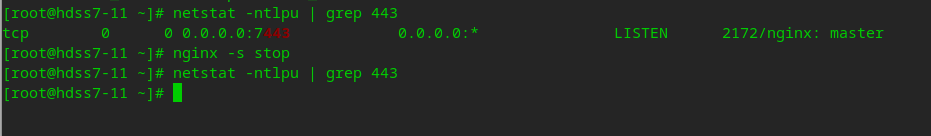

Test vip drift

# After stopping nginx on the primary node, you can see that the vip drifts to the standby node

nginx -s stop

# Note: do not configure nopreempt (non-preemptive mode) on the backup node

# This is because after the master node fails, the vip must drift to the backup node

# After the master node is repaired, the vip does not automatically drift back. In production, trigger the drift from the backup node to the primary node only after confirming that everything is safe

# After the repair, the vip drifts back only once the keepalived service on the master and backup nodes is restarted

systemctl restart keepalived
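The drift observed in this test follows from the `weight -20` setting in `vrrp_script`: with a negative weight, keepalived lowers a node's priority by that amount while the check script fails. A sketch of the arithmetic with the priorities from the two configurations above (100 and 90); the function name `effective_priority` is made up for illustration and only models the negative-weight case.

```shell
#!/bin/sh
# Effective VRRP priority for a node with a negative-weight check script.
#   $1 = configured priority, $2 = weight, $3 = 1 if the check passed, 0 if it failed
effective_priority() {
    base="$1"
    weight="$2"
    check_ok="$3"
    if [ "$weight" -lt 0 ] && [ "$check_ok" -eq 0 ]; then
        echo $(( base + weight ))   # failed check: priority is reduced
    else
        echo "$base"                # passing check: priority unchanged
    fi
}

echo "master, nginx stopped: $(effective_priority 100 -20 0)"   # 80
echo "backup, nginx running: $(effective_priority 90 -20 1)"    # 90
```

With nginx stopped on 10.4.7.11 its priority falls to 80, below the backup's 90, so 10.4.7.12 takes over 10.4.7.10.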

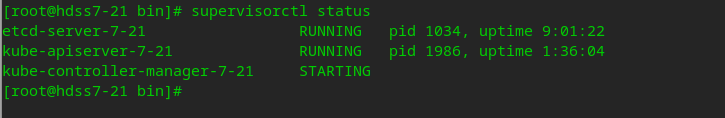

Deploy kube-controller-manager

On servers 10.4.7.21 and 10.4.7.22

Create the kube-controller-manager startup script

vi /opt/kubernetes/server/bin/kube-controller-manager.sh

#!/bin/sh

./kube-controller-manager \

--cluster-cidr 172.7.0.0/16 \

--leader-elect true \

--log-dir /data/logs/kubernetes/kube-controller-manager \

--master http://127.0.0.1:8080 \

--service-account-private-key-file ./cert/ca-key.pem \

--service-cluster-ip-range 192.168.0.0/16 \

--root-ca-file ./cert/ca.pem \

--v 2

chmod +x /opt/kubernetes/server/bin/kube-controller-manager.sh

mkdir -p /data/logs/kubernetes/kube-controller-manager

Start kube-controller-manager in the background through Supervisor

vi /etc/supervisord.d/kube-controller-manager.ini

[program:kube-controller-manager-7-21]

command=/opt/kubernetes/server/bin/kube-controller-manager.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-controller-manager/controller.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

# Load the new configuration and view the background tasks

supervisorctl update

supervisorctl status

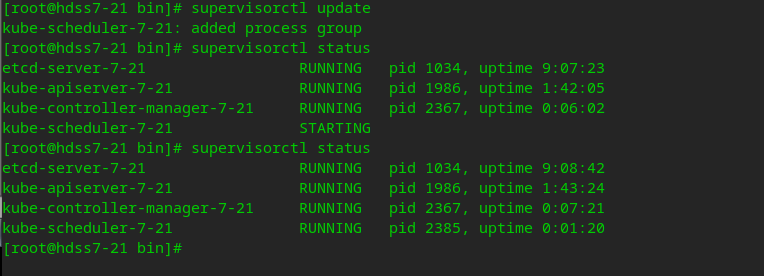

Deploy kube-scheduler

Create the kube-scheduler startup script

vi /opt/kubernetes/server/bin/kube-scheduler.sh

#!/bin/sh

./kube-scheduler \

--leader-elect \

--log-dir /data/logs/kubernetes/kube-scheduler \

--master http://127.0.0.1:8080 \

--v 2

chmod +x /opt/kubernetes/server/bin/kube-scheduler.sh

mkdir -p /data/logs/kubernetes/kube-scheduler

Start kube-scheduler in the background through Supervisor

vi /etc/supervisord.d/kube-scheduler.ini

[program:kube-scheduler-7-21]

command=/opt/kubernetes/server/bin/kube-scheduler.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

# Load the new configuration and view the background tasks

supervisorctl update

supervisorctl status

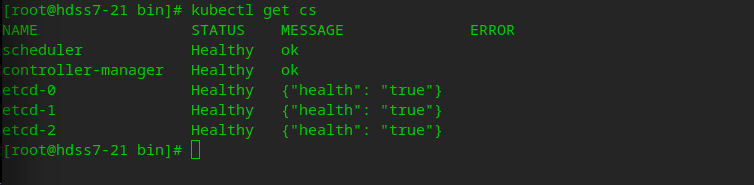

Check health status

Create kubectl soft link

ln -s /opt/kubernetes/server/bin/kubectl /usr/bin/kubectl

Check cluster health

# Get the status of the cluster components

kubectl get cs

Install compute node Worker

Deploy kubelet

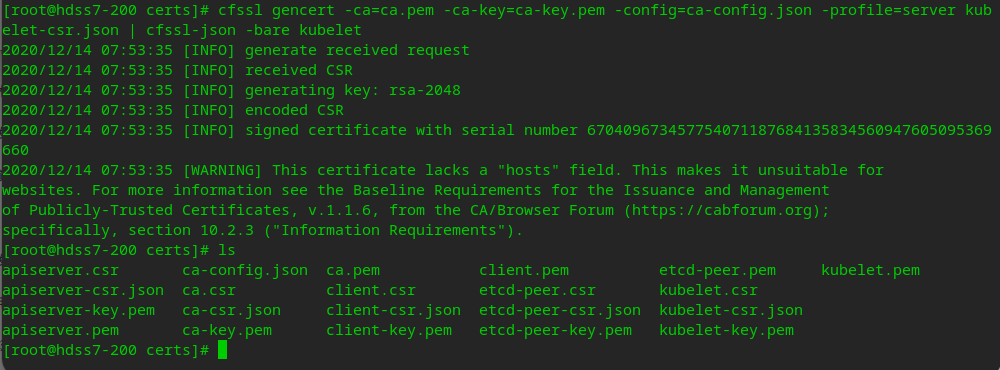

Issue certificate

# First, create the certificate request on hdss7-200

vi /opt/certs/kubelet-csr.json

{

"CN": "k8s-kubelet",

"hosts": [

"127.0.0.1",

"10.4.7.10",

"10.4.7.21",

"10.4.7.22",

"10.4.7.23",

"10.4.7.24",

"10.4.7.25",

"10.4.7.26",

"10.4.7.27",

"10.4.7.28"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

# Issue the server certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json | cfssl-json -bare kubelet

# Distribute the certificates to 21 and 22

cd /opt/kubernetes/server/bin/cert/

scp hdss7-200:/opt/certs/kubelet.pem .

scp hdss7-200:/opt/certs/kubelet-key.pem .

Create configuration

# Enter the conf directory and execute the following commands on 21 and 22

cd /opt/kubernetes/server/bin/conf

set-cluster

kubectl config set-cluster myk8s \

--certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \

--embed-certs=true \

--server=https://10.4.7.10:7443 \

--kubeconfig=kubelet.kubeconfig

set-credentials

kubectl config set-credentials k8s-node \

--client-certificate=/opt/kubernetes/server/bin/cert/client.pem \

--client-key=/opt/kubernetes/server/bin/cert/client-key.pem \

--embed-certs=true \

--kubeconfig=kubelet.kubeconfig

set-context

kubectl config set-context myk8s-context \

--cluster=myk8s \

--user=k8s-node \

--kubeconfig=kubelet.kubeconfig

use-context

kubectl config use-context myk8s-context --kubeconfig=kubelet.kubeconfig
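The same four `kubectl config` steps are repeated later for kube-proxy with a different user and certificate pair, so they can be wrapped in one function. This is a sketch only: the function name `make_kubeconfig` and its argument order are hypothetical, while the cluster name, CA path and server address are the ones used in this guide.

```shell
#!/bin/sh
# Build a kubeconfig for one client identity.
#   $1 = user name, $2 = client certificate, $3 = client key, $4 = output file
make_kubeconfig() {
    user="$1"
    cert="$2"
    key="$3"
    out="$4"
    kubectl config set-cluster myk8s \
        --certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \
        --embed-certs=true \
        --server=https://10.4.7.10:7443 \
        --kubeconfig="$out" &&
    kubectl config set-credentials "$user" \
        --client-certificate="$cert" \
        --client-key="$key" \
        --embed-certs=true \
        --kubeconfig="$out" &&
    kubectl config set-context myk8s-context \
        --cluster=myk8s \
        --user="$user" \
        --kubeconfig="$out" &&
    kubectl config use-context myk8s-context --kubeconfig="$out"
}

# Usage from /opt/kubernetes/server/bin/conf, equivalent to the steps above:
#   make_kubeconfig k8s-node ../cert/client.pem ../cert/client-key.pem kubelet.kubeconfig
```

Chaining the steps with `&&` stops at the first failure, so a half-written kubeconfig is easier to spot.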

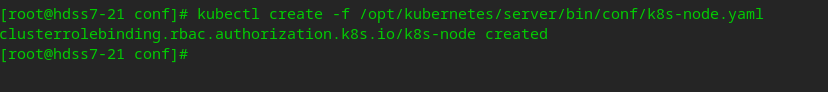

Create k8s node cluster resources

# Execute on hdss7-21 only. PS: whichever node creates the resource, it is stored in etcd and is therefore visible from every node

vi /opt/kubernetes/server/bin/conf/k8s-node.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

  name: k8s-node

roleRef:

  apiGroup: rbac.authorization.k8s.io

  kind: ClusterRole

  name: system:node

subjects:

- apiGroup: rbac.authorization.k8s.io

  kind: User

  name: k8s-node

kubectl create -f /opt/kubernetes/server/bin/conf/k8s-node.yaml

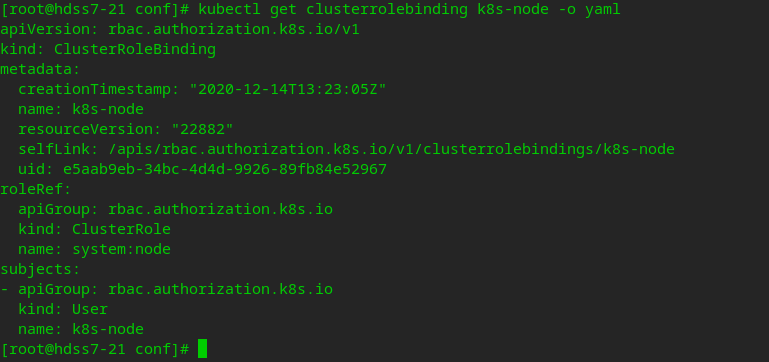

Check k8s-node resource creation status

kubectl get clusterrolebinding k8s-node -o yaml

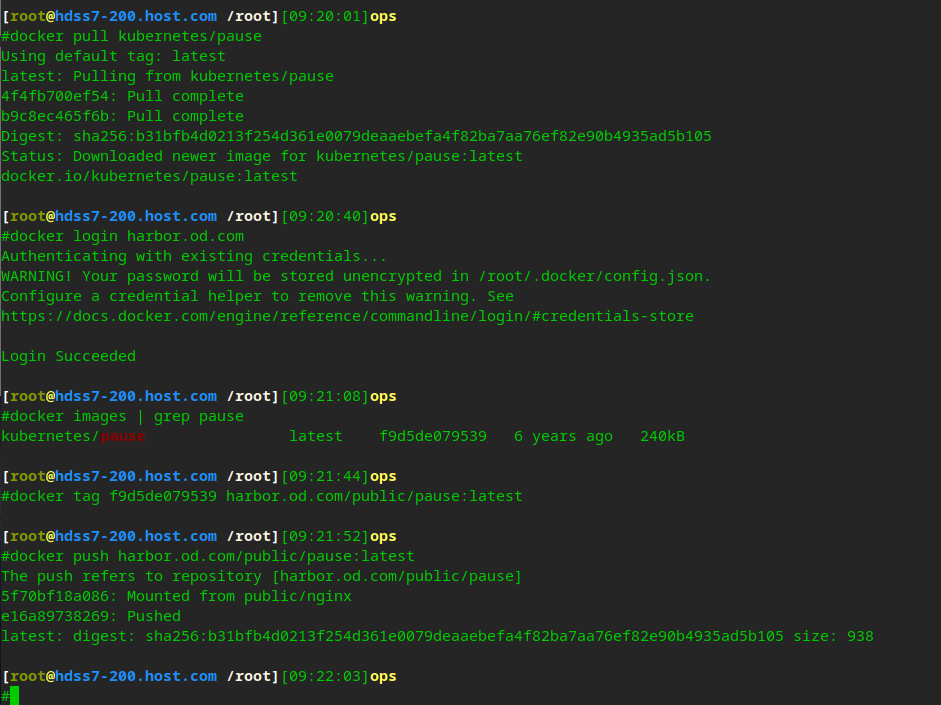

Create pause base image

# On the operations host 200

# Download the pause image from the public registry

docker pull kubernetes/pause

# Log in to the private registry

docker login harbor.od.com

# Tag and upload to the private registry

docker tag f9d5de079539 harbor.od.com/public/pause:latest

docker push harbor.od.com/public/pause:latest

Create kubelet startup script

# Create a startup script on nodes 21 and 22

vi /opt/kubernetes/server/bin/kubelet.sh

#!/bin/sh

./kubelet \

--anonymous-auth=false \

--cgroup-driver systemd \

--cluster-dns 192.168.0.2 \

--cluster-domain cluster.local \

--runtime-cgroups=/systemd/system.slice \

--kubelet-cgroups=/systemd/system.slice \

--fail-swap-on="false" \

--client-ca-file ./cert/ca.pem \

--tls-cert-file ./cert/kubelet.pem \

--tls-private-key-file ./cert/kubelet-key.pem \

--hostname-override hdss7-21.host.com \

--image-gc-high-threshold 20 \

--image-gc-low-threshold 10 \

--kubeconfig ./conf/kubelet.kubeconfig \

--log-dir /data/logs/kubernetes/kube-kubelet \

--pod-infra-container-image harbor.od.com/public/pause:latest \

--root-dir /data/kubelet

chmod +x /opt/kubernetes/server/bin/kubelet.sh

mkdir -p /data/logs/kubernetes/kube-kubelet

mkdir -p /data/kubelet

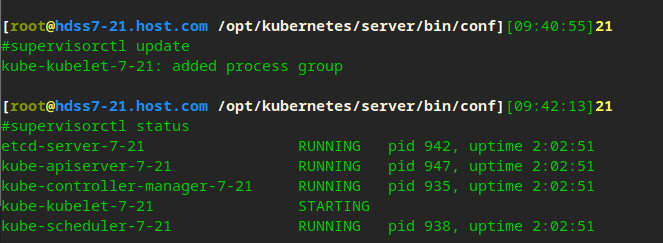

Start kubelet in the background through Supervisor

vi /etc/supervisord.d/kube-kubelet.ini

[program:kube-kubelet-7-21]

command=/opt/kubernetes/server/bin/kubelet.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

supervisorctl update

supervisorctl status
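The kubelet script and its Supervisor file are written for hdss7-21; on hdss7-22 the `--hostname-override` value and the `[program:...]` suffix need to change. A possible way to adapt copies of both files with `sed` is sketched below. The function name `retarget_for_node` is hypothetical, and the approach assumes the files follow the naming shown in this guide.

```shell
#!/bin/sh
# Rewrite node-specific names read from stdin.
#   $1 = source node suffix (e.g. 7-21), $2 = target node suffix (e.g. 7-22)
retarget_for_node() {
    from="$1"
    to="$2"
    # First expression: hostnames such as hdss7-21.host.com
    # Second expression: supervisor section headers such as [program:kube-kubelet-7-21]
    sed "s/hdss${from}\.host\.com/hdss${to}.host.com/g; s/\(\[program:[a-z-]*-\)${from}]/\1${to}]/"
}

echo '--hostname-override hdss7-21.host.com' | retarget_for_node 7-21 7-22
echo '[program:kube-kubelet-7-21]' | retarget_for_node 7-21 7-22
```

On a real node this could be used as `retarget_for_node 7-21 7-22 < kubelet.sh > kubelet.sh.new`, followed by a manual review of the result before replacing the file.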

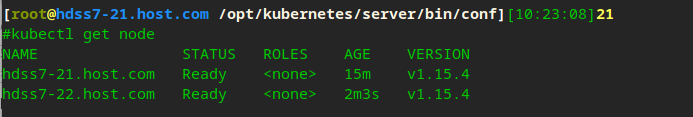

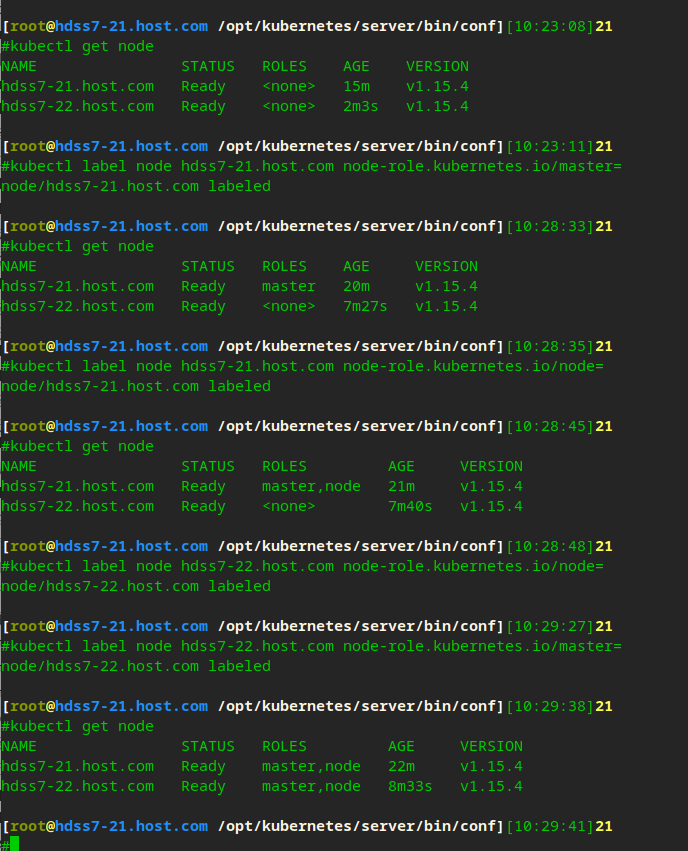

Label node

kubectl label node hdss7-21.host.com node-role.kubernetes.io/master=

Deploy kube-proxy

Main function: connect the Pod network and the cluster network

Issue certificate

# Create the certificate request on hdss7-200

vi /opt/certs/kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

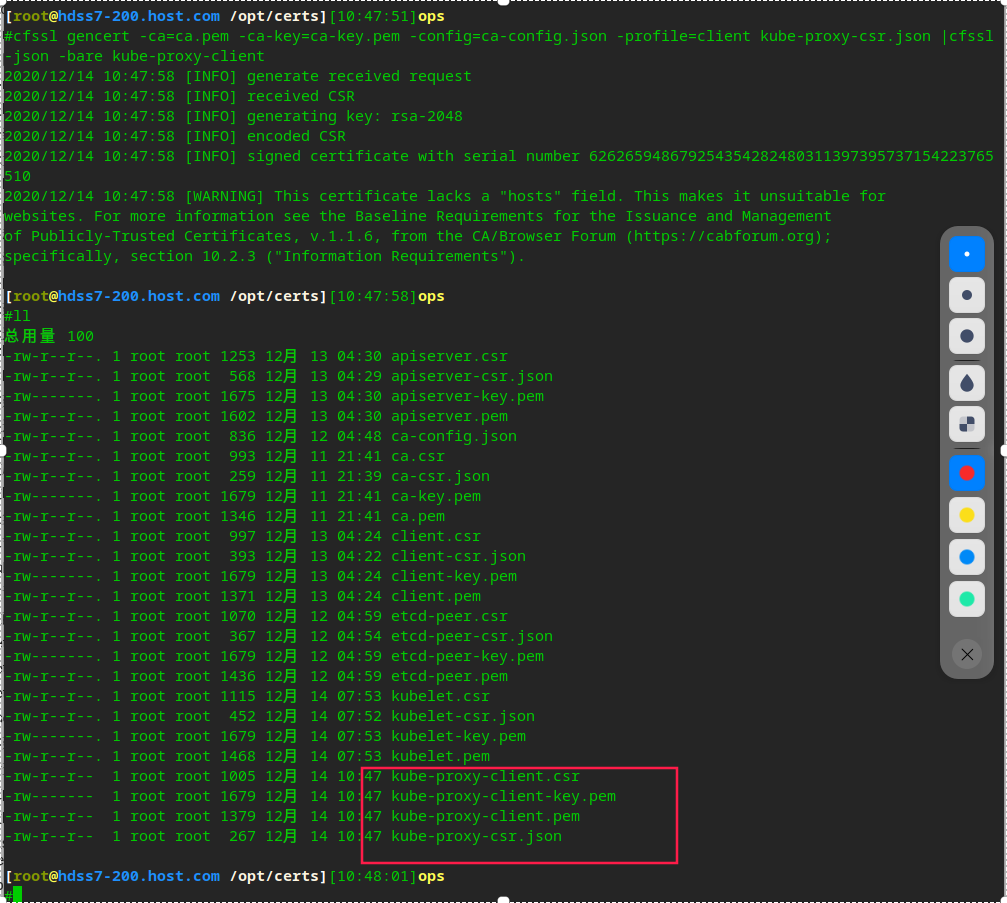

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client kube-proxy-csr.json |cfssl-json -bare kube-proxy-client

# Copy the certificate to 21 and 22
cd /opt/kubernetes/server/bin/cert/
scp hdss7-200:/opt/certs/kube-proxy-client-key.pem .
scp hdss7-200:/opt/certs/kube-proxy-client.pem .

Create configuration

# On 21 and 22
cd /opt/kubernetes/server/bin/conf

set-cluster

kubectl config set-cluster myk8s \
  --certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \
  --embed-certs=true \
  --server=https://10.4.7.10:7443 \
  --kubeconfig=kube-proxy.kubeconfig

set-credentials

kubectl config set-credentials kube-proxy \
  --client-certificate=/opt/kubernetes/server/bin/cert/kube-proxy-client.pem \
  --client-key=/opt/kubernetes/server/bin/cert/kube-proxy-client-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

set-context

kubectl config set-context myk8s-context \
  --cluster=myk8s \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

use-context

kubectl config use-context myk8s-context --kubeconfig=kube-proxy.kubeconfig

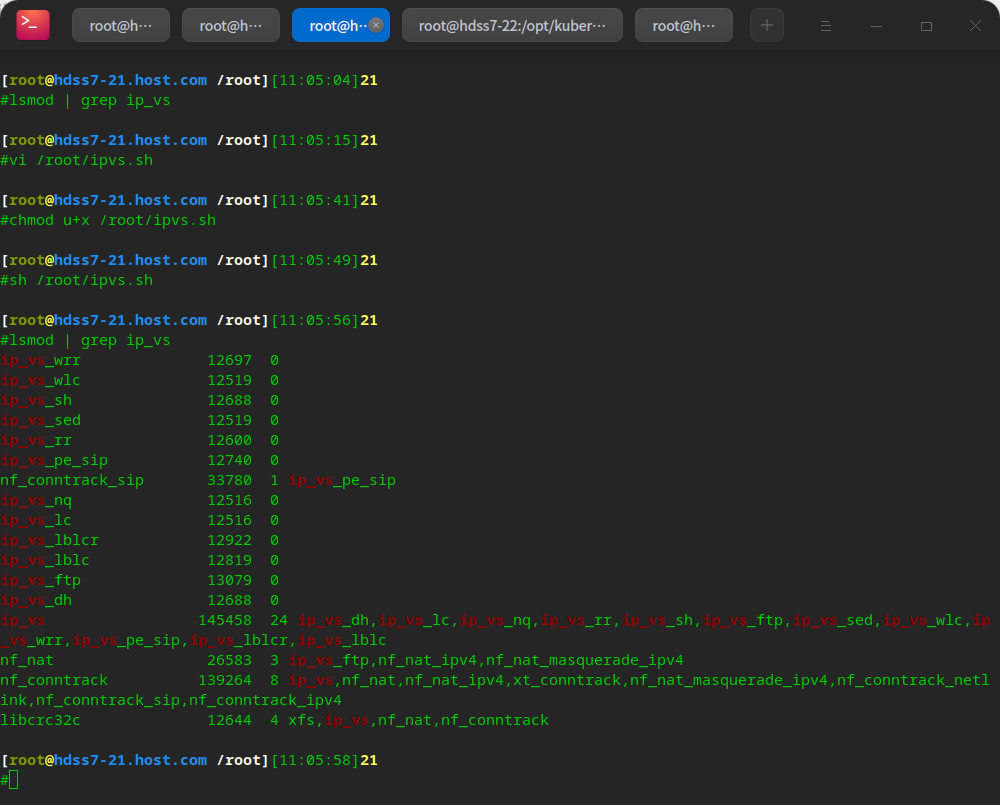

Load ipvs module

# On 21 and 22
vi /root/ipvs.sh

#!/bin/bash
# Load every ipvs kernel module shipped with the running kernel
ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"
for i in $(ls $ipvs_mods_dir|grep -o "^[^.]*")
do
  /sbin/modinfo -F filename $i &>/dev/null
  if [ $? -eq 0 ];then
    /sbin/modprobe $i
  fi
done

chmod u+x /root/ipvs.sh
sh /root/ipvs.sh
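Since kube-proxy is started below with `--proxy-mode=ipvs` and `--ipvs-scheduler=nq`, it can help to confirm that the modules it relies on were actually loaded. The following is a minimal sketch, not part of the original procedure: `missing_modules` is a hypothetical helper name, and it assumes input in the usual `lsmod` layout with a one-line header.

```shell
#!/bin/sh
# Print each requested module name that does NOT appear in lsmod-style
# input read from stdin. Assumes the first input line is the lsmod header.
# Usage on a node: lsmod | missing_modules ip_vs ip_vs_nq
missing_modules() (
    # Keep only the module-name column, skipping the header line.
    loaded=$(awk 'NR > 1 { print $1 }')
    for mod in "$@"; do
        # -x: whole-line match, -F: literal string, -q: no output
        printf '%s\n' "$loaded" | grep -qxF "$mod" || printf '%s\n' "$mod"
    done
)
```

An empty result means every listed module is loaded; any name that is printed would have to be loaded with `modprobe` before kube-proxy starts.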

Create Kube proxy startup script

# On 21 and 22 (set --hostname-override to the local host name on each node)
vi /opt/kubernetes/server/bin/kube-proxy.sh

#!/bin/sh
./kube-proxy \
  --cluster-cidr 172.7.0.0/16 \
  --hostname-override hdss7-21.host.com \
  --proxy-mode=ipvs \
  --ipvs-scheduler=nq \
  --kubeconfig ./conf/kube-proxy.kubeconfig

chmod +x /opt/kubernetes/server/bin/kube-proxy.sh
mkdir -p /data/logs/kubernetes/kube-proxy

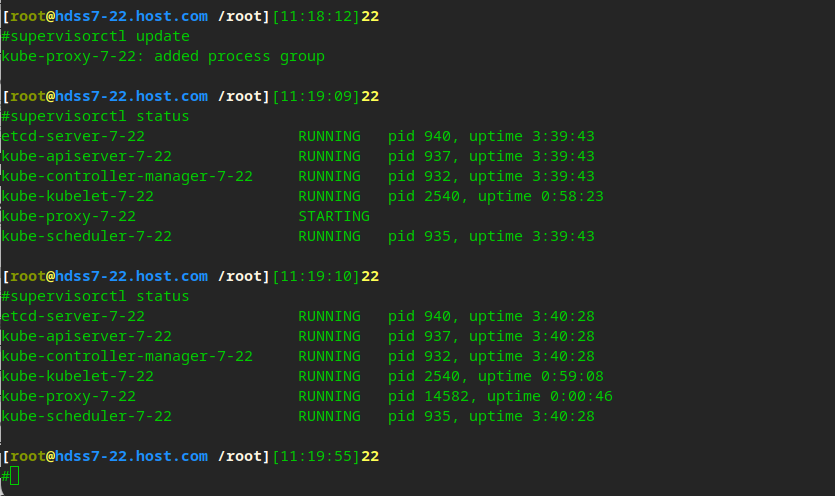

Start Kube proxy in the background through Supervisor

vi /etc/supervisord.d/kube-proxy.ini

[program:kube-proxy-7-21]
command=/opt/kubernetes/server/bin/kube-proxy.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/kubernetes/kube-proxy/proxy.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)

supervisorctl update
supervisorctl status
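The kubelet and kube-proxy supervisor files are identical apart from the program name, the command, and the log path, and the program name changes again on each node. As a sketch only (the function name `render_supervisor_ini` and its argument order are illustrative assumptions, not something the original setup uses), the shared part can be generated rather than retyped:

```shell
#!/bin/sh
# Print a supervisord [program:x] section with the settings used in this guide.
#   $1 program name   $2 command   $3 working directory   $4 stdout log file
render_supervisor_ini() (
    cat <<EOF
[program:$1]
command=$2
numprocs=1
directory=$3
autostart=true
autorestart=true
startsecs=30
startretries=3
exitcodes=0,2
stopsignal=QUIT
stopwaitsecs=10
user=root
redirect_stderr=true
stdout_logfile=$4
stdout_logfile_maxbytes=64MB
stdout_logfile_backups=4
stdout_capture_maxbytes=1MB
stdout_events_enabled=false
EOF
)
```

For example, on hdss7-22 the kube-proxy file could be produced with `render_supervisor_ini kube-proxy-7-22 /opt/kubernetes/server/bin/kube-proxy.sh /opt/kubernetes/server/bin /data/logs/kubernetes/kube-proxy/proxy.stdout.log > /etc/supervisord.d/kube-proxy.ini`, followed by `supervisorctl update` as above.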

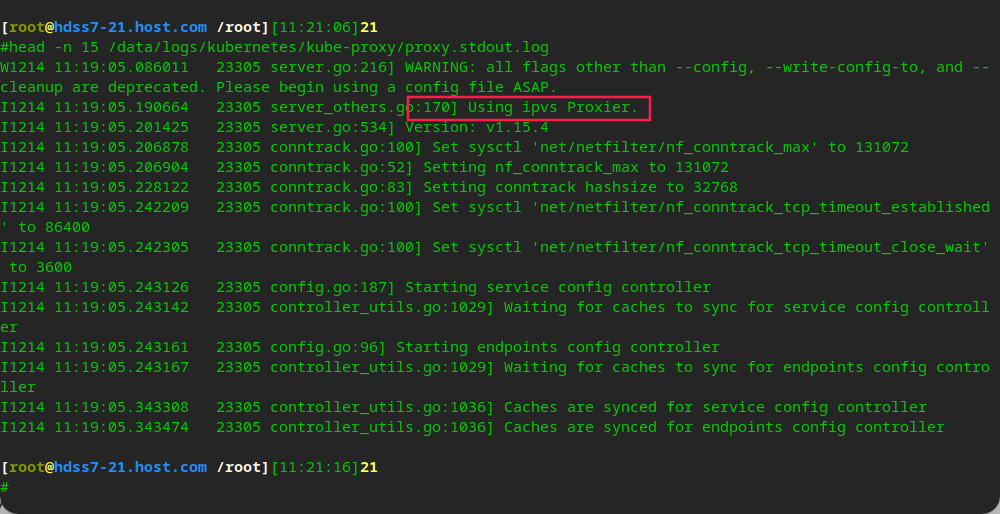

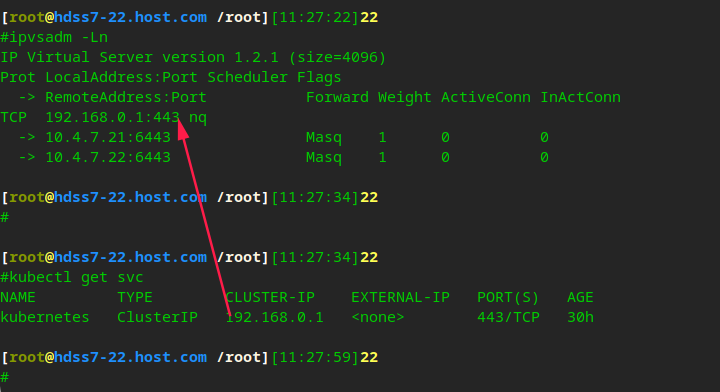

Verify the ipvs scheduler in use after startup

The kube-proxy log shows that ipvs scheduling is active

# Install ipvsadm to inspect the virtual server table
yum install ipvsadm -y
ipvsadm -Ln

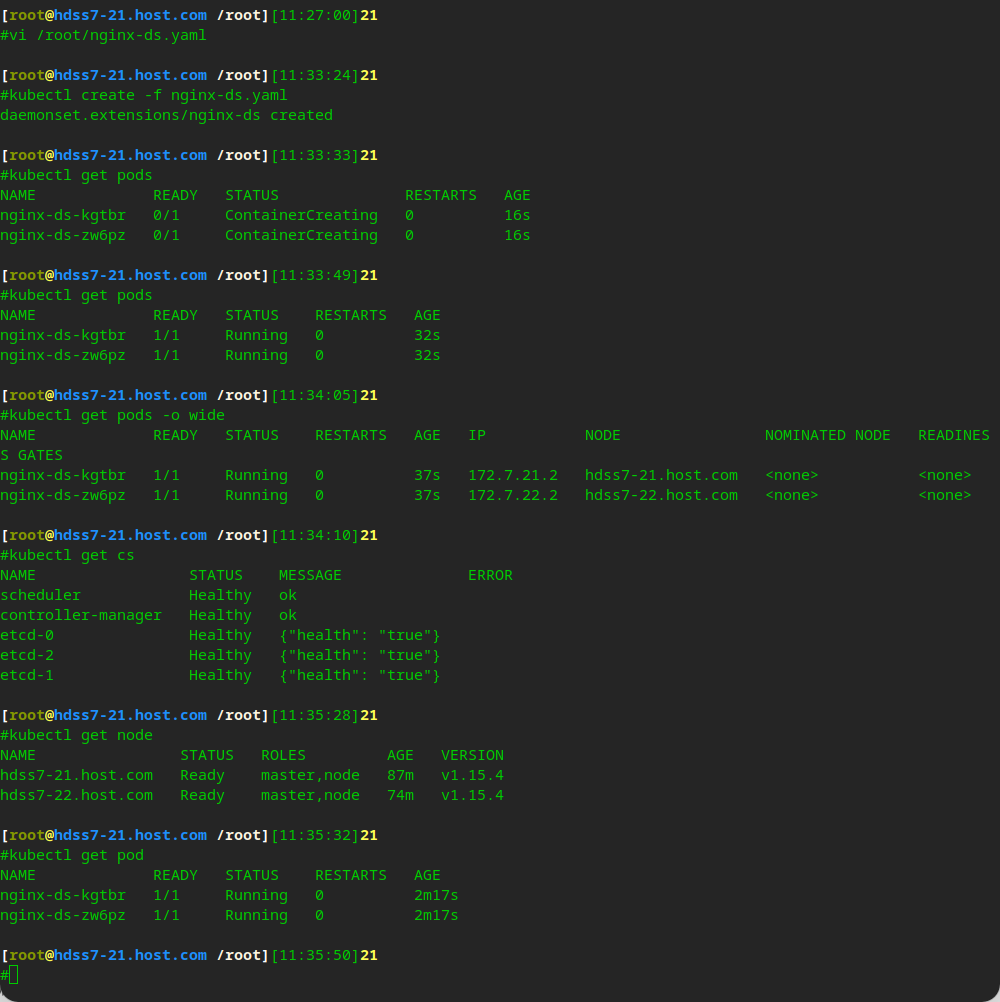

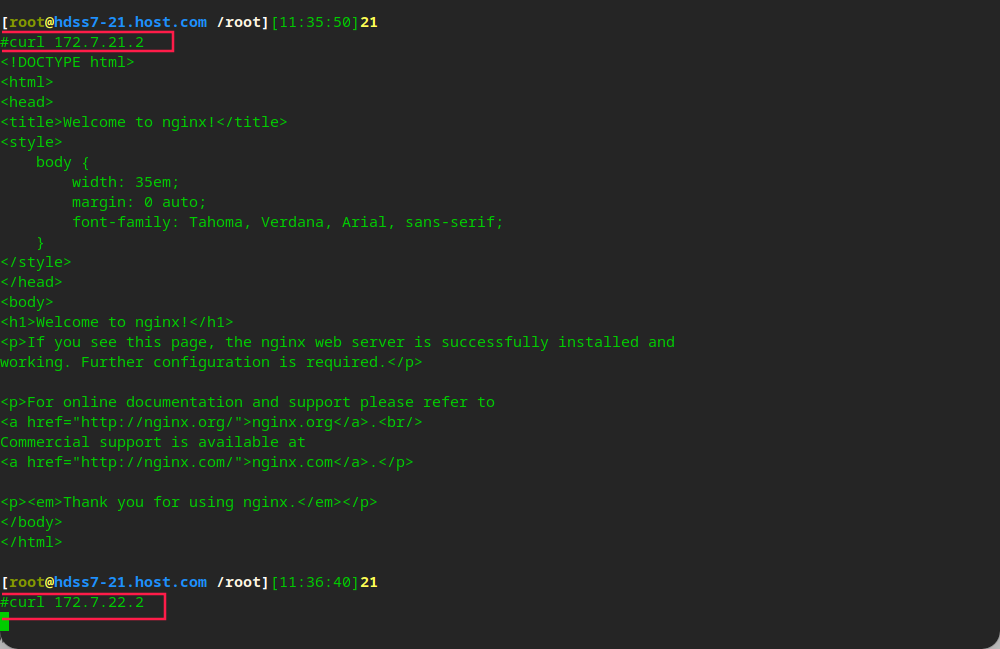

Verify the kubernetes cluster

Create a resource configuration list on any compute node

# Running this on 21 is enough
vi /root/nginx-ds.yaml

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: nginx-ds
spec:
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: my-nginx
        image: harbor.od.com/public/nginx:v1.7.9
        ports:
        - containerPort: 80

kubectl create -f nginx-ds.yaml
kubectl get pods
kubectl get cs
kubectl get nodes

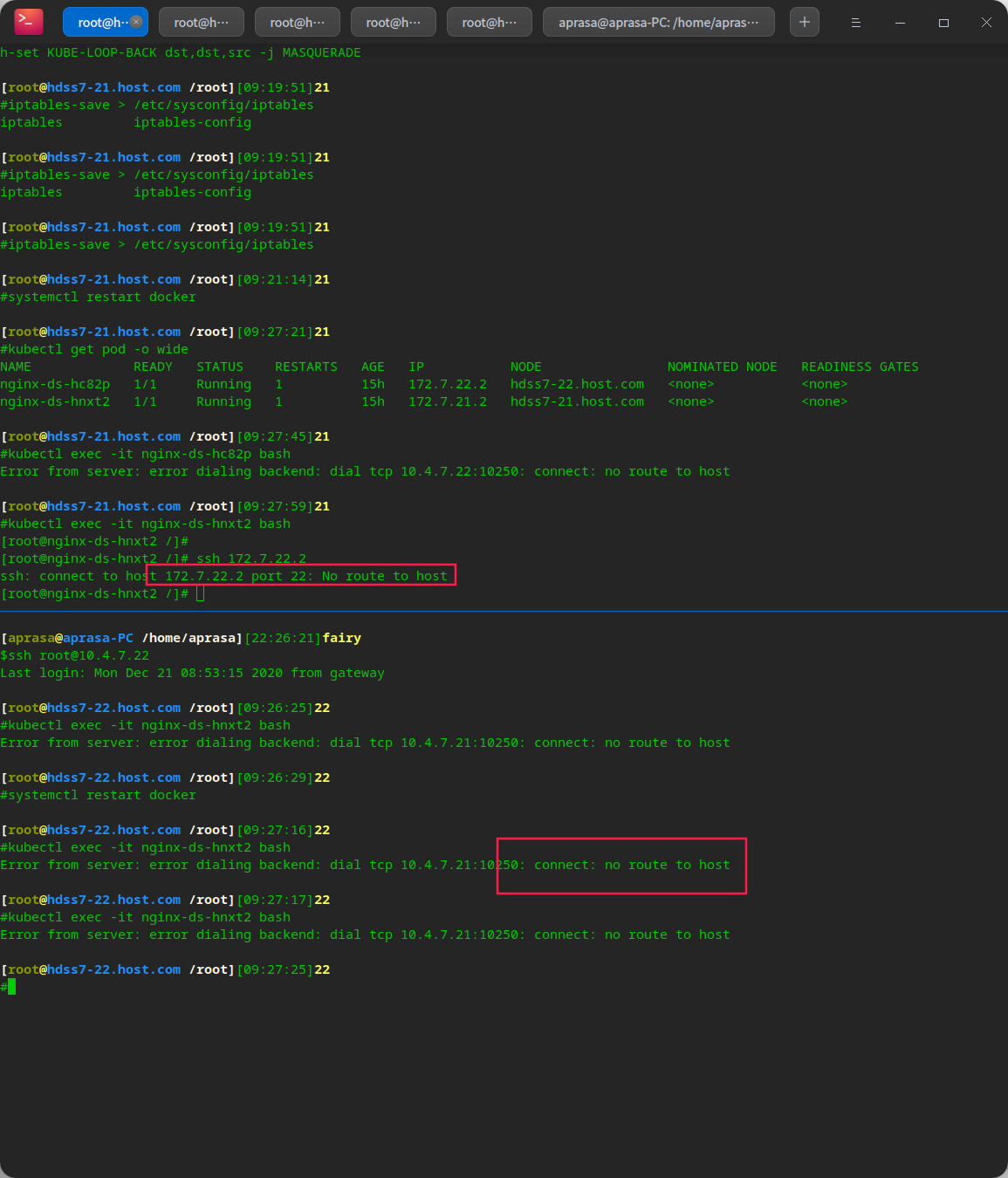

At this stage, pods on different hosts cannot communicate with each other

Flannel needs to be installed to enable cross-host pod communication

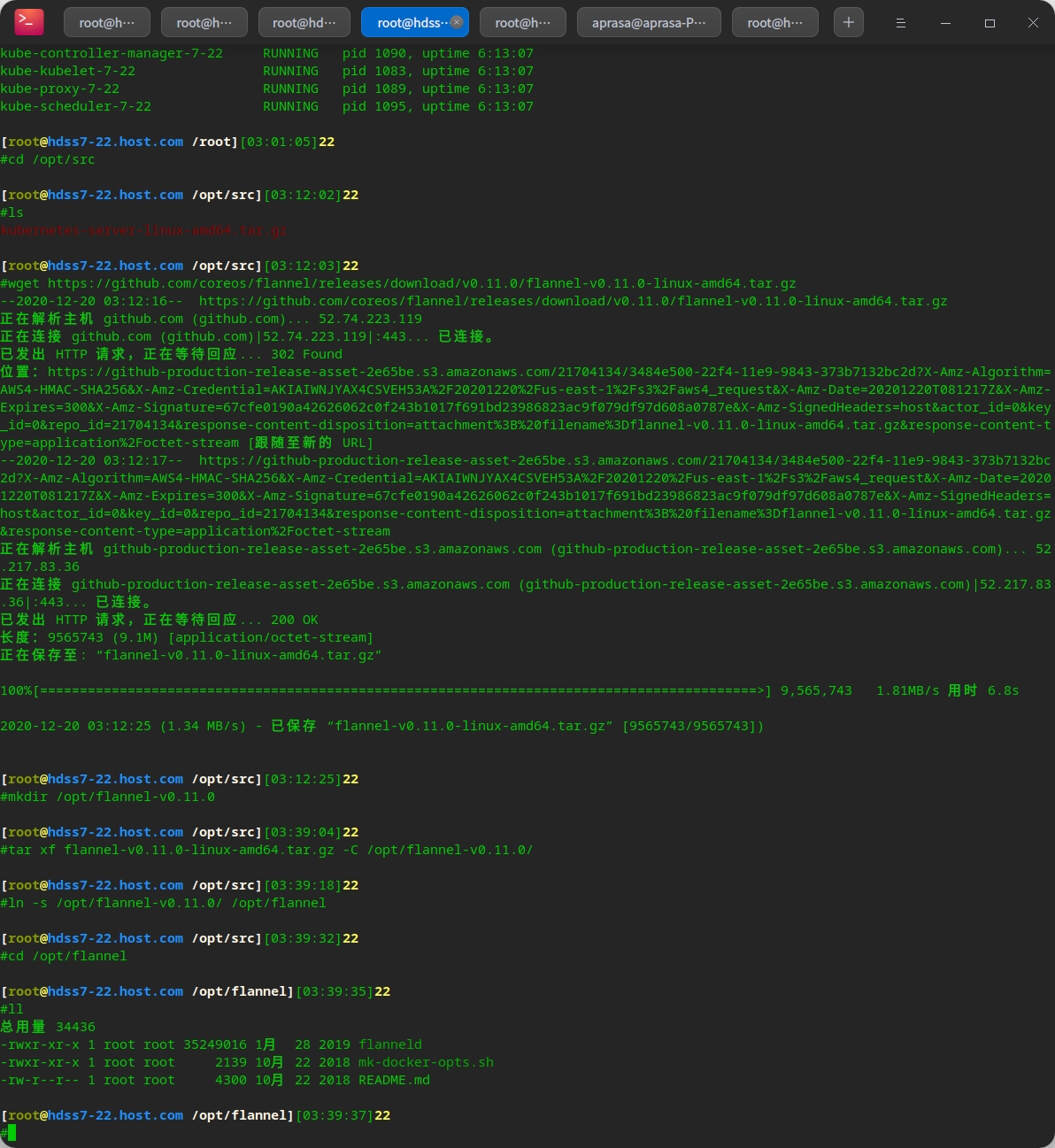

Deploy CNI network plug-in Flannel

Deploy Flannel

Download Flannel and deploy to the directory

# Flannel download address: https://github.com/coreos/flannel/releases/
# Run on machines 21 and 22
cd /opt/src/
wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz
# Create a directory and extract into it
mkdir /opt/flannel-v0.11.0
tar xf flannel-v0.11.0-linux-amd64.tar.gz -C /opt/flannel-v0.11.0/
# Symlink the deployment directory
ln -s /opt/flannel-v0.11.0/ /opt/flannel

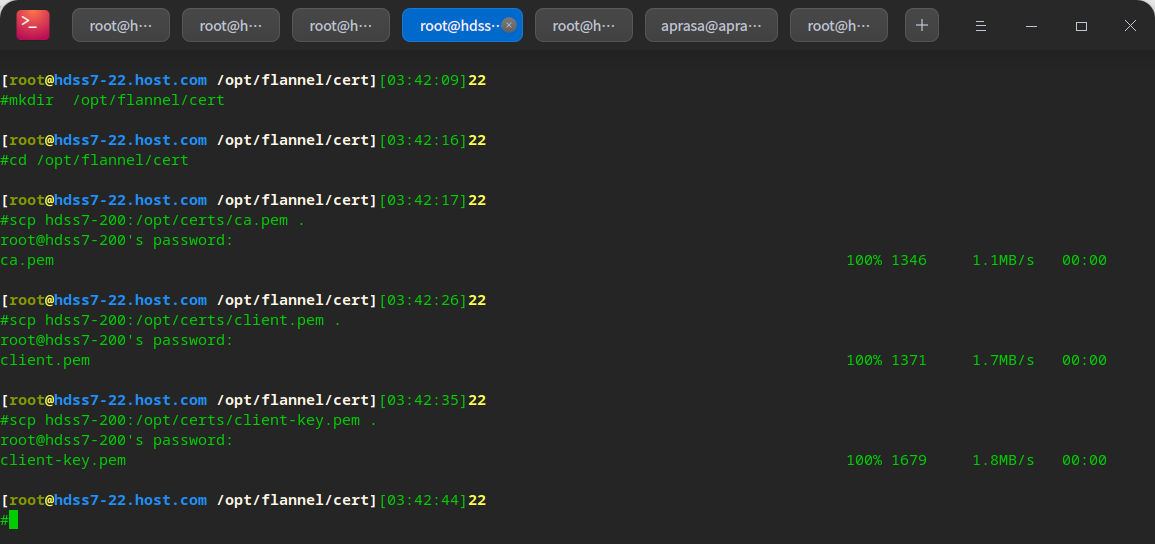

Copy certificate

mkdir /opt/flannel/cert
cd /opt/flannel/cert
# flannel stores its network configuration in etcd, so it needs the etcd client certificates
scp hdss7-200:/opt/certs/ca.pem .
scp hdss7-200:/opt/certs/client.pem .
scp hdss7-200:/opt/certs/client-key.pem .

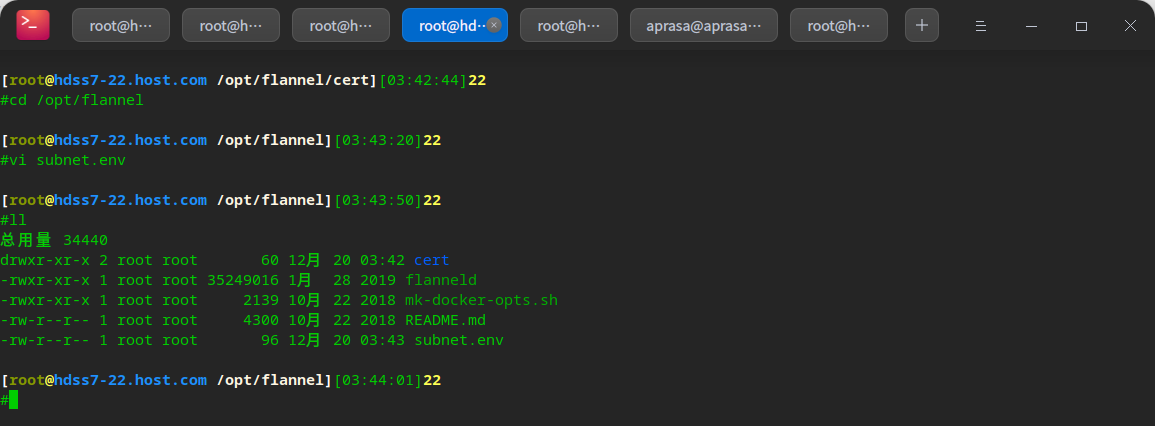

Create configuration

cd /opt/flannel
vi subnet.env

FLANNEL_NETWORK=172.7.0.0/16
FLANNEL_SUBNET=172.7.22.1/24
FLANNEL_MTU=1500
FLANNEL_IPMASQ=false
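The values above are the ones for hdss7-22 (10.4.7.22). The addresses used in this guide suggest a convention in which a node's pod subnet is 172.7.<last octet of the node IP>.1/24. Assuming that convention holds for every node, the file could be derived from the node IP as in the sketch below; `flannel_subnet_env` is a hypothetical helper, not a flannel command.

```shell
#!/bin/sh
# Print subnet.env for a node, assuming the pod subnet is
# 172.7.<last octet of node IP>.1/24 (a convention inferred from this guide).
#   $1 node IP, e.g. 10.4.7.21
flannel_subnet_env() (
    node_ip=$1
    last_octet=${node_ip##*.}   # strip everything up to the final dot
    cat <<EOF
FLANNEL_NETWORK=172.7.0.0/16
FLANNEL_SUBNET=172.7.${last_octet}.1/24
FLANNEL_MTU=1500
FLANNEL_IPMASQ=false
EOF
)
```

On hdss7-21 this would be used as `flannel_subnet_env 10.4.7.21 > /opt/flannel/subnet.env`. The subnet has to match the docker bridge address configured on that node, so check it against the local docker configuration before relying on the derived value.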

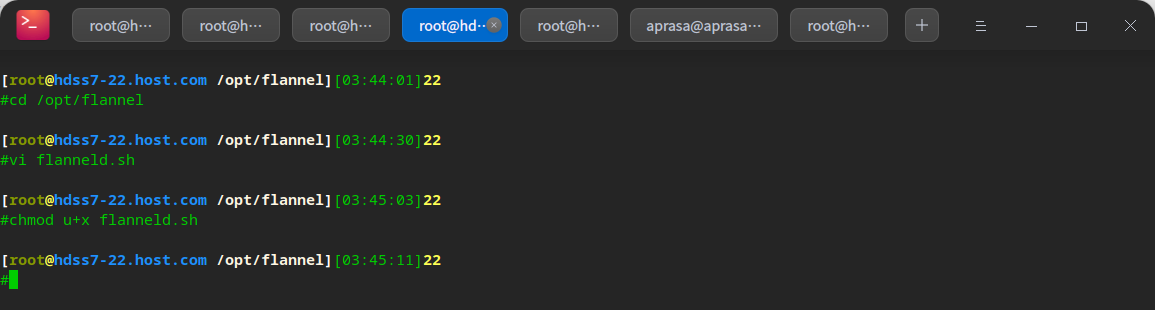

Create startup script

cd /opt/flannel
vi flanneld.sh

#!/bin/sh
./flanneld \
  --public-ip=10.4.7.22 \
  --etcd-endpoints=https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \
  --etcd-keyfile=./cert/client-key.pem \
  --etcd-certfile=./cert/client.pem \
  --etcd-cafile=./cert/ca.pem \
  --iface=eth0 \
  --subnet-file=./subnet.env \
  --healthz-port=2401

chmod u+x flanneld.sh

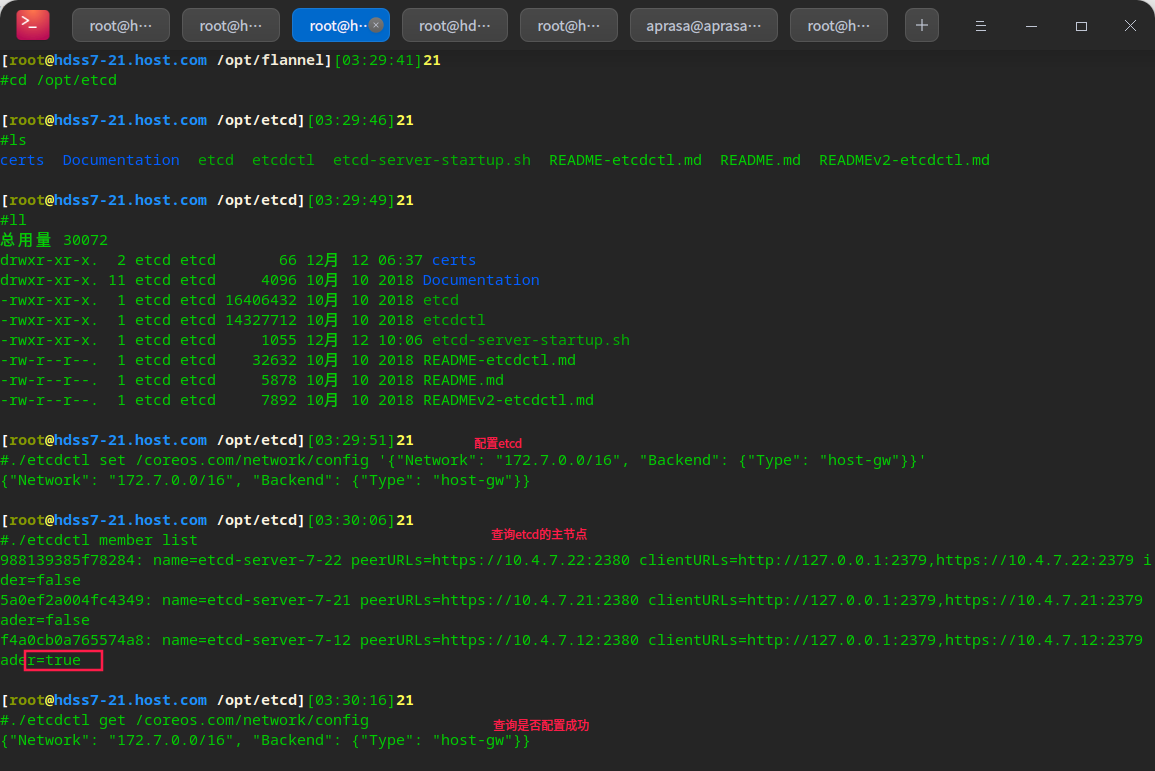

Write the flannel network configuration to etcd (host-gw backend)

# Flannel stores its state in etcd, so the flannel network configuration must be written to etcd

# This only needs to be done once; after the key is written to etcd there is no need to repeat it on other nodes

cd /opt/etcd

./etcdctl set /coreos.com/network/config '{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}'

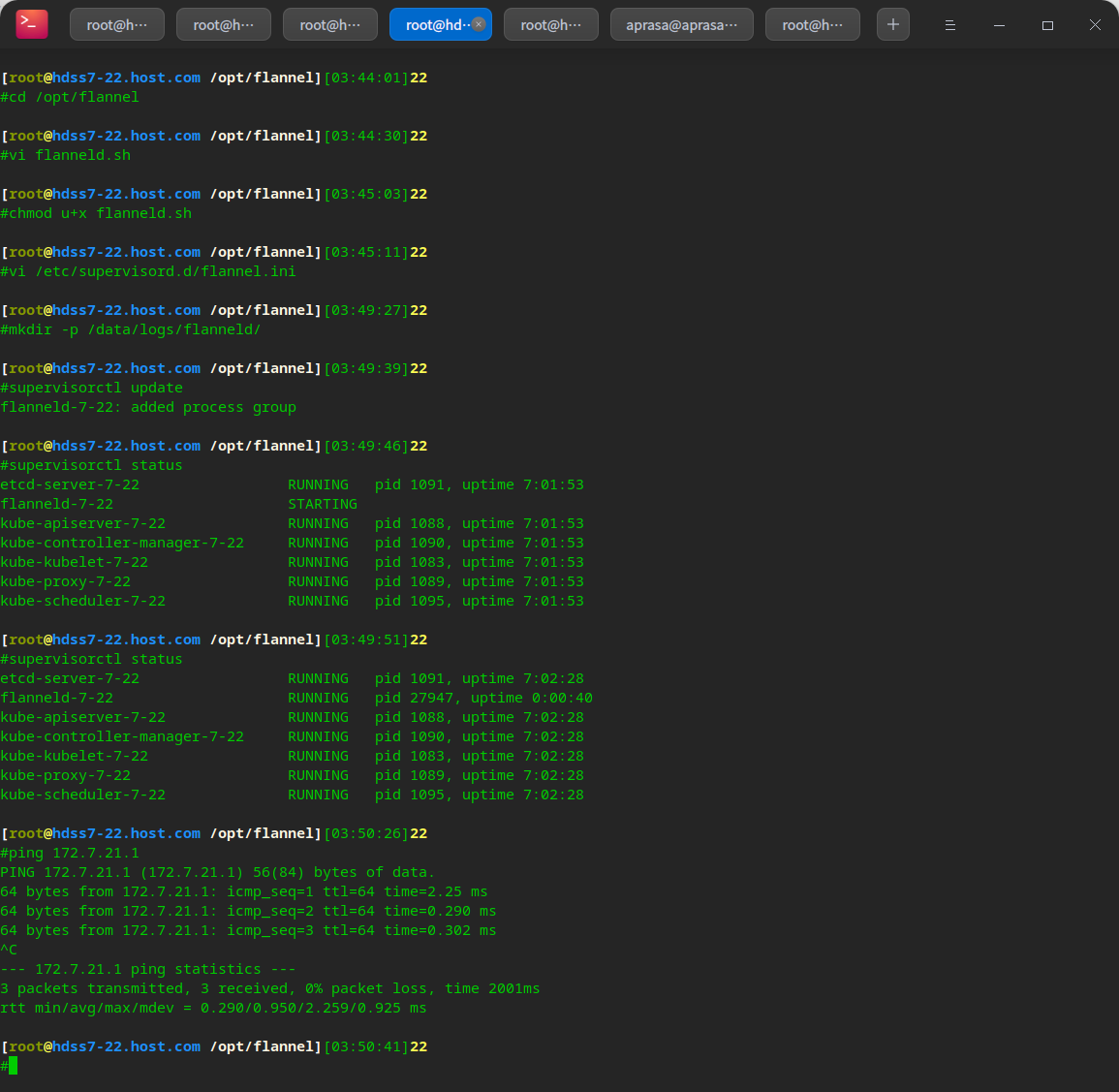

Create supervisor configuration

vi /etc/supervisord.d/flannel.ini

[program:flanneld-7-22]
command=/opt/flannel/flanneld.sh ; the program (relative uses PATH, can take args)
numprocs=1 ; number of processes copies to start (def 1)
directory=/opt/flannel ; directory to cwd to before exec (def no cwd)
autostart=true ; start at supervisord start (default: true)
autorestart=true ; restart at unexpected quit (default: true)
startsecs=30 ; number of secs prog must stay running (def. 1)
startretries=3 ; max # of serial start failures (default 3)
exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)
stopsignal=QUIT ; signal used to kill process (default TERM)
stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)
user=root ; setuid to this UNIX account to run the program
redirect_stderr=true ; redirect proc stderr to stdout (default false)
stdout_logfile=/data/logs/flanneld/flanneld.stdout.log ; stdout log path, NONE for none; default AUTO
stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)
stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)
stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)
stdout_events_enabled=false ; emit events on stdout writes (default false)

mkdir -p /data/logs/flanneld/
supervisorctl update

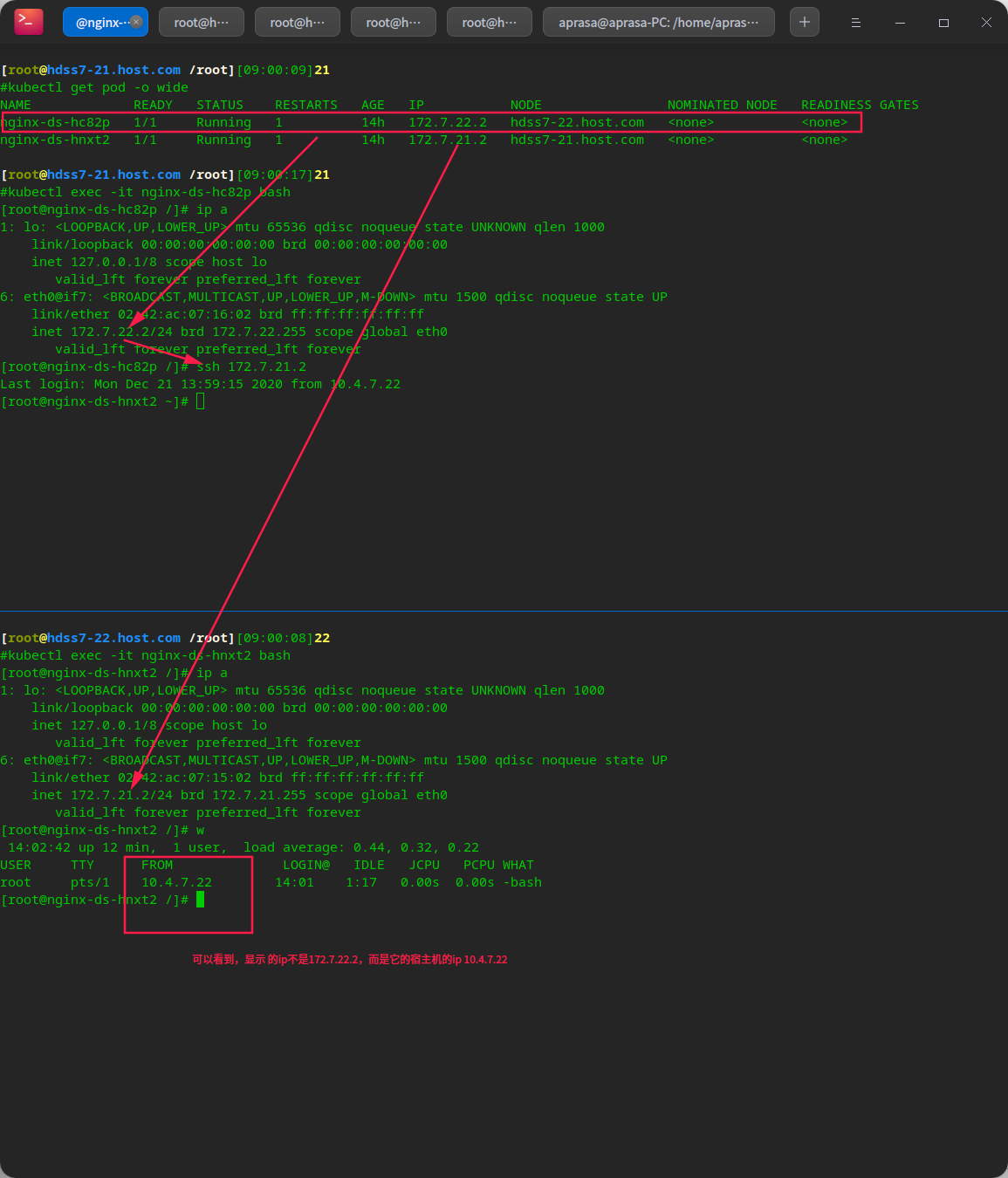

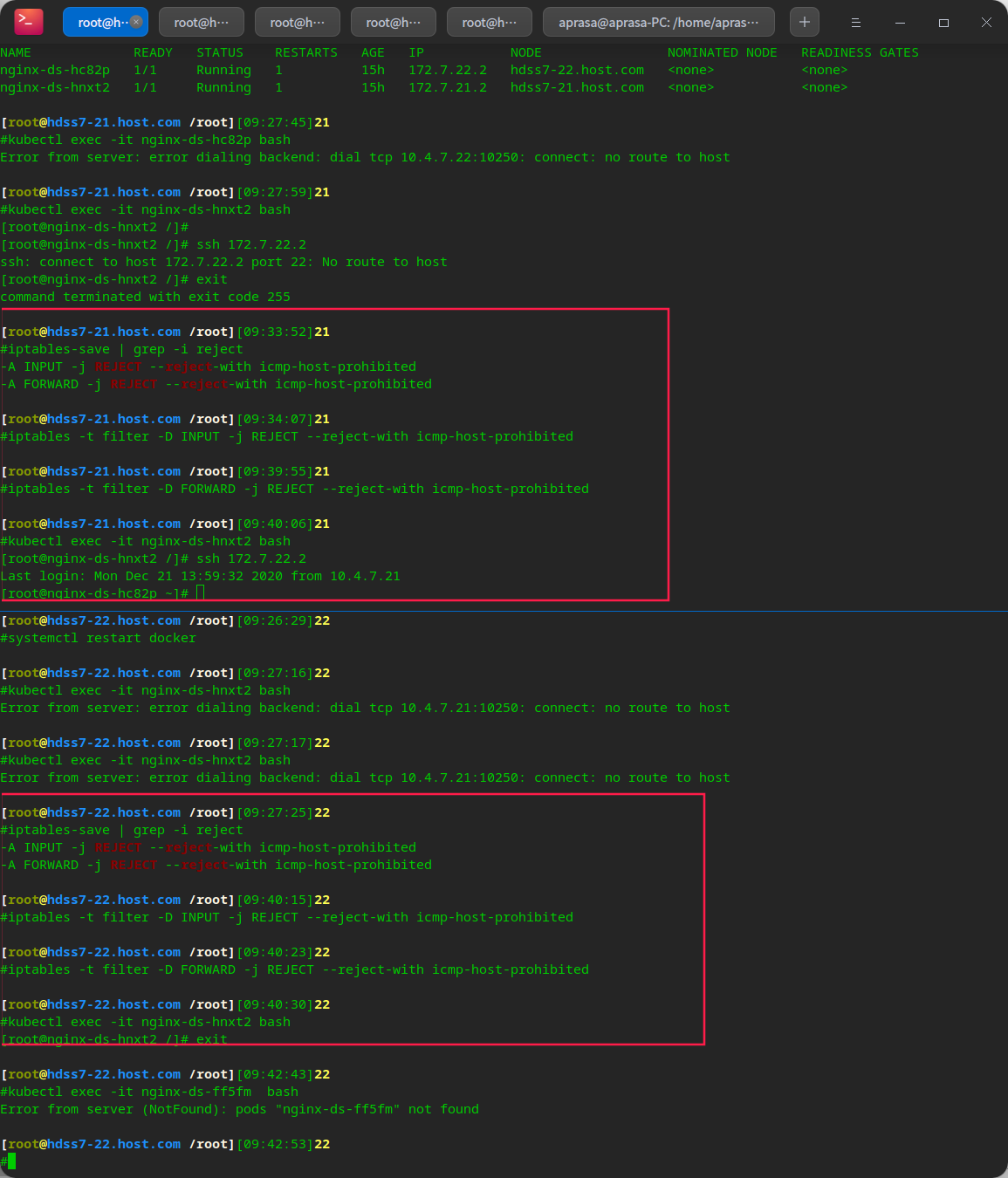

The k8s internal container must be configured with SNAT rules

# Pods should see each other's real pod IP rather than the IP of the node (physical machine)
# SNAT is still needed when a pod reaches the external network, because outside hosts do not know the pod IPs. Pod-to-pod traffic, however, should keep the pod's own source IP

Before configuration

The ip address of the host is used by default

# In the screenshots below, when the upper pod accesses the lower pod, the log shows the host IP of the upper pod

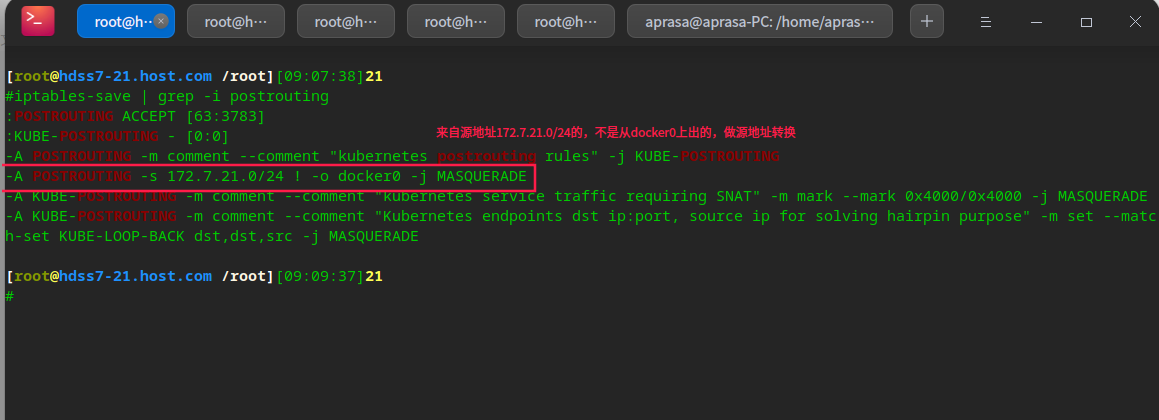

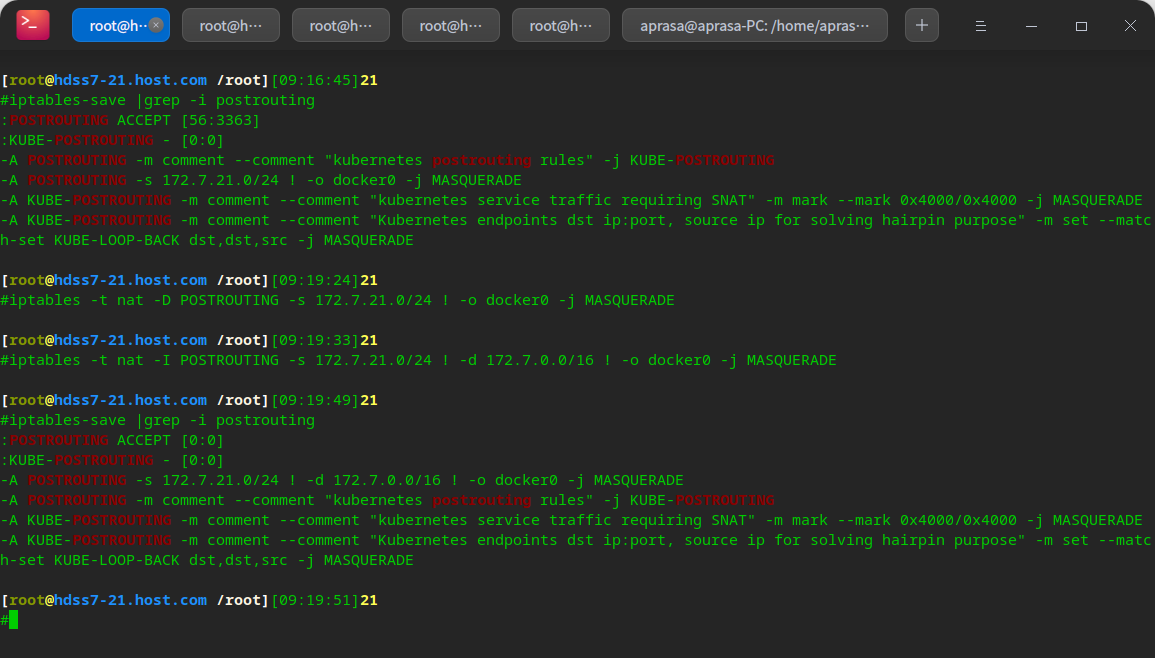

View SNAT table in iptables

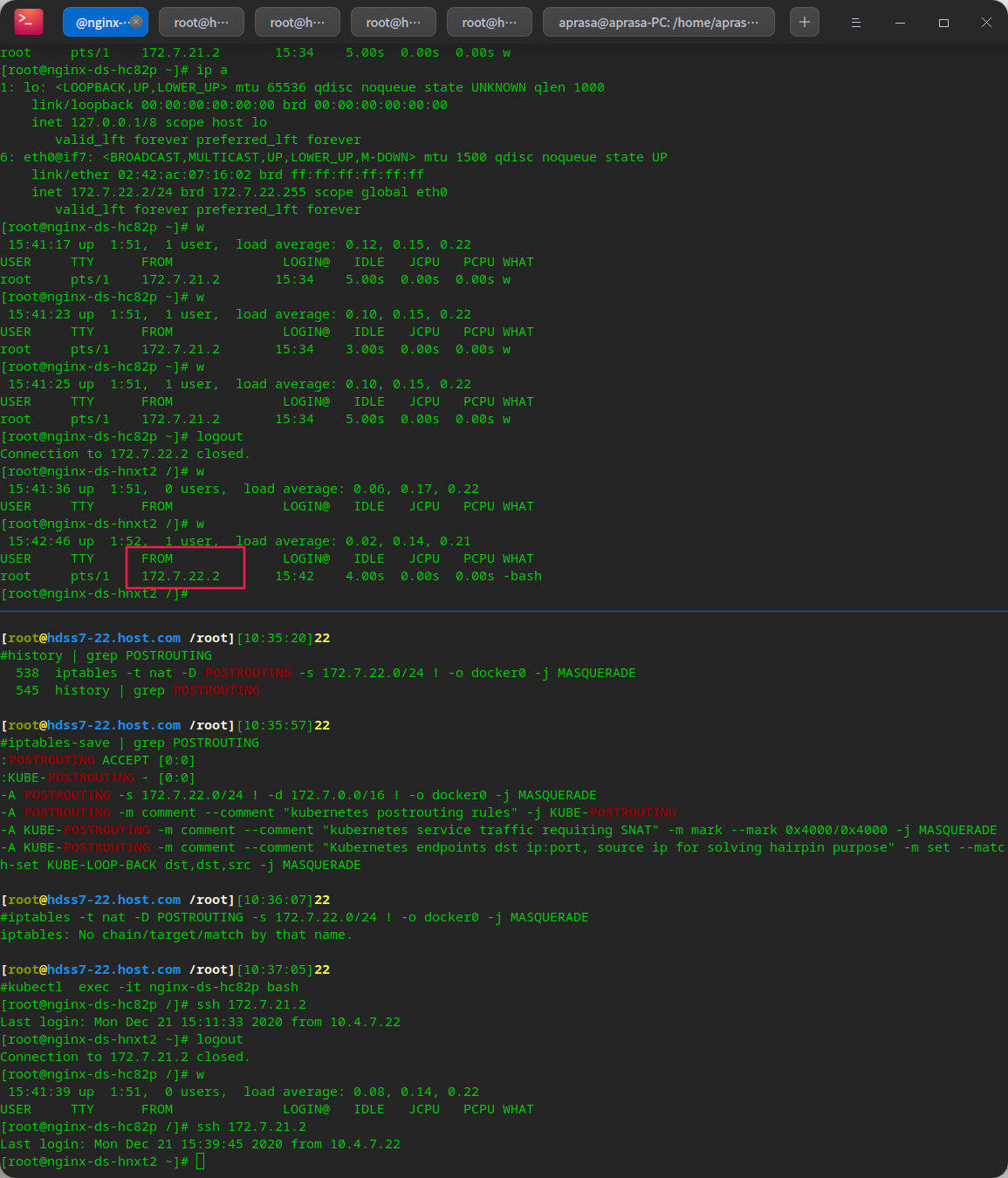

# The SNAT rule in iptables needs to be adjusted here. Otherwise, other nodes record the node IP 10.4.7.22 instead of the pod IP 172.7.22.x

# View the POSTROUTING rules of the nat table in iptables
iptables-save | grep -i postrouting

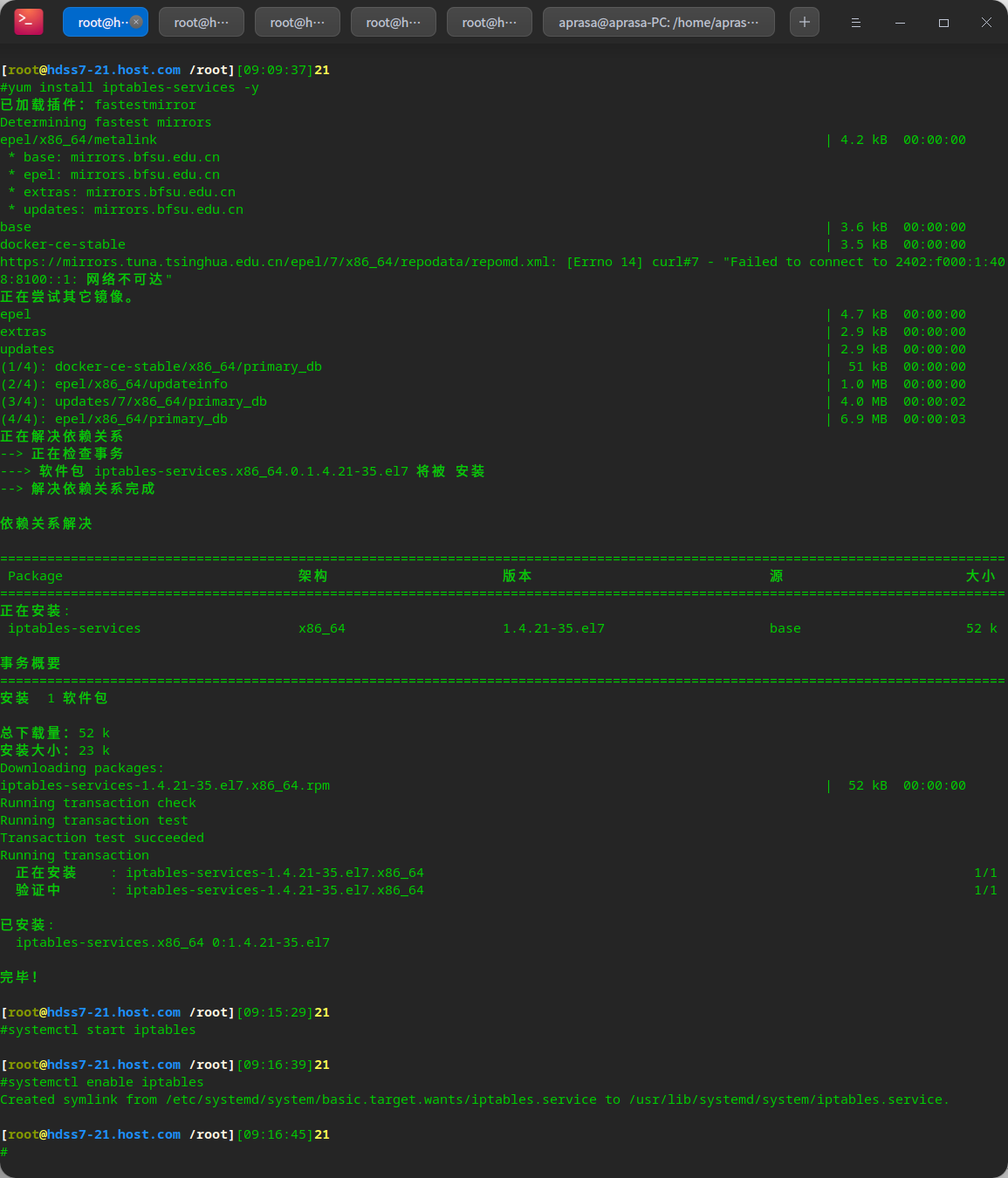

Configure iptables optimization options

# Install iptables-services, because CentOS 7 does not ship the iptables service by default
yum install iptables-services -y
# Start iptables
systemctl start iptables
systemctl enable iptables

# View rules
iptables-save |grep -i postrouting
# Delete the original rule
iptables -t nat -D POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE
# Insert a new rule: apply SNAT only when the destination is outside the 172.7.0.0/16 network
iptables -t nat -I POSTROUTING -s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE

# Save the rules
iptables-save > /etc/sysconfig/iptables
# Note: do this on the host machines, that is, on both 21 and 22, using each node's own pod subnet

# After iptables is configured, traffic between the pods is blocked

# This is because the freshly installed iptables service ships with strict default rules
# Remove the rules that block the traffic

# Finally, save the rules again
iptables-save > /etc/sysconfig/iptables
# The pod IP is now shown as the source address
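The text does not say which rules have to be removed. On a stock CentOS 7 install of iptables-services, the default ruleset ends the INPUT and FORWARD chains with a REJECT rule, so the sketch below assumes those two are the "strict rules" meant here; verify with `iptables-save` on your own node first. The helper names `run` and `fix_snat_rules` are illustrative. By default the script only prints the commands; set `DRY_RUN=0` to execute them.

```shell
#!/bin/sh
# Print the command when DRY_RUN is unset or 1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Apply the SNAT change for one node.
#   $1 pod subnet of this node, e.g. 172.7.21.0/24 on hdss7-21
fix_snat_rules() {
    pod_subnet=$1
    cluster_cidr=172.7.0.0/16
    # Replace the broad MASQUERADE rule with one that skips pod-to-pod traffic.
    run iptables -t nat -D POSTROUTING -s "$pod_subnet" ! -o docker0 -j MASQUERADE
    run iptables -t nat -I POSTROUTING -s "$pod_subnet" ! -d "$cluster_cidr" ! -o docker0 -j MASQUERADE
    # ASSUMPTION: the blocking rules are the default REJECT rules of iptables-services.
    run iptables -t filter -D INPUT -j REJECT --reject-with icmp-host-prohibited
    run iptables -t filter -D FORWARD -j REJECT --reject-with icmp-host-prohibited
}
```

Calling `fix_snat_rules 172.7.22.0/24` on hdss7-22 prints the four commands for review. After running them for real, save the result with `iptables-save > /etc/sysconfig/iptables` as described above.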

Installing and deploying coredns

k8s service discovery: the process of locating different services

Start delivering services to k8s by deploying containers

Serve the k8s resource configuration lists over http

Configure nginx

# First, create an nginx virtual host on the operations host hdss7-200 to serve the resource configuration lists
vi /etc/nginx/conf.d/k8s-yaml.od.com.conf

server {
    listen 80;
    server_name k8s-yaml.od.com;

    location / {
        autoindex on;
        default_type text/plain;
        root /data/k8s-yaml;
    }
}

mkdir -p /data/k8s-yaml/coredns
nginx -t
nginx -s reload

Add domain name resolution

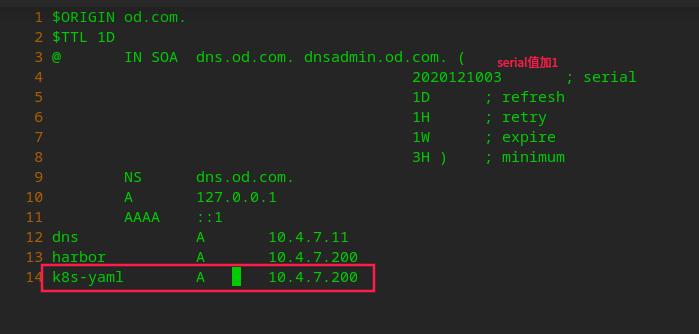

# Add domain name resolution on the self-built dns host hdss7-11
# Append an A record at the end of the zone file
vi /var/named/od.com.zone

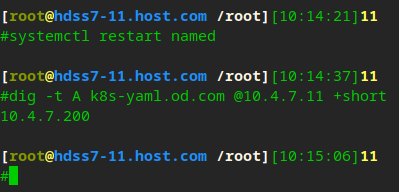

# Restart the service
systemctl restart named
# Check that the name resolves
dig -t A k8s-yaml.od.com @10.4.7.11 +short
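The zone file edit itself is only shown in the screenshot. When records are added to a BIND zone it is common practice to raise the serial number in the SOA record as well, and the zones in this guide use the YYYYMMDDNN form (for example 2020121001). As a sketch under that assumption, the next serial can be computed like this; `next_serial` is a hypothetical helper, not a BIND tool.

```shell
#!/bin/sh
# Compute the next zone serial in YYYYMMDDNN form.
#   $1 current serial (e.g. 2020121001)   $2 today's date as YYYYMMDD
# Uses <today>01 when that is newer than the current serial, otherwise
# increments the current serial so the value never goes backwards.
next_serial() (
    current=$1
    candidate="${2}01"
    if [ "$candidate" -gt "$current" ]; then
        echo "$candidate"
    else
        echo $((current + 1))
    fi
)
```

Typical use is `next_serial 2020121001 "$(date +%Y%m%d)"`, with the result written into the SOA record before `named` is restarted. The sketch does not handle more than 99 changes on one day.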

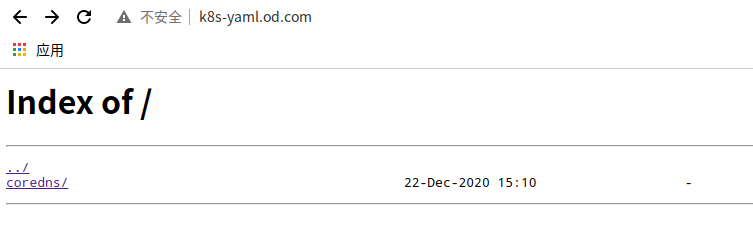

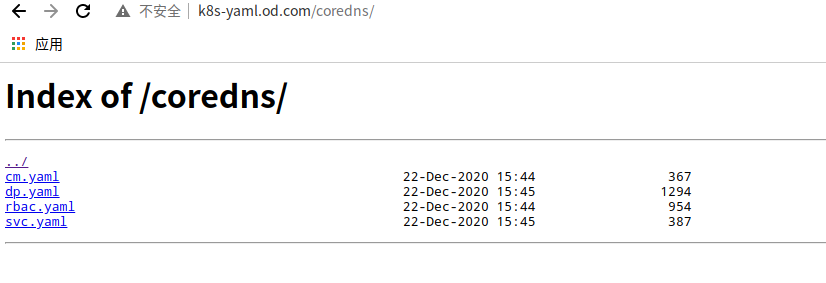

# As you can see, it can be accessed in the browser

Preparing coredns images

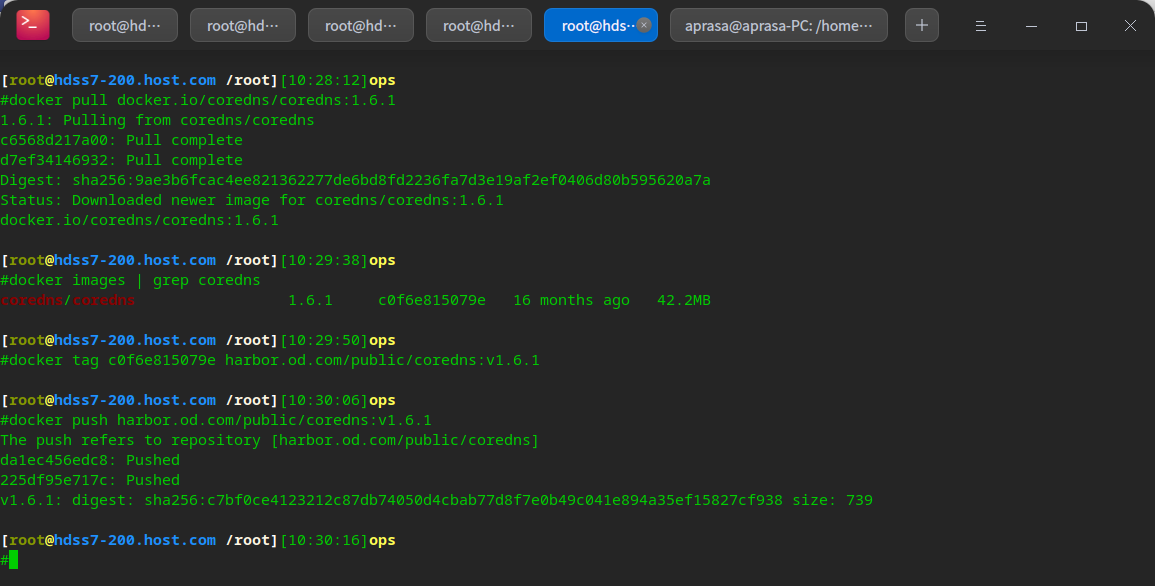

# Official repository: https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dns/coredns
# Deploy coredns from the operations host hdss7-200
cd /data/k8s-yaml/coredns
# The software is delivered to k8s as a container
# Download the official image
docker pull docker.io/coredns/coredns:1.6.1
# Then push it to the private registry
docker tag c0f6e815079e harbor.od.com/public/coredns:v1.6.1
docker push harbor.od.com/public/coredns:v1.6.1

Prepare resource configuration list

# Official manifest template
# https://github.com/kubernetes/kubernetes/blob/master/cluster/addons/dns/coredns/coredns.yaml.base
cd /data/k8s-yaml/coredns

rbac.yaml – get cluster related permissions

vi rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system

cm.yaml – configuration of the cluster by configmap

vi cm.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        log
        health
        ready
        kubernetes cluster.local 192.168.0.0/16 # Service cluster IP range
        forward . 10.4.7.11 # upstream DNS server
        cache 30
        loop
        reload
        loadbalance
    }

dp.yaml - pod controller

vi dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/name: "CoreDNS"
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: coredns
  template:
    metadata:
      labels:
        k8s-app: coredns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      containers:
      - name: coredns
        image: harbor.od.com/public/coredns:v1.6.1
        args:
        - -conf
        - /etc/coredns/Corefile
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile

svc.yaml - service resource

vi svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: coredns
  clusterIP: 192.168.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
  - name: metrics
    port: 9153
    protocol: TCP

The files are now served over http by the nginx virtual host

Create resources and complete deployment

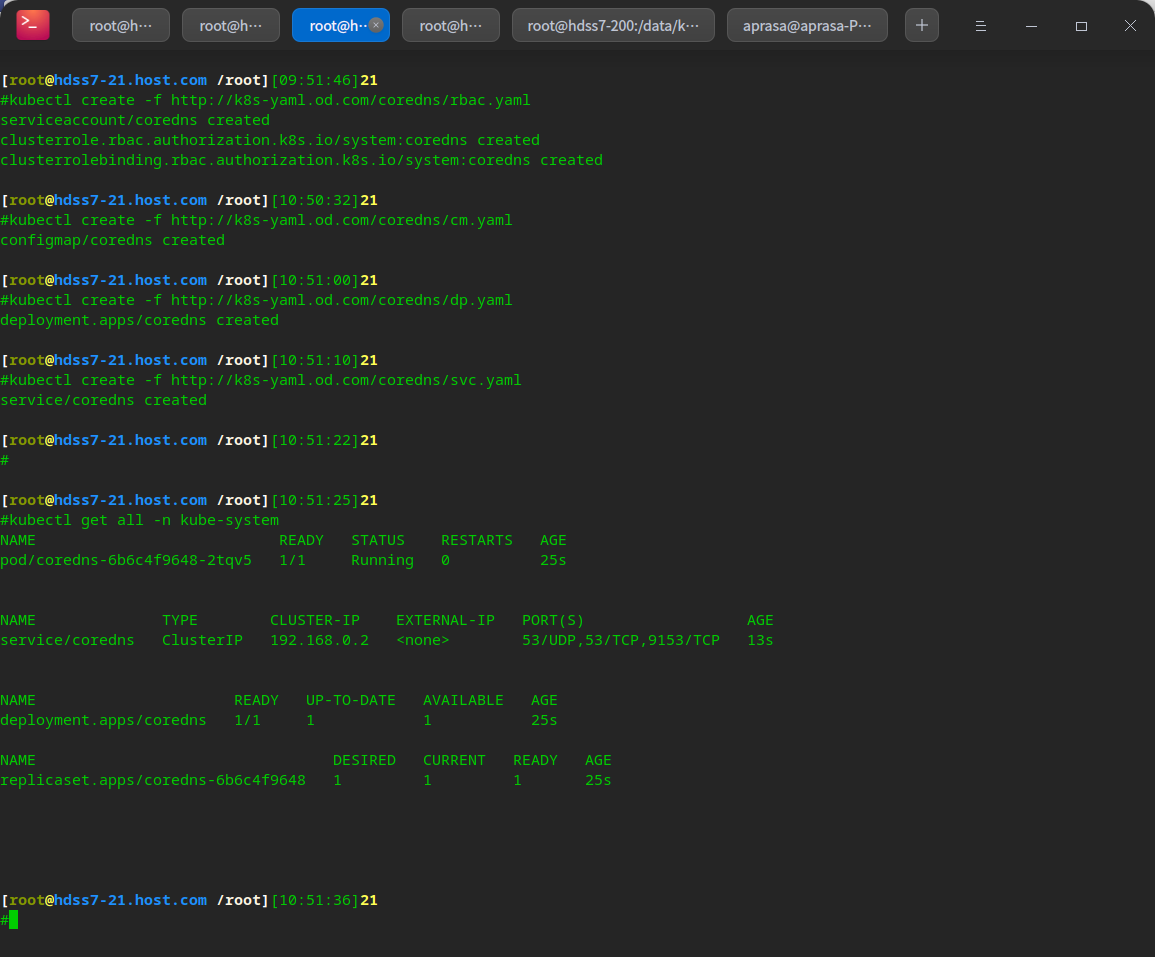

# On any compute node, create the resources from the resource configuration lists served over http
kubectl create -f http://k8s-yaml.od.com/coredns/rbac.yaml
kubectl create -f http://k8s-yaml.od.com/coredns/cm.yaml
kubectl create -f http://k8s-yaml.od.com/coredns/dp.yaml
kubectl create -f http://k8s-yaml.od.com/coredns/svc.yaml
# Check the result
kubectl get all -n kube-system

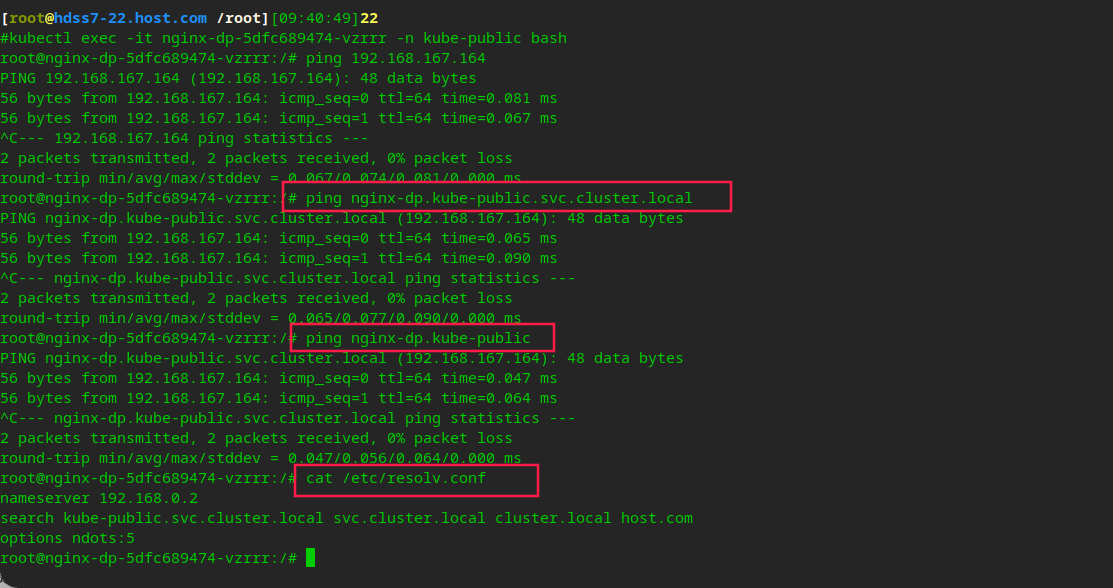

# You can see that in the pod, there are dns resolution, search domain and other settings
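With the `kubernetes cluster.local ...` line in the Corefile, a Service is resolvable as `<service>.<namespace>.svc.cluster.local`, and the search domains inside a pod allow the shorter forms. A small sketch of building the full name and querying CoreDNS directly from a node follows; `svc_fqdn` is a hypothetical helper name.

```shell
#!/bin/sh
# Print the cluster-internal DNS name of a Service.
#   $1 service name   $2 namespace (defaults to "default")
svc_fqdn() {
    printf '%s.%s.svc.cluster.local\n' "$1" "${2:-default}"
}

# Example query against the CoreDNS Service IP configured in svc.yaml
# (run on a node; left commented out so the snippet has no side effects):
# dig -t A "$(svc_fqdn coredns kube-system)" @192.168.0.2 +short
```

If CoreDNS is working, the commented `dig` query should return the cluster IP of the queried Service.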

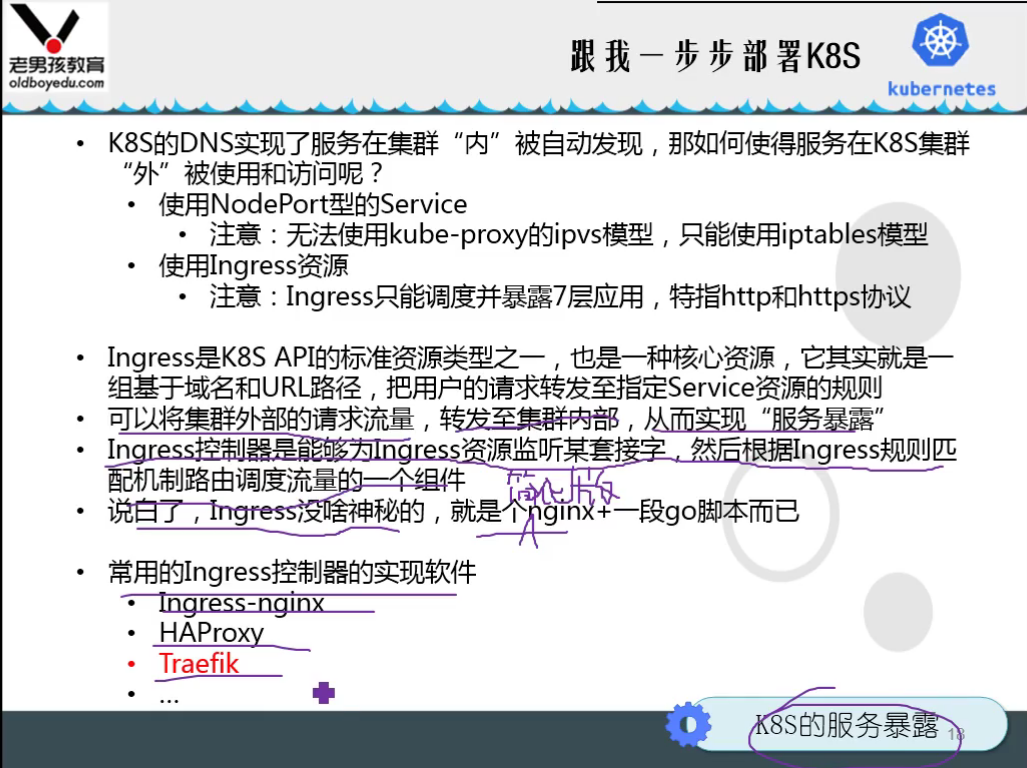

Traifik of deployment service exposure ingress

Take traefik as an example

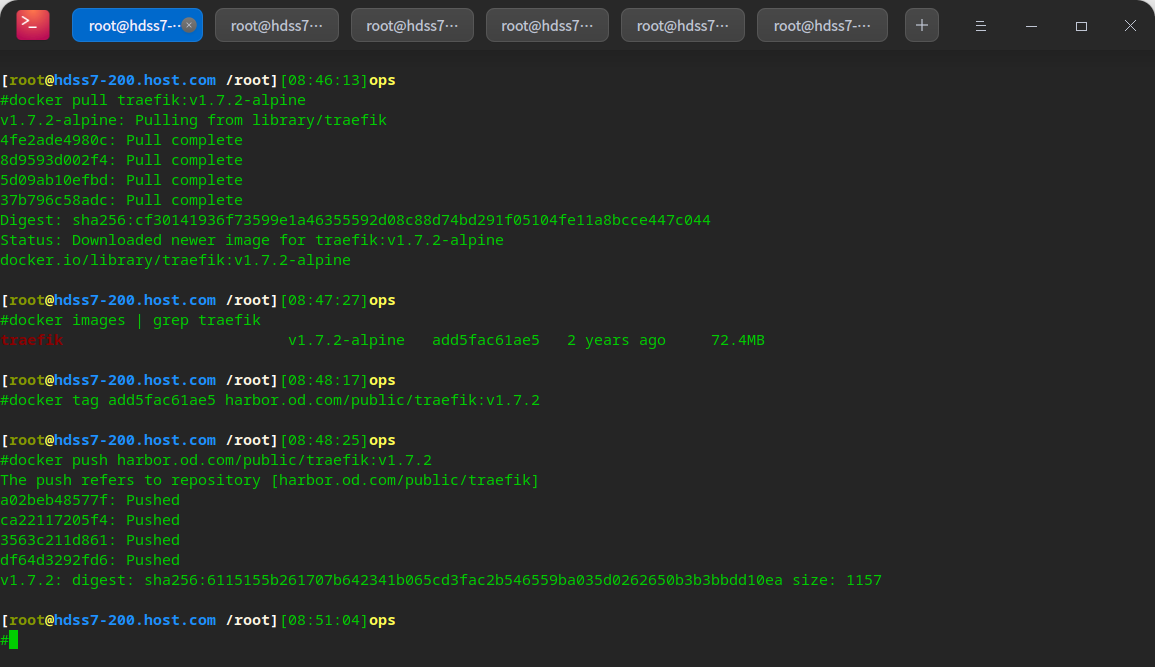

Download traefik to private warehouse

# On hdss7-200: # git address: https://github.com/traefik/traefik docker pull traefik:v1.7.2-alpine docker tag add5fac61ae5 harbor.od.com/public/traefik:v1.7.2 docker push harbor.od.com/public/traefik:v1.7.2

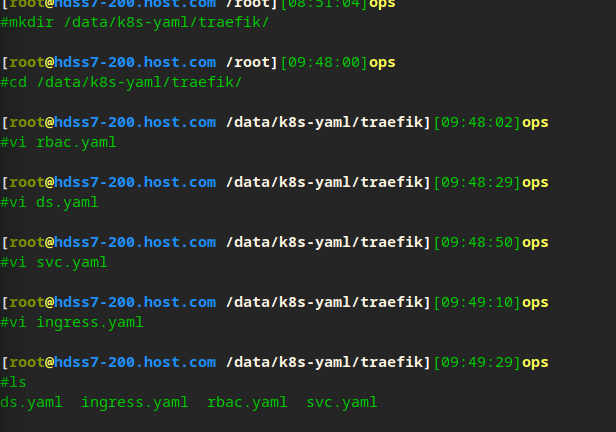

Create resource configuration list

Create rbac

mkdir -p /data/k8s-yaml/traefik
cd /data/k8s-yaml/traefik/
vi rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: traefik-ingress-controller
rules:
- apiGroups:
  - ""
  resources:
  - services
  - endpoints
  - secrets
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - extensions
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
- kind: ServiceAccount
  name: traefik-ingress-controller
  namespace: kube-system

Create ds

vi ds.yaml

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: traefik-ingress
  namespace: kube-system
  labels:
    k8s-app: traefik-ingress
spec:
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress
        name: traefik-ingress
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 60
      containers:
      - image: harbor.od.com/public/traefik:v1.7.2
        name: traefik-ingress
        ports:
        - name: controller
          containerPort: 80
          hostPort: 81
        - name: admin-web
          containerPort: 8080
        securityContext:
          capabilities:
            drop:
            - ALL
            add:
            - NET_BIND_SERVICE
        args:
        - --api
        - --kubernetes
        - --logLevel=INFO
        - --insecureskipverify=true
        - --kubernetes.endpoint=https://10.4.7.10:7443
        - --accesslog
        - --accesslog.filepath=/var/log/traefik_access.log
        - --traefiklog
        - --traefiklog.filepath=/var/log/traefik.log
        - --metrics.prometheus

Create svc

vi svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: traefik-ingress-service
  namespace: kube-system
spec:
  selector:
    k8s-app: traefik-ingress
  ports:
  - protocol: TCP
    port: 80
    name: controller
  - protocol: TCP
    port: 8080
    name: admin-web

Create ingress

vi ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: traefik-web-ui
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: traefik.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: traefik-ingress-service
          servicePort: 8080

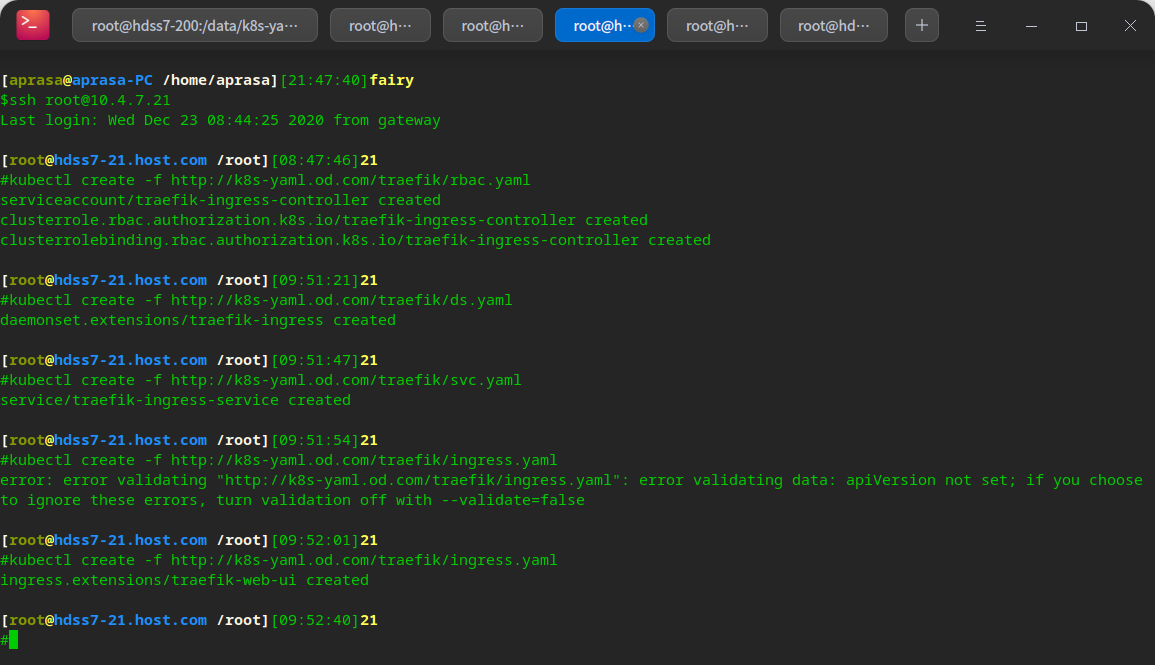

Create resource on node

kubectl create -f http://k8s-yaml.od.com/traefik/rbac.yaml
kubectl create -f http://k8s-yaml.od.com/traefik/ds.yaml
kubectl create -f http://k8s-yaml.od.com/traefik/svc.yaml
kubectl create -f http://k8s-yaml.od.com/traefik/ingress.yaml

Host port 81 is now listening on the nodes

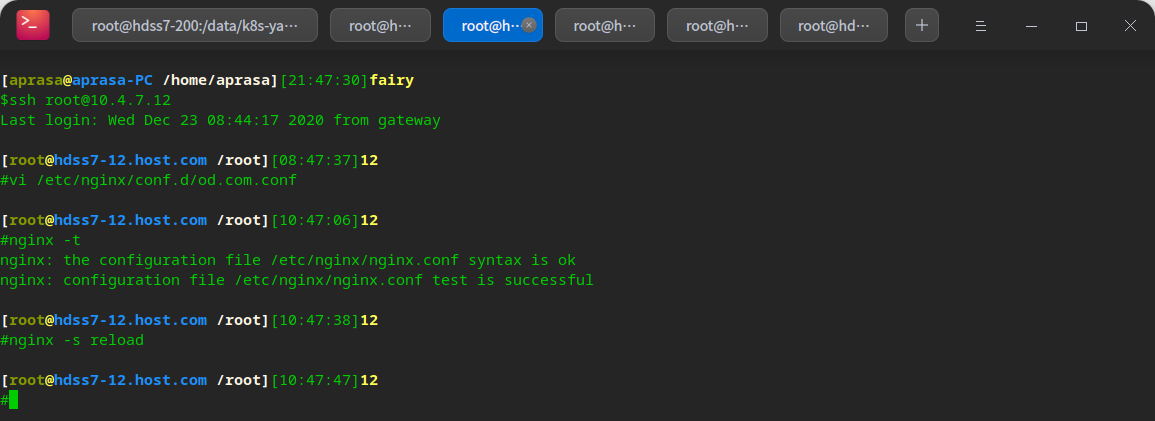

Configure the nginx reverse proxy

# On hdss7-11 and hdss7-12: layer 7 reverse proxy
vi /etc/nginx/conf.d/od.com.conf

upstream default_backend_traefik {
    server 10.4.7.21:81 max_fails=3 fail_timeout=10s;
    server 10.4.7.22:81 max_fails=3 fail_timeout=10s;
}

server {
    server_name *.od.com;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}

# Test the configuration and reload nginx
nginx -t
nginx -s reload

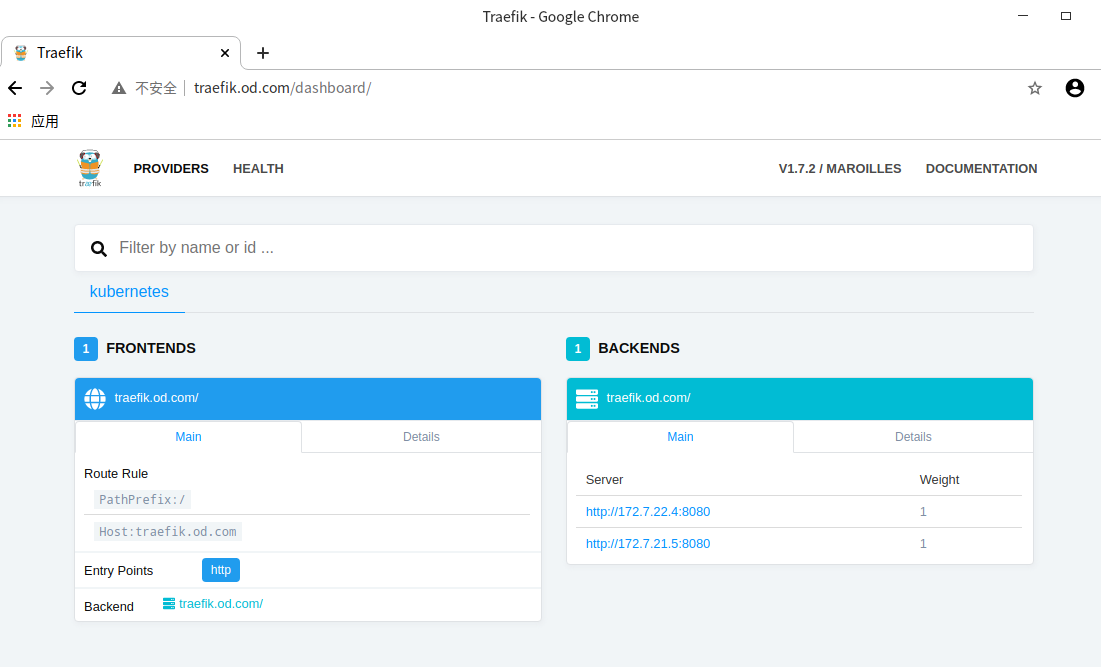

Resolve domain name

# On hdss7-11, add a record for the host value used in ingress.yaml
vi /var/named/od.com.zone

systemctl restart named
# The domain name can now be accessed from a browser outside the cluster: http://traefik.od.com
# This works because the virtual network card on our host uses the bind server as its DNS resolver

dashboard installation

Prepare dashboard image

# First, on hdss7-200, download the image and push it to our private registry
docker pull k8scn/kubernetes-dashboard-amd64:v1.8.3
docker tag fcac9aa03fd6 harbor.od.com/public/dashboard:v1.8.3
docker push harbor.od.com/public/dashboard:v1.8.3

Prepare resource configuration list

rbac.yaml

mkdir -p /data/k8s-yaml/dashboard
cd /data/k8s-yaml/dashboard
vi rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard-admin
  namespace: kube-system

dp.yaml

vi dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      containers:
      - name: kubernetes-dashboard
        image: harbor.od.com/public/dashboard:v1.8.3
        resources:
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 50m
            memory: 100Mi
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
        # PLATFORM-SPECIFIC ARGS HERE
        - --auto-generate-certificates
        volumeMounts:
        - name: tmp-volume
          mountPath: /tmp
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard-admin
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"

svc.yaml

vi svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 443
    targetPort: 8443

ingress.yaml

vi ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: dashboard.od.com
    http:
      paths:
      - backend:
          serviceName: kubernetes-dashboard
          servicePort: 443

Create resource

# Execute on any node
kubectl create -f http://k8s-yaml.od.com/dashboard/rbac.yaml
kubectl create -f http://k8s-yaml.od.com/dashboard/dp.yaml
kubectl create -f http://k8s-yaml.od.com/dashboard/svc.yaml
kubectl create -f http://k8s-yaml.od.com/dashboard/ingress.yaml

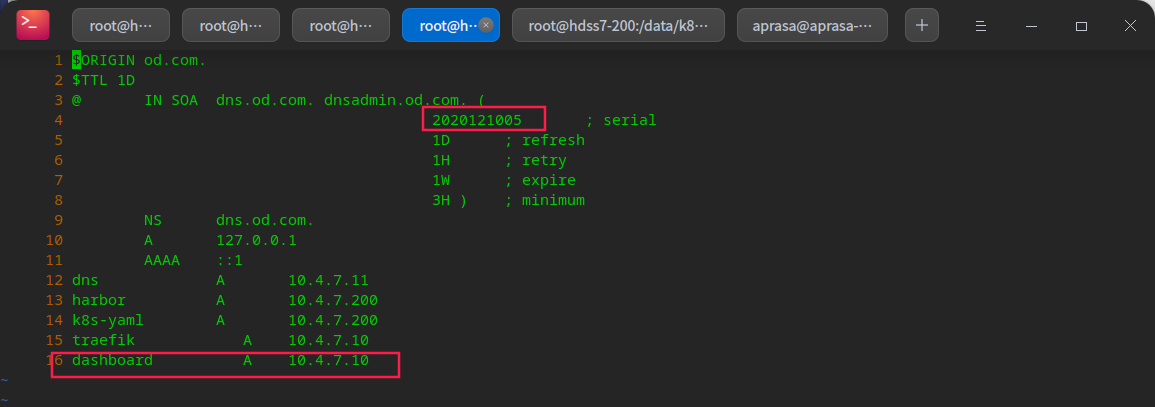

Add domain name resolution

vi /var/named/od.com.zone
# Record to add: dashboard A 10.4.7.10
# With a large amount of zone data, avoid restarting the service; reload only the affected zone with rndc instead
systemctl restart named

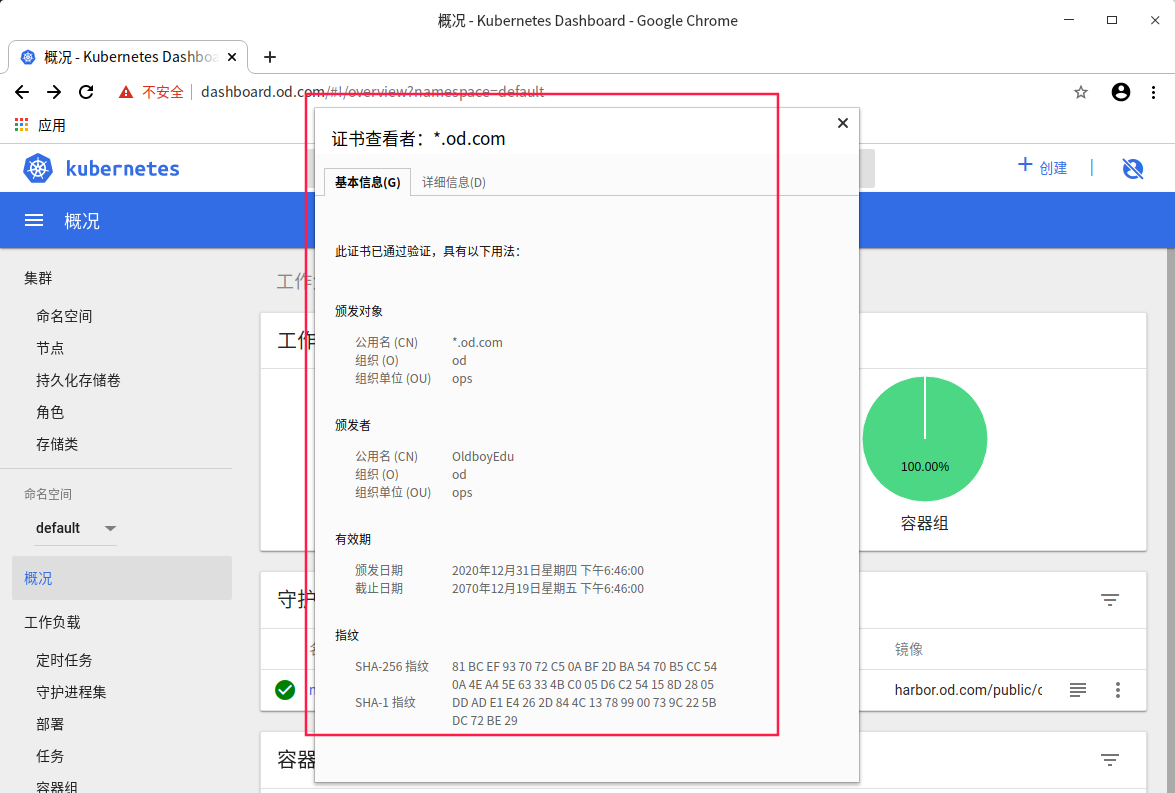

Test: access via browser

Issue a certificate

# Use cfssl again to request the certificate, on hdss7-200
cd /opt/certs/
vi dashboard-csr.json

{
    "CN": "*.od.com",
    "hosts": [
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "ST": "beijing",
            "L": "beijing",
            "O": "od",
            "OU": "ops"
        }
    ]
}

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server dashboard-csr.json |cfssl-json -bare dashboard

Configure nginx proxy https

# Copy the certificate to our nginx servers 7-11 and 7-12
cd /etc/nginx/
mkdir certs
cd certs
scp hdss7-200:/opt/certs/dash* ./
cd /etc/nginx/conf.d/
vi dashboard.od.com.conf

server {
    listen 80;
    server_name dashboard.od.com;
    rewrite ^(.*)$ https://${server_name}$1 permanent;
}

server {
    listen 443 ssl;
    server_name dashboard.od.com;

    ssl_certificate "certs/dashboard.pem";
    ssl_certificate_key "certs/dashboard-key.pem";
    ssl_session_cache shared:SSL:1m;
    ssl_session_timeout 10m;
    ssl_ciphers HIGH:!aNULL:!MD5;
    ssl_prefer_server_ciphers on;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}

nginx -t
nginx -s reload

As you can see, the certificate information is displayed

Smooth upgrade k8s

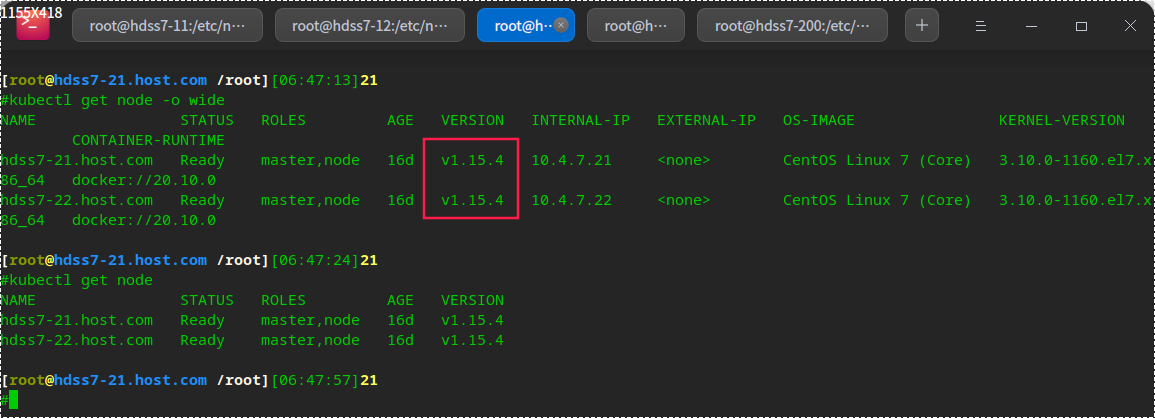

View current deployment version

Select the machine with low node load

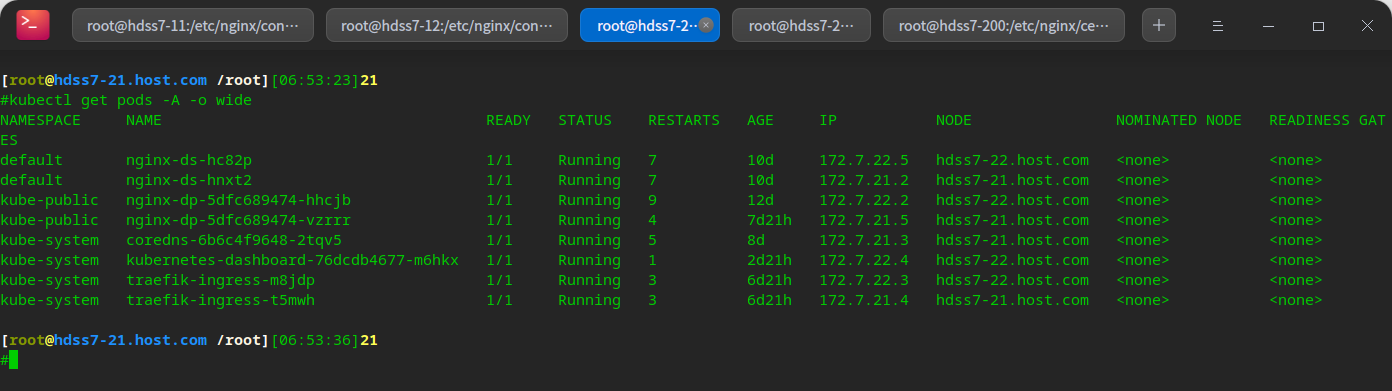

kubectl get pods -A -o wide
# A vulnerability in K8S or a new requirement may force us to upgrade or downgrade the version
# To reduce the impact on the business, upgrade during off-peak hours. Node 21 is selected here

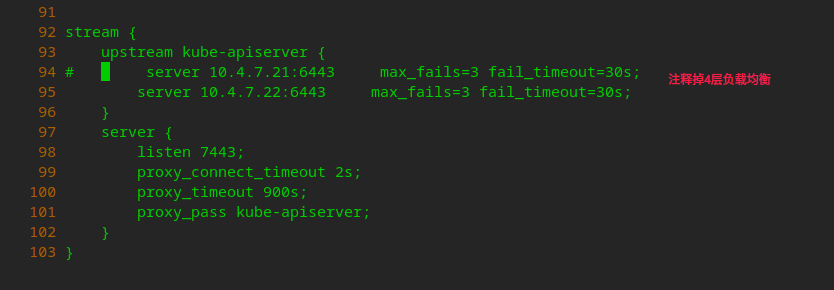

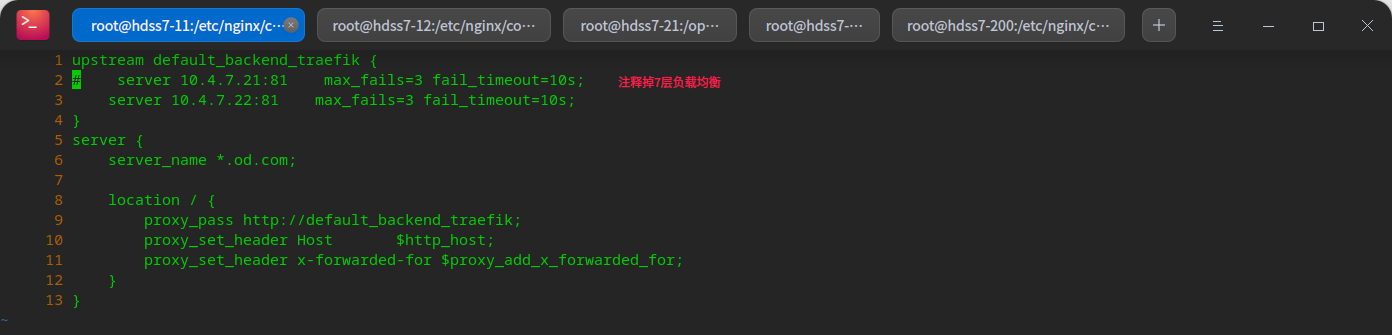

Remove the load balancing of layers 4 and 7

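# Take the node out of layer 4 load balancing by commenting out its entry
vi /etc/nginx/nginx.conf

# Take the node out of layer 7 load balancing by commenting out its entry
vi /etc/nginx/conf.d/od.com.conf

# Test the configuration and reload nginx
nginx -t
nginx -s reload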

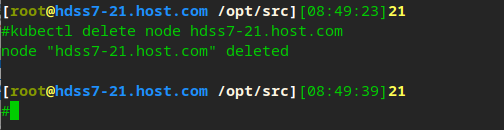

Take the node offline

kubectl delete node hdss7-21.host.com

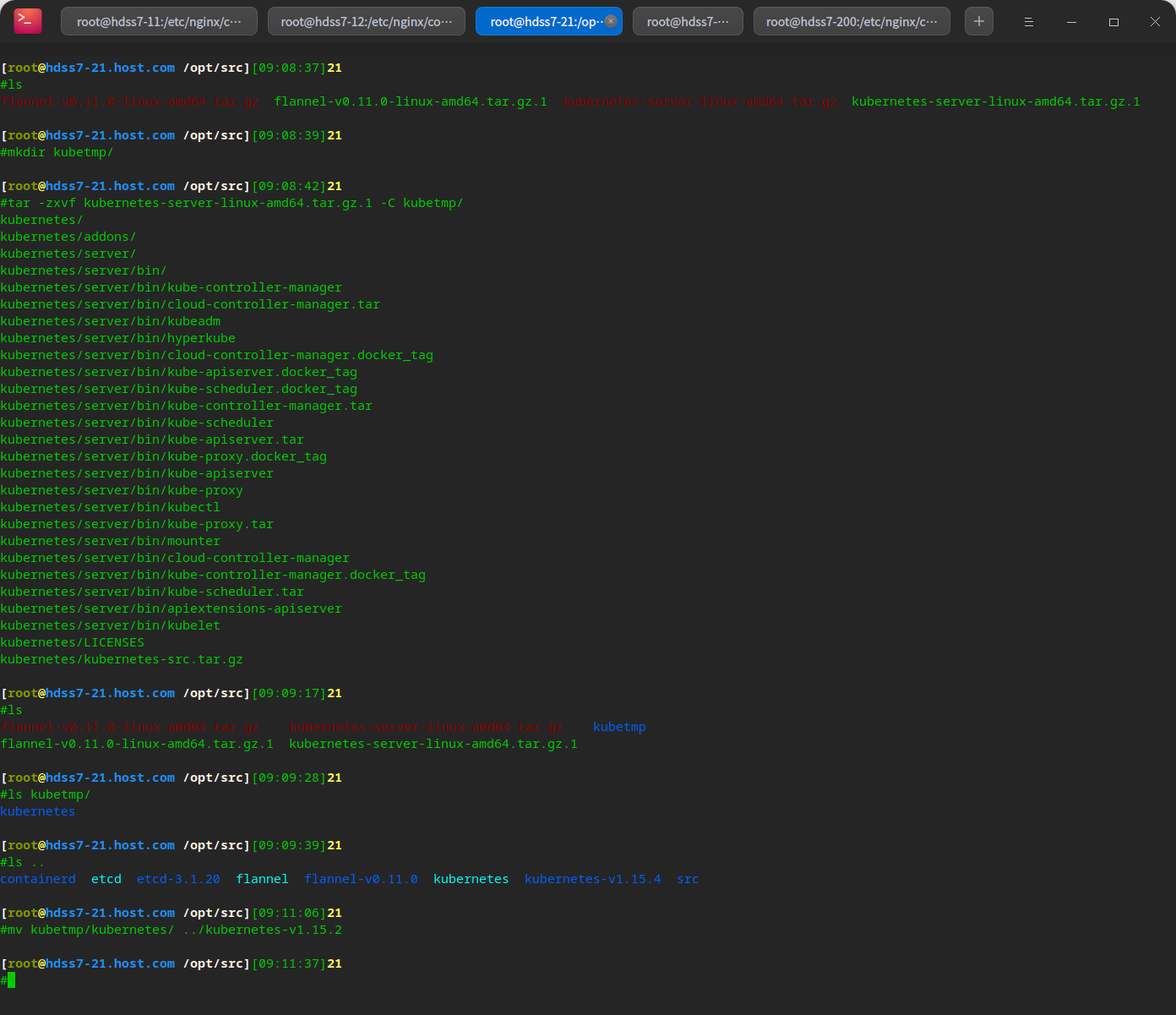

Deploy new version package

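cd /opt/src/
wget https://dl.k8s.io/v1.15.2/kubernetes-server-linux-amd64.tar.gz
# Decompress. The download is named ...tar.gz.1 here, presumably because an archive with the same name from the earlier install was already in /opt/src; adjust the file name if yours differs
mkdir kubetmp/
tar -zxvf kubernetes-server-linux-amd64.tar.gz.1 -C kubetmp/
# Move to the deployment directory
mv kubetmp/kubernetes/ ../kubernetes-v1.15.2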

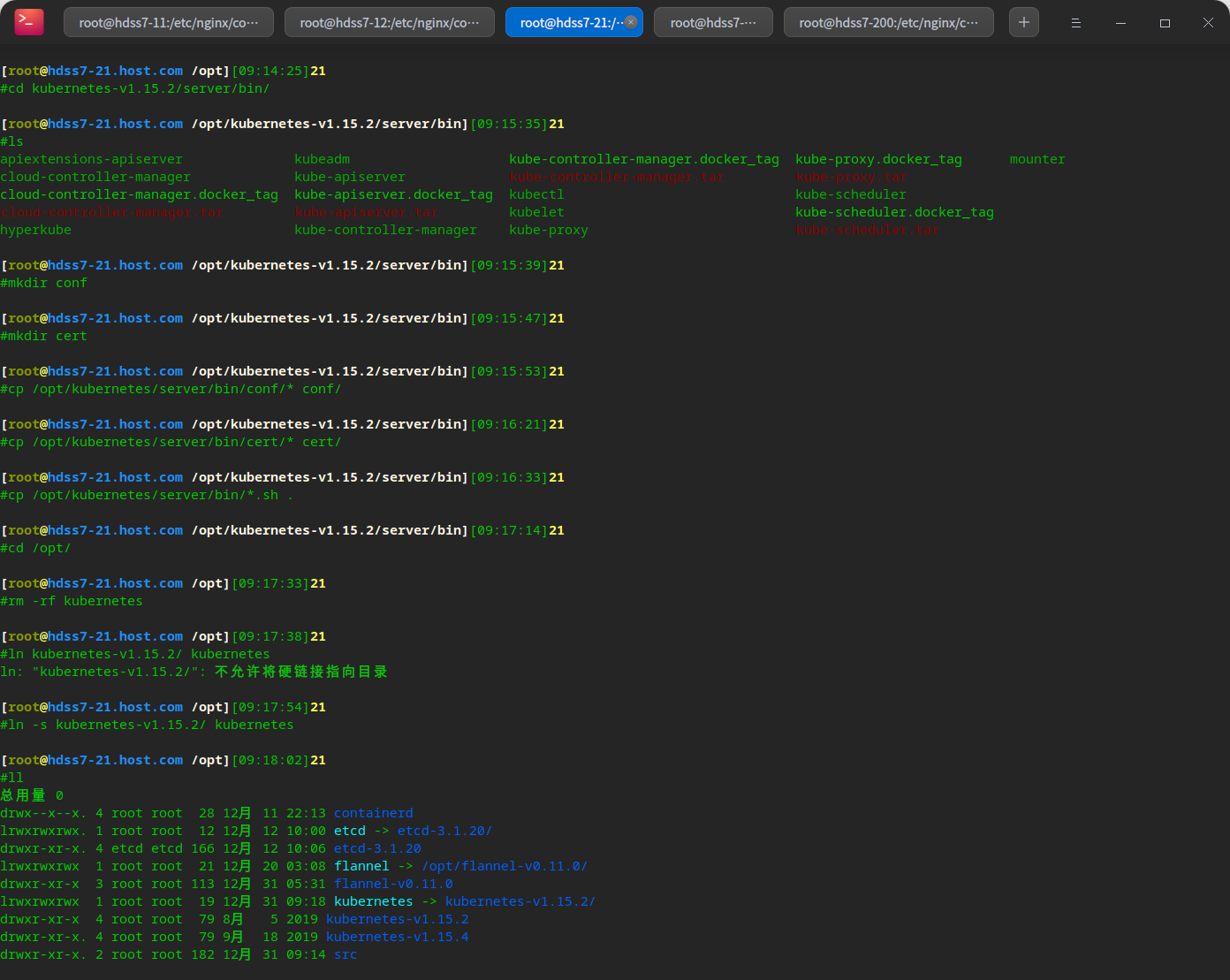

Update configuration

# Copy the configuration from the original version
cd /opt/kubernetes-v1.15.2/server/bin/
mkdir conf
mkdir cert
cp /opt/kubernetes/server/bin/conf/* conf/
cp /opt/kubernetes/server/bin/cert/* cert/
cp /opt/kubernetes/server/bin/*.sh .
# Update the symlink
cd /opt/
rm -rf kubernetes
ln -s kubernetes-v1.15.2/ kubernetes
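Removing the symlink and recreating it leaves a brief moment in which /opt/kubernetes does not exist, and a typo in the target name would only be noticed when the services fail to start. One possible alternative, shown here as a sketch rather than as the procedure used above, is to check the target and repoint the link in a single `ln -sfn` call. `switch_release` is a hypothetical helper name.

```shell
#!/bin/sh
# Repoint a "current release" symlink after checking that the target exists.
#   $1 directory holding the releases (e.g. /opt)
#   $2 release directory name (e.g. kubernetes-v1.15.2)
#   $3 symlink name (e.g. kubernetes)
switch_release() (
    cd "$1" || exit 1
    [ -d "$2" ] || { echo "missing release dir: $2" >&2; exit 1; }
    # -s symbolic, -f replace an existing link, -n do not follow the old link
    ln -sfn "$2" "$3"
)
```

For the upgrade above this would be `switch_release /opt kubernetes-v1.15.2 kubernetes`. Rolling back is the same call with the previous release directory name.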

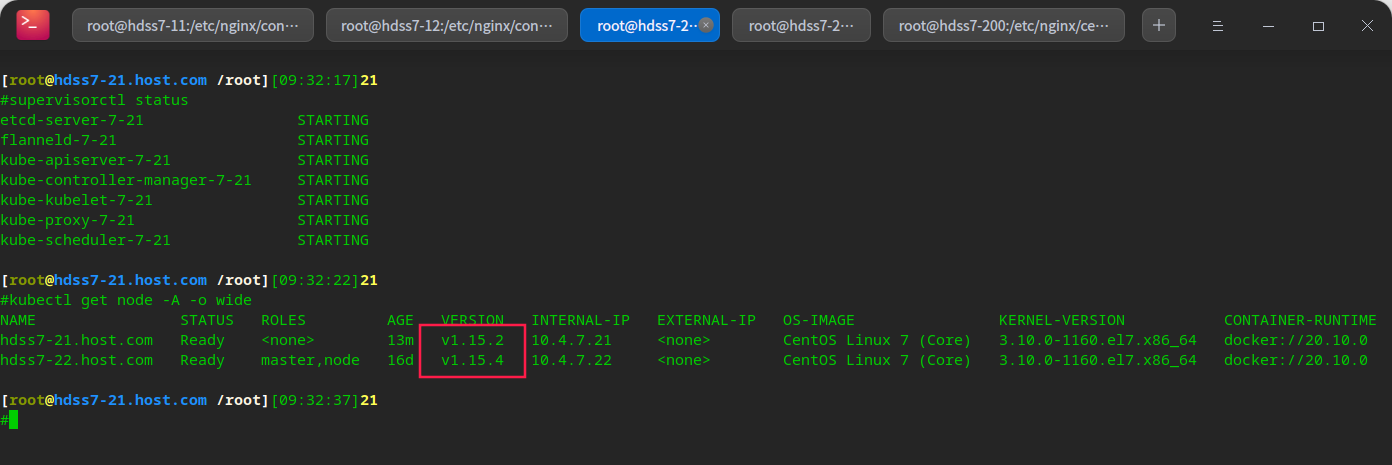

Upgrade version

supervisorctl status
supervisorctl restart all
# The node now reports the new version

Restore layer 4 and 7 load balancing

# Uncomment the entries that were commented out earlier
vi /etc/nginx/nginx.conf
vi /etc/nginx/conf.d/od.com.conf
# Test the configuration and reload nginx
nginx -t
nginx -s reload

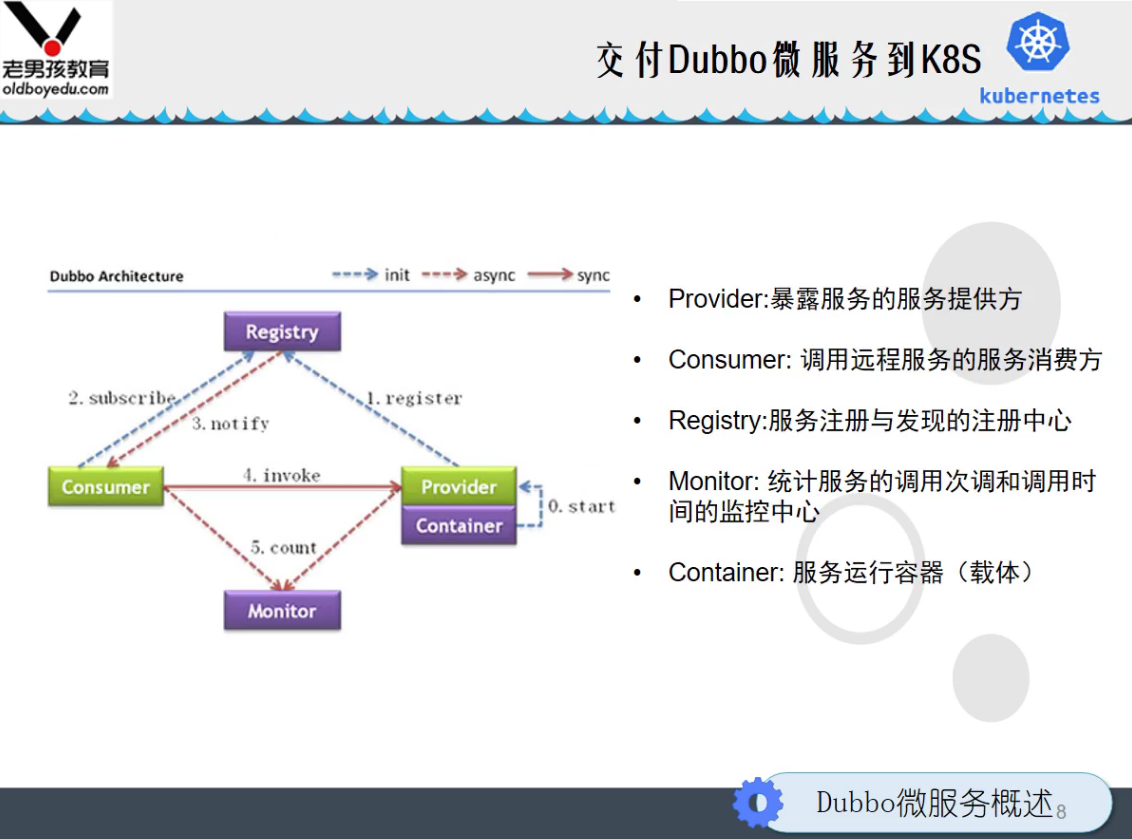

Deliver dubbo service to k8s cluster

dubbo architecture diagram

dubbo in k8s architecture diagram

# zookeeper is a stateful service
# Delivering stateful services such as mysql or zk to k8s is not recommended, so zk is deployed outside the cluster

Deploy dubbo service

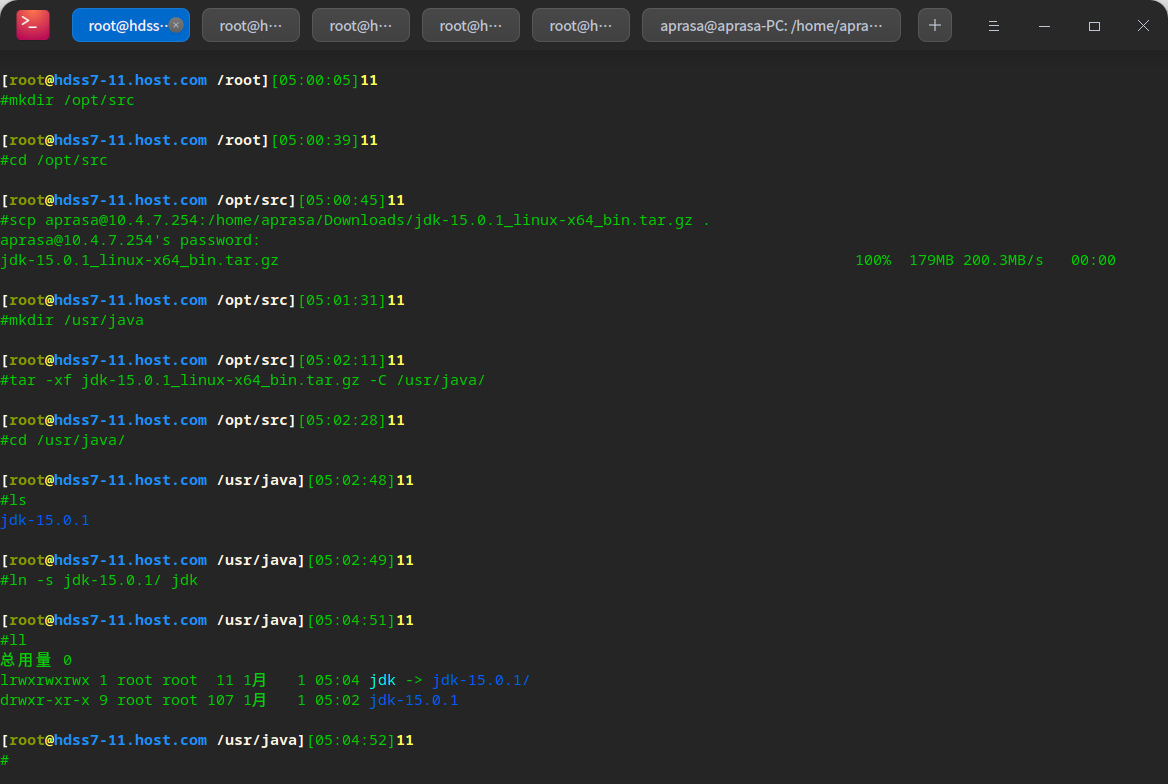

First deploy the zk cluster. zk is a java service and depends on the jdk, which you need to download yourself.

Cluster distribution: 7-11, 7-12, 7-21

Install Jdk

mkdir -p /opt/src
cd /opt/src
# Copy in the jdk archive prepared beforehand
scp aprasa@10.4.7.254:/home/aprasa/Downloads/jdk-15.0.1_linux-x64_bin.tar.gz .
mkdir /usr/java
cd /opt/src
tar -xf jdk-15.0.1_linux-x64_bin.tar.gz -C /usr/java/
cd /usr/java/
# Create the jdk symlink
ln -s jdk-15.0.1/ jdk

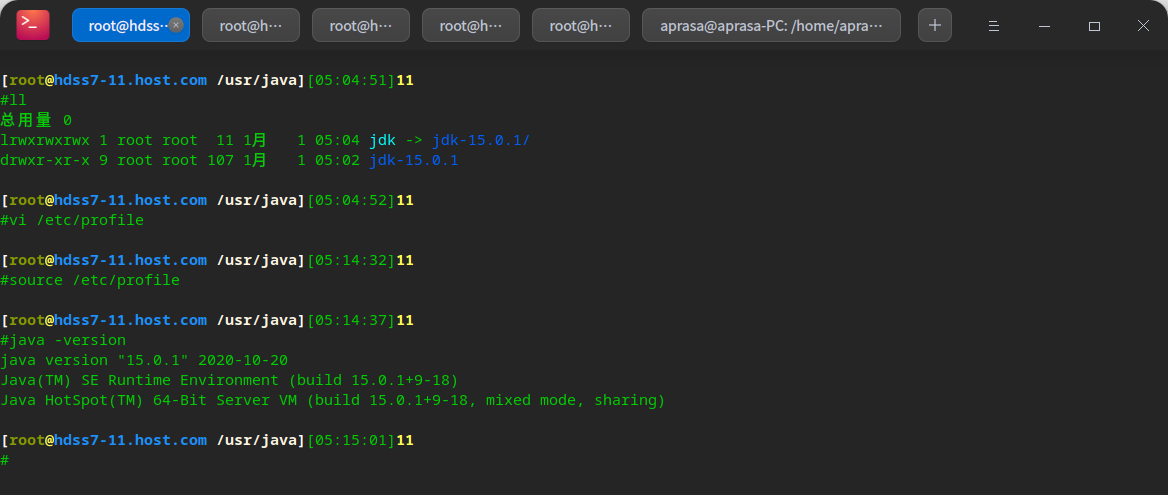

vi /etc/profile

# JAVA HOME
export JAVA_HOME=/usr/java/jdk
export PATH=$JAVA_HOME/bin:$PATH
export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar

source /etc/profile
java -version

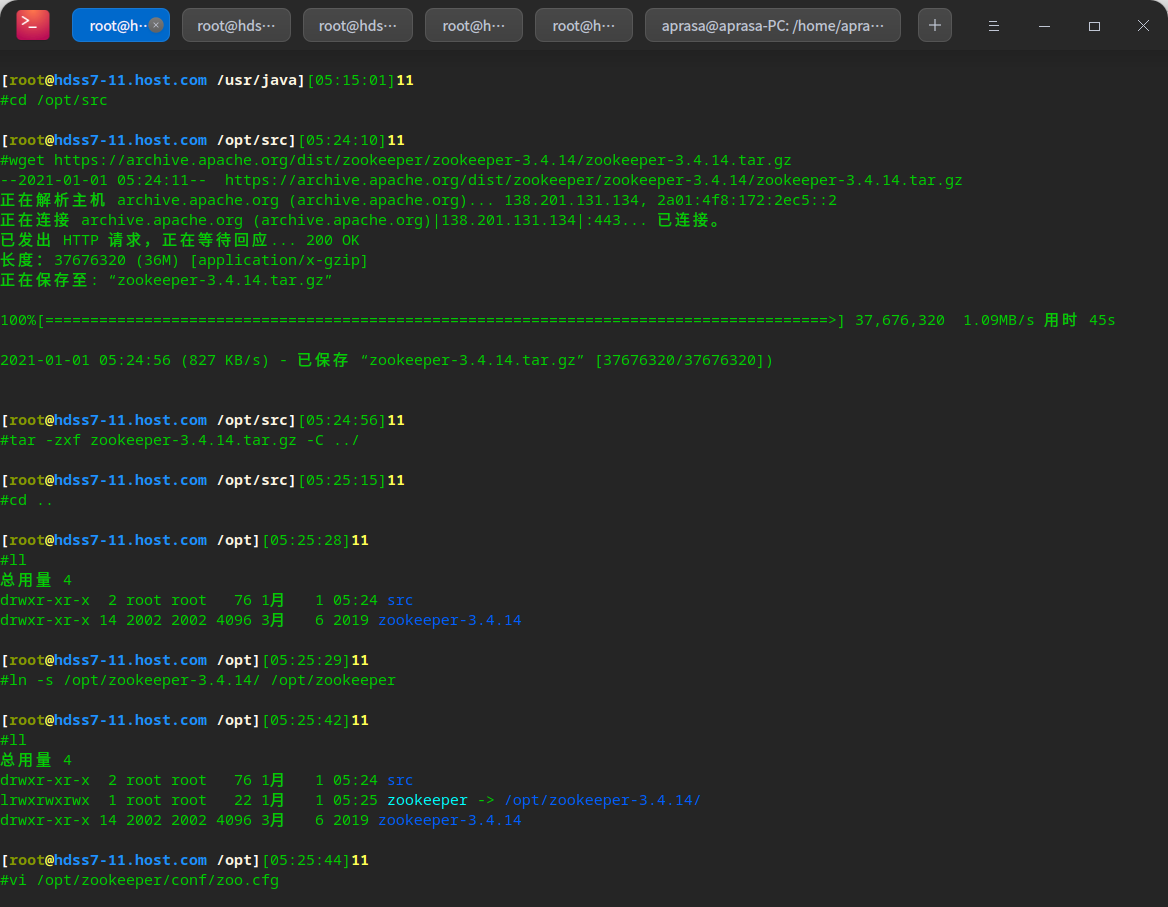

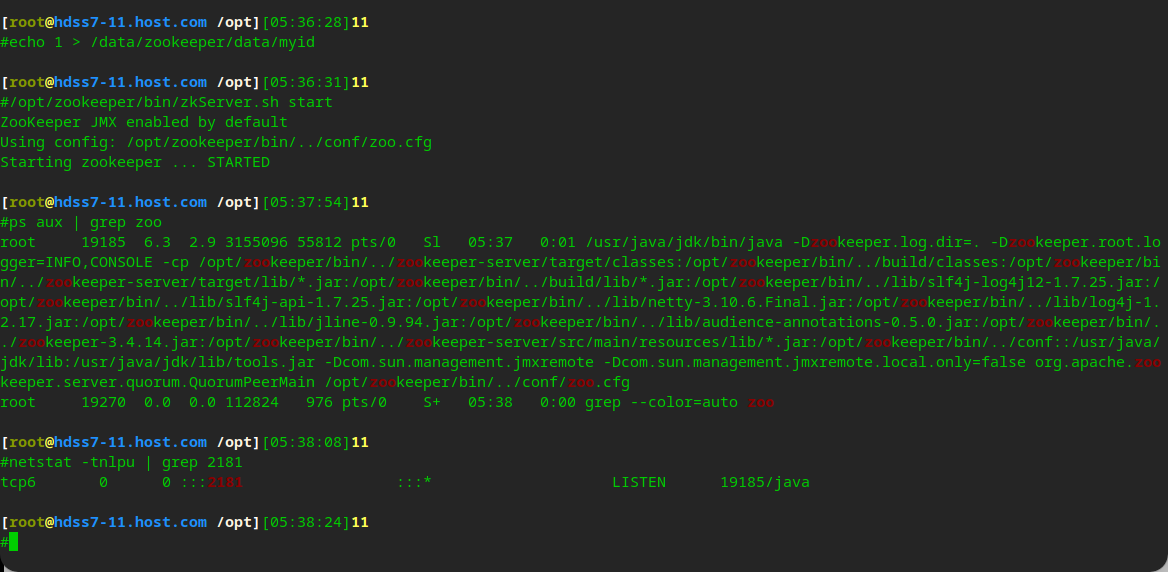

Deploy zk

# Download zookeeper
cd /opt/src
wget https://archive.apache.org/dist/zookeeper/zookeeper-3.4.14/zookeeper-3.4.14.tar.gz
tar -zxf zookeeper-3.4.14.tar.gz -C ../
ln -s /opt/zookeeper-3.4.14/ /opt/zookeeper
# Create the log and data directories
mkdir -pv /data/zookeeper/data /data/zookeeper/logs
# Configure
vi /opt/zookeeper/conf/zoo.cfg

tickTime=2000
initLimit=10
syncLimit=5
dataDir=/data/zookeeper/data
dataLogDir=/data/zookeeper/logs
clientPort=2181
server.1=zk1.od.com:2888:3888
server.2=zk2.od.com:2888:3888
server.3=zk3.od.com:2888:3888

# Add dns resolution for the zk host names
vi /var/named/od.com.zone

systemctl restart named

# Modify zk cluster and configure myid # 7-11 echo 1 > /data/zookeeper/data/myid # 7-12 echo 2 > /data/zookeeper/data/myid # 7-21 echo 3 > /data/zookeeper/data/myid
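The number written to `myid` has to match the `N` of that host's `server.N=` line in zoo.cfg. To avoid mixing the ids up when the commands are repeated by hand on three machines, the id can be looked up from the configuration itself. This is a sketch only: `zk_myid_for` is a hypothetical helper, and it assumes the `server.N=host:port:port` layout shown above.

```shell
#!/bin/sh
# Print the myid for a host by scanning zoo.cfg-style input on stdin.
#   $1 host name exactly as written in the server.N= lines
zk_myid_for() (
    awk -F= -v h="$1" '
        /^server\.[0-9]+=/ {
            id = substr($1, 8)        # text after "server."
            split($2, parts, ":")     # host:peerPort:electionPort
            if (parts[1] == h) print id
        }'
)
```

On the machine that zk1.od.com resolves to, this could be used as `zk_myid_for zk1.od.com < /opt/zookeeper/conf/zoo.cfg > /data/zookeeper/data/myid`. Which machine each zkN name points to is defined by the DNS records added earlier.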

# Start zk /opt/zookeeper/bin/zkServer.sh start

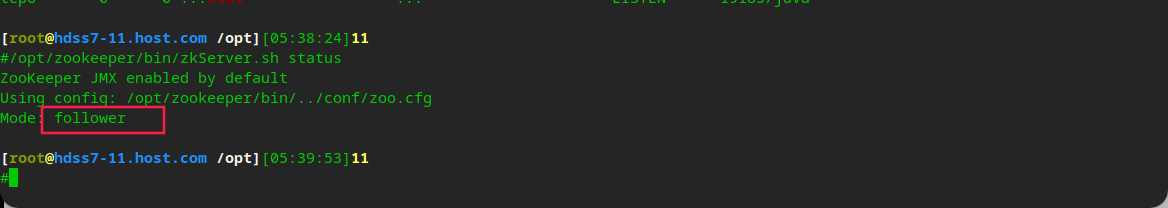

# View zk cluster status /opt/zookeeper/bin/zkServer.sh status # You can see that 11, 21 are flower s and 12 are leader s

Deploy jenkins

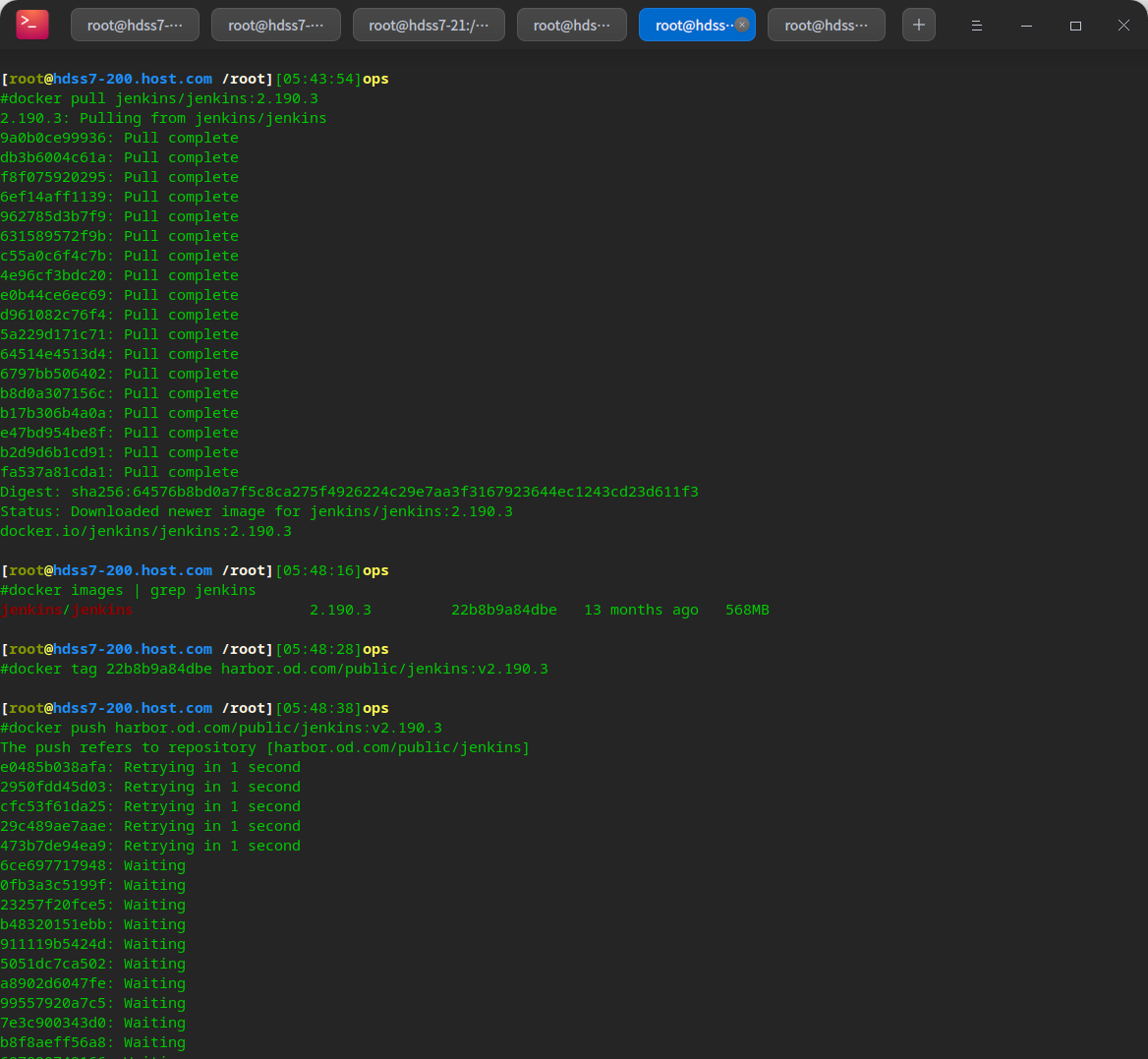

# Pull the image and push it to the private registry
docker pull jenkins/jenkins:2.190.3
docker tag 22b8b9a84dbe harbor.od.com/public/jenkins:v2.190.3
docker push harbor.od.com/public/jenkins:v2.190.3

Customize jenkins dockerfile

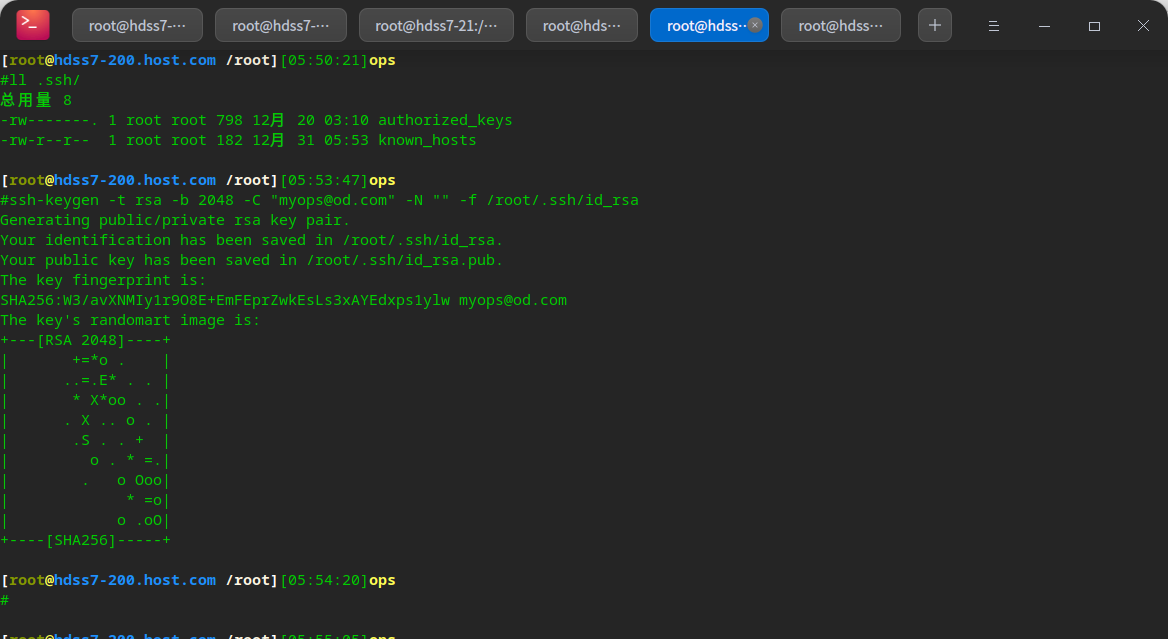

# Create an ssh key pair; the private key is baked into the jenkins image
ssh-keygen -t rsa -b 2048 -C "myops@od.com" -N "" -f /root/.ssh/id_rsa

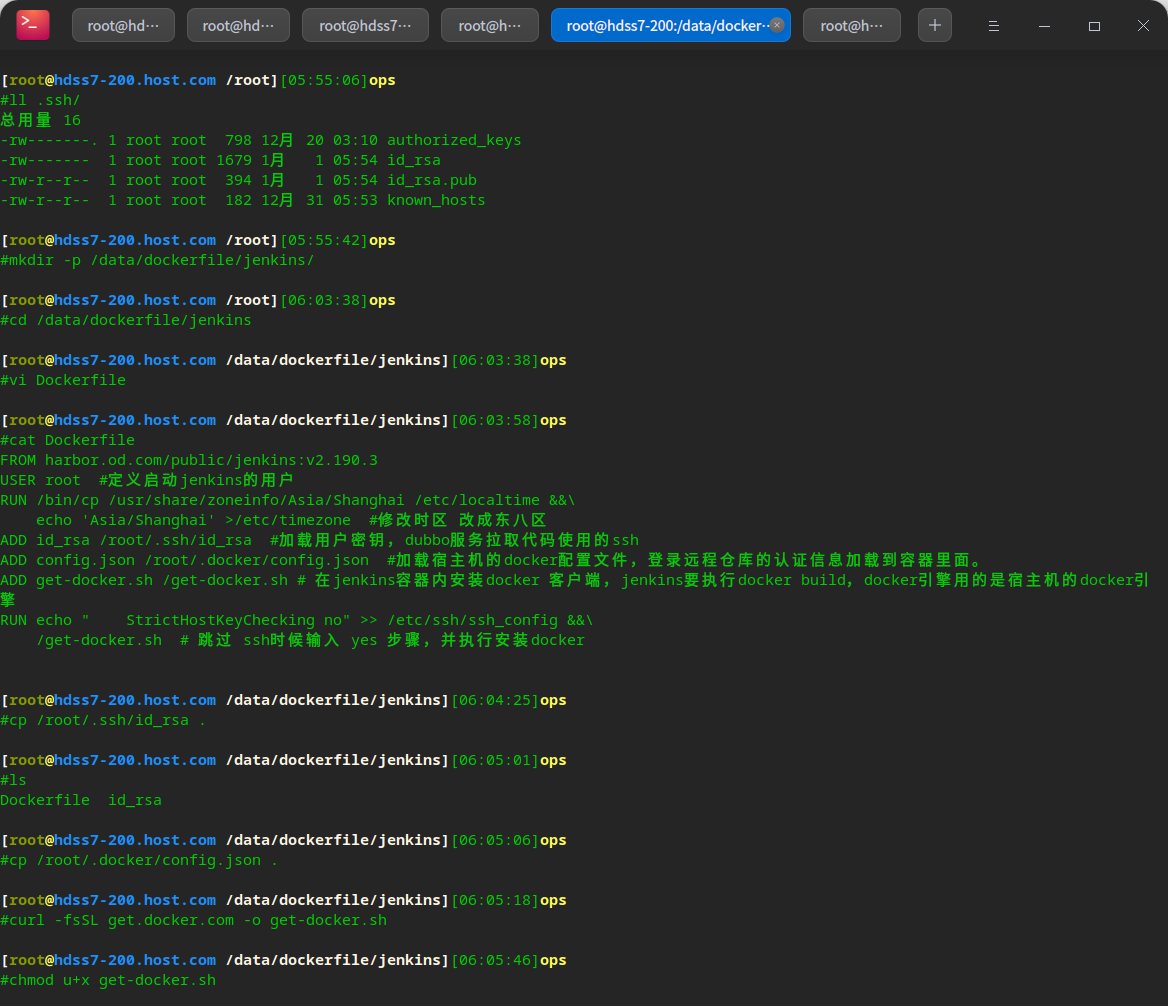

mkdir -p /data/dockerfile/jenkins/
cd /data/dockerfile/jenkins
vi Dockerfile
# Prepare the files that the Dockerfile adds
cp /root/.ssh/id_rsa .
cp /root/.docker/config.json .
curl -fsSL get.docker.com -o get-docker.sh
chmod u+x get-docker.sh

FROM harbor.od.com/public/jenkins:v2.190.3
# Run jenkins as root
USER root
# Change the time zone to UTC+8
RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\
    echo 'Asia/Shanghai' >/etc/timezone
# Add the ssh private key that jenkins uses to pull the dubbo service code
ADD id_rsa /root/.ssh/id_rsa
# Add the docker configuration file of the host, which carries the login credentials for the private registry
ADD config.json /root/.docker/config.json
# Add the docker install script. jenkins needs to run docker build, using the docker engine of the host
ADD get-docker.sh /get-docker.sh
# Skip the interactive "yes" confirmation for ssh host keys, then install the docker client
RUN echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config &&\
    /get-docker.sh

# Comments are placed on their own lines because a Dockerfile does not support trailing comments after an instruction

# Prepare the private project infra (short for infrastructure) in the registry

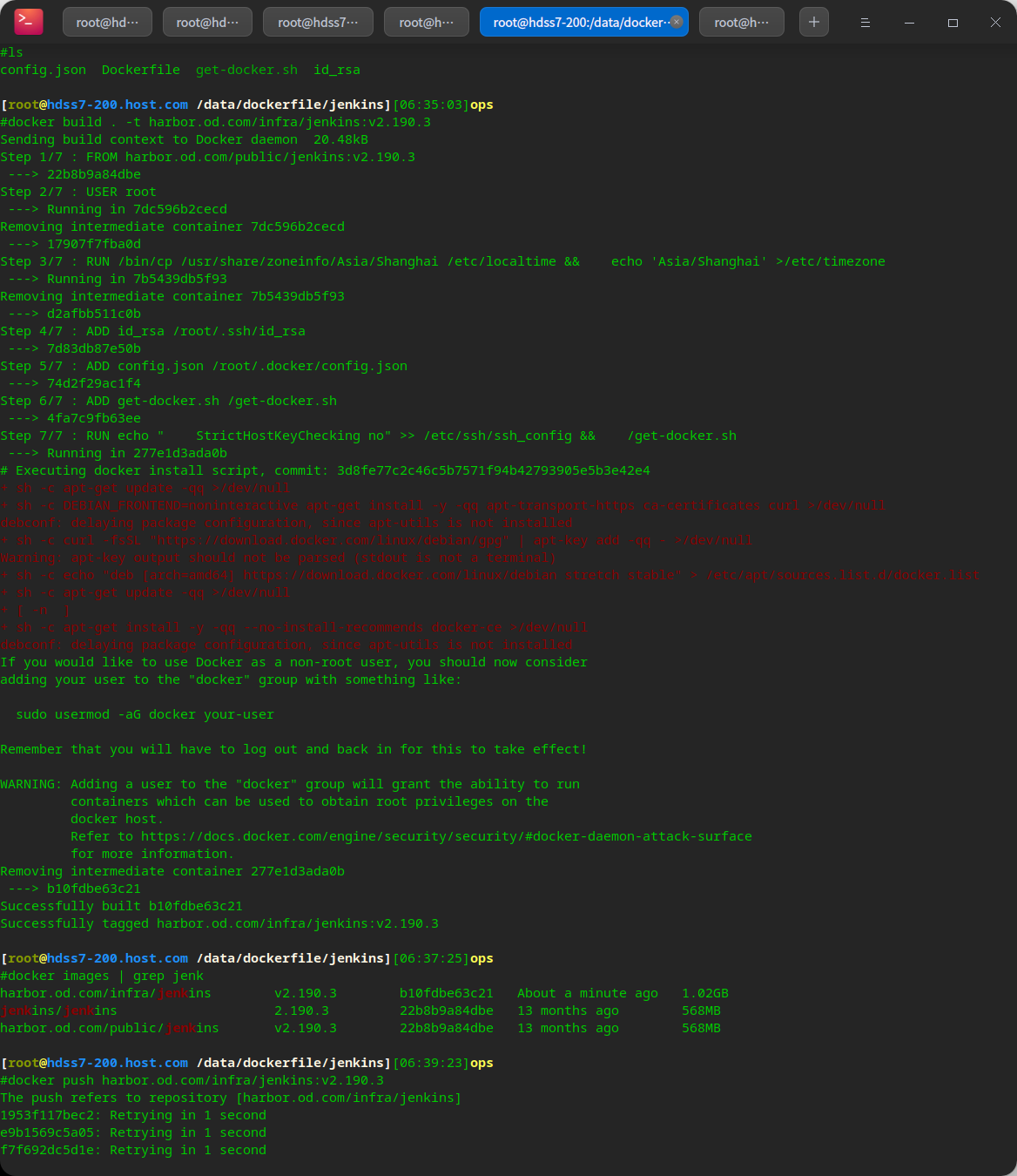

Building jenkins images

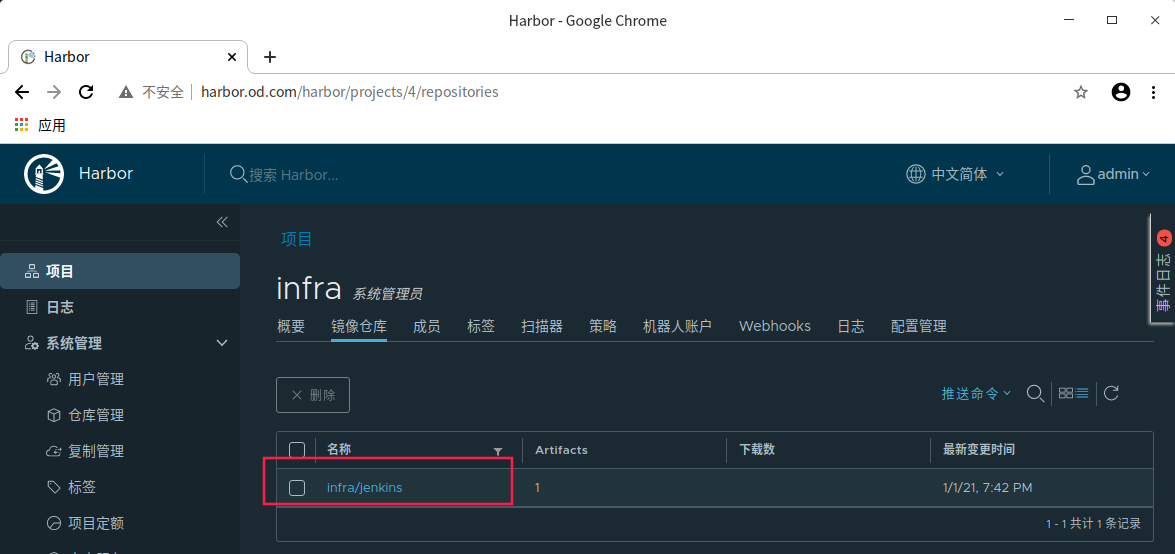

docker build . -t harbor.od.com/infra/jenkins:v2.190.3
# Push to the private registry
docker push harbor.od.com/infra/jenkins:v2.190.3

Create a k8s namespace for jenkins

kubectl create ns infra

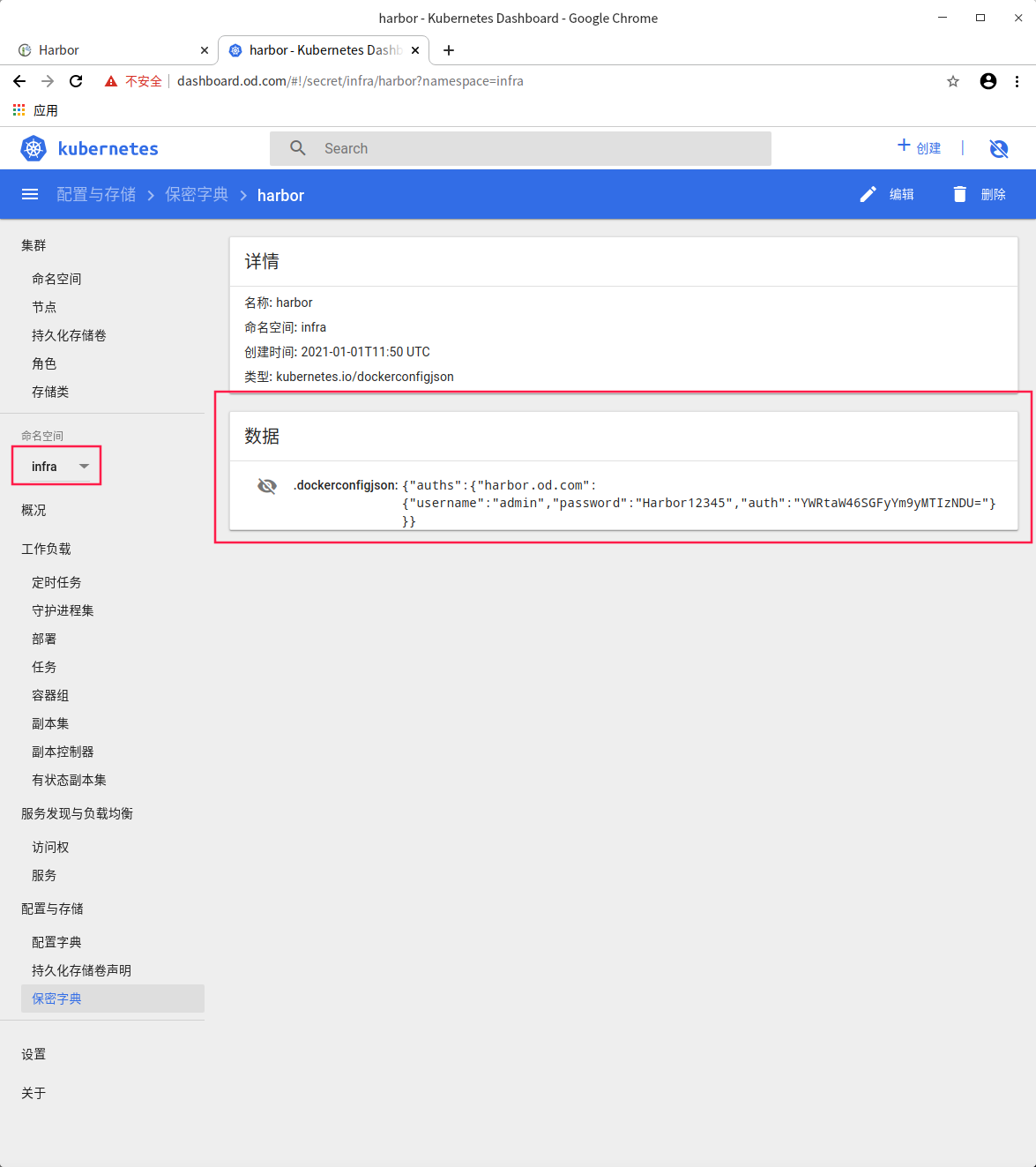

Create a secret

# Pulling from our private project infra requires credentials
# Create a secret of type docker-registry named harbor, with docker-server=harbor.od.com, docker-username=admin, docker-password=Harbor12345. The -n option places the secret in the infra namespace
kubectl create secret docker-registry harbor --docker-server=harbor.od.com --docker-username=admin --docker-password=Harbor12345 -n infra

Preparing shared storage

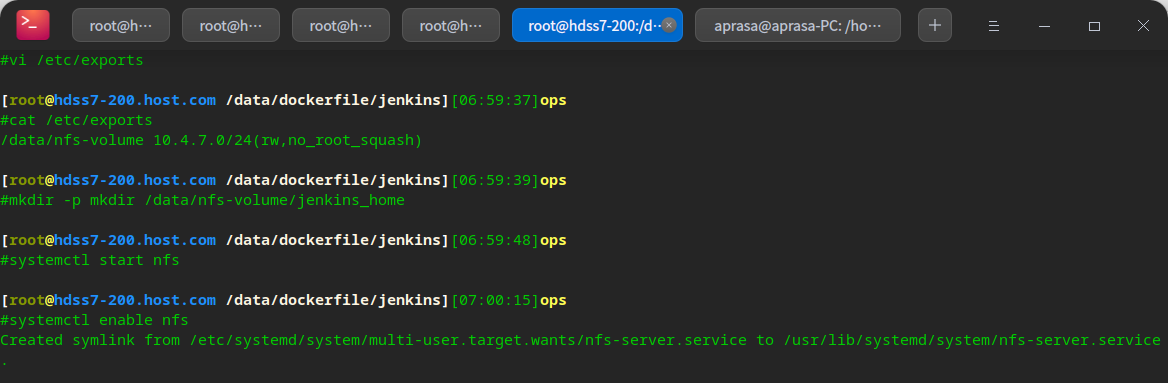

# jenkins needs to keep persistent data, so we mount shared storage. NFS is the simplest choice here because k8s supports NFS volumes by default
# Install on the operations host and on all compute nodes:
yum install nfs-utils -y
# Use 7-200 as the server
vi /etc/exports
# Line to add to /etc/exports: /data/nfs-volume 10.4.7.0/24(rw,no_root_squash)
mkdir -p /data/nfs-volume/jenkins_home
systemctl start nfs
systemctl enable nfs

Prepare jenkins resource configuration list

# The resource configuration list uses nfs
cd /data/k8s-yaml/
mkdir jenkins
cd jenkins

vi dp.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: jenkins
  namespace: infra
  labels:
    name: jenkins
spec:
  replicas: 1
  selector:
    matchLabels:
      name: jenkins
  template:
    metadata:
      labels:
        app: jenkins
        name: jenkins
    spec:
      volumes:
      - name: data
        nfs:
          server: hdss7-200
          path: /data/nfs-volume/jenkins_home
      - name: docker
        hostPath:
          path: /run/docker.sock
          type: ''
      containers:
      - name: jenkins
        image: harbor.od.com/infra/jenkins:v2.190.3
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          protocol: TCP
        env:
        - name: JAVA_OPTS
          value: -Xmx512m -Xms512m
        volumeMounts:
        - name: data
          mountPath: /var/jenkins_home
        - name: docker
          mountPath: /run/docker.sock
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

vi svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: jenkins
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 8080
  selector:
    app: jenkins

vi ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: jenkins
  namespace: infra
spec:
  rules:
  - host: jenkins.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: jenkins
          servicePort: 80

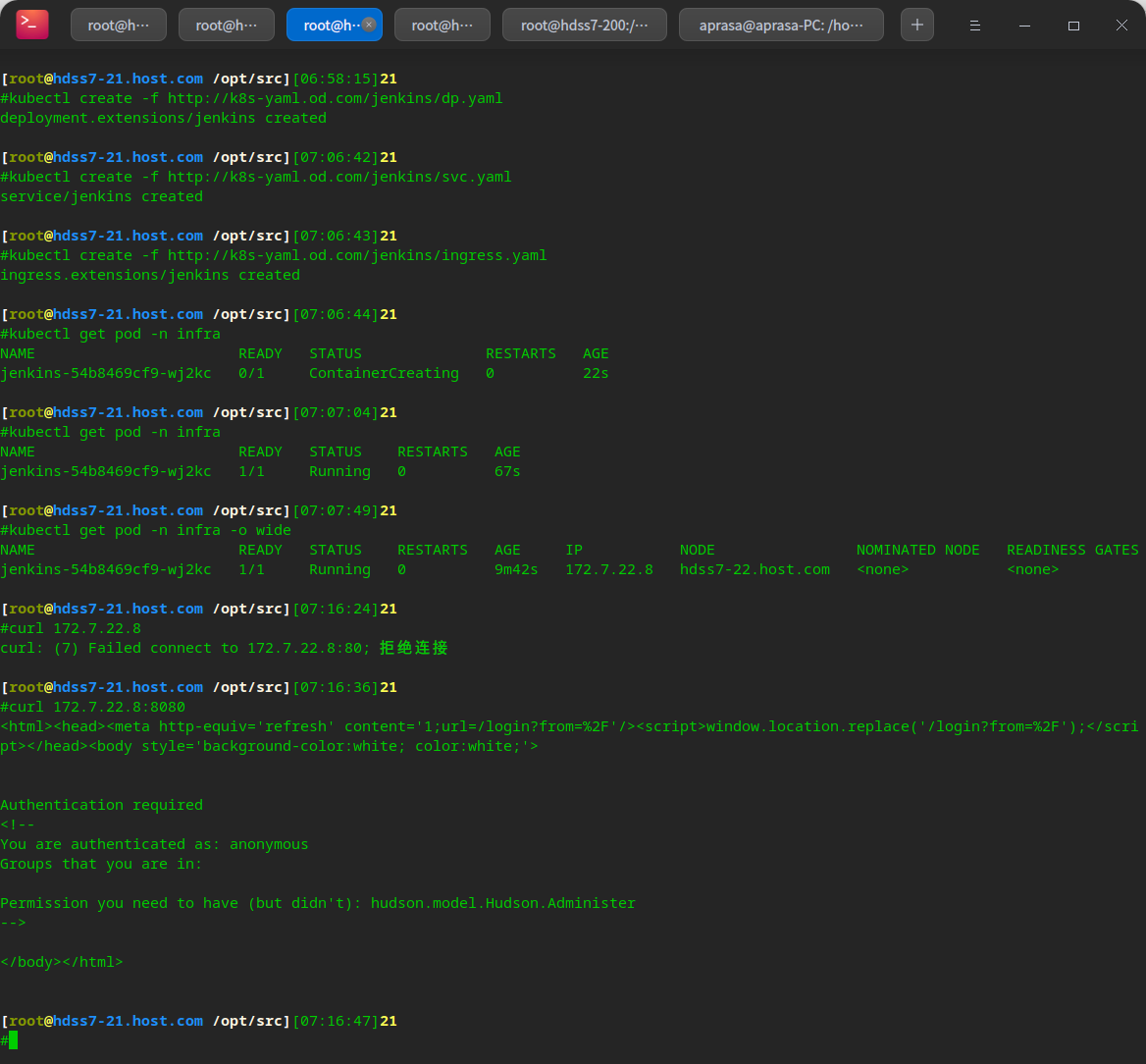

Apply the resource configuration list on any compute node

kubectl create -f http://k8s-yaml.od.com/jenkins/dp.yaml
kubectl create -f http://k8s-yaml.od.com/jenkins/svc.yaml
kubectl create -f http://k8s-yaml.od.com/jenkins/ingress.yaml

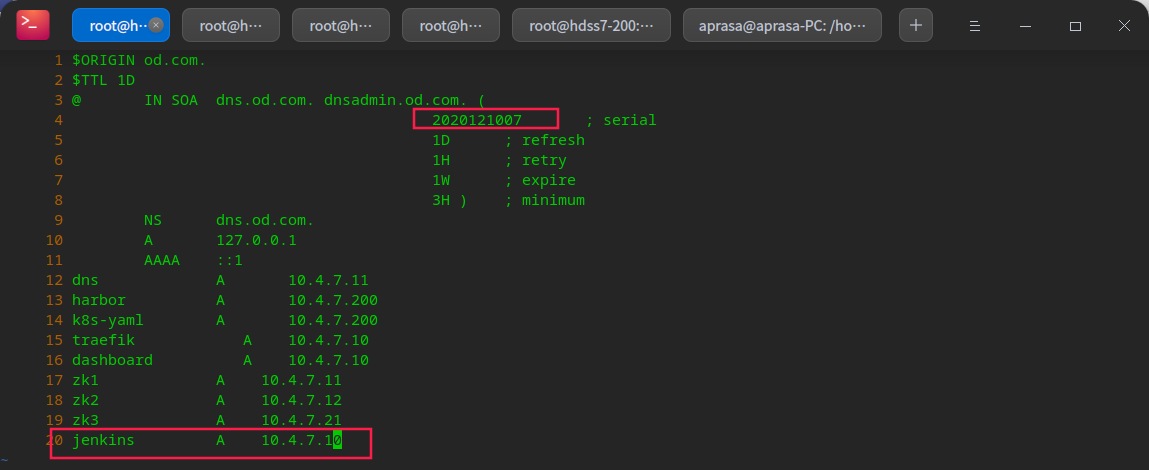

Add dns resolution: 7-11

vi /var/named/od.com.zone
systemctl restart named

jenkins can now be opened in the browser