Using ioutil.ReadAll to read a large response body: the body read times out

Predecessors lead the way

On Stack Overflow, morganbaz's view is:

Using ioutil.ReadAll to read a large response body in Go is very inefficient; in addition, if the response body is large enough, there is a risk of memory leaks.

```go
data, err := ioutil.ReadAll(r)
if err != nil {
	return err
}
if err := json.Unmarshal(data, &v); err != nil {
	return err
}
```

A more efficient way to parse JSON data is to use the json.Decoder type:

```go
err := json.NewDecoder(r).Decode(&v)
if err != nil {
	return err
}
```

In terms of both memory and time, this approach is not only more concise but also more efficient:

- The Decoder does not need to allocate one huge byte slice to hold all the data it reads; it can reuse a small buffer to read the data and parse it incrementally. This saves a lot of time spent on memory allocation and relieves GC pressure.

- The JSON Decoder can start parsing as soon as the first chunk of data arrives; it doesn't have to wait for the whole body to finish downloading.

Descendants enjoy the shade

Here I would like to add two points to the previous ideas.

- The standard library's ioutil.ReadAll reads from the reader into a slice with an initial capacity of 512 bytes. Our response body is about 50 MB, so the slice has to be grown many times, causing unnecessary memory allocations and GC pressure.

How Go grows a slice (Go 1.18 and later): while the current capacity is below 256 elements (bytes, for a []byte), append doubles it; above that, the growth factor shrinks gradually from 2x toward 1.25x, and the new capacity is then rounded up to a memory size class. (Before Go 1.18, the threshold was 1024 elements, with a flat 1.25x above it.)
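To get a feel for how often the slice is regrown for a body of about 50 MB, the sketch below replays the growth loop from the ReadAll source (the `append(b, 0)[:len(b)]` idiom, which lets append choose the new capacity) without any real I/O. It assumes each Read fills all spare capacity, which is the best case for ReadAll. The helper name `simulateReadAllGrowth` is hypothetical, and the exact counts depend on the Go version's growth formula and size classes.

```go
package main

import "fmt"

// simulateReadAllGrowth is a hypothetical helper that replays ReadAll's growth
// strategy for a body of target bytes. It returns how many times the slice was
// reallocated and the total bytes allocated across all backing arrays.
func simulateReadAllGrowth(target int) (grows int, totalAlloc int) {
	b := make([]byte, 0, 512) // same initial capacity as ReadAll
	totalAlloc = cap(b)
	for len(b) < target {
		if len(b) == cap(b) {
			// Let append pick the new capacity, as ReadAll does.
			b = append(b, 0)[:len(b)]
			grows++
			totalAlloc += cap(b)
		}
		// Best case: pretend a single Read filled all spare capacity.
		n := cap(b) - len(b)
		if len(b)+n > target {
			n = target - len(b)
		}
		b = b[:len(b)+n]
	}
	return grows, totalAlloc
}

func main() {
	const body = 50 << 20 // roughly the 50 MB response body from the article
	grows, total := simulateReadAllGrowth(body)
	fmt.Printf("reallocations: %d\n", grows)
	fmt.Printf("total allocated: %.1f MB for a %.1f MB body\n",
		float64(total)/(1<<20), float64(body)/(1<<20))
}
```

Every outgrown backing array becomes garbage, so the cumulative allocation is several times the body size even though only the final slice stays live.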

- How should we understand the memory-leak risk that morganbaz mentions?

A memory leak occurs when heap memory dynamically allocated by a program is not released for some reason. It wastes system memory and can slow the program down or even crash the system.

When ioutil.ReadAll reads a large body, it triggers repeated slice growth. That should only waste memory temporarily, since the garbage collector eventually reclaims it. So why does the original author stress the risk of a memory leak?

I asked some colleagues about this. In a highly concurrent server program that runs for a long time, memory that is not released promptly can eventually exhaust all of the system's memory. This is an implicit memory leak.
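A rough way to see the effect: while N requests are in flight at once, each holding a fully buffered body from io.ReadAll, the live heap is at least N times the body size, no matter how quickly the GC would free each buffer afterwards. The sketch below (Go 1.16+ for io.ReadAll; `zeroReader` and `heldBytes` are hypothetical names, and the sizes are scaled down) reads N synthetic bodies concurrently, keeps them all alive, and reports `runtime.MemStats.HeapAlloc`.

```go
package main

import (
	"fmt"
	"io"
	"runtime"
	"sync"
)

// zeroReader is a hypothetical endless source of zero bytes that stands in for
// a response body.
type zeroReader struct{}

func (zeroReader) Read(p []byte) (int, error) {
	for i := range p {
		p[i] = 0
	}
	return len(p), nil
}

// heldBytes reads n bodies of size bytes concurrently with io.ReadAll, keeps
// every buffer alive, and returns the live heap reported by the runtime.
func heldBytes(n, size int) uint64 {
	bodies := make([][]byte, n)
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func(i int) {
			defer wg.Done()
			b, err := io.ReadAll(io.LimitReader(zeroReader{}, int64(size)))
			if err != nil {
				panic(err)
			}
			bodies[i] = b
		}(i)
	}
	wg.Wait()

	runtime.GC() // discard the garbage left by intermediate slice growth
	var ms runtime.MemStats
	runtime.ReadMemStats(&ms)
	runtime.KeepAlive(bodies) // all n buffers are still reachable at this point
	return ms.HeapAlloc
}

func main() {
	const size = 4 << 20 // 4 MB per body, scaled down from 50 MB
	for _, n := range []int{1, 4, 16} {
		fmt.Printf("%2d concurrent bodies -> live heap ~ %d MB\n", n, heldBytes(n, size)>>20)
	}
}
```

Under sustained load, new requests keep arriving before old buffers are collected, which is why this transient memory can behave like a leak.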

Since ancient times, JSON serialization has been a battleground for strategists

morganbaz proposed using the standard library's encoding/json to deserialize while reading, which reduces memory allocation and speeds up deserialization.

Since ancient times, JSON serialization has been a battleground for strategists: every major language has its own serialization implementations, and their performance differs considerably.

Next, we compare the high-performance JSON library JSON iterator with the native ioutil.ReadAll + json.Unmarshal approach.

Along the way, I also put my recent pprof practice to use to profile the results.

```go
// Requires: go get github.com/json-iterator/go
package main

import (
	"bytes"
	"flag"
	"log"
	"net/http"
	"os"
	"runtime/pprof"
	"time"

	jsoniter "github.com/json-iterator/go"
)

// Millisecond-precision timestamp layout (".000" is Go's fractional-seconds syntax).
const tsLayout = "2006-01-02 15:04:05.000"

var cpuprofile = flag.String("cpuprofile", "", "write cpu profile to file")
var memprofile = flag.String("memprofile", "", "write mem profile to file")

func main() {
	flag.Parse()
	if *cpuprofile != "" {
		f, err := os.Create(*cpuprofile)
		if err != nil {
			log.Fatal(err)
		}
		if err := pprof.StartCPUProfile(f); err != nil {
			log.Fatal("could not start CPU profile: ", err)
		}
		defer pprof.StopCPUProfile()
	}

	c := &http.Client{
		Timeout: 60 * time.Second,
		// Transport: tr,
	}
	n := sendRequest(c, http.MethodPost)
	log.Println("number of parsed instances:", n)

	if *memprofile != "" {
		f, err := os.Create(*memprofile)
		if err != nil {
			log.Fatal("could not create memory profile: ", err)
		}
		defer f.Close() // error handling omitted for example
		if err := pprof.WriteHeapProfile(f); err != nil {
			log.Fatal("could not write memory profile: ", err)
		}
	}
}

func sendRequest(client *http.Client, method string) int {
	endpoint := "http://xxxxx.com/table/instance?method=batch_query"
	expr := "idc in (logicidc_hd1,logicidc_hd2,officeidc_hd1)"
	// Drop-in replacement for encoding/json.
	var json = jsoniter.ConfigCompatibleWithStandardLibrary

	jsonData, err := json.Marshal([]string{expr})
	if err != nil {
		log.Fatalf("Couldn't marshal request body, %+v", err)
	}
	log.Println("Start request: " + time.Now().Format(tsLayout))
	response, err := client.Post(endpoint, "application/json", bytes.NewBuffer(jsonData))
	if err != nil {
		log.Fatalf("Error sending request to api endpoint, %+v", err)
	}
	log.Println("Server finished processing, ready to receive response: " + time.Now().Format(tsLayout))
	defer response.Body.Close()

	var resp Response
	var records = make(map[string][]Record)
	resp.Data = &records
	// Deserialize while reading instead of ioutil.ReadAll + Unmarshal.
	err = json.NewDecoder(response.Body).Decode(&resp)
	if err != nil {
		log.Fatalf("Couldn't parse response body, %+v", err)
	}
	log.Println("Client finished reading + parsing: " + time.Now().Format(tsLayout))

	var result = make(map[string]*Data, len(records))
	for _, r := range records[expr] {
		result[r.Ins.Id] = &Data{Active: "0", IsProduct: true}
	}
	return len(result)
}

// The deserialized object types (Response, Record, Data) are omitted.
```

Memory comparison

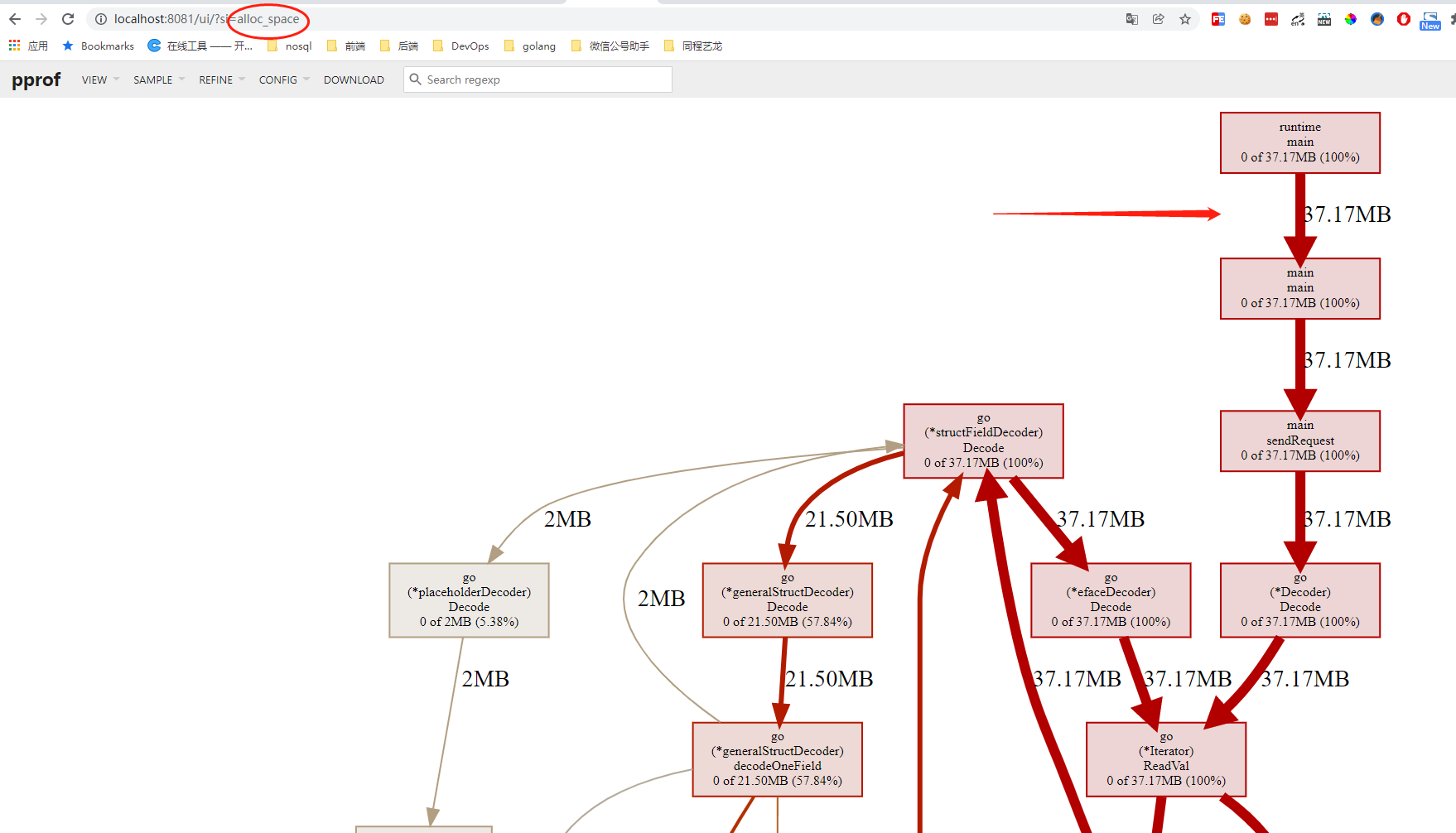

JSON iterator: deserializing while reading

io.ReadAll + json.Unmarshal: reading everything, then deserializing

Drilling into the io.ReadAll + json.Unmarshal profile shows where its memory goes:

```
Total:  59.59MB  59.59MB (flat, cum) 100%
626           .        .  func ReadAll(r Reader) ([]byte, error) {
627           .        .      b := make([]byte, 0, 512)
628           .        .      for {
629           .        .          if len(b) == cap(b) {
630           .        .              // Add more capacity (let append pick how much).
631     59.59MB  59.59MB              b = append(b, 0)[:len(b)]
632           .        .          }
633           .        .          n, err := r.Read(b[len(b):cap(b)])
634           .        .          b = b[:len(b)+n]
635           .        .          if err != nil {
636           .        .              if err == EOF {
```

The listing confirms that, to hold the entire response body, ReadAll keeps growing its initial 512-byte slice, and that single line accounts for 59.59 MB of memory in the profile.

You can also compare alloc_space (total memory allocated) with inuse_space (memory still in use when the profile was taken), for example via go tool pprof's -sample_index option; the difference between the two is roughly the memory already reclaimed by the GC.

The results show that JSON iterator allocates noticeably less memory than io.ReadAll + json.Unmarshal.

My takeaways

1. Reading a large response body with ioutil.ReadAll is risky: poor performance and a risk of memory leaks.

2. Implicit memory leak: in highly concurrent, long-running web programs, failing to release memory promptly will eventually exhaust the system's memory.

3. JSON serialization is a battleground for strategists. JSON iterator is a high-performance serializer that is compatible with the standard encoding/json API.

4. How to diagnose memory with pprof, and what its profile metrics (such as alloc_space and inuse_space) mean.