SlowFast - deployment and testing

Brief introduction

GitHub:

https://github.com/facebookresearch/SlowFast

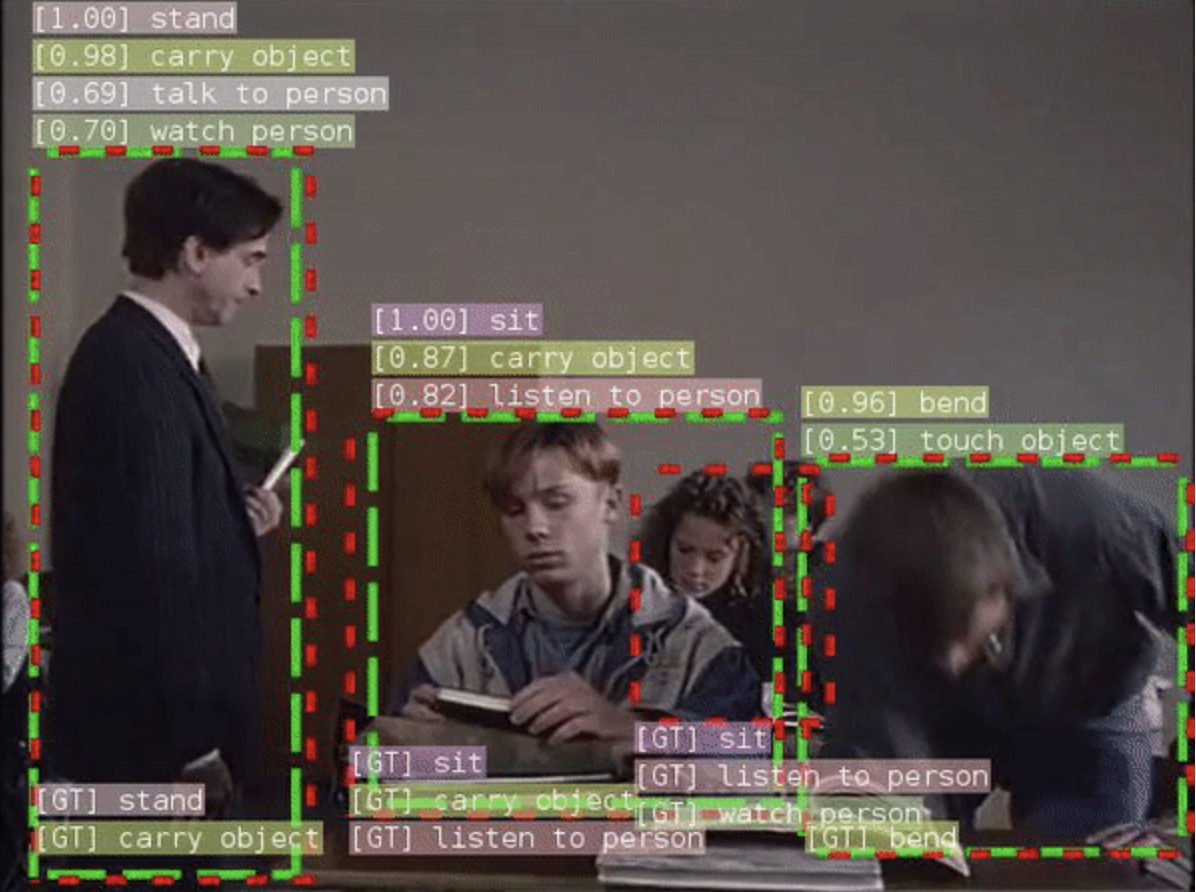

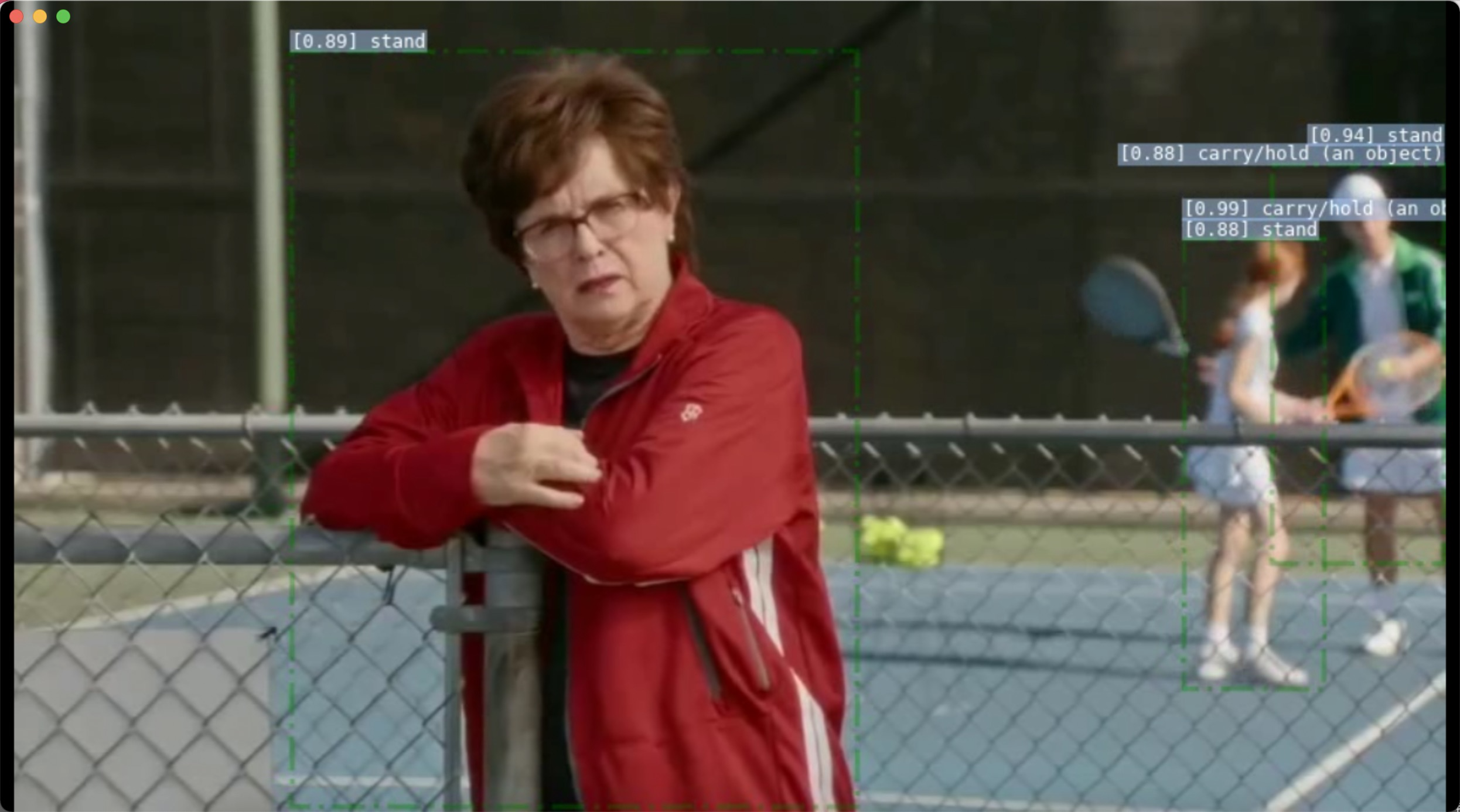

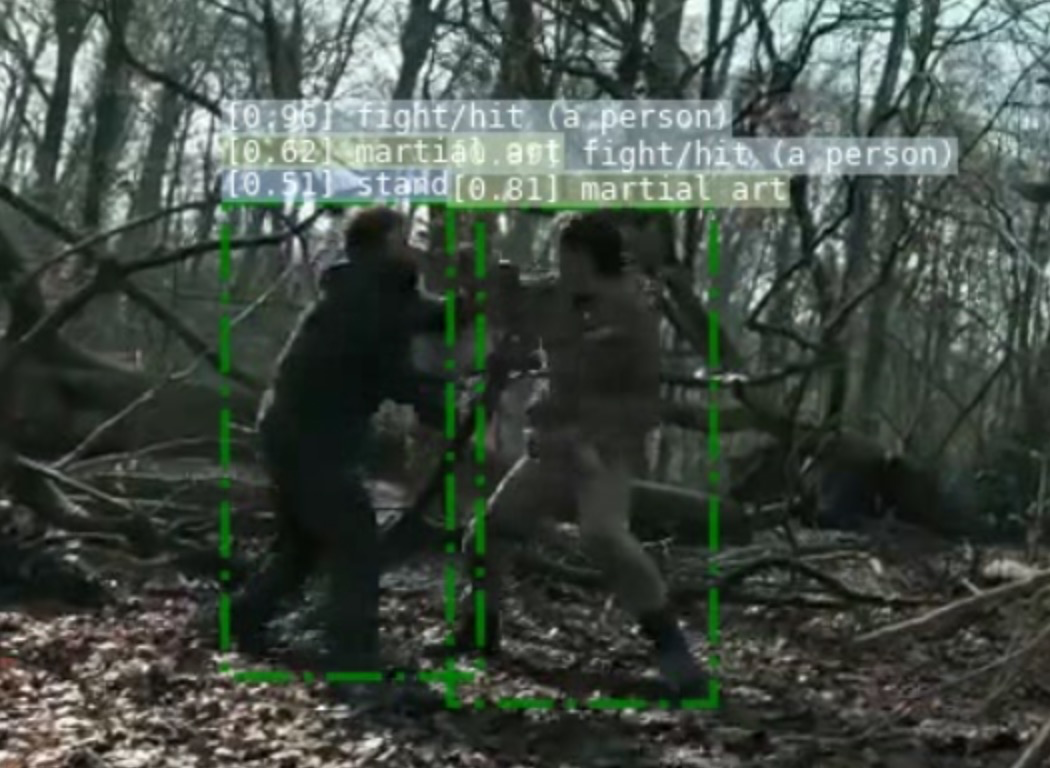

The official demo output looks like this.

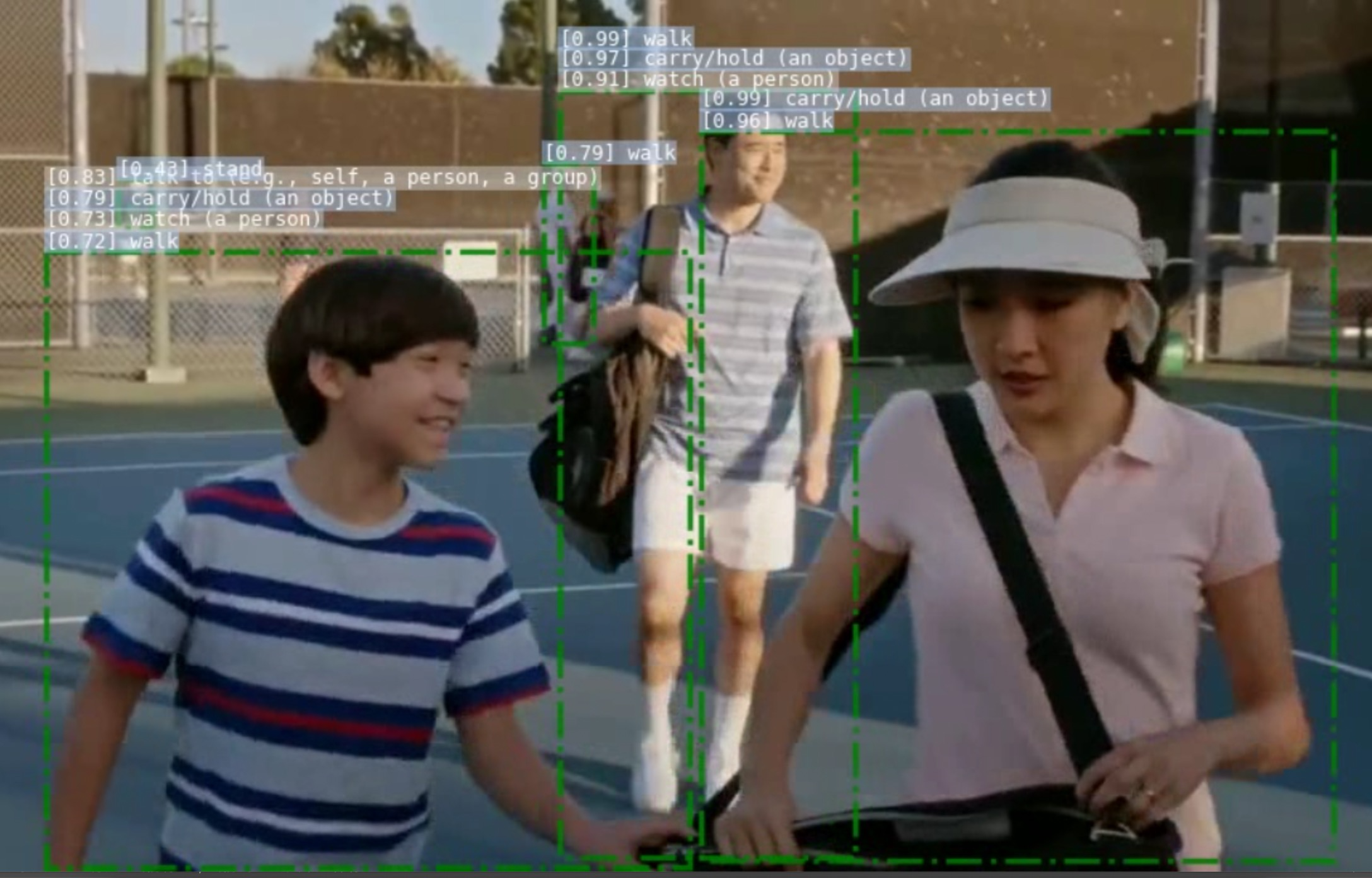

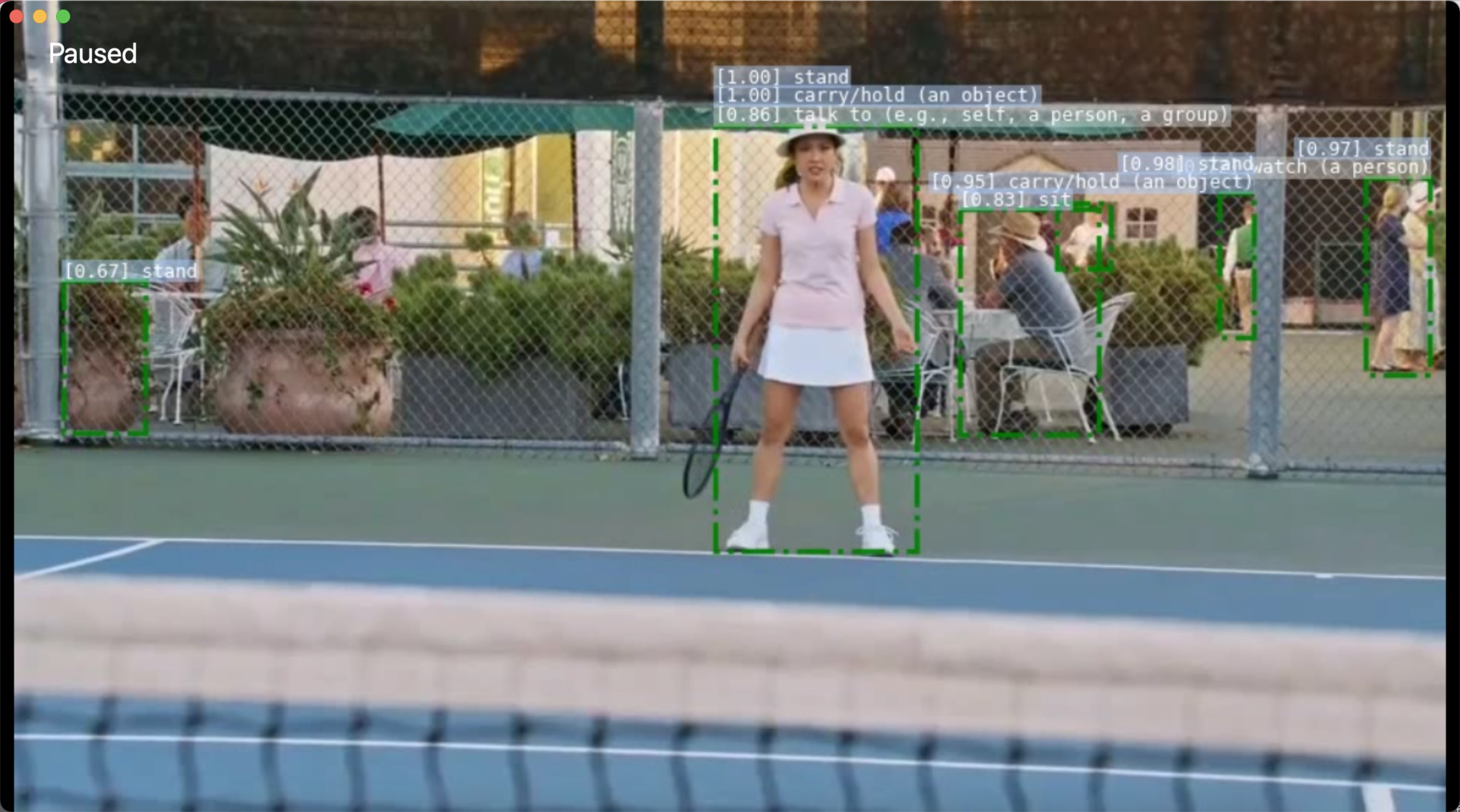

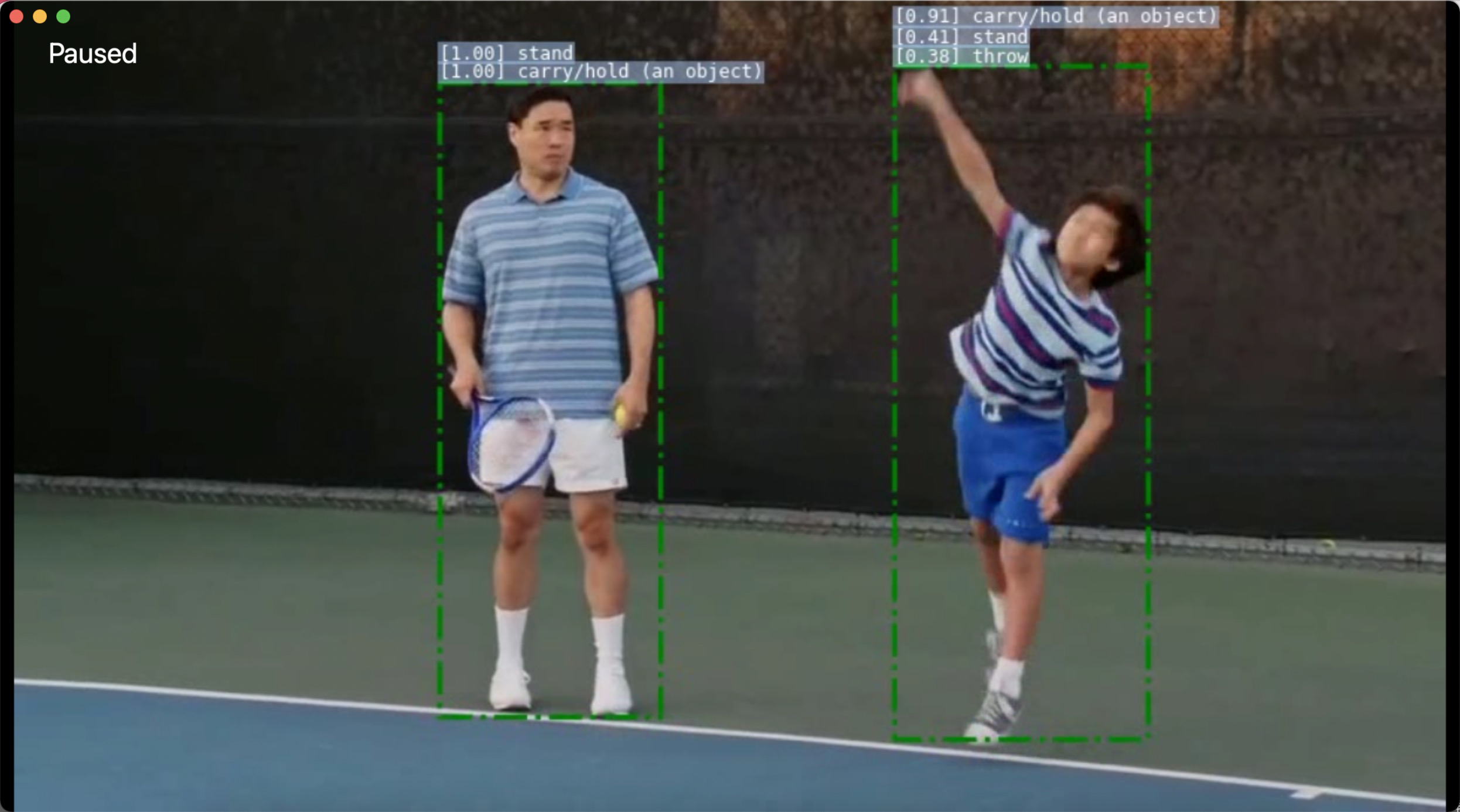

Key features: the recognized actions are atomic actions, and each person may be assigned several actions at the same time.

Installation and deployment

Deployment platform: Polar chain AI cloud

Deployment machine: Tesla V100

Deployment environment: PyTorch 1.6.0, Python 3.7, CUDA 10.2

Pay attention here!

Select PyTorch 1.6.0 as the framework version, so that Python 3.7 can be selected.

Since Detectron2 requires PyTorch >= 1.7, you will need to upgrade PyTorch later.

But be sure to choose Python 3.7!

Make sure Python is 3.7!
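The two version constraints above can be checked before installing anything else. The following is a minimal sketch, not part of the official setup; the helper names `python_is_37`, `release_tuple`, and `torch_meets_minimum` are made up for illustration, and the version parsing is deliberately simple.

```python
import re
import sys


def python_is_37(version_info=sys.version_info):
    """Return True when the interpreter is Python 3.7.x."""
    return tuple(version_info[:2]) == (3, 7)


def release_tuple(version):
    """Turn a version string such as '1.7.1+cu110' into (1, 7, 1)."""
    public = version.split("+", 1)[0]  # drop the local build tag, e.g. '+cu110'
    return tuple(int(part) for part in re.findall(r"\d+", public))


def torch_meets_minimum(version, minimum=(1, 7)):
    """Return True when the given PyTorch version string is >= minimum."""
    return release_tuple(version) >= minimum


if __name__ == "__main__":
    print("Python 3.7:", python_is_37())
    try:
        import torch  # only importable once PyTorch is installed
        print("PyTorch >= 1.7:", torch_meets_minimum(torch.__version__))
    except ImportError:
        print("PyTorch is not installed in this environment")
```

Running it right after the machine is created should report that PyTorch is older than 1.7, which is expected until the upgrade step below.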

Installation process:

pip install 'git+https://github.com/facebookresearch/fvcore'

pip install simplejson

conda install av -c conda-forge -y

pip install -U iopath

pip install psutil

pip install moviepy

pip install tensorboard

pip install pytorchvideo

pip install torch==1.7.0 torchvision==0.8.0 torchaudio==0.7.0

pip install torch==1.7.1+cu110 torchvision==0.8.2+cu110 torchaudio==0.7.2 -f https://download.pytorch.org/whl/torch_stable.html

git clone https://github.com/facebookresearch/detectron2.git

pip install -e detectron2

git clone https://github.com/facebookresearch/SlowFast.git

cd SlowFast

python setup.py build develop

After installation, fvcore, detectron2 and slowfast are successfully installed.

However, the following error is reported at the end:

error: Could not find suitable distribution for Requirement.parse('PIL')

However, the installation tutorial does not specifically mention the PIL library, and the Pillow library already shows up when you check with pip list.

(Pillow is the maintained fork of PIL.)

The error did not affect the subsequent inference test, and I was tired, so I ignored it for the time being.

After installation, use pip list to view:

fvcore 0.1.5

detectron2 0.5 /root/detectron2

sklearn 0.0

slowfast 1.0 /root/SlowFast

torch 1.7.1+cu110

torchaudio 0.7.2

torchvision 0.8.2+cu110

Testing

With the basic installation and deployment done, the next question is how to use it.

Getting Started with PySlowFast

This document provides a brief intro of launching jobs in PySlowFast for training and testing. Before launching any job, make sure you have properly installed the PySlowFast following the instruction in README.md and you have prepared the dataset following DATASET.md with the correct format.

Prepare the pkl file and upload it

I took a look at DATASET.md. The datasets it covers include Kinetics (132 GB), AVA (500 GB+), Charades, and Something-Something V2.

Downloading and processing them is laborious.

I tried preparing data following the AVA preparation method: download, crop, extract frames, and download the annotation files (that last step is easy). The original videos were more than 19 GB. The whole process kept the computer running day and night for more than two days.

[The processing and training procedure for this part of the AVA dataset will be written up later.]

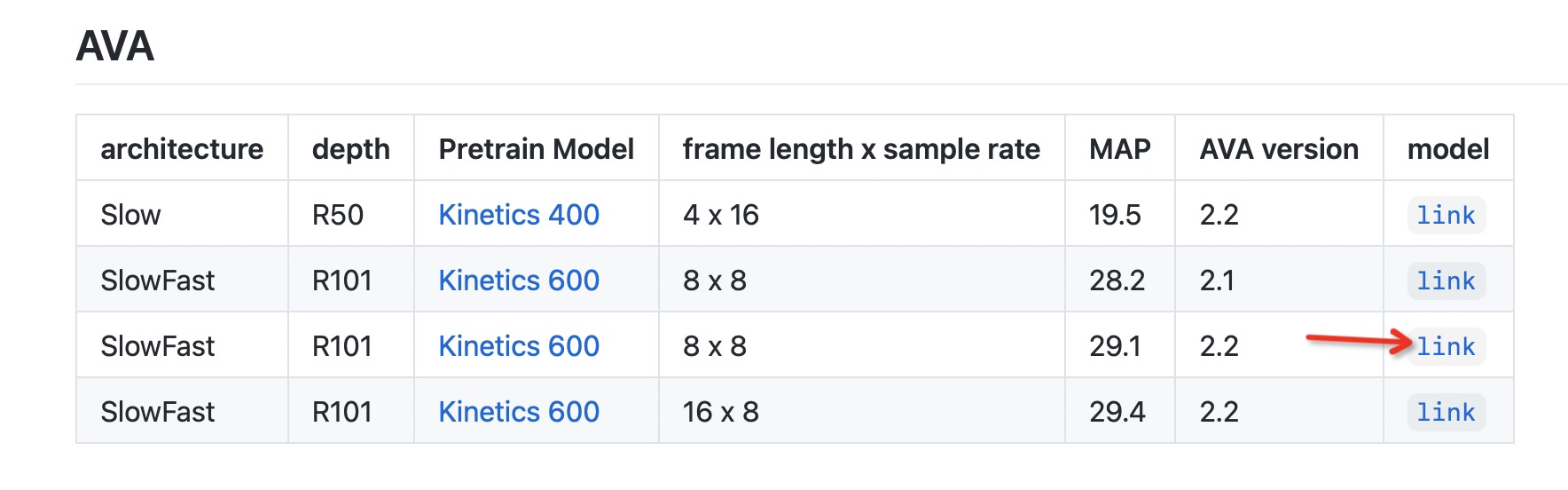

So let's use the officially provided models instead. From MODEL_ZOO.md, download the following model.

(This table may be explained later, when I go through the paper.)

The downloaded file name is: [SLOWFAST_32x2_R101_50_50.pkl]

After downloading, upload it to the cloud machine. I uploaded it to /root/SlowFast.

Upload the ava.json file

Create ava.json with the content below. It records the 80 action categories.

Upload it to /root/SlowFast/demo/AVA.

{"bend/bow (at the waist)": 0, "crawl": 1, "crouch/kneel": 2, "dance": 3, "fall down": 4, "get up": 5, "jump/leap": 6, "lie/sleep": 7, "martial art": 8, "run/jog": 9, "sit": 10, "stand": 11, "swim": 12, "walk": 13, "answer phone": 14, "brush teeth": 15, "carry/hold (an object)": 16, "catch (an object)": 17, "chop": 18, "climb (e.g., a mountain)": 19, "clink glass": 20, "close (e.g., a door, a box)": 21, "cook": 22, "cut": 23, "dig": 24, "dress/put on clothing": 25, "drink": 26, "drive (e.g., a car, a truck)": 27, "eat": 28, "enter": 29, "exit": 30, "extract": 31, "fishing": 32, "hit (an object)": 33, "kick (an object)": 34, "lift/pick up": 35, "listen (e.g., to music)": 36, "open (e.g., a window, a car door)": 37, "paint": 38, "play board game": 39, "play musical instrument": 40, "play with pets": 41, "point to (an object)": 42, "press": 43, "pull (an object)": 44, "push (an object)": 45, "put down": 46, "read": 47, "ride (e.g., a bike, a car, a horse)": 48, "row boat": 49, "sail boat": 50, "shoot": 51, "shovel": 52, "smoke": 53, "stir": 54, "take a photo": 55, "text on/look at a cellphone": 56, "throw": 57, "touch (an object)": 58, "turn (e.g., a screwdriver)": 59, "watch (e.g., TV)": 60, "work on a computer": 61, "write": 62, "fight/hit (a person)": 63, "give/serve (an object) to (a person)": 64, "grab (a person)": 65, "hand clap": 66, "hand shake": 67, "hand wave": 68, "hug (a person)": 69, "kick (a person)": 70, "kiss (a person)": 71, "lift (a person)": 72, "listen to (a person)": 73, "play with kids": 74, "push (another person)": 75, "sing to (e.g., self, a person, a group)": 76, "take (an object) from (a person)": 77, "talk to (e.g., self, a person, a group)": 78, "watch (a person)": 79}
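Since this label file is typed or pasted by hand, it can be worth checking it before running the demo. The following is a minimal sketch under my own assumptions: the helpers `validate_label_map` and `load_label_map` are hypothetical names, and the only rule enforced is that the ids are exactly 0 to 79 with no gaps or repeats.

```python
import json


def validate_label_map(label_map, expected_count):
    """Check that class ids are exactly 0..expected_count-1, each used once."""
    ids = sorted(label_map.values())
    if ids != list(range(expected_count)):
        raise ValueError(
            f"expected ids 0..{expected_count - 1}, got {len(ids)} entries"
        )
    return True


def load_label_map(path, expected_count=80):
    """Load a label file such as ava.json and validate its ids."""
    with open(path, encoding="utf-8") as f:
        label_map = json.load(f)
    validate_label_map(label_map, expected_count)
    return label_map
```

For example, `load_label_map("/root/SlowFast/demo/AVA/ava.json")` should return the dictionary if the file is intact and raise `ValueError` if an entry was lost or duplicated while copying.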

Prepare yaml file

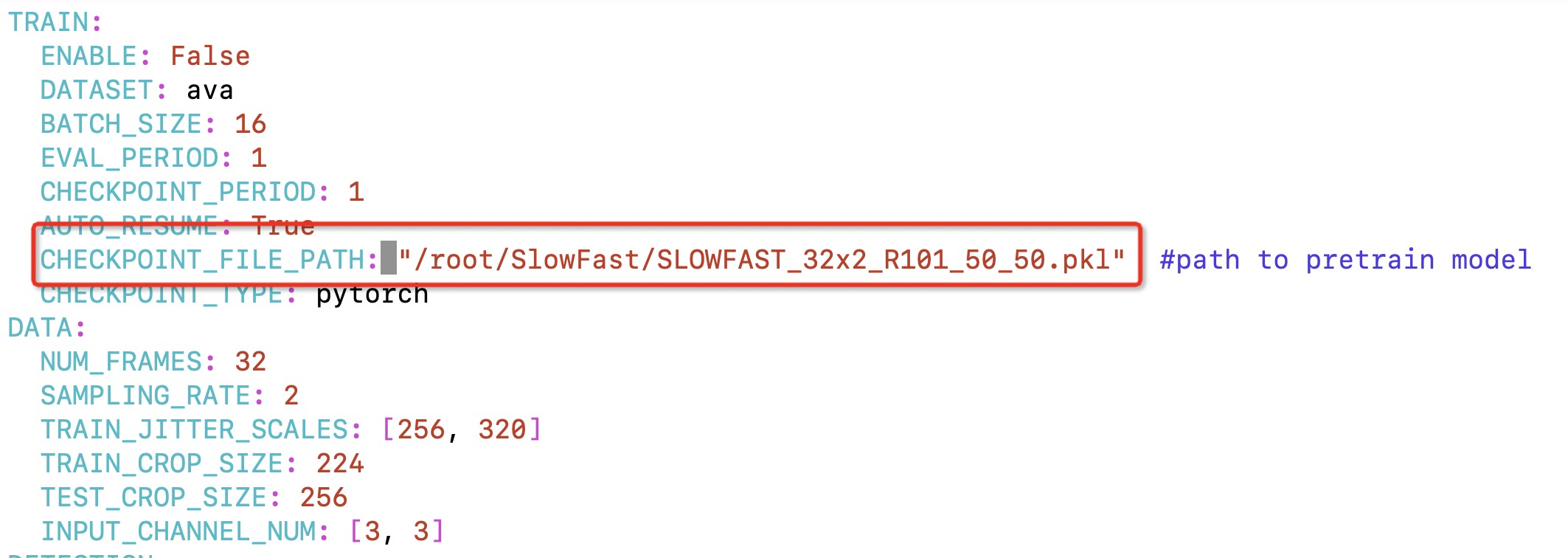

Under /root/SlowFast/demo/AVA, modify the file [SLOWFAST_32x2_R101_50_50.yaml]:

- Under TRAIN, set CHECKPOINT_FILE_PATH to the location of the pkl file that was downloaded and uploaded to the cloud in the previous step.

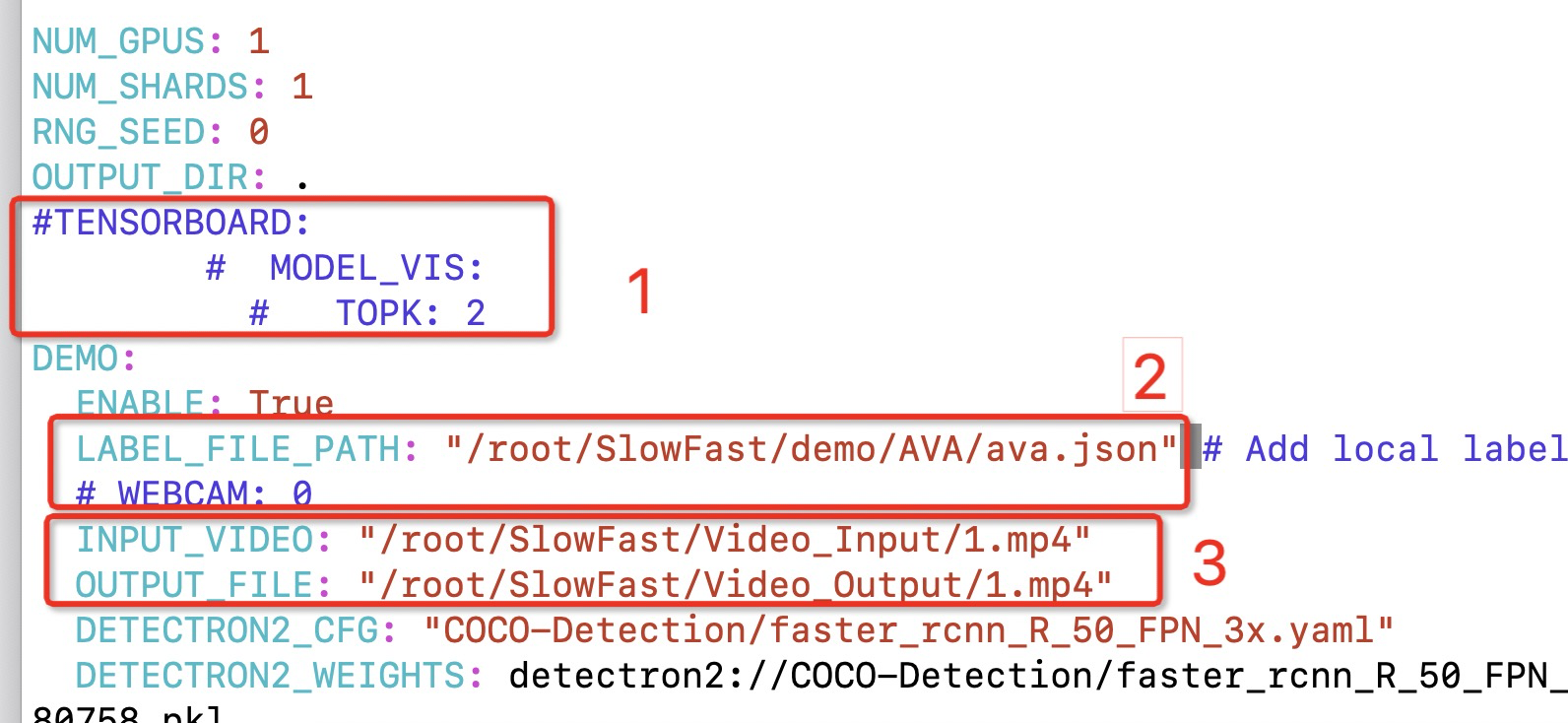

- Comment out the three lines TENSORBOARD, MODEL_VIS, and TOPK.

- Under DEMO, comment out WEBCAM and add INPUT_VIDEO and OUTPUT_FILE. The paths and file names assigned to them can be chosen freely.

- Under DEMO, set LABEL_FILE_PATH to the location of ava.json.
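Most failures at this stage come from a path in the yaml file that does not exist on the machine. The following is a minimal sketch of a pre-flight check, not something SlowFast provides: the function `missing_demo_paths` is a hypothetical helper, the dictionary simply mirrors the four config keys edited above, and the output file name is an example value.

```python
from pathlib import Path


def missing_demo_paths(settings):
    """Return a list of problems with the paths used by the demo config."""
    problems = []
    # These three must already exist as files before the demo starts.
    for key in ("CHECKPOINT_FILE_PATH", "LABEL_FILE_PATH", "INPUT_VIDEO"):
        if not Path(settings[key]).is_file():
            problems.append(f"{key}: file not found: {settings[key]}")
    # The output file is created by the demo, so only its folder must exist.
    out_dir = Path(settings["OUTPUT_FILE"]).parent
    if not out_dir.is_dir():
        problems.append(f"OUTPUT_FILE: directory not found: {out_dir}")
    return problems


if __name__ == "__main__":
    # Example values only; mirror whatever you wrote in the yaml file.
    settings = {
        "CHECKPOINT_FILE_PATH": "/root/SlowFast/SLOWFAST_32x2_R101_50_50.pkl",
        "LABEL_FILE_PATH": "/root/SlowFast/demo/AVA/ava.json",
        "INPUT_VIDEO": "/root/SlowFast/Video_Input/1.mp4",
        "OUTPUT_FILE": "/root/SlowFast/Video_Output/1.mp4",  # hypothetical name
    }
    for problem in missing_demo_paths(settings):
        print(problem)
```

An empty result means all four paths look usable. It says nothing about whether the yaml syntax itself is valid.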

Prepare material

Prepare mp4 test material files and upload them to the corresponding folder.

Under /root/SlowFast I created two folders, Video_Input and Video_Output.

The Video_Input folder contains a test video named 1.mp4.

Run

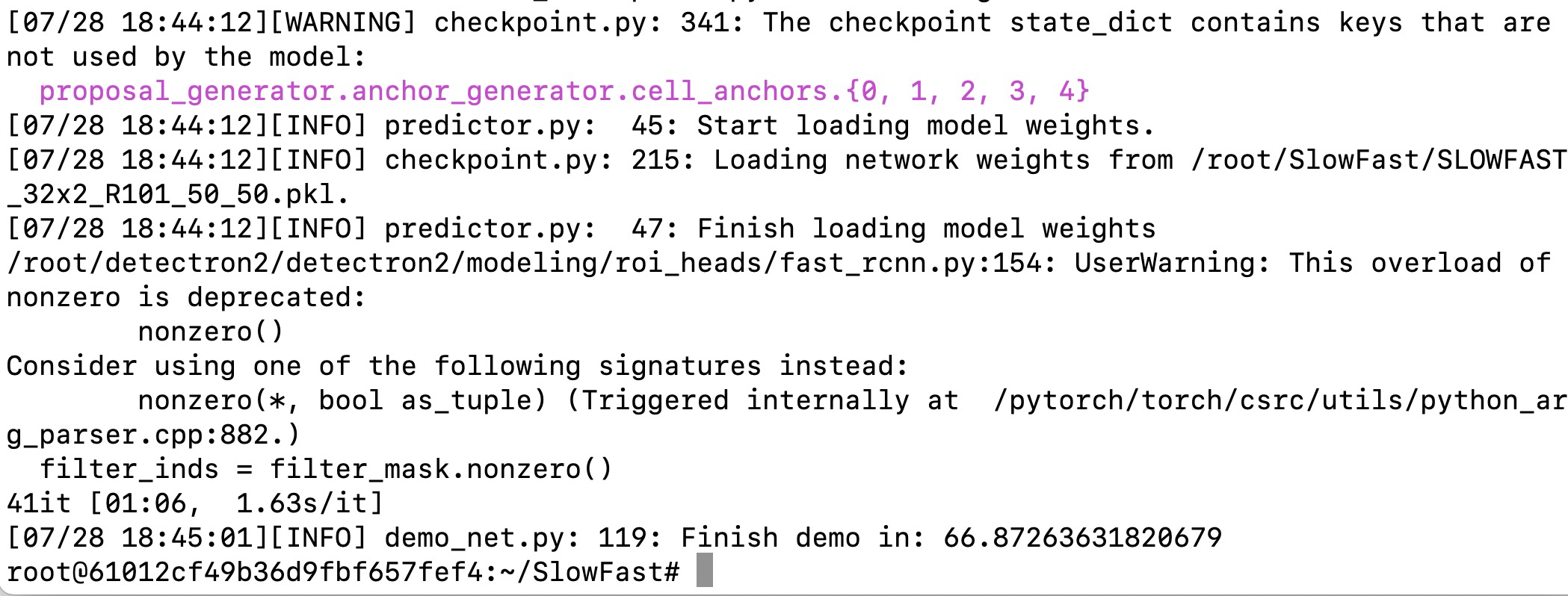

From /root/SlowFast, run:

python tools/run_net.py --cfg demo/AVA/SLOWFAST_32x2_R101_50_50.yaml

Results

Three video clips were run this time.

The first video clip comes from the American drama "newcomer".

The second and third clips are from Jackie Chan films, downloaded from Bilibili. Although one of them is a little blurry, it is quite moving that the model can recognize martial art.

Postscript: the unimportant, painful process

At first, I set up the following environment:

PyTorch 1.7.0, Python 3.8.8, CUDA 11.0

The steps were the same as above (except that the PyTorch upgrade command is not needed).

At the end, when installing slowfast, the PIL problem also occurs.

But that is not the point.

When running inference, the following error appears:

Traceback (most recent call last):

File "tools/run_net.py", line 6, in <module>

from slowfast.utils.misc import launch_job

File "/root/SlowFast/slowfast/utils/misc.py", line 21, in <module>

from slowfast.models.batchnorm_helper import SubBatchNorm3d

File "/root/SlowFast/slowfast/models/__init__.py", line 6, in <module>

from .video_model_builder import ResNet, SlowFast # noqa

File "/root/SlowFast/slowfast/models/video_model_builder.py", line 18, in <module>

from . import head_helper, resnet_helper, stem_helper

File "/root/SlowFast/slowfast/models/head_helper.py", line 8, in <module>

from detectron2.layers import ROIAlign

ImportError: cannot import name 'ROIAlign' from 'detectron2.layers' (unknown location)
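This failing import can be reproduced without launching the whole demo. The following is a minimal sketch; `can_import_name` is a hypothetical helper, and it only approximates what `from module import name` does by importing the module and looking for the attribute.

```python
import importlib


def can_import_name(module_name, attribute):
    """Return True if `from module_name import attribute` would likely succeed."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        # The module itself is missing or failed to import.
        return False
    return hasattr(module, attribute)


if __name__ == "__main__":
    print("ROIAlign importable:", can_import_name("detectron2.layers", "ROIAlign"))
```

If this prints `False`, the demo will fail with the traceback above, so the environment needs fixing before anything else.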

Thanks to this blog post:

https://blog.csdn.net/WhiffeYF/article/details/113527759

It made me go back to Python 3.7. Even though you then have to upgrade PyTorch to 1.7 when installing detectron2, this stubborn error no longer occurs.

To upgrade to PyTorch 1.7, use this command:

pip install torch==1.7.1+cu110 torchvision==0.8.2+cu110 torchaudio==0.7.2 -f https://download.pytorch.org/whl/torch_stable.html

If you only upgrade PyTorch to 1.7 without also moving from CUDA 10.2 to CUDA 11, the error Could not run 'torchvision::nms' appears.

Fortunately, I had run into this before and solved it.

See this blog post:

https://blog.csdn.net/weixin_41793473/article/details/118574493?spm=1001.2014.3001.5501
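One quick thing to compare is the build tag that pip list shows after the version number, such as the `+cu110` in `1.7.1+cu110`. The following is a minimal sketch; `cuda_tag` and `same_cuda_build` are hypothetical helpers, and the idea that a torch and torchvision build mismatch is behind the error above is my assumption, not something the linked post or the log confirms.

```python
def cuda_tag(version):
    """Return the local build tag of a version string, e.g. 'cu110', or None."""
    _, sep, local = version.partition("+")
    return local if sep else None


def same_cuda_build(torch_version, torchvision_version):
    """Return True when both version strings carry the same build tag."""
    return cuda_tag(torch_version) == cuda_tag(torchvision_version)
```

With the versions listed earlier, `same_cuda_build("1.7.1+cu110", "0.8.2+cu110")` is true. Packages installed without a tag in their version string compare as equal to each other here, so this is only a rough first check.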

The full log for the PIL problem mentioned earlier:

Installed /root/SlowFast

Processing dependencies for slowfast==1.0

Searching for sklearn

Reading https://pypi.org/simple/sklearn/

Downloading https://files.pythonhosted.org/packages/1e/7a/dbb3be0ce9bd5c8b7e3d87328e79063f8b263b2b1bfa4774cb1147bfcd3f/sklearn-0.0.tar.gz#sha256=e23001573aa194b834122d2b9562459bf5ae494a2d59ca6b8aa22c85a44c0e31

Best match: sklearn 0.0

Processing sklearn-0.0.tar.gz

Writing /tmp/easy_install-bhbw6vsc/sklearn-0.0/setup.cfg

Running sklearn-0.0/setup.py -q bdist_egg --dist-dir /tmp/easy_install-bhbw6vsc/sklearn-0.0/egg-dist-tmp-2gs9d87i

file wheel-platform-tag-is-broken-on-empty-wheels-see-issue-141.py (for module wheel-platform-tag-is-broken-on-empty-wheels-see-issue-141) not found

file wheel-platform-tag-is-broken-on-empty-wheels-see-issue-141.py (for module wheel-platform-tag-is-broken-on-empty-wheels-see-issue-141) not found

file wheel-platform-tag-is-broken-on-empty-wheels-see-issue-141.py (for module wheel-platform-tag-is-broken-on-empty-wheels-see-issue-141) not found

warning: install_lib: 'build/lib' does not exist -- no Python modules to install

creating /opt/conda/lib/python3.8/site-packages/sklearn-0.0-py3.8.egg

Extracting sklearn-0.0-py3.8.egg to /opt/conda/lib/python3.8/site-packages

Adding sklearn 0.0 to easy-install.pth file

Installed /opt/conda/lib/python3.8/site-packages/sklearn-0.0-py3.8.egg

Searching for PIL

Reading https://pypi.org/simple/PIL/

No local packages or working download links found for PIL

error: Could not find suitable distribution for Requirement.parse('PIL')
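The log suggests that the installer is looking on PyPI for a distribution literally named PIL. Pillow is published under the name Pillow but is imported as `PIL`, which would explain why pip list shows Pillow while this requirement still fails. The following is a minimal sketch to confirm that the import itself works; `module_available` is a hypothetical helper name.

```python
import importlib.util


def module_available(import_name):
    """Return True if a top-level module with this import name can be found."""
    return importlib.util.find_spec(import_name) is not None


if __name__ == "__main__":
    # Pillow is installed under the name "Pillow" but imported as "PIL".
    print("import PIL works:", module_available("PIL"))
```

If this prints `True`, code that does `import PIL` should run despite the installer error, which matches the observation that inference was not affected.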