Starting with Firefox 18, if an HTTPS page contains unencrypted HTTP content, the browser logs a warning to the console and records the Mixed Active Content request. Starting with Firefox 23, the browser by default blocks HTTP requests in HTTPS pages that may affect the page's security (that is, it blocks Mixed Active Content). This sacrifices compatibility with some websites, but it greatly improves security.

Loading Mixed Content amounts to opening a partially encrypted connection, in which the unencrypted part may be attacked by a man in the middle. Different types of Mixed Content do different degrees of harm. Mixed Passive Content may let the attacker obtain the user's device information or show the user incorrect images, audio and other content. Mixed Active Content may lead to the theft of the user's sensitive data, such as account names and passwords.

Why doesn't the Mixed Content Blocker block all HTTP requests?

Mixed Content can be divided into two categories:

Mixed Passive Content

Mixed Active Content

Mixed Passive Content (a.k.a. Mixed Display Content)

Mixed Passive Content refers to HTTP content in an HTTPS page that has little impact on security, such as images, audio and video. Even if an attacker in the middle tampers with this content, the impact is limited: the attacker learns the user's browser information (through the User-Agent in the HTTP headers) and the user sees an incorrect picture. The tampered content cannot modify the DOM tree or execute. In addition, Mixed Passive Content is ubiquitous on the Web. Therefore, Firefox does not block Mixed Passive Content by default.

Mixed Active Content (a.k.a. Mixed Script Content)

Mixed Active Content refers to HTTP content in an HTTPS page that can modify the DOM tree, such as JavaScript, CSS, XMLHttpRequest and iframes. If an attacker in the middle modifies this content, the security of the original HTTPS content may be compromised and sensitive user data may be stolen. Therefore, Firefox blocks Mixed Active Content by default.

Going deeper

Why is a frame treated as Mixed Active Content?

There are several reasons why a frame cannot be classified as Mixed Passive Content:

A frame can navigate the trusted outer HTTPS page to a fake page that maliciously steals information.

If an HTTPS page embeds an HTTP frame and that frame contains a form for entering user information, the information is transmitted over HTTP and can be stolen by an attacker in the middle. The user does not know this and believes everything is protected by HTTPS.

How to determine whether Mixed Content is Active or Passive?

Whether the Mixed Content will affect the DOM structure of the page. (Yes -> Active, No -> Passive)
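The rule above can be sketched as a small lookup. This is only an illustration of the classification described in this article, not Firefox's actual implementation; the class name, the type labels and the choice to treat unknown types as active are all assumptions made for the example.

```java
import java.util.Locale;

/** Illustrative sketch of the Active/Passive rule; not the browser's real logic. */
final class MixedContentClassifier {

    enum Kind { ACTIVE, PASSIVE }

    /** Classifies a sub-resource by a type label such as "image" or "script". */
    static Kind classify(String resourceType) {
        switch (resourceType.toLowerCase(Locale.ROOT)) {
            case "image":
            case "audio":
            case "video":
                // Cannot modify the DOM or execute.
                return Kind.PASSIVE;
            default:
                // script, stylesheet, xmlhttprequest, iframe, ...
                // Assumption: unknown types are treated as active to stay conservative.
                return Kind.ACTIVE;
        }
    }

    /** True when an HTTP sub-resource on an HTTPS page is active, i.e. blocked by default. */
    static boolean isBlockedByDefault(String pageScheme, String resourceScheme, String resourceType) {
        boolean mixed = "https".equalsIgnoreCase(pageScheme) && "http".equalsIgnoreCase(resourceScheme);
        return mixed && classify(resourceType) == Kind.ACTIVE;
    }

    public static void main(String[] args) {
        System.out.println(classify("image"));
        System.out.println(isBlockedByDefault("https", "http", "script"));
    }
}
```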

Solution:

If the page contains HTTP content such as JavaScript, CSS, XMLHttpRequest or iframes, there are several options:

Use relative links.

Change the http link to https (the target must support HTTPS connections).

Let the browser decide automatically whether to use http or https by writing a protocol-relative URL, such as <img src="//www.sslzhengshu.com/statistics/images/logo1.jpg" />
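If links are generated on the server side, the second and third options could be applied with a small helper like the one below. This is a minimal sketch: the class and method names are made up for illustration, and it only handles absolute URLs that start with http:// or https://.

```java
/** Illustrative helpers for rewriting absolute resource URLs; names are hypothetical. */
final class SchemeRewriter {

    /** Upgrades an absolute http:// URL to https://; any other URL is returned unchanged. */
    static String toHttps(String url) {
        return url.regionMatches(true, 0, "http://", 0, 7) ? "https://" + url.substring(7) : url;
    }

    /** Drops the scheme so the browser reuses the scheme of the embedding page. */
    static String toProtocolRelative(String url) {
        if (url.regionMatches(true, 0, "http://", 0, 7)) {
            return url.substring(5); // strip "http:"
        }
        if (url.regionMatches(true, 0, "https://", 0, 8)) {
            return url.substring(6); // strip "https:"
        }
        return url;
    }

    public static void main(String[] args) {
        String logo = "http://www.sslzhengshu.com/statistics/images/logo1.jpg";
        System.out.println(toHttps(logo));
        System.out.println(toProtocolRelative(logo));
    }
}
```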

Scheme II:

Recently I carried out a site-wide HTTPS migration of the company's website. This article records a problem I ran into along the way: the browser blocked a front-end request because of a 302 redirect from the back end. I think the problem is fairly common, so I wrote it up in the hope that it helps others who run into something similar.

Reproducing the problem

After a period of research, we finally switched the company's environment to https access and, full of confidence, opened the home page of the company's test environment, https://test.xxx.com . Everything was normal. Just when I thought the migration was nearly done, the problem appeared.

The home page loads normally, but after entering the user name and password and logging in, the page does not respond. Opening the Firefox console shows the following error.

(Figure 1)

Open the network panel to view the following:

(Figure 2)

The front end sends an Ajax request over https, and the back end returns status code 302 with a Location that starts with http://. This results in mixed content. The 302 redirect, which Ajax would normally follow automatically, is therefore blocked by the browser.

Problem analysis
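The condition that triggers the block can be written down in a few lines. The sketch below uses java.net.URI to compare the scheme of the request with the scheme of the redirect target; the class name and the paths /doLogin and /home are hypothetical and only serve the example.

```java
import java.net.URI;

/** Illustrative check for an https request that is redirected to an http target. */
final class RedirectCheck {

    /** True when the request is https but the Location header resolves to an http URL. */
    static boolean isDowngrade(String requestUrl, String locationHeader) {
        URI request = URI.create(requestUrl);
        // Resolve against the request URL so a relative Location is handled as well.
        URI target = request.resolve(locationHeader);
        return "https".equalsIgnoreCase(request.getScheme())
                && "http".equalsIgnoreCase(target.getScheme());
    }

    public static void main(String[] args) {
        System.out.println(isDowngrade("https://test.xxx.com/doLogin", "http://test.xxx.com/home"));
        System.out.println(isDowngrade("https://test.xxx.com/doLogin", "/home"));
    }
}
```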

1. What is mixed content

When users visit a page over HTTPS, the connection between them and the web server is encrypted with SSL/TLS, which protects it from sniffing and man-in-the-middle attacks.

If the HTTPS page includes content fetched over an ordinary plaintext HTTP connection, the connection is only partially encrypted: the unencrypted content can be read by sniffers and modified by man-in-the-middle attackers, so the connection is no longer protected. A web page in this state is called a mixed content page.

Details can be found at https://developer.mozilla.org...

2. Why does the Location change from https to http after the back-end redirect?

Our back end is written in Java and deployed on Tomcat. In servlets, the HttpServletResponse.sendRedirect(String url) method is generally used to redirect (302). So is this method the problem? The answer is no. The url parameter of sendRedirect(String url) accepts either an absolute or a relative address. We usually pass a relative address, which the method converts into an absolute address, and that absolute address is what the browser receives in the Location header. sendRedirect() chooses the scheme of the resulting absolute address from the scheme of the current request: if the request URL starts with https, the redirect URL also starts with https, and the same holds for http. In this example we pass a relative address, so the scheme of the absolute redirect URL is determined by the request URL. In other words, the request that the back-end program receives as HttpServletRequest must have used the http scheme.

We can see in Figure 2 that the request URL starts with https. Why does it become an http request by the time it reaches the back-end program? Let's move on.
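The effect can be modeled outside the container. The sketch below is not Tomcat's implementation; it uses java.net.URI to show how a relative redirect target inherits the scheme of the request URL as the servlet sees it. The class name and the path /user/doLogin are hypothetical.

```java
import java.net.URI;

/** Models how a relative redirect target picks up the scheme of the request URL. */
final class RedirectResolution {

    /** Resolves a relative location against the request URL seen by the servlet. */
    static String toAbsolute(String requestUrlSeenByServlet, String location) {
        return URI.create(requestUrlSeenByServlet).resolve(location).toString();
    }

    public static void main(String[] args) {
        // The servlet sees http, so the Location sent to the browser starts with http.
        System.out.println(toAbsolute("http://test.xxx.com/user/doLogin", "/login"));
        // Had the servlet seen https, the Location would start with https.
        System.out.println(toAbsolute("https://test.xxx.com/user/doLogin", "/login"));
    }
}
```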

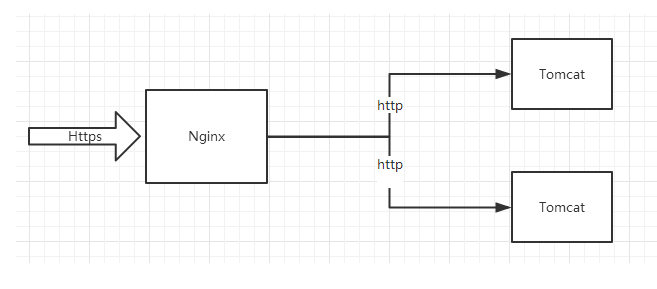

(Figure 3)

To make this easier to explain, I drew an architecture diagram of the HTTPS configuration. We use Nginx as the reverse proxy and Tomcat as the upstream server. HTTPS is configured at the Nginx layer, so Nginx handles the HTTPS requests. When Nginx forwards a request to the upstream server in this setup, however, it sends it over plain http. That is why the request our back-end code receives uses the http protocol. The truth is finally out.

Solution

The problem is finally clear. The next step is to solve it.

1. Solution 1.0

Because the request becomes an http request after passing through the Nginx proxy, the relative address passed to sendRedirect is turned into an absolute http address derived from that request. So we stop using relative addresses and use absolute addresses instead. That way the redirect target is entirely up to us, and we can redirect wherever we want. Mom doesn't have to worry about us anymore.

First version:

/**
 * Re-implements sendRedirect so that relative targets become absolute https URLs.
 *
 * @param request  the current request
 * @param response the current response
 * @param url      the redirect target, absolute or relative
 * @throws IOException if the redirect cannot be sent
 */
public static void sendRedirect(HttpServletRequest request, HttpServletResponse response, String url) throws IOException {
    if (url.startsWith("http://") || url.startsWith("https://")) {
        // Absolute URL: redirect directly.
        response.sendRedirect(url);
        return;
    }
    // Collect request information for building the absolute URL.
    String serverName = request.getServerName();
    int port = request.getServerPort();
    String contextPath = request.getContextPath();
    String servletPath = request.getServletPath();
    String queryString = request.getQueryString();
    // Build the absolute URL.
    StringBuilder absoluteUrl = new StringBuilder();
    // Force https.
    absoluteUrl.append("https").append("://").append(serverName);
    // 80 and 443 are the default http and https ports and are omitted.
    if (port != 80 && port != 443) {
        absoluteUrl.append(":").append(port);
    }
    if (contextPath != null) {
        absoluteUrl.append(contextPath);
    }
    if (servletPath != null) {
        absoluteUrl.append(servletPath);
    }
    // Append the relative address.
    absoluteUrl.append(url);
    if (queryString != null) {
        // getQueryString() returns the value without the leading "?", so add it back.
        absoluteUrl.append("?").append(queryString);
    }
    // Redirect to the absolute URL.
    response.sendRedirect(absoluteUrl.toString());
}

We now have our own sendRedirect() method, but it has a small flaw: it converts every relative address into an absolute address starting with https. That does not suit websites that support both https and http. So we need an agreement with the front end: when it sends a request such as an Ajax call, it adds a custom request header that tells us whether the page was accessed over https or http. The back-end code checks that header and decides how to behave.

/**
 * Re-implements sendRedirect, choosing the scheme from a custom request header.
 *
 * @param request  the current request
 * @param response the current response
 * @param url      the redirect target, absolute or relative
 * @throws IOException if the redirect cannot be sent
 */
public static void sendRedirect(HttpServletRequest request, HttpServletResponse response, String url) throws IOException {
    if (url.startsWith("http://") || url.startsWith("https://")) {
        // Absolute URL: redirect directly.
        response.sendRedirect(url);
        return;
    }
    // Assume the front end sends a request header named http_https_scheme whose
    // value is http or https. If the header is missing, https is assumed.
    if ("http".equals(request.getHeader("http_https_scheme"))) {
        // http request: keep the default behavior.
        response.sendRedirect(url);
        return;
    }
    // Collect request information for building the absolute URL.
    String serverName = request.getServerName();
    int port = request.getServerPort();
    String contextPath = request.getContextPath();
    String servletPath = request.getServletPath();
    String queryString = request.getQueryString();
    // Build the absolute URL.
    StringBuilder absoluteUrl = new StringBuilder();
    // Force https.
    absoluteUrl.append("https").append("://").append(serverName);
    // 80 and 443 are the default http and https ports and are omitted.
    if (port != 80 && port != 443) {
        absoluteUrl.append(":").append(port);
    }
    if (contextPath != null) {
        absoluteUrl.append(contextPath);
    }
    if (servletPath != null) {
        absoluteUrl.append(servletPath);
    }
    // Append the relative address.
    absoluteUrl.append(url);
    if (queryString != null) {
        // getQueryString() returns the value without the leading "?", so add it back.
        absoluteUrl.append("?").append(queryString);
    }
    // Redirect to the absolute URL.
    response.sendRedirect(absoluteUrl.toString());
}

The above is the modified code, with the request header check added. In this way, our method supports sites that serve both http and https.
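To see what the https branch produces, the string assembly can be pulled out into a plain function and run without a servlet container. This is only a sketch for experimenting: the class name and sample values are made up, and it assumes a "?" is placed before the query string.

```java
/** Container-free sketch of the URL assembly in the https branch; names are hypothetical. */
final class AbsoluteUrlSketch {

    static String buildHttpsUrl(String serverName, int port, String contextPath,
                                String servletPath, String url, String queryString) {
        StringBuilder absoluteUrl = new StringBuilder("https://").append(serverName);
        // 80 and 443 are default ports and are left out of the URL.
        if (port != 80 && port != 443) {
            absoluteUrl.append(':').append(port);
        }
        if (contextPath != null) {
            absoluteUrl.append(contextPath);
        }
        if (servletPath != null) {
            absoluteUrl.append(servletPath);
        }
        absoluteUrl.append(url);
        if (queryString != null) {
            // Assumption: the query string is given without its leading "?".
            absoluteUrl.append('?').append(queryString);
        }
        return absoluteUrl.toString();
    }

    public static void main(String[] args) {
        System.out.println(buildHttpsUrl("test.xxx.com", 80, "", "/user", "/login", null));
        System.out.println(buildHttpsUrl("test.xxx.com", 8080, "/app", "/user", "/login", "from=home"));
    }
}
```

Note that the context path and servlet path of the current request are placed in front of the relative address, so "/login" requested from "/user" ends up as "/user/login".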

Going further: let's build on the code above. So far we have only rearranged the logic of sendRedirect in a static helper method. Can we override the sendRedirect() method of the response itself?

// javax.servlet.* is assumed here; newer containers such as Tomcat 10+ use jakarta.servlet.* instead.
import java.io.IOException;
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;
import javax.servlet.http.HttpServletResponseWrapper;

/**
 * Overrides the sendRedirect method.
 */
public class HttpsServletResponseWrapper extends HttpServletResponseWrapper {
    private final HttpServletRequest request;

    public HttpsServletResponseWrapper(HttpServletRequest request, HttpServletResponse response) {
        super(response);
        this.request = request;
    }

    @Override
    public void sendRedirect(String location) throws IOException {
        if (location.startsWith("http://") || location.startsWith("https://")) {
            // Absolute URL: redirect directly.
            super.sendRedirect(location);
            return;
        }
        // Assume the front end sends a request header named http_https_scheme
        // whose value is http or https.
        if ("http".equals(request.getHeader("http_https_scheme"))) {
            // http request: keep the default behavior.
            super.sendRedirect(location);
            return;
        }
        // Collect request information for building the absolute URL.
        String serverName = request.getServerName();
        int port = request.getServerPort();
        String contextPath = request.getContextPath();
        String servletPath = request.getServletPath();
        String queryString = request.getQueryString();
        // Build the absolute URL.
        StringBuilder absoluteUrl = new StringBuilder();
        // Force https.
        absoluteUrl.append("https").append("://").append(serverName);
        // 80 and 443 are the default http and https ports and are omitted.
        if (port != 80 && port != 443) {
            absoluteUrl.append(":").append(port);
        }
        if (contextPath != null) {
            absoluteUrl.append(contextPath);
        }
        if (servletPath != null) {
            absoluteUrl.append(servletPath);
        }
        // Append the relative address.
        absoluteUrl.append(location);
        if (queryString != null) {
            // getQueryString() returns the value without the leading "?", so add it back.
            absoluteUrl.append("?").append(queryString);
        }
        // Redirect to the absolute URL.
        super.sendRedirect(absoluteUrl.toString());
    }
}

The logic is the same as before. We simply extend the wrapper class HttpServletResponseWrapper, which follows the decorator pattern, and override sendRedirect(). Our custom HttpsServletResponseWrapper can be used like this:

public void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
    String location = "/login";
    new HttpsServletResponseWrapper(request, response).sendRedirect(location);
}

Going further still:

Now that we have the new HttpsServletResponseWrapper, wrapping HttpServletResponse by hand wherever it is needed is a little redundant. We can use the servlet filter mechanism to wrap it automatically.

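// javax.servlet.* is assumed here; newer containers such as Tomcat 10+ use jakarta.servlet.* instead.
import java.io.IOException;
import javax.servlet.Filter;
import javax.servlet.FilterChain;
import javax.servlet.FilterConfig;
import javax.servlet.ServletException;
import javax.servlet.ServletRequest;
import javax.servlet.ServletResponse;
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;

public class HttpsServletResponseWrapperFilter implements Filter {

    @Override
    public void init(FilterConfig filterConfig) throws ServletException {
        // Nothing to initialize.
    }

    @Override
    public void doFilter(ServletRequest request, ServletResponse response, FilterChain chain) throws IOException, ServletException {
        // Pass the wrapped response down the chain so every servlet gets the overridden sendRedirect().
        chain.doFilter(request, new HttpsServletResponseWrapper((HttpServletRequest) request, (HttpServletResponse) response));
    }

    @Override
    public void destroy() {
        // Nothing to clean up.
    }
}

Once the filter mapping is set in web.xml, you can use the HttpServletResponse object directly without wrapping it, because the response has already been wrapped as an HttpsServletResponseWrapper by the time the request has passed through HttpsServletResponseWrapperFilter.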

public void doGet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
    String location = "/login";
    response.sendRedirect(location);
}

So far, we have embedded the logic seamlessly into our back-end code, which looks more elegant.

2. Solution 2.0

In version 1.0, we focused on the back-end code running in the upstream service behind Nginx and reached our goal by changing that code. Now let's change our thinking and focus on Nginx. Since the scheme is lost after passing through the Nginx proxy, does Nginx provide a mechanism to preserve it? The answer is yes.

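location / {
    proxy_set_header X-Forwarded-Proto $scheme;
}

A single line of configuration passes the original scheme along. Nginx forwards it to the upstream in the X-Forwarded-Proto header, and once Tomcat is set up to honor that header (for example with RemoteIpValve and protocolHeader="X-Forwarded-Proto"), we can call HttpServletResponse.sendRedirect() directly when redirecting.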
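What the upstream does with the forwarded header can be sketched as a small decision function. This is a simplified model, not Tomcat's code: the class and method names are invented for illustration, and it assumes the header carries a single value and always comes from a trusted proxy.

```java
/** Simplified model of picking the effective scheme behind a reverse proxy. */
final class ForwardedScheme {

    /**
     * Returns the scheme the application should treat the request as using.
     *
     * @param forwardedProto  value of X-Forwarded-Proto, or null when the header is absent
     * @param connectorScheme scheme of the connection between proxy and upstream
     */
    static String effectiveScheme(String forwardedProto, String connectorScheme) {
        if (forwardedProto == null) {
            return connectorScheme;
        }
        String value = forwardedProto.trim();
        if (value.equalsIgnoreCase("https")) {
            return "https";
        }
        if (value.equalsIgnoreCase("http")) {
            return "http";
        }
        // Assumption: unexpected values are ignored and the connector scheme is kept.
        return connectorScheme;
    }

    public static void main(String[] args) {
        // Nginx received https and forwarded over http: the application should see https.
        System.out.println(effectiveScheme("https", "http"));
        // No header: fall back to the scheme of the proxied connection.
        System.out.println(effectiveScheme(null, "http"));
    }
}
```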

Summary

We solved the problem by modifying the code in solution 1.0 and by modifying the configuration in solution 2.0. In daily development there are usually many ways to solve a problem. As long as you understand its underlying cause, you can find a fix at any point along the request path. That is the end of this work record. Of course, there are other ways to solve the problem. If you have another solution, feel free to leave me a message about it.