2021SC@SDUSC

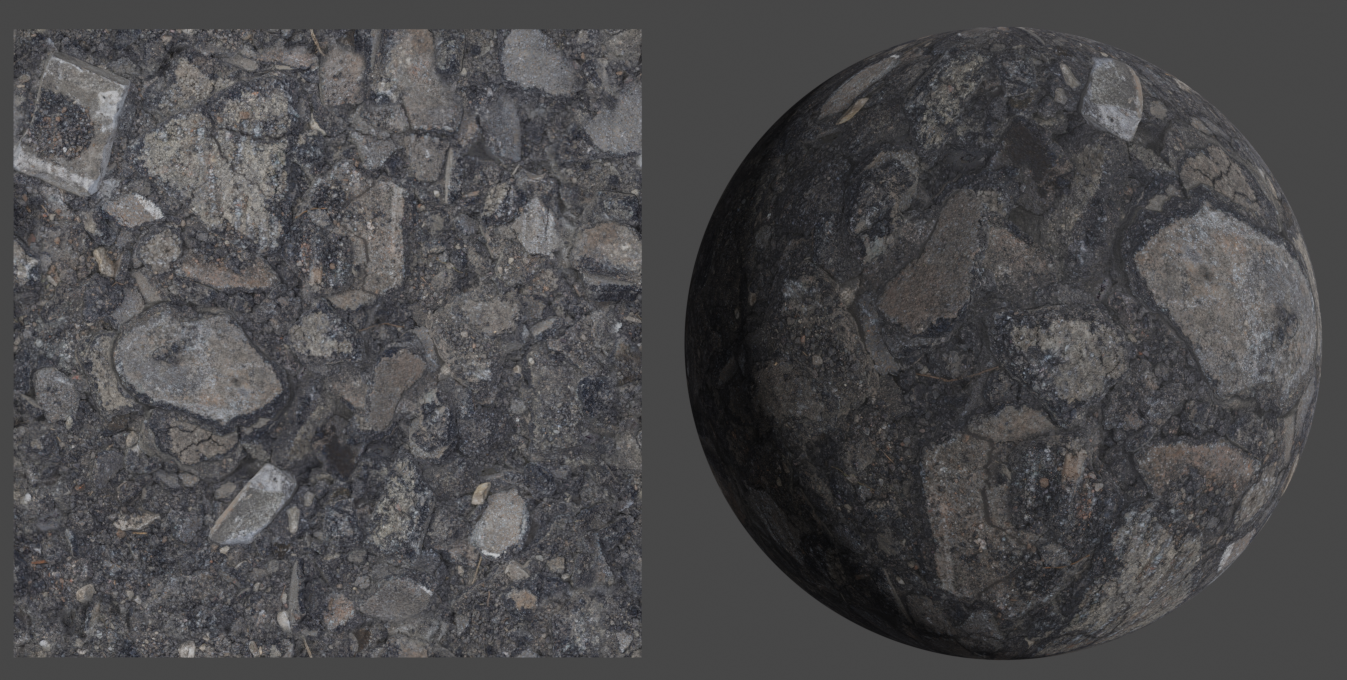

In practice, the most common use of textures is to decorate object models. They are applied to a surface like stickers, giving it a pattern. But textures are not only useful for appearance: they can also store large amounts of data, such as terrain information.

A material is a collection of data whose primary function is to provide data and lighting algorithms to the renderer. Maps are part of that data and can be classified into different types according to their use.

Materials contain maps, and maps contain textures, which are the most basic unit of input data. One meaning of the English word "map" is "mapping": a map maps a texture onto a 3D surface through UV coordinates. Besides the texture itself, a map carries other information, such as UV coordinates and input and output controls.

There are two ways to obtain texture values:

- From data (texture mapping): read color or other information from a two-dimensional image

- Procedural texture (shader): write a small program that computes color or other information as a function of position
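
The second approach can be illustrated with a minimal sketch of a procedural texture. The `Color` type and the `checkerboard` function are hypothetical names chosen for this example, and the code runs on the CPU rather than in a shader; it only shows the idea of computing a color from a position instead of reading it from an image.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical RGB color type, used only for this illustration.
struct Color {
    float r, g, b;
};

// Procedural texture: the color is computed from (u, v) instead of being
// read from an image. `squares` is the number of checker cells per unit
// of texture space.
Color checkerboard(float u, float v, int squares) {
    assert(squares > 0);
    int cellU = static_cast<int>(std::floor(u * squares));
    int cellV = static_cast<int>(std::floor(v * squares));
    // Cells whose indices sum to an odd number are dark.
    bool dark = ((cellU + cellV) % 2) != 0;
    return dark ? Color{0.0f, 0.0f, 0.0f} : Color{1.0f, 1.0f, 1.0f};
}
```

Calling `checkerboard(u, v, 4)` for every pixel of a unit square would produce a 4 x 4 checker pattern without storing any image.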

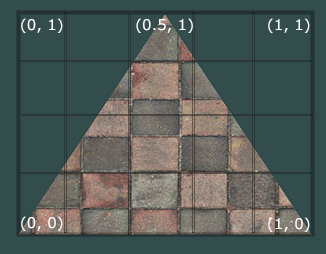

UV Coordinates

To map a texture onto a triangle, we need to specify which part of the texture each vertex of the triangle corresponds to. Each vertex P therefore stores a two-dimensional texture coordinate (u, v), which gives the vertex's position in the 2D texture plane. The coordinates of all other fragments inside the triangle are obtained by interpolating between the vertices.
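
As a rough sketch of that interpolation, the code below computes barycentric weights for a point inside a 2D triangle and blends the three vertex UVs with them. `Vec2`, `barycentric`, and `interpolateUv` are hypothetical names for this example, and the sketch ignores perspective correction, which a real rasterizer applies.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical 2D vector type for this illustration.
struct Vec2 {
    float x, y;
};

// Barycentric weights of point p with respect to triangle (a, b, c).
// Returns false when the triangle is degenerate (zero area).
bool barycentric(Vec2 a, Vec2 b, Vec2 c, Vec2 p, float w[3]) {
    float det = (b.y - c.y) * (a.x - c.x) + (c.x - b.x) * (a.y - c.y);
    if (std::fabs(det) < 1e-12f)
        return false;
    w[0] = ((b.y - c.y) * (p.x - c.x) + (c.x - b.x) * (p.y - c.y)) / det;
    w[1] = ((c.y - a.y) * (p.x - c.x) + (a.x - c.x) * (p.y - c.y)) / det;
    w[2] = 1.0f - w[0] - w[1];
    return true;
}

// Weighted sum of the three vertex UVs (affine interpolation only).
Vec2 interpolateUv(const Vec2 uv[3], const float w[3]) {
    assert(uv != nullptr && w != nullptr);
    return Vec2{uv[0].x * w[0] + uv[1].x * w[1] + uv[2].x * w[2],
                uv[0].y * w[0] + uv[1].y * w[1] + uv[2].y * w[2]};
}
```

At a vertex the result is exactly that vertex's UV; at the midpoint of an edge it is the average of the two endpoint UVs.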

Ways to obtain UV coordinates:

- Provided manually by the user

- Computed automatically by parameterization optimization

- Computed by a mathematical mapping (coordinates need not be stored for every vertex)

Goal of UV optimization: flatten the 3D object onto the two-dimensional UV plane

- For each vertex, find coordinates (u, v) that minimize distortion

- Distances in UV space should correspond to distances on the mesh, and the angles of each 3D triangle should match those of its image in the UV plane

- Cuts (seams) are usually required, which introduces discontinuities

Texture coordinates lie on the x- and y-axes and range from 0 to 1. Retrieving a texture color by its texture coordinates is called sampling. Texture coordinates start at (0, 0), the lower left corner of the texture image, and end at (1, 1), the upper right corner. We want the lower left corner of the triangle to correspond to the lower left corner of the texture, so we set the texture coordinates of the triangle's lower left vertex to (0, 0). The top vertex of the triangle corresponds to the top-middle position of the image, so we set its texture coordinates to (0.5, 1.0). Similarly, the lower right vertex is set to (1, 0). All we need to do is pass these three texture coordinates to the vertex shader, which passes them on to the fragment shader; along the way the coordinates are interpolated for each fragment.
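
To make the sampling convention concrete, here is a small CPU-side sketch of nearest-neighbour sampling with (0, 0) at the lower left corner. The `Image` struct and `sampleNearest` are hypothetical; the sketch assumes a single-channel image whose rows are stored top to bottom, which is why the row index is flipped.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical single-channel image stored row by row, with row 0 at the
// top (an assumption; many image decoders use this layout).
struct Image {
    int width;
    int height;
    std::vector<std::uint8_t> pixels;  // width * height values
};

// Nearest-neighbour sampling with (0, 0) at the lower left corner and
// (1, 1) at the upper right corner of the picture.
std::uint8_t sampleNearest(const Image &image, float u, float v) {
    assert(image.width > 0 && image.height > 0);
    assert(image.pixels.size() ==
           static_cast<std::size_t>(image.width) * image.height);
    // Keep the coordinates inside the valid range.
    u = std::min(std::max(u, 0.0f), 1.0f);
    v = std::min(std::max(v, 0.0f), 1.0f);
    int x = std::min(static_cast<int>(u * image.width), image.width - 1);
    int yFromBottom =
        std::min(static_cast<int>(v * image.height), image.height - 1);
    // v grows upwards, but rows are stored top to bottom, so flip the row.
    int row = image.height - 1 - yFromBottom;
    return image.pixels[static_cast<std::size_t>(row) * image.width + x];
}
```

With a 2 x 2 image, sampling at (0, 0) returns the first value of the last stored row, and sampling at (1, 1) returns the last value of the first stored row.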

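As a simple example, the vertex positions and indices of a rectangle made of two triangles can be expressed as follows; texture coordinates are added to each vertex afterwards: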

float vertices[] = {

0.5f, 0.5f, 0.0f, // Upper Right Corner

0.5f, -0.5f, 0.0f, // Lower Right Corner

-0.5f, -0.5f, 0.0f, // Lower left corner

-0.5f, 0.5f, 0.0f // Top left corner

};

unsigned int indices[] = {

// Index starts at 0

0, 1, 3, // First triangle

1, 2, 3 // Second triangle

};

Using textures

Steps for using textures:

- Specify Texture

- Read or Generate Images

- Assign to Texture

- Enable Texture

- Assign texture coordinates to vertices

- Specify texture parameters: wrapping, filtering, mipmaps

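unsigned int texture;

glGenTextures(1, &texture);

glBindTexture(GL_TEXTURE_2D, texture);

// Allocate storage for a depth texture without initial data; SHADOW_WIDTH and SHADOW_HEIGHT are its size in texels

glTexImage2D(GL_TEXTURE_2D, 0, GL_DEPTH_COMPONENT,

SHADOW_WIDTH, SHADOW_HEIGHT, 0, GL_DEPTH_COMPONENT, GL_FLOAT, nullptr);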

// Set wrapping and filtering for currently bound texture objects

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_BORDER);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_BORDER);

- glGenTextures takes the number of textures to generate as its first argument and stores their IDs in the unsigned int array given as the second argument (here a single unsigned int). Like other OpenGL objects, the texture must then be bound so that subsequent texture commands configure the currently bound texture

- glBindTexture binds the texture to the currently active texture unit; texture unit GL_TEXTURE0 is active by default

- The texture image is created with glTexImage2D. After the call, the currently bound texture object has the texture image attached to it
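
The wrapping parameters decide what happens when a texture coordinate falls outside the range 0 to 1. The following is a simplified CPU-side model of three wrap modes, not how a driver implements them: real hardware works at texel granularity and, for GL_CLAMP_TO_BORDER, blends in the border color during filtering. The `Wrap` enum and `wrapCoordinate` are hypothetical names for this sketch.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-ins for GL_REPEAT, GL_CLAMP_TO_EDGE and
// GL_CLAMP_TO_BORDER.
enum class Wrap { Repeat, ClampToEdge, ClampToBorder };

// Maps one texture coordinate into [0, 1] according to the wrap mode.
// Returns false when the caller should use the border color instead of
// sampling the image (only possible with ClampToBorder).
bool wrapCoordinate(float t, Wrap mode, float &wrapped) {
    assert(std::isfinite(t));
    switch (mode) {
    case Wrap::Repeat:
        wrapped = t - std::floor(t);  // keep only the fractional part
        return true;
    case Wrap::ClampToEdge:
        wrapped = std::fmin(std::fmax(t, 0.0f), 1.0f);
        return true;
    case Wrap::ClampToBorder:
        if (t < 0.0f || t > 1.0f)
            return false;  // outside the image: border color applies
        wrapped = t;
        return true;
    }
    return false;
}
```

For example, a coordinate of 1.25 becomes 0.25 under repeat and 1.0 under clamp-to-edge, while under clamp-to-border it falls outside the image.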

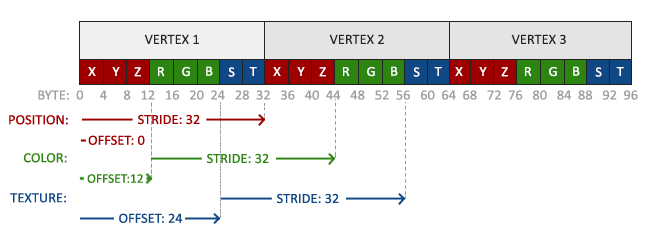

Update the vertex data to include texture coordinates:

float vertices[] = {

// --- Position ---   --- Color ---   - Texture coordinates -

0.5f, 0.5f, 0.0f, 1.0f, 0.0f, 0.0f, 1.0f, 1.0f, // Upper Right

0.5f, -0.5f, 0.0f, 0.0f, 1.0f, 0.0f, 1.0f, 0.0f, // lower right

-0.5f, -0.5f, 0.0f, 0.0f, 0.0f, 1.0f, 0.0f, 0.0f, // Lower Left

-0.5f, 0.5f, 0.0f, 1.0f, 1.0f, 0.0f, 0.0f, 1.0f // Upper Left

};
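
With this interleaved layout, each vertex occupies eight floats, so the stride and the byte offset of each attribute follow directly from the component counts. The sketch below derives those values and reads the texture coordinates back from the array; the constant names and `texCoordOf` are hypothetical, and the stride and offset are the kind of values one would pass to `glVertexAttribPointer`.

```cpp
#include <cassert>
#include <cstddef>

// Component counts of the interleaved layout: position, color, texture
// coordinates (names are assumptions for this sketch).
constexpr std::size_t kPositionFloats = 3;
constexpr std::size_t kColorFloats = 3;
constexpr std::size_t kTexCoordFloats = 2;
constexpr std::size_t kFloatsPerVertex =
    kPositionFloats + kColorFloats + kTexCoordFloats;

// Distance in bytes between consecutive vertices, and the byte offset of
// the texture coordinates inside one vertex.
constexpr std::size_t kStrideBytes = kFloatsPerVertex * sizeof(float);
constexpr std::size_t kTexCoordOffsetBytes =
    (kPositionFloats + kColorFloats) * sizeof(float);

struct TexCoord {
    float u, v;
};

// Reads the texture coordinates of one vertex from the interleaved array.
TexCoord texCoordOf(const float *vertices, std::size_t vertexCount,
                    std::size_t index) {
    assert(vertices != nullptr && index < vertexCount);
    const float *v =
        vertices + index * kFloatsPerVertex + kPositionFloats + kColorFloats;
    return TexCoord{v[0], v[1]};
}
```

For the four vertices above, the upper right vertex yields (1, 1) and the lower left vertex yields (0, 0).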

Mipmap

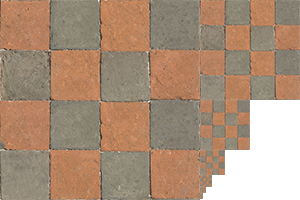

Suppose we have a large room with thousands of objects, each with its own texture. Some objects are far away, yet their textures have the same resolution as those of nearby objects. Because a distant object may produce only a few fragments, OpenGL has difficulty retrieving the right color for them from a high-resolution texture: it must pick a single texture color for a fragment that spans a large part of the texture. This produces visible artifacts on small objects, and using high-resolution textures on them also wastes memory.

Resolving the aliasing: mipmaps (multi-level textures)

Simply put, a mipmap is a series of texture images in which each image is half the width and half the height of the previous one. The original image is repeatedly shrunk by a factor of 2 until it reaches 1x1, and every reduced image is stored. Because each level halves both dimensions, it holds 1/4 as many texels as the level before it. During rendering, the appropriate level is chosen according to how large an area of the texture a pixel covers, which grows with the distance from the eye, and the color is computed by interpolation; a non-integer level requires interpolating between the two adjacent levels.
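
The level structure and the level selection can be sketched as follows. This is a simplified model under stated assumptions, not the exact rule a GPU uses: `texelsPerPixel` is assumed to be the number of texels one screen pixel covers along one axis, and all function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

// Sizes (width, height) of every mipmap level, from the full image down
// to 1x1. Each level halves both dimensions, rounding down but never
// below 1.
std::vector<std::pair<int, int>> mipChainSizes(int width, int height) {
    assert(width > 0 && height > 0);
    std::vector<std::pair<int, int>> sizes;
    sizes.emplace_back(width, height);
    while (width > 1 || height > 1) {
        width = std::max(1, width / 2);
        height = std::max(1, height / 2);
        sizes.emplace_back(width, height);
    }
    return sizes;
}

// Fractional mipmap level for a pixel that covers `texelsPerPixel`
// texels along one axis (assumption: level = log2 of that footprint,
// clamped to the available levels).
float mipLevel(float texelsPerPixel, int levelCount) {
    assert(levelCount > 0);
    float level = std::log2(std::max(texelsPerPixel, 1.0f));
    return std::min(level, static_cast<float>(levelCount - 1));
}

// Blends the samples taken from the two levels surrounding a
// non-integer level.
float blendLevels(float lowerSample, float upperSample, float level) {
    float t = level - std::floor(level);
    return lowerSample * (1.0f - t) + upperSample * t;
}
```

An 8 x 8 texture yields four levels (8, 4, 2, and 1 texels per side), and a pixel covering 4 texels per axis would read from level 2 in this model.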

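// Paints one brush stroke into the color texture: the mouse ray is intersected with the model,

// the hit point is converted to UV coordinates, and a radial gradient is drawn at that position

bool TexturePainter::paintStroke(QPainter &painter, const TexturePainterStroke &stroke)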

{

// Find the triangle hit by the mouse ray and the hit position on it

size_t targetTriangleIndex = 0;

if (!intersectRayAndPolyhedron(stroke.mouseRayNear,

stroke.mouseRayFar,

m_context->object->vertices,

m_context->object->triangles,

m_context->object->triangleNormals,

&m_targetPosition,

&targetTriangleIndex)) {

return false;

}

if (PaintMode::None == m_paintMode)

return false;

if (nullptr == m_context->colorImage) {

qDebug() << "TexturePainter paint color image is null";

return false;

}

const std::vector<std::vector<QVector2D>> *uvs = m_context->object->triangleVertexUvs();

if (nullptr == uvs) {

qDebug() << "TexturePainter paint uvs is null";

return false;

}

const std::vector<std::pair<QUuid, QUuid>> *sourceNodes = m_context->object->triangleSourceNodes();

if (nullptr == sourceNodes) {

qDebug() << "TexturePainter paint source nodes is null";

return false;

}

const std::map<QUuid, std::vector<QRectF>> *uvRects = m_context->object->partUvRects();

if (nullptr == uvRects)

return false;

// Express the hit position in barycentric coordinates of the hit triangle

const auto &triangle = m_context->object->triangles[targetTriangleIndex];

QVector3D coordinates = barycentricCoordinates(m_context->object->vertices[triangle[0]],

m_context->object->vertices[triangle[1]],

m_context->object->vertices[triangle[2]],

m_targetPosition);

double triangleArea = areaOfTriangle(m_context->object->vertices[triangle[0]],

m_context->object->vertices[triangle[1]],

m_context->object->vertices[triangle[2]]);

// Blend the triangle's vertex UVs with the same weights to get the hit point in UV space

auto &uvCoords = (*uvs)[targetTriangleIndex];

QVector2D target2d = uvCoords[0] * coordinates[0] +

uvCoords[1] * coordinates[1] +

uvCoords[2] * coordinates[2];

double uvArea = areaOfTriangle(QVector3D(uvCoords[0].x(), uvCoords[0].y(), 0.0),

QVector3D(uvCoords[1].x(), uvCoords[1].y(), 0.0),

QVector3D(uvCoords[2].x(), uvCoords[2].y(), 0.0));

// Linear scale between model space and UV space (square root of the area ratio)

double radiusFactor = std::sqrt(uvArea) / std::sqrt(triangleArea);

std::vector<QRect> rects;

const auto &sourceNode = (*sourceNodes)[targetTriangleIndex];

auto findRects = uvRects->find(sourceNode.first);

const int paddingSize = 2;

if (findRects != uvRects->end()) {

for (const auto &it: findRects->second) {

if (!it.contains({target2d.x(), target2d.y()}))

continue;

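// Convert the UV rectangle to pixels with padding; height() is used for both axes, which assumes a square image

rects.push_back(QRect(it.left() * m_context->colorImage->height() - paddingSize,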

it.top() * m_context->colorImage->height() - paddingSize,

it.width() * m_context->colorImage->height() + paddingSize + paddingSize,

it.height() * m_context->colorImage->height() + paddingSize + paddingSize));

break;

}

}

QRegion clipRegion;

if (!rects.empty()) {

std::sort(rects.begin(), rects.end(), [](const QRect &first, const QRect &second) {

return first.top() < second.top();

});

clipRegion.setRects(&rects[0], rects.size());

painter.setClipRegion(clipRegion);

}

// Brush radius and center in texture pixels

double radius = m_radius * radiusFactor * m_context->colorImage->height();

QVector2D middlePoint = QVector2D(target2d.x() * m_context->colorImage->height(),

target2d.y() * m_context->colorImage->height());

// Brush color at the center fading to transparent at the edge of the brush

QRadialGradient gradient(QPointF(middlePoint.x(), middlePoint.y()), radius);

gradient.setColorAt(0.0, m_brushColor);

gradient.setColorAt(1.0, Qt::transparent);

painter.fillRect(middlePoint.x() - radius,

middlePoint.y() - radius,

radius + radius,

radius + radius,

gradient);

return true;

}
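
The scaling step in the function above can be isolated in plain C++ without Qt. The sketch below computes a triangle's area from the cross product of two edges and converts a brush radius given in model units into texture pixels, using the same square-root-of-area-ratio idea. `Vec3`, `triangleArea3d`, and `brushRadiusInPixels` are hypothetical stand-ins, not the helpers used by the original code, and a square texture of `imageSize` pixels per side is assumed.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical 3D vector type for this illustration.
struct Vec3 {
    double x, y, z;
};

// Area of a triangle: half the length of the cross product of two edges.
double triangleArea3d(Vec3 a, Vec3 b, Vec3 c) {
    Vec3 ab{b.x - a.x, b.y - a.y, b.z - a.z};
    Vec3 ac{c.x - a.x, c.y - a.y, c.z - a.z};
    Vec3 cross{ab.y * ac.z - ab.z * ac.y,
               ab.z * ac.x - ab.x * ac.z,
               ab.x * ac.y - ab.y * ac.x};
    return 0.5 * std::sqrt(cross.x * cross.x + cross.y * cross.y +
                           cross.z * cross.z);
}

// Converts a brush radius in model units to texture pixels. Areas scale
// with the square of lengths, so the linear scale between model space
// and UV space is the square root of the area ratio.
double brushRadiusInPixels(double modelRadius, double modelArea,
                           double uvArea, int imageSize) {
    assert(modelArea > 0.0 && uvArea >= 0.0 && imageSize > 0);
    double radiusFactor = std::sqrt(uvArea) / std::sqrt(modelArea);
    return modelRadius * radiusFactor * imageSize;
}
```

For a model triangle of area 2 mapped to a UV triangle of area 0.125, the linear scale is 0.25, so a brush of radius 0.1 on a 1024-pixel texture covers about 25.6 pixels.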