What is AQS?

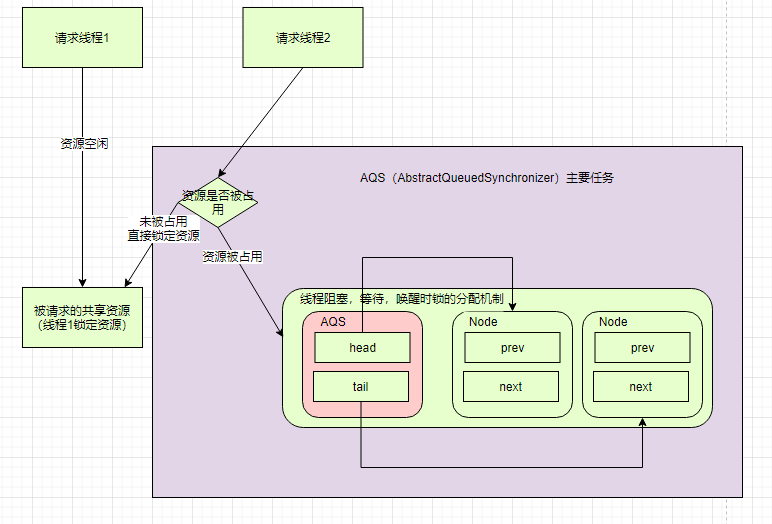

In Lock, a synchronization queue AQS is used. Its full name is AbstractQueuedSynchronizer. It is a synchronization tool and the core component used by Lock to realize thread synchronization.

java. util. Most of the tools in concurrent are implemented through AQS.

The core idea of AQS is that if the requested shared resource is idle, the thread requesting the resource is set as a valid worker thread, and the shared resource is set to the locked state. If the requested shared resources are occupied, a set of mechanism for thread blocking and waiting and lock allocation when waking up is required. This mechanism AQS is implemented with CLH queue lock, that is, the thread that cannot obtain the lock temporarily is added to the queue.

If you don't understand, don't be afraid to continue to look down. Look back at this picture after reading it.

Next, we will mainly explain the locking process through the lock() Lock in the Lock interface implemented by ReentrantLock.

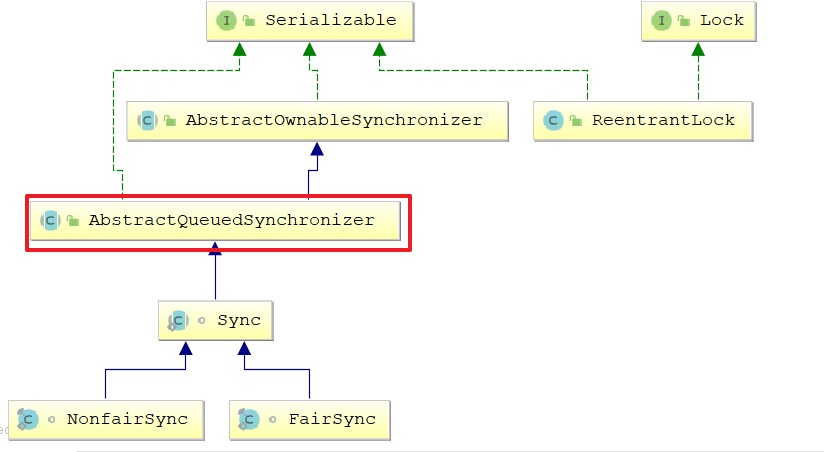

The class diagram of AQS (AbstractQueuedSynchronizer) is as follows:

Class diagram

Code example

Code example:

public class AtomicDemo {

private static int count=0;

static Lock lock=new ReentrantLock();

public static void inc(){

//This paper mainly explores the implementation process of the source code through lock()

lock.lock();

try {

Thread.sleep(1);

} catch (InterruptedException e) {

e.printStackTrace();

}

count++;

System.out.println(count);

lock.unlock();

}

public static void main(String[] args) throws

InterruptedException {

for(int i=0;i<1000;i++){

new Thread(()->{AtomicDemo.inc();}).start();;

}

Thread.sleep(3000); System.out.println("result:"+count);

}

}

lock() method in ReentrantLock

public void lock() {

sync.lock();

}

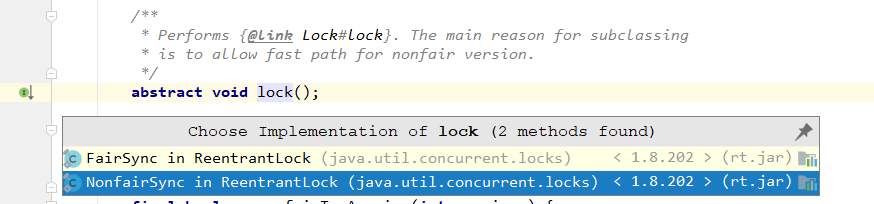

sync.lock() method

abstract void lock();

sync.lock() has two implementation classes, one is fair policy implementation class and the other is unfair policy implementation class. (fair strategy and unfair strategy will be explained later)

ReentrantLock defaults to an unfair method implementation class. It can be determined according to the construction method of ReentrantLock.

++++++++++++Split line (this is an episode)+++++++++++++++++

ReentrantLock construction method code

/**

* Creates an instance of {@code ReentrantLock}.

* This is equivalent to using {@code ReentrantLock(false)}.

*/

public ReentrantLock() {

sync = new NonfairSync();

}

/**

* Creates an instance of {@code ReentrantLock} with the

* given fairness policy.

*

* @param fair {@code true} if this lock should use a fair ordering policy

*/

public ReentrantLock(boolean fair) {

sync = fair ? new FairSync() : new NonfairSync();

}

++++++++++++End of split line+++++++++++++++++++++

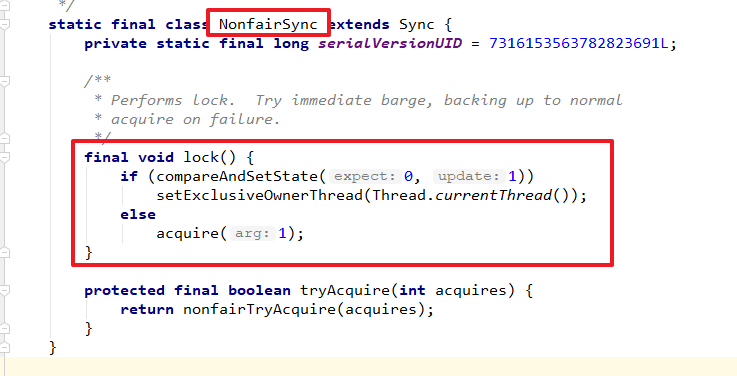

Back to sync Implementation of lock() method

The lock() method first attempts to change the status from 0 to 1 through CAS. If the direct modification is successful, the precondition is naturally that the lock state is 0, then the OWNER of the thread is directly modified to the current thread, which is an ideal situation. If the concurrency granularity is set appropriately, it is also an optimistic situation.

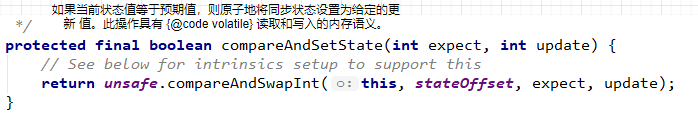

Next, analyze this code. The first thing to enter is the compareAndSetState() method. Its main function is to determine whether the current resource is occupied. If the resource is not occupied, it is the ideal state.

The implementation of the code is as follows:

acquire(1) method

If it is not ideal, it will enter the acquire(1) method

public final void acquire(int arg) {

//if(!tryAcquire()) is used as the first condition in the call determination of tryAcquire(). If true is returned, the determination will not be tenable, and naturally the subsequent acquirequeueueueueueueued action will not be executed. If this happens, it is ideal

if (!tryAcquire(arg) &&

acquireQueued(addWaiter(Node.EXCLUSIVE), arg))

selfInterrupt();

}

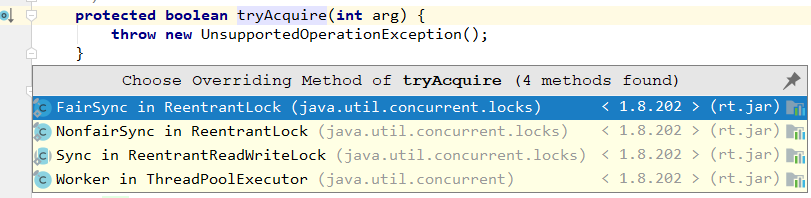

Next, let's go to try acquire()

There are many implementation schemes of tryAcquire() in ReentrantLock class. First, let's analyze the implementation method of fairness policy

//Fair strategy

protected final boolean tryAcquire(int acquires) {

final Thread current = Thread.currentThread();

int c = getState();//First, get the state of the lock

if (c == 0) {//If the status is 0

if (!hasQueuedPredecessors() &&//Determine whether the thread needs to be queued

compareAndSetState(0, acquires)) {//Try to set the status to the parameter passed in (here is 1)

setExclusiveOwnerThread(current);//If the setting is successful, it means that you have obtained the lock

return true;

}

}

//The embodiment of thread reentry. As for what reentry is, you can find it yourself first. This time, I won't explain it

else if (current == getExclusiveOwnerThread())//If the status is not 0, determine whether the current thread is the Owner of the exclusive lock

{

int nextc = c + acquires;//If it is the Owner, try to increase the status to acquire (that is, increase by 1)

if (nextc < 0)//If the status value is out of bounds, an exception prompt will be thrown

throw new Error("Maximum lock count exceeded");

setState(nextc);

return true;//If it does not cross the boundary, set the status in and return true (it implements a function similar to bias, which can be re entered, but no further requisition is required)

}

return false;//If the status is not 0 and it is not owner, false is returned

}

addWaiter() method

private Node addWaiter(Node mode) {

//A Node object is created here, which connects the current thread and the incoming Node Exclusive incoming, that is, the Node theoretically contains these two information. tail in the code is an attribute of AQS. It must be null at the beginning, that is, it will not enter the if judgment area of the first layer, but directly enter the enq(node) code

Node node = new Node(Thread.currentThread(), mode);

// Try the fast path of enq; backup to full enq on failure

Node pred = tail;

if (pred != null) {

node.prev = pred;

if (compareAndSetTail(pred, node)) {

pred.next = node;

return node;

}

}

enq(node);

return node;

}

Enter enq() method

//When you see the tail, you should guess that AQS is a linked list. Yes, and it should also have a head reference to point to the head node of the linked list. When AQS is initialized, the head and tail are null and move back and forth during runtime. At this point, we at least know that AQS is a state based linked list management method.

private Node enq(final Node node) {

for (;;) {

Node t = tail;

if (t == null) { // Must initialize

if (compareAndSetHead(new Node()))

tail = head;

} else {

node.prev = t;

if (compareAndSetTail(t, node)) {

t.next = node;

return t;

}

}

}

}

Next, return to the acquirequeueueueueueueueued () method

final boolean acquireQueued(final Node node, int arg) {

boolean failed = true;

try {

boolean interrupted = false;

for (;;) {

final Node p = node.predecessor();//This method returns the previous node of the node node, that is, only when the current node is head, can further attempts to requisition through tryAcquire(arg) be successful

if (p == head && tryAcquire(arg)) {//p is node Predcessor () gets that this method returns the previous node of the node node, that is, only when the current node is head, can further attempts to requisition through tryAcquire(arg) be successful

setHead(node);//Call the setHead(Node) operation, which will internally take the incoming node as the node pointed by the AQS head. Set the thread attribute to null (because the lock has been obtained now, it is no longer necessary to record the thread corresponding to this node), and then assign the perv reference of this node to null.

p.next = null; // help GC / / further assign the next reference of the previous node to null. After such modification, the structure of the queue becomes the following. In this way, the executed node can release the memory area instead of unlimited growth of the queue, and the FIFO is really formed

failed = false;

return interrupted;

}

if (shouldParkAfterFailedAcquire(p, node) //This method will internally determine whether the state of the previous node is: "Node.SIGNAL". If so, it will return true. If not, it will return false. However, it will do some more operations: determine whether the state of the node is greater than 0. If it is greater than 0, it will be considered as "CANCELLED" (we do not specify the values of several States, but those greater than 0 can only be CANCELLED), Therefore, it will start from the previous node and gradually cycle to find a node that is not "CANCELLED", and then point to each other with the reference of the next and prev of this node; If the state of the previous node is not greater than 0, try to change the state to "Node.SIGNAL" through CAS. Naturally, if the value will be returned in the next cycle, it should return true&&

parkAndCheckInterrupt())//Via locksupport Park (this) suspends the current thread to the waiting state. It needs to wait for an interrupt and unpark method to wake it up. Through the waiting of such a FIFO mechanism, the Lock operation is realized.

interrupted = true;

}

} finally {

if (failed)

cancelAcquire(node);

}

}

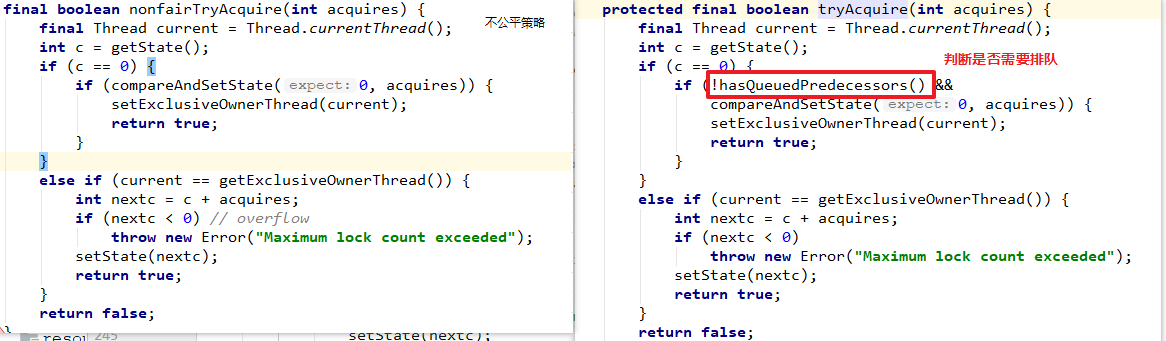

Comparison between fair strategy and unfair strategy

The only difference between fair strategy and unfair strategy is to judge whether to queue.