**0. Preface**

Some time ago, I took part in a competition that required a voice localization function on a ROS robot. For various reasons I did not manage to finish it, so here I write down the overall approach.

**1. Hardware introduction**

First of all, this blog is not an advertisement; the hardware is described only because the function depends on it.

The hardware used here is the ReSpeaker Mic Array v2.0 microphone array. It integrates four microphones placed at the four ends of a cross, so it can easily estimate the azimuth of a sound source relative to the center of the array. It also connects over USB and works with common operating systems such as Windows, Linux, and macOS. These two properties make it suitable for voice localization. For more information about this microphone array, refer to: Microphone array details. The array can also be combined with a Raspberry Pi to build a smart speaker: Smart speaker

**2. Voice location**

Next, I will explain the idea of voice localization using the ReSpeaker Mic Array v2.0.

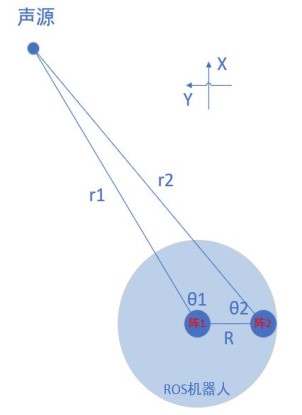

As mentioned above, the microphone array reports the azimuth of the sound source in real time, but it cannot directly measure the distance from the sound source to the array. The solution is to use two microphone arrays, named array 1 and array 2. The baseline between them and the two measured angles define a triangle (one side and its two adjacent angles are known), so the remaining side lengths can be calculated. This gives the distance from the sound source to the robot center, as shown in the figure:

In the figure, R is the known distance between the two microphone arrays; θ1 is the angle measured by microphone array 1, whose center is aligned with the center of the ROS robot; θ2 is the angle measured by microphone array 2. r1 is the required distance from the sound source to the ROS robot.

The angle at the sound source is 180° - θ1 - θ2 and lies opposite the side R, while r1 lies opposite θ2. The law of sines therefore gives the distance: r1 = R * sin(θ2) / sin(180° - θ1 - θ2)
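The formula above can be sketched in a few lines of Python. This is only a minimal illustration: the function name `distance_to_source` is hypothetical, and it assumes θ1 and θ2 are already the interior angles of the triangle in degrees. The raw angle reported by each array is relative to the array's own axis and may need an offset that depends on how the arrays are mounted.

```python
import math


def distance_to_source(baseline, theta1_deg, theta2_deg):
    """Return r1, the distance from array 1 to the sound source.

    baseline   -- R, the distance between the two array centers
    theta1_deg -- interior angle at array 1, in degrees (assumed convention)
    theta2_deg -- interior angle at array 2, in degrees (assumed convention)
    """
    # The third interior angle of the triangle sits at the sound source.
    source_angle = 180.0 - theta1_deg - theta2_deg
    if theta1_deg <= 0 or theta2_deg <= 0 or source_angle <= 0:
        # Parallel or diverging bearings never intersect, so no triangle exists.
        raise ValueError("angles do not form a triangle")
    # Law of sines: r1 / sin(theta2) = R / sin(180 - theta1 - theta2)
    return (baseline * math.sin(math.radians(theta2_deg))
            / math.sin(math.radians(source_angle)))


# Arrays 0.5 m apart, both interior angles 60 degrees: an equilateral
# triangle, so the source is also 0.5 m away from array 1.
r1 = distance_to_source(0.5, 60.0, 60.0)
print(r1)
```

Note that when θ1 + θ2 approaches 180° the denominator approaches zero, so small angle errors produce large distance errors. A longer baseline R makes the estimate less sensitive to this.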

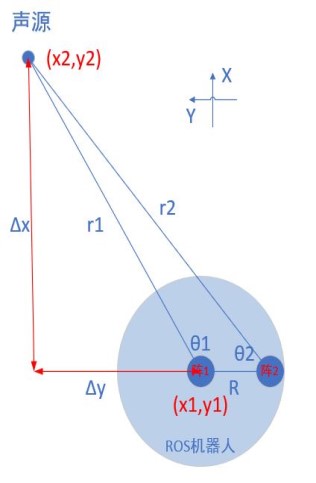

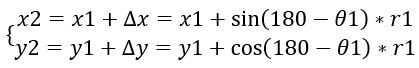

After obtaining the distance, the map coordinates of the sound source can be computed from the distance and the coordinates of the robot center. Let the robot center be at (x1, y1), which is known, and the sound source at (x2, y2), which is to be solved for. As shown in the figure:

According to the Pythagorean theorem:

This gives the position of the sound source on the map, (x2, y2). With (x1, y1) as the starting point and (x2, y2) as the end point, the voice localization function is complete.

Although this method can implement voice localization under ROS, it is relatively expensive and hard to make small and lightweight, so it should be treated as a reference approach only. If you have a better solution, please leave a message below.

The program for reading the sound source angle from a single microphone array is as follows:
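As a rough sketch of this step, the snippet below converts the distance into map coordinates. It rests on an assumption that is not stated in the text: that the bearing to the source is available as an angle measured counter-clockwise from the map's x-axis (for example, robot heading plus the array angle). The function name `source_position` and the parameter `bearing_deg` are hypothetical.

```python
import math


def source_position(x1, y1, r1, bearing_deg):
    """Return (x2, y2), the sound source position in the map frame.

    (x1, y1)    -- robot center in the map frame
    r1          -- distance from the robot center to the source
    bearing_deg -- direction to the source, measured counter-clockwise
                   from the map x-axis (assumed convention)
    """
    bearing = math.radians(bearing_deg)
    x2 = x1 + r1 * math.cos(bearing)
    y2 = y1 + r1 * math.sin(bearing)
    return x2, y2


x2, y2 = source_position(1.0, 2.0, 0.5, 90.0)
# Pythagorean check: the offset from the robot must have length r1.
assert math.isclose(math.hypot(x2 - 1.0, y2 - 2.0), 0.5)
print(x2, y2)
```

The two legs of the right triangle are (x2 - x1) and (y2 - y1), and r1 is its hypotenuse, which is what the final check verifies.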

    # -*- coding: utf-8 -*-

    import sys
    import struct
    import usb.core
    import usb.util

    USAGE = """Usage: python {} -h
            -p      show all parameters
            -r      read all parameters
            NAME    get the parameter with the NAME
            NAME VALUE  set the parameter with the NAME and the VALUE
    """

    # parameter list
    # name: (id, offset, type, max, min , r/w, info)
    PARAMETERS = {
        'AECFREEZEONOFF': (18, 7, 'int', 1, 0, 'rw', 'Adaptive Echo Canceler updates inhibit.', '0 = Adaptation enabled', '1 = Freeze adaptation, filter only'),
        'AECNORM': (18, 19, 'float', 16, 0.25, 'rw', 'Limit on norm of AEC filter coefficients'),
        'AECPATHCHANGE': (18, 25, 'int', 1, 0, 'ro', 'AEC Path Change Detection.', '0 = false (no path change detected)', '1 = true (path change detected)'),
        'RT60': (18, 26, 'float', 0.9, 0.25, 'ro', 'Current RT60 estimate in seconds'),
        'HPFONOFF': (18, 27, 'int', 3, 0, 'rw', 'High-pass Filter on microphone signals.', '0 = OFF', '1 = ON - 70 Hz cut-off', '2 = ON - 125 Hz cut-off', '3 = ON - 180 Hz cut-off'),
        'RT60ONOFF': (18, 28, 'int', 1, 0, 'rw', 'RT60 Estimation for AES. 0 = OFF 1 = ON'),
        'AECSILENCELEVEL': (18, 30, 'float', 1, 1e-09, 'rw', 'Threshold for signal detection in AEC [-inf .. 0] dBov (Default: -80dBov = 10log10(1x10-8))'),
        'AECSILENCEMODE': (18, 31, 'int', 1, 0, 'ro', 'AEC far-end silence detection status. ', '0 = false (signal detected) ', '1 = true (silence detected)'),
        'AGCONOFF': (19, 0, 'int', 1, 0, 'rw', 'Automatic Gain Control. ', '0 = OFF ', '1 = ON'),
        'AGCMAXGAIN': (19, 1, 'float', 1000, 1, 'rw', 'Maximum AGC gain factor. ', '[0 .. 60] dB (default 30dB = 20log10(31.6))'),
        'AGCDESIREDLEVEL': (19, 2, 'float', 0.99, 1e-08, 'rw', 'Target power level of the output signal. ', '[−inf .. 0] dBov (default: −23dBov = 10log10(0.005))'),
        'AGCGAIN': (19, 3, 'float', 1000, 1, 'rw', 'Current AGC gain factor. ', '[0 .. 60] dB (default: 0.0dB = 20log10(1.0))'),
        'AGCTIME': (19, 4, 'float', 1, 0.1, 'rw', 'Ramps-up / down time-constant in seconds.'),
        'CNIONOFF': (19, 5, 'int', 1, 0, 'rw', 'Comfort Noise Insertion.', '0 = OFF', '1 = ON'),
        'FREEZEONOFF': (19, 6, 'int', 1, 0, 'rw', 'Adaptive beamformer updates.', '0 = Adaptation enabled', '1 = Freeze adaptation, filter only'),
        'STATNOISEONOFF': (19, 8, 'int', 1, 0, 'rw', 'Stationary noise suppression.', '0 = OFF', '1 = ON'),
        'GAMMA_NS': (19, 9, 'float', 3, 0, 'rw', 'Over-subtraction factor of stationary noise. min .. max attenuation'),
        'MIN_NS': (19, 10, 'float', 1, 0, 'rw', 'Gain-floor for stationary noise suppression.', '[−inf .. 0] dB (default: −16dB = 20log10(0.15))'),
        'NONSTATNOISEONOFF': (19, 11, 'int', 1, 0, 'rw', 'Non-stationary noise suppression.', '0 = OFF', '1 = ON'),
        'GAMMA_NN': (19, 12, 'float', 3, 0, 'rw', 'Over-subtraction factor of non- stationary noise. min .. max attenuation'),
        'MIN_NN': (19, 13, 'float', 1, 0, 'rw', 'Gain-floor for non-stationary noise suppression.', '[−inf .. 0] dB (default: −10dB = 20log10(0.3))'),
        'ECHOONOFF': (19, 14, 'int', 1, 0, 'rw', 'Echo suppression.', '0 = OFF', '1 = ON'),
        'GAMMA_E': (19, 15, 'float', 3, 0, 'rw', 'Over-subtraction factor of echo (direct and early components). min .. max attenuation'),
        'GAMMA_ETAIL': (19, 16, 'float', 3, 0, 'rw', 'Over-subtraction factor of echo (tail components). min .. max attenuation'),
        'GAMMA_ENL': (19, 17, 'float', 5, 0, 'rw', 'Over-subtraction factor of non-linear echo. min .. max attenuation'),
        'NLATTENONOFF': (19, 18, 'int', 1, 0, 'rw', 'Non-Linear echo attenuation.', '0 = OFF', '1 = ON'),
        'NLAEC_MODE': (19, 20, 'int', 2, 0, 'rw', 'Non-Linear AEC training mode.', '0 = OFF', '1 = ON - phase 1', '2 = ON - phase 2'),
        'SPEECHDETECTED': (19, 22, 'int', 1, 0, 'ro', 'Speech detection status.', '0 = false (no speech detected)', '1 = true (speech detected)'),
        'FSBUPDATED': (19, 23, 'int', 1, 0, 'ro', 'FSB Update Decision.', '0 = false (FSB was not updated)', '1 = true (FSB was updated)'),
        'FSBPATHCHANGE': (19, 24, 'int', 1, 0, 'ro', 'FSB Path Change Detection.', '0 = false (no path change detected)', '1 = true (path change detected)'),
        'TRANSIENTONOFF': (19, 29, 'int', 1, 0, 'rw', 'Transient echo suppression.', '0 = OFF', '1 = ON'),
        'VOICEACTIVITY': (19, 32, 'int', 1, 0, 'ro', 'VAD voice activity status.', '0 = false (no voice activity)', '1 = true (voice activity)'),
        'STATNOISEONOFF_SR': (19, 33, 'int', 1, 0, 'rw', 'Stationary noise suppression for ASR.', '0 = OFF', '1 = ON'),
        'NONSTATNOISEONOFF_SR': (19, 34, 'int', 1, 0, 'rw', 'Non-stationary noise suppression for ASR.', '0 = OFF', '1 = ON'),
        'GAMMA_NS_SR': (19, 35, 'float', 3, 0, 'rw', 'Over-subtraction factor of stationary noise for ASR. ', '[0.0 .. 3.0] (default: 1.0)'),
        'GAMMA_NN_SR': (19, 36, 'float', 3, 0, 'rw', 'Over-subtraction factor of non-stationary noise for ASR. ', '[0.0 .. 3.0] (default: 1.1)'),
        'MIN_NS_SR': (19, 37, 'float', 1, 0, 'rw', 'Gain-floor for stationary noise suppression for ASR.', '[−inf .. 0] dB (default: −16dB = 20log10(0.15))'),
        'MIN_NN_SR': (19, 38, 'float', 1, 0, 'rw', 'Gain-floor for non-stationary noise suppression for ASR.', '[−inf .. 0] dB (default: −10dB = 20log10(0.3))'),
        'GAMMAVAD_SR': (19, 39, 'float', 1000, 0, 'rw', 'Set the threshold for voice activity detection.', '[−inf .. 60] dB (default: 3.5dB 20log10(1.5))'),
        # 'KEYWORDDETECT': (20, 0, 'int', 1, 0, 'ro', 'Keyword detected. Current value so needs polling.'),
        'DOAANGLE': (21, 0, 'int', 359, 0, 'ro', 'DOA angle. Current value. Orientation depends on build configuration.')
    }


    class Tuning:
        TIMEOUT = 100000

        def __init__(self, dev):
            self.dev = dev

        def write(self, name, value):
            try:
                data = PARAMETERS[name]
            except KeyError:
                return

            if data[5] == 'ro':
                raise ValueError('{} is read-only'.format(name))

            id = data[0]

            # 4 bytes offset, 4 bytes value, 4 bytes type
            if data[2] == 'int':
                payload = struct.pack(b'iii', data[1], int(value), 1)
            else:
                payload = struct.pack(b'ifi', data[1], float(value), 0)

            self.dev.ctrl_transfer(
                usb.util.CTRL_OUT | usb.util.CTRL_TYPE_VENDOR | usb.util.CTRL_RECIPIENT_DEVICE,
                0, 0, id, payload, self.TIMEOUT)

        def read(self, name):
            try:
                data = PARAMETERS[name]
            except KeyError:
                return

            id = data[0]

            cmd = 0x80 | data[1]
            if data[2] == 'int':
                cmd |= 0x40

            length = 8

            response = self.dev.ctrl_transfer(
                usb.util.CTRL_IN | usb.util.CTRL_TYPE_VENDOR | usb.util.CTRL_RECIPIENT_DEVICE,
                0, cmd, id, length, self.TIMEOUT)

            # tobytes() replaces array.tostring(), which was removed in Python 3.9
            response = struct.unpack(b'ii', response.tobytes())

            if data[2] == 'int':
                result = response[0]
            else:
                # Floats are returned as a (mantissa, exponent) pair
                result = response[0] * (2.**response[1])

            return result

        def set_vad_threshold(self, db):
            self.write('GAMMAVAD_SR', db)

        def is_voice(self):
            return self.read('VOICEACTIVITY')

        @property
        def direction(self):
            return self.read('DOAANGLE')

        @property
        def version(self):
            return self.dev.ctrl_transfer(
                usb.util.CTRL_IN | usb.util.CTRL_TYPE_VENDOR | usb.util.CTRL_RECIPIENT_DEVICE,
                0, 0x80, 0, 1, self.TIMEOUT)[0]

        def close(self):
            """
            close the interface
            """
            usb.util.dispose_resources(self.dev)


    def find(vid=0x2886, pid=0x0018):
        dev = usb.core.find(idVendor=vid, idProduct=pid)
        if not dev:
            return

        return Tuning(dev)


    def main():
        if len(sys.argv) > 1:
            if sys.argv[1] == '-p':
                # Print all parameters
                print('name\t\t\ttype\tmax\tmin\tr/w\tinfo')
                print('-------------------------------')
                for name in sorted(PARAMETERS.keys()):
                    data = PARAMETERS[name]
                    # Join with a str separator; b'\t'.join fails on str items in Python 3
                    print('{:16}\t{}'.format(name, '\t'.join([str(i) for i in data[2:7]])))
                    for extra in data[7:]:
                        print('{}{}'.format(' '*60, extra))
            else:
                # Find the microphone array device on Linux
                dev = find()
                if not dev:
                    print('No device found')
                    sys.exit(1)

                if sys.argv[1] == '-r':
                    # Read all parameter settings from the device
                    print('{:24} {}'.format('name', 'value'))
                    print('-------------------------------')
                    for name in sorted(PARAMETERS.keys()):
                        print('{:24} {}'.format(name, dev.read(name)))
                else:
                    # To read the angle, run this from the directory that
                    # contains the file: python tuning.py DOAANGLE
                    name = sys.argv[1].upper()
                    if name in PARAMETERS:
                        if len(sys.argv) > 2:
                            dev.write(name, sys.argv[2])

                        print('{}: {}'.format(name, dev.read(name)))
                    else:
                        print('{} is not a valid name'.format(name))

                dev.close()
        else:
            print(USAGE.format(sys.argv[0]))


    if __name__ == '__main__':
        main()
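A single `DOAANGLE` reading can be noisy, so one possible refinement is to average several readings taken while voice activity is detected. The sketch below is an illustration, not part of the original program: `circular_mean_deg` and `sample_direction` are hypothetical helpers, and `mic` is assumed to be any object exposing `direction` and `is_voice()` as the `Tuning` class above does. A circular mean is used because the angle wraps from 359 back to 0, so a plain arithmetic mean of 350 and 10 would wrongly give 180.

```python
import math
import time


def circular_mean_deg(angles_deg):
    """Average angles in degrees, handling the 359 -> 0 wrap-around."""
    # Sum the unit vectors of all angles and take the direction of the sum.
    s = sum(math.sin(math.radians(a)) for a in angles_deg)
    c = sum(math.cos(math.radians(a)) for a in angles_deg)
    return math.degrees(math.atan2(s, c)) % 360.0


def sample_direction(mic, samples=10, delay=0.05):
    """Poll mic.direction while voice is active and return the mean angle.

    Returns None if no voice activity was seen during the sampling window.
    """
    readings = []
    for _ in range(samples):
        if mic.is_voice():
            readings.append(mic.direction)
        time.sleep(delay)
    return circular_mean_deg(readings) if readings else None
```

With real hardware, `mic` would be the object returned by `find()` in the program above. When two arrays are attached they share the same vendor and product IDs, so `usb.core.find(find_all=True, idVendor=0x2886, idProduct=0x0018)` can be used to enumerate both and wrap each one in its own `Tuning` instance.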