Common current limiting algorithms

Counter algorithm

The counter algorithm uses the counter to limit the current, which is a little simple and rough. Generally, we will limit the number of requests that can pass in one second. For example, the current limit qps is 60. The implementation idea of the algorithm is to add a counter. In the next 1s, for each request, add 1 to the counter. If the accumulated number reaches 60, all subsequent requests will be rejected. After 1s, restore the counter to 0 and restart counting. The specific implementation can be as follows: for each service call, you can add 1 to the counter and return the latest value through the incrementAndGet() method in AtomicLong, but this has a disadvantage and is vulnerable to attack. For example, if someone sends 60 requests at the beginning of 1s, your normal requests will be intercepted

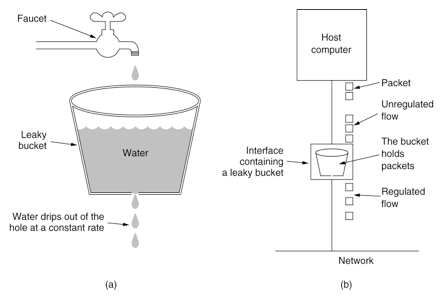

Leaky bucket algorithm

In order to eliminate the "spike phenomenon", the leaky bucket algorithm can be used to limit the flow. The name of the leaky bucket algorithm is very vivid. There is a container inside the algorithm, which is similar to the funnel used in life. When the request comes in, it is equivalent to water pouring into the funnel, and then flowing out slowly and uniformly from the small opening at the lower end. No matter how much flow is above, the speed of outflow from below remains unchanged. No matter how unstable the service caller is, the flow is limited by the leaky bucket algorithm, and the request is processed every 10 milliseconds. Because the processing speed is fixed and the incoming speed of requests is unknown, many requests may come in suddenly. Requests that have not been processed in time are put in the bucket first. Since it is a bucket, there must be a capacity limit. If the bucket is full, new incoming requests will be discarded.

In terms of algorithm implementation, a queue can be prepared to save requests. In addition, a scheduled executorservice can periodically obtain requests from the queue and execute them. Multiple concurrent executions can be obtained at one time.

This algorithm also has disadvantages after use: it can not deal with short-time burst traffic.

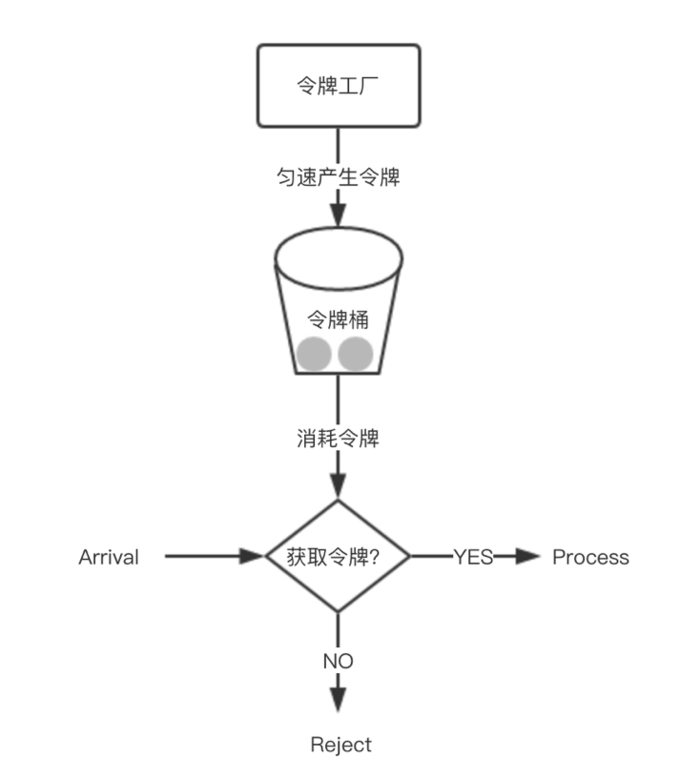

Token Bucket

In a sense, token bucket algorithm is an improvement of leaky bucket algorithm. Bucket algorithm can limit the rate of request calls, while token bucket algorithm can limit the average rate of calls and allow a certain degree of burst calls. In the token bucket algorithm, there is a bucket for storing a fixed number of tokens. There is a mechanism in the algorithm, which puts tokens into the bucket at a certain rate. Each request call needs to obtain the token first. Only when you get the token can you have the opportunity to continue execution. Otherwise, you can choose to wait for the available token or refuse directly. The action of putting tokens is continuous. If the number of tokens in the bucket reaches the upper limit, the tokens will be discarded. Therefore, this situation exists. There are a large number of available tokens in the bucket. At this time, incoming requests can be directly executed with the tokens. For example, if qps is set to 100, there will be 100 tokens in the bucket one second after the initialization of the current limiter, At this time, the service has not been fully started. When the service is provided, the current limiter can resist 100 instantaneous requests. Therefore, only when there is no token in the bucket will the request wait, and finally it is equivalent to executing at a certain rate.

Implementation idea: a queue can be prepared to save tokens. In addition, tokens are regularly generated through a thread pool and placed in the queue. For each request, a token is obtained from the queue and continues to be executed.

Spring Cloud Gateway current limiting

Firstly, the start dependency of gateway and the reactive dependency of redis are introduced into the pom file of the project. The code is as follows:

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-gateway</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifatId>spring-boot-starter-data-redis-reactive</artifactId>

</dependency>

Make the following configuration in the configuration file:

server:

port: 8081

spring:

cloud:

gateway:

routes:

- id: limit_route

uri: http://httpbin.org:80/get

predicates:

- After=2017-01-20T17:42:47.789-07:00[America/Denver]

filters:

- name: RequestRateLimiter

args:

key-resolver: '#{@hostAddrKeyResolver}'

redis-rate-limiter.replenishRate: 1

redis-rate-limiter.burstCapacity: 3

application:

name: gateway-limiter

redis:

host: localhost

port: 6379

database: 0

In the above configuration file, the port of the specified program is 8081, redis information is configured, and the current limiting filter of RequestRateLimiter is configured. The filter needs to be configured with three parameters:

burstCapacity, total capacity of token bucket.

replenishRate, the average rate of token bucket filling per second.

Key resolver, the name of the Bean object of the parser for the current limiting key. It uses a SpEL expression to get the Bean object from the Spring container according to #{@ beanName}.

KeyResolver needs to implement the resolve method. For example, if the current is limited according to Hostname, it needs to be judged by hostAddress. After implementing KeyResolver, you need to register the Bean of this class in the Ioc container.

public class HostAddrKeyResolver implements KeyResolver {

@Override

public Mono<String> resolve(ServerWebExchange exchange) {

return Mono.just(exchange.getRequest().getRemoteAddress().getAddress().getHostAddress());

}

}

@Bean

public HostAddrKeyResolver hostAddrKeyResolver() {

return new HostAddrKeyResolver();

}

You can limit the current according to the uri. At this time, the KeyResolver code is as follows:

public class UriKeyResolver implements KeyResolver {

@Override

public Mono<String> resolve(ServerWebExchange exchange) {

return Mono.just(exchange.getRequest().getURI().getPath());

}

}

@Bean

public UriKeyResolver uriKeyResolver() {

return new UriKeyResolver();

}

You can also limit the flow in the user's dimension:

@Bean

KeyResolver userKeyResolver() {

return exchange -> Mono.just(exchange.getRequest().getQueryParams().getFirst("user"));

}

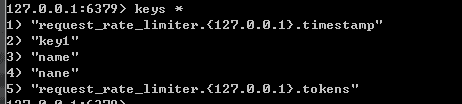

Use jmeter for pressure measurement, configure 10thread to request lcoalhost:8081, and the cycle interval is 1s. It can be seen from the pressure test results that some requests pass and some requests fail. Check the key s in redis through the redis client. As follows:

It can be seen that RequestRateLimiter uses redis for current limiting, and stores two keys in redis. Pay attention to the meaning of these two keys. You can see the lua source code.