More and more Internet companies are moving their microservices to the cloud. Mature cloud container management platforms make microservice clusters easier to manage, which improves both the stability of the services and the team's development efficiency. Migrating to the cloud does come with technical hurdles, however. This article walks through building a SpringBoot example from scratch and deploying it on K8s.

Basic environment:

- macOS

- A simple SpringBoot web project

Setting up the minikube environment

First, install a k8s environment suited to beginners. The recommended tool is minikube, which runs a single-node cluster locally and lowers the barrier to learning k8s.

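Start by downloading the minikube binary. Since this tutorial runs on macOS, download the `darwin` build (the `minikube-linux-amd64` file is for Linux hosts). v1.16.0 is the version that appears in the logs below:

```shell
curl -Lo minikube https://github.com/kubernetes/minikube/releases/download/v1.16.0/minikube-darwin-amd64 && chmod +x minikube && sudo mv minikube /usr/local/bin/
```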

When starting minikube, you may get stuck downloading the base image, with errors like the following:

```text
[idea @ Mac]>>>>>>minikube start --registry-mirror=https://w4i0ckag.mirror.aliyuncs.com
😄 minikube v1.16.0 on Darwin 10.15.3
✨ Using the docker driver based on existing profile
👍 Starting control plane node minikube in cluster minikube
🚜 Pulling base image ...
E0126 17:03:30.131026 34416 cache.go:180] Error downloading kic artifacts: failed to download kic base image or any fallback image
🔥 Creating docker container (CPUs=2, Memory=1988MB) ...
🤦 StartHost failed, but will try again: creating host: create: creating: setting up container node: preparing volume for minikube container: docker run --rm --entrypoint /usr/bin/test -v minikube:/var gcr.io/k8s-minikube/kicbase:v0.0.15-snapshot4@sha256:ef1f485b5a1cfa4c989bc05e153f0a8525968ec999e242efff871cbb31649c16 -d /var/lib: exit status 125
stdout:
stderr:
Unable to find image 'gcr.io/k8s-minikube/kicbase:v0.0.15-snapshot4@sha256:ef1f485b5a1cfa4c989bc05e153f0a8525968ec999e242efff871cbb31649c16' locally
docker: Error response from daemon: Get https://gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers).
See 'docker run --help'.

🤷 docker "minikube" container is missing, will recreate.
🔥 Creating docker container (CPUs=2, Memory=1988MB) ...
😿 Failed to start docker container. Running "minikube delete" may fix it: recreate: creating host: create: creating: setting up container node: preparing volume for minikube container: docker run --rm --entrypoint /usr/bin/test -v minikube:/var gcr.io/k8s-minikube/kicbase:v0.0.15-snapshot4@sha256:ef1f485b5a1cfa4c989bc05e153f0a8525968ec999e242efff871cbb31649c16 -d /var/lib: exit status 125
stdout:
stderr:
Unable to find image 'gcr.io/k8s-minikube/kicbase:v0.0.15-snapshot4@sha256:ef1f485b5a1cfa4c989bc05e153f0a8525968ec999e242efff871cbb31649c16' locally
docker: Error response from daemon: Get https://gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers).
See 'docker run --help'.

❌ Exiting due to GUEST_PROVISION: Failed to start host: recreate: creating host: create: creating: setting up container node: preparing volume for minikube container: docker run --rm --entrypoint /usr/bin/test -v minikube:/var gcr.io/k8s-minikube/kicbase:v0.0.15-snapshot4@sha256:ef1f485b5a1cfa4c989bc05e153f0a8525968ec999e242efff871cbb31649c16 -d /var/lib: exit status 125
stdout:
stderr:
Unable to find image 'gcr.io/k8s-minikube/kicbase:v0.0.15-snapshot4@sha256:ef1f485b5a1cfa4c989bc05e153f0a8525968ec999e242efff871cbb31649c16' locally
docker: Error response from daemon: Get https://gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers).
See 'docker run --help'.

😿 If the above advice does not help, please let us know:
👉 https://github.com/kubernetes/minikube/issues/new/choose
```

In that case, try pulling the base image onto the host first. The author uses the `anjone/kicbase` image from Docker Hub in place of the gcr.io kicbase image:

```shell
docker pull anjone/kicbase
```

minikube can then use the local image at startup instead of downloading it, which also makes `minikube start` faster.

Once minikube is installed, start the cluster with the local base image:

```shell
minikube start --vm-driver=docker --base-image="anjone/kicbase"
```

If startup still fails, also point minikube at mirror registries, for example:

```shell
minikube start --registry-mirror=https://bmtb46e4.mirror.aliyuncs.com --vm-driver=docker --base-image="anjone/kicbase" --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers
```

What the startup flags mean:

- `--registry-mirror`: the registry mirror used by the Docker daemon inside the minikube node, so image pulls made inside the cluster go through this address.

- `--vm-driver`: the driver minikube uses to run the cluster. With `docker`, the Kubernetes node itself runs as a Docker container on the host. Newer minikube releases prefer the equivalent `--driver` flag.

- `--base-image`: the base image for the node container. If the image already exists on the host, minikube does not need to pull it.

- `--image-repository`: an alternative registry from which to pull the Kubernetes component images, useful when gcr.io is unreachable.
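The two start commands above amount to a small fallback strategy: make sure the base image is on the host, try a plain start, and only add the Aliyun mirror flags if that fails. Below is a minimal Java sketch of that flow, assuming `docker` and `minikube` are on the `PATH`. The mirror addresses are simply the ones from the command above, and `MinikubeStarter` is a hypothetical helper, not part of minikube.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper sketching the start-up fallback described above.
// Assumes `docker` and `minikube` are on the PATH; flag values are copied from the article.
class MinikubeStarter {

    static final String BASE_IMAGE = "anjone/kicbase";

    // Arguments for `minikube start`; the Aliyun mirror flags are only added for the fallback attempt.
    static List<String> startArgs(boolean useMirrors) {
        List<String> args = new ArrayList<>(List.of(
                "minikube", "start", "--vm-driver=docker", "--base-image=" + BASE_IMAGE));
        if (useMirrors) {
            args.add("--registry-mirror=https://bmtb46e4.mirror.aliyuncs.com");
            args.add("--image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers");
        }
        return args;
    }

    // Runs a command with its output shown in this terminal and returns the exit code.
    static int run(List<String> command) throws IOException, InterruptedException {
        return new ProcessBuilder(command).inheritIO().start().waitFor();
    }

    // `docker image inspect` exits with a non-zero status when the image is not present locally.
    static boolean imagePresent(String image) throws IOException, InterruptedException {
        Process p = new ProcessBuilder("docker", "image", "inspect", image)
                .redirectOutput(ProcessBuilder.Redirect.DISCARD)
                .redirectError(ProcessBuilder.Redirect.DISCARD)
                .start();
        return p.waitFor() == 0;
    }

    public static void main(String[] args) throws Exception {
        // Pre-pull the base image only if it is missing on the host.
        if (!imagePresent(BASE_IMAGE) && run(List.of("docker", "pull", BASE_IMAGE)) != 0) {
            System.err.println("Could not pull " + BASE_IMAGE);
            System.exit(1);
        }
        // Try the plain start first, then retry with the mirror flags.
        if (run(startArgs(false)) != 0) {
            System.err.println("Plain start failed; retrying with the Aliyun mirrors");
            System.exit(run(startArgs(true)));
        }
    }
}
```

Run it with `java MinikubeStarter.java` (Java 11 or later). A short shell script would do the same job; Java is used here only to stay in the project's language.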

A successful start looks roughly like this:

```text
[idea @ Mac]>>>>>>minikube start --vm-driver=docker --base-image="anjone/kicbase"
😄 minikube v1.16.0 on Darwin 10.15.3
✨ Using the docker driver based on existing profile
👍 Starting control plane node minikube in cluster minikube
🤷 docker "minikube" container is missing, will recreate.
🔥 Creating docker container (CPUs=2, Memory=1988MB) ...
❗ This container is having trouble accessing https://k8s.gcr.io
💡 To pull new external images, you may need to configure a proxy: https://minikube.sigs.k8s.io/docs/reference/networking/proxy/
🐳 Preparing Kubernetes v1.20.0 on Docker 19.03.2 ...
    ▪ Generating certificates and keys ...
    ▪ Booting up control plane ...
    ▪ Configuring RBAC rules ...
🔎 Verifying Kubernetes components...
🌟 Enabled addons: default-storageclass
🏄 Done! kubectl is now configured to use "minikube" cluster and "default" namespace by default
[idea @ Mac]>>>>>>
```

With the cluster running, we can move on to deploying the SpringBoot application.

Deploying the SpringBoot application to k8s

First, build a simple SpringBoot application.

Add the web starter dependency:

```xml
<dependencies>
    <!-- No <version> here: assumes the project inherits from spring-boot-starter-parent, which manages it -->
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
</dependencies>
```

Build configuration for packaging the jar and the Docker image:

```xml
<build>
    <!-- Name of the packaged jar; the Dockerfile below expects springboot-k8s-template.jar -->
    <finalName>springboot-k8s-template</finalName>
    <plugins>
        <plugin>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-maven-plugin</artifactId>
            <version>2.2.5.RELEASE</version>
        </plugin>
        <!-- Docker maven plugin -->
        <plugin>
            <groupId>com.spotify</groupId>
            <artifactId>docker-maven-plugin</artifactId>
            <version>1.0.0</version>
            <configuration>
                <imageName>${project.artifactId}</imageName>
                <imageTags>
                    <tag>1.0.1</tag>
                </imageTags>
                <dockerDirectory>src/main/docker</dockerDirectory>
                <resources>
                    <resource>
                        <targetPath>/</targetPath>
                        <directory>${project.build.directory}</directory>
                        <include>${project.build.finalName}.jar</include>
                    </resource>
                </resources>
            </configuration>
        </plugin>
        <!-- Docker maven plugin -->
    </plugins>
</build>
```

Next, a simple controller and the startup class:

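```java
// TestController.java
// Keep it in the same package as WebApplication (or a sub-package) so component scanning finds it.
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping(value = "/test")
public class TestController {

    // GET /test/do-test: writes a line to stdout (visible via kubectl logs) and returns "success"
    @GetMapping(value = "/do-test")
    public String doTest() {
        System.out.println("this is a test");
        return "success";
    }
}

// WebApplication.java
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication
public class WebApplication {

    public static void main(String[] args) {
        SpringApplication.run(WebApplication.class, args);
    }
}
```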

The Dockerfile:

```dockerfile
FROM openjdk:8-jdk-alpine
VOLUME /tmp
# Copy springboot-k8s-template.jar into the container, renaming it to springboot-k8s-template-v1.jar
ADD springboot-k8s-template.jar springboot-k8s-template-v1.jar
# Run the jar when the container starts; -Djava.security.egd=file:/dev/./urandom seeds SecureRandom
# from the non-blocking /dev/urandom, which avoids slow startups
ENTRYPOINT ["java","-Djava.security.egd=file:/dev/./urandom","-jar","/springboot-k8s-template-v1.jar"]
```

Then, from the directory containing the Dockerfile (with the built jar next to it), build the image:

```text
[idea @ Mac]>>>>>>docker build -t springboot-k8s-template:1.0 .
[+] Building 0.5s (7/7) FINISHED
 => [internal] load build definition from Dockerfile 0.0s
 => => transferring dockerfile: 419B 0.0s
 => [internal] load .dockerignore 0.0s
 => => transferring context: 2B 0.0s
 => [internal] load metadata for docker.io/library/openjdk:8-jdk-alpine 0.0s
 => [internal] load build context 0.3s
 => => transferring context: 17.60MB 0.3s
 => CACHED [1/2] FROM docker.io/library/openjdk:8-jdk-alpine 0.0s
 => [2/2] ADD springboot-k8s-template.jar springboot-k8s-template-v1.jar 0.1s
 => exporting to image 0.1s
 => => exporting layers 0.1s
 => => writing image sha256:86d02961c4fa5bb576c91e3ebf031a3d8b140ddbb451b9613a2c4d601ac4d853 0.0s
 => => naming to docker.io/library/springboot-k8s-template:1.0 0.0s

Use 'docker scan' to run Snyk tests against images to find vulnerabilities and learn how to fix them
[idea @ Mac]>>>>>>docker images | grep template
springboot-k8s-template   1.0   86d02961c4fa   48 seconds ago   122MB
```

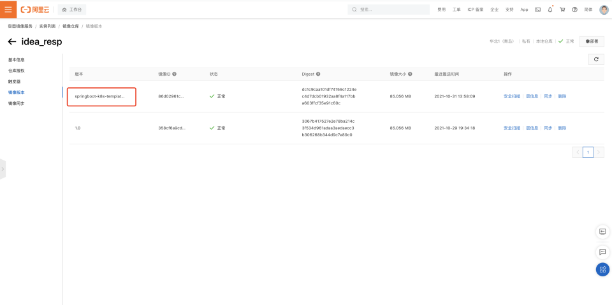

Once the build finishes, publish the local image to an image registry. Here I push it to Alibaba Cloud's container image registry.

Pushing the local image to Alibaba Cloud

First log in to the registry, then tag the image with the registry address, and finally push it:

```shell
$ docker login --username=[Alibaba Cloud account name] registry.cn-qingdao.aliyuncs.com
$ docker tag [ImageId] registry.cn-qingdao.aliyuncs.com/idea_hub/idea_resp:[image version number]
$ docker push registry.cn-qingdao.aliyuncs.com/idea_hub/idea_resp:[image version number]
```

For example:

```text
[idea @ Mac]>>>>>>docker images | grep config
qiyu-framework-k8s-config   1.0   6168639757e9   2 minutes ago   122MB
[idea @ Mac]>>>>>>docker tag 6168639757e9 registry.cn-qingdao.aliyuncs.com/idea_hub/idea_resp:qiyu-framework-k8s-config-1.0
[idea @ Mac]>>>>>>docker push registry.cn-qingdao.aliyuncs.com/idea_hub/idea_resp:qiyu-framework-k8s-config-1.0
The push refers to repository [registry.cn-qingdao.aliyuncs.com/idea_hub/idea_resp]
1ace00556b41: Pushed
ceaf9e1ebef5: Layer already exists
9b9b7f3d56a0: Layer already exists
f1b5933fe4b5: Layer already exists
qiyu-framework-k8s-config-1.0: digest: sha256:50c1a87484f6cbec699d65321fa5bbe70f5ad6da5a237e95ea87c7953a1c80da size: 1159
[idea @ Mac]>>>>>>
```

Replace the `[ImageId]` and `[image version number]` placeholders in the commands with your own image's values.
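The tagged name packs three pieces of information: the registry host (`registry.cn-qingdao.aliyuncs.com`), the repository (`idea_hub/idea_resp`), and the tag after the last colon. The sketch below splits a reference the same way, assuming Docker's usual conventions. The first path component counts as a registry only if it contains a `.` or `:` or is `localhost`. Otherwise Docker Hub is implied (`docker.io`, with `library/` for single-name images, as in the build log above), and a missing tag means `latest`. `ImageRef` is a hypothetical helper, and digests (`@sha256:...`) are deliberately not handled.

```java
// Hypothetical helper: minimal parser for image references such as
// registry.cn-qingdao.aliyuncs.com/idea_hub/idea_resp:1.0 (digest references are not handled).
class ImageRef {

    final String registry;
    final String repository;
    final String tag;

    ImageRef(String registry, String repository, String tag) {
        this.registry = registry;
        this.repository = repository;
        this.tag = tag;
    }

    static ImageRef parse(String ref) {
        String registry = "docker.io";
        String rest = ref;
        int slash = ref.indexOf('/');
        if (slash > 0) {
            String first = ref.substring(0, slash);
            // The first component is a registry host only if it looks like one.
            if (first.contains(".") || first.contains(":") || first.equals("localhost")) {
                registry = first;
                rest = ref.substring(slash + 1);
            }
        }
        // The tag follows the last ':' of the final path component; the default is "latest".
        String tag = "latest";
        int colon = rest.lastIndexOf(':');
        if (colon > rest.lastIndexOf('/')) {
            tag = rest.substring(colon + 1);
            rest = rest.substring(0, colon);
        }
        // Single-name Docker Hub images live under "library/" (e.g. docker.io/library/openjdk).
        if (registry.equals("docker.io") && !rest.contains("/")) {
            rest = "library/" + rest;
        }
        return new ImageRef(registry, rest, tag);
    }

    @Override
    public String toString() {
        return registry + "/" + repository + ":" + tag;
    }

    public static void main(String[] args) {
        // prints registry.cn-qingdao.aliyuncs.com/idea_hub/idea_resp:qiyu-framework-k8s-config-1.0
        System.out.println(parse("registry.cn-qingdao.aliyuncs.com/idea_hub/idea_resp:qiyu-framework-k8s-config-1.0"));
        // prints docker.io/library/springboot-k8s-template:1.0
        System.out.println(parse("springboot-k8s-template:1.0"));
    }
}
```

Only images whose reference starts with the Alibaba Cloud host are pushed there, which is why the image has to be re-tagged before `docker push`.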

Once the image is in the registry, reference its full address in the `image` field of the yaml file so that k8s can pull it from the registry when it creates the pods.

A project usually keeps its deployment description in a single yaml file.

The yaml file (`k8s-springboot-template.yaml`):

```yaml
apiVersion: apps/v1 # run `kubectl api-versions` to list the API versions your cluster supports
kind: Deployment # resource type
metadata: # metadata of the resource
  name: springboot-k8s-template-deployment # name of the Deployment
  labels:
    app: springboot-k8s-template-deployment # label on the Deployment itself
spec:
  replicas: 2 # number of pods to create
  selector:
    matchLabels:
      app: springboot-k8s-template-deployment # the Deployment manages the pods that carry this label
  template:
    metadata:
      labels:
        app: springboot-k8s-template-deployment # must satisfy spec.selector, otherwise the API server rejects the Deployment
    spec:
      containers:
        - name: springboot-k8s-template-v1
          image: registry.cn-qingdao.aliyuncs.com/idea_hub/idea_resp:springboot-k8s-template-1.0 # same tag as the image pulled inside minikube below
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8080
```

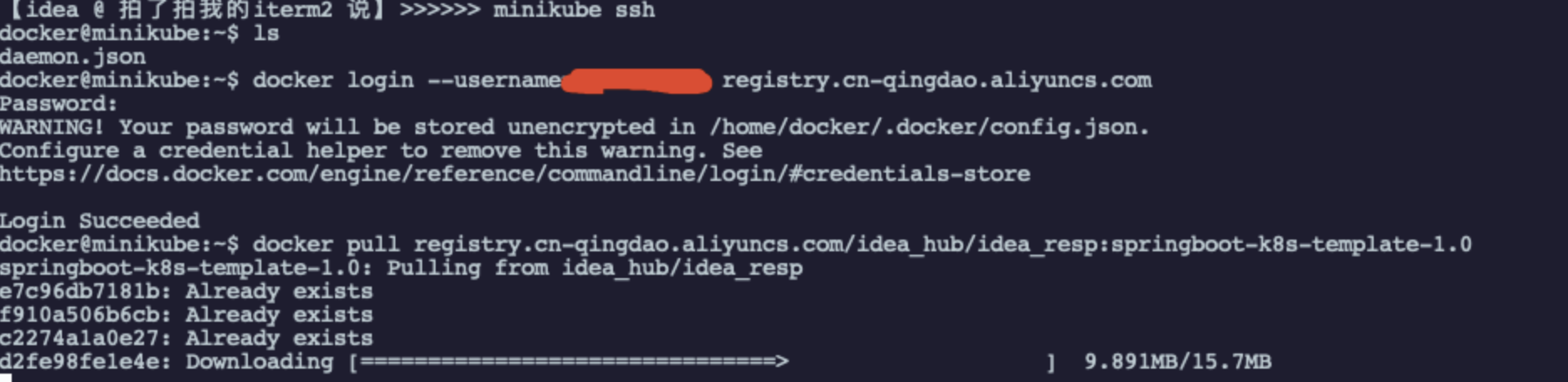
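A Deployment finds its pods through `spec.selector`, so the labels in `spec.template.metadata.labels` must satisfy that selector; for `apps/v1`, the API server rejects a Deployment whose selector does not match its template labels. With `matchLabels`, "match" means every key/value pair in the selector also appears on the pod, and extra pod labels are ignored. The sketch below models only that `matchLabels` rule (not `matchExpressions`) with plain maps; `LabelSelector` is a hypothetical name.

```java
import java.util.Map;

// Hypothetical helper: simplified model of a Deployment's spec.selector.matchLabels check.
// matchExpressions are not modelled.
class LabelSelector {

    // A pod matches when every key/value pair of the selector is present in its labels;
    // labels on the pod that the selector does not mention are ignored.
    static boolean matches(Map<String, String> matchLabels, Map<String, String> podLabels) {
        for (Map.Entry<String, String> entry : matchLabels.entrySet()) {
            if (!entry.getValue().equals(podLabels.get(entry.getKey()))) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        Map<String, String> selector = Map.of("app", "springboot-k8s-template-deployment");
        // A template labelled app=springboot-k8s-template-v1 does not satisfy this selector...
        System.out.println(matches(selector, Map.of("app", "springboot-k8s-template-v1"))); // false
        // ...while a template carrying the selector's label does, even with extra labels.
        System.out.println(matches(selector,
                Map.of("app", "springboot-k8s-template-deployment", "version", "v1"))); // true
    }
}
```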

Because the Alibaba Cloud repository requires an account and password, a simpler approach here is to log in to the minikube node and pull the image there in advance, so that k8s does not have to authenticate against the registry.

Log in to the minikube node with `minikube ssh`.

Then pull the image with `docker pull` (run `docker login` inside the node first if the repository is private):

```text
docker@minikube:~$ docker pull registry.cn-qingdao.aliyuncs.com/idea_hub/idea_resp:springboot-k8s-template-1.0
springboot-k8s-template-1.0: Pulling from idea_hub/idea_resp
e7c96db7181b: Already exists
f910a506b6cb: Already exists
c2274a1a0e27: Already exists
d2fe98fe1e4e: Pull complete
Digest: sha256:dc1c9caa101df74159c1224ec4d7dcb01932aa8f4a117bba603ffcf35e91c60c
Status: Downloaded newer image for registry.cn-qingdao.aliyuncs.com/idea_hub/idea_resp:springboot-k8s-template-1.0
registry.cn-qingdao.aliyuncs.com/idea_hub/idea_resp:springboot-k8s-template-1.0
docker@minikube:~$
```

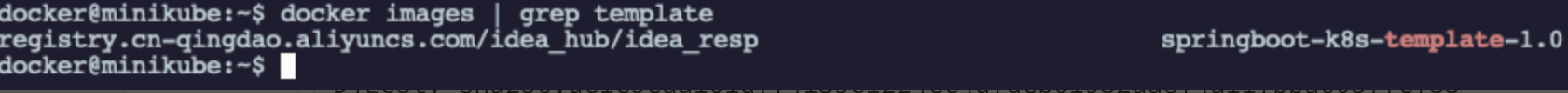

Checking the pulled image

For a full description of image pull policies, see the official documentation:

https://kubernetes.io/docs/concepts/containers/images/

The yaml file uses the `IfNotPresent` policy: if the image already exists on the node, the kubelet uses the local copy and does not pull it over the network. The `image` reference in the yaml must therefore match the locally pulled image exactly, tag included.
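The pull-policy rules from that page can be sketched as a small decision function. This is a simplified model, not kubelet code: `PullDecision` is a hypothetical name, `Always` is reduced to "contact the registry" (the real kubelet still reuses its cached image when the registry digest matches), and the default policy applies only when `imagePullPolicy` is omitted: `Always` for a `:latest` or missing tag, `IfNotPresent` otherwise.

```java
// Hypothetical helper: simplified model of the image pull policies on the Kubernetes "Images" page.
// This is an illustration, not kubelet code.
class PullDecision {

    enum Policy { ALWAYS, IF_NOT_PRESENT, NEVER }

    // Policy used when imagePullPolicy is omitted from the container spec.
    static Policy defaultPolicy(String image) {
        if (image.contains("@")) {
            return Policy.IF_NOT_PRESENT; // pinned by digest
        }
        // Look for the tag only in the last path component, so a registry port (host:5000/...) is not mistaken for one.
        String lastPart = image.substring(image.lastIndexOf('/') + 1);
        int colon = lastPart.lastIndexOf(':');
        String tag = colon < 0 ? "" : lastPart.substring(colon + 1);
        return (tag.isEmpty() || tag.equals("latest")) ? Policy.ALWAYS : Policy.IF_NOT_PRESENT;
    }

    // True when the registry has to be contacted before the container can start.
    static boolean mustContactRegistry(Policy policy, boolean presentLocally) {
        switch (policy) {
            case ALWAYS:
                return true; // the kubelet still reuses the cached image if the registry digest matches
            case IF_NOT_PRESENT:
                return !presentLocally;
            case NEVER:
                if (!presentLocally) {
                    // The kubelet reports this situation as ErrImageNeverPull.
                    throw new IllegalStateException("image not present locally and imagePullPolicy is Never");
                }
                return false;
            default:
                throw new AssertionError(policy);
        }
    }

    public static void main(String[] args) {
        String image = "registry.cn-qingdao.aliyuncs.com/idea_hub/idea_resp:springboot-k8s-template-1.0";
        // Image already pulled inside minikube + IfNotPresent: no registry access (and no credentials) needed.
        System.out.println(mustContactRegistry(Policy.IF_NOT_PRESENT, true)); // false
        System.out.println(defaultPolicy(image));                            // IF_NOT_PRESENT
        System.out.println(defaultPolicy("nginx"));                          // ALWAYS
    }
}
```

The first case is the one this tutorial relies on: because the image is already on the minikube node, `IfNotPresent` never asks the private registry for it.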

Next, create the Deployment from the yaml file:

```shell
kubectl create -f ./k8s-springboot-template.yaml
```

You can then list the pods it created with `kubectl get pods`.

Finally, expose the Deployment as a service. `--type=NodePort` opens a port on the node that forwards to the pods' port 8080. With the Docker driver on macOS, `minikube service` additionally opens a tunnel on 127.0.0.1, and the terminal has to stay open while you use it:

```text
[idea @ Mac]>>>>>>kubectl expose deployment springboot-k8s-template-deployment --type=NodePort
service/springboot-k8s-template-deployment exposed
[idea @ Mac]>>>>>>kubectl get pods
NAME                                                  READY   STATUS    RESTARTS   AGE
springboot-k8s-template-deployment-687f8bf86d-gqxcp   1/1     Running   0          7m50s
springboot-k8s-template-deployment-687f8bf86d-lcq5p   1/1     Running   0          7m50s
[idea @ Mac]>>>>>>minikube service springboot-k8s-template-deployment
|-----------|------------------------------------|-------------|---------------------------|
| NAMESPACE | NAME                               | TARGET PORT | URL                       |
|-----------|------------------------------------|-------------|---------------------------|
| default   | springboot-k8s-template-deployment | 8080        | http://192.168.49.2:31179 |
|-----------|------------------------------------|-------------|---------------------------|
🏃 Starting tunnel for service springboot-k8s-template-deployment.
|-----------|------------------------------------|-------------|------------------------|
| NAMESPACE | NAME                               | TARGET PORT | URL                    |
|-----------|------------------------------------|-------------|------------------------|
| default   | springboot-k8s-template-deployment |             | http://127.0.0.1:57109 |
|-----------|------------------------------------|-------------|------------------------|
🎉 Opening service default/springboot-k8s-template-deployment in default browser...
❗ Because you are using a Docker driver on darwin, the terminal needs to be open to run it.
```

After exposing the service, open the following URL (the port is the one printed by `minikube service`):

http://127.0.0.1:57109/test/do-test

The endpoint should return `success`.

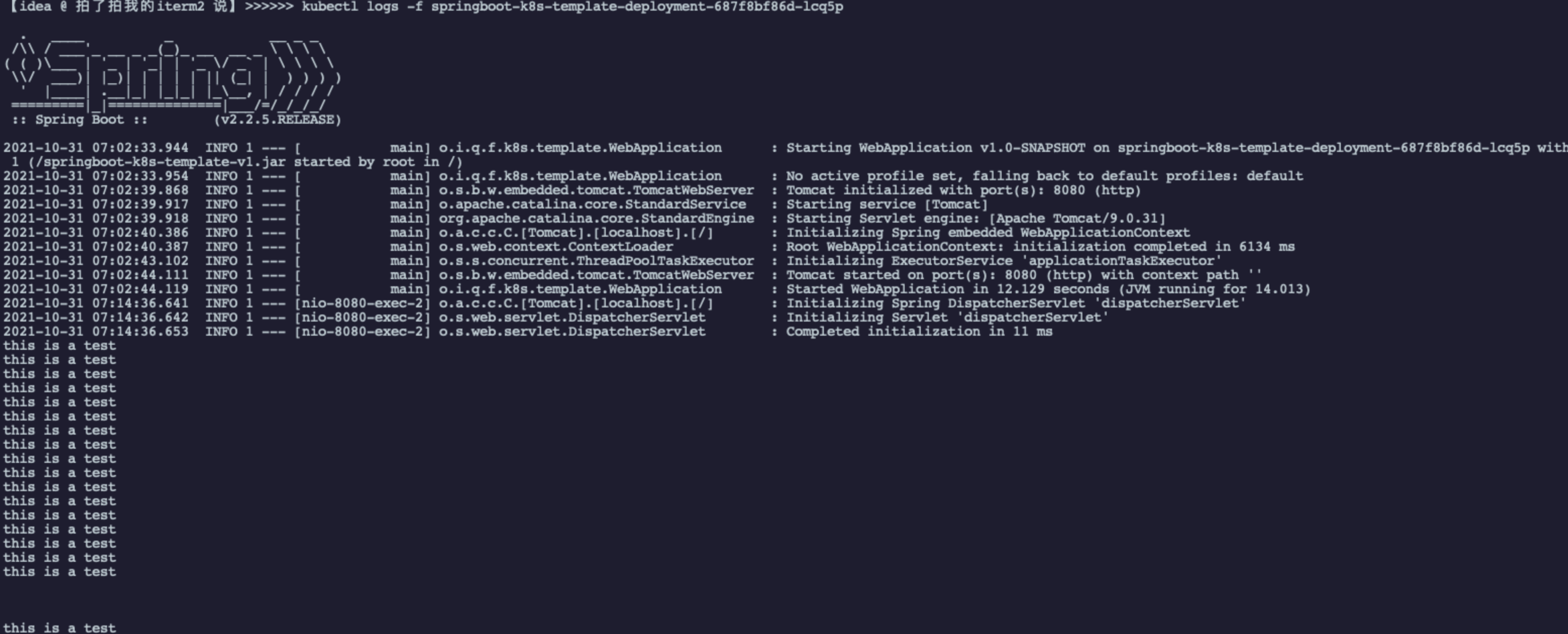
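The manual check can also be scripted. Below is a minimal sketch using the JDK's `java.net.http.HttpClient` (Java 11+). It assumes the endpoint answers HTTP 200 with the body `success`, as the controller above does, and takes the base URL printed by `minikube service` as its first argument, since the tunnel port may differ between runs. `SmokeCheck` is a hypothetical class name.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Hypothetical helper: smoke check for the exposed service. Sends GET <baseUrl>/test/do-test
// and expects HTTP 200 with the body "success", which is what TestController returns.
class SmokeCheck {

    private static final HttpClient CLIENT = HttpClient.newBuilder()
            .version(HttpClient.Version.HTTP_1_1)
            .connectTimeout(Duration.ofSeconds(5))
            .build();

    static boolean check(String baseUrl) throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(URI.create(baseUrl + "/test/do-test"))
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        HttpResponse<String> response = CLIENT.send(request, HttpResponse.BodyHandlers.ofString());
        return response.statusCode() == 200 && "success".equals(response.body());
    }

    public static void main(String[] args) throws Exception {
        // Pass the URL printed by `minikube service`; the default is the one from this article's run.
        String baseUrl = args.length > 0 ? args[0] : "http://127.0.0.1:57109";
        boolean ok = check(baseUrl);
        System.out.println(ok ? "OK" : "FAILED");
        System.exit(ok ? 0 : 1);
    }
}
```

Usage: `java SmokeCheck.java http://127.0.0.1:57109`. The exit code makes it easy to call from a script after each redeploy.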

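To follow the application's logs (including the `this is a test` line printed by the controller), run `kubectl logs` against one of the pods:

```shell
kubectl logs -f springboot-k8s-template-deployment-687f8bf86d-lcq5p
```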