Introduction: Course Overview

Reprint (invasion and deletion): https://gitee.com/eson15/springboot_study

Course catalogue

- Introduction: Course Overview

- Lesson 01: Spring Boot development environment setup and project startup

- Lesson 02: Spring Boot returns Json data and data encapsulation

- Lesson 03: Spring Boot using slf4j for logging

- Lesson 04: project property configuration in Spring Boot

- Lesson 05: MVC support in Spring Boot

- Lesson 06: Spring Boot integrates Swagger2 to present online interface documents

- Lesson 07: Spring Boot integrates Thymeleaf template engine

- Lesson 08: Global exception handling in Spring Boot

- Lesson 09: facet AOP processing in Spring Boot

- Lesson 10: integrating MyBatis in Spring Boot

- Lesson 11: Spring Boot transaction configuration management

- Lesson 12: using listeners in Spring Boot

- Lesson 13: using interceptors in Spring Boot

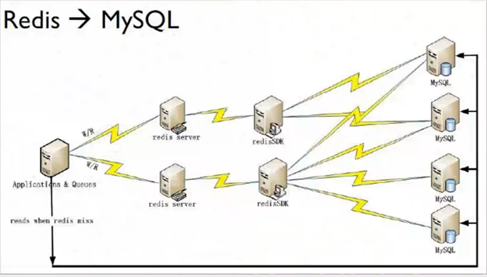

- Lesson 14: integrating Redis in Spring Boot

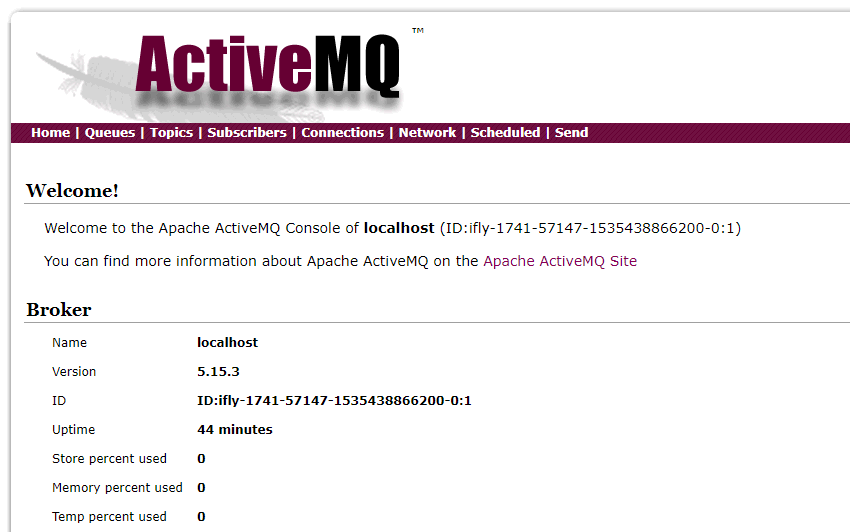

- Lesson 15: integrating ActiveMQ in Spring Boot

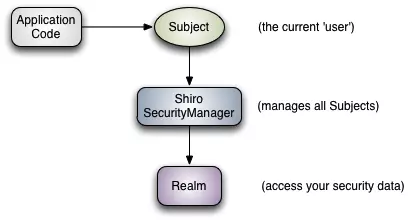

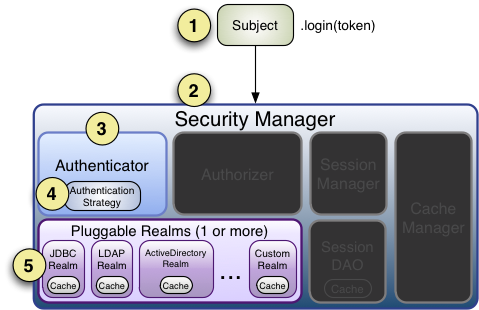

- Lesson 16: integrating Shiro in Spring Boot

- Lesson 17: Lucence in Spring Boot

- Lesson 18: Spring Boot architecture in actual project development

1. What is spring boot

We know that Spring has been developing rapidly since 2002, and now it has become a real standard in the development of Java EE (Java Enterprise Edition). However, with the development of technology, the use of Spring in Java EE has gradually become cumbersome, and a large number of XML files exist in the project. Cumbersome configuration and the configuration problem of integrating the third-party framework lead to the reduction of development and deployment efficiency.

In October 2012, Mike Youngstrom created a function request in Spring jira to support the container less Web application architecture in the spring framework. He talked about configuring Web container services within the main container boot spring container. This is an excerpt from jira's request:

I think Spring's Web application architecture can be greatly simplified if it provides tools and reference architecture to use Spring components and configuration model from top to bottom. Embed and unify the configuration of these common Web container services in the Spring container guided by the simple. main() method.

This requirement prompted the research and development of the Spring Boot project started in early 2013. Today, the version of Spring Boot has reached 2.0.3 RELEASE. Spring Boot is not a solution to replace spring, but a tool closely combined with the spring framework to improve the spring developer experience.

It integrates a large number of commonly used third-party library configurations. In Spring Boot applications, these third-party libraries can be almost out of the box with zero configuration. Most Spring Boot applications only need a very small amount of configuration code (Java based configuration), and developers can focus more on business logic.

2. Why learn Spring Boot

2.1 from the official point of view of Spring

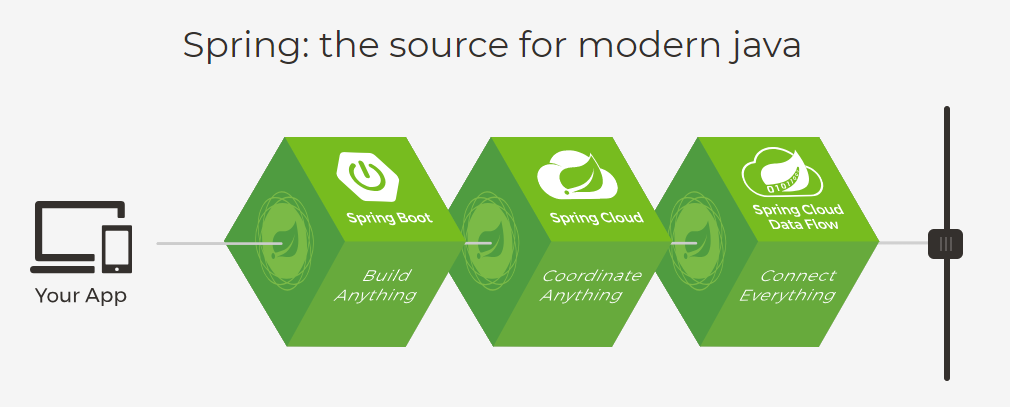

We open Spring's Official website , you can see the following figure:

We can see the official positioning of Spring Boot in the figure: Build Anything, Build Anything. Spring Boot is designed to start and run as quickly as possible, with minimal pre spring configuration. At the same time, let's take a look at the official positioning of the latter two:

Spring cloud: Coordinate Anything;

SpringCloud Data Flow: Connect everything.

Carefully taste the wording of Spring Boot, Spring cloud and Spring cloud data flow on the official website of Spring. At the same time, it can be seen that Spring officials attach great importance to these three technologies and are the focus of learning now and in the future (the courses related to Spring cloud will also be launched at that time).

2.2 from the advantages of Spring Boot

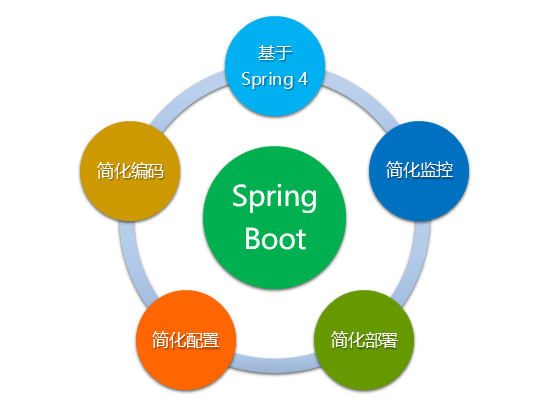

What are the advantages of Spring Boot? What problems have been solved for us? Let's illustrate with the following figure:

2.2.1 good genes

Spring Boot was born with Spring 4.0. Literally, Boot means Boot. Therefore, spring Boot aims to help developers quickly build the spring framework. Spring Boot inherits the excellent gene of the original spring framework, making spring more convenient and fast in use.

2.2.2 simplified coding

For example, if we want to create a web project, friends who use Spring know that when using Spring, we need to add multiple dependencies in the pom file, and Spring Boot will help develop a web container to quickly start. In Spring Boot, we just need to add the following starter web dependency in the pom file.

<dependency> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-starter-web</artifactId> </dependency>

After clicking to enter the dependency, we can see that the starter web of Spring Boot already contains multiple dependencies, including those that need to be imported in the Spring project. Let's take a look at some of them, as follows:

<!-- .....Omit other dependencies -->

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-web</artifactId>

<version>5.0.7.RELEASE</version>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-webmvc</artifactId>

<version>5.0.7.RELEASE</version>

<scope>compile</scope>

</dependency>

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

It can be seen that Spring Boot greatly simplifies our coding. We don't need to import dependencies one by one, just one dependency.

2.2.3 simplified configuration

Although spring makes Java EE a lightweight framework, it was once considered a "configuration hell" because of its cumbersome configuration. Various XML and Annotation configurations will dazzle people, and if there are many configurations, it is difficult to find the reason if there is an error. Spring Boot uses Java Config to configure spring. for instance:

I create a new class, but I don't need @ Service annotation, that is, it is an ordinary class. So how can we make it a Bean and let Spring manage it? Only @ Configuration , and @ Bean annotations are required, as follows:

public class TestService {

public String sayHello () {

return "Hello Spring Boot!";

}

}

- 1

- 2

- 3

- 4

- 5

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

@Configuration

public class JavaConfig {

@Bean

public TestService getTestService() {

return new TestService();

}

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

@Configuration indicates that this class is a configuration class, @ Bean indicates that this method returns a Bean. In this way, TestService is managed by Spring as a Bean. In other places, if we need to use the Bean, we can use it directly by injecting @ Resource annotation as before, which is very convenient.

@Resource private TestService testService;

- 1

- 2

In addition, in terms of deployment configuration, the original Spring has multiple xml and properties configurations, and only one application is required in Spring Boot YML is enough.

2.2.4 simplified deployment

When using Spring, we need to deploy tomcat on the server during project deployment, and then type the project into a war package and throw it into tomcat. After using Spring Boot, we don't need to deploy tomcat on the server, because tomcat is embedded in Spring Boot. We only need to type the project into a jar package and use Java - jar XXX Jar one click launch project.

In addition, it also reduces the basic requirements for the operating environment. JDK can be included in the environment variable.

2.2.5 simplified monitoring

We can introduce the Spring Boot start actor dependency and directly use REST to obtain the runtime performance parameters of the process, so as to achieve the purpose of monitoring, which is more convenient. However, Spring Boot is only a micro framework, which does not provide the corresponding supporting functions of service discovery and registration, no peripheral monitoring integration scheme, and no peripheral security management scheme. Therefore, Spring Cloud is also required to be used together in the micro service architecture.

2.3 from the perspective of future development trend

Microservices are the trend of future development. The project will slowly shift from traditional architecture to microservice architecture, because microservices can enable different teams to focus on a smaller range of work responsibilities, use independent technologies, and deploy more safely and frequently. It inherits the excellent features of spring, comes down in one continuous line with spring, and supports the implementation of various rest APIs. Spring Boot is also a technology strongly recommended by the government. It can be seen that Spring Boot is a general trend in the future.

3. What can be learned from this course

This course uses the latest version of Spring Boot 2.0.3 RELEASE. The course articles are scenes and demo s separated from the actual project by the author. The goal is to lead learners to quickly get started with Spring Boot and quickly apply Spring Boot related technical points to microservice projects. The whole chapter is divided into two parts: basic chapter and advanced chapter.

The basic part (lessons 01-10) mainly introduces some of the most commonly used function points of Spring Boot in projects, which aims to lead learners to quickly master the knowledge points needed in the development of Spring Boot and be able to apply Spring Boot related technologies to the actual project architecture. This part takes the Spring Boot framework as the main line, including Json data encapsulation, logging, attribute configuration MVC Support, online documents, template engine, exception handling, AOP processing, persistence layer integration, etc.

The advanced part (lessons 11-17) mainly introduces the technical points of Spring Boot in the project, including some integrated components, in order to lead learners to quickly integrate and complete the corresponding functions when they encounter specific scenes in the project. This part takes the Spring Boot framework as the main line, including interceptors, listeners, caching, security authentication, word segmentation plug-ins, message queues and so on.

After carefully reading this series of articles, learners will quickly understand and master the most commonly used technical points of Spring Boot in the project. At the end of the course, the author will build an empty architecture of Spring Boot project based on the course content. This architecture is also separated from the actual project, and learners can use this architecture in the actual project, Have the ability to use Spring Boot for actual project development.

All source codes of the course are available for free download: Download address.

5. The course development environment and plug-ins

The development environment of this course:

- Development tool: IDEA 2017

- JDK version: JDK 1.8

- Spring Boot version: 2.0.3 RELEASE

- Maven version: 3.5.2

Plug ins involved:

- FastJson

- Swagger2

- Thymeleaf

- MyBatis

- Redis

- ActiveMQ

- Shiro

- Lucence

Lesson 01: Spring Boot development environment setup and project startup

The previous section introduced the features of Spring Boot. This section mainly explains and analyzes the configuration of Spring Boot jdk, the construction and startup of Spring Boot project, and the structure of Spring Boot project.

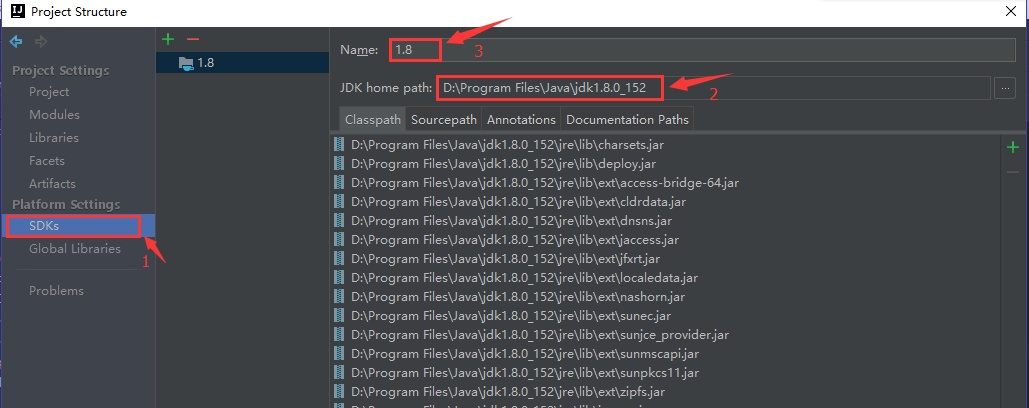

1. jdk configuration

This course is developed using the IDEA. The way to configure jdk in the IDEA is very simple. Open file - > project structure, as shown in the following figure:

- Select SDKs

- Select the installation directory of the local jdk in JDK home path

- Custom Name for jdk in Name

Through the above three steps, you can import the locally installed jdk. If you are a friend Using STS or eclipse, you can add it in two steps:

- Window - > preference - > java - > guided jres to add local jdk s.

- Window -- > preference -- > java -- > compiler selects jre, which is consistent with jdk.

2. Construction of spring boot project

2.1 IDEA quick build

In IDEA, you can quickly build a Spring Boot project through file - > New - > project. As follows, select Spring Initializr, select the jdk we just imported in the Project SDK, and click Next to the project configuration information.

- Group: fill in the enterprise domain name. This course uses com itcodai

- Artifact: fill in the project name. The project name of each course in this course is based on the command of course + course number. course01 is used here

- Dependencies: you can add the dependency information required in our project according to the actual situation. This course only needs to select Web.

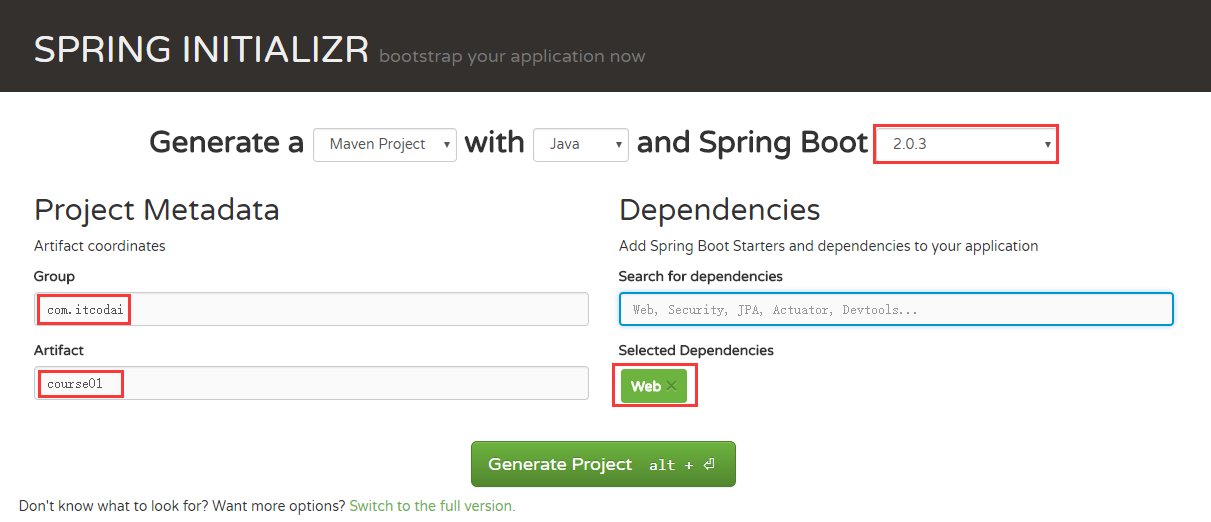

2.2 official construction

The second method can be built through official. The steps are as follows:

- visit http://start.spring.io/ .

- Enter the corresponding Spring Boot version, Group and Artifact information and project dependencies on the page, and then create the project.

- After decompression, use IDEA to import the maven project: File - > New - > model from existing source, and then select the extracted project folder. If you are a friend using eclipse, you can import - > existing maven Projects - > next, and then select the extracted project folder.

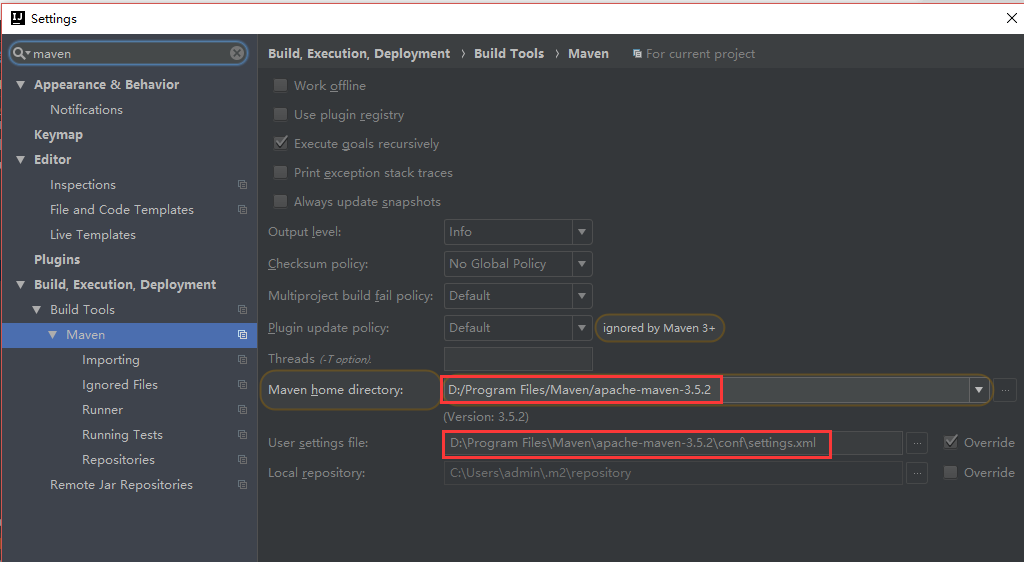

2.3 maven configuration

After creating the Spring Boot project, you need to configure Maven. Open file - > settings, search maven, and configure the local Maven information. As follows:

Select the installation path of local Maven in Maven home directory; In User settings file, select the path where the local Maven configuration file is located. In the configuration file, we configure the image of domestic Alibaba, so that the download speed of Maven dependency is very fast.

<mirror> <id>nexus-aliyun</id> <mirrorOf>*</mirrorOf> <name>Nexus aliyun</name> <url>http://maven.aliyun.com/nexus/content/groups/public</url> </mirror>

- 1

- 2

- 3

- 4

- 5

- 6

If you are a friend using eclipse, you can configure it through window -- > preference -- > Maven -- > user settings in the same way as above.

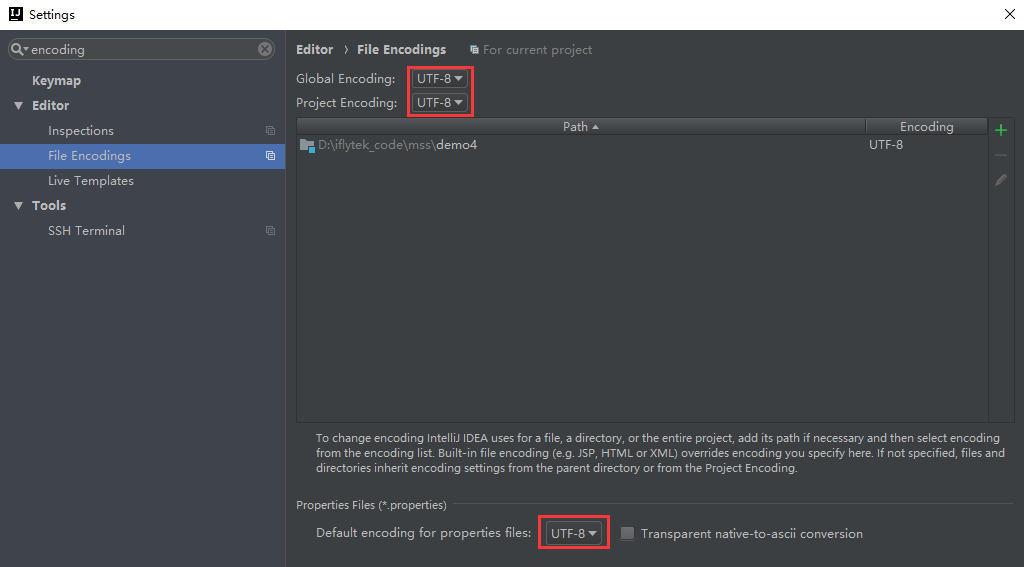

2.4 coding configuration

Similarly, after a new project is created, we generally need to configure coding, which is very important. Many beginners will forget this step, so we should form good habits.

In IDEA, still open file - > settings, search encoding, and configure the local encoding information. As follows:

If you are a friend using eclipse, you need to set the following code in two places:

- window – > qualifications – > General – > workspace, change Text file encoding to utf-8

- window – > privileges – > General – > content types, select Text, and fill Default encoding in utf-8

OK, after the code setting is completed, the project can be started.

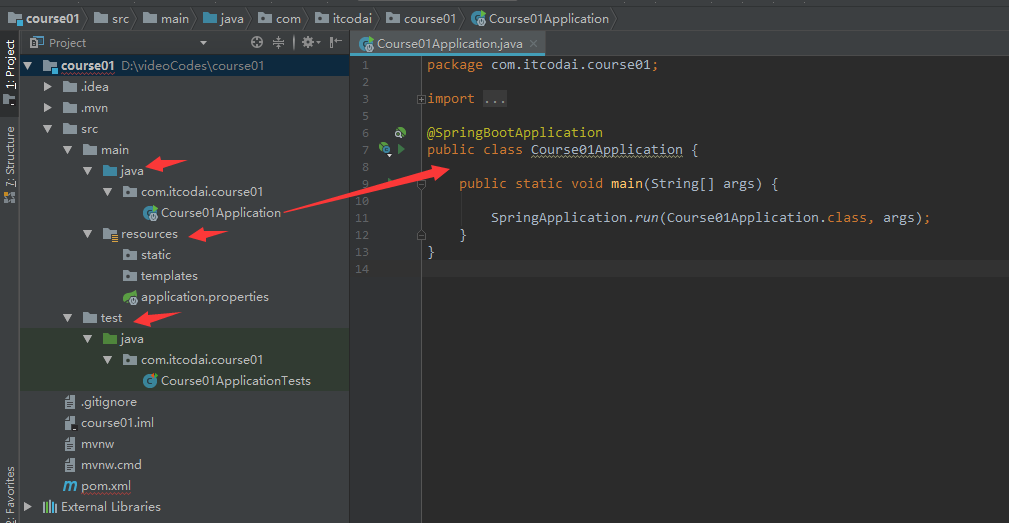

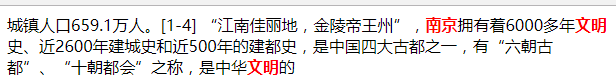

3. Engineering structure of spring boot project

The Spring Boot project has three modules in total, as shown in the following figure:

- src/main/java path: mainly write business programs

- src/main/resources path: store static files and configuration files

- src/test/java path: mainly write test programs

By default, as shown in the above figure, a startup class Course01Application will be created. There is a @ SpringBootApplication annotation on this class, and there is a main method in this startup class. Yes, it is very convenient to start Spring Boot by running the main method. In addition, tomcat is integrated in Spring Boot. We don't need to manually configure tomcat. Developers only need to pay attention to the specific business logic.

So far, the Spring Boot has been started successfully. In order to see the effect clearly, we write a Controller to test it, as follows:

package com.itcodai.course01.controller;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

@RestController

@RequestMapping("/start")

public class StartController {

@RequestMapping("/springboot")

public String startSpringBoot() {

return "Welcome to the world of Spring Boot!";

}

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

Re run the main method to start the project, enter "localhost:8080/start/springboot" in the browser, and if you see "Welcome to the world of Spring Boot!", Congratulations on the successful launch of the project! Spring Boot is so simple and convenient! The default port number is 8080. If you want to modify it, you can click application Use {server. In the YML file Port to specify the port artificially, such as 8001 port:

server: port: 8001

- 1

- 2

4. Summary

In this section, we quickly learned how to import jdk in IDEA, how to configure maven and coding using IDEA, and how to quickly create and start Spring Boot projects. IDEA's support for Spring Boot is very friendly. I suggest you use IDEA to develop Spring Boot. From the next lesson, we will really enter the learning of Spring Boot.

Course source code download address: Poke me to download

Lesson 02: Spring Boot returns Json data and data encapsulation

In the project development, the data transmission between interfaces and between front and rear ends uses the Json format. In Spring Boot, the interface returns data in the Json format. You can use the @ RestController annotation in the Controller to return data in the Json format. The @ RestController is also a new annotation in Spring Boot, Let's click in to see what the annotation contains.

@Target({ElementType.TYPE})

@Retention(RetentionPolicy.RUNTIME)

@Documented

@Controller

@ResponseBody

public @interface RestController {

String value() default "";

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

It can be seen that the @ respontroller 'annotation contains the original @ Controller' and @ ResponseBody 'annotations. Friends who have used Spring have a good understanding of the @ Controller' annotation, which will not be repeated here. The @ ResponseBody 'annotation is to convert the returned data structure into Json format. Therefore, by default, the @ RestController annotation is used to convert the returned data structure into Json format. The default Json parsing technology framework used in Spring Boot is jackson. Let's open POM For the Spring Boot starter web dependency in XML, you can see a Spring Boot starter Json dependency:

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-json</artifactId>

<version>2.0.3.RELEASE</version>

<scope>compile</scope>

</dependency>

- 1

- 2

- 3

- 4

- 5

- 6

Spring Boot has well encapsulated dependencies. You can see many dependencies of Spring Boot starter XXX series. This is one of the characteristics of Spring Boot. There is no need to introduce many related dependencies artificially. Starter XXX series directly contains the necessary dependencies, so we click the above Spring Boot starter JSON dependency again, You can see:

<dependency>

<groupId>com.fasterxml.jackson.core</groupId>

<artifactId>jackson-databind</artifactId>

<version>2.9.6</version>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>com.fasterxml.jackson.datatype</groupId>

<artifactId>jackson-datatype-jdk8</artifactId>

<version>2.9.6</version>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>com.fasterxml.jackson.datatype</groupId>

<artifactId>jackson-datatype-jsr310</artifactId>

<version>2.9.6</version>

<scope>compile</scope>

</dependency>

<dependency>

<groupId>com.fasterxml.jackson.module</groupId>

<artifactId>jackson-module-parameter-names</artifactId>

<version>2.9.6</version>

<scope>compile</scope>

</dependency>

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

So far, we know that the default JSON parsing framework used in Spring Boot is jackson. Let's take a look at the conversion of common data types to JSON by the default jackson framework.

1. Spring Boot handles Json by default

In actual projects, the commonly used data structures are all class objects, List objects and Map objects. Let's take a look at the format of the default jackson framework after converting these three commonly used data structures into json.

1.1 create User entity class

To test, we need to create an entity class. Here we will use User to demonstrate.

public class User {

private Long id;

private String username;

private String password;

/* Omit get, set, and construction methods with parameters */

}

- 1

- 2

- 3

- 4

- 5

- 6

1.2 create Controller class

Then we create a Controller and return the User object, list < User > and map < string, Object > respectively.

import com.itcodai.course02.entity.User;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

import java.util.ArrayList;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

@RestController

@RequestMapping("/json")

public class JsonController {

@RequestMapping("/user")

public User getUser() {

return new User(1, "Ni Shengwu", "123456");

}

@RequestMapping("/list")

public List<User> getUserList() {

List<User> userList = new ArrayList<>();

User user1 = new User(1, "Ni Shengwu", "123456");

User user2 = new User(2, "Talent class", "123456");

userList.add(user1);

userList.add(user2);

return userList;

}

@RequestMapping("/map")

public Map<String, Object> getMap() {

Map<String, Object> map = new HashMap<>(3);

User user = new User(1, "Ni Shengwu", "123456");

map.put("Author information", user);

map.put("Blog address", "http://blog.itcodai.com");

map.put("CSDN address", "http://blog.csdn.net/eson_15");

map.put("Number of fans", 4153);

return map;

}

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

1.3 test json returned by different data types

OK, the interface is written, and a User object, a List set and a Map set are returned respectively. The value in the Map set contains different data types. Next, let's test the effect in turn.

Enter localhost:8080/json/user in the browser, and the returned json is as follows:

{"id":1,"username":"Ni Shengwu","password":"123456"}

- 1

Enter localhost:8080/json/list in the browser, and the returned json is as follows:

[{"id":1,"username":"Ni Shengwu","password":"123456"},{"id":2,"username":"Talent class","password":"123456"}]

- 1

Enter localhost:8080/json/map in the browser, and the returned json is as follows:

{"Author information":{"id":1,"username":"Ni Shengwu","password":"123456"},"CSDN address":"http://blog.csdn.net/eson_15 "," number of fans ": 4153," blog address ": http://blog.itcodai.com "}

- 1

It can be seen that no matter what data type is in the map, it can be converted to the corresponding json format, which is very convenient.

1.4 handling of null in Jackson

In actual projects, we will inevitably encounter some null values. When we convert json, we don't want these null values to appear. For example, we expect all nulls to become empty strings such as "" when we convert json. What should we do? In Spring Boot, we can configure it to create a jackson configuration class:

import com.fasterxml.jackson.core.JsonGenerator;

import com.fasterxml.jackson.databind.JsonSerializer;

import com.fasterxml.jackson.databind.ObjectMapper;

import com.fasterxml.jackson.databind.SerializerProvider;

import org.springframework.boot.autoconfigure.condition.ConditionalOnMissingBean;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.context.annotation.Primary;

import org.springframework.http.converter.json.Jackson2ObjectMapperBuilder;

import java.io.IOException;

@Configuration

public class JacksonConfig {

@Bean

@Primary

@ConditionalOnMissingBean(ObjectMapper.class)

public ObjectMapper jacksonObjectMapper(Jackson2ObjectMapperBuilder builder) {

ObjectMapper objectMapper = builder.createXmlMapper(false).build();

objectMapper.getSerializerProvider().setNullValueSerializer(new JsonSerializer<Object>() {

@Override

public void serialize(Object o, JsonGenerator jsonGenerator, SerializerProvider serializerProvider) throws IOException {

jsonGenerator.writeString("");

}

});

return objectMapper;

}

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

Then we modify the interface of the map returned above and change several values to null to test:

@RequestMapping("/map")

public Map<String, Object> getMap() {

Map<String, Object> map = new HashMap<>(3);

User user = new User(1, "Ni Shengwu", null);

map.put("Author information", user);

map.put("Blog address", "http://blog.itcodai.com");

map.put("CSDN address", null);

map.put("Number of fans", 4153);

return map;

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

Restart the project and enter: localhost:8080/json/map again. You can see that jackson has turned all null fields into empty strings.

{"Author information":{"id":1,"username":"Ni Shengwu","password":""},"CSDN address":"","Number of fans":4153,"Blog address":"http://blog.itcodai.com"}

- 1

2. Use Alibaba fastjason settings

2.1 comparison between Jackson and fastjason

Many friends are used to using Alibaba's fastjason for json conversion in the project. At present, Alibaba's fastjason is used in our project. What are the differences between jackson and fastjason? The following table is obtained according to the comparison of information published online.

| option | fastJson | jackson |

|---|---|---|

| Ease of use | easily | secondary |

| Advanced feature support | secondary | rich |

| Official documents, Example support | chinese | english |

| Processing json speed | Slightly faster | fast |

There are a lot of information about the comparison between fastjason and jackson on the Internet, mainly to select the appropriate framework according to their actual project situation. From the perspective of expansion, fastjason is not as flexible as jackson. From the perspective of speed or starting difficulty, fastjason can be considered. Alibaba's fastjason is currently used in our project, which is very convenient.

2.2 fastjason dependency import

Using fastjason requires importing dependencies. This course uses version 1.2.35. The dependencies are as follows:

<dependency> <groupId>com.alibaba</groupId> <artifactId>fastjson</artifactId> <version>1.2.35</version> </dependency>

- 1

- 2

- 3

- 4

- 5

2.2 using fastjason to handle null

When using fastjason, the processing of null is somewhat different from jackson. We need to inherit the WebMvcConfigurationSupport class, and then override the configureMessageConverters method. In the method, we can select and configure the scenario to realize null conversion. As follows:

import com.alibaba.fastjson.serializer.SerializerFeature;

import com.alibaba.fastjson.support.config.FastJsonConfig;

import com.alibaba.fastjson.support.spring.FastJsonHttpMessageConverter;

import org.springframework.context.annotation.Configuration;

import org.springframework.http.MediaType;

import org.springframework.http.converter.HttpMessageConverter;

import org.springframework.web.servlet.config.annotation.WebMvcConfigurationSupport;

import java.nio.charset.Charset;

import java.util.ArrayList;

import java.util.List;

@Configuration

public class fastJsonConfig extends WebMvcConfigurationSupport {

/**

* Use Ali FastJson as JSON MessageConverter

* @param converters

*/

@Override

public void configureMessageConverters(List<HttpMessageConverter<?>> converters) {

FastJsonHttpMessageConverter converter = new FastJsonHttpMessageConverter();

FastJsonConfig config = new FastJsonConfig();

config.setSerializerFeatures(

// Leave empty fields

SerializerFeature.WriteMapNullValue,

// Convert null of String type to ''

SerializerFeature.WriteNullStringAsEmpty,

// Convert null of type Number to 0

SerializerFeature.WriteNullNumberAsZero,

// Convert null of List type to []

SerializerFeature.WriteNullListAsEmpty,

// Convert null of Boolean type to false

SerializerFeature.WriteNullBooleanAsFalse,

// Avoid circular references

SerializerFeature.DisableCircularReferenceDetect);

converter.setFastJsonConfig(config);

converter.setDefaultCharset(Charset.forName("UTF-8"));

List<MediaType> mediaTypeList = new ArrayList<>();

// To solve the problem of Chinese garbled code is equivalent to adding an attribute products = "application / JSON" to @ RequestMapping on the Controller

mediaTypeList.add(MediaType.APPLICATION_JSON);

converter.setSupportedMediaTypes(mediaTypeList);

converters.add(converter);

}

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

3. Encapsulate the unified returned data structure

The above are some representative examples of json returned by Spring Boot, but in actual projects, in addition to encapsulating data, we often need to add some other information to the returned json, such as returning some status codes and returning some msg to the caller, so that the caller can make some logical judgments according to the code or MSG. Therefore, in the actual project, we need to encapsulate a unified json return structure to store the return information.

3.1 define a unified json structure

Because the type of encapsulated json data is uncertain, we need to use generics when defining a unified json structure. The attributes in the unified json structure include data, status code and prompt information. The construction method can be added according to the actual business needs. Generally speaking, there should be a default return structure and a user specified return structure. As follows:

public class JsonResult<T> {

private T data;

private String code;

private String msg;

/**

* If no data is returned, the default status code is 0 and the prompt message is: operation succeeded!

*/

public JsonResult() {

this.code = "0";

this.msg = "Operation succeeded!";

}

/**

* If no data is returned, you can manually specify the status code and prompt information

* @param code

* @param msg

*/

public JsonResult(String code, String msg) {

this.code = code;

this.msg = msg;

}

/**

* When data is returned, the status code is 0, and the default prompt message is: operation succeeded!

* @param data

*/

public JsonResult(T data) {

this.data = data;

this.code = "0";

this.msg = "Operation succeeded!";

}

/**

* There is data return, the status code is 0, and the prompt information is manually specified

* @param data

* @param msg

*/

public JsonResult(T data, String msg) {

this.data = data;

this.code = "0";

this.msg = msg;

}

// Omit the get and set methods

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

3.2 modify the return value type and test in the Controller

Because JsonResult uses generics, all return value types can use this unified structure. In specific scenarios, you can replace generics with specific data types, which is very convenient and easy to maintain. In actual projects, encapsulation can be continued. For example, an enumeration type can be defined for status code and prompt information. In the future, we only need to maintain the data in this enumeration type (it will not be expanded in this course). According to the above JsonResult, let's rewrite the Controller as follows:

@RestController

@RequestMapping("/jsonresult")

public class JsonResultController {

@RequestMapping("/user")

public JsonResult<User> getUser() {

User user = new User(1, "Ni Shengwu", "123456");

return new JsonResult<>(user);

}

@RequestMapping("/list")

public JsonResult<List> getUserList() {

List<User> userList = new ArrayList<>();

User user1 = new User(1, "Ni Shengwu", "123456");

User user2 = new User(2, "Talent class", "123456");

userList.add(user1);

userList.add(user2);

return new JsonResult<>(userList, "Get user list succeeded");

}

@RequestMapping("/map")

public JsonResult<Map> getMap() {

Map<String, Object> map = new HashMap<>(3);

User user = new User(1, "Ni Shengwu", null);

map.put("Author information", user);

map.put("Blog address", "http://blog.itcodai.com");

map.put("CSDN address", null);

map.put("Number of fans", 4153);

return new JsonResult<>(map);

}

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

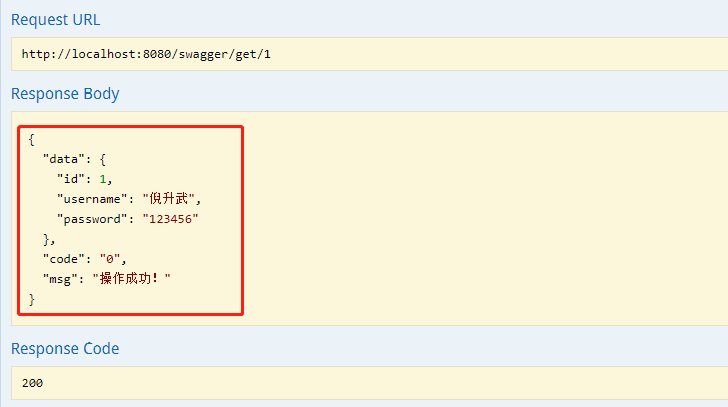

We re-enter localhost:8080/jsonresult/user in the browser. The returned json is as follows:

{"code":"0","data":{"id":1,"password":"123456","username":"Ni Shengwu"},"msg":"Operation succeeded!"}

- 1

Enter: localhost:8080/jsonresult/list, and return json as follows:

{"code":"0","data":[{"id":1,"password":"123456","username":"Ni Shengwu"},{"id":2,"password":"123456","username":"Talent class"}],"msg":"Get user list succeeded"}

- 1

Enter: localhost:8080/jsonresult/map, and return json as follows:

{"code":"0","data":{"Author information":{"id":1,"password":"","username":"Ni Shengwu"},"CSDN address":null,"Number of fans":4153,"Blog address":"http://blog.itcodai.com"},"msg ":" operation succeeded! "}

- 1

Through encapsulation, we not only transfer the data to the front end or other interfaces through json, but also bring the status code and prompt information, which is widely used in actual project scenarios.

4. Summary

This section mainly analyzes the return of json data in Spring Boot in detail, and explains their configuration from the default jackson framework of Spring Boot to Alibaba's fastjason framework. In addition, combined with the actual project situation, the json encapsulation structure used in the actual project is summarized, and the status code and prompt information are added to make the returned json data information more complete.

Course source code download address: Poke me to download

Lesson 03: Spring Boot using slf4j for logging

In development, we often use {system out. Println () to print some information, but it's not good because it uses a lot of {system Out will increase the consumption of resources. In our actual project, slf4j's logback is used to output logs, which is very efficient. Spring Boot provides a logging system, and logback is the best choice.

1. slf4j introduction

Quote a passage from Baidu Encyclopedia:

SLF4J, the Simple Logging Facade for Java, is not a specific logging solution. It only serves a variety of logging systems. According to the official statement, SLF4J is a simple Facade for logging system, which allows end users to use their desired logging system when deploying their applications.

The general meaning of this paragraph is: you only need to write the code to record the log in a unified way, and you don't need to care about which log system and style the log is output through. Because they depend on the logging system that is bound when the project is deployed. For example, if slf4j is used to record logs in the project and log4j is bound (that is, import corresponding dependencies), the logs will be output in the style of log4j; Later, you need to output the log in the style of logback. You only need to replace log4j with logback without modifying the code in the project. This has almost zero learning cost for different log systems introduced by third-party components. Moreover, its advantages include not only this one, but also the use of concise placeholders and log level judgment.

Because sfl4j has so many advantages, Alibaba has taken slf4j as their log framework. In Alibaba Java Development Manual (official version), Article 1 of the log specification requires the use slf4j:

1. [mandatory] the API in the log system (Log4j, Logback) cannot be directly used in the application, but the API in the log framework SLF4J should be used. The log framework in facade mode is used, which is conducive to the unification of maintenance and log processing methods of various classes.

The word "mandatory" reflects the advantages of slf4j, so it is recommended to use slf4j as its own logging framework in practical projects. Using slf4j logging is very simple. You can directly use LoggerFactory to create logs.

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

public class Test {

private static final Logger logger = LoggerFactory.getLogger(Test.class);

// ......

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

2. application. Log configuration in YML

Spring Boot supports slf4j very well. Slf4j has been integrated internally. Generally, we will configure slf4j when using it. application. The yml file is the only file that needs to be configured in Spring Boot. At the beginning of creating the project, it is application The properties file is more detailed. The yml file is used because the hierarchy of the yml file is particularly good and looks more intuitive. However, the format requirements of the yml file are relatively high. For example, there must be a space after the English colon, otherwise the project is estimated to be unable to start and no error is reported. Either properties or yml depends on personal habits. This course uses yml.

Let's take a look at application Log configuration in YML file:

logging:

config: logback.xml

level:

com.itcodai.course03.dao: trace

- 1

- 2

- 3

- 4

logging.config is used to specify which configuration file to read when the project is started. The log configuration file specified here is logback under the root path XML} files and related configuration information about logs are placed in logback In the XML} file. logging.level , is used to specify the output level of logs in a specific mapper. The above configuration indicates , com itcodai. course03. The output level of all mapper logs under Dao # package is trace, which will print out the sql operating the database. During development, it is set to trace to facilitate problem location. In the production environment, it is sufficient to set this log level to error level (the mapper layer will not be discussed in this lesson, but will be discussed in detail later when Spring Boot integrates MyBatis).

The common log levels are ERROR, WARN, INFO and DEBUG from high to low.

3. logback.xml configuration file parsing

Click application In the YML ¢ file, we specify the log configuration file ¢ logback xml,logback. The XML file is mainly used to configure logs. In logback In XML #, we can define the log output format, path, console output format, file size, save time, etc. Let's analyze:

3.1 define log output format and storage path

<configuration>

<property name="LOG_PATTERN" value="%date{HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n" />

<property name="FILE_PATH" value="D:/logs/course03/demo.%d{yyyy-MM-dd}.%i.log" />

</configuration>

- 1

- 2

- 3

- 4

Let's take a look at the meaning of this definition: first, define a format named "LOG_PATTERN". In this format,% date represents the date,% thread represents the thread name,% - 5level represents the five character width of the level from the left,% logger{36} represents the longest 36 characters of the logger name,% msg} represents the log message, and% n} is the newline character.

Then define a file path named "FILE_PATH" where logs will be stored.% n i indicates the ith file. When the log file reaches the specified size, the log will be generated into a new file. Here, i is the file index. The allowable size of the log file can be set, which will be explained below. It should be noted here that the log storage path must be absolute in both windows and Linux systems.

3.2 define console output

<configuration>

<appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">

<encoder>

<!-- As configured above LOG_PATTERN To print the log -->

<pattern>${LOG_PATTERN}</pattern>

</encoder>

</appender>

</configuration>

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

Use the < appender > node to set the configuration of a CONSOLE output (class="ch.qos.logback.core.ConsoleAppender"), which is defined as "CONSOLE". Use the output format (LOG_PATTERN) defined above to output, and use ${} to reference it.

3.3 define relevant parameters of log file

<configuration>

<appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<!-- As configured above FILE_PATH Path to save the log -->

<fileNamePattern>${FILE_PATH}</fileNamePattern>

<!-- Keep the log for 15 days -->

<maxHistory>15</maxHistory>

<timeBasedFileNamingAndTriggeringPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedFNATP">

<!-- If the maximum of a single log file exceeds, a new log file store will be created -->

<maxFileSize>10MB</maxFileSize>

</timeBasedFileNamingAndTriggeringPolicy>

</rollingPolicy>

<encoder>

<!-- As configured above LOG_PATTERN To print the log -->

<pattern>${LOG_PATTERN}</pattern>

</encoder>

</appender>

</configuration>

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

Use < appender > to define a FILE configuration named "FILE", which is mainly used to configure the saving time of log files, the storage size of a single log FILE, the saving path of files and the output format of logs.

3.4 define log output level

<configuration> <logger name="com.itcodai.course03" level="INFO" /> <root level="INFO"> <appender-ref ref="CONSOLE" /> <appender-ref ref="FILE" /> </root> </configuration>

- 1

- 2

- 3

- 4

- 5

- 6

- 7

After having the above definitions, finally, we use < logger > to define the default log output level in the project. Here, we define the level as INFO. Then, for the log of INFO level, we use < root > to refer to the parameters of console log output and log file defined above. So logback The configuration in the XML file is set.

4. Use Logger to print logs in the project

In the code, we generally use the Logger object to print out some log information. You can specify the log level to print out and support placeholders, which is very convenient.

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

@RestController

@RequestMapping("/test")

public class TestController {

private final static Logger logger = LoggerFactory.getLogger(TestController.class);

@RequestMapping("/log")

public String testLog() {

logger.debug("=====Test log debug Level printing====");

logger.info("======Test log info Level printing=====");

logger.error("=====Test log error Level printing====");

logger.warn("======Test log warn Level printing=====");

// You can use placeholders to print out some parameter information

String str1 = "blog.itcodai.com";

String str2 = "blog.csdn.net/eson_15";

logger.info("======Ni Shengwu's personal blog:{};Ni Shengwu's CSDN Blog:{}", str1, str2);

return "success";

}

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

Start the project and enter localhost:8080/test/log in the browser to see the console log record:

======Test log info Level printing===== =====Test log error Level printing==== ======Test log warn Level printing===== ======Ni Shengwu's personal blog: blog.itcodai.com;Ni Shengwu's CSDN Blog: blog.csdn.net/eson_15

- 1

- 2

- 3

- 4

Because the INFO level is higher than the DEBUG level, the DEBUG message is not printed. If logback If the log level in XML is set to DEBUG, all four statements will be printed out. Let's test it ourselves. At the same time, you can open the D:\logs\course03 \ directory, which contains all the log records generated after the project has just started. After the project is deployed, we mostly locate the problem by viewing the log file.

5. Summary

This lesson mainly introduces slf4j briefly, explains in detail how to use slf4j to output logs in Spring Boot, and focuses on the analysis of slf4j logback Configuration of log related information in XML} file, including different levels of logs. Finally, for these configurations, use the Logger to print out some in the code for testing. In actual projects, these logs are very important information in the process of troubleshooting.

Course source code download address: Poke me to download

Lesson 04: project property configuration in Spring Boot

We know that in the project, we often need to use some configuration information. These information may have different configurations in the test environment and production environment, and may be modified later according to the actual business situation. In view of this situation, we can't write these configurations in the code, and we'd better write them in the configuration file. For example, you can write this information to application YML file.

1. A small amount of configuration information

For example, in the microservice architecture, the most common is that a service needs to call other services to obtain the relevant information provided by it, so the service address to be called needs to be configured in the service configuration file. For example, in the current service, we need to call the order microservice to obtain the order related information. Suppose the port number of the order service is 8002, Then we can configure as follows:

server: port: 8001 # Configure the address of the microservice url: # Address of the order micro service orderUrl: http://localhost:8002

- 1

- 2

- 3

- 4

- 5

- 6

- 7

Then, how to get the configured order service address in the business code? We can use @ Value annotation to solve this problem. Add an attribute to the corresponding class and use the @ Value annotation on the attribute to obtain the configuration information in the configuration file, as follows:

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

@RestController

@RequestMapping("/test")

public class ConfigController {

private static final Logger LOGGER = LoggerFactory.getLogger(ConfigController.class);

@Value("${url.orderUrl}")

private String orderUrl;

@RequestMapping("/config")

public String testConfig() {

LOGGER.info("=====The order service address obtained is:{}", orderUrl);

return "success";

}

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

@The Value value corresponding to the key in the configuration file can be obtained through ${key} on the Value annotation. Let's start the project and enter localhost:8080/test/config in the browser to request the service. You can see that the console will print the address of the order service:

=====The order service address obtained is: http://localhost:8002

- 1

It indicates that we have successfully obtained the order micro service address in the configuration file, which is also used in the actual project. Later, if the address of a service needs to be modified due to server deployment, just modify it in the configuration file.

2. Multiple configuration information

Here is another problem. With the increase of business complexity, there may be more and more microservices in a project. A module may need to call multiple microservices to obtain different information, so it is necessary to configure the addresses of multiple microservices in the configuration file. However, in the code that needs to call these microservices, it is too cumbersome and unscientific to use @ Value annotation to introduce the corresponding microservice address one by one.

Therefore, in the actual project, when the business is cumbersome and the logic is complex, it is necessary to consider encapsulating one or more configuration classes. For example: in the current service, if a business needs to call order microservice, user microservice and shopping cart microservice at the same time, obtain the relevant information of order, user and shopping cart respectively, and then do some logical processing for these information. In the configuration file, we need to configure the addresses of these microservices:

# Configure addresses of multiple microservices url: # Address of the order micro service orderUrl: http://localhost:8002 # Address of user microservice userUrl: http://localhost:8003 # Address of shopping cart micro service shoppingUrl: http://localhost:8004

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

Maybe in the actual business, there are far more than these three micro services, or even more than a dozen. In this case, we can first define a MicroServiceUrl class to store the url of the microservice, as follows:

@Component

@ConfigurationProperties(prefix = "url")

public class MicroServiceUrl {

private String orderUrl;

private String userUrl;

private String shoppingUrl;

// get and set methods are omitted

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

Careful friends should see that you can use @ ConfigurationProperties' annotation and prefix to specify a prefix, and then the property name in this class is the name after removing the prefix in the configuration, one-to-one correspondence. That is, prefix name + attribute name is the key defined in the configuration file. At the same time, the class needs to be annotated with @ Component. Put the class as a Component in the Spring container and let Spring manage it. We can inject it directly when we use it.

Note that using @ ConfigurationProperties annotation requires importing its dependencies:

<dependency> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-configuration-processor</artifactId> <optional>true</optional> </dependency>

- 1

- 2

- 3

- 4

- 5

OK, so far, we have written the configuration, and then write a Controller to test it. At this time, you don't need to introduce the URLs of these micro services one by one in the code. You can directly inject the newly written configuration class through the @ Resource annotation, which is very convenient. As follows:

@RestController

@RequestMapping("/test")

public class TestController {

private static final Logger LOGGER = LoggerFactory.getLogger(TestController.class);

@Resource

private MicroServiceUrl microServiceUrl;

@RequestMapping("/config")

public String testConfig() {

LOGGER.info("=====The order service address obtained is:{}", microServiceUrl.getOrderUrl());

LOGGER.info("=====The obtained user service address is:{}", microServiceUrl.getUserUrl());

LOGGER.info("=====The shopping cart service address obtained is:{}", microServiceUrl.getShoppingUrl());

return "success";

}

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

Start the project again, and you can see the following information printed on the console, indicating that the configuration file is effective and the content of the configuration file is obtained correctly:

=====The order service address obtained is: http://localhost:8002 =====The order service address obtained is: http://localhost:8002 =====The obtained user service address is: http://localhost:8003 =====The shopping cart service address obtained is: http://localhost:8004

- 1

- 2

- 3

- 4

3. Specify project profile

As we know, in actual projects, there are generally two environments: development environment and production environment. The configuration in the development environment is often different from that in the production environment, such as environment, port, database, related address, etc. After debugging the development environment and deploying to the production environment, it is impossible for us to modify all the configuration information into the configuration of the production environment. This is too troublesome and unscientific.

The best solution is to have a set of configuration information for both the development environment and the production environment. Then, when we are developing, we specify to read the configuration of the development environment. After we deploy the project to the server, we specify to read the configuration of the production environment.

We create two new configuration files: application-dev.yml , and application-pro YML is used to configure the development environment and production environment respectively. For convenience, we set two access port numbers, 8001 for development environment and 8002 for production environment

# Development environment profile server: port: 8001

- 1

- 2

- 3

# Development environment profile server: port: 8002

- 1

- 2

- 3

Then in application Specify which configuration file to read in the yml} file. For example, in the development environment, we specify to read the {applicationn-dev.yml} file, as follows:

spring:

profiles:

active:

- dev

- 1

- 2

- 3

- 4

In this way, you can specify to read the "application-dev.yml" file during development, and use port 8001 when accessing. After deployment to the server, you only need to add "application Change the file specified in YML to application pro YML #, and then use 8002 port to access, which is very convenient.

4. Summary

This lesson mainly explains how to read relevant configurations in business code in Spring Boot, including single configuration and multiple configuration items. This is very common in microservices. There are often many other microservices to call, so it is a good way to encapsulate a configuration class to receive these configurations. In addition, for example, database related connection parameters can also be placed in a configuration class. Other similar scenarios can be handled in this way. Finally, the fast switching mode of development environment and production environment configuration is introduced, which eliminates the modification of many configuration information during project deployment.

Course source code download address: Poke me to download

Lesson 05: MVC support in Spring Boot

The MVC support of Spring Boot mainly introduces the most commonly used annotations in actual projects, including @ RestController, @ RequestMapping, @ PathVariable, @ RequestParam and @ RequestBody. This paper mainly introduces the common usage and characteristics of these annotations.

1. @RestController

@RestController , is a new annotation for Spring Boot. Let's see what the annotation contains.

@Target({ElementType.TYPE})

@Retention(RetentionPolicy.RUNTIME)

@Documented

@Controller

@ResponseBody

public @interface RestController {

String value() default "";

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

It can be seen that the @ respontroller 'annotation contains the original @ Controller' and @ ResponseBody 'annotations. Friends who have used Spring have a good understanding of the @ Controller' annotation, which will not be repeated here. The @ ResponseBody 'annotation is to convert the returned data structure into Json format. So @ RestController can be regarded as a combination of @ Controller and @ ResponseBody, which is equivalent to stealing laziness. We don't need to use @ Controller after using @ RestController. However, there is a problem to be noted: if the front and rear ends are separated and there is no template rendering, for example Thymeleaf In this case, you can directly use @ RestController , to transfer the data to the front end in json format, and the front end can parse it after getting it; However, if the front and back ends are not separated and the template is needed for rendering, the Controller will generally return to the specific page, so @ RestController cannot be used at this time, for example:

public String getUser() {

return "user";

}

- 1

- 2

- 3

In fact, you need to return to user For HTML pages, if @ RestController , is used, the user will be returned as a string, so we need to use @ Controller , annotation at this time. This will be explained in the next section Spring Boot integrated Thymeleaf template engine.

2. @RequestMapping

@RequestMapping} is an annotation used to handle request address mapping. It can be used on classes or methods. Annotations at the class level will map a specific request or request pattern to a controller, indicating that all methods in the class responding to requests take this address as the parent path; The mapping relationship further specified to the processing method is represented at the method level.

The annotation has six attributes. Generally, there are three attributes commonly used in projects: value, method and produces.

- value attribute: Specifies the actual address of the request. value can be omitted

- method attribute: Specifies the type of request, mainly including GET, PUT, POST and DELETE. The default is GET

- Productions attribute: Specifies the type of returned content, such as productions = "application/json; charset=UTF-8"

@The RequestMapping} annotation is relatively simple. For example:

@RestController

@RequestMapping(value = "/test", produces = "application/json; charset=UTF-8")

public class TestController {

@RequestMapping(value = "/get", method = RequestMethod.GET)

public String testGet() {

return "success";

}

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

This is very simple. Start the project and enter localhost:8080/test/get in the browser to test it.

There are corresponding annotations for the four different request methods. You don't need to add the method attribute to the @ RequestMapping} annotation every time. The above GET method requests can directly use the @ GetMapping("/get") annotation with the same effect. Accordingly, the annotations corresponding to PUT mode, POST mode and DELETE mode are @ PutMapping, @ PostMapping , and @ DeleteMapping , respectively.

3. @PathVariable

@The PathVariable annotation is mainly used to obtain url parameters. Spring Boot supports restful URLs. For example, a GET request carries a parameter id. we receive the id as a parameter. You can use the @ PathVariable annotation. As follows:

@GetMapping("/user/{id}")

public String testPathVariable(@PathVariable Integer id) {

System.out.println("Obtained id Is:" + id);

return "success";

}

- 1

- 2

- 3

- 4

- 5

One problem to note here is that if you want the id value in the placeholder in the url to be directly assigned to the parameter id, you need to ensure that the parameters in the url are consistent with the method receiving parameters, otherwise it cannot be received. If it is inconsistent, it can also be solved. You need to specify the corresponding relationship with the value attribute in @ PathVariable *. As follows:

@RequestMapping("/user/{idd}")

public String testPathVariable(@PathVariable(value = "idd") Integer id) {

System.out.println("Obtained id Is:" + id);

return "success";

}

- 1

- 2

- 3

- 4

- 5

For the url to be accessed, the position of the placeholder can be anywhere, not necessarily at the end, for example: / xxx/{id}/user. In addition, the url also supports multiple placeholders. Method parameters are received with the same number of parameters. The principle is the same as that of one parameter, for example:

@GetMapping("/user/{idd}/{name}")

public String testPathVariable(@PathVariable(value = "idd") Integer id, @PathVariable String name) {

System.out.println("Obtained id Is:" + id);

System.out.println("Obtained name Is:" + name);

return "success";

}

- 1

- 2

- 3

- 4

- 5

- 6

Run the project and request localhost:8080/test/user/2/zhangsan in the browser. You can see the console output the following information:

Obtained id Is: 2 Obtained name Is: zhangsan

- 1

- 2

Therefore, it supports the reception of multiple parameters. Similarly, if the parameter names in the url are different from those in the method, you also need to use the value attribute to bind the two parameters.

4. @RequestParam

@As the name suggests, the RequestParam annotation is also used to obtain request parameters. We introduced above that the @ PathVariable annotation is also used to obtain request parameters. What is the difference between @ RequestParam and @ PathVariable? The main difference is that @ PathVariable @ is to obtain parameter values from the url template, that is, this style of url: http://localhost:8080/user/{id} ; And @ RequestParam gets the parameter value from the request, that is, the url of this style: http://localhost:8080/user?id=1 . We use the url with parameter ID to test the following code:

@GetMapping("/user")

public String testRequestParam(@RequestParam Integer id) {

System.out.println("Obtained id Is:" + id);

return "success";

}

- 1

- 2

- 3

- 4

- 5

id information can be printed out from the console normally. Similarly, the parameters above the url should be consistent with the parameters of the method. If they are inconsistent, the value attribute should also be used to explain. For example, the url is: http://localhost:8080/user?idd=1

@RequestMapping("/user")

public String testRequestParam(@RequestParam(value = "idd", required = false) Integer id) {

System.out.println("Obtained id Is:" + id);

return "success";

}

- 1

- 2

- 3

- 4

- 5

In addition to the value attribute, there are two more common attributes:

- required attribute: true indicates that the parameter must be passed, otherwise 404 error will be reported, and false indicates optional.

- defaultValue property: default value, which indicates the default value if there is no parameter with the same name in the request.

It can be seen from the url that when the @ RequestParam annotation is used on the GET request, it receives the parameters spliced in the url. In addition, this annotation can also be used for POST requests to receive the parameters submitted by the front-end form. If the front-end submits two parameters username and password through the form, we can use @ RequestParam to receive them. The usage is the same as above.

@PostMapping("/form1")

public String testForm(@RequestParam String username, @RequestParam String password) {

System.out.println("Obtained username Is:" + username);

System.out.println("Obtained password Is:" + password);

return "success";

}

- 1

- 2

- 3

- 4

- 5

- 6

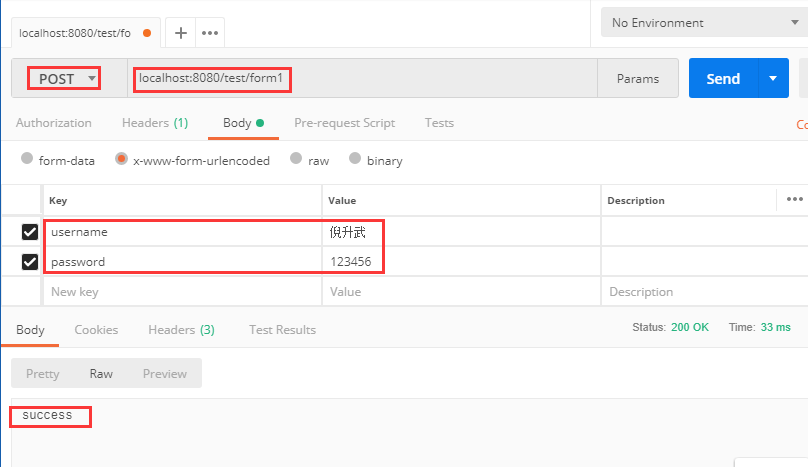

Let's use postman to simulate form submission and test the interface:

The problem is that if the form has a lot of data, we can't write many parameters in the background method, and each parameter needs @ RequestParam annotation. In this case, we need to encapsulate an entity class to receive these parameters. The attribute name in the entity is consistent with the parameter name in the form.

public class User {

private String username;

private String password;

// set get

}

- 1

- 2

- 3

- 4

- 5

If using entity receiving, we can't add @ RequestParam annotation in front, just use it directly.

@PostMapping("/form2")

public String testForm(User user) {

System.out.println("Obtained username Is:" + user.getUsername());

System.out.println("Obtained password Is:" + user.getPassword());

return "success";

}

- 1

- 2

- 3

- 4

- 5

- 6

Use postman to test the form submission again and observe the return value and the log printed out by the console. In actual projects, an entity class is usually encapsulated to receive form data, because there are usually a lot of form data in actual projects.

5. @RequestBody

@The RequestBody annotation is used to receive the entity from the front end, and the receiving parameters are also corresponding entities. For example, the front end sends two parameters username and password through json submission. At this time, we need to encapsulate an entity at the back end to receive. When more parameters are passed, it is very convenient to use @ RequestBody @ to receive. For example:

public class User {

private String username;

private String password;

// set get

}

- 1

- 2

- 3

- 4

- 5

@PostMapping("/user")

public String testRequestBody(@RequestBody User user) {

System.out.println("Obtained username Is:" + user.getUsername());

System.out.println("Obtained password Is:" + user.getPassword());

return "success";

}

- 1

- 2

- 3

- 4

- 5

- 6

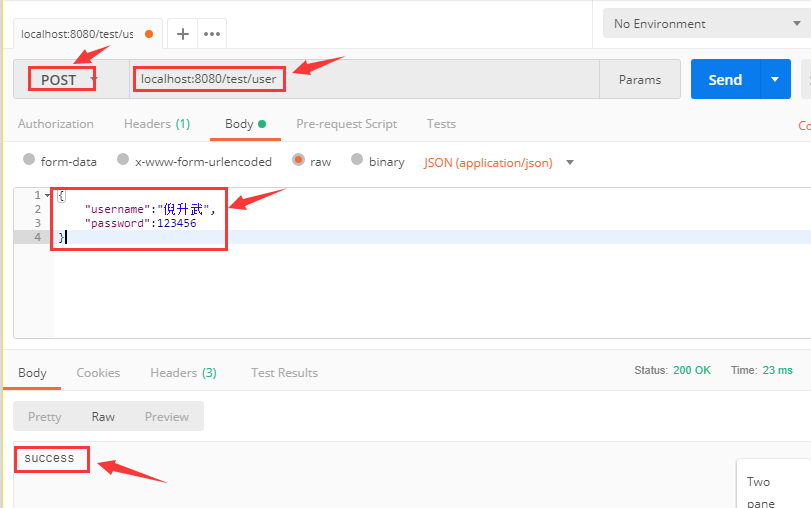

We use the postman tool to test the effect. Open postman, and then enter the request address and parameters. We use json to simulate the parameters, as shown in the figure below. After calling, we return success.

At the same time, look at the log output from the background console:

Obtained username Ni Shengwu Obtained password Is: 123456

- 1

- 2

It can be seen that the @ RequestBody @ annotation is used on POST requests to receive json entity parameters. It is somewhat similar to the form submission described above, except that the format of parameters is different. One is json entity and the other is form submission. In the actual project, you can use corresponding annotations according to specific scenarios and needs.

6. Summary

This lesson mainly explains the support for MVC in Spring Boot, and analyzes the use of @ RestController, @ RequestMapping, @ PathVariable, @ RequestParam and @ RequestBody. Since @ ResponseBody is integrated in @ RestController , the annotation of returning json will not be repeated. The above four annotations are frequently used annotations, which will be encountered in all practical projects. You should master them skillfully.

Course source code download address: Poke me to download

Lesson 06: Spring Boot integration Swagger 2 show online interface documents

1. Introduction to swagger

1.1 problems solved

With the development of Internet technology, the current website architecture has basically changed from the original back-end rendering to the form of front-end and back-end separation, and the front-end technology and back-end technology are farther and farther on their respective roads. The only connection between the front-end and the back-end has become the API interface, so the API document has become the link between the front-end and back-end developers, becoming more and more important.

Then the problem comes. With the continuous updating of code, after developers develop new interfaces or update old interfaces, due to the heavy development tasks, it is often difficult to continuously update the documents. Swagger is an important tool to solve this problem. For those who use interfaces, developers do not need to provide them with documents, As long as you tell them a swagger address, you can display the online API interface documents. In addition, the personnel calling the interface can also test the interface data online. Similarly, when developing the interface, developers can also use swagger online interface documents to test the interface data, which provides convenience for developers.

1.2 Swagger official

We open Swagger official website , the official definition of Swagger is:

The Best APIs are Built with Swagger Tools

The best API is built using Swagger tools. It can be seen that Swagger officials are very confident in its function and position. Because it is very easy to use, the official positioning is also reasonable. As shown in the figure below:

This article mainly explains how to import Swagger2 tool in Spring Boot to show the interface documents in the project. The Swagger version used in this lesson is 2.2.2. Let's start the Swagger2 tour.

2. Swagger2's maven dependence

When using Swagger2 tool, you must import maven dependency. At present, the highest official version is 2.8.0. I tried it. Personally, I feel that the effect of page display is not very good, and it is not compact enough, which is not conducive to operation. In addition, the latest version is not necessarily the most stable version. At present, we use version 2.2.2 in our actual project. This version is stable and friendly. Therefore, this lesson mainly focuses on version 2.2.2. The dependencies are as follows:

<dependency> <groupId>io.springfox</groupId> <artifactId>springfox-swagger2</artifactId> <version>2.2.2</version> </dependency> <dependency> <groupId>io.springfox</groupId> <artifactId>springfox-swagger-ui</artifactId> <version>2.2.2</version> </dependency>

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

3. Swagger2 configuration

Swagger2 needs to be configured. Swagger2 Configuration in Spring Boot is very convenient. Create a new Configuration class. In addition to adding the necessary @ Configuration annotation, you also need to add the @ enableswager2 annotation on the swagger2 Configuration class.

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import springfox.documentation.builders.ApiInfoBuilder;

import springfox.documentation.builders.PathSelectors;

import springfox.documentation.builders.RequestHandlerSelectors;

import springfox.documentation.service.ApiInfo;

import springfox.documentation.spi.DocumentationType;

import springfox.documentation.spring.web.plugins.Docket;

import springfox.documentation.swagger2.annotations.EnableSwagger2;

/**

* @author shengwu ni

*/

@Configuration

@EnableSwagger2

public class SwaggerConfig {

@Bean

public Docket createRestApi() {

return new Docket(DocumentationType.SWAGGER_2)

// Specify how to build the details of the api document: apiInfo()

.apiInfo(apiInfo())

.select()

// Specify the package path to generate api interfaces. Here, take controller as the package path to generate all interfaces in controller

.apis(RequestHandlerSelectors.basePackage("com.itcodai.course06.controller"))

.paths(PathSelectors.any())

.build();

}

/**

* Build api documentation details

* @return

*/

private ApiInfo apiInfo() {

return new ApiInfoBuilder()

// Set page title

.title("Spring Boot integrate Swagger2 Interface Overview")

// Set interface description

.description("Learn with brother Wu Spring Boot Lesson 06")

// Set contact information

.contact("Ni Shengwu," + "CSDN: http://blog.csdn.net/eson_15")

// Set version

.version("1.0")

// structure

.build();

}

}

- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

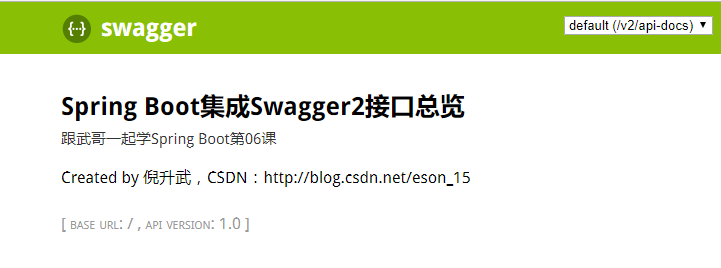

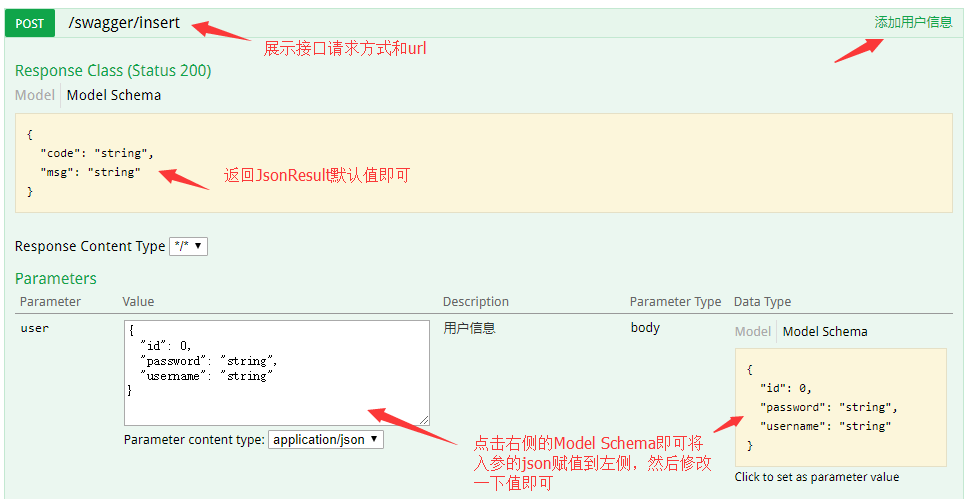

In this configuration class, the function of each method has been explained in detail with comments, which will not be repeated here. So far, we have configured Swagger2. Now we can test whether the configuration is effective. Start the project and enter localhost: 8080 / swagger UI in the browser HTML, you can see the interface page of Swagger2, as shown in the figure below, indicating that Swagger2 integration is successful.

Combined with the figure, you can clearly know the role of each method in the configuration class by comparing the configuration in the Swagger2 configuration file above. In this way, it is easy to understand and master the configuration in Swagger2. It can also be seen that the Swagger2 configuration is very simple.

[friendly note] many friends may encounter the following situations when configuring Swagger, and they can't turn it off. This is caused by the browser cache. Empty the browser cache to solve the problem.

[external chain picture transfer failed. The source station may have anti-theft chain mechanism. It is recommended to save the picture and upload it directly (img-gbvzvwz7-1595163751524)( http://p99jlm9k5.bkt.clouddn.com/blog/images/1/error.png )]

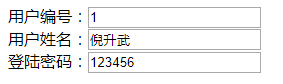

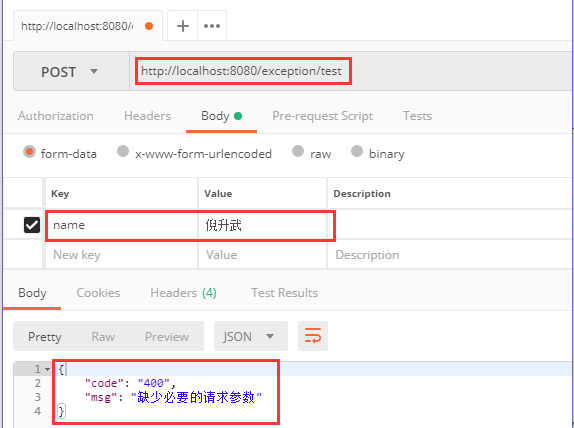

4. Use of swagger2

Swagger2 has been configured and tested, and its function is normal. Next, we start to use swagger2 to introduce several common annotations in swagger2, respectively on entity class, Controller class and methods in Controller. Finally, let's see how swagger2 presents online interface documents on the page, And test the data in the interface in combination with the methods in the Controller.