Installation configuration

Software installation

Download address: https://www.dameng.com/view_61.html

This article uses DM8 for x86 Win64 as the example.

After installation, open the DM Database Configuration Assistant to create the database, set the character set to UTF-8, and turn off case sensitivity.

Next, create a tablespace and a user. It is best to give each database its own tablespace and its own user. When creating the user, specify the corresponding tablespace.

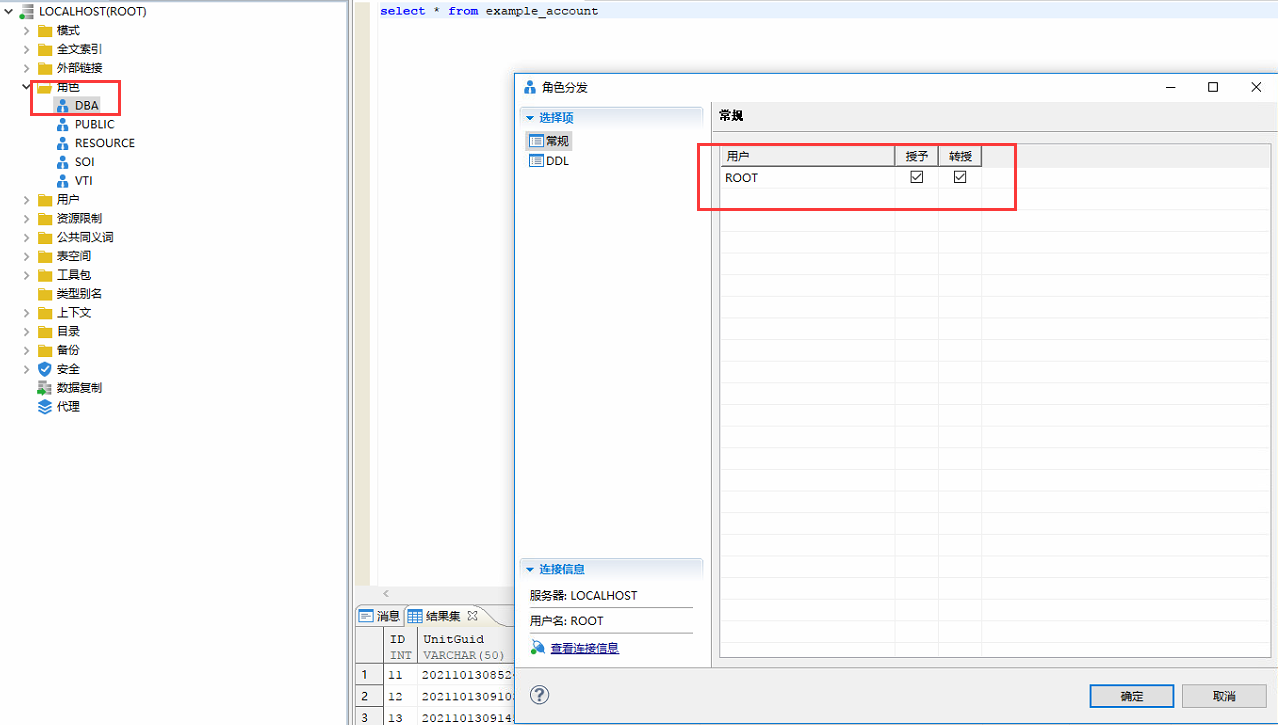

The user must be granted the DBA permission.

Data table migration

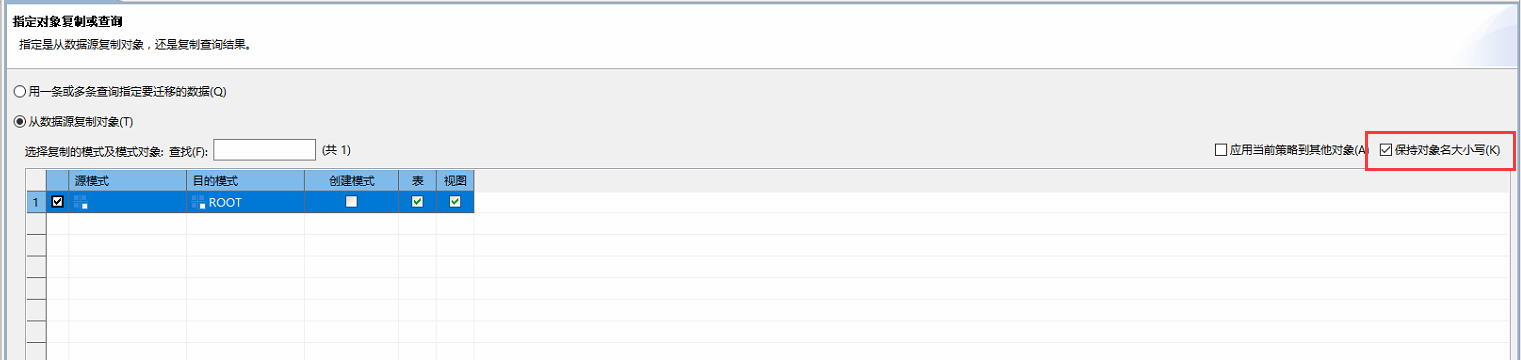

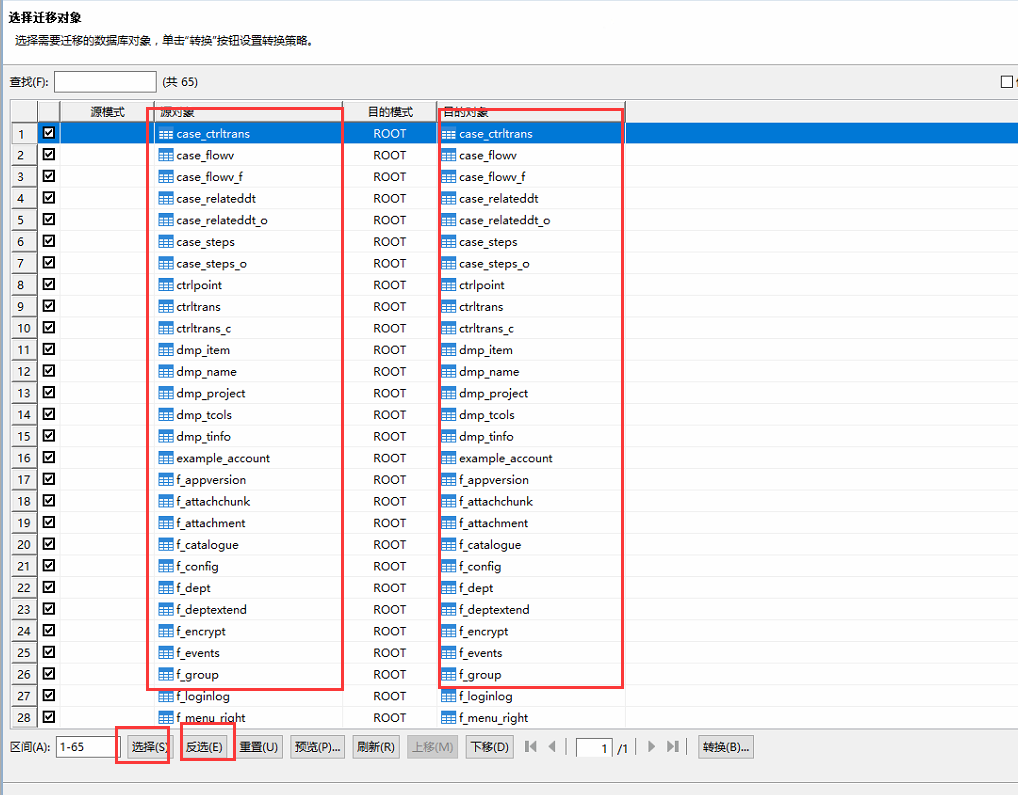

Existing project or framework databases need to be migrated to the Dameng database as well. This article uses MySQL 5.7 as the example. Open the DM data migration tool and make sure the case of object names is preserved. When selecting tables, deselect all first and then select all. The migrated table names and column names will then match the original database.

Maven dependencies

    <dependency>
        <groupId>com.dameng</groupId>
        <artifactId>DmJdbcDriver18</artifactId>
        <version>8.1.1.193</version>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>druid</artifactId>
        <version>1.2.0</version>
    </dependency>

Database configuration

Use Druid to manage the connection pool, and remove the wall filter from the filters setting; otherwise an error is reported.

    spring:
      datasource:
        type: com.alibaba.druid.pool.DruidDataSource
        driverClassName: dm.jdbc.driver.DmDriver
        url: jdbc:dm://localhost:5236/ROOT?zeroDateTimeBehavior=convertToNull&useUnicode=true&characterEncoding=utf-8
        username: ROOT
        password: abcd@1234
        filters: stat,slf4j

Compatibility code

Map to LinkedHashMap

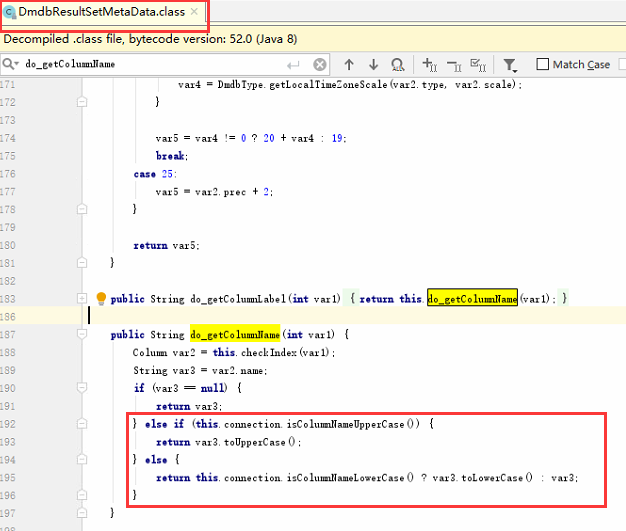

The Dameng database driver forces column names to upper case. This changes the case of the keys in the data that some interfaces return to the front end, and the impact is wide.

JdbcTemplate processing

Common query operations can be wrapped around JdbcTemplate. Call the query method with a custom ResultSetExtractor to get the native JDBC ResultSet, take its ResultSetMetaData, and cast it to DmdbResultSetMetaData. Its columns field is private and has no accessor, so read it through reflection. The columns field gives the actual column names as defined in the database.
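The reflection step can be looked at in isolation. The following is a minimal sketch, not the driver's code: FakeMetaData is a hypothetical stand-in for a class such as DmdbResultSetMetaData that keeps its column data in a private field, and PrivateFieldReader is an illustrative helper name.

```java
import java.lang.reflect.Field;

// Hypothetical stand-in for a driver metadata class that stores its
// column names in a private field and exposes no accessor for it.
final class FakeMetaData {
    private final String[] columns;

    FakeMetaData(String... columns) {
        this.columns = columns;
    }
}

final class PrivateFieldReader {
    // Reads a private field declared on the target's own class and casts it to the expected type.
    static <T> T read(Object target, String fieldName, Class<T> type) {
        try {
            Field field = target.getClass().getDeclaredField(fieldName);
            field.setAccessible(true); // bypass the private modifier
            return type.cast(field.get(target));
        } catch (ReflectiveOperationException e) {
            // The field name is an implementation detail and may change between versions
            throw new IllegalStateException("Cannot read field " + fieldName, e);
        }
    }

    public static void main(String[] args) {
        FakeMetaData meta = new FakeMetaData("userName", "createTime");
        for (String column : read(meta, "columns", String[].class)) {
            System.out.println(column);
        }
    }
}
```

Because this relies on a private field name, it can break when the driver is upgraded, so it is worth failing loudly when the field is missing.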

    public List<LinkedHashMap<String, Object>> findListByParam(String sqlText, Map<String, Object> map) {
        final List<LinkedHashMap<String, Object>> result = new ArrayList<>();
        List<Object> paramList = new ArrayList<>();

        // Collect the value of each #{xxxx} placeholder in order of appearance
        Pattern pattern = Pattern.compile("#\\{(?<RegxName>[\\w.]*)\\}");
        Matcher matcher = pattern.matcher(sqlText);
        while (matcher.find()) {
            String paramName = matcher.group("RegxName");
            Object paramValue = map.get(paramName);
            logger.debug("[paramName]: " + paramName);
            logger.debug("[paramValue]: " + paramValue);
            paramList.add(paramValue);
        }
        // Replace every placeholder with a JDBC parameter marker
        String sql = matcher.replaceAll(" ? ");
        logger.debug("[sqlText]: " + sql);

        final boolean isDm = dataBaseInfoUtil.getUsingDataBaseType() == GlobalEnum.DataBaseType.DM;
        jdbcTemplate.query(sql, paramList.toArray(), new ResultSetExtractor<Object>() {
            @Override
            public Object extractData(ResultSet rs) throws SQLException, DataAccessException {
                ResultSetMetaData rsMetaData = rs.getMetaData();
                Column[] dmColumns = null;
                if (isDm) {
                    // Unwrap Druid's proxy, then read the driver's private "columns" field,
                    // which holds the column names in their original case
                    ResultSetMetaDataProxyImpl proxy = (ResultSetMetaDataProxyImpl) rsMetaData;
                    DmdbResultSetMetaData dmMetaData = (DmdbResultSetMetaData) proxy.getRawObject();
                    try {
                        Field field = DmdbResultSetMetaData.class.getDeclaredField("columns");
                        field.setAccessible(true);
                        dmColumns = (Column[]) field.get(dmMetaData);
                    } catch (ReflectiveOperationException e) {
                        // Fail loudly instead of swallowing the error and returning an empty list
                        throw new IllegalStateException("Cannot read DM column metadata", e);
                    }
                }
                int columnCount = rsMetaData.getColumnCount();
                while (rs.next()) {
                    LinkedHashMap<String, Object> resultItem = new LinkedHashMap<>();
                    for (int i = 1; i <= columnCount; i++) {
                        String columnName = isDm ? dmColumns[i - 1].name : rsMetaData.getColumnName(i);
                        // Read by index so the lookup does not depend on the case of the name
                        resultItem.put(columnName, rs.getObject(i));
                    }
                    result.add(resultItem);
                }
                return null; // rows are collected in the enclosing result list
            }
        });
        return result;
    }
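The placeholder handling in the method above can also be done in a single pass. The following is a minimal, self-contained sketch using only the JDK; the class name NamedSql and its fields are illustrative assumptions, not part of the original project. Unlike the method above, it rejects a placeholder that has no value in the map rather than binding null.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Illustrative helper: turns "#{name}" placeholders into "?" markers
// and collects the matching values in the order they appear.
final class NamedSql {
    private static final Pattern PLACEHOLDER = Pattern.compile("#\\{([\\w.]+)\\}");

    final String sql;        // SQL text with every placeholder replaced by ?
    final List<Object> args; // values in placeholder order

    private NamedSql(String sql, List<Object> args) {
        this.sql = sql;
        this.args = args;
    }

    static NamedSql parse(String sqlText, Map<String, Object> params) {
        Matcher matcher = PLACEHOLDER.matcher(sqlText);
        StringBuffer sql = new StringBuffer();
        List<Object> args = new ArrayList<>();
        while (matcher.find()) {
            String name = matcher.group(1);
            if (!params.containsKey(name)) {
                throw new IllegalArgumentException("No value for parameter: " + name);
            }
            args.add(params.get(name));
            matcher.appendReplacement(sql, "?");
        }
        matcher.appendTail(sql); // copy the text after the last placeholder
        return new NamedSql(sql.toString(), args);
    }

    public static void main(String[] unused) {
        Map<String, Object> params = new HashMap<>();
        params.put("id", 7);
        params.put("name", "dm");
        NamedSql parsed = parse("SELECT * FROM t WHERE id = #{id} AND name = #{name}", params);
        System.out.println(parsed.sql);
        System.out.println(parsed.args);
    }
}
```

The resulting sql and args could then be passed to a JdbcTemplate query in the same way as in the method above.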

Unifying the data source with MyBatis

When a transaction is in use, queries go through JdbcTemplate and updates go through MyBatis. If the two use different data sources, then under some isolation levels the queries cannot see data that the transaction has not yet committed. Both must therefore use the same Druid-managed data source. The dynamicDataSource here is my own data source object; it extends Spring's AbstractRoutingDataSource to handle multiple data sources.

    @Bean
    public SqlSessionFactory sqlSessionFactory() throws Exception {
        //SpringBootExecutableJarVFS.addImplClass(SpringBootVFS.class);
        final PackagesSqlSessionFactoryBean sessionFactory = new PackagesSqlSessionFactoryBean();
        sessionFactory.setDataSource(dynamicDataSource());
        sessionFactory.setMapperLocations(new PathMatchingResourcePatternResolver()
                .getResources("classpath*:mybatis/**/*Mapper.xml"));
        // Disable underscore-to-camel-case mapping so column names with underscores are kept as-is
        SqlSessionFactory factory = sessionFactory.getObject();
        factory.getConfiguration().setMapUnderscoreToCamelCase(false);
        return factory;
    }

    @Bean
    public JdbcTemplate jdbcTemplate() throws Exception {
        // Use the same data source as MyBatis; fail at startup rather than register a null bean
        return new JdbcTemplate(dynamicDataSource());
    }

Map to entity class

Convert all query results into LinkedHashMap key-value pairs first, and then map them to the corresponding entity classes through BeanMap.
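The text does not say which BeanMap implementation is used. As a rough illustration of the idea only, the following sketch uses the JDK's java.beans.Introspector as a stand-in: it copies map entries onto a bean whose property names match the keys exactly. MapToBean and User are hypothetical names.

```java
import java.beans.BeanInfo;
import java.beans.IntrospectionException;
import java.beans.Introspector;
import java.beans.PropertyDescriptor;
import java.lang.reflect.Method;
import java.util.LinkedHashMap;
import java.util.Map;

final class MapToBean {
    // Hypothetical entity used only for this demonstration.
    public static class User {
        private String userName;
        private Integer age;

        public String getUserName() { return userName; }
        public void setUserName(String userName) { this.userName = userName; }
        public Integer getAge() { return age; }
        public void setAge(Integer age) { this.age = age; }
    }

    // Creates a bean and calls the setter of every property that has a key in the row.
    static <T> T convert(Map<String, Object> row, Class<T> type) {
        try {
            T bean = type.getDeclaredConstructor().newInstance();
            BeanInfo info = Introspector.getBeanInfo(type, Object.class);
            for (PropertyDescriptor property : info.getPropertyDescriptors()) {
                Method setter = property.getWriteMethod();
                // Keys are matched case-sensitively against property names
                if (setter != null && row.containsKey(property.getName())) {
                    setter.invoke(bean, row.get(property.getName()));
                }
            }
            return bean;
        } catch (ReflectiveOperationException | IntrospectionException e) {
            throw new IllegalStateException("Cannot map row to " + type.getName(), e);
        }
    }

    public static void main(String[] args) {
        Map<String, Object> row = new LinkedHashMap<>();
        row.put("userName", "alice");
        row.put("age", 30);
        User user = convert(row, User.class);
        System.out.println(user.getUserName() + " " + user.getAge());
    }
}
```

Because keys are matched case-sensitively, a row keyed by upper-cased column names would leave these properties unset, which is why the original column case matters for this step.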

CLOB long text processing

    Object value = map.get(resultkey);
    if (value instanceof ClobProxyImpl) {
        try {
            // Read the whole CLOB as a String; CLOB positions start at 1
            ClobProxyImpl clob = (ClobProxyImpl) value;
            value = clob.getSubString(1, (int) clob.length());
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
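The same conversion can be written against the standard java.sql.Clob interface instead of a specific wrapper class. This is a minimal sketch under the assumption that the value returned by the pool implements java.sql.Clob; ClobText is a hypothetical helper name, and SerialClob from the JDK stands in for a real database CLOB.

```java
import java.sql.Clob;
import java.sql.SQLException;
import javax.sql.rowset.serial.SerialClob;

final class ClobText {
    // Returns the CLOB content as a String; any other value is returned unchanged.
    static Object unwrap(Object value) {
        if (!(value instanceof Clob)) {
            return value;
        }
        Clob clob = (Clob) value;
        try {
            long length = clob.length();
            if (length == 0) {
                return ""; // some implementations reject position 1 on an empty CLOB
            }
            if (length > Integer.MAX_VALUE) {
                throw new IllegalStateException("CLOB is too large for a single String");
            }
            return clob.getSubString(1, (int) length); // positions are 1-based
        } catch (SQLException e) {
            throw new IllegalStateException("Cannot read CLOB", e);
        }
    }

    public static void main(String[] args) throws SQLException {
        Object value = new SerialClob("long text".toCharArray());
        System.out.println(unwrap(value));
    }
}
```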

BLOB binary processing

    Object value = map.get(resultkey);
    if (value instanceof DmdbBlob) {
        try {
            // Read the whole BLOB stream into a byte array
            DmdbBlob dmdbBlob = (DmdbBlob) value;
            value = FileUtil.convertStreamToByte(dmdbBlob.getBinaryStream());
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
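FileUtil.convertStreamToByte is a project helper whose body is not shown. The following sketch shows one way such a stream-to-bytes step might look with only the JDK, written against the standard java.sql.Blob interface on the assumption that the driver's BLOB class implements it. BlobBytes is a hypothetical name, and SerialBlob stands in for a real database BLOB.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.sql.Blob;
import java.sql.SQLException;
import javax.sql.rowset.serial.SerialBlob;

final class BlobBytes {
    // Copies everything from the stream into a byte array.
    static byte[] readAll(InputStream in) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buffer = new byte[8192];
        int read;
        while ((read = in.read(buffer)) != -1) {
            out.write(buffer, 0, read);
        }
        return out.toByteArray();
    }

    // Returns the BLOB content as byte[]; any other value is returned unchanged.
    static Object unwrap(Object value) {
        if (!(value instanceof Blob)) {
            return value;
        }
        // try-with-resources closes the stream even if reading fails
        try (InputStream in = ((Blob) value).getBinaryStream()) {
            return readAll(in);
        } catch (SQLException | IOException e) {
            throw new IllegalStateException("Cannot read BLOB", e);
        }
    }

    public static void main(String[] args) throws SQLException {
        byte[] bytes = (byte[]) unwrap(new SerialBlob(new byte[] {1, 2, 3}));
        System.out.println(bytes.length);
    }
}
```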