Main points:

1. Use of ACL in Squid

2. Log Analysis

3. Reverse Proxy

1. Use of ACL in Squid

(1) ACL access control methods:

1. Define a list based on source address, destination URL, file type, etc.

acl list_name list_type list_content ...

2. Apply allow or deny rules to the defined ACL lists

http_access allow|deny list_name ...

(2) ACL rule priority:

When a user accesses the proxy server, Squid checks the http_access rules in the order in which they are defined and stops at the first rule that matches. If no rule matches, Squid applies the opposite of the last rule: if the last rule is deny, the request is allowed, and if the last rule is allow, the request is denied.
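The first-match behaviour described above can be sketched in a few lines of Python. This is only an illustrative model, not Squid's implementation: the `evaluate` function, the `Rule` type, and the request dictionary are hypothetical names introduced here, and each ACL is reduced to a simple predicate.

```python
from typing import Callable, Dict, List, Tuple

# Each rule pairs an action ("allow" or "deny") with a predicate standing in for an ACL.
Rule = Tuple[str, Callable[[Dict[str, str]], bool]]


def evaluate(rules: List[Rule], request: Dict[str, str]) -> str:
    """Return "allow" or "deny" for a request using first-match semantics."""
    for action, acl_matches in rules:
        if acl_matches(request):
            return action  # the first matching rule wins; later rules are ignored
    if not rules:
        return "deny"  # with no rules at all, the request is denied
    # No rule matched: apply the opposite of the last rule's action.
    last_action = rules[-1][0]
    return "allow" if last_action == "deny" else "deny"


# Hypothetical rule set mirroring a single "http_access deny" line for one client.
rules: List[Rule] = [
    ("deny", lambda req: req["src"] == "192.168.220.128"),
]
print(evaluate(rules, {"src": "192.168.220.128"}))  # matched by the deny rule
print(evaluate(rules, {"src": "192.168.220.129"}))  # no match, so the last rule is inverted
```

One practical consequence of this model: a configuration whose only rule is a deny implicitly allows every request that does not match it, so it is usually safer to end the rule list with an explicit catch-all.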

(3) Common ACL list types:

src -> source address

dst -> destination address

port -> destination port

dstdomain -> destination domain

time -> access time

maxconn -> maximum number of concurrent connections

url_regex -> regular expression matched against the whole target URL

urlpath_regex -> regular expression matched against the path part of the target URL

(4) Operation demonstration:

Note: The proxy function must already be working, with port 3128 open on the Squid proxy server. Restart the service every time you change the configuration file.

| Role | IP Address |
| --- | --- |
| Web server | 192.168.220.136 |
| Squid Proxy Server | 192.168.220.131 |
| Client | 192.168.220.128 |

Modify the /etc/squid.conf file

Add the following code:

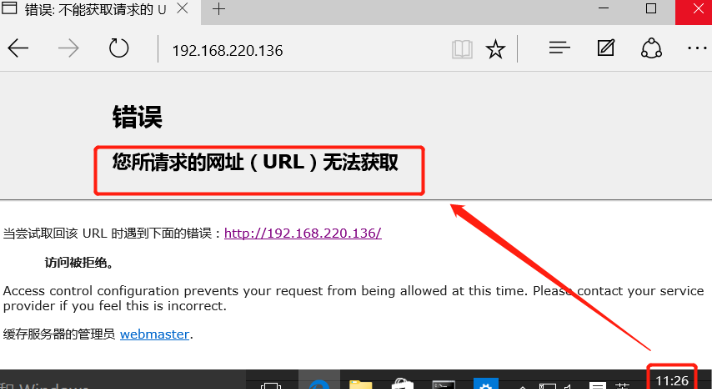

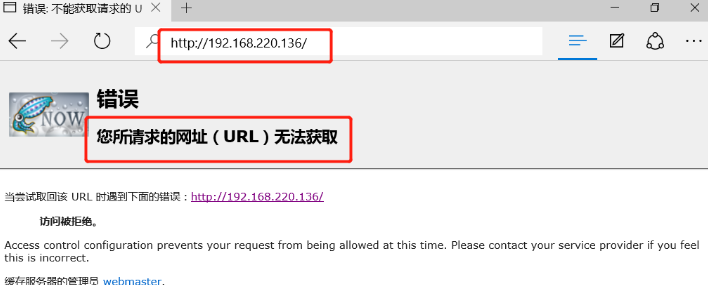

1. Restriction based on IP address:

Define an ACL named hostlocal (the name is arbitrary) that matches the client address, then deny access for it:

acl hostlocal src 192.168.220.128/32

http_access deny hostlocal

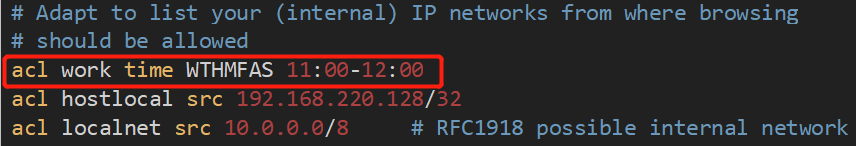

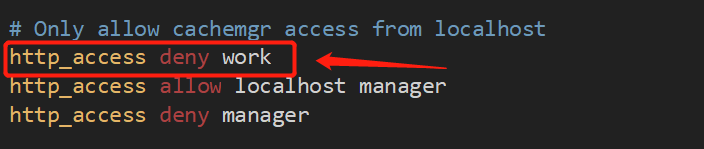

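2. Restriction based on time:

Define an ACL named work that covers 11:00-12:00 on every day of the week (the letters are Squid's day codes: M, T, W, H, F, A, S for Monday through Sunday), then deny access for it:

acl work time WTHMFAS 11:00-12:00

http_access deny work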
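To make the day letters and the time window concrete, here is a rough Python sketch of how such a time rule could be checked. The function name `time_acl_matches` is hypothetical, and treating both ends of the window as inclusive is an assumption made for illustration rather than a statement about Squid's exact boundary handling.

```python
from datetime import datetime

# Squid day letters mapped to Python's weekday() numbers (Monday == 0, Sunday == 6).
DAY_CODES = {"M": 0, "T": 1, "W": 2, "H": 3, "F": 4, "A": 5, "S": 6}


def time_acl_matches(days: str, window: str, now: datetime) -> bool:
    """Check whether `now` falls on one of `days` and inside the HH:MM-HH:MM window."""
    start_text, end_text = window.split("-")
    start = datetime.strptime(start_text, "%H:%M").time()
    end = datetime.strptime(end_text, "%H:%M").time()
    allowed_days = {DAY_CODES[letter] for letter in days}
    # Assumption: both ends of the window are treated as inclusive here.
    return now.weekday() in allowed_days and start <= now.time() <= end


# 3 January 2024 is a Wednesday.
print(time_acl_matches("WTHMFAS", "11:00-12:00", datetime(2024, 1, 3, 11, 30)))
print(time_acl_matches("WTHMFAS", "11:00-12:00", datetime(2024, 1, 3, 12, 30)))
```

The order of the letters does not matter in this sketch because they are collected into a set, which is why WTHMFAS and MTWHFAS describe the same seven days.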

3. Restriction based on the destination address (that is, the web server address):

You can put the rejected destination addresses in a file.

1. Create the directory first, then create the list file:

mkdir /etc/squid

vim /etc/squid/dest.list

Add the rejected IP addresses to the file, one per line:

192.168.220.111

192.168.220.123

192.168.220.136

2. Make the rules:

vim /etc/squid.conf

acl destion dst "/etc/squid/dest.list"

http_access deny destion
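As a rough illustration of what a file-backed dst list expresses, the following Python sketch loads the same three addresses and tests a destination against them. The helper names `load_dest_list` and `is_denied` are hypothetical, and the sketch only handles literal IP addresses; it makes no attempt to resolve host names.

```python
import ipaddress
from typing import List

# Contents of the list file from the example above, one address per line.
DEST_LIST_TEXT = """\
192.168.220.111
192.168.220.123
192.168.220.136
"""


def load_dest_list(text: str) -> List[ipaddress.IPv4Network]:
    """Parse one address (or network) per line, skipping blank lines."""
    entries = [line.strip() for line in text.splitlines() if line.strip()]
    return [ipaddress.ip_network(entry, strict=False) for entry in entries]


def is_denied(dest_ip: str, networks: List[ipaddress.IPv4Network]) -> bool:
    """Return True when the destination address appears in the deny list."""
    address = ipaddress.ip_address(dest_ip)
    return any(address in network for network in networks)


networks = load_dest_list(DEST_LIST_TEXT)
print(is_denied("192.168.220.136", networks))  # the web server from the role table
print(is_denied("192.168.220.137", networks))  # an address that is not in the list
```

Keeping the addresses in a separate file means the list can grow without touching the rule lines themselves.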

2. Log Analysis

Step 1: Install the sarg tool

Sarg (Squid Analysis Report Generator) is a Squid log analysis tool. It produces HTML reports that list in detail, for each user, the sites visited, the time spent, rankings, the number of connections, the amount of traffic, and so on.

(1) Install the dependencies (the gd image processing library and pcre):

yum install -y gd gd-devel pcre

(2) Create the installation directory and unpack the source to the /opt/ directory:

mkdir /usr/local/sarg

tar zxvf sarg-2.3.7.tar.gz -C /opt/

(3) Configure the build (run from the unpacked source directory under /opt/; --enable-extraprotection turns on extra safety protection):

./configure --prefix=/usr/local/sarg \
--sysconfdir=/etc/sarg \
--enable-extraprotection

(4) Installation

make && make install

(5) Modify the main configuration file /etc/sarg/sarg.conf

vim /etc/sarg/sarg.conf

Enable the following settings (adjusting a few values). The text after // on each line is an explanation, not part of the setting:

access_log /usr/local/squid/var/logs/access.log // access log file to analyze

title "Squid User Access Reports" // page title

output_dir /var/www/html/squid-reports // report output directory

user_ip no // show user names rather than IP addresses

exclude_hosts /usr/local/sarg/noreport // file listing sites excluded from the rankings

topuser_sort_field connect reverse // sort the top-users report by number of connections, in descending order

user_sort_field reverse // sort each user's access records in descending order

overwrite_report no // whether to overwrite a report with the same name

mail_utility mail.postfix // command used to send the report by mail

charset UTF-8 // character set

weekdays 0-6 // days of the week included in the top rankings (0 is Sunday, 6 is Saturday)

www_document_root /var/www/html // web page root directory

(6) Create the file that lists excluded sites; domain names added to it will not appear in the rankings:

touch /usr/local/sarg/noreport

Create a symbolic link so that sarg is easy to run:

ln -s /usr/local/sarg/bin/sarg /usr/local/bin/

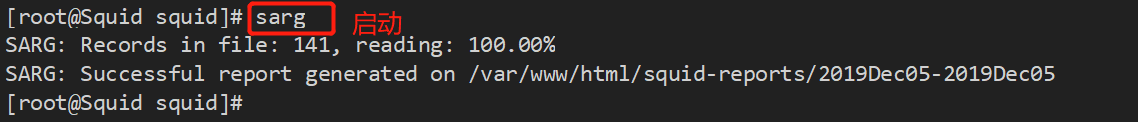

On:

Step 2: Install Apache

yum install httpd -y

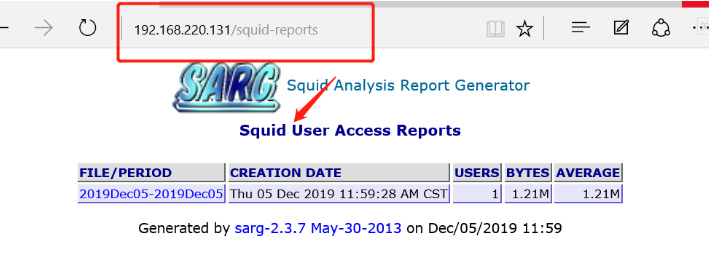

Step 3: Test on Client

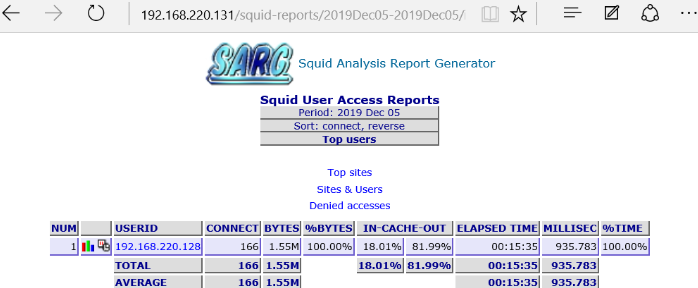

Browser access: http://192.168.220.131/squid-reports

The report shows detailed access records.

You can also use a periodically scheduled task to generate a report every day:

sarg -l /usr/local/squid/var/logs/access.log -o /var/www/html/squid-reports/ -z -d $(date -d "1 day ago" +%d/%m/%Y)-$(date +%d/%m/%Y)
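The date arithmetic in that command (yesterday through today, in day/month/year form) can be sketched in Python as follows. The function names `sarg_date_range` and `build_sarg_command` are hypothetical helpers introduced for illustration; the flags and paths are the ones used in the command above.

```python
from datetime import date, timedelta
from typing import List


def sarg_date_range(today: date) -> str:
    """Build the dd/mm/YYYY-dd/mm/YYYY range covering yesterday through today."""
    yesterday = today - timedelta(days=1)
    fmt = "%d/%m/%Y"
    return f"{yesterday.strftime(fmt)}-{today.strftime(fmt)}"


def build_sarg_command(today: date) -> List[str]:
    """Assemble the argument list for the daily report run shown above."""
    return [
        "sarg",
        "-l", "/usr/local/squid/var/logs/access.log",
        "-o", "/var/www/html/squid-reports/",
        "-z",
        "-d", sarg_date_range(today),
    ]


# Print the command line that a scheduled job would run today.
print(" ".join(build_sarg_command(date.today())))
```

Computing the range from a date object handles month and year boundaries automatically, which is the same job `date -d "1 day ago"` does in the shell version.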

3. Reverse Proxy

How the reverse proxy works:

The reverse proxy server sits between the local web servers and the Internet.

When a user's browser sends an HTTP request, domain name resolution directs the request to the reverse proxy server (to reverse-proxy several web servers, point the domain names of all of them at the reverse proxy server). The reverse proxy server then handles the request. Reverse proxies generally cache only cacheable data, such as HTML pages and images; the output of CGI scripts or programs such as ASP is not cached. The proxy caches static pages according to the HTTP header fields returned by the web server.
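The idea that caching decisions follow the response headers can be illustrated with a deliberately simplified Python sketch. This is not Squid's actual logic and it ignores most of the HTTP caching rules; the function name `is_cacheable` and the particular set of headers checked are assumptions chosen for illustration.

```python
from typing import Dict


def is_cacheable(headers: Dict[str, str]) -> bool:
    """Very rough cacheability check based on a response's HTTP headers."""
    normalized = {name.lower(): value for name, value in headers.items()}
    cache_control = normalized.get("cache-control", "")
    # Keep only the directive names, e.g. "max-age=3600" becomes "max-age".
    directives = {
        part.strip().split("=")[0].lower()
        for part in cache_control.split(",")
        if part.strip()
    }
    if directives & {"no-store", "private"}:
        return False  # the server asked shared caches not to keep this response
    if directives & {"public", "max-age", "s-maxage"}:
        return True  # the server gave explicit caching information
    # Fall back to validators and expiry headers typical of static files.
    return "expires" in normalized or "last-modified" in normalized


print(is_cacheable({"Cache-Control": "max-age=3600"}))  # typical static page or image
print(is_cacheable({"Cache-Control": "no-store"}))      # typical dynamic response
```

Static files served by a web server usually carry a Last-Modified header, while dynamically generated pages often carry none of these headers, which is roughly why the former tend to be cached and the latter do not.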

| Role | IP Address |
| --- | --- |
| Web Server 1 | 192.168.220.136 |
| Squid Proxy Server | 192.168.220.131 |
| Client | 192.168.220.128 |
| Web Server 2 | 192.168.220.137 |

1. Configure the Squid proxy server:

Modify the /etc/squid.conf file:

vim /etc/squid.conf

http_port 192.168.220.131:80 accel vhost vport

cache_peer 192.168.220.136 parent 80 0 no-query originserver round-robin max_conn=30 weight=1 name=web1

cache_peer 192.168.220.137 parent 80 0 no-query originserver round-robin max_conn=30 weight=1 name=web2

cache_peer_domain web1 web2 www.yun.com

Turn off the Apache service so that it does not occupy port 80, then restart Squid:

systemctl stop httpd.service

service squid restart
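The round-robin option on the two cache_peer lines means that requests are spread across web1 and web2 in turn. A minimal Python sketch of that rotation is shown below; the class name `RoundRobinPeers` is hypothetical, and the sketch ignores weights, connection limits, and peer health, all of which a real proxy would take into account.

```python
from itertools import cycle
from typing import Iterable


class RoundRobinPeers:
    """Hand out backend addresses in strict rotation."""

    def __init__(self, peers: Iterable[str]) -> None:
        self._rotation = cycle(list(peers))

    def next_peer(self) -> str:
        """Return the backend that should receive the next request."""
        return next(self._rotation)


# The two web servers from the role table above.
peers = RoundRobinPeers(["192.168.220.136", "192.168.220.137"])
for _ in range(4):
    print(peers.next_peer())
```

With equal weights, as in the configuration above, this rotation is why refreshing the test page is expected to alternate between the two web servers unless a cached copy is served instead.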

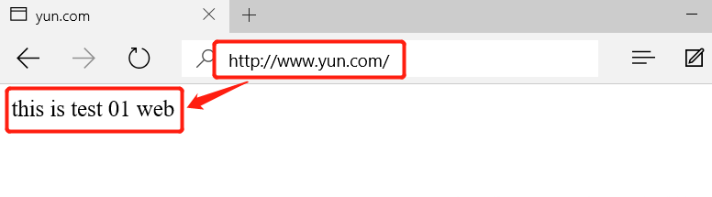

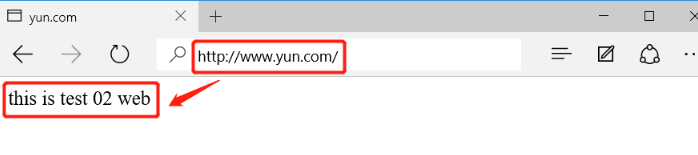

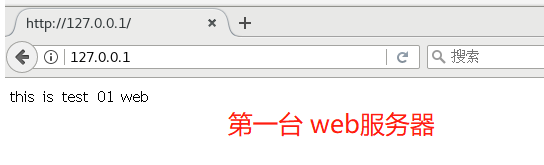

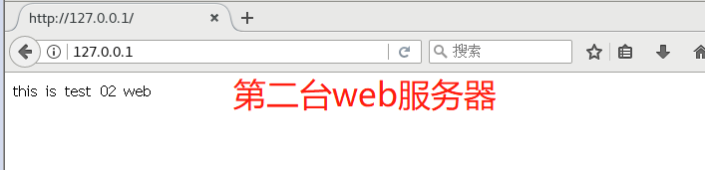

2. Create a test page on each of the two web servers:

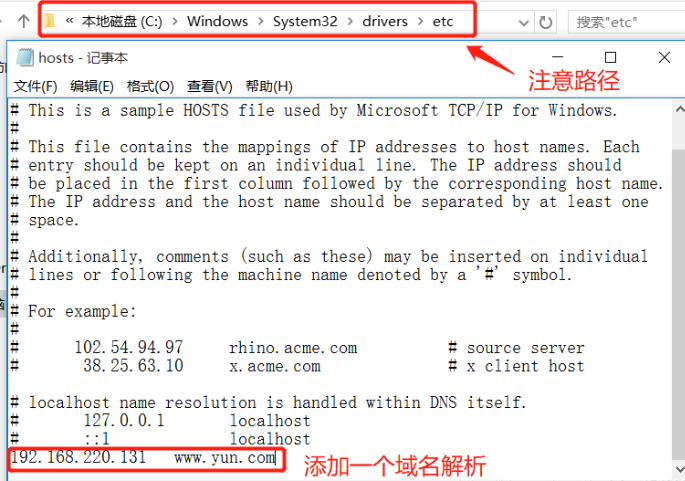

3. Configure domain name resolution on the client so that www.yun.com resolves to the proxy server:

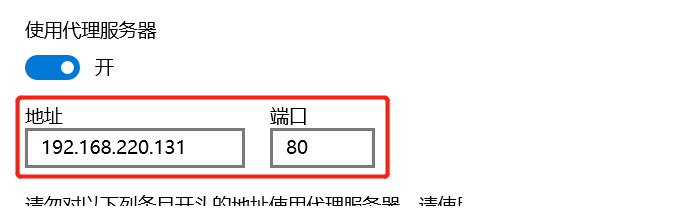

Modify the proxy server port:

4. Access http://www.yun.com/ in the browser