Synchronized and volatile in concurrent programming

Concept

Locks come up constantly in concurrent programming. Adding a lock keeps shared data safe when multiple threads access it. In Java every object can be used as a lock, and synchronized uses one in three ways:

- For an ordinary synchronized method, the lock is the current instance object.
- For a static synchronized method, the lock is the Class object of the current class.
- For a synchronized block, the lock is the object given in the parentheses.
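A minimal sketch of the three forms. It uses the standard `Thread.holdsLock(Object)` to check which monitor the current thread owns inside each one; the class name `LockForms` and the private `lock` field are illustrative, not from the original:

```java
public class LockForms {

    // An arbitrary object used as the lock for the synchronized block
    private final Object lock = new Object();

    // 1. Ordinary synchronized method: the lock is the current instance (this)
    public synchronized boolean instanceMethodLocksThis() {
        return Thread.holdsLock(this);
    }

    // 2. Static synchronized method: the lock is the Class object LockForms.class
    public static synchronized boolean staticMethodLocksClass() {
        return Thread.holdsLock(LockForms.class);
    }

    // 3. Synchronized block: the lock is the object named in the parentheses
    public boolean blockLocksGivenObject() {
        synchronized (lock) {
            // We own `lock`, but not the monitor of `this`
            return Thread.holdsLock(lock) && !Thread.holdsLock(this);
        }
    }

    public static void main(String[] args) {
        LockForms forms = new LockForms();
        System.out.println(forms.instanceMethodLocksThis()); // true
        System.out.println(staticMethodLocksClass());        // true
        System.out.println(forms.blockLocksGivenObject());   // true
    }
}
```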

The locks in common use are synchronized and Lock. synchronized is a keyword, while Lock is an interface. Its implementations include ReentrantLock, ReentrantReadWriteLock.ReadLock and ReentrantReadWriteLock.WriteLock. ReadLock and WriteLock are nested classes of the read-write lock class ReentrantReadWriteLock, which implements the ReadWriteLock interface. To learn more about Lock, see its dedicated blog post.
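For comparison, here is a sketch of the Lock interface in use, assuming a hypothetical class `LockInterfaceDemo` that guards a counter with a `ReentrantLock` and a map-based cache with a `ReentrantReadWriteLock`. The key difference from synchronized is that `unlock()` must be called explicitly, normally in a `finally` block:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

public class LockInterfaceDemo {

    // ReentrantLock: an explicit, reentrant mutual-exclusion lock
    private final Lock counterLock = new ReentrantLock();
    private int counter = 0;

    // ReentrantReadWriteLock hands out a ReadLock and a WriteLock (its nested classes)
    private final ReentrantReadWriteLock rwLock = new ReentrantReadWriteLock();
    private final Map<String, String> cache = new HashMap<>();

    public int increment() {
        counterLock.lock();
        try {
            return ++counter;
        } finally {
            counterLock.unlock(); // unlike synchronized, release is not automatic
        }
    }

    public String get(String key) {
        rwLock.readLock().lock(); // several readers may hold the read lock at once
        try {
            return cache.get(key);
        } finally {
            rwLock.readLock().unlock();
        }
    }

    public void put(String key, String value) {
        rwLock.writeLock().lock(); // the write lock is exclusive
        try {
            cache.put(key, value);
        } finally {
            rwLock.writeLock().unlock();
        }
    }
}
```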

synchronized

Locked object

synchronized is the long-standing built-in lock in Java. It can be applied to instance methods, static methods, and code blocks.

If synchronized is applied to a method, then once thread 1 acquires the lock and runs the method body, no other thread can acquire it. After thread 1 releases the lock, another thread can acquire it and run the method body, which makes execution thread-safe. If an exception is thrown out of the method body while thread 1 is executing it, the lock is released automatically. (If, in some scenario, the thread must keep running even when an exception occurs in the synchronized method body, catch the exception inside the body so that it does not propagate out and the lock is not released.)

```java
public class SynchronizedDemo {

    int count = 0;

    public static void main(String[] args) {
        SynchronizedDemo synchronizedDemo = new SynchronizedDemo();
        new Thread(() -> synchronizedDemo.test(), "t1").start();
        new Thread(() -> synchronizedDemo.test(), "t2").start();
    }

    // Ordinary synchronized method: the lock is synchronizedDemo (this)
    public synchronized void test() {
        System.out.println(Thread.currentThread().getName() + ",start");
        while (true) {
            count++;
            System.out.println(Thread.currentThread().getName() + ",count: " + count);
            try {
                Thread.sleep(1000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            try {
                if (count == 5) {
                    int i = 1 / 0; // deliberately throws ArithmeticException
                    System.out.println(i);
                }
            } catch (Exception e) {
                // Caught inside the method, so the exception never leaves the
                // synchronized body and t1 keeps the lock
                e.printStackTrace();
            }
        }
    }
}
```

Analysis: when count == 5 an ArithmeticException is thrown, but because it is caught inside the method, it never leaves the synchronized body, so thread t1 keeps the lock and keeps running. The output is:

```
t1,start
t1,count: 1
t1,count: 2
t1,count: 3
t1,count: 4
t1,count: 5
t1,count: 6
java.lang.ArithmeticException: / by zero
	at com.xrds.springbootdemo.jmm.SynchronizedDemo.test(SynchronizedDemo.java:35)
	at com.xrds.springbootdemo.jmm.SynchronizedDemo.lambda$main$0(SynchronizedDemo.java:15)
	at java.lang.Thread.run(Thread.java:745)
t1,count: 7
t1,count: 8
```

If the exception at count == 5 is not handled (the try/catch around the division is removed), it propagates out of the method, thread t1 releases the lock, and thread t2 acquires it. The output is:

```
t1,start
t1,count: 1
t1,count: 2
t1,count: 3
t1,count: 4
t1,count: 5
t2,start
Exception in thread "t1" java.lang.ArithmeticException: / by zero
t2,count: 6
	at com.xrds.springbootdemo.jmm.SynchronizedDemo.test(SynchronizedDemo.java:34)
	at com.xrds.springbootdemo.jmm.SynchronizedDemo.lambda$main$0(SynchronizedDemo.java:15)
	at java.lang.Thread.run(Thread.java:745)
t2,count: 7
```

Because synchronized releases the lock when an exception escapes the synchronized body, take care when concurrent code must update data in several database tables atomically: if an exception occurs halfway through, it must be handled or the changes rolled back, otherwise other threads that then acquire the lock will read inconsistent data.
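A sketch of the rollback idea, assuming two hypothetical in-memory maps (`accounts` and `auditLog`) stand in for database tables. The method restores the old balances before rethrowing, so by the time the exception leaves the synchronized method and the lock is released, the next thread sees consistent data:

```java
import java.util.HashMap;
import java.util.Map;

public class TransferDemo {

    // Hypothetical in-memory "tables" standing in for database tables
    private final Map<String, Integer> accounts = new HashMap<>();
    private final Map<String, Integer> auditLog = new HashMap<>();

    public TransferDemo() {
        accounts.put("alice", 100);
        accounts.put("bob", 0);
    }

    // Both balances must change together. If something fails midway,
    // undo the partial update before the exception leaves the method
    // (which is also the moment the lock is released).
    public synchronized void transfer(String from, String to, int amount, boolean failMidway) {
        int oldFrom = accounts.get(from);
        int oldTo = accounts.get(to);
        try {
            accounts.put(from, oldFrom - amount);
            if (failMidway) {
                throw new IllegalStateException("simulated failure");
            }
            accounts.put(to, oldTo + amount);
            auditLog.merge(from + "->" + to, amount, Integer::sum); // last step
        } catch (RuntimeException e) {
            // Roll back so other threads never observe a half-finished transfer
            accounts.put(from, oldFrom);
            accounts.put(to, oldTo);
            throw e;
        }
    }

    public synchronized int balance(String name) {
        return accounts.get(name);
    }

    public synchronized int auditEntries() {
        return auditLog.size();
    }
}
```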

synchronized is reentrant: if two methods are both synchronized on the same object, method 1 can call method 2 while holding the lock, because the lock recognizes that the requesting thread already owns it.

```java
public class SynchronizedDemo {

    public static void main(String[] args) {
        SynchronizedDemo synchronizedDemo = new SynchronizedDemo();
        new Thread(() -> synchronizedDemo.test1(), "t1").start();
        new Thread(() -> synchronizedDemo.test2(), "t2").start();
    }

    public synchronized void test1() {
        System.out.println(Thread.currentThread().getName() + ",test1 start");
        try {
            Thread.sleep(3000);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        // Re-enters the lock this thread already holds on the same object
        test2();
    }

    public synchronized void test2() {
        System.out.println(Thread.currentThread().getName() + ",test2 start");
        try {
            Thread.sleep(1000);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.out.println("end");
    }
}
```

Analysis: here both threads use the same object, synchronizedDemo. test1 calls test2, and both methods are synchronized, so both lock the current object. Thread t1 starts and acquires the lock on synchronizedDemo; because the lock is reentrant, t1 can call test2 from inside test1. Thread t2 cannot acquire the lock and must wait until t1 releases it before it can call test2. The output is:

```
t1,test1 start
t1,test2 start
end
t2,test2 start
end
```

If the two threads use two different objects, their locks do not interfere with each other. For example:

```java
SynchronizedDemo synchronizedDemo1 = new SynchronizedDemo();
SynchronizedDemo synchronizedDemo2 = new SynchronizedDemo();
new Thread(() -> synchronizedDemo1.test1(), "t1").start();
new Thread(() -> synchronizedDemo2.test2(), "t2").start();
```

The output is then:

```
t1,test1 start
t2,test2 start
end
t1,test2 start
end
```

This example shows that synchronized is reentrant and that, for an ordinary synchronized method, the lock is the current instance object.
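Reentrancy can also be checked directly. In this sketch (the class name `ReentrancyDemo` is illustrative), `outer()` calls `inner()` on the same object; the call does not block, and the standard `Thread.holdsLock(this)` confirms the thread still owns the monitor:

```java
public class ReentrancyDemo {

    // Acquires the monitor of `this`
    public synchronized boolean outer() {
        // Calling another synchronized method on the same object does not block,
        // because the current thread already owns the monitor
        return inner();
    }

    public synchronized boolean inner() {
        // Reports whether the current thread owns this object's monitor
        return Thread.holdsLock(this);
    }

    public static void main(String[] args) {
        ReentrancyDemo demo = new ReentrancyDemo();
        System.out.println("reentered while holding lock: " + demo.outer());
        // Once outer() returns, the lock has been fully released
        System.out.println("holds lock after return: " + Thread.holdsLock(demo));
    }
}
```

Running `main` prints `true` for the first line and `false` for the second.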

If test1 and test2 are declared static, the lock used by synchronized is the Class object of the current class, because static methods belong to the class rather than to an instance:

```java
public class SynchronizedDemo {

    public static void main(String[] args) {
        new Thread(() -> SynchronizedDemo.test1(), "t1").start();
        new Thread(() -> SynchronizedDemo.test2(), "t2").start();
    }

    // static synchronized: the lock is SynchronizedDemo.class
    public static synchronized void test1() {
        System.out.println(Thread.currentThread().getName() + ",test1 start");
        try {
            Thread.sleep(3000);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        test2();
    }

    public static synchronized void test2() {
        System.out.println(Thread.currentThread().getName() + ",test2 start");
        try {
            Thread.sleep(1000);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.out.println("end");
    }
}
```

Analysis:

Because test1 and test2 are static, they are invoked on the class rather than on an instance. Once thread t1 acquires the lock, which is the Class object of SynchronizedDemo, thread t2 blocks when it calls test2. The output is:

```
t1,test1 start
t1,test2 start
end
t2,test2 start
end
```
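A sketch showing that a static synchronized method locks the same monitor as an explicit `synchronized (StaticLockDemo.class)` block, and that this is a different lock from the instance monitor used by ordinary synchronized methods (`StaticLockDemo` is an illustrative name):

```java
public class StaticLockDemo {

    // static synchronized method: locks StaticLockDemo.class
    public static synchronized boolean staticMethod() {
        return Thread.holdsLock(StaticLockDemo.class);
    }

    // Explicit block on the Class object: the same monitor as above
    public static boolean explicitBlock() {
        synchronized (StaticLockDemo.class) {
            return Thread.holdsLock(StaticLockDemo.class);
        }
    }

    // Ordinary synchronized method: locks `this`, not the Class object
    public synchronized boolean instanceMethodHoldsClassLock() {
        return Thread.holdsLock(StaticLockDemo.class);
    }

    public static void main(String[] args) {
        System.out.println(staticMethod());                                      // true
        System.out.println(explicitBlock());                                     // true
        System.out.println(new StaticLockDemo().instanceMethodHoldsClassLock()); // false
    }
}
```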

Lock upgrade

synchronized has long been the workhorse of multithreaded Java programming, and many people call it a heavyweight lock. However, Java SE 1.6 introduced biased locks and lightweight locks, together with a new lock storage structure and upgrade process, to reduce the cost of acquiring and releasing locks.

A lock has four states: unlocked, biased, lightweight, and heavyweight. A lock can be upgraded from a lower state to a higher one, but the upgrade is irreversible.

Lock upgrading is also called lock inflation.

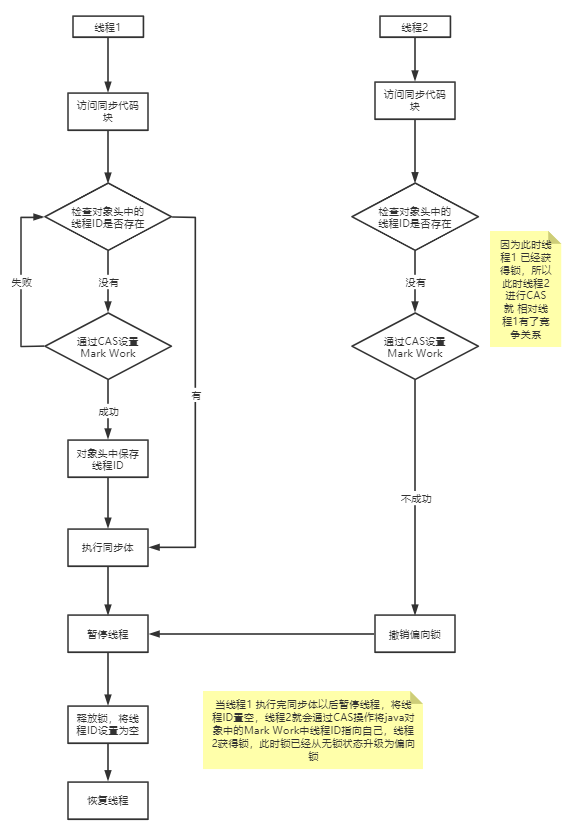

Biased lock

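When a lock goes from the unlocked state to the biased state, the acquiring thread stores its thread ID in the Java object header (the Mark Word). Note that biased locking was deprecated and disabled by default in JDK 15 (JEP 374). The process is shown below: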

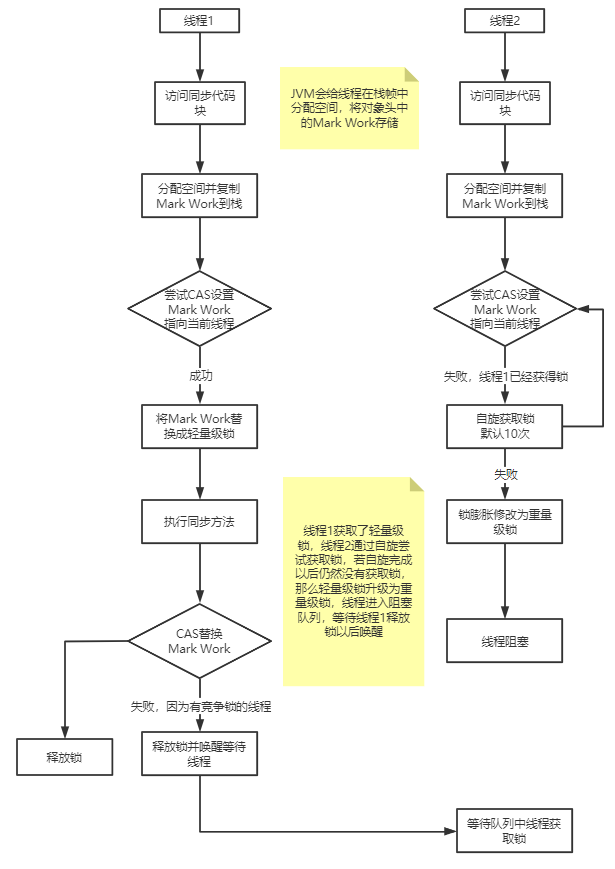

Lightweight Locking

A thread acquires a lightweight lock by spinning: if the lock is taken, the thread retries in a loop instead of blocking. The default spin count is 10.
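To illustrate what spinning means, here is a minimal user-level spin lock built on `AtomicReference.compareAndSet`. This is only an analogy: the JVM's lightweight lock works on the object's Mark Word internally, not through a class like this, and this sketch spins without any limit rather than a fixed count. `Thread.onSpinWait()` requires Java 9 or later; the class name `SpinLock` and the helper `countWithThreads` are hypothetical:

```java
import java.util.concurrent.atomic.AtomicReference;

public class SpinLock {

    // The owning thread, or null when the lock is free
    private final AtomicReference<Thread> owner = new AtomicReference<>();

    public void lock() {
        Thread current = Thread.currentThread();
        // Busy-wait: keep retrying the CAS instead of blocking in the kernel
        while (!owner.compareAndSet(null, current)) {
            Thread.onSpinWait(); // Java 9+ hint to the CPU that we are spinning
        }
    }

    public void unlock() {
        // Only the owner may release the lock
        if (!owner.compareAndSet(Thread.currentThread(), null)) {
            throw new IllegalMonitorStateException("not the lock owner");
        }
    }

    // Hypothetical helper: `threads` threads each increment a shared counter
    // `perThread` times under the spin lock, then the final count is returned
    public static int countWithThreads(int threads, int perThread) {
        SpinLock lock = new SpinLock();
        int[] counter = {0};
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    lock.lock();
                    try {
                        counter[0]++;
                    } finally {
                        lock.unlock();
                    }
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return counter[0];
    }
}
```

Every waiting thread burns CPU while it spins, which is exactly the trade-off discussed next.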

Spinning consumes CPU, while a heavyweight lock relies on the operating system kernel to block and wake threads. So which one should be used?

Generally, when the synchronized code runs quickly and few threads compete, spinning is preferable. When the code runs for a long time or many threads compete, spinning wastes CPU and lowers efficiency, so a heavyweight lock is the better choice.

Locking operation

When synchronized is used, the following steps take place:

- Acquire the lock;
- Clear the working memory;
- Copy the variables from main memory into working memory;
- Execute the code (computation, output, and so on);
- Flush the changes back to main memory;
- Release the lock.

These steps are how synchronized provides visibility between threads. That is also why, in the volatile example later on, the loop body must not contain System.out.println() when checking volatile visibility: the source code of println() uses a synchronized block.
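A sketch of visibility through synchronized alone (class and method names are illustrative): the flag is not volatile, but because the writer and the reader lock the same object, the worker thread is guaranteed to see the write made by `stop()` on a later call to `isStopped()`:

```java
public class SyncVisibilityDemo {

    // Deliberately NOT volatile: visibility comes from locking the same object
    private boolean stopped = false;

    public synchronized void stop() {
        stopped = true; // published to other threads when the lock is released
    }

    public synchronized boolean isStopped() {
        return stopped; // read after acquiring the lock, so the latest value is seen
    }

    // Starts a worker that loops until it observes stop();
    // returns true if the worker finished within the timeout
    public static boolean workerSeesStop(long timeoutMillis) {
        SyncVisibilityDemo demo = new SyncVisibilityDemo();
        Thread worker = new Thread(() -> {
            while (!demo.isStopped()) {
                // each iteration acquires and releases the lock on demo
            }
        });
        worker.setDaemon(true); // don't keep the JVM alive if the loop never ends
        worker.start();
        demo.stop();
        try {
            worker.join(timeoutMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + workerSeesStop(5000));
    }
}
```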

Lock optimization

The singleton pattern has two ways of creating its object: lazy and eager (also called "hungry").

The eager style is often the first singleton one learns, because it needs no explicit locking. An example:

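```java
public class PersonBean {

    // Created eagerly, when the class is initialized
    private static final PersonBean personBean = new PersonBean();

    private PersonBean() {
    }

    public static PersonBean getInstance() {
        return personBean;
    }
}
```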

The object is created up front whether or not it is ever used, and callers obtain it through getInstance() when needed.

The lazy style defers creation until the object is first used. To keep it a singleton, the instance is created only on first use, and every later call returns that same instance. An example:

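```java
public class PersonBean {

    // volatile prevents other threads from seeing a partially constructed object
    private static volatile PersonBean personBean;

    private PersonBean() {
    }

    public static PersonBean getPersonBean1() {
        if (personBean == null) {                // first check, without the lock
            synchronized (PersonBean.class) {    // lock the Class object, not `this`
                if (personBean == null) {        // second check, under the lock
                    personBean = new PersonBean();
                }
            }
        }
        return personBean;
    }
}
```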

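This example achieves thread safety through double-checked locking. The field must be declared volatile: without it, the steps inside new PersonBean() (allocate memory, run the constructor, assign the reference) could be reordered, and another thread could see a non-null personBean that is not yet fully constructed.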

Optimizing synchronized mostly comes down to locking at an appropriate granularity for the situation. The related techniques are lock elimination, lock coarsening, lock inflation, and spinning.

For example, if the whole method were declared synchronized, every call would acquire and release the lock even after the instance exists, which is costly. Locking only where it is needed, guarded by the two null checks, keeps the code thread-safe while reducing the lock granularity.

In other cases the lock granularity should be increased. For example, if many consecutive steps in a method each lock the same object, locking the whole method once performs better than locking and unlocking repeatedly. In short, avoid both adding too many locks and locking code that does not need synchronization.
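A sketch of lock coarsening, assuming a hypothetical `CoarseningDemo` that appends to a list. Both methods produce the same result, but `addAllLocked` acquires the lock once instead of `n` times. The trade-off is that other threads wait longer, so this only pays off when the steps are short and consecutive. (HotSpot's JIT compiler can also perform this coarsening automatically in some cases.)

```java
import java.util.ArrayList;
import java.util.List;

public class CoarseningDemo {

    private final List<Integer> items = new ArrayList<>();

    // Fine-grained: the lock is acquired and released on every iteration
    public void addEachLocked(int n) {
        for (int i = 0; i < n; i++) {
            synchronized (this) {
                items.add(i);
            }
        }
    }

    // Coarsened: a single acquire/release around the whole sequence of steps
    public void addAllLocked(int n) {
        synchronized (this) {
            for (int i = 0; i < n; i++) {
                items.add(i);
            }
        }
    }

    public synchronized int size() {
        return items.size();
    }
}
```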

Lock comparison

| Lock | Advantages | Disadvantages | Applicable scenario |
|---|---|---|---|
| Biased lock | Locking and unlocking need no extra work; compared with an unsynchronized method, the overhead is only nanoseconds | If threads compete for the lock, revoking the bias adds extra cost | Only one thread ever enters the synchronized block |
| Lightweight lock | Competing threads do not block, which improves the program's response time | A thread that never wins the lock keeps spinning and consumes CPU | Response time matters and the synchronized block executes very quickly |
| Heavyweight lock | Contending threads do not spin, so they do not consume CPU | Threads block, so response time is slow | Throughput matters and the synchronized block takes longer to execute |

Volatile

volatile is often described as a lightweight synchronized: it guarantees the visibility of shared variables on multiprocessor systems.

volatile has two properties: visibility and the prohibition of instruction reordering.

What is visibility

Visibility means that when one thread modifies shared data, other threads see the new value. In the Java Memory Model post I described two threads, 1 and 2: in the JMM, each thread has its own local memory holding copies of shared variables, while main memory holds the shared variables themselves. When a thread modifies a shared variable, it changes its copy and flushes the new value to main memory; the other threads are notified that the variable has changed, so they read the new value from main memory and update their own copies.

Both volatile and synchronized provide visibility, but in different ways:

- When thread 1 modifies a volatile variable, it immediately updates its own copy and flushes the value to main memory, making it visible to other threads so that they always get the latest value;
- synchronized locks a class or object while the synchronized code executes. When the lock is released, the thread's changes are flushed to main memory, so other threads can obtain the latest values.

How can we verify that volatile provides visibility? Consider this example:

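```java
public class VolatileDemo {

    // Not volatile: the main thread may never see the write made in run().
    // Declare it `private static volatile boolean` to make the loop end.
    private static boolean aBoolean = false;

    public static void main(String[] args) {
        new Thread(() -> run()).start();
        System.out.println("aBoolean:" + aBoolean);
        while (!aBoolean) {
            // keep the body empty (see the note below)
        }
        System.out.println("over");
    }

    private static void run() {
        try {
            Thread.sleep(5000);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        aBoolean = true;
        System.out.println("aBoolean is true");
    }
}
```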

Analysis:

This example verifies the visibility of volatile.

If aBoolean is not declared volatile, the main thread typically keeps looping forever.

If aBoolean is declared volatile, the loop ends and over is printed.

Special attention:

The while loop body must not contain System.out.println(), logging, Thread.sleep() or similar calls; otherwise the loop may end even when aBoolean is not volatile. For example, the source code of System.out.println() (java.io.PrintStream, as in JDK 8) is:

```java
public void println(String x) {
    synchronized (this) {
        print(x);
        newLine();
    }
}
```

synchronized provides visibility: acquiring the lock makes the thread read fresh data from main memory, so the loop sees the latest value of aBoolean even without volatile.
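A sketch of the corrected version, with aBoolean declared volatile. To make it testable, the roles are swapped (the caller writes the flag, a daemon thread loops on it) and the result is reported with a timeout; the class name `VolatileFlagDemo` and the method `loopEnds` are illustrative:

```java
public class VolatileFlagDemo {

    // volatile guarantees the write below becomes visible to the looping thread
    private static volatile boolean aBoolean = false;

    // Starts a thread that spins on aBoolean, then sets the flag.
    // Returns true if the spinning thread saw the write and finished in time.
    public static boolean loopEnds(long timeoutMillis) {
        aBoolean = false;
        Thread looper = new Thread(() -> {
            while (!aBoolean) {
                // empty body: no println(), sleep() or other synchronization
            }
        });
        looper.setDaemon(true); // don't keep the JVM alive if the loop never ends
        looper.start();
        try {
            Thread.sleep(200); // give the loop time to start spinning
            aBoolean = true;
            looper.join(timeoutMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !looper.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("loop ended: " + loopEnds(5000));
    }
}
```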

What is reordering

Reordering is a technique the compiler and processor use to change the execution order of instructions in order to improve performance. It follows rules that respect data dependencies. For example, with a=1; b=a;, b depends on a, so the order of these two instructions never changes. With a=1; b=1; c=b;, c depends on b, while a depends on neither, so the instructions may be executed as b=1; c=b; a=1; that is, they are reordered. Despite the reordering, the final result within a single thread is the same.

volatile prohibits reordering instructions across reads and writes of the volatile variable, so those accesses take effect in code order; in other words, it guarantees ordering.

Both volatile and synchronized guarantee ordering.
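The ordering guarantee is what makes the common "publish with a volatile flag" idiom work. In this sketch (the class name `SafePublication` is illustrative), the plain write to `data` cannot be moved after the volatile write to `ready`, so a reader that sees `ready == true` is guaranteed to see `data == 42`:

```java
public class SafePublication {

    private int data = 0;                   // ordinary field
    private volatile boolean ready = false; // volatile flag

    public void writer() {
        data = 42;    // (1) ordinary write
        ready = true; // (2) volatile write: (1) may not be reordered after it
    }

    // Returns data once the flag is seen, or -1 if the writer has not finished yet
    public int reader() {
        if (ready) {     // volatile read: later reads may not be moved before it
            return data; // therefore sees 42, never the default 0
        }
        return -1;
    }

    public static int publishAndRead() {
        SafePublication p = new SafePublication();
        new Thread(p::writer).start();
        int result;
        while ((result = p.reader()) == -1) {
            Thread.yield(); // wait for the writer thread
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(publishAndRead()); // 42
    }
}
```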

Differences

volatile and synchronized

- volatile essentially tells the JVM that the variable's value in registers (working memory) is uncertain and must be read from main memory; synchronized locks an object, so only the thread holding the lock can execute the guarded code while other threads block.
- volatile can only be applied to variables (fields); synchronized can be applied to methods (instance and static) and code blocks, including blocks that lock a Class object.
- volatile only guarantees the visibility of changes to a variable and cannot guarantee atomicity; synchronized guarantees both visibility and atomicity.
- volatile does not cause threads to block; synchronized may cause threads to block.
- Accesses to a volatile variable are not optimized by the compiler (for example, not cached in registers or freely reordered); code guarded by synchronized can still be optimized by the compiler (for example, through lock elimination and lock coarsening).
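A sketch of the atomicity difference (the class name `AtomicityDemo` is illustrative): several threads increment a volatile counter and a counter guarded by synchronized. The synchronized count is always exact, while `volatileCount++` is a read-modify-write that can lose updates, so its final value may be lower (by an amount that varies from run to run):

```java
public class AtomicityDemo {

    private static volatile int volatileCount = 0; // visible, but ++ is not atomic
    private static int syncCount = 0;              // guarded by AtomicityDemo.class

    private static synchronized void incrementSync() {
        syncCount++; // the whole read-modify-write runs while holding the lock
    }

    // Each of `threads` threads increments both counters `perThread` times.
    // Returns {volatileCount, syncCount}.
    public static int[] run(int threads, int perThread) {
        volatileCount = 0;
        syncCount = 0;
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    volatileCount++; // read, add, write: concurrent updates can be lost
                    incrementSync();
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join(); // join makes the workers' writes visible here
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return new int[] {volatileCount, syncCount};
    }

    public static void main(String[] args) {
        int[] r = run(4, 10_000);
        System.out.println("volatile: " + r[0] + ", synchronized: " + r[1]);
    }
}
```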