Memory visibility

volatile is a lightweight synchronization mechanism provided by Java, and it plays an important role in concurrent programming. Compared with synchronized (often called a heavyweight lock), volatile is much cheaper. Where volatile is sufficient and used correctly, it avoids the overhead that synchronized brings.

To understand volatile thoroughly, we analyze it step by step. First, consider the following code:

```java
public class TestVolatile {
    boolean status = false;

    /**
     * Switches the state to true.
     */
    public void changeStatus() {
        status = true;
    }

    /**
     * If the status is true, prints "running....".
     */
    public void run() {
        if (status) {
            System.out.println("running....");
        }
    }
}
```

In the above example, in a multithreaded environment, suppose that thread A executes the changeStatus() method and thread B then executes the run() method. Is "running...." guaranteed to be printed?

The answer is NO!

This conclusion may be confusing at first, which is understandable. In the single-threaded model, if you call changeStatus() first and then run(), "running...." is printed correctly. In the multithreaded model there is no such guarantee. For the shared variable status, the modification made by thread A may be "invisible" to thread B. That is, thread B may not observe that status has been changed to true. So what is visibility?

Visibility means that when one thread modifies the value of a shared variable, other threads can see the new value immediately. The example above gives no such guarantee of memory visibility.

Java Memory Model

Why does this happen? We first need to understand the JMM (Java Memory Model).

The Java virtual machine has its own memory model (JMM). The JMM hides the memory access differences between hardware platforms and operating systems, so that Java programs get consistent memory access behavior on every platform.

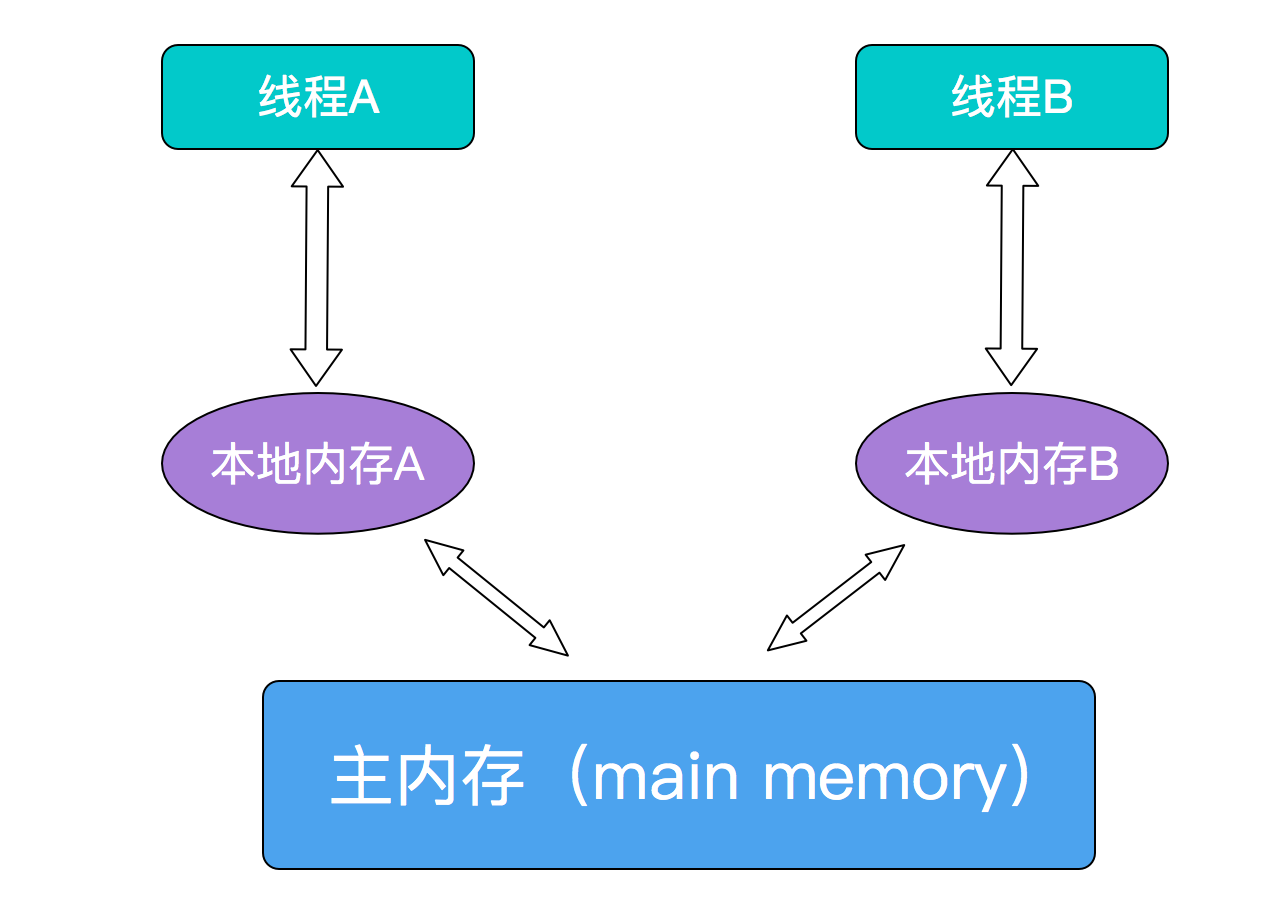

JMM determines when a write to a shared variable by one thread becomes visible to another thread. It defines an abstract relationship between threads and main memory: shared variables are stored in main memory, and each thread has a private local memory (also called working memory) that holds copies of the shared variables the thread uses. A thread performs all its operations on variables in its local memory rather than reading and writing main memory directly. The figure above illustrates the relationship among threads, local memory, and main memory.

It should be noted that JMM is an abstract memory model, so local memory and main memory are abstract concepts that do not necessarily correspond to the real CPU cache and physical memory. As an aid to understanding, though, it is reasonable to picture them that way.

With this simple definition of JMM in mind, the problem is easy to understand. For an ordinary shared variable such as status above, thread A changes it to true, but the change is made in thread A's local memory and may not yet have been synchronized to main memory. Thread B, meanwhile, has cached the initial value false, so it may not observe that status has been modified, which leads to the problem above.

So how do we solve the invisibility of shared variables in the multithreaded model? The blunt approach is locking, but using synchronized or Lock here is too heavyweight, a bit like using a cannon to kill a mosquito. The more reasonable choice is volatile.

volatile has two features. The first is that it ensures the visibility of a shared variable to all threads. Once a shared variable is declared volatile, the following holds:

1. When a thread writes a volatile variable, JMM forces the value in that thread's local memory to be flushed to main memory;

2. The write invalidates the cached copies in other threads, so their next read fetches the value from main memory.

In the above example, simply declaring status as volatile ensures that thread B sees the change as soon as thread A sets it to true:

```java
volatile boolean status = false;
```
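The visibility guarantee can be tried out with a small sketch. The class name `VisibilityDemo`, the methods `awaitStatus()` and `demo()`, and the 100 ms / 5 s timings are illustrative assumptions, not part of the original example. Thread B spins until it sees status become true; because status is volatile, the loop is guaranteed to observe thread A's write and exit.

```java
import java.util.concurrent.TimeUnit;

class VisibilityDemo {
    // volatile: a write by one thread is visible to subsequent reads by other threads
    private volatile boolean status = false;

    void changeStatus() {
        status = true;
    }

    /** Busy-waits until the volatile flag is observed as true. */
    void awaitStatus() {
        while (!status) {
            // spin; each iteration performs a fresh volatile read
        }
    }

    /** Returns true if thread B observed the write and finished within the timeout. */
    static boolean demo() {
        VisibilityDemo d = new VisibilityDemo();
        Thread reader = new Thread(d::awaitStatus, "thread-B");
        reader.setDaemon(true); // do not keep the JVM alive if the reader never exits
        reader.start();
        try {
            TimeUnit.MILLISECONDS.sleep(100); // let thread B start spinning first
            d.changeStatus();                 // thread A's write
            reader.join(5000);                // wait up to 5 seconds (arbitrary limit)
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        return !reader.isAlive();
    }

    public static void main(String[] args) {
        System.out.println(demo() ? "thread B saw status == true" : "thread B is still spinning");
    }
}
```

If the volatile modifier is removed, the JVM is allowed to keep reading a stale value, and on some JVMs the spinning thread may never exit. Whether that actually happens depends on the JIT compiler and the hardware.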

Pay attention to compound operations

However, note that we keep comparing volatile with synchronized only because the two keywords share some memory semantics. volatile cannot completely replace synchronized. It is a lightweight synchronization mechanism, not a lock, and there are many scenarios it cannot handle. Look at this example:

```java
package test;

import java.util.concurrent.CountDownLatch;

/**
 * Created by chengxiao on 2017/3/18.
 */
public class Counter {
    public static volatile int num = 0;
    // Use CountDownLatch to wait for the compute threads to finish executing
    static CountDownLatch countDownLatch = new CountDownLatch(30);

    public static void main(String[] args) throws InterruptedException {
        // Start 30 threads, each incrementing num 10000 times
        for (int i = 0; i < 30; i++) {
            new Thread() {
                public void run() {
                    for (int j = 0; j < 10000; j++) {
                        num++; // increment operation (not atomic)
                    }
                    countDownLatch.countDown();
                }
            }.start();
        }
        // Wait for the compute threads to finish executing
        countDownLatch.await();
        System.out.println(num);
    }
}
```

Execution result (from one run; the exact value varies between runs):

```
224291
```

Looking at this example, some readers may wonder: if a shared variable declared volatile guarantees visibility, shouldn't the result be 300000?

The problem lies in num++, which is not an atomic operation but a compound one. We can think of it as consisting of three steps:

- read the current value of num

- add one

- assign the result back to num

**Therefore, in a multithreaded environment, thread A may read num into its local memory, and other threads may then increment num many times. Thread A still adds one to its stale value and writes the result back to main memory, overwriting those updates. As a result, the final value of num is lower than the expected 300000.**
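The lost update is easier to see when the interleaving is written out by hand. The sketch below is a single-threaded simulation, not real concurrency: the class `LostUpdateSketch` and the variables `localA` and `localB` are hypothetical names standing in for the local copies held by two threads.

```java
class LostUpdateSketch {
    static int num = 0; // shared counter; making it volatile would not change this interleaving

    /** Replays one unlucky interleaving of two num++ operations, step by step. */
    static int simulate() {
        num = 0;
        int localA = num;     // thread A, step 1: read (sees 0)
        int localB = num;     // thread B, step 1: read (also sees 0)
        localA = localA + 1;  // thread A, step 2: add one
        localB = localB + 1;  // thread B, step 2: add one
        num = localA;         // thread A, step 3: assign (num becomes 1)
        num = localB;         // thread B, step 3: assign (num is 1 again, A's update is lost)
        return num;
    }

    public static void main(String[] args) {
        System.out.println(simulate()); // prints 1, although two increments were performed
    }
}
```

Two increments ran but the counter only advanced by one, which is the kind of loss that accumulates in the 30-thread example.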

Solving the atomicity problem of num++

For compound operations such as num++, you can use the atomic classes in the java.util.concurrent.atomic package. Conceptually, an atomic class guarantees atomicity by retrying a CAS (compare-and-swap) operation in a loop until it succeeds.
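The retry idea can be sketched with the public `AtomicInteger.get()` and `compareAndSet()` methods. This is an illustration of the technique under assumed names (`CasIncrementSketch`, `casIncrement`, `runThreads`), not the JDK's actual implementation of `incrementAndGet()`, which may use a dedicated hardware instruction instead.

```java
import java.util.concurrent.atomic.AtomicInteger;

class CasIncrementSketch {
    /** Increments the counter atomically by retrying CAS until no other thread interferes. */
    static int casIncrement(AtomicInteger counter) {
        while (true) {
            int current = counter.get();   // read the current value
            int next = current + 1;        // compute the new value
            // Write only if the value is still 'current'; otherwise another thread
            // changed it in the meantime, so loop and try again with a fresh read.
            if (counter.compareAndSet(current, next)) {
                return next;
            }
        }
    }

    /** Runs 'threads' workers that each call casIncrement 'perThread' times. */
    static int runThreads(int threads, int perThread) {
        AtomicInteger counter = new AtomicInteger(0);
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    casIncrement(counter);
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join(); // wait for every worker before reading the total
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting for workers", e);
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runThreads(30, 10000)); // prints 300000
    }
}
```

Because a failed CAS never overwrites another thread's update, no increment is lost, regardless of how the threads interleave.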

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;

/**
 * Created by chengxiao on 2017/3/18.
 */
public class Counter {
    // Use the atomic operation class
    public static AtomicInteger num = new AtomicInteger(0);
    // Use CountDownLatch to wait for the compute threads to finish executing
    static CountDownLatch countDownLatch = new CountDownLatch(30);

    public static void main(String[] args) throws InterruptedException {
        // Start 30 threads, each incrementing num 10000 times
        for (int i = 0; i < 30; i++) {
            new Thread() {
                public void run() {
                    for (int j = 0; j < 10000; j++) {
                        num.incrementAndGet(); // atomic num++
                    }
                    countDownLatch.countDown();
                }
            }.start();
        }
        // Wait for the compute threads to finish executing
        countDownLatch.await();
        System.out.println(num);
    }
}
```

Execution result:

```
300000
```

The underlying principles of the atomic classes will be covered in later articles and are not discussed further here.

Prohibit instruction reordering

The second feature of volatile is that it prevents instruction reordering optimizations.

Reordering is a technique by which the compiler and the processor rearrange the instruction sequence to improve program performance. Reordering must still follow certain rules:

1. Operations that have a data dependency between them are not reordered.

For example, in a=1; b=a; the second operation depends on the first, so neither the compiler nor the processor will reorder the two operations.

2. Reordering exists to optimize performance, but however the instructions are reordered, the result of the program under a single thread must not change.

For example, in the three operations a=1; b=2; c=a+b; the first step (a=1) and the second step (b=2) may be reordered because there is no data dependency between them. The operation c=a+b will not be moved ahead of them, because the final result must be c=a+b=3.

In single-threaded mode, reordering always preserves the correctness of the final result. In a multithreaded environment, problems arise. As an example, we slightly modify the first TestVolatile example and add a shared variable a:

```java
public class TestVolatile {
    int a = 1;
    boolean status = false;

    /**
     * Switches the state to true.
     */
    public void changeStatus() {
        a = 2;          // 1
        status = true;  // 2
    }

    /**
     * If the status is true, prints a + 1.
     */
    public void run() {
        if (status) {           // 3
            int b = a + 1;      // 4
            System.out.println(b);
        }
    }
}
```

Suppose thread A executes changeStatus() and thread B executes run(). Can we be sure that b equals 3 at step 4?

**The answer is still no.** It is possible that b is 2. As mentioned above, the compiler and the processor may reorder instructions to improve performance, and steps 1 and 2 in the example may be reordered because there is no data dependency between them. If status=true is executed first and a=2 afterwards, thread B can pass the check at step 3 and reach step 4 before thread A has executed a=2, so b=a+1 may still evaluate to 2.

Declaring the shared variable status as volatile prevents this reordering. When a shared variable is declared volatile, memory barriers are inserted into the instruction sequence at compile time to prevent specific types of processor reordering.

The rules by which volatile prohibits instruction reordering are, briefly:

1. When the second operation is a volatile write, it cannot be reordered with the first operation, whatever the first operation is.

2. When the first operation is a volatile read, it cannot be reordered with the second operation, whatever the second operation is.

3. When the first operation is a volatile write and the second operation is a volatile read, the two cannot be reordered.
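Applied to the modified TestVolatile example, these rules mean that declaring status volatile is enough to make step 4 safe. The following is a minimal sketch under assumed names (`ReorderingFixSketch`, `awaitAndCompute`, `demo`). Unlike the original run() method, thread B here spins until status is true, so the outcome is deterministic.

```java
class ReorderingFixSketch {
    int a = 1;
    volatile boolean status = false; // the only change from the broken version

    void changeStatus() {
        a = 2;          // 1: ordinary write, cannot be moved below the volatile write (rule 1)
        status = true;  // 2: volatile write
    }

    /** Waits until status is true, then computes a + 1. */
    int awaitAndCompute() {
        while (!status) {
            // 3: volatile read; later operations cannot be moved above it (rule 2)
        }
        return a + 1;   // 4: sees a == 2, because the write to a precedes the volatile write
    }

    /** Runs thread B (reader) and thread A (writer) once and returns the value of b. */
    static int demo() {
        ReorderingFixSketch s = new ReorderingFixSketch();
        int[] result = new int[1];
        Thread threadB = new Thread(() -> result[0] = s.awaitAndCompute());
        threadB.start();
        s.changeStatus(); // the calling thread plays the role of thread A
        try {
            threadB.join(); // join also makes result[0] visible to this thread
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for thread B", e);
        }
        return result[0];
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints 3
    }
}
```

Note that a itself does not need to be volatile: the ordering guarantee on the volatile variable status also covers the ordinary write that precedes it.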

Summary:

To sum up, volatile is a lightweight synchronization mechanism with two main characteristics: it ensures the visibility of shared variables to all threads, and it prohibits instruction reordering optimizations. Note also that a read or write of a single volatile variable is atomic, but volatile cannot guarantee atomicity for compound operations such as num++. This article also offers a solution to that problem: use the atomic classes in the concurrent package, which guarantee the atomicity of num++ by retrying CAS in a loop. The atomic classes will be introduced in subsequent articles.