Study notes for the tutorial: [Technical Art Hundred Talents Plan] Graphics 4.2 SSAO algorithm (screen space ambient occlusion)

Notes

0. Preface

SSAO is generally only used on devices from the iPhone X (iPhone 10) and Snapdragon 845 generation onward.

1. SSAO introduction

AO: Ambient Occlusion

SSAO: Screen Space Ambient Occlusion, which approximates AO in screen space from the depth buffer and the normal buffer

2. SSAO principle

2.1 Sampled buffers

- Depth buffer: the depth of each pixel as seen from the camera

- Normal buffer: the normal of each pixel in camera space

- Position

2.2 normal hemisphere

Samples are taken inside a hemisphere that is centered on the pixel's position in camera space and oriented along the pixel's normal.

Where: depth (depth value) + view vector (pixel direction in camera space) -> position of the pixel in camera space

The vertex function that computes the view vector (vert_Ao) is listed in section 3.2.

3. Implementation of SSAO algorithm

3.1 Getting the depth and normal buffers

private void Start()

{

cam = this.GetComponent<Camera>();

cam.depthTextureMode = cam.depthTextureMode | DepthTextureMode.DepthNormals;//Bitwise OR: keep the existing flags and also request the depth + normals texture

}

3.2 Reconstructing the camera-space position

Reference: Unity reconstructs the world space position from the depth buffer

Where: depth (depth value) + view vector (pixel direction in camera space) -> position of the pixel in camera space

View vector in camera space:

//Step 1: obtain the pixel direction in camera space

v2f vert_Ao(appdata v){

v2f o;

UNITY_INITIALIZE_OUTPUT(v2f,o);

o.vertex = UnityObjectToClipPos(v.vertex);//Vertex position: object space to clip space

o.uv = v.uv;

float4 screenPos = ComputeScreenPos(o.vertex);//Vertex position: clip space to screen space (before the perspective divide)

float4 ndcPos = (screenPos / screenPos.w) * 2 - 1;//Perspective divide, then remap from [0,1] to NDC [-1,1]

float3 clipVec = float3(ndcPos.x, ndcPos.y, 1.0) * _ProjectionParams.z;//Pixel direction: point on the far plane in clip space (_ProjectionParams.z is the far plane distance)

o.viewVec = mul(unity_CameraInvProjection, clipVec.xyzz).xyz;//Pixel direction: transformed back into camera space with the inverse projection

return o;

}

//Step 2: read the depth and multiply it by the view vector to get the position

fixed4 frag_Ao(v2f i) : SV_Target{

fixed4 col = tex2D(_MainTex, i.uv);//Screen texture

float3 viewNormal;//Normal direction in camera space

float linear01Depth;//Linear depth in the range 0-1

float4 depthnormal = tex2D(_CameraDepthNormalsTexture, i.uv);//Sample the packed depth + normals texture

DecodeDepthNormal(depthnormal, linear01Depth, viewNormal);//Decode into depth and normal

float3 viewPos = linear01Depth * i.viewVec;//Pixel position in camera space

}
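The reconstruction above can be checked on the CPU. The following is a minimal Python sketch, not the shader itself: it assumes a symmetric perspective camera looking down -z and a linear 0-1 depth equal to eye distance divided by the far plane. The function names and the parameters `fov_y_deg`, `aspect` and `far` are made up for illustration.

```python
import math

def far_plane_ray(ndc_x, ndc_y, fov_y_deg, aspect, far):
    """View-space point on the far plane seen through NDC (ndc_x, ndc_y)."""
    half_h = math.tan(math.radians(fov_y_deg) / 2.0) * far
    half_w = half_h * aspect
    # Assumption: the camera looks down -z in view space.
    return (ndc_x * half_w, ndc_y * half_h, -far)

def reconstruct_view_pos(linear01_depth, view_vec):
    """Scale the far-plane ray by the linear 0-1 depth (viewPos = depth * viewVec)."""
    return tuple(linear01_depth * c for c in view_vec)

def project(view_pos, fov_y_deg, aspect, far):
    """Forward path, used only to verify the round trip.
    Returns (ndc_x, ndc_y, linear01_depth) for a view-space position."""
    x, y, z = view_pos
    t = math.tan(math.radians(fov_y_deg) / 2.0)
    dist = -z  # distance in front of the camera
    return (x / (dist * t * aspect), y / (dist * t), dist / far)

if __name__ == "__main__":
    fov, aspect, far = 60.0, 16.0 / 9.0, 100.0
    original = (1.5, -0.7, -12.0)
    ndc_x, ndc_y, depth01 = project(original, fov, aspect, far)
    ray = far_plane_ray(ndc_x, ndc_y, fov, aspect, far)
    print(reconstruct_view_pos(depth01, ray))
```

Because the ray ends on the far plane, multiplying it by a depth of 1 returns the far-plane point itself, and smaller depths slide the point toward the camera along the same ray.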

3.3 Building an orthonormal basis around the normal (TBN)

tangent bitangent viewNormal

fixed4 frag_Ao(v2f i) : SV_Target{

//Build an orthonormal basis around the normal

viewNormal = normalize(viewNormal) * float3(1, 1, -1);//N

float2 noiseScale = _ScreenParams.xy / 4.0;//Tiling factor for the noise texture

float2 noiseUV = i.uv * noiseScale;

float3 randvec = tex2D(_NoiseTex, noiseUV).xyz;//Random vector sampled from the noise texture

float3 tangent = normalize(randvec - viewNormal * dot(randvec, viewNormal));//T: Gram-Schmidt, removes the component of randvec along N

float3 bitangent = cross(viewNormal, tangent);//B

float3x3 TBN = float3x3(tangent, bitangent, viewNormal);

}
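The tangent line above is one Gram-Schmidt step. A small Python sketch of the same construction follows; the helper names are made up for illustration. It shows that T, B and N come out mutually perpendicular and unit length as long as the random vector is not parallel to the normal, a case the sketch does not handle.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def build_tbn(normal, rand_vec):
    """Return (tangent, bitangent, normal) as an orthonormal basis.
    Fails with a division by zero if rand_vec is parallel to normal."""
    n = normalize(normal)
    # Gram-Schmidt: subtract the part of rand_vec that lies along n.
    d = dot(rand_vec, n)
    t = normalize(tuple(r - d * c for r, c in zip(rand_vec, n)))
    b = cross(n, t)
    return t, b, n

if __name__ == "__main__":
    t, b, n = build_tbn((0.2, 0.3, 0.9), (0.7, -0.1, 0.0))
    print(dot(t, n), dot(b, n), dot(t, b))  # all close to zero
```

Using a different random vector per pixel rotates the basis around N, which is what spreads the fixed sample kernel in different directions from pixel to pixel.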

3.4 AO sample kernel

//Step 1: generate the sample kernel on the C# side

private void GenerateAOSampleKernel()

{

if(sampleKernelCount == sampleKernelList.Count)

{

return;//The kernel already holds the requested number of samples

}

sampleKernelList.Clear();

for(int i = 0; i < sampleKernelCount; i++)

{

var vec = new Vector4(Random.Range(-1.0f, 1.0f), Random.Range(-1.0f, 1.0f), Random.Range(0, 1.0f), 1.0f);

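vec.Normalize();//Normalize the random vector (w = 1.0 is included in the length)

var scale = (float)i / sampleKernelCount;

scale = Mathf.Lerp(0.01f, 1.0f, scale * scale);//Quadratic curve: maps i (for example 0-63) to a scale between 0.01 and 1, so more samples sit close to the origin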

vec *= scale;

sampleKernelList.Add(vec);

}

}
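To see how the quadratic scale distributes the samples, here is a rough Python counterpart of the kernel generation. It is a sketch under one stated difference from the C# listing: it normalizes only the xyz direction, so every sample's length equals its scale exactly. The function name and the `rng` parameter are made up for illustration.

```python
import math
import random

def generate_ao_sample_kernel(count, rng):
    """Return `count` hemisphere samples (z >= 0) whose lengths grow quadratically."""
    kernel = []
    for i in range(count):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        z = rng.uniform(0.0, 1.0)  # z >= 0 keeps the sample on the normal's side
        # Assumption: normalize xyz only; fall back to 1.0 for a zero vector.
        length = math.sqrt(x * x + y * y + z * z) or 1.0
        t = i / count
        scale = 0.01 + (1.0 - 0.01) * (t * t)  # same as Lerp(0.01, 1.0, t * t)
        kernel.append((x / length * scale, y / length * scale, z / length * scale))
    return kernel

if __name__ == "__main__":
    kernel = generate_ao_sample_kernel(64, random.Random(0))
    lengths = [math.sqrt(sum(c * c for c in s)) for s in kernel]
    print(lengths[0], lengths[32], lengths[63])
```

With 64 samples, the sample at index 32 has a length of about 0.26, so half of the kernel lies within roughly a quarter of the sampling radius, where occlusion matters most.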

//Step 2: accumulate ao by comparing the depth at each sample position with the depth of the current pixel

fixed4 frag_Ao(v2f i) : SV_Target{

//Sample and accumulate

float ao = 0;//ao value

int sampleCount = _SampleKernelCount;

for(int s = 0; s < sampleCount; s++){//s is the sample index (i is the v2f input)

float3 randomVec = mul(_SampleKernelArray[s].xyz, TBN);//Sampling direction: kernel vector rotated by the TBN basis

float weight = smoothstep(0, 0.2, length(randomVec.xy));//Assign weights to different sampling directions

//Sampling location

float3 randomPos = viewPos + randomVec * _SampleKernelRadius;//Sampling position in camera space

float3 rclipPos = mul((float3x3)unity_CameraProjection, randomPos);//Camera space to clip space (projection space)

float2 rscreenPos = (rclipPos.xy / rclipPos.z) * 0.5 + 0.5;//Clip space to screen-space UV: perspective divide, then remap from [-1,1] to [0,1]

float randomDepth;//Depth of sampling location

float3 randomNormal;//Normal direction of sampling position

//Read the depth and normal at the sampling position from the texture

float4 rcdn = tex2D(_CameraDepthNormalsTexture, rscreenPos);

DecodeDepthNormal(rcdn, randomDepth, randomNormal);

//Compare depths to compute ao

float range = abs(randomDepth - linear01Depth) > _RangeStrength ? 0.0 : 1.0;//Range check: ignore samples whose depth differs too much from the current pixel

float selfCheck = randomDepth + _DepthBiasValue < linear01Depth ? 1.0 : 0.0;//Bias check: count the sample only if it is closer than the current pixel by more than the bias, which avoids self-occlusion on the same plane

ao += range * selfCheck * weight;

}

ao = ao / sampleCount;

ao = max(0.0, 1 - ao * _AOStrength);

return float4(ao, ao, ao, 1);

}
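The accumulation logic can be traced by hand with a few numbers. This Python sketch mirrors the range check, the bias check and the smoothstep weight for a single pixel. The depth at each sample position is passed in directly instead of being read from a texture, and the function and parameter names are made up for illustration.

```python
def smoothstep(edge0, edge1, x):
    """Same shape as the HLSL intrinsic: 0 below edge0, 1 above edge1."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)

def accumulate_ao(center_depth, samples, range_strength, depth_bias, ao_strength):
    """samples: non-empty list of (scene_depth_at_sample, xy_length_of_offset).
    Returns the final ao term, where 1.0 means fully unoccluded."""
    total = 0.0
    for scene_depth, xy_len in samples:
        weight = smoothstep(0.0, 0.2, xy_len)
        # Range check: reject samples whose depth is too far from the center pixel.
        in_range = 0.0 if abs(scene_depth - center_depth) > range_strength else 1.0
        # Bias check: the sample occludes only if it is closer by more than the bias.
        occluded = 1.0 if scene_depth + depth_bias < center_depth else 0.0
        total += in_range * occluded * weight
    ao = total / len(samples)
    return max(0.0, 1.0 - ao * ao_strength)

if __name__ == "__main__":
    samples = [
        (0.49, 0.5),  # slightly closer and in range: occludes
        (0.50, 0.5),  # same depth: rejected by the bias check
        (0.10, 0.5),  # far closer: rejected by the range check
        (0.49, 0.0),  # occludes, but its weight is 0
    ]
    print(accumulate_ao(0.5, samples, 0.05, 0.001, 1.0))
```

Only the first of the four samples contributes, so a quarter of the kernel is occluded and the pixel keeps three quarters of its ambient light.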

4. AO effect improvement

Excerpted from the code above

4.1 sampling Noise to obtain random vector

float2 noiseScale = _ScreenParams.xy / 4.0;//Scaling of noise textures

float2 noiseUV = i.uv * noiseScale;

float3 randvec = tex2D(_NoiseTex, noiseUV).xyz;//Random vectors are obtained by sampling
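The division by 4.0 makes the noise texture repeat every 4 screen pixels. The Python sketch below illustrates this under assumptions that the notes do not state: a 4x4 noise texture, repeat wrapping, point sampling, and pixel centers at half-integer coordinates. The function name `noise_texel` is made up for illustration.

```python
import math

def noise_texel(px, py, screen_w, screen_h, noise_size=4):
    """Return the noise texel (tx, ty) that screen pixel (px, py) reads."""
    # Screen UV of the pixel center.
    u = (px + 0.5) / screen_w
    v = (py + 0.5) / screen_h
    # noiseUV = uv * (_ScreenParams.xy / 4.0)
    noise_u = u * (screen_w / noise_size)
    noise_v = v * (screen_h / noise_size)
    # Repeat wrapping keeps the fractional part; point sampling picks one texel.
    tx = int(math.floor((noise_u % 1.0) * noise_size))
    ty = int(math.floor((noise_v % 1.0) * noise_size))
    return tx, ty

if __name__ == "__main__":
    for px in range(8):
        print(px, noise_texel(px, 0, 1920, 1080))
```

Each screen pixel maps to exactly one noise texel, and the pattern repeats in 4x4 blocks, which is why the raw AO shows a regular noise pattern before it is blurred.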

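4.2 Rejecting outliers

- Samples whose depth differs too much from the current pixel (range check)

float range = abs(randomDepth - linear01Depth) > _RangeStrength ? 0.0 : 1.0;//Range check: ignore samples whose depth differs too much from the current pixel

- Depth differences within the same plane caused by precision limits (depth bias)

float selfCheck = randomDepth + _DepthBiasValue < linear01Depth ? 1.0 : 0.0;//Bias check: count the sample only if it is closer than the current pixel by more than the bias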

- Smooth weight based on distance

float weight = smoothstep(0, 0.2, length(randomVec.xy));//Assign weights to different sampling directions

- Bilateral filter blur (C# side)

RenderTexture blurRT = RenderTexture.GetTemporary(rtW, rtH, 0);//Temporary render texture for the blur

ssaoMaterial.SetFloat("_BilaterFilterFactor", 1.0f - bilaterFilterStrength);

ssaoMaterial.SetVector("_BlurRadius", new Vector4(BlurRadius, 0, 0, 0));//x direction

Graphics.Blit(aoRT, blurRT, ssaoMaterial, (int)SSAOPassName.BilateralFilter);

ssaoMaterial.SetVector("_BlurRadius", new Vector4(0, BlurRadius, 0, 0));//y direction

Graphics.Blit(blurRT, aoRT, ssaoMaterial, (int)SSAOPassName.BilateralFilter);
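The shader side of the BilateralFilter pass is not listed in these notes. As a rough illustration of the idea only, the Python sketch below blurs one row of AO values with a spatial Gaussian multiplied by a weight that drops when the neighbor's depth differs from the center's. Using depth for the second weight, and the names `sigma_space` and `sigma_depth`, are assumptions for this sketch, not the pass's actual parameters.

```python
import math

def bilateral_blur_1d(ao, depth, radius, sigma_space, sigma_depth):
    """Edge-preserving blur of one row: neighbors at a different depth barely count."""
    n = len(ao)
    out = []
    for i in range(n):
        total = 0.0
        weight_sum = 0.0
        for k in range(-radius, radius + 1):
            j = min(max(i + k, 0), n - 1)  # clamp at the row borders
            w_space = math.exp(-(k * k) / (2.0 * sigma_space ** 2))
            dd = depth[j] - depth[i]
            w_depth = math.exp(-(dd * dd) / (2.0 * sigma_depth ** 2))
            w = w_space * w_depth
            total += w * ao[j]
            weight_sum += w
        out.append(total / weight_sum)  # weight_sum >= 1 because of the k = 0 tap
    return out

if __name__ == "__main__":
    ao = [1.0, 1.0, 1.0, 0.0, 0.0, 0.0]
    edge_depth = [0.2, 0.2, 0.2, 0.8, 0.8, 0.8]
    flat_depth = [0.5] * 6
    print(bilateral_blur_1d(ao, edge_depth, 2, 1.0, 0.01))  # edge is kept
    print(bilateral_blur_1d(ao, flat_depth, 2, 1.0, 0.01))  # values are mixed
```

Running such a 1D pass once along x and once along y, as the two Blit calls do, gives a 2D blur at the cost of 2 × (2 × radius + 1) taps per pixel instead of (2 × radius + 1)².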

5. Comparison with baked AO

5.1 Baking methods

- Baking to a texture in modeling software: highly controllable (but tedious to set up, requires UVs, and uses a lot of resources); strong detail on the object itself (but no scene-level detail); unaffected by whether objects are static or dynamic.

- Baking in the game engine, such as Unity3D Lighting: relatively simple, with good overall detail, but dynamic objects cannot be baked.

- SSAO: the complexity depends on the number of pixels; it is real-time, flexible and controllable. Its performance cost is the highest of the three, and in theory the final result is worse than the first method.

6. SSAO performance cost

Where the cost comes from:

- Random sampling: if/for branching breaks the parallelism of the GPU, and a large number of samples greatly increases the cost

- Bilateral filter blur: it adds more screen-texture samples

Assignment

1. Achieve SSAO effect

I typed the code out again myself. See the sections above for the content.

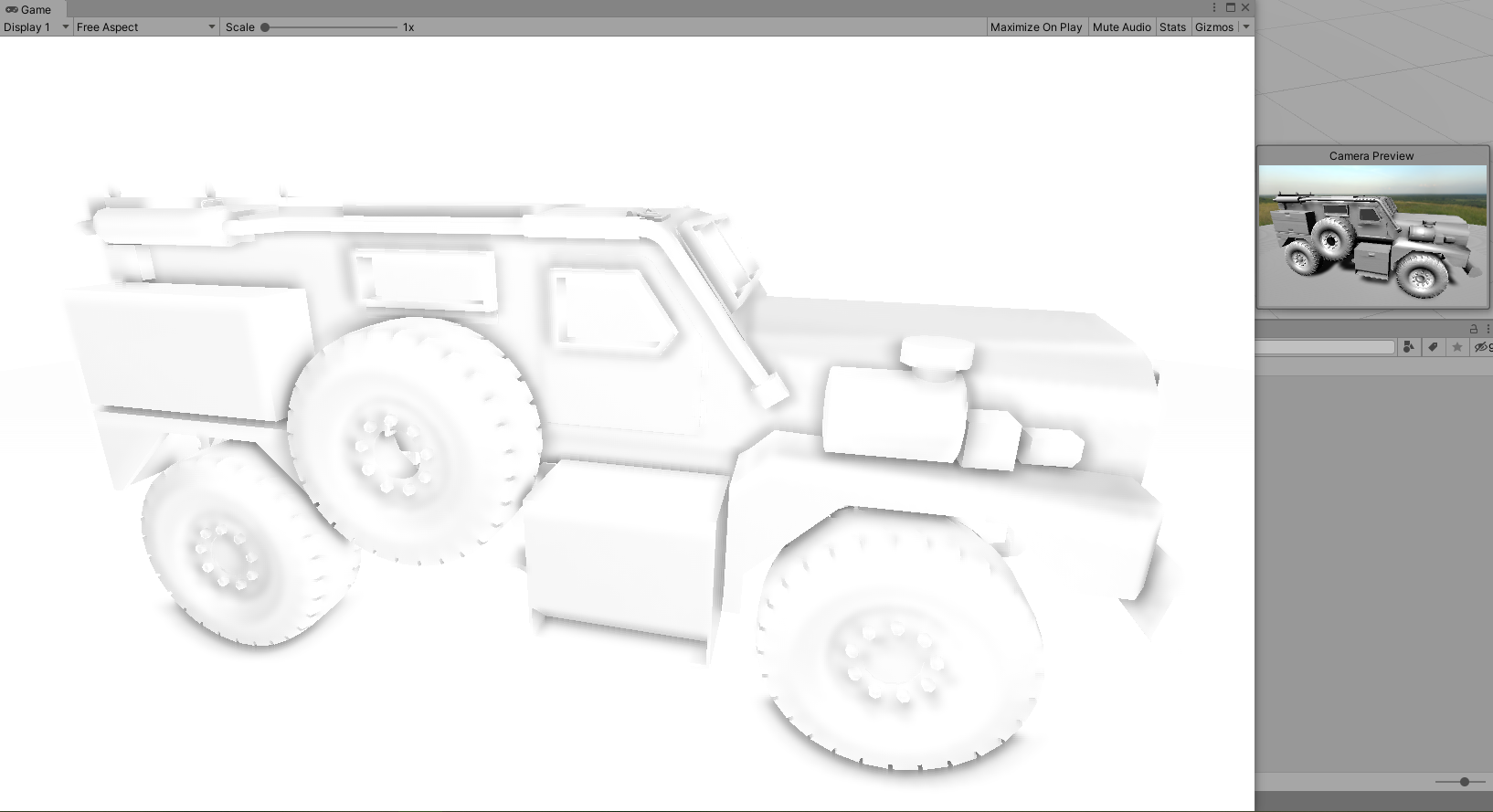

SSAO


2. Compare with other AO algorithms

For example, HBAO

Reference: Ambient Occlusion environment mask 1, which mentions the following AO algorithms:

SSAO-Screen space ambient occlusion

SSDO-Screen space directional occlusion

HDAO-High Definition Ambient Occlusion

HBAO+-Horizon Based Ambient Occlusion+

AAO-Alchemy Ambient Occlusion

ABAO-Angle Based Ambient Occlusion

PBAO

VXAO-Voxel Accelerated Ambient Occlusion

I also found an implementation of GTAO (Ground Truth Ambient Occlusion) in a Git repository

Principle reference: UE4 Mobile GTAO implementation (HBAO continued)

Code source: Unity3D Ground Truth Ambient Occlusion

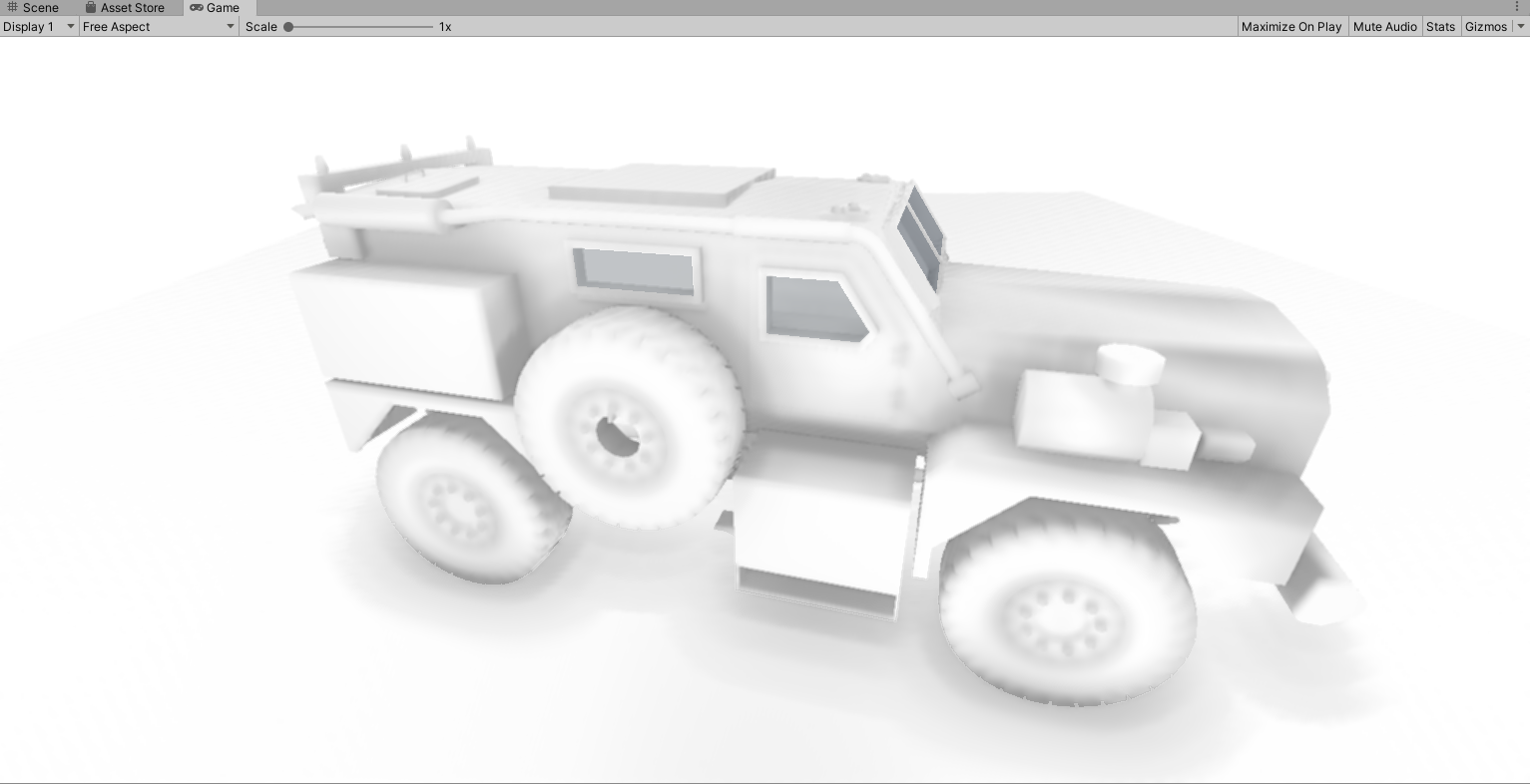

The overall result is a little dark:

GTAO
