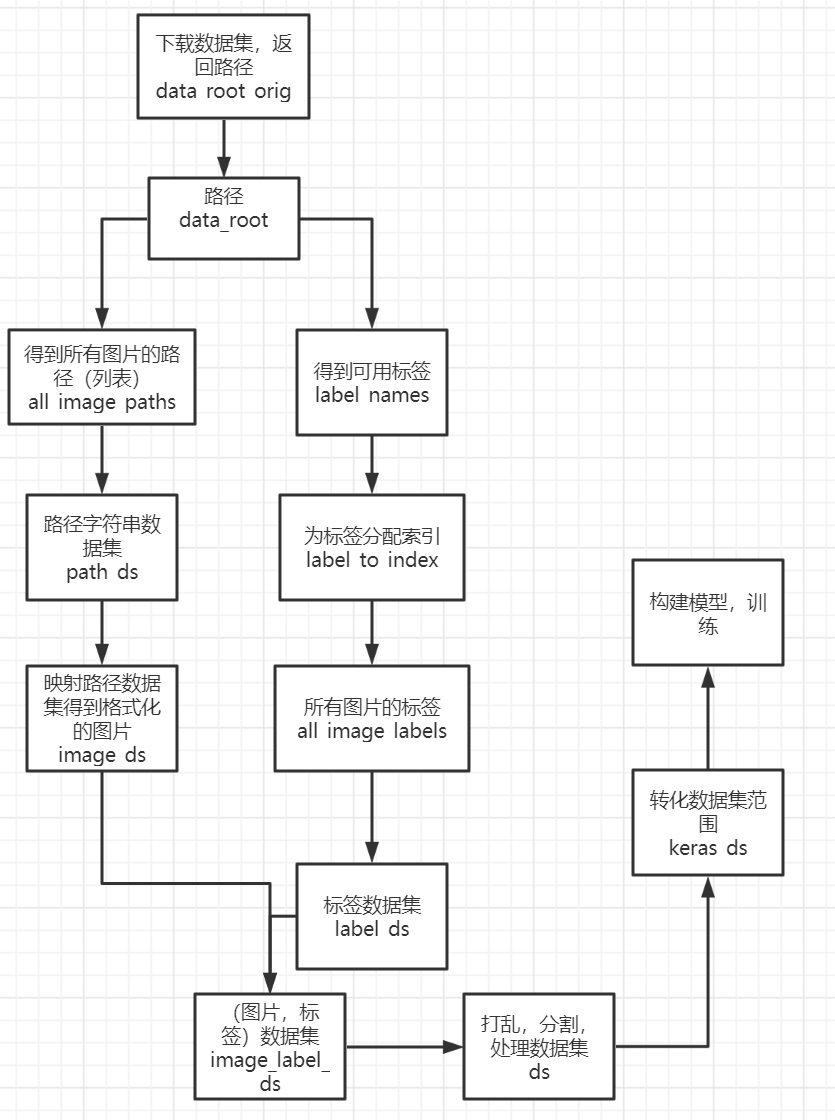

Original code from TensorFlow

TensorFlow learning notes (loading pictures with tf.data)

This tutorial gives a simple example of loading an image dataset with tf.data.

Importing modules, configuring

import tensorflow as tf

tf.data is used for data set construction and preprocessing

AUTOTUNE = tf.data.experimental.AUTOTUNE

Download and check the dataset

Download the file from the origin URL and name it 'flower_photos'; untar=True means the archive is decompressed after download.

pathlib.Path(data_root_orig) wraps the path of the downloaded data. data_root_orig already holds that path as a string, but a pathlib.Path object works the same way across operating systems.

import pathlib
data_root_orig = tf.keras.utils.get_file(origin='https://storage.googleapis.com/download.tensorflow.org/example_images/flower_photos.tgz',
                                         fname='flower_photos', untar=True)
data_root = pathlib.Path(data_root_orig)
print(data_root)
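To illustrate why the string path is wrapped in pathlib.Path, here is a small sketch using the pure path classes, which behave the same on any operating system. The directory and file names are made up for illustration and are not the real download location.

```python
from pathlib import PurePosixPath, PureWindowsPath

# Hypothetical dataset locations; only the path handling matters here.
posix_root = PurePosixPath("/home/user/.keras/datasets/flower_photos")
windows_root = PureWindowsPath("C:/Users/user/.keras/datasets/flower_photos")

# The same `/` operator and attributes work for both flavours.
posix_file = posix_root / "daisy" / "example.jpg"
windows_file = windows_root / "daisy" / "example.jpg"

print(posix_file.parent.name)    # name of the containing folder
print(windows_file.parent.name)  # same attribute, Windows flavour
print(str(windows_file))         # rendered with backslash separators
```

The code in this tutorial uses pathlib.Path, which picks the right flavour for the machine it runs on.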

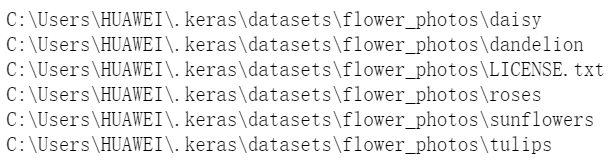

View the files under the data_root path

There are 5 subfolders and 1 txt file under the data_root path.

for item in data_root.iterdir():
    print(item)

There are 6 entries under the data_root path; data_root.glob('*/*') matches every picture inside the subfolders.


import random

all_image_paths = list(data_root.glob('*/*'))

all_image_paths

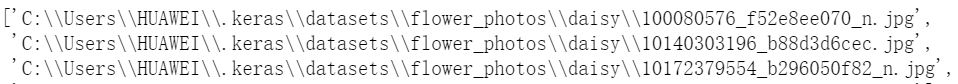

Some of the contents in the list are as follows

[WindowsPath('C:/Users/HUAWEI/.keras/datasets/flower_photos/daisy/100080576_f52e8ee070_n.jpg'),

WindowsPath('C:/Users/HUAWEI/.keras/datasets/flower_photos/daisy/10140303196_b88d3d6cec.jpg'),
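To see what the `*/*` pattern used above matches, the following sketch builds a tiny throwaway directory tree and compares iterdir() with glob('*/*'). The folder and file names are invented for the example; only the standard library is used.

```python
import pathlib
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    root = pathlib.Path(tmp)
    # Hypothetical layout: two class folders plus one top-level text file.
    for folder, name in [("daisy", "a.jpg"), ("daisy", "b.jpg"), ("roses", "c.jpg")]:
        (root / folder).mkdir(exist_ok=True)
        (root / folder / name).touch()
    (root / "LICENSE.txt").touch()

    # iterdir() lists only the direct children of root.
    top_level = sorted(p.name for p in root.iterdir())
    # glob('*/*') lists entries exactly one level below root.
    nested = sorted(p.relative_to(root).as_posix() for p in root.glob("*/*"))

print(top_level)
print(nested)
```

The top-level text file does not appear in the glob result because it has nothing one level below it.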

Convert each WindowsPath object to a plain string, leaving only the path of the picture

all_image_paths = [str(path) for path in all_image_paths]
all_image_paths

Shuffle the order of the picture paths

See how many pictures there are

random.shuffle(all_image_paths)
image_count = len(all_image_paths)
image_count

Check the picture

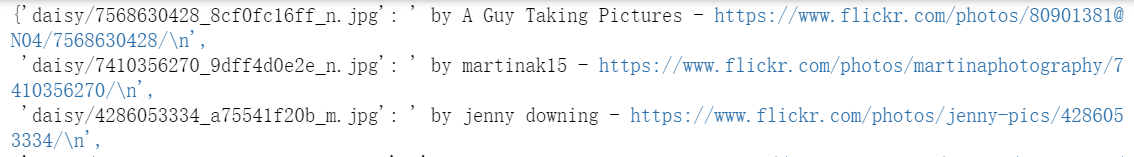

Open the "LICENSE.txt" file under the data_root path with 'utf-8' encoding, skip the first four lines, and read the rest of the file.

Split each line in the resulting list on the separator ' CC-BY'.

import os

attributions = (data_root /"LICENSE.txt").open(encoding='utf-8').readlines()[4:]

attributions = [line.split(' CC-BY') for line in attributions]

Turn attributions into a dictionary

attributions = dict(attributions)
attributions

caption_image is a function that returns the photographer credit for a picture. It takes the path of the picture on the computer as its parameter and wraps it in pathlib.Path so that the path suits the native system. image_path lies under data_root, so pathlib.Path(image_path).relative_to(data_root) is image_path with the data_root prefix removed. An example follows

import IPython.display as display

def caption_image(image_path):
    image_rel = pathlib.Path(image_path).relative_to(data_root)
    return "Image (CC BY 2.0) " + ' - '.join(attributions[str(image_rel)].split(' - ')[:-1])
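The parsing and lookup steps above can be tried without the dataset. The sketch below uses two made-up lines and assumes the layout "relative path, then ' CC-BY', then author, then ' - ', then URL", which is what the split calls rely on; the root directory is hypothetical, and PurePosixPath is used so the result does not depend on the operating system.

```python
from pathlib import PurePosixPath

# Made-up lines in the assumed "<relative path> CC-BY by <author> - <url>" layout.
lines = [
    "daisy/a.jpg CC-BY by Alice Example - https://example.com/a\n",
    "roses/c.jpg CC-BY by Bob Example - https://example.com/c\n",
]
# Each split yields [relative_path, rest], which dict() reads as key and value.
attributions = dict(line.split(" CC-BY") for line in lines)

data_root = PurePosixPath("/data/flower_photos")  # hypothetical root directory

def caption_image(image_path):
    # Strip the root prefix so the remainder matches a dictionary key.
    image_rel = PurePosixPath(image_path).relative_to(data_root)
    # Drop the trailing URL segment and keep the author part.
    return "Image (CC BY 2.0) " + " - ".join(attributions[str(image_rel)].split(" - ")[:-1])

caption = caption_image("/data/flower_photos/daisy/a.jpg")
print(caption)
```

The last `' - '`-separated segment is the URL, which is why `[:-1]` keeps only the author text.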

Randomly select 3 pictures, browse the pictures and print their photographers

for n in range(3):
    image_path = random.choice(all_image_paths)
    display.display(display.Image(image_path))
    print(caption_image(image_path))
    print()

Identify available labels

Look at all entries under the data_root path (5 folders, 1 txt file). For each entry that is a folder, take its name, then sort the names.

label_names = sorted(item.name for item in data_root.glob('*/') if item.is_dir())

label_names

Assign an index to each label with enumerate.

label_to_index = dict((name, index) for index, name in enumerate(label_names))
label_to_index

Label all pictures. Iterating with for path in all_image_paths yields the path of each picture, such as '/home/kbuilder/.keras/datasets/flower_photos/tulips/8673416166_620fc18e2f_n.jpg'; pathlib.Path(path).parent.name returns the name of the folder that contains the picture, here tulips.

Label all pictures by key value pair matching.

all_image_labels = [label_to_index[pathlib.Path(path).parent.name]

for path in all_image_paths]

print("First 10 labels indices: ", all_image_labels[:10])
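As a quick check of the labelling logic, here is a self-contained sketch with three invented paths. Unlike the code above, it derives the label names from the paths themselves rather than from the directory listing, purely to keep the example free of file system access.

```python
from pathlib import PurePosixPath

# Hypothetical image paths; the parent folder name is the class label.
paths = [
    "/data/flower_photos/tulips/1.jpg",
    "/data/flower_photos/daisy/2.jpg",
    "/data/flower_photos/tulips/3.jpg",
]

# Sorted unique folder names give a stable name-to-index mapping.
label_names = sorted({PurePosixPath(p).parent.name for p in paths})
label_to_index = {name: index for index, name in enumerate(label_names)}

# Look up the index of each path's parent folder.
all_image_labels = [label_to_index[PurePosixPath(p).parent.name] for p in paths]
print(label_to_index, all_image_labels)
```

Sorting the names first makes the mapping independent of the order in which the paths are listed.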

Load and format pictures

Load and format the picture to make it suitable for model training.

tf.io.read_file() reads the raw data at the picture path and returns it as image; preprocess_image then decodes that raw data into an image tensor.

def load_and_preprocess_image(path):
    image = tf.io.read_file(path)
    return preprocess_image(image)

preprocess_image decodes the raw data passed in as image. tf.image.decode_jpeg decodes the picture, and channels=3 means an RGB image is output. The decoded result is a tensor of type uint8.

Resize the image; [192, 192] is the size of the new image.

Normalize the image.

def preprocess_image(image):
    image = tf.image.decode_jpeg(image, channels=3)
    image = tf.image.resize(image, [192, 192])
    image /= 255.0  # normalize to [0,1] range
    return image

View the adjusted image

Do not display gridlines

Set the x-axis label

Set the title and capitalize the first letter

import matplotlib.pyplot as plt
image_path = all_image_paths[0]
label = all_image_labels[0]
plt.imshow(load_and_preprocess_image(image_path))
plt.grid(False)
plt.xlabel(caption_image(image_path))
plt.title(label_names[label].title())
print()

Build a tf.data.Dataset

The simplest way to build a tf.data.Dataset is to use the from_tensor_slices method.

Slice the string array to get a string dataset:

path_ds = tf.data.Dataset.from_tensor_slices(all_image_paths)

Now create a new dataset by mapping load_and_preprocess_image over the path dataset, so that pictures are loaded and formatted on the fly. That is, load_and_preprocess_image maps path_ds to image_ds.

image_ds = path_ds.map(load_and_preprocess_image, num_parallel_calls=AUTOTUNE)

Browse pictures

import matplotlib.pyplot as plt
plt.figure(figsize=(8,8))
for n, image in enumerate(image_ds.take(4)):
    plt.subplot(2,2,n+1)
    plt.imshow(image)
    plt.grid(False)
    plt.xticks([])
    plt.yticks([])
    plt.xlabel(caption_image(all_image_paths[n]))
plt.show()

Using the same from_tensor_slices method, you can create a label dataset

label_ds = tf.data.Dataset.from_tensor_slices(tf.cast(all_image_labels, tf.int64))

Since these datasets are in the same order, you can zip them together to get a dataset of (picture, label) pairs:

image_label_ds = tf.data.Dataset.zip((image_ds, label_ds))
# Alternative: slice the pair of lists directly instead of zipping two datasets
ds = tf.data.Dataset.from_tensor_slices((all_image_paths, all_image_labels))

Tuples are unpacked into the positional arguments of the mapped function

def load_and_preprocess_from_path_label(path, label):
    return load_and_preprocess_image(path), label
image_label_ds = ds.map(load_and_preprocess_from_path_label)
image_label_ds
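The two ways of pairing paths with labels, and the tuple unpacking in map, can be checked on toy data. This is a minimal sketch assuming TensorFlow 2.x with eager execution; the file names are hypothetical and no files are read, and path_length_and_label and to_python are helper names invented for the example.

```python
import tensorflow as tf

# Hypothetical file names and labels; no files are read in this sketch.
paths = ["a.jpg", "bb.jpg", "ccc.jpg"]
labels = [0, 1, 0]

# Option 1: build two datasets and zip them element by element.
path_ds = tf.data.Dataset.from_tensor_slices(paths)
label_ds = tf.data.Dataset.from_tensor_slices(tf.cast(labels, tf.int64))
zipped = tf.data.Dataset.zip((path_ds, label_ds))

# Option 2: slice the tuple of lists in one call.
sliced = tf.data.Dataset.from_tensor_slices((paths, labels))

def path_length_and_label(path, label):
    # Each (path, label) element arrives as two positional arguments.
    return tf.strings.length(path), label

def to_python(dataset):
    # Convert a dataset of (string, int) pairs into plain Python values.
    return [(p.numpy().decode(), int(l.numpy())) for p, l in dataset]

zipped_pairs = to_python(zipped)
sliced_pairs = to_python(sliced)
lengths = [int(n.numpy()) for n, _ in sliced.map(path_length_and_label)]
print(zipped_pairs, lengths)
```

Both options yield the same pairs in the same order; slicing the tuple directly simply avoids building the intermediate datasets.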

For training, the data should be fully shuffled, split into batches, repeated indefinitely, and batches should be made available as early as possible

BATCH_SIZE = 32

Set a shuffle buffer size equal to the data set size to ensure that the data is fully shuffled.

ds = image_label_ds.shuffle(buffer_size=image_count)
ds = ds.repeat()
ds = ds.batch(BATCH_SIZE)

While the model is training, prefetch lets the data set fetch batches in the background.

ds = ds.prefetch(buffer_size=AUTOTUNE)
ds

# Same pipeline using the fused shuffle_and_repeat transformation
# (deprecated in later TensorFlow 2.x releases in favour of shuffle followed by repeat)
ds = image_label_ds.apply(
    tf.data.experimental.shuffle_and_repeat(buffer_size=image_count))
ds = ds.batch(BATCH_SIZE)
ds = ds.prefetch(buffer_size=AUTOTUNE)
ds
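Why the buffer size matters can be seen with a simplified pure-Python model of a shuffle buffer. This is only a sketch of the documented behaviour (fill a fixed-size buffer, emit a random element, refill from the input); buffered_shuffle is a name invented here and is not how tf.data is implemented internally.

```python
import random

def buffered_shuffle(items, buffer_size, seed=0):
    """Yield items in the order produced by a fixed-size shuffle buffer."""
    rng = random.Random(seed)
    buffer = []
    for item in items:
        if len(buffer) < buffer_size:
            # Still filling the buffer: nothing is emitted yet.
            buffer.append(item)
            continue
        # Buffer is full: emit a random element and replace it with the new one.
        index = rng.randrange(buffer_size)
        yield buffer[index]
        buffer[index] = item
    # Input exhausted: drain the remaining elements in random order.
    while buffer:
        yield buffer.pop(rng.randrange(len(buffer)))

in_order = list(buffered_shuffle(range(10), buffer_size=1))
full = list(buffered_shuffle(range(10), buffer_size=10))
first_small = next(buffered_shuffle(range(100), buffer_size=5))
print(in_order, full, first_small)
```

With a buffer of 1 the order is unchanged, with a small buffer the first output can only come from the first few inputs, and only a buffer as large as the data can produce every possible ordering.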

Pass the data to the model

mobile_net = tf.keras.applications.MobileNetV2(input_shape=(192, 192, 3), include_top=False)
mobile_net.trainable = False

The model expects its input to be normalized to the range [-1,1]. Before you pass the input to the MobileNet model, you need to convert its range from [0,1] to [-1,1]:

def change_range(image, label):
    return 2*image-1, label
keras_ds = ds.map(change_range)

Build a model wrapped around MobileNet, and use tf.keras.layers.GlobalAveragePooling2D before the tf.keras.layers.Dense output layer to average over the spatial dimensions:

model = tf.keras.Sequential([
    mobile_net,
    tf.keras.layers.GlobalAveragePooling2D(),
    tf.keras.layers.Dense(len(label_names), activation = 'softmax')])
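The two scaling steps (dividing by 255, then applying 2*x-1) can be verified on a few pixel values. This sketch uses NumPy instead of TensorFlow so it runs anywhere; the pixel values are arbitrary.

```python
import numpy as np

# Arbitrary 8-bit pixel values covering the full range.
pixels = np.array([0, 128, 255], dtype=np.uint8)

# Step 1: scale to [0, 1], as preprocess_image does with `image /= 255.0`.
unit = pixels.astype(np.float32) / 255.0

# Step 2: shift and stretch to [-1, 1], as change_range does.
centered = 2 * unit - 1

print(unit.min(), unit.max())
print(centered.min(), centered.max())
```

The endpoints map as 0 to -1 and 255 to 1, and a mid-grey value lands close to 0.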

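# Take one batch from the range-adjusted dataset to check the model output
image_batch, label_batch = next(iter(keras_ds))

logit_batch = model(image_batch).numpy()

print("min logit:", logit_batch.min())

print("max logit:", logit_batch.max())

print()

print("Shape:", logit_batch.shape)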

Compile the model

model.compile(optimizer=tf.keras.optimizers.Adam(),

loss='sparse_categorical_crossentropy',

metrics=["accuracy"])

View the number of trainable variables, which belong to the Dense layer

len(model.trainable_variables)
model.summary()

Note that for demonstration purposes only 3 steps are run per epoch here; normally you would specify the actual number of steps per epoch when calling model.fit()

steps_per_epoch = tf.math.ceil(len(all_image_paths)/BATCH_SIZE).numpy()
steps_per_epoch
model.fit(ds, epochs=1, steps_per_epoch=3)
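The steps-per-epoch arithmetic can be reproduced with the standard library. The image count below is an assumed example value, not a number taken from this page; substitute len(all_image_paths) in practice.

```python
import math

BATCH_SIZE = 32
image_count = 3670  # assumed example value for the number of pictures

# One epoch must cover every picture once, so round the division up.
steps_per_epoch = math.ceil(image_count / BATCH_SIZE)

# Pictures left over for the final, partially filled step of the epoch.
remainder = image_count - (steps_per_epoch - 1) * BATCH_SIZE

print(steps_per_epoch, remainder)
```

Rounding up matters because plain integer division would silently skip the leftover pictures at the end of each epoch.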