Part of the content comes from Keras introductory course 6 of blogger Stanley compound field - migration learning using concept V3 model

Address: https://blog.csdn.net/tsyccnh/article/details/78889838

There are two main types of transfer learning

- The first is the so-called transfer learning. When migrating training, remove the top layer. For example, the top layer of ImageNet training task is a 1000 output full connection layer, and replace it with a new top layer, such as a 10 output full connection layer. Then, during training, only the last two layers, the penultimate layer of the original network and the new full connection output layer, are trained. It can be said that transfer learning uses the underlying network as a feature extractor.

- The second is called fine tune, which is the same as transfer learning. A new top layer is used, but this time, all (or most) other layers will be trained during the training process. That is, the weight of the bottom layer will also be adjusted with the training.

Download Inception V3 related data

import os

from tensorflow.keras import layers

from tensorflow.keras import Model

!wget --no-check-certificate \

https://storage.googleapis.com/mledu-datasets/inception_v3_weights_tf_dim_ordering_tf_kernels_notop.h5 \

-O /tmp/inception_v3_weights_tf_dim_ordering_tf_kernels_notop.h5

from tensorflow.keras.applications.inception_v3 import InceptionV3

local_weights_file = '/tmp/inception_v3_weights_tf_dim_ordering_tf_kernels_notop.h5'

Set pre_model architecture (also known as base_model)

Two parameters of the inception V3 model are more important. One is weights. If it is' Imagenet ', Keras will automatically download the parameters that have been trained on Imagenet. If it is None, the system will initialize the parameters in a random way. At present, there are only two options for this parameter. The other parameter is include_top, if True, the full connection layer will be retained. If False, the top-level fully connected network will be removed

Input here_ Shape = (150, 150, 3) is the cat and dog picture structure we input into the network

pre_trained_model = InceptionV3(input_shape = (150, 150, 3),

include_top = False,

weights = None)

pre_trained_model.load_weights(local_weights_file)

Freeze pre_trained_model all layers, so that the skeleton model is no longer trained

for layer in pre_trained_model.layers: layer.trainable = False

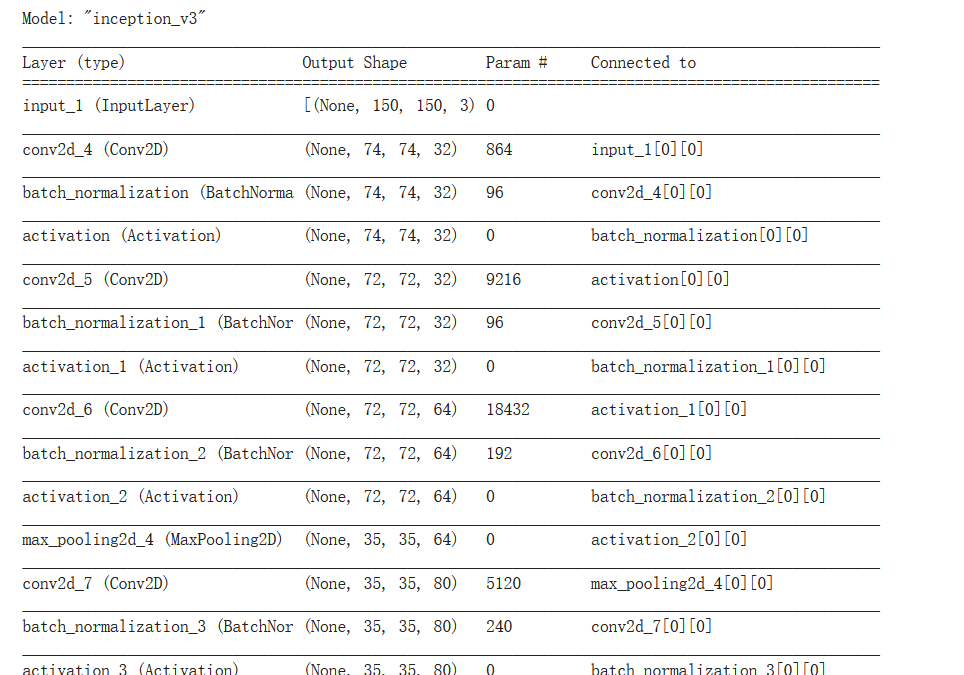

Because the network structure is too long, only part is shown here

pre_trained_model.summary()

Here, we do not use all levels of inception V3, but let the network take the mixed 7 layer as the output and connect to our newly added network

last_layer = pre_trained_model.get_layer('mixed7')

print('last layer output shape: ', last_layer.output_shape)

last_output = last_layer.output

last layer output shape: (None, 7, 7, 768)

Add our own layers to the network and use dropout to reduce over fitting

from tensorflow.keras.optimizers import RMSprop

# Flatten the output layer to 1 dimension

x = layers.Flatten()(last_output)

# Add a fully connected layer with 1,024 hidden units and ReLU activation

x = layers.Dense(1024, activation='relu')(x)

# Add a dropout rate of 0.2

x = layers.Dropout(0.2)(x)

# Add a final sigmoid layer for classification

x = layers.Dense (1, activation='sigmoid')(x)

model = Model( inputs=pre_trained_model.input, outputs=x)

model.compile(optimizer = RMSprop(lr=0.0001),

loss = 'binary_crossentropy',

metrics = ['acc'])

Define directories with data enhancement

base_dir = '/tmp/cats_and_dogs_filtered'

train_dir = os.path.join( base_dir, 'train')

validation_dir = os.path.join( base_dir, 'validation')

train_cats_dir = os.path.join(train_dir, 'cats') # Directory with our training cat pictures

train_dogs_dir = os.path.join(train_dir, 'dogs') # Directory with our training dog pictures

validation_cats_dir = os.path.join(validation_dir, 'cats') # Directory with our validation cat pictures

validation_dogs_dir = os.path.join(validation_dir, 'dogs')# Directory with our validation dog pictures

train_cat_fnames = os.listdir(train_cats_dir)

train_dog_fnames = os.listdir(train_dogs_dir)

# Add our data-augmentation parameters to ImageDataGenerator

train_datagen = ImageDataGenerator(rescale = 1./255.,

rotation_range = 40,

width_shift_range = 0.2,

height_shift_range = 0.2,

shear_range = 0.2,

zoom_range = 0.2,

horizontal_flip = True)

# Note that the validation data should not be augmented!

test_datagen = ImageDataGenerator( rescale = 1.0/255. )

# Flow training images in batches of 20 using train_datagen generator

train_generator = train_datagen.flow_from_directory(train_dir,

batch_size = 20,

class_mode = 'binary',

target_size = (150, 150))

# Flow validation images in batches of 20 using test_datagen generator

validation_generator = test_datagen.flow_from_directory( validation_dir,

batch_size = 20,

class_mode = 'binary',

target_size = (150, 150))

Found 2000 images belonging to 2 classes.

Found 1000 images belonging to 2 classes.

Training network

history = model.fit_generator(

train_generator,

validation_data = validation_generator,

steps_per_epoch = 100,

epochs = 20,

validation_steps = 50,

verbose = 2)

Epoch 1/20

100/100 - 29s - loss: 0.3360 - acc: 0.8655 - val_loss: 0.1211 - val_acc: 0.9470

Epoch 2/20

100/100 - 23s - loss: 0.2193 - acc: 0.9145 - val_loss: 0.1096 - val_acc: 0.9640

Epoch 3/20

100/100 - 23s - loss: 0.2038 - acc: 0.9290 - val_loss: 0.0888 - val_acc: 0.9660

Epoch 4/20

100/100 - 22s - loss: 0.1879 - acc: 0.9315 - val_loss: 0.1198 - val_acc: 0.9590

Epoch 5/20

100/100 - 23s - loss: 0.1760 - acc: 0.9415 - val_loss: 0.1155 - val_acc: 0.9660

Epoch 6/20

100/100 - 22s - loss: 0.1771 - acc: 0.9375 - val_loss: 0.1540 - val_acc: 0.9450

Epoch 7/20

100/100 - 23s - loss: 0.1916 - acc: 0.9370 - val_loss: 0.1616 - val_acc: 0.9550

Epoch 8/20

100/100 - 22s - loss: 0.1594 - acc: 0.9440 - val_loss: 0.1422 - val_acc: 0.9630

Epoch 9/20

100/100 - 23s - loss: 0.1669 - acc: 0.9465 - val_loss: 0.1099 - val_acc: 0.9650

Epoch 10/20

100/100 - 23s - loss: 0.1677 - acc: 0.9445 - val_loss: 0.1245 - val_acc: 0.9600

Epoch 11/20

100/100 - 22s - loss: 0.1653 - acc: 0.9470 - val_loss: 0.0918 - val_acc: 0.9730

Epoch 12/20

100/100 - 22s - loss: 0.1542 - acc: 0.9455 - val_loss: 0.1623 - val_acc: 0.9570

Epoch 13/20

100/100 - 22s - loss: 0.1525 - acc: 0.9520 - val_loss: 0.1087 - val_acc: 0.9670

Epoch 14/20

100/100 - 23s - loss: 0.1454 - acc: 0.9565 - val_loss: 0.1314 - val_acc: 0.9640

Epoch 15/20

100/100 - 22s - loss: 0.1279 - acc: 0.9525 - val_loss: 0.1515 - val_acc: 0.9630

Epoch 16/20

100/100 - 23s - loss: 0.1255 - acc: 0.9530 - val_loss: 0.1306 - val_acc: 0.9650

Epoch 17/20

100/100 - 22s - loss: 0.1430 - acc: 0.9575 - val_loss: 0.1226 - val_acc: 0.9660

Epoch 18/20

100/100 - 23s - loss: 0.1350 - acc: 0.9510 - val_loss: 0.1583 - val_acc: 0.9520

Epoch 19/20

100/100 - 22s - loss: 0.1288 - acc: 0.9580 - val_loss: 0.1170 - val_acc: 0.9710

Epoch 20/20

100/100 - 22s - loss: 0.1363 - acc: 0.9550 - val_loss: 0.1260 - val_acc: 0.9660

Plot loss and accuracy

import matplotlib.pyplot as plt

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'r', label='Training accuracy')

plt.plot(epochs, val_acc, 'b', label='Validation accuracy')

plt.title('Training and validation accuracy')

plt.legend(loc=0)

plt.figure()

plt.show()