Reference article: Datasets Quick Start

Datasets Quick Start

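The tf.data module contains a collection of classes for loading data, manipulating it, and feeding it to your model. This article introduces the API through two simple examples:

- Reading in-memory data from NumPy arrays.

- Reading lines from a CSV file.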

Basic input

Taking slices from an array is the simplest way to get started with tf.data.

    import tensorflow as tf

    def train_input_fn(features, labels, batch_size):
        """An input function for training"""
        # Convert the inputs to a Dataset.
        dataset = tf.data.Dataset.from_tensor_slices((dict(features), labels))

        # Shuffle, repeat, and batch the examples.
        dataset = dataset.shuffle(1000).repeat().batch(batch_size)

        # Return the dataset.
        return dataset

Let's take a closer look at this function.

Arguments

This function takes three arguments. An argument that expects an "array" accepts nearly anything that can be converted to an array with numpy.array. The one exception is tuple, which has a special meaning for Datasets.

- features: a dictionary (or DataFrame) of the form {'feature_name': array} containing the raw input features.

- labels: an array containing the label for each example.

- batch_size: an integer indicating the desired batch size.
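To illustrate the special meaning of tuples, the following sketch compares a list of lists with a tuple of lists. It assumes TensorFlow 2.x with eager execution (the rest of this article uses the 1.x API), and the toy values are made up for illustration.

```python
import tensorflow as tf  # assumption: TensorFlow 2.x, eager execution

# A list of lists is converted to ONE 2x3 array and sliced along its first axis.
as_list = tf.data.Dataset.from_tensor_slices([[1, 2, 3], [4, 5, 6]])
list_elements = [e.tolist() for e in as_list.as_numpy_iterator()]

# A tuple is treated as SEPARATE components that are sliced in parallel.
as_tuple = tf.data.Dataset.from_tensor_slices(([1, 2, 3], [4, 5, 6]))
tuple_elements = [(int(a), int(b)) for a, b in as_tuple.as_numpy_iterator()]

print(list_elements)   # two elements, each a length-3 vector
print(tuple_elements)  # three elements, each a pair of scalars
```

This is why train_input_fn wraps its inputs in a tuple: the features and the labels are sliced side by side, one example at a time.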

In premade_estimator.py, we retrieve the Iris data with the iris_data.load_data() function.

You can run the function and extract the results as follows:

    import iris_data

    # Fetch the data.
    train, test = iris_data.load_data()
    features, labels = train

Then pass the data to the input function with a line of code like this:

    batch_size = 100
    iris_data.train_input_fn(features, labels, batch_size)

Let's walk through the train_input_fn() function.

Slices

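The function starts by calling tf.data.Dataset.from_tensor_slices to create a tf.data.Dataset that represents slices of the array. The array is sliced along its first dimension. For example, the array holding the MNIST training images has shape (60000, 28, 28); passing it to from_tensor_slices returns a Dataset containing 60000 slices, each a 28x28 image.

The code that returns this Dataset is as follows:

    train, test = tf.keras.datasets.mnist.load_data()
    mnist_x, mnist_y = train

    mnist_ds = tf.data.Dataset.from_tensor_slices(mnist_x)
    print(mnist_ds)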

<TensorSliceDataset shapes: (28,28), types: tf.uint8>
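The slicing behaviour can be checked without downloading MNIST. The sketch below assumes TensorFlow 2.x with eager execution and uses a small all-zero array as a hypothetical stand-in for the image data.

```python
import numpy as np
import tensorflow as tf  # assumption: TensorFlow 2.x, eager execution

# Hypothetical stand-in for the MNIST images: 5 "images" of 28x28 pixels.
images = np.zeros((5, 28, 28), dtype=np.uint8)

ds = tf.data.Dataset.from_tensor_slices(images)

# Each element is one slice along the first dimension.
element_shape = tuple(ds.element_spec.shape.as_list())
num_elements = sum(1 for _ in ds)

print(element_shape)  # shape of a single element
print(num_elements)   # number of slices
```

The first dimension of the array becomes the number of elements, and the remaining dimensions become the shape of each element.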

The Dataset above represents a simple collection of arrays, but Datasets are much more powerful than this. A Dataset can transparently handle any nested combination of dictionaries or tuples (or namedtuple).

For example, after converting the Iris features to a standard Python dictionary, you can convert the dictionary of arrays to a Dataset of dictionaries as follows:

    dataset = tf.data.Dataset.from_tensor_slices(dict(features))
    print(dataset)

<TensorSliceDataset shapes: { SepalLength: (), PetalWidth: (), PetalLength: (), SepalWidth: ()}, types: { SepalLength: tf.float64, PetalWidth: tf.float64, PetalLength: tf.float64, SepalWidth: tf.float64} >

The first line of train_input_fn uses the same functionality but adds another level of structure. It creates a Dataset containing (features_dict, label) pairs.

The following code shows that the label is a scalar of type int64:

    # Convert the inputs to a Dataset.
    dataset = tf.data.Dataset.from_tensor_slices((dict(features), labels))
    print(dataset)

<TensorSliceDataset shapes: ( { SepalLength: (), PetalWidth: (), PetalLength: (), SepalWidth: ()}, ()), types: ( { SepalLength: tf.float64, PetalWidth: tf.float64, PetalLength: tf.float64, SepalWidth: tf.float64}, tf.int64)>

Manipulation

Currently, the Dataset would iterate over the data once, in a fixed order, and produce only a single element at a time. It needs further processing before it can be used for training. Fortunately, the tf.data.Dataset class provides methods to prepare the data for training. The next line of train_input_fn uses several of these methods:

    # Shuffle, repeat, and batch the examples.
    dataset = dataset.shuffle(1000).repeat().batch(batch_size)
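In tf.data, shuffle samples from a fixed-size buffer, repeat restarts the data when it runs out, and batch groups consecutive elements. The following is a simplified pure-Python model of those three steps, not tf.data's actual implementation; the helper functions shuffle, repeat, and batch are hypothetical stand-ins for the Dataset methods of the same names.

```python
import itertools
import random

def shuffle(items, buffer_size, rng=random):
    """Model of Dataset.shuffle: sample at random from a fixed-size buffer."""
    buffer = []
    for item in items:
        if len(buffer) < buffer_size:
            buffer.append(item)  # still filling the buffer
            continue
        # Buffer full: emit a random entry and put the new item in its slot.
        index = rng.randrange(buffer_size)
        yield buffer[index]
        buffer[index] = item
    # Input exhausted: drain the remaining entries in random order.
    rng.shuffle(buffer)
    yield from buffer

def repeat(make_items, count=None):
    """Model of Dataset.repeat: restart at the end; count=None means forever."""
    epochs = itertools.count() if count is None else range(count)
    for _ in epochs:
        yield from make_items()

def batch(items, batch_size):
    """Model of Dataset.batch: group consecutive items into lists."""
    iterator = iter(items)
    while True:
        chunk = list(itertools.islice(iterator, batch_size))
        if not chunk:
            return
        yield chunk

examples = list(range(10))
rng = random.Random(0)
# A buffer larger than the data (1000 > 10) shuffles each epoch completely.
epochs = repeat(lambda: shuffle(examples, 1000, rng), count=2)
batches = list(batch(epochs, batch_size=4))
print([len(b) for b in batches])
```

Because repeat comes before batch, a batch can straddle two passes over the data: here the third batch mixes the end of the first pass with the start of the second. The buffer size of 1000 in train_input_fn plays the same role as in this model, since the Iris data holds far fewer than 1000 examples.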

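The batch method collects a number of examples and stacks them into batches, which adds a new first dimension to their shape. Applying it to the MNIST Dataset from earlier gives a Dataset of stacked (28, 28) images:

    print(mnist_ds.batch(100))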

<BatchDataset shapes: (?, 28, 28), types: tf.uint8>

Note that the Dataset has an unknown batch size because the last batch will have fewer elements.
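The effect of the short final batch can be seen on a small range Dataset. This sketch assumes TensorFlow 2.x with eager execution, where the unknown dimension is reported as None rather than "?"; the drop_remainder argument is shown only as one way to obtain a fixed batch dimension, not as something the original input function uses.

```python
import tensorflow as tf  # assumption: TensorFlow 2.x, eager execution

ds = tf.data.Dataset.range(250)

# Default: the last batch is kept, so the batch dimension is not static.
sizes = [int(b.shape[0]) for b in ds.batch(100)]
dynamic_shape = ds.batch(100).element_spec.shape.as_list()

# drop_remainder=True discards the short final batch and fixes the dimension.
static_shape = ds.batch(100, drop_remainder=True).element_spec.shape.as_list()

print(sizes)          # sizes of the batches actually produced
print(dynamic_shape)  # None marks the unknown batch dimension
print(static_shape)
```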

In train_input_fn, after batching, the Dataset contains 1D vectors of elements where each element was previously a scalar:

    print(dataset)

<BatchDataset shapes: ( { SepalLength: (?,), PetalWidth: (?,), PetalLength: (?,), SepalWidth: (?,)}, (?,)), types: ( { SepalLength: tf.float64, PetalWidth: tf.float64, PetalLength: tf.float64, SepalWidth: tf.float64}, tf.int64)>

Return

At this point, the Dataset contains (features_dict, labels) pairs. This is the format expected by the train and evaluate methods, so the input_fn returns the Dataset.

The labels can, and should, be omitted when using the predict method.
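A minimal sketch of a labels-free input function follows. The name predict_input_fn and the sample measurements are hypothetical, and the code assumes TensorFlow 2.x with eager execution so that a batch can be inspected directly.

```python
import tensorflow as tf  # assumption: TensorFlow 2.x, eager execution

def predict_input_fn(features, batch_size):
    """Hypothetical input function for prediction: features only, no labels."""
    # Slice the feature dictionary alone; there is no (features, label) tuple.
    dataset = tf.data.Dataset.from_tensor_slices(dict(features))
    # No shuffle or repeat: each example should be seen exactly once.
    return dataset.batch(batch_size)

# Made-up measurements for three flowers.
predict_x = {
    'SepalLength': [5.1, 5.9, 6.9],
    'SepalWidth': [3.3, 3.0, 3.1],
    'PetalLength': [1.7, 4.2, 5.4],
    'PetalWidth': [0.5, 1.5, 2.1],
}

first_batch = next(iter(predict_input_fn(predict_x, batch_size=2)))
print(sorted(first_batch.keys()))
print(first_batch['SepalLength'].shape)
```

Each element is now a bare dictionary of feature batches instead of a (features, labels) pair.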

Read CSV file

The following call to the iris_data.maybe_download function downloads the data if necessary and returns the paths of the resulting files:

    import iris_data
    train_path, test_path = iris_data.maybe_download()

The iris_data.csv_input_fn function contains an alternative implementation that parses the CSV files using a Dataset.

Let's see how to build an Estimator-compatible input function that reads from local files.

Create Dataset

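We start by building a TextLineDataset object that reads the file one line at a time. We then call the skip method to skip the first line of the file, which contains a header rather than an example:

    ds = tf.data.TextLineDataset(train_path).skip(1)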

Build a csv line parser

We start by creating a function that can parse a row.

    # Metadata describing the text columns
    COLUMNS = ['SepalLength', 'SepalWidth',
               'PetalLength', 'PetalWidth',
               'label']
    FIELD_DEFAULTS = [[0.0], [0.0], [0.0], [0.0], [0]]

    def _parse_line(line):
        # Decode the line into its fields
        fields = tf.decode_csv(line, FIELD_DEFAULTS)

        # Pack the result into a dictionary
        features = dict(zip(COLUMNS, fields))

        # Separate the label from the features
        label = features.pop('label')

        return features, label
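To see what the parser produces for one row, it can be called directly on a string. This sketch assumes TensorFlow 2.x with eager execution, where the function is available as tf.io.decode_csv; the sample row is made up. Note how the types in FIELD_DEFAULTS decide the types of the parsed fields.

```python
import tensorflow as tf  # assumption: TensorFlow 2.x (tf.io.decode_csv), eager execution

COLUMNS = ['SepalLength', 'SepalWidth', 'PetalLength', 'PetalWidth', 'label']
# Float defaults yield float32 fields; the integer default yields an int32 label.
FIELD_DEFAULTS = [[0.0], [0.0], [0.0], [0.0], [0]]

def parse_line(line):
    fields = tf.io.decode_csv(line, FIELD_DEFAULTS)
    features = dict(zip(COLUMNS, fields))
    label = features.pop('label')
    return features, label

row_features, row_label = parse_line('6.4,2.8,5.6,2.2,2')  # made-up sample row
print({name: float(value) for name, value in row_features.items()})
print(int(row_label), row_label.dtype)
```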

Parsing multiple rows

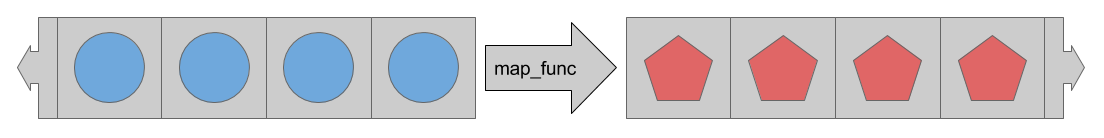

The map method takes a map_func argument that describes how each element in the Dataset should be transformed; tf.data.Dataset.map applies map_func to every element.

Therefore, to parse the lines as they are read from the CSV file, we pass our _parse_line function to the map method:

    ds = ds.map(_parse_line)
    print(ds)

<MapDataset shapes: ( {SepalLength: (), PetalWidth: (), ...}, ()), types: ( {SepalLength: tf.float32, PetalWidth: tf.float32, ...}, tf.int32)>

Now, the dataset contains (features, label) data pairs instead of simple string scalars.

The remainder of the iris_data.csv_input_fn function is identical to iris_data.train_input_fn, which was covered in the Basic input section.

Try it out

This function can be used as a replacement for iris_data.train_input_fn. It can feed data to an estimator as follows:
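Putting the pieces together, a CSV input function could look like the sketch below. It is an illustration under stated assumptions rather than the exact code of iris_data.csv_input_fn: it targets TensorFlow 2.x with eager execution (hence tf.io.decode_csv), and it writes a tiny made-up CSV file so that it runs without any download.

```python
import os
import tempfile

import tensorflow as tf  # assumption: TensorFlow 2.x, eager execution

COLUMNS = ['SepalLength', 'SepalWidth', 'PetalLength', 'PetalWidth', 'label']
FIELD_DEFAULTS = [[0.0], [0.0], [0.0], [0.0], [0]]

def _parse_line(line):
    fields = tf.io.decode_csv(line, FIELD_DEFAULTS)
    features = dict(zip(COLUMNS, fields))
    label = features.pop('label')
    return features, label

def csv_input_fn(csv_path, batch_size):
    """Sketch of a CSV input function: read, parse, shuffle, repeat, batch."""
    dataset = tf.data.TextLineDataset(csv_path).skip(1)  # skip the header line
    dataset = dataset.map(_parse_line)                    # line -> (features, label)
    return dataset.shuffle(1000).repeat().batch(batch_size)

# Tiny made-up file: one header line followed by four data rows.
rows = [
    '4,4,setosa,versicolor,virginica',
    '6.4,2.8,5.6,2.2,2',
    '5.0,2.3,3.3,1.0,1',
    '4.9,2.5,4.5,1.7,2',
    '4.9,3.1,1.5,0.1,0',
]
csv_path = os.path.join(tempfile.mkdtemp(), 'toy_iris.csv')
with open(csv_path, 'w') as f:
    f.write('\n'.join(rows) + '\n')

batch_features, batch_labels = next(iter(csv_input_fn(csv_path, batch_size=3)))
print(batch_features['SepalLength'].shape, batch_labels.shape)
```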

    train_path, test_path = iris_data.maybe_download()

    # All the inputs are numeric.
    feature_columns = [
        tf.feature_column.numeric_column(name)
        for name in iris_data.CSV_COLUMN_NAMES[:-1]]

    # Build the estimator.
    est = tf.estimator.LinearClassifier(feature_columns, n_classes=3)

    # Train the estimator.
    batch_size = 100
    est.train(
        steps=1000,
        input_fn=lambda: iris_data.csv_input_fn(train_path, batch_size))

Estimators expect an input_fn to take no arguments. To work around this restriction, we use lambda to capture the arguments and provide the expected interface.
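The argument-capturing pattern itself is plain Python and can be tried without TensorFlow. In the sketch below, csv_input_fn and call_input_fn are hypothetical stand-ins: the first only reports the arguments it received, and the second mimics a caller that invokes input_fn with no arguments. functools.partial is shown as an alternative to lambda; whether a particular Estimator version accepts a partial object is not verified here.

```python
import functools

def csv_input_fn(csv_path, batch_size):
    """Hypothetical stand-in that only reports the arguments it received."""
    return {'csv_path': csv_path, 'batch_size': batch_size}

def call_input_fn(input_fn):
    """Mimics a caller that invokes input_fn with no arguments."""
    return input_fn()

train_path = 'iris_training.csv'  # illustrative path
batch_size = 100

# lambda looks up train_path and batch_size when it is called.
via_lambda = call_input_fn(lambda: csv_input_fn(train_path, batch_size))

# functools.partial binds the current values immediately.
via_partial = call_input_fn(functools.partial(csv_input_fn, train_path, batch_size))

print(via_lambda == via_partial)
```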

Summary

The tf.data module provides a collection of classes and functions for easily reading data from a variety of sources. Furthermore, tf.data has simple, powerful methods for applying a wide variety of standard and custom transformations.

You now have a basic understanding of how to get data into an Estimator efficiently. As a next step, consider the following documents: