0. Preface

Tensorflow is an open source machine learning framework based on Python. It is developed by Google and has rich applications in graphics classification, audio processing, recommendation system and natural language processing. It is one of the most popular machine learning frameworks at present. TensorFlow2.0 was released in October 2019 and has reached version 2.7.

Although many people are still using TF1 version, I think the future trend must be 2.0, and some old wheels will eventually be replaced by new wheels, so I started from 2.0. The code here mainly comes from the video course "artificial intelligence practice: tensorflow 2.0 notes" by Cao Jian, a teacher of Peking University. This article is a study note, which is mainly for your own query notes, not for commercial purposes. If there is infringement, please contact me.

1. Preparatory work

1.1 installation of Anaconda

Installation through anaconda is highly recommended here. Because Anaconda's base is really easy to use. It not only integrates the basic modules required by artificial intelligence, such as numpy, pandas, sklearn, matplotlib, but also facilitates environmental management and later upgrading. It is almost the only choice.

Official website address: https://www.anaconda.com/distribution/

Of course, there is no exception in installing such program software. If you can use domestic image, you can use domestic image.

Tsinghua mirror address: https://mirrors.tuna.tsinghua.edu.cn/anaconda/archive/

I chose anaconda3-5.3.1-windows-x86 here_ 64.exe version

Because it needs to be reinstalled on different machines frequently, just download it and save the source file. When you need to use it, double-click it directly to install it.

There was not much to say during the installation process, so I followed the prompts all the way. Note the python version. I chose Python 3 Version 8.5.

After installing anaconda, in fact, many basic modules of the environment required for artificial intelligence have been installed (of course, some are not suitable for TF2.0 and need to be upgraded), as well as Python. Next, install tensorflow2 0.

1.2,TensorFlow2.0 installation

Enter the prompt interface of Anaconda. If you want to create a new environment, you can use:

conda create environment name

To create an environment. If you want to switch environments, you can use

conda activate environment name} switch to the environment you want to use

Use the pip command to install TF2. Similarly, we can use the image if we can use the image:

Install tensorflow:

Specified version

pip3 install -i https://pypi.tuna.tsinghua.edu.cn/simple --upgrade tensorflow==1.12

Install the latest

pip3 install -i https://pypi.tuna.tsinghua.edu.cn/simple tensorflow

tips:

Error encountered while installing tensorflow: cannot uninstall 'wrap' It is a distutils installed project and thus we cannot accurately determine which files belong to it which would lead to only a partial uninstall.

Enter the following statement

pip install -U --ignore-installed wrapt enum34 simplejson netaddr

Install the wrapt first, and then install tensorflow

1.3 installation in other environments

Some of the libraries that come with my version of anaconda cannot be adapted to TF2 0, to upgrade, similarly, we try to upgrade with image.

Upgrade and install numpy

pip3 install --upgrade numpy

Upgrade and install pandas

pip3 install -i https://pypi.tuna.tsinghua.edu.cn/simple --upgrade pandas

Upgrade and install matplotlib

pip3 install -i https://pypi.tuna.tsinghua.edu.cn/simple --upgrade matplotlib

Finally, if I'm used to using jupyter, upgrade jupyter again. I'm used to using vscode.

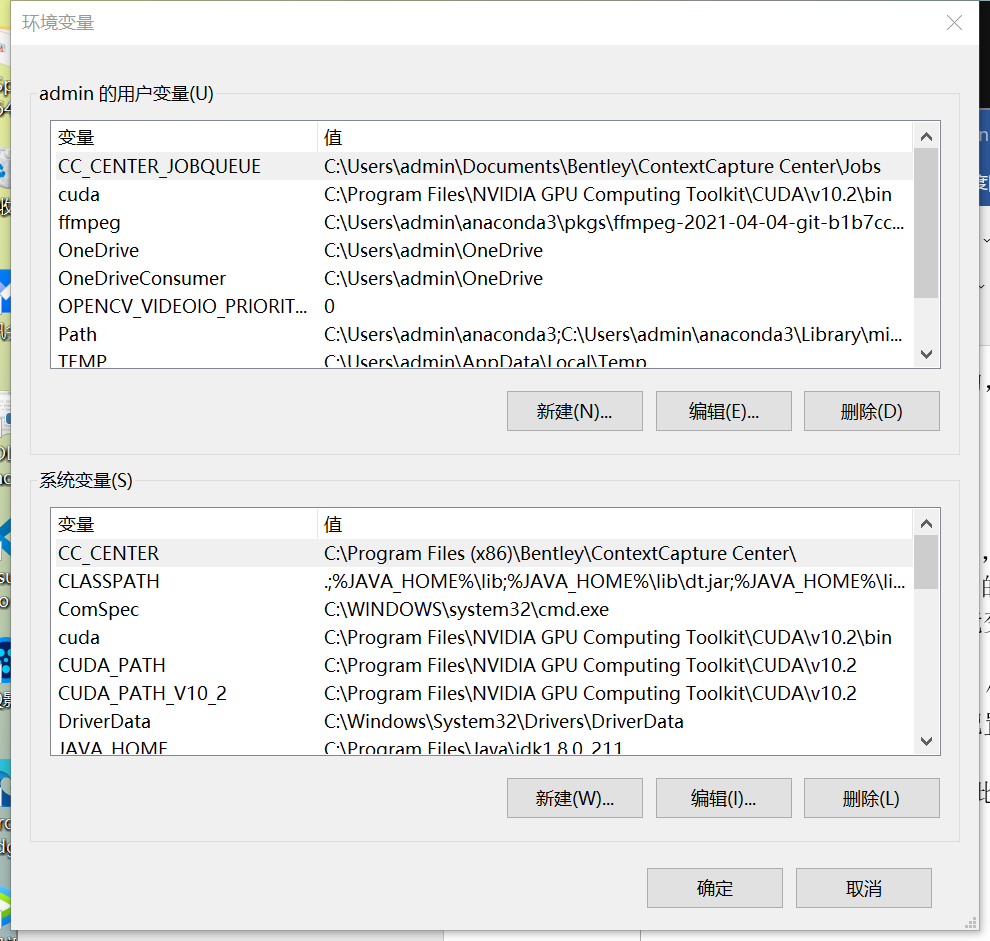

1.4 environment variable configuration

There are two types of environment variables.

1. System environment variables

System environment variable, as the name suggests, is a system variable. That is to say, once the system environment variable is configured, any user who uses this operating system (an operating system can generally set multiple users) can directly find the corresponding program in the doc command window through this environment variable

2. User environment variables

User environment variable, as the name suggests, belongs to a user alone. Generally, if it is configured by that user, it belongs to that user. Only users who configure this environment variable can use it

Generally speaking, in this computer - properties - advanced system settings - Advanced - environment variables

You can find the following interface of environment variables

The above is the user variable and the following is the system variable. You can add both

Add the program address to be used to the list of environment variables.

For example, add my Anaconda variable address C:\Users\admin\anaconda3\Scripts.

After the above work is completed, test whether it is normal:

import tensorflow as tf from tensorflow import keras import matplotlib as mpl import matplotlib.pyplot as plt %matplotlib inline import numpy as np import sklearn import pandas as pd import os import sys import time print(tf.__version__) print(sys.version_info) for module in mpl,np,pd,sklearn,tf,keras: print(module.__name__,module.__version__)

After operation, the results are as follows:

2.7.0 sys.version_info(major=3, minor=7, micro=0, releaselevel='final', serial=0) matplotlib 3.5.0 numpy 1.21.4 pandas 1.1.5 sklearn 0.19.2 tensorflow 2.7.0 keras.api._v2.keras 2.7.0

Note that my TensorFlow is version 2.7.0, followed by the versions of each module library. We will run our TensorFlow code in this environment.

2. Basic knowledge

2.1 tensor

Tensor in TensorFlow is tensor. In fact, it is a multidimensional array, and the dimension of tensor is expressed by order.

The tensor of order 0 is scalar, which represents a single number, such as s=5

The first-order tensor is a vector, which represents a one-dimensional array, such as v=[1,2,3]

The second-order tensor is a matrix, which represents a two-dimensional array, such as m=[[1,2,3],[4,5,6]]

N-order tensors are n-dimensional arrays

The data types of TensorFlow are

tf.int , tf.float: tf.int 32 tf.float32 tf.float64

tf.bool: tf.constant([True,False])

tf.string: tf.constant('Hello,world')

Method of creating tensor:

Tf. Constant (tensor content, dtype = data type (optional))

#Example 2-1: create a one-dimensional tensor import tensorflow as tf a=tf.constant([2,5],dtype=tf.int64) print(a) #Printed results: tf.Tensor([2 5], shape=(2,), dtype=int64)

tf. convert_ to_ The tensor function can change data in numpy format into data in tensor format

#Example 2-2: using convert_to_tensor created by tensor function import numpy as np b=np.arange(0,5) c=tf.convert_to_tensor(b,dtype=tf.int64) print(b) print(c) #The printing results are as follows: [0 1 2 3 4] tf.Tensor([0 1 2 3 4], shape=(5,), dtype=int64)

tf. Zeros (dimension) creates tensors with all zeros

tf. The ones (dimension) creates tensors that are all 1

tf. Fill (dimension, specified value) creates a tensor with all specified values

#Example 2-3: create tensors with zeros, ones and fill functions d=tf.zeros([3,4]) e=tf.ones([3,2]) f=tf.fill([3,3],8) print(d) print(e) print(f) Print results: tf.Tensor( [[0. 0. 0. 0.] [0. 0. 0. 0.] [0. 0. 0. 0.]], shape=(3, 4), dtype=float32) tf.Tensor( [[1. 1.] [1. 1.] [1. 1.]], shape=(3, 2), dtype=float32) tf.Tensor( [[8 8 8] [8 8 8] [8 8 8]], shape=(3, 3), dtype=int32)

tf. random. Normal (dimension, mean = mean, stddev = standard deviation)

Generate random numbers that conform to normal distribution. The default mean is 0 and the standard deviation is 1

Tf. random. truncated_ Normal (dimension, mean = mean, stddev = standard deviation)

Generating random numbers with truncated normal distribution

Tf. random. Uniform (dimension, minval = minimum, maxval = maximum)

Generates a uniformly distributed random number with a specified maximum and minimum value

#Example 2-4: generate different types of random number tensor s g=tf.random.normal([3,2],mean=3,stddev=1) h=tf.random.truncated_normal([2,4],mean=5) i=tf.random.uniform([4,5],minval=8,maxval=20) print(g) print(h) print(i) #Print results tf.Tensor( [[2.711913 2.7192001] [2.9681098 3.2150037] [2.3997366 2.749307 ]], shape=(3, 2), dtype=float32) tf.Tensor( [[4.66647 5.542239 4.4181 4.7608485] [6.2916203 5.4339285 5.40028 6.618659 ]], shape=(2, 4), dtype=float32) tf.Tensor( [[ 9.444313 16.606283 8.992081 9.405373 18.322927 ] [18.356173 9.4511795 17.346773 8.193683 15.328684 ] [13.65999 8.159064 9.28767 11.537907 13.05283 ] [10.011724 10.25061 12.929413 11.793535 19.735085 ]], shape=(4, 5), dtype=float32)

2.2 common functions

2.2.1,tf.cast is used to force type conversion, that is, force tensor to convert to this data type

Usage: TF Cast (tensor name, dtype = data type)

2.2.2,tf.reduce_min is used to calculate the minimum value of the element on the tensor dimension

Usage: TF reduce_ Min (tensor name)

2.2.3,tf.reduce_max is used to calculate the maximum value of the element in the tensor dimension

Usage: TF reduce_ Max (tensor name)

2.2.4,tf.reduce_mean is used to calculate the average value of elements in the tensor dimension

Usage: TF reduce_ Mean (tensor name, axis = operation axis)

2.2.5,tf.reduce_sum is used to calculate the sum of elements in the tensor dimension

Usage: TF reduce_ Sum (tensor name, axis = operation axis)

#Example 2-5: some operations of tensor j=tf.constant([[1,2.34,3.76],[5.98,7.09,9.13]],dtype=tf.float64) print(j) j2=tf.cast(j,tf.int32) print(j2) j3=tf.reduce_min(j) print(j3) j4=tf.reduce_max(j) print(j4) j5=tf.reduce_mean(j2,axis=1) print(j5) j6=tf.reduce_sum(j2,axis=0) print(j6) #Print results tf.Tensor( [[1. 2.34 3.76] [5.98 7.09 9.13]], shape=(2, 3), dtype=float64) tf.Tensor( [[1 2 3] [5 7 9]], shape=(2, 3), dtype=int32) tf.Tensor(1.0, shape=(), dtype=float64) tf.Tensor(9.13, shape=(), dtype=float64) tf.Tensor([2 7], shape=(2,), dtype=int32) tf.Tensor([ 6 9 12], shape=(3,), dtype=int32)

2.2.6 variable function

Mark the variable as "trainable", and the marked variable will record the gradient information in the back propagation. This function is often used to mark the parameters to be trained in neural network training.

Usage: TF Variable (initial value)

#Example 2-6

import tensorflow as tf

w = tf.Variable(tf.constant(5, dtype=tf.float32))

epoch = 40

LR_BASE = 0.2 # Initial learning rate

LR_DECAY = 0.99 # Learning rate decay rate

LR_STEP = 1 # How many rounds of batch are fed_ After size, update the learning rate once

for epoch in range(epoch): # For epoch defines the top-level cycle, which means that the data set is cycled for epoch times. In this example, the data set has only one w. during initialization, constant is assigned as 5, and the cycle is iterated for 100 times.

lr = LR_BASE * LR_DECAY ** (epoch / LR_STEP)

with tf.GradientTape() as tape: # The calculation process of gradient from with structure to grads frame.

loss = tf.square(w + 1)

grads = tape.gradient(loss, w) # The. gradient function tells who takes the derivative from whom

w.assign_sub(lr * grads) # .assign_sub makes self subtraction of variables, i.e. w -= lr*grads, i.e. w = w - lr*grads

print("After %s epoch,w is %f,loss is %f,lr is %f" % (epoch, w.numpy(), loss, lr))

#Print results

After 0 epoch,w is 2.600000,loss is 36.000000,lr is 0.200000

After 1 epoch,w is 1.174400,loss is 12.959999,lr is 0.198000

After 2 epoch,w is 0.321948,loss is 4.728015,lr is 0.196020

After 3 epoch,w is -0.191126,loss is 1.747547,lr is 0.194060

After 4 epoch,w is -0.501926,loss is 0.654277,lr is 0.192119

After 5 epoch,w is -0.691392,loss is 0.248077,lr is 0.190198

After 6 epoch,w is -0.807611,loss is 0.095239,lr is 0.188296

After 7 epoch,w is -0.879339,loss is 0.037014,lr is 0.186413

After 8 epoch,w is -0.923874,loss is 0.014559,lr is 0.184549

After 9 epoch,w is -0.951691,loss is 0.005795,lr is 0.182703

After 10 epoch,w is -0.969167,loss is 0.002334,lr is 0.180876

After 11 epoch,w is -0.980209,loss is 0.000951,lr is 0.179068

After 12 epoch,w is -0.987226,loss is 0.000392,lr is 0.177277

After 13 epoch,w is -0.991710,loss is 0.000163,lr is 0.175504

After 14 epoch,w is -0.994591,loss is 0.000069,lr is 0.173749

After 15 epoch,w is -0.996452,loss is 0.000029,lr is 0.172012

After 16 epoch,w is -0.997660,loss is 0.000013,lr is 0.170292

After 17 epoch,w is -0.998449,loss is 0.000005,lr is 0.168589

After 18 epoch,w is -0.998967,loss is 0.000002,lr is 0.166903

After 19 epoch,w is -0.999308,loss is 0.000001,lr is 0.165234

After 20 epoch,w is -0.999535,loss is 0.000000,lr is 0.163581

After 21 epoch,w is -0.999685,loss is 0.000000,lr is 0.161946

After 22 epoch,w is -0.999786,loss is 0.000000,lr is 0.160326

After 23 epoch,w is -0.999854,loss is 0.000000,lr is 0.158723

After 24 epoch,w is -0.999900,loss is 0.000000,lr is 0.157136

After 25 epoch,w is -0.999931,loss is 0.000000,lr is 0.155564

After 26 epoch,w is -0.999952,loss is 0.000000,lr is 0.154009

After 27 epoch,w is -0.999967,loss is 0.000000,lr is 0.152469

After 28 epoch,w is -0.999977,loss is 0.000000,lr is 0.150944

After 29 epoch,w is -0.999984,loss is 0.000000,lr is 0.149434

After 30 epoch,w is -0.999989,loss is 0.000000,lr is 0.147940

After 31 epoch,w is -0.999992,loss is 0.000000,lr is 0.146461

After 32 epoch,w is -0.999994,loss is 0.000000,lr is 0.144996

After 33 epoch,w is -0.999996,loss is 0.000000,lr is 0.143546

After 34 epoch,w is -0.999997,loss is 0.000000,lr is 0.142111

After 35 epoch,w is -0.999998,loss is 0.000000,lr is 0.140690

After 36 epoch,w is -0.999999,loss is 0.000000,lr is 0.139283

After 37 epoch,w is -0.999999,loss is 0.000000,lr is 0.137890

After 38 epoch,w is -0.999999,loss is 0.000000,lr is 0.136511

After 39 epoch,w is -0.999999,loss is 0.000000,lr is 0.1351462.2.7 four operations of corresponding elements

Four operations: addition: TF Add, subtract: TF Subtract, multiplication: TF Multiply, Division: TF divide

Square: TF square,

Power: TF pow

Prescription: TF sqrt

Matrix multiplication: TF matmul

#Example 2-7

import tensorflow as tf

k1 = tf.ones([1, 3])

k2 = tf.fill([1, 3], 3.)

print("k1:", k1)

print("k2:", k2)

print("k1+k2:", tf.add(k1, k2))

print("k1-k2:", tf.subtract(k1, k2))

print("k1*k2:", tf.multiply(k1, k2))

print("k2/k1:", tf.divide(k2, k1))

k3 = tf.fill([1, 2], 3.)

print("k3:", k3)

print("k3 The third power of:", tf.pow(k3, 3))

print("k3 Square of:", tf.square(k3))

print("k3 Formula of:", tf.sqrt(k3))

k4 = tf.ones([3, 2])

k5 = tf.fill([2, 3], 3.)

print("k4:", k4)

print("k5:", k5)

print("k4*k5:", tf.matmul(k4, k5))

#Print results:

k1: tf.Tensor([[1. 1. 1.]], shape=(1, 3), dtype=float32)

k2: tf.Tensor([[3. 3. 3.]], shape=(1, 3), dtype=float32)

k1+k2: tf.Tensor([[4. 4. 4.]], shape=(1, 3), dtype=float32)

k1-k2: tf.Tensor([[-2. -2. -2.]], shape=(1, 3), dtype=float32)

k1*k2: tf.Tensor([[3. 3. 3.]], shape=(1, 3), dtype=float32)

k2/k1: tf.Tensor([[3. 3. 3.]], shape=(1, 3), dtype=float32)

k3: tf.Tensor([[3. 3.]], shape=(1, 2), dtype=float32)

k3 The third power of: tf.Tensor([[27. 27.]], shape=(1, 2), dtype=float32)

k3 Square of: tf.Tensor([[9. 9.]], shape=(1, 2), dtype=float32)

k3 Formula of: tf.Tensor([[1.7320508 1.7320508]], shape=(1, 2), dtype=float32)

k4: tf.Tensor(

[[1. 1.]

[1. 1.]

[1. 1.]], shape=(3, 2), dtype=float32)

k5: tf.Tensor(

[[3. 3. 3.]

[3. 3. 3.]], shape=(2, 3), dtype=float32)

k4*k5: tf.Tensor(

[[6. 6. 6.]

[6. 6. 6.]

[6. 6. 6.]], shape=(3, 3), dtype=float32)2.2.8,tf.data.Dataset.from_tensor_slices is used to segment the first dimension of the incoming tensor, generate input feature tag pairs, and construct data sets

#Example 2-8

data=tf.data.Dataset.from_tensor_slices((Input features, labels))

features=tf.constant([15, 28, 34, 19])

labels=tf.constant([0,1,1,0])

dataset=tf.data.Dataset.from_tensor_slices((features,labels))

print(dataset)

for element in dataset:

print(element)

#Print results

(<tf.Tensor: shape=(), dtype=int32, numpy=15>, <tf.Tensor: shape=(), dtype=int32, numpy=0>)

(<tf.Tensor: shape=(), dtype=int32, numpy=28>, <tf.Tensor: shape=(), dtype=int32, numpy=1>)

(<tf.Tensor: shape=(), dtype=int32, numpy=34>, <tf.Tensor: shape=(), dtype=int32, numpy=1>)

(<tf.Tensor: shape=(), dtype=int32, numpy=19>, <tf.Tensor: shape=(), dtype=int32, numpy=0>)

2.2.9,tf.GradientTape , find the gradient of the tensor

Usage:

with tf.GradientTape() as tape:

Calculation process

Grad=tape. Gradient (function, derivative to whom)

#Example 2-9: With tf.gradientTape() as tape: W=tf.Variable(tf.constant(3.0)) Loss=tf.pow(w,2) Grad=tape.gradient(loss,w) Print(grad) #Print results: tf.Tensor(6.0, shape=(), dtype=float32)

2.2.10 enumerate is a built-in function of python. It can traverse each element (such as list, tuple or string) and combine it into index elements, which are often used in the for loop.

Usage: enumerate (list name)

#Example 2-10: Seq=['one','two','three'] For I,element in enumerate(seq): Print(I,enumerate) #Print results 0 one 1 two 2 three

2.2.11 independent heat coding TF one_ Hot directly converts the data to be converted into one hot data output.

Usage: TF one_ Hot (data to be converted, depth = several categories)

#Example 2-11:

import tensorflow as tf

classes = 3

labels = tf.constant([1, 0, 2]) # The minimum and maximum element values entered are 0 and 2

output = tf.one_hot(labels, depth=classes)

print("result of labels1:", output)

print("\n")

#Print results

result of labels1: tf.Tensor(

[[0. 1. 0.]

[1. 0. 0.]

[0. 0. 1.]], shape=(3, 3), dtype=float32)2.2.12,tf.nn.softmax makes the output conform to the probability distribution

Usage: TF nn. softmax(x)

#Example 2-12:

import tensorflow as tf

x1 = tf.constant([[5.8, 4.0, 1.2, 0.2]]) # 5.8,4.0,1.2,0.2(0)

w1 = tf.constant([[-0.8, -0.34, -1.4],

[0.6, 1.3, 0.25],

[0.5, 1.45, 0.9],

[0.65, 0.7, -1.2]])

b1 = tf.constant([2.52, -3.1, 5.62])

y = tf.matmul(x1, w1) + b1

print("x1.shape:", x1.shape)

print("w1.shape:", w1.shape)

print("b1.shape:", b1.shape)

print("y.shape:", y.shape)

print("y:", y)

#####The following code will output the result y Convert to probability value#####

y_dim = tf.squeeze(y) # Remove y mid latitude 1 (observe the comparison of y_dim and Y effect)

y_pro = tf.nn.softmax(y_dim) # Make y_dim conforms to the probability distribution and the output is the probability value

print("y_dim:", y_dim)

print("y_pro:", y_pro)

#Please observe the printed shape

x1.shape: (1, 4)

w1.shape: (4, 3)

b1.shape: (3,)

y.shape: (1, 3)

y: tf.Tensor([[ 1.0099998 2.008 -0.65999985]], shape=(1, 3), dtype=float32)

y_dim: tf.Tensor([ 1.0099998 2.008 -0.65999985], shape=(3,), dtype=float32)

y_pro: tf.Tensor([0.2563381 0.69540703 0.04825491], shape=(3,), dtype=float32)2.2.13, assign_sub assignment operation, update the value of the parameter and return. Call assign_ Before sub, use TF Variable defines the variable w as trainable (self changing).

Usage: w.assign_sub(w content to be subtracted)

#Example 2-13:

import tensorflow as tf

x = tf.Variable(4)

x.assign_sub(1)

print("x:", x) # 4-1=3

#Print results

x: <tf.Variable 'Variable:0' shape=() dtype=int32, numpy=3>2.2.14,tf.argmax returns the index of the maximum value of the tensor along the specified dimension

Usage: TF Argmax (tensor name, axis = operation axis)

#Example 2-14:

import numpy as np

import tensorflow as tf

test = np.array([[1, 2, 3], [2, 3, 4], [5, 4, 3], [8, 7, 2]])

print("test:\n", test)

print("Index of the maximum value of each column:", tf.argmax(test, axis=0)) # Returns the index of the maximum value of each column

print("The index of the maximum value of each row", tf.argmax(test, axis=1)) # Returns the index of the maximum value of each row

#Print results

test:

[[1 2 3]

[2 3 4]

[5 4 3]

[8 7 2]]

Index of the maximum value of each column: tf.Tensor([3 3 1], shape=(3,), dtype=int64)

The index of the maximum value of each row tf.Tensor([2 2 0 0], shape=(4,), dtype=int64)2.3 activation function

If the activation function is not used, the output of each layer is a linear function of the input of the upper layer. No matter how many layers the neural network has, the output is a linear combination of inputs. This is the most primitive perceptron.

The activation function introduces nonlinear factors into neurons, so that the neural network can approach any nonlinear function arbitrarily, so that the neural network can be applied to many nonlinear models.

The so-called Activation Function is a function running on the neurons of the artificial neural network, which is responsible for mapping the inputs of the neurons to the outputs.

Excellent activation function:

1) Nonlinearity: when the activation function is nonlinear, the multilayer neural network can approach all functions

2) Differentiability: most optimizers update parameters with gradient descent

3) Monotonicity: when the activation function is monotone, it can ensure that the loss function of single-layer network is convex and easy to converge

4) Approximate identity: when the parameters are initialized to small random values, the neural network is more stable

Range of the output value of the active function:

1) When the output of the activation function is finite, the gradient based optimization method is more stable

2) When the output of the activation function is infinite, the initial value of the parameter has a great impact on the model. It is suggested to reduce the learning rate

There are three main activation functions in tensorflow:

Sigmoid function, Tanh function, ReLU function

Suggestions for beginners:

1) Preferred relu function

2) The learning rate is set to a smaller value

3) Standardization of input features, that is, let the input features meet the normal distribution with 0 as the mean and 1 as the standard deviation

4) Centralization of initial parameters, that is, let the randomly generated parameters meet the normal distribution with 0 as the mean and standard deviation

2.4 loss function

The loss function (loss) is the predicted value (y) and the known answer (y_) Gap between

2.4.1 mean square error MSE: loss_mse=tf.reduce_mean(tf.square(y_-y))

#Example 2-15

import tensorflow as tf

import numpy as np

SEED = 23455

rdm = np.random.RandomState(seed=SEED) # Generate random numbers between [0,1]

x = rdm.rand(32, 2)

y_ = [[x1 + x2 + (rdm.rand() / 10.0 - 0.05)] for (x1, x2) in x] # Generated noise [0,1) / 10 = [0,0.1); [0,0.1) - 0.05 = [- 0.05,0.05)

x = tf.cast(x, dtype=tf.float32)

w1 = tf.Variable(tf.random.normal([2, 1], stddev=1, seed=1))

epoch = 15000

lr = 0.002

for epoch in range(epoch):

with tf.GradientTape() as tape:

y = tf.matmul(x, w1)

loss_mse = tf.reduce_mean(tf.square(y_ - y))

grads = tape.gradient(loss_mse, w1)

w1.assign_sub(lr * grads)

if epoch % 500 == 0:

print("After %d training steps,w1 is " % (epoch))

print(w1.numpy(), "\n")

print("Final w1 is: ", w1.numpy())

The calculation results are as follows

After 0 training steps,w1 is [[-0.8096241] [ 1.4855157]] After 500 training steps,w1 is [[-0.21934733] [ 1.6984866 ]] After 1000 training steps,w1 is [[0.0893971] [1.673225 ]] After 1500 training steps,w1 is [[0.28368822] [1.5853055 ]] After 2000 training steps,w1 is [[0.423243 ] [1.4906037]] After 2500 training steps,w1 is [[0.531055 ] [1.4053345]] After 3000 training steps,w1 is [[0.61725086] [1.332841 ]] After 3500 training steps,w1 is [[0.687201 ] [1.2725208]] After 4000 training steps,w1 is [[0.7443262] [1.2227542]] After 4500 training steps,w1 is [[0.7910986] [1.1818361]] After 5000 training steps,w1 is [[0.82943517] [1.1482395 ]] After 5500 training steps,w1 is [[0.860872 ] [1.1206709]] After 6000 training steps,w1 is [[0.88665503] [1.098054 ]] After 6500 training steps,w1 is [[0.90780276] [1.0795006 ]] After 7000 training steps,w1 is [[0.92514884] [1.0642821 ]] After 7500 training steps,w1 is [[0.93937725] [1.0517985 ]] After 8000 training steps,w1 is [[0.951048] [1.041559]] After 8500 training steps,w1 is [[0.96062106] [1.0331597 ]] After 9000 training steps,w1 is [[0.9684733] [1.0262702]] After 9500 training steps,w1 is [[0.97491425] [1.0206193 ]] After 10000 training steps,w1 is [[0.9801975] [1.0159837]] After 10500 training steps,w1 is [[0.9845312] [1.0121814]] After 11000 training steps,w1 is [[0.9880858] [1.0090628]] After 11500 training steps,w1 is [[0.99100184] [1.0065047 ]] After 12000 training steps,w1 is [[0.9933934] [1.0044063]] After 12500 training steps,w1 is [[0.9953551] [1.0026854]] After 13000 training steps,w1 is [[0.99696386] [1.0012728 ]] After 13500 training steps,w1 is [[0.9982835] [1.0001147]] After 14000 training steps,w1 is [[0.9993659] [0.999166 ]] After 14500 training steps,w1 is [[1.0002553 ] [0.99838644]] Final w1 is: [[1.0009792] [0.9977485]] PS C:\code\workspace>

2.4.2 user defined loss function

If you predict the sales volume of goods, if you predict too much, you will lose costs and if you predict less, you will lose profits.

If profit= Cost, then the loss generated by mse cannot maximize the benefits

#Example 2-16 custom loss function

import tensorflow as tf

import numpy as np

# Custom loss function

# The cost of yogurt is 1 yuan and the profit of yogurt is 99 yuan

# The cost is very low and the profit is very high. People want to make more predictions. The coefficient of the generated model is greater than 1, so they make more predictions

SEED = 8

COST = 1

PROFIT = 99

rdm = np.random.RandomState(SEED)

x = rdm.rand(32, 2)

y_ = [[x1 + x2 + (rdm.rand() / 10.0 - 0.05)] for (x1, x2) in x] # Generated noise [0,1) / 10 = [0,0.1); [0,0.1) - 0.05 = [- 0.05,0.05)

x = tf.cast(x, dtype=tf.float32)

w1 = tf.Variable(tf.random.normal([2, 1], stddev=1, seed=1))

epoch = 10000

lr = 0.002

for epoch in range(epoch):

with tf.GradientTape() as tape:

y = tf.matmul(x, w1)

loss = tf.reduce_sum(tf.where(tf.greater(y, y_), (y - y_) * COST, (y_ - y) * PROFIT))

grads = tape.gradient(loss, w1)

w1.assign_sub(lr * grads)

if epoch % 500 == 0:

print("After %d training steps,w1 is " % (epoch))

print(w1.numpy(), "\n")

print("Final w1 is: ", w1.numpy())

2.4.3 cross entropy loss function

#Example 2-17 cross entropy loss function

import tensorflow as tf

# Cross entropy loss function

loss_ce1 = tf.losses.categorical_crossentropy([1, 0], [0.6, 0.4])

loss_ce2 = tf.losses.categorical_crossentropy([1, 0], [0.8, 0.2])

print("loss_ce1:", loss_ce1)

print("loss_ce2:", loss_ce2)

The calculation results are as follows:

loss_ce1: tf.Tensor(0.5108256, shape=(), dtype=float32) loss_ce2: tf.Tensor(0.22314353, shape=(), dtype=float32) PS C:\code\workspace>

Combination of softmax and cross entropy

The output passes through the softmax function before calculating y_ Cross entropy loss function with y

tf.nn.softmax_cross_entropy_with_logits(y_,y)

#Example 2-18 combination of softmax and cross entropy loss function

import tensorflow as tf

import numpy as np

y_ = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 0, 0], [0, 1, 0]])

y = np.array([[12, 3, 2], [3, 10, 1], [1, 2, 5], [4, 6.5, 1.2], [3, 6, 1]])

y_pro = tf.nn.softmax(y)

loss_ce1 = tf.losses.categorical_crossentropy(y_,y_pro)

loss_ce2 = tf.nn.softmax_cross_entropy_with_logits(y_, y)

print('Results of step-by-step calculation:\n', loss_ce1)

print('Combined with the results of calculation:\n', loss_ce2)

# The output results are the sameThe calculation results are as follows:

Results of step-by-step calculation: tf.Tensor( [1.68795487e-04 1.03475622e-03 6.58839038e-02 2.58349207e+00 5.49852354e-02], shape=(5,), dtype=float64) Combined with the results of calculation: tf.Tensor( [1.68795487e-04 1.03475622e-03 6.58839038e-02 2.58349207e+00 5.49852354e-02], shape=(5,), dtype=float64) PS C:\code\workspace>

2.5 under fitting and over fitting

Under fitting is that the model can not fit the data set effectively, which is not thorough enough to learn the existing data set

It is difficult to make a correct judgment on the training data set, but it is too difficult to fit the training data set.

Solution to under fitting:

Add input feature item

Add network parameters

Reduce regularization parameters

Solution to over fitting:

Data cleaning

Increase training set

Using regularization

Increase regularization parameters

Regularization alleviates overfitting

Regularization is to introduce the model complexity index into the loss function and use the weighted value of w to weaken the noise of training data (general irregular b)

L1 regularization probability will make many parameters become 0, so this method can reduce the complexity by sparse parameters, that is, reducing the number of parameters

L2 regularization will make the parameter close to 0 but not 0, so this method can reduce the complexity by reducing the size of the parameter value.

#Example 2-19

# Import required modules

import tensorflow as tf

from matplotlib import pyplot as plt

import numpy as np

import pandas as pd

# Read in data / label generation x_train y_train

df = pd.read_csv('dot.csv')

x_data = np.array(df[['x1', 'x2']])

y_data = np.array(df['y_c'])

x_train = x_data

y_train = y_data.reshape(-1, 1)

Y_c = [['red' if y else 'blue'] for y in y_train]

# Convert the data type of x, otherwise an error will be reported due to the data type problem when multiplying the following matrix

x_train = tf.cast(x_train, tf.float32)

y_train = tf.cast(y_train, tf.float32)

# from_tensor_slices function splits the first dimension of the incoming tensor and generates the corresponding data set, so that the input characteristics and label values correspond one by one

train_db = tf.data.Dataset.from_tensor_slices((x_train, y_train)).batch(32)

# The parameters of the neural network are generated. The input layer is 4 neurons, the hidden layer is 32 neurons, the hidden layer is 2 layers, and the output layer is 3 neurons

# Use TF Variable() ensures that the parameter can be trained

w1 = tf.Variable(tf.random.normal([2, 11]), dtype=tf.float32)

b1 = tf.Variable(tf.constant(0.01, shape=[11]))

w2 = tf.Variable(tf.random.normal([11, 1]), dtype=tf.float32)

b2 = tf.Variable(tf.constant(0.01, shape=[1]))

lr = 0.005 # The learning rate is

epoch = 800 # Number of cycles

# Training part

for epoch in range(epoch):

for step, (x_train, y_train) in enumerate(train_db):

with tf.GradientTape() as tape: # Record gradient information

h1 = tf.matmul(x_train, w1) + b1 # Recording neural network multiplication and addition operation

h1 = tf.nn.relu(h1)

y = tf.matmul(h1, w2) + b2

# Use the mean square error loss function mse = mean(sum(y-out)^2)

loss_mse = tf.reduce_mean(tf.square(y_train - y))

# Add l2 regularization

loss_regularization = []

# tf.nn.l2_loss(w)=sum(w ** 2) / 2

loss_regularization.append(tf.nn.l2_loss(w1))

loss_regularization.append(tf.nn.l2_loss(w2))

# Sum

# Example: x = TF constant(([1,1,1],[1,1,1]))

# tf.reduce_sum(x)

# >>>6

loss_regularization = tf.reduce_sum(loss_regularization)

loss = loss_mse + 0.03 * loss_regularization # REGULARIZER = 0.03

# Calculate the gradient of loss to each parameter

variables = [w1, b1, w2, b2]

grads = tape.gradient(loss, variables)

# Realize gradient update

# w1 = w1 - lr * w1_grad

w1.assign_sub(lr * grads[0])

b1.assign_sub(lr * grads[1])

w2.assign_sub(lr * grads[2])

b2.assign_sub(lr * grads[3])

# Print loss information every 200 epoch s

if epoch % 20 == 0:

print('epoch:', epoch, 'loss:', float(loss))

# Prediction part

print("*******predict*******")

# xx in steps of 0.01 between - 3 and 3 and yy in steps of 0.01 between - 3 and 3 to generate interval value points

xx, yy = np.mgrid[-3:3:.1, -3:3:.1]

# Straighten XX and YY and combine them into two-dimensional tensors to generate two-dimensional coordinate points

grid = np.c_[xx.ravel(), yy.ravel()]

grid = tf.cast(grid, tf.float32)

# The grid coordinate points are fed into the neural network for prediction, and probs is the output

probs = []

for x_predict in grid:

# Use the trained parameters for prediction

h1 = tf.matmul([x_predict], w1) + b1

h1 = tf.nn.relu(h1)

y = tf.matmul(h1, w2) + b2 # y is the prediction result

probs.append(y)

# Take column 0 as x1 and column 1 as x2

x1 = x_data[:, 0]

x2 = x_data[:, 1]

# Adjust the shape of probs to xx

probs = np.array(probs).reshape(xx.shape)

plt.scatter(x1, x2, color=np.squeeze(Y_c))

# Put the coordinate xx yy and the corresponding value probs into the contour function, and color all points with probs value of 0.5 PLT After show(), the dividing line of red and blue dots is displayed

plt.contour(xx, yy, probs, levels=[.5])

plt.show()

# Read in the red and blue dots and draw the segmentation line, including regularization

# For unclear data, it is recommended to print it out and check it

2.6 optimizer

Optimizer is a tool to guide neural network to update parameters

Five commonly used neural network optimizers

2.6.1 SGD, commonly used gradient descent method

#Example 2-20 use iris data set to realize forward propagation and back propagation and visualize loss curve

# Import required modules

import tensorflow as tf

from sklearn import datasets

from matplotlib import pyplot as plt

import numpy as np

import time ##1##

# Import data, including input features and labels

x_data = datasets.load_iris().data

y_data = datasets.load_iris().target

# Randomly scramble the data (because the original data is in order, the accuracy will be affected if the order is not scrambled)

# Seed: random number seed, which is an integer. After setting, the random number generated each time is the same (for the convenience of teaching, to ensure the consistency of each student's results)

np.random.seed(116) # Use the same seed to ensure that the input features and labels correspond one by one

np.random.shuffle(x_data)

np.random.seed(116)

np.random.shuffle(y_data)

tf.random.set_seed(116)

# The disrupted data set is divided into training set and test set. The training set is the first 120 rows and the test set is the last 30 rows

x_train = x_data[:-30]

y_train = y_data[:-30]

x_test = x_data[-30:]

y_test = y_data[-30:]

# Convert the data type of x, otherwise an error will be reported due to inconsistent data types when multiplying the following matrices

x_train = tf.cast(x_train, tf.float32)

x_test = tf.cast(x_test, tf.float32)

# from_ tensor_ The slices function maps the input feature to the tag value one by one. (divide the data set into batches and batch group data for each batch)

train_db = tf.data.Dataset.from_tensor_slices((x_train, y_train)).batch(32)

test_db = tf.data.Dataset.from_tensor_slices((x_test, y_test)).batch(32)

# The parameters of the neural network are generated, and there are 4 input characteristics. Therefore, the input layer is 4 input nodes; Because of 3 classification, the output layer is 3 neurons

# Use TF The variable () tag parameter can be trained

# Use seed to make the random number generated each time the same (convenient for teaching, so that everyone's results are consistent. Don't write seed in real use)

w1 = tf.Variable(tf.random.truncated_normal([4, 3], stddev=0.1, seed=1))

b1 = tf.Variable(tf.random.truncated_normal([3], stddev=0.1, seed=1))

lr = 0.1 # The learning rate is 0.1

train_loss_results = [] # Record the loss of each round in this list to provide data for subsequent drawing of loss curve

test_acc = [] # Record the acc of each round in this list to provide data for subsequent drawing of acc curve

epoch = 500 # 500 cycles

loss_all = 0 # Each round is divided into 4 steps, loss_all records the sum of four losses generated by four steps

# Training part

now_time = time.time() ##2##

for epoch in range(epoch): # Data set level loops, one data set per epoch

for step, (x_train, y_train) in enumerate(train_db): # Batch level loops, one batch per step loop

with tf.GradientTape() as tape: # with structure records gradient information

y = tf.matmul(x_train, w1) + b1 # Neural network multiplication and addition operation

y = tf.nn.softmax(y) # Make the output y conform to the probability distribution (after this operation, it is the same order of magnitude as the single hot code, and the loss can be calculated by subtraction)

y_ = tf.one_hot(y_train, depth=3) # Convert the tag value to the unique hot code format to facilitate the calculation of loss and accuracy

loss = tf.reduce_mean(tf.square(y_ - y)) # Use the mean square error loss function mse = mean(sum(y-out)^2)

loss_all += loss.numpy() # Accumulate the loss calculated by each step to provide data for the subsequent average of loss, so that the calculated loss is more accurate

# Calculate the gradient of loss to each parameter

grads = tape.gradient(loss, [w1, b1])

# Implement gradient update w1 = w1 - lr * w1_grad b = b - lr * b_grad

w1.assign_sub(lr * grads[0]) # Parameter w1 self updating

b1.assign_sub(lr * grads[1]) # Parameter b self updating

# Print loss information for each epoch

print("Epoch {}, loss: {}".format(epoch, loss_all / 4))

train_loss_results.append(loss_all / 4) # Average the loss of four step s and record it in this variable

loss_all = 0 # loss_all returns to zero to prepare for recording the loss of the next epoch

# Test part

# total_correct is the number of samples of the prediction pair, total_number is the total number of samples tested, and both variables are initialized to 0

total_correct, total_number = 0, 0

for x_test, y_test in test_db:

# Use the updated parameters for prediction

y = tf.matmul(x_test, w1) + b1

y = tf.nn.softmax(y)

pred = tf.argmax(y, axis=1) # The maximum value returned in the predicted index of y

# Convert pred to y_ Data type of test

pred = tf.cast(pred, dtype=y_test.dtype)

# If the classification is correct, correct=1, otherwise it is 0, and the result of bool type is converted to int type

correct = tf.cast(tf.equal(pred, y_test), dtype=tf.int32)

# Add up the correct number of each batch

correct = tf.reduce_sum(correct)

# Add up the number of correct in all batch es

total_correct += int(correct)

# total_number is the total number of samples tested, that is, X_ Number of rows of test, and shape[0] returns the number of rows of the variable

total_number += x_test.shape[0]

# The total accuracy is equal to total_correct/total_number

acc = total_correct / total_number

test_acc.append(acc)

print("Test_acc:", acc)

print("--------------------------")

total_time = time.time() - now_time ##3##

print("total_time", total_time) ##4##

# Draw loss curve

plt.title('Loss Function Curve') # Picture title

plt.xlabel('Epoch') # x-axis variable name

plt.ylabel('Loss') # y-axis variable name

plt.plot(train_loss_results, label="$Loss$") # Draw trian point by point_ Loss_ The results value and connect, and the connection icon is Loss

plt.legend() # Draw a curve Icon

plt.show() # Draw an image

# Draw Accuracy curve

plt.title('Acc Curve') # Picture title

plt.xlabel('Epoch') # x-axis variable name

plt.ylabel('Acc') # y-axis variable name

plt.plot(test_acc, label="$Accuracy$") # Draw the test point by point_ ACC value and connect. The connection icon is Accuracy

plt.legend()

plt.show()

# This document is relatively simple class1\p45_iris.py For adding four time records only ##n## identification

# Please add loss curve, ACC curve and total_time record to class2 \ optimizer comparison docx compares the convergence of each optimizer

2.6.2 SGDM (SGD with momentum) increases the first-order momentum on the basis of SGD

#Example 2-21 use iris data set to realize forward propagation and back propagation and visualize loss curve

# Import required modules

import tensorflow as tf

from sklearn import datasets

from matplotlib import pyplot as plt

import numpy as np

import time ##1##

# Import data, including input features and labels

x_data = datasets.load_iris().data

y_data = datasets.load_iris().target

# Randomly scramble the data (because the original data is in order, the accuracy will be affected if the order is not scrambled)

# Seed: random number seed, which is an integer. After setting, the random number generated each time is the same (for the convenience of teaching, to ensure the consistency of each student's results)

np.random.seed(116) # Use the same seed to ensure that the input features and labels correspond one by one

np.random.shuffle(x_data)

np.random.seed(116)

np.random.shuffle(y_data)

tf.random.set_seed(116)

# The disrupted data set is divided into training set and test set. The training set is the first 120 rows and the test set is the last 30 rows

x_train = x_data[:-30]

y_train = y_data[:-30]

x_test = x_data[-30:]

y_test = y_data[-30:]

# Convert the data type of x, otherwise an error will be reported due to inconsistent data types when multiplying the following matrices

x_train = tf.cast(x_train, tf.float32)

x_test = tf.cast(x_test, tf.float32)

# from_ tensor_ The slices function maps the input feature to the tag value one by one. (divide the data set into batches and batch group data for each batch)

train_db = tf.data.Dataset.from_tensor_slices((x_train, y_train)).batch(32)

test_db = tf.data.Dataset.from_tensor_slices((x_test, y_test)).batch(32)

# The parameters of the neural network are generated, and there are 4 input characteristics. Therefore, the input layer is 4 input nodes; Because of 3 classification, the output layer is 3 neurons

# Use TF The variable () tag parameter can be trained

# Use seed to make the random number generated each time the same (convenient for teaching, so that everyone's results are consistent. Don't write seed in real use)

w1 = tf.Variable(tf.random.truncated_normal([4, 3], stddev=0.1, seed=1))

b1 = tf.Variable(tf.random.truncated_normal([3], stddev=0.1, seed=1))

lr = 0.1 # The learning rate is 0.1

train_loss_results = [] # Record the loss of each round in this list to provide data for subsequent drawing of loss curve

test_acc = [] # Record the acc of each round in this list to provide data for subsequent drawing of acc curve

epoch = 500 # 500 cycles

loss_all = 0 # Each round is divided into 4 steps, loss_all records the sum of four losses generated by four steps

##########################################################################

m_w, m_b = 0, 0

beta = 0.9

##########################################################################

# Training part

now_time = time.time() ##2##

for epoch in range(epoch): # Data set level loops, one data set per epoch

for step, (x_train, y_train) in enumerate(train_db): # Batch level loops, one batch per step loop

with tf.GradientTape() as tape: # with structure records gradient information

y = tf.matmul(x_train, w1) + b1 # Neural network multiplication and addition operation

y = tf.nn.softmax(y) # Make the output y conform to the probability distribution (after this operation, it is the same order of magnitude as the single hot code, and the loss can be calculated by subtraction)

y_ = tf.one_hot(y_train, depth=3) # Convert the tag value to the unique hot code format to facilitate the calculation of loss and accuracy

loss = tf.reduce_mean(tf.square(y_ - y)) # Use the mean square error loss function mse = mean(sum(y-out)^2)

loss_all += loss.numpy() # Accumulate the loss calculated by each step to provide data for the subsequent average of loss, so that the calculated loss is more accurate

# Calculate the gradient of loss to each parameter

grads = tape.gradient(loss, [w1, b1])

##########################################################################

# sgd-momentun

m_w = beta * m_w + (1 - beta) * grads[0]

m_b = beta * m_b + (1 - beta) * grads[1]

w1.assign_sub(lr * m_w)

b1.assign_sub(lr * m_b)

##########################################################################

# Print loss information for each epoch

print("Epoch {}, loss: {}".format(epoch, loss_all / 4))

train_loss_results.append(loss_all / 4) # Average the loss of four step s and record it in this variable

loss_all = 0 # loss_all returns to zero to prepare for recording the loss of the next epoch

# Test part

# total_correct is the number of samples of the prediction pair, total_number is the total number of samples tested, and both variables are initialized to 0

total_correct, total_number = 0, 0

for x_test, y_test in test_db:

# Use the updated parameters for prediction

y = tf.matmul(x_test, w1) + b1

y = tf.nn.softmax(y)

pred = tf.argmax(y, axis=1) # The maximum value returned in the predicted index of y

# Convert pred to y_ Data type of test

pred = tf.cast(pred, dtype=y_test.dtype)

# If the classification is correct, correct=1, otherwise it is 0, and the result of bool type is converted to int type

correct = tf.cast(tf.equal(pred, y_test), dtype=tf.int32)

# Add up the correct number of each batch

correct = tf.reduce_sum(correct)

# Add up the number of correct in all batch es

total_correct += int(correct)

# total_number is the total number of samples tested, that is, X_ Number of rows of test, and shape[0] returns the number of rows of the variable

total_number += x_test.shape[0]

# The total accuracy is equal to total_correct/total_number

acc = total_correct / total_number

test_acc.append(acc)

print("Test_acc:", acc)

print("--------------------------")

total_time = time.time() - now_time ##3##

print("total_time", total_time) ##4##

# Draw loss curve

plt.title('Loss Function Curve') # Picture title

plt.xlabel('Epoch') # x-axis variable name

plt.ylabel('Loss') # y-axis variable name

plt.plot(train_loss_results, label="$Loss$") # Draw trian point by point_ Loss_ The results value and connect, and the connection icon is Loss

plt.legend() # Draw a curve Icon

plt.show() # Draw an image

# Draw Accuracy curve

plt.title('Acc Curve') # Picture title

plt.xlabel('Epoch') # x-axis variable name

plt.ylabel('Acc') # y-axis variable name

plt.plot(test_acc, label="$Accuracy$") # Draw the test point by point_ ACC value and connect. The connection icon is Accuracy

plt.legend()

plt.show()

# Please add loss curve, ACC curve and total_time record to class2 \ optimizer comparison docx compares the convergence of each optimizer

2.6.3 Adagrad, add second-order momentum on the basis of SGD

#Example 2-22 use iris data set to realize forward propagation and back propagation and visualize loss curve

# Import required modules

import tensorflow as tf

from sklearn import datasets

from matplotlib import pyplot as plt

import numpy as np

import time ##1##

# Import data, including input features and labels

x_data = datasets.load_iris().data

y_data = datasets.load_iris().target

# Randomly scramble the data (because the original data is in order, the accuracy will be affected if the order is not scrambled)

# Seed: random number seed, which is an integer. After setting, the random number generated each time is the same (for the convenience of teaching, to ensure the consistency of each student's results)

np.random.seed(116) # Use the same seed to ensure that the input features and labels correspond one by one

np.random.shuffle(x_data)

np.random.seed(116)

np.random.shuffle(y_data)

tf.random.set_seed(116)

# The disrupted data set is divided into training set and test set. The training set is the first 120 rows and the test set is the last 30 rows

x_train = x_data[:-30]

y_train = y_data[:-30]

x_test = x_data[-30:]

y_test = y_data[-30:]

# Convert the data type of x, otherwise an error will be reported due to inconsistent data types when multiplying the following matrices

x_train = tf.cast(x_train, tf.float32)

x_test = tf.cast(x_test, tf.float32)

# from_ tensor_ The slices function maps the input feature to the tag value one by one. (divide the data set into batches and batch group data for each batch)

train_db = tf.data.Dataset.from_tensor_slices((x_train, y_train)).batch(32)

test_db = tf.data.Dataset.from_tensor_slices((x_test, y_test)).batch(32)

# The parameters of the neural network are generated, and there are 4 input characteristics. Therefore, the input layer is 4 input nodes; Because of 3 classification, the output layer is 3 neurons

# Use TF The variable () tag parameter can be trained

# Use seed to make the random number generated each time the same (convenient for teaching, so that everyone's results are consistent. Don't write seed in real use)

w1 = tf.Variable(tf.random.truncated_normal([4, 3], stddev=0.1, seed=1))

b1 = tf.Variable(tf.random.truncated_normal([3], stddev=0.1, seed=1))

lr = 0.1 # The learning rate is 0.1

train_loss_results = [] # Record the loss of each round in this list to provide data for subsequent drawing of loss curve

test_acc = [] # Record the acc of each round in this list to provide data for subsequent drawing of acc curve

epoch = 500 # 500 cycles

loss_all = 0 # Each round is divided into 4 steps, loss_all records the sum of four losses generated by four steps

##########################################################################

v_w, v_b = 0, 0

##########################################################################

# Training part

now_time = time.time() ##2##

for epoch in range(epoch): # Data set level loops, one data set per epoch

for step, (x_train, y_train) in enumerate(train_db): # Batch level loops, one batch per step loop

with tf.GradientTape() as tape: # with structure records gradient information

y = tf.matmul(x_train, w1) + b1 # Neural network multiplication and addition operation

y = tf.nn.softmax(y) # Make the output y conform to the probability distribution (after this operation, it is the same order of magnitude as the single hot code, and the loss can be calculated by subtraction)

y_ = tf.one_hot(y_train, depth=3) # Convert the tag value to the unique hot code format to facilitate the calculation of loss and accuracy

loss = tf.reduce_mean(tf.square(y_ - y)) # Use the mean square error loss function mse = mean(sum(y-out)^2)

loss_all += loss.numpy() # Accumulate the loss calculated by each step to provide data for the subsequent average of loss, so that the calculated loss is more accurate

# Calculate the gradient of loss to each parameter

grads = tape.gradient(loss, [w1, b1])

##########################################################################

# adagrad

v_w += tf.square(grads[0])

v_b += tf.square(grads[1])

w1.assign_sub(lr * grads[0] / tf.sqrt(v_w))

b1.assign_sub(lr * grads[1] / tf.sqrt(v_b))

##########################################################################

# Print loss information for each epoch

print("Epoch {}, loss: {}".format(epoch, loss_all / 4))

train_loss_results.append(loss_all / 4) # Average the loss of four step s and record it in this variable

loss_all = 0 # loss_all returns to zero to prepare for recording the loss of the next epoch

# Test part

# total_correct is the number of samples of the prediction pair, total_number is the total number of samples tested, and both variables are initialized to 0

total_correct, total_number = 0, 0

for x_test, y_test in test_db:

# Use the updated parameters for prediction

y = tf.matmul(x_test, w1) + b1

y = tf.nn.softmax(y)

pred = tf.argmax(y, axis=1) # The maximum value returned in the predicted index of y

# Convert pred to y_ Data type of test

pred = tf.cast(pred, dtype=y_test.dtype)

# If the classification is correct, correct=1, otherwise it is 0, and the result of bool type is converted to int type

correct = tf.cast(tf.equal(pred, y_test), dtype=tf.int32)

# Add up the correct number of each batch

correct = tf.reduce_sum(correct)

# Add up the number of correct in all batch es

total_correct += int(correct)

# total_number is the total number of samples tested, that is, X_ Number of rows of test, and shape[0] returns the number of rows of the variable

total_number += x_test.shape[0]

# The total accuracy is equal to total_correct/total_number

acc = total_correct / total_number

test_acc.append(acc)

print("Test_acc:", acc)

print("--------------------------")

total_time = time.time() - now_time ##3##

print("total_time", total_time) ##4##

# Draw loss curve

plt.title('Loss Function Curve') # Picture title

plt.xlabel('Epoch') # x-axis variable name

plt.ylabel('Loss') # y-axis variable name

plt.plot(train_loss_results, label="$Loss$") # Draw trian point by point_ Loss_ The results value and connect, and the connection icon is Loss

plt.legend() # Draw a curve Icon

plt.show() # Draw an image

# Draw Accuracy curve

plt.title('Acc Curve') # Picture title

plt.xlabel('Epoch') # x-axis variable name

plt.ylabel('Acc') # y-axis variable name

plt.plot(test_acc, label="$Accuracy$") # Draw the test point by point_ ACC value and connect. The connection icon is Accuracy

plt.legend()

plt.show()

# Please add loss curve, ACC curve and total_time record to class2 \ optimizer comparison docx compares the convergence of each optimizer

2.6.4. Add second-order momentum based on RMSProp and SGD

#Example 2-23 use iris data set to realize forward propagation and back propagation and visualize loss curve

# Import required modules

import tensorflow as tf

from sklearn import datasets

from matplotlib import pyplot as plt

import numpy as np

import time ##1##

# Import data, including input features and labels

x_data = datasets.load_iris().data

y_data = datasets.load_iris().target

# Randomly scramble the data (because the original data is in order, the accuracy will be affected if the order is not scrambled)

# Seed: random number seed, which is an integer. After setting, the random number generated each time is the same (for the convenience of teaching, to ensure the consistency of each student's results)

np.random.seed(116) # Use the same seed to ensure that the input features and labels correspond one by one

np.random.shuffle(x_data)

np.random.seed(116)

np.random.shuffle(y_data)

tf.random.set_seed(116)

# The disrupted data set is divided into training set and test set. The training set is the first 120 rows and the test set is the last 30 rows

x_train = x_data[:-30]

y_train = y_data[:-30]

x_test = x_data[-30:]

y_test = y_data[-30:]

# Convert the data type of x, otherwise an error will be reported due to inconsistent data types when multiplying the following matrices

x_train = tf.cast(x_train, tf.float32)

x_test = tf.cast(x_test, tf.float32)

# from_ tensor_ The slices function maps the input feature to the tag value one by one. (divide the data set into batches and batch group data for each batch)

train_db = tf.data.Dataset.from_tensor_slices((x_train, y_train)).batch(32)

test_db = tf.data.Dataset.from_tensor_slices((x_test, y_test)).batch(32)

# The parameters of the neural network are generated, and there are 4 input characteristics. Therefore, the input layer is 4 input nodes; Because of 3 classification, the output layer is 3 neurons

# Use TF The variable () tag parameter can be trained

# Use seed to make the random number generated each time the same (convenient for teaching, so that everyone's results are consistent. Don't write seed in real use)

w1 = tf.Variable(tf.random.truncated_normal([4, 3], stddev=0.1, seed=1))

b1 = tf.Variable(tf.random.truncated_normal([3], stddev=0.1, seed=1))

lr = 0.1 # The learning rate is 0.1

train_loss_results = [] # Record the loss of each round in this list to provide data for subsequent drawing of loss curve

test_acc = [] # Record the acc of each round in this list to provide data for subsequent drawing of acc curve

epoch = 500 # 500 cycles

loss_all = 0 # Each round is divided into 4 steps, loss_all records the sum of four losses generated by four steps

##########################################################################

v_w, v_b = 0, 0

beta = 0.9

##########################################################################

# Training part

now_time = time.time() ##2##

for epoch in range(epoch): # Data set level loops, one data set per epoch

for step, (x_train, y_train) in enumerate(train_db): # Batch level loops, one batch per step loop

with tf.GradientTape() as tape: # with structure records gradient information

y = tf.matmul(x_train, w1) + b1 # Neural network multiplication and addition operation

y = tf.nn.softmax(y) # Make the output y conform to the probability distribution (after this operation, it is the same order of magnitude as the single hot code, and the loss can be calculated by subtraction)

y_ = tf.one_hot(y_train, depth=3) # Convert the tag value to the unique hot code format to facilitate the calculation of loss and accuracy

loss = tf.reduce_mean(tf.square(y_ - y)) # Use the mean square error loss function mse = mean(sum(y-out)^2)

loss_all += loss.numpy() # Accumulate the loss calculated by each step to provide data for the subsequent average of loss, so that the calculated loss is more accurate

# Calculate the gradient of loss to each parameter

grads = tape.gradient(loss, [w1, b1])

##########################################################################

# rmsprop

v_w = beta * v_w + (1 - beta) * tf.square(grads[0])

v_b = beta * v_b + (1 - beta) * tf.square(grads[1])

w1.assign_sub(lr * grads[0] / tf.sqrt(v_w))

b1.assign_sub(lr * grads[1] / tf.sqrt(v_b))

##########################################################################

# Print loss information for each epoch

print("Epoch {}, loss: {}".format(epoch, loss_all / 4))

train_loss_results.append(loss_all / 4) # Average the loss of four step s and record it in this variable

loss_all = 0 # loss_all returns to zero to prepare for recording the loss of the next epoch

# Test part

# total_correct is the number of samples of the prediction pair, total_number is the total number of samples tested, and both variables are initialized to 0

total_correct, total_number = 0, 0

for x_test, y_test in test_db:

# Use the updated parameters for prediction

y = tf.matmul(x_test, w1) + b1

y = tf.nn.softmax(y)

pred = tf.argmax(y, axis=1) # The maximum value returned in the predicted index of y

# Convert pred to y_ Data type of test

pred = tf.cast(pred, dtype=y_test.dtype)

# If the classification is correct, correct=1, otherwise it is 0, and the result of bool type is converted to int type

correct = tf.cast(tf.equal(pred, y_test), dtype=tf.int32)

# Add up the correct number of each batch

correct = tf.reduce_sum(correct)

# Add up the number of correct in all batch es

total_correct += int(correct)

# total_number is the total number of samples tested, that is, X_ Number of rows of test, and shape[0] returns the number of rows of the variable

total_number += x_test.shape[0]

# The total accuracy is equal to total_correct/total_number

acc = total_correct / total_number

test_acc.append(acc)

print("Test_acc:", acc)

print("--------------------------")

total_time = time.time() - now_time ##3##

print("total_time", total_time) ##4##

# Draw loss curve

plt.title('Loss Function Curve') # Picture title

plt.xlabel('Epoch') # x-axis variable name

plt.ylabel('Loss') # y-axis variable name

plt.plot(train_loss_results, label="$Loss$") # Draw trian point by point_ Loss_ The results value and connect, and the connection icon is Loss

plt.legend() # Draw a curve Icon

plt.show() # Draw an image

# Draw Accuracy curve

plt.title('Acc Curve') # Picture title

plt.xlabel('Epoch') # x-axis variable name

plt.ylabel('Acc') # y-axis variable name

plt.plot(test_acc, label="$Accuracy$") # Draw the test point by point_ ACC value and connect. The connection icon is Accuracy

plt.legend()

plt.show()

# Please add loss curve, ACC curve and total_time record to class2 \ optimizer comparison docx compares the convergence of each optimizer

2.6.5 Adam, combining the first-order momentum of SGDM and the second-order momentum of RMSProp

#Example 2-24 use iris data set to realize forward propagation and back propagation and visualize loss curve

# Import required modules

import tensorflow as tf

from sklearn import datasets

from matplotlib import pyplot as plt

import numpy as np

import time ##1##

# Import data, including input features and labels

x_data = datasets.load_iris().data

y_data = datasets.load_iris().target

# Randomly scramble the data (because the original data is in order, the accuracy will be affected if the order is not scrambled)

# Seed: random number seed, which is an integer. After setting, the random number generated each time is the same (for the convenience of teaching, to ensure the consistency of each student's results)

np.random.seed(116) # Use the same seed to ensure that the input features and labels correspond one by one

np.random.shuffle(x_data)

np.random.seed(116)

np.random.shuffle(y_data)

tf.random.set_seed(116)

# The disrupted data set is divided into training set and test set. The training set is the first 120 rows and the test set is the last 30 rows

x_train = x_data[:-30]

y_train = y_data[:-30]

x_test = x_data[-30:]

y_test = y_data[-30:]

# Convert the data type of x, otherwise an error will be reported due to inconsistent data types when multiplying the following matrices

x_train = tf.cast(x_train, tf.float32)

x_test = tf.cast(x_test, tf.float32)

# from_ tensor_ The slices function maps the input feature to the tag value one by one. (divide the data set into batches and batch group data for each batch)

train_db = tf.data.Dataset.from_tensor_slices((x_train, y_train)).batch(32)

test_db = tf.data.Dataset.from_tensor_slices((x_test, y_test)).batch(32)

# The parameters of the neural network are generated, and there are 4 input characteristics. Therefore, the input layer is 4 input nodes; Because of 3 classification, the output layer is 3 neurons

# Use TF The variable () tag parameter can be trained

# Use seed to make the random number generated each time the same (convenient for teaching, so that everyone's results are consistent. Don't write seed in real use)

w1 = tf.Variable(tf.random.truncated_normal([4, 3], stddev=0.1, seed=1))

b1 = tf.Variable(tf.random.truncated_normal([3], stddev=0.1, seed=1))

lr = 0.1 # The learning rate is 0.1

train_loss_results = [] # Record the loss of each round in this list to provide data for subsequent drawing of loss curve

test_acc = [] # Record the acc of each round in this list to provide data for subsequent drawing of acc curve

epoch = 500 # 500 cycles

loss_all = 0 # Each round is divided into 4 steps, loss_all records the sum of four losses generated by four steps

##########################################################################

m_w, m_b = 0, 0

v_w, v_b = 0, 0

beta1, beta2 = 0.9, 0.999

delta_w, delta_b = 0, 0

global_step = 0

##########################################################################

# Training part

now_time = time.time() ##2##

for epoch in range(epoch): # Data set level loops, one data set per epoch

for step, (x_train, y_train) in enumerate(train_db): # Batch level loops, one batch per step loop

##########################################################################

global_step += 1

##########################################################################

with tf.GradientTape() as tape: # with structure records gradient information

y = tf.matmul(x_train, w1) + b1 # Neural network multiplication and addition operation

y = tf.nn.softmax(y) # Make the output y conform to the probability distribution (after this operation, it is the same order of magnitude as the single hot code, and the loss can be calculated by subtraction)

y_ = tf.one_hot(y_train, depth=3) # Convert the tag value to the unique hot code format to facilitate the calculation of loss and accuracy

loss = tf.reduce_mean(tf.square(y_ - y)) # Use the mean square error loss function mse = mean(sum(y-out)^2)

loss_all += loss.numpy() # Accumulate the loss calculated by each step to provide data for the subsequent average of loss, so that the calculated loss is more accurate

# Calculate the gradient of loss to each parameter

grads = tape.gradient(loss, [w1, b1])

##########################################################################

# adam

m_w = beta1 * m_w + (1 - beta1) * grads[0]

m_b = beta1 * m_b + (1 - beta1) * grads[1]

v_w = beta2 * v_w + (1 - beta2) * tf.square(grads[0])

v_b = beta2 * v_b + (1 - beta2) * tf.square(grads[1])

m_w_correction = m_w / (1 - tf.pow(beta1, int(global_step)))

m_b_correction = m_b / (1 - tf.pow(beta1, int(global_step)))

v_w_correction = v_w / (1 - tf.pow(beta2, int(global_step)))

v_b_correction = v_b / (1 - tf.pow(beta2, int(global_step)))

w1.assign_sub(lr * m_w_correction / tf.sqrt(v_w_correction))

b1.assign_sub(lr * m_b_correction / tf.sqrt(v_b_correction))

##########################################################################

# Print loss information for each epoch

print("Epoch {}, loss: {}".format(epoch, loss_all / 4))

train_loss_results.append(loss_all / 4) # Average the loss of four step s and record it in this variable

loss_all = 0 # loss_all returns to zero to prepare for recording the loss of the next epoch

# Test part

# total_correct is the number of samples of the prediction pair, total_number is the total number of samples tested, and both variables are initialized to 0

total_correct, total_number = 0, 0

for x_test, y_test in test_db:

# Use the updated parameters for prediction

y = tf.matmul(x_test, w1) + b1

y = tf.nn.softmax(y)

pred = tf.argmax(y, axis=1) # The maximum value returned in the predicted index of y

# Convert pred to y_ Data type of test

pred = tf.cast(pred, dtype=y_test.dtype)

# If the classification is correct, correct=1, otherwise it is 0, and the result of bool type is converted to int type

correct = tf.cast(tf.equal(pred, y_test), dtype=tf.int32)

# Add up the correct number of each batch

correct = tf.reduce_sum(correct)

# Add up the number of correct in all batch es

total_correct += int(correct)

# total_number is the total number of samples tested, that is, X_ Number of rows of test, and shape[0] returns the number of rows of the variable

total_number += x_test.shape[0]

# The total accuracy is equal to total_correct/total_number

acc = total_correct / total_number

test_acc.append(acc)

print("Test_acc:", acc)

print("--------------------------")

total_time = time.time() - now_time ##3##

print("total_time", total_time) ##4##

# Draw loss curve

plt.title('Loss Function Curve') # Picture title

plt.xlabel('Epoch') # x-axis variable name

plt.ylabel('Loss') # y-axis variable name

plt.plot(train_loss_results, label="$Loss$") # Draw trian point by point_ Loss_ The results value and connect, and the connection icon is Loss

plt.legend() # Draw a curve Icon

plt.show() # Draw an image

# Draw Accuracy curve

plt.title('Acc Curve') # Picture title

plt.xlabel('Epoch') # x-axis variable name

plt.ylabel('Acc') # y-axis variable name

plt.plot(test_acc, label="$Accuracy$") # Draw the test point by point_ ACC value and connect. The connection icon is Accuracy

plt.legend()

plt.show()

# Please add loss curve, ACC curve and total_time record to class2 \ optimizer comparison docx compares the convergence of each optimizer

3. Building neural network (Part I)

3.1 basic steps (six steps):

1) import related modules

2)train test

Tell the training set and test set of the network to be trained,

Specifies the input characteristics X of the training set_ Label y of train and training set_ train

Specifies the input characteristic x of the test set_ Test and test set labels y_test

3)model=tf.keras.models.Sequential

Building a network in sequential and describing each layer of the network layer by layer is equivalent to walking forward

4) Configure the training method in compile and tell which optimizer to choose, which loss function to choose and which evaluation index to choose during training

5) Execute the training process in fit, tell the input characteristics and labels of the training set and test set, tell how many batches each batch is, and tell how many data sets to iterate

6) Statistics of network parameters and printed summary

3.2 # build neural network with sequential

model=tf.keras.models.Sequential([network structure] # describes the network of each layer)

sequential() can be considered as a container, which encapsulates a neural network structure

In sequential, the network structure of each layer from input layer to output layer should be described

Straightening layer: TF keras. layers. Flatten () layer does not contain calculation, but only shape conversion, straightening the input features into a one-dimensional array

Full connection layer: TF keras. layers. Density (number of neurons, activation = "activation function", kernel_regularizer = which regularization)

Activation (given by string): relu , softmax , sigmoid , tanh

kernel_regularizer optional: TF keras. regularizers. L1() tf.keras.regularizers.L2()

Convolution layer: TF keras. layers. Conv2d (filters = number of convolution cores, kernel_size = convolution core size, stripes = convolution step size, padding = "valid" or"same")

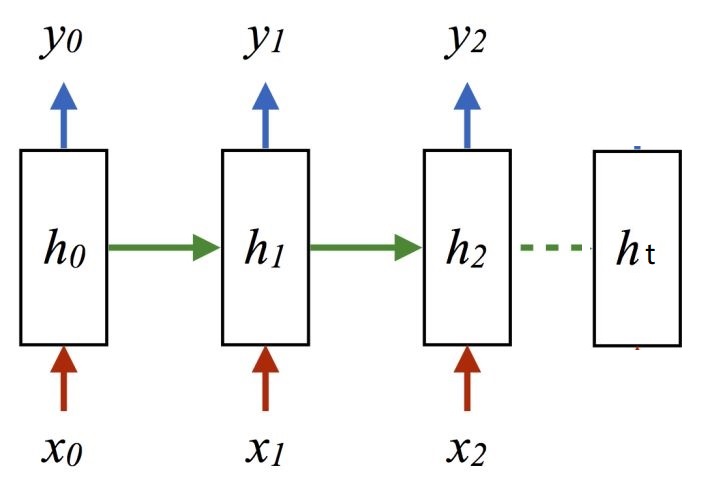

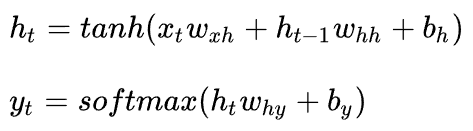

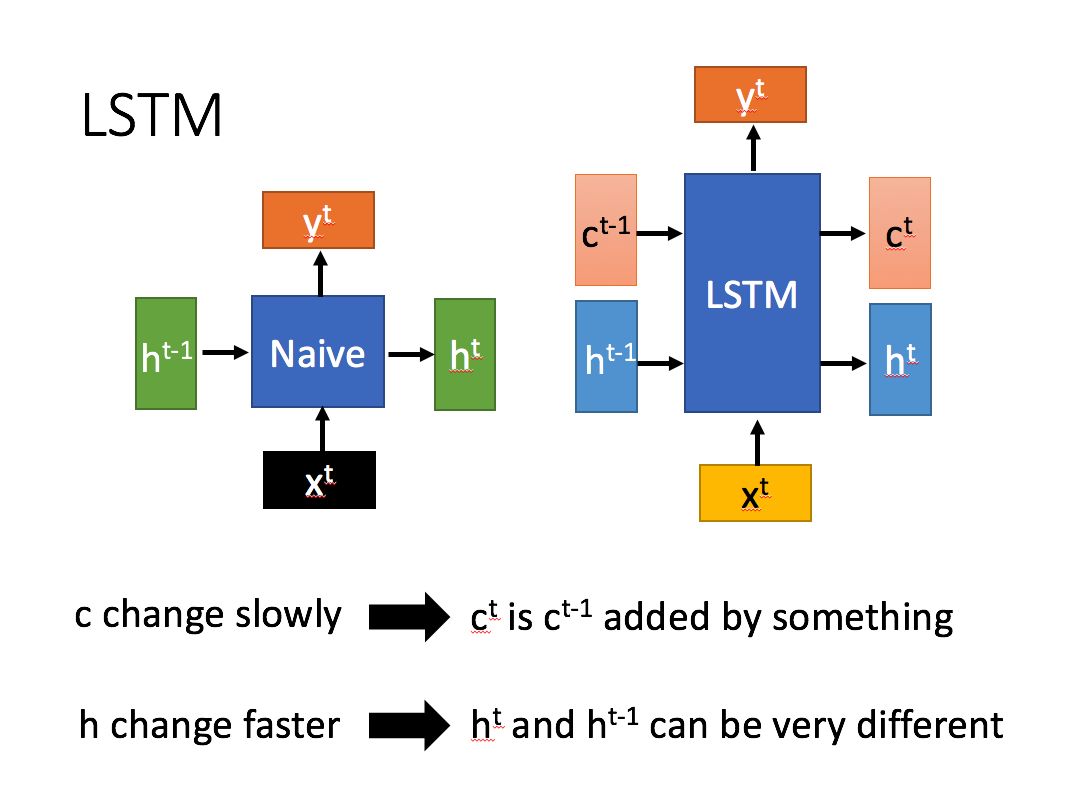

LSTM layer: TF keras. layers. LSTM()

3.3 compile usage:

model.compile(optimizer = optimizer, loss = loss function, metrics = ['accuracy'])

optimizer optional:

‘sgd’ or tf.keras.optimizers.SGD(lr = learning rate, momentum = momentum parameter)

'adagrad' or tf.keras.optimizers.Adagrad(lr = learning rate)

'adadelta' or tf.keras.optimizers.Adadelta(lr = learning rate)

‘adam’ or tf.keras.optimizers.Adam(lr = learning rate, beta_1=0.9,beta_2=0.999)

loss optional:

'mse' or tf.keras.losses.MeanSquaredError()

'sparse_categorical_crossentropy' or tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False)

Metrics optional:

‘accuracy’:y_ And y are numerical values, such as y_=[1] y=[1]

'categorical_accuracy': y_ And y are unique hot codes (probability distribution), such as y_=[0,1,0],y=[0.256,0.696,0.048]

'sparse_categorical_accuracy': y_ Is a numerical value, y is a unique heat code (probability distribution), such as y_=[1],y=[0.256,0.696,0.048]

3.4 fit usage:

model. Fit (input feature of training set, label of training set,

batch_size= , epochs= ,

validation_data = (input characteristics of test set, label of test set)

validation_split = what proportion is divided from the training set to the test set,

validation_freq = how many epoch tests (once)

3.5 model.summary()

Network structure and parameter statistics can be printed out

#Neural network construction: 6-3 steps

import tensorflow as tf

from sklearn import datasets

import numpy as np

x_train = datasets.load_iris().data

y_train = datasets.load_iris().target

np.random.seed(116)

np.random.shuffle(x_train)

np.random.seed(116)

np.random.shuffle(y_train)

tf.random.set_seed(116)

model = tf.keras.models.Sequential([

tf.keras.layers.Dense(3, activation='softmax', kernel_regularizer=tf.keras.regularizers.l2())

])

model.compile(optimizer=tf.keras.optimizers.SGD(lr=0.1),

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),

metrics=['sparse_categorical_accuracy'])

model.fit(x_train, y_train, batch_size=32, epochs=500, validation_split=0.2, validation_freq=20)

model.summary()The printing results are as follows:

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense (Dense) (None, 3) 15

=================================================================

Total params: 15

Trainable params: 15

Non-trainable params: 03.6 building neural network with class

Define a class and encapsulate a neural network structure

General format:

#Example 3-2 constructing neural network pseudo code with class class

class MyModel(Model):

def __init__(self):

super(MyModel,self),__init__()

Define network building blocks

def call(self,x):

Call the network structure block to realize forward propagation

return y

model=MyModel()

Next, we define a neural network based on Iris data set

#Example 3-3 take iris data set as an example, build neural network with class

import tensorflow as tf

from tensorflow.keras.layers import Dense

from tensorflow.keras import Model

from sklearn import datasets

import numpy as np

x_train = datasets.load_iris().data

y_train = datasets.load_iris().target

np.random.seed(116)

np.random.shuffle(x_train)

np.random.seed(116)

np.random.shuffle(y_train)

tf.random.set_seed(116)

class IrisModel(Model):

def __init__(self):

super(IrisModel, self).__init__()

self.d1 = Dense(3, activation='softmax', kernel_regularizer=tf.keras.regularizers.l2())

def call(self, x):

y = self.d1(x)

return y

model = IrisModel()

model.compile(optimizer=tf.keras.optimizers.SGD(lr=0.1),

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),

metrics=['sparse_categorical_accuracy'])

model.fit(x_train, y_train, batch_size=32, epochs=500, validation_split=0.2, validation_freq=20)

model.summary()

The operation results are as follows:

#The training process is omitted

Epoch 500/500

4/4 [==============================] - 0s 15ms/step - loss: 0.3896 - sparse_categorical_accuracy: 0.9250 - val_loss: 0.3515 - val_sparse_categorical_accuracy: 0.8667

Model: "iris_model"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_1 (Dense) multiple 15

=================================================================

Total params: 15

Trainable params: 15

Non-trainable params: 04. Building neural network (Part 2)

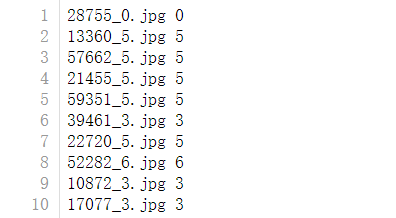

4.1 self made dataset

If you want to train data in this field, you need to customize the data set for training.

For example, we made a batch of handwritten digits to replace the data of MNIST data set to recognize my personal handwritten digits

We have prepared 70000 pictures, all in JPG file format, with pixel size of 28 * 28, which is consistent with MNIST dataset

Among them, 60000 are used as training pictures and 10000 are used as test pictures, all of which are gray-scale images with white words on a black background

In addition, prepare two text files in the following format: