1. Overview

In this tutorial, we'll explore how to improve client retries with two strategies: exponential backoff and jitter.

2. Retry

In distributed systems, network communication between multiple components may fail at any time.

Client applications handle these failures by implementing retries.

Imagine that we have a client application that calls a remote service, PingPongService:

    interface PingPongService {
        String call(String ping) throws PingPongServiceException;
    }

If PingPongService throws a PingPongServiceException, the client application must retry the call. In the following sections, we'll look at ways to implement client retries.
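As a point of reference, the simplest possible retry can be sketched by hand. This is only an illustration, not code from the tutorial: the class NaiveRetrySketch, the method callWithRetry, and the flaky stand-in for PingPongService are all hypothetical, and failures are assumed to surface as unchecked exceptions.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

final class NaiveRetrySketch {

    // Calls remoteCall up to maxAttempts times, retrying immediately on failure.
    static <T> T callWithRetry(Supplier<T> remoteCall, int maxAttempts) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        RuntimeException lastFailure = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return remoteCall.get();
            } catch (RuntimeException e) {
                lastFailure = e; // remember the failure and try again right away
            }
        }
        throw lastFailure; // every attempt failed
    }

    public static void main(String[] args) {
        AtomicInteger calls = new AtomicInteger();
        // Hypothetical stand-in for the remote service: fails twice, then answers
        String reply = callWithRetry(() -> {
            if (calls.incrementAndGet() < 3) {
                throw new IllegalStateException("simulated failure");
            }
            return "pong";
        }, 5);
        System.out.println(reply + " after " + calls.get() + " calls"); // prints "pong after 3 calls"
    }
}
```

Note that this loop retries without any pause between attempts, which is exactly the behavior the backoff strategies below are meant to improve on.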

3. Resilience4j Retry

In our example, we'll use the Resilience4j library, specifically its retry module. We need to add the resilience4j-retry module to our pom.xml:

    <dependency>
        <groupId>io.github.resilience4j</groupId>
        <artifactId>resilience4j-retry</artifactId>
    </dependency>

For a refresher on using retries, don't forget to check out our Guide to Resilience4j.

4. Exponential Backoff

Client applications must implement retries responsibly. When clients retry failed calls without waiting, they may overwhelm the system and further degrade a service that is already in distress.

Exponential backoff is a common strategy for handling retries of failed network calls. Simply put, the clients wait progressively longer between consecutive retries:

wait_interval = base * multiplier^n

where:

- base is the initial interval, that is, the wait before the first retry

- n is the number of failures that have occurred

- multiplier is an arbitrary multiplier that can be replaced with any suitable value

In this way, we provide the system with breathing space to recover from intermittent failures or more serious problems.
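To make the formula concrete, here is a small worked sketch. The class and method names are hypothetical, and the values (a base of 1000 ms and a multiplier of 2) are assumptions chosen for illustration. The sketch also assumes n starts at 0 for the first retry, so that the first wait equals base.

```java
import java.util.ArrayList;
import java.util.List;

final class ExponentialBackoffSketch {

    // wait_interval = base * multiplier^n, in milliseconds.
    // Assumption: n = 0 for the first retry, so the first wait equals base.
    static long waitInterval(long baseMillis, double multiplier, int n) {
        return Math.round(baseMillis * Math.pow(multiplier, n));
    }

    // Waits that precede each of the first `retries` retries.
    static List<Long> schedule(long baseMillis, double multiplier, int retries) {
        List<Long> waits = new ArrayList<>();
        for (int n = 0; n < retries; n++) {
            waits.add(waitInterval(baseMillis, multiplier, n));
        }
        return waits;
    }

    public static void main(String[] args) {
        // Assumed values: 1 s base interval, multiplier of 2
        System.out.println(schedule(1000L, 2.0, 3)); // prints [1000, 2000, 4000]
    }
}
```

With these values each wait is double the previous one, so the time a client stays away from the service grows quickly with every consecutive failure.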

We can use the exponential backoff algorithm in Resilience4j Retry by configuring its IntervalFunction, which accepts an initialInterval and a multiplier.

The retry mechanism uses IntervalFunction as a sleep function:

    // Wait INITIAL_INTERVAL before the first retry, then multiply by MULTIPLIER each time
    IntervalFunction intervalFn =
      IntervalFunction.ofExponentialBackoff(INITIAL_INTERVAL, MULTIPLIER);

    RetryConfig retryConfig = RetryConfig.custom()
      .maxAttempts(MAX_RETRIES)
      .intervalFunction(intervalFn)
      .build();
    Retry retry = Retry.of("pingpong", retryConfig);

    // Wrap the remote call so that failures are retried according to retryConfig
    Function<String, String> pingPongFn = Retry
      .decorateFunction(retry, ping -> service.call(ping));
    pingPongFn.apply("Hello");

Let's simulate a real-world scenario and assume that several clients invoke PingPongService concurrently:

ExecutorService executors = newFixedThreadPool(NUM_CONCURRENT_CLIENTS);

List<Callable> tasks = nCopies(NUM_CONCURRENT_CLIENTS, () -> pingPongFn.apply("Hello"));

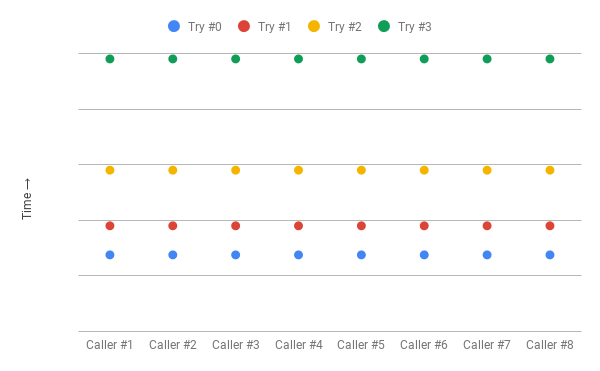

executors.invokeAll(tasks);Let's look at the remote call log for NUM_CONCURRENT_CLIENTS = 4:

    [thread-1] At 00:37:42.756
    [thread-2] At 00:37:42.756
    [thread-3] At 00:37:42.756
    [thread-4] At 00:37:42.756
    [thread-2] At 00:37:43.802
    [thread-4] At 00:37:43.802
    [thread-1] At 00:37:43.802
    [thread-3] At 00:37:43.802
    [thread-2] At 00:37:45.803
    [thread-1] At 00:37:45.803
    [thread-4] At 00:37:45.803
    [thread-3] At 00:37:45.803
    [thread-2] At 00:37:49.808
    [thread-3] At 00:37:49.808
    [thread-4] At 00:37:49.808
    [thread-1] At 00:37:49.808

We can see a clear pattern here: the clients wait for exponentially growing intervals, but all of them call the remote service at exactly the same time on each retry (collisions).

We've only solved part of the problem. We no longer hammer the remote service with immediate retries, but instead of spreading the workload evenly over time, we have bursts of work separated by longer and longer idle periods. This behavior is similar to the thundering herd problem.
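The synchronized pattern in the log can be reproduced with a little arithmetic. The following is a sketch under assumed values (a 1000 ms base and a multiplier of 2, which roughly match the gaps in the log above); the class and method names are hypothetical. It computes when each client calls the service, measured in milliseconds from the first call.

```java
import java.util.ArrayList;
import java.util.List;

final class SynchronizedRetriesSketch {

    // Offsets (ms since the first call) at which a single client calls the service:
    // the initial call, followed by `retries` retries with exponential waits.
    static List<Long> callTimes(long baseMillis, double multiplier, int retries) {
        List<Long> times = new ArrayList<>();
        long elapsed = 0L;
        times.add(elapsed); // initial call
        for (int n = 0; n < retries; n++) {
            elapsed += Math.round(baseMillis * Math.pow(multiplier, n));
            times.add(elapsed);
        }
        return times;
    }

    public static void main(String[] args) {
        // Every client uses the same deterministic schedule, so all four lines match
        for (int client = 1; client <= 4; client++) {
            System.out.println("client-" + client + ": " + callTimes(1000L, 2.0, 3));
        }
        // each line ends with [0, 1000, 3000, 7000]
    }
}
```

Because the schedule contains no randomness, clients that fail together also retry together, no matter how long the waits become.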

5. Introducing Jitter

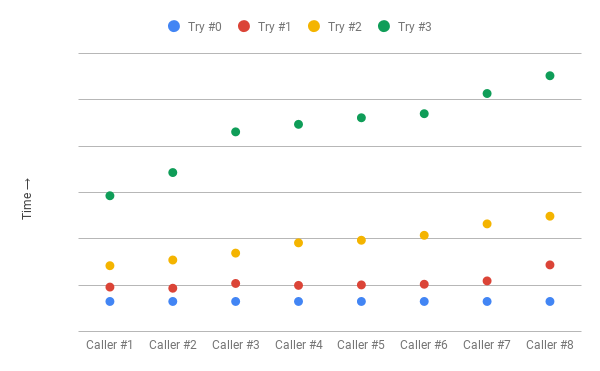

In our previous approach, the client waits grow progressively longer but remain synchronized. Adding jitter breaks the synchronization across clients and thereby avoids collisions. In this approach, we add randomness to the wait intervals:

wait_interval = (base * 2^n) +/- (random_interval)

where random_interval is added (or subtracted) to break the synchronization across clients.

We won't go into the mechanics of computing the random interval, but the randomization must space out the spikes into a much smoother distribution of client calls.
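One simple way to picture the random interval is to draw it uniformly from a band around the exponential wait. The sketch below assumes that approach; it is not necessarily how Resilience4j computes its jitter, and the class name, method names, and parameter values are all hypothetical.

```java
import java.util.Random;

final class JitterSketch {

    // Plain exponential wait: base * multiplier^n, in milliseconds
    static long exponentialWait(long baseMillis, double multiplier, int n) {
        return Math.round(baseMillis * Math.pow(multiplier, n));
    }

    // Assumed jitter scheme: pick a wait uniformly from
    // [wait - wait * factor, wait + wait * factor].
    static long jitteredWait(long baseMillis, double multiplier, int n,
                             double randomizationFactor, Random random) {
        long wait = exponentialWait(baseMillis, multiplier, n);
        double delta = wait * randomizationFactor;
        // nextDouble() is in [0, 1); map it onto [-delta, +delta)
        double offset = (random.nextDouble() * 2.0 - 1.0) * delta;
        return Math.round(wait + offset);
    }

    public static void main(String[] args) {
        Random random = new Random();
        // Four clients, three retries each: the waits now differ per client
        for (int client = 1; client <= 4; client++) {
            StringBuilder line = new StringBuilder("client-" + client + ":");
            for (int n = 0; n < 3; n++) {
                line.append(' ').append(jitteredWait(1000L, 2.0, n, 0.5, random));
            }
            System.out.println(line);
        }
    }
}
```

With a factor of 0.5, the first wait falls somewhere between 500 ms and 1500 ms, so clients that failed at the same instant are unlikely to retry at the same instant.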

We can use exponential backoff with jitter in Resilience4j Retry by configuring an exponential random backoff IntervalFunction, which also accepts a randomizationFactor:

    IntervalFunction intervalFn =
      IntervalFunction.ofExponentialRandomBackoff(INITIAL_INTERVAL, MULTIPLIER, RANDOMIZATION_FACTOR);

Let's return to our real-world scenario and look at the remote invocation logs with jitter:

    [thread-2] At 39:21.297
    [thread-4] At 39:21.297
    [thread-3] At 39:21.297
    [thread-1] At 39:21.297
    [thread-2] At 39:21.918
    [thread-3] At 39:21.868
    [thread-4] At 39:22.011
    [thread-1] At 39:22.184
    [thread-1] At 39:23.086
    [thread-5] At 39:23.939
    [thread-3] At 39:24.152
    [thread-4] At 39:24.977
    [thread-3] At 39:26.861
    [thread-1] At 39:28.617
    [thread-4] At 39:28.942
    [thread-2] At 39:31.039

Now we have a much better spread. We have eliminated both the collisions and the idle time, and we end up with an almost constant rate of client calls, apart from the initial surge.

Note: We have exaggerated the intervals for illustration. In real-world scenarios, the gaps would be smaller.

6. Conclusion

In this tutorial, we explored how to improve the way client applications retry failed calls by augmenting exponential backoff with jitter. The source code for the examples used in this tutorial is available on GitHub.

Original text: https://www.baeldung.com/resilience4j-backoff-jitter

Author: Priyank Srivastava

Translator: Queena
