0x0. preface

The previous articles in this series have a superficial understanding of the components of MLIR. This article will not continue to talk about the architecture of MLIR. But from a practical point of view, let's take readers to see what MLIR has helped me do. Here we still take OneFlow Dialect as an example. stay Interpretation of Moore's Law: the basic structure of the compiler The comments section of this article has briefly introduced how the components related to OneFlow Dialect are implemented. Based on the implementation of OneFlow Dialect, let me continue to introduce how the Pass mechanism of MLIR helps to accelerate OneFlow model training and reasoning.

The articles and experimental codes of the compiler series of learning from scratch and in-depth learning are sorted in this warehouse: https://github.com/BBuf/tvm_mlir_learn At present, 300 + stars have been harvested. If you are interested, you can check it by yourself. It would be better if you could click star.

0x1. background

At present, Transformer architecture has become the infrastructure that AI algorithm developers and engineers have to talk about. A series of super large models derived from Transformer infrastructure, such as Bert and gpt-2, have a great impact in the industry and also lead the trend of large models. However, the high training cost of large models discourages many people and even many companies. Usually, they can only do some downstream tasks on the pre trained large models. Therefore, how to speed up the training of large models is very important. In 2019, the first mock exam successfully constructed and trained the largest language model GPT-2 8B, which contained 8 billion 300 million parameter volumes, 24 times the BERT-Large model and 5.6 times the GPT-2. The first mock exam called the first mock exam called Megatron (Wei Zhentian), and also opened the pytorch code to train the model: https://github.com/NVIDIA/Megatron-LM .

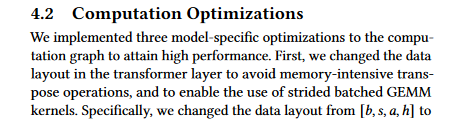

This paper mentioned many methods to accelerate large model training, especially model parallel training technology, but I know little about distributed training, which is not introduced here. My only focus here is on the Megatron paper( https://arxiv.org/pdf/2104.04473.pdf )Accelerated model training of compilation optimization mentioned in Section 4.2 of:

The compilation optimization in this section mainly focuses on the integration of OPS through PyTorch JIT technology, such as bias_add and gelu merge into one operator, bias_add+dropout are fused into one operator. After the fusion of these operators, it can not only avoid the repeated reading and writing of data by GPU and reduce the occupation of video memory, but also reduce the number of CUDA kernel launches and accelerate the whole calculation process.

To realize the compilation optimization mentioned in the paper, two preconditions are needed. Firstly, the framework provides the implementation of fusion Op. secondly, an optimized Pass is implemented based on the compiler to automatically find the fused Pattern in the model and rewrite it into an equivalent fusion Op, so as to accelerate the operation of the calculation graph.

0x2. Introduction to biasadd dropout and fusion operator

In OneFlow, to benchmark the bias of Megatron_ Add and dropout fuse implement a fused_bias_add_mask_scale operator, what it does is to integrate BiasAdd and Dropout into one operator to speed up. The implementation process of this operator is not expanded here. The focus is how to automatically discover this Pattern in the model based on MLIR and automatically replace this Pattern with fused_bias_add_mask_scale operator.

In order to better understand the practice of integrating Pass in the next section, here is bias_add, dropout and fused_ bias_add_ mask_ The parameter list of the three OPS of scale is briefly introduced.

- bias_add operator:

>>> import oneflow as flow >>> x = flow.randn(2, 3) >>> y = flow.randn(3) >>> z = flow._C.bias_add(x, y, axis=1)

You can see that this operator has three parameters, one is input Tensor, the other is bias Tensor, and the axis attribute indicates which dimension of the input Tensor you need to attach bias Tensor to. In the Transformer structure, the biased linear layer (nn.Linear) is through a matrix multiplication operator and a bias_add implementation.

- nn.Dropout operator: I believe you are very familiar with dropout operator and do not need more explanation. Please refer to the OneFlow operator document below.

example:

>>> import numpy as np

>>> import oneflow as flow

>>> m = flow.nn.Dropout(p=0)

>>> arr = np.array(

... [

... [-0.7797, 0.2264, 0.2458, 0.4163],

... [0.4299, 0.3626, -0.4892, 0.4141],

... [-1.4115, 1.2183, -0.5503, 0.6520],

... ]

... )

>>> x = flow.Tensor(arr)

>>> y = m(x)

>>> y

tensor([[-0.7797, 0.2264, 0.2458, 0.4163],

[ 0.4299, 0.3626, -0.4892, 0.4141],

[-1.4115, 1.2183, -0.5503, 0.6520]], dtype=oneflow.float32)

- fused_bias_add_mask_scale: fused_ bias_add_ mask_ Scale operator needs bias_ The inputs a and b (bias) of the add operator, and then you need a random called by the input a_ mask_ The mask Tensor mask generated by like OP is used as its third input, and finally bias is required_ axis attribute of add operator and p attribute of Dropout.

Here we need to explain why we need a mask. In fact, when the Dropout operator is implemented, it will also produce two outputs, one is Tensor and the other is mask. This is because Dropout will generate a mask according to p and the random number seed we input to determine which location of neurons should be retained and which location of neurons should be set to 0. For the needs of correct back propagation, we must retain this mask to calculate the gradient corresponding to the input Tensor. So in fused_ bias_ add_ mask_ In scale Op, it is necessary to pass the mask display to this Op, because there is only one output of this Op, and no additional mask will be output. The mask is generated by using the random inside oneflow_ mask_ Like Op, which accepts an input Tensor and p and a random number seed to generate a mask Tensor mask with a certain probability distribution.

0x3. Pattern matching and rewriting

After knowing the operands, attributes and output of these OPS, we can automatically match and rewrite the Patten for BiasAdd and Dropout based on MLIR. This function is implemented in: https://github.com/Oneflow-Inc/oneflow/pull/7709 .

First of all, we need to create a pattern in ONEFLOW / IR / include / ONEFLOW / oneflowpatterns TD in this file, the DRR framework based on MLIR writes out templates for automatic matching and rewriting. The implementation is as follows:

def GetDefaultSeed :

NativeCodeCall<"mlir::oneflow::GetDefaultSeed($_builder)">;

def FusedBiasAddMaskScale :

NativeCodeCall<"mlir::oneflow::CreateFusedBiasAddMaskScale($_builder, $0, $1, $2)">;

def IsAddToOutputNone: Constraint<CPred<"mlir::oneflow::IsAddToOutputNone($0)">, "">;

def FusedBiasAddDropoutPattern : Pattern<

(

OneFlow_DropoutOp: $dropout_res

(

OneFlow_BiasAddOp: $bias_add_res

$a,

$b,

$bias_add_op_name,

$bias_add_device_tag,

$bias_add_device_name,

$bias_add_scope_symbol_id,

$bias_add_hierarchy,

$bias_add_op_axis

),

$_add_to_output,

$dropout_op_name,

$dropout_device_tag,

$dropout_device_name,

$dropout_scope_symbol_id,

$dropout_hierarchy,

$dropout_op_rate

),

[

(

FusedBiasAddMaskScale

$dropout_res__0,

$bias_add_res,

(

OneFlow_RandomMaskLikeOp : $mask

$a,

$bias_add_op_name,

$dropout_device_tag,

$dropout_device_name,

$dropout_scope_symbol_id,

$dropout_hierarchy,

$dropout_op_rate,

(GetDefaultSeed)

)

),

(replaceWithValue $mask)

],

[(IsAddToOutputNone $_add_to_output)]

>;

Nativecodcall is a placeholder code. We can call the C + + function we wrote in dialog through nativecodcall. For example:

def GetDefaultSeed : NativeCodeCall<"mlir::oneflow::GetDefaultSeed($_builder)">;

Here we call the GetDefaultSeed function we wrote in OneFlow Dialect, which returns a random seed generated by the DefaultAutoGenerator class of OneFlow. This random seed is used in the Pattern as an attribute of RandomMaskLikeOp:

mlir::IntegerAttr GetDefaultSeed(::mlir::PatternRewriter& rewriter) {

const auto gen = CHECK_JUST(::oneflow::one::DefaultAutoGenerator());

return getSI64IntegerAttr(rewriter, (int64_t)gen->current_seed());

}

Similar to CreateFusedBiasAddMaskScale, this function rewrites the Pattern (BiasAddOp+DropoutOp) on the matching to fusedbiasaddmaskscale Op. The code implementation is as follows:

::llvm::SmallVector<::mlir::Value, 4> CreateFusedBiasAddMaskScale(::mlir::PatternRewriter& rewriter,

OpResult dropout_result,

OpResult bias_add_result,

Operation* mask) {

if (auto dropout_op = llvm::dyn_cast<oneflow::DropoutOp>(dropout_result.getDefiningOp())) {

if (auto bias_add_op = llvm::dyn_cast<oneflow::BiasAddOp>(bias_add_result.getDefiningOp())) {

SmallVector<Value, 4> operands;

operands.push_back(bias_add_op.a());

operands.push_back(bias_add_op.b());

operands.push_back(mask->getResults()[0]);

NamedAttrList fused_bias_add_dropout_attributes = dropout_op->getAttrs();

fused_bias_add_dropout_attributes.append(llvm::StringRef("axis"), bias_add_op.axisAttr());

fused_bias_add_dropout_attributes.append(llvm::StringRef("scale"), dropout_op.rateAttr());

fused_bias_add_dropout_attributes.erase(dropout_op.rateAttrName());

auto res = rewriter

.create<oneflow::FusedBiasAddMaskScaleOp>(

dropout_op->getLoc(), dropout_op->getResultTypes().front(), operands,

fused_bias_add_dropout_attributes)

->getResults();

// bias_add and dropout op is expected to be erased if it is not used

return res;

}

}

return {};

}

This function receives the output values of a PatternRewriter object, DropoutOp and BiasAddOp, and then obtains the op defining them from these two values, and the corresponding operands and attributes from Op. Then, the process of creating a new OP is completed based on the PatternRewriter object, and the replacement is completed at the position of the current DropoutOp. In this way, the rewriting of a specific Pattern is completed. The failed BiasAddOp and DropoutOp will be automatically deleted in the generated IR because they are NoSideEffect.

Next, let's take a look at the constraint IsAddToOutputNone: def IsAddToOutputNone: constraint < CPred < "MLIR:: ONEFLOW:: IsAddToOutputNone ($0)" >, ">; Here, we use CPred to define a constraint. In this CPred, we can put any C + + function that returns bool type. The implementation here is:

bool IsAddToOutputNone(ValueRange value) { return (int)value.size() > 0 ? false : true; }

That is, judge the of Dropout Op_ add_ to_ Whether the optional input output exists. If it does not exist, we can use the Pass we implemented.

In addition to the general part above, two special points need to be noted here, which I list separately.

Problems caused by the restriction of nativecodcall

We can find from the MLIR document that native codecall can only return one result. Therefore, in the above template matching and rewriting, we set two outputs for the rewritten part, one is the output of FusedBiasAddMaskScaleOp (target output), and the other is the placeholder output defined by (replaceWithValue $mask). The reason is that Dropout Op has two outputs. If a new placeholder output is not defined here, an error will be reported when the template is matched and rewritten. The reason for using replaceWithValue here is that it can simply and directly complete the function of replacing a value (mlir::Value), which is more suitable for the occupation function here.

Why should RandomMaskLikeOp customize the builder

Another dependency of the above implementation is the need to customize the builder of RandomMaskLikeOp. The definition of the new RandomMaskLikeOp is as follows:

def OneFlow_RandomMaskLikeOp : OneFlow_BaseOp<"random_mask_like", [NoSideEffect, NoGrad, DeclareOpInterfaceMethods<UserOpCompatibleInterface>]> {

let input = (ins

OneFlow_Tensor:$like

);

let output = (outs

OneFlow_Tensor:$out

);

let attrs = (ins

DefaultValuedAttr<F32Attr, "0.">:$rate,

DefaultValuedAttr<SI64Attr, "0">:$seed

);

let builders = [

OpBuilder<(ins

"Value":$like,

"StringRef":$op_name,

"StringRef":$device_tag,

"ArrayAttr":$device_name,

"IntegerAttr":$scope_symbol_id,

"ArrayAttr":$hierarchy,

"FloatAttr":$rate,

"IntegerAttr":$seed

)>

];

let has_check_fn = 1;

let has_logical_tensor_desc_infer_fn = 1;

let has_physical_tensor_desc_infer_fn = 1;

let has_get_sbp_fn = 1;

let has_data_type_infer_fn = 1;

}

After customizing the builder, you need to use C + + to implement the builder as follows:

void RandomMaskLikeOp::build(mlir::OpBuilder& odsBuilder, mlir::OperationState& odsState,

mlir::Value like, StringRef op_name, StringRef device_tag,

ArrayAttr device_name, IntegerAttr scope_symbol_id,

ArrayAttr hierarchy, mlir::FloatAttr rate, mlir::IntegerAttr seed) {

odsState.addOperands(like);

odsState.addAttribute(op_nameAttrName(odsState.name), odsBuilder.getStringAttr(op_name));

odsState.addAttribute(device_tagAttrName(odsState.name), odsBuilder.getStringAttr(device_tag));

odsState.addAttribute(device_nameAttrName(odsState.name), device_name);

if (scope_symbol_id) {

odsState.addAttribute(scope_symbol_idAttrName(odsState.name), scope_symbol_id);

}

if (hierarchy) { odsState.addAttribute(hierarchyAttrName(odsState.name), hierarchy); }

odsState.addAttribute(rateAttrName(odsState.name), rate);

odsState.addAttribute(seedAttrName(odsState.name), seed);

odsState.addTypes(like.getType());

}

The reason for this is that the mask generated by RandomMaskLikeOp here is not the outermost Op of Dag to be replaced, so MLIR cannot infer the output value type of RandomMaskLikeOp (if it is a single Op, the output type of this Op is the output type of the Op it will replace), so we need to provide a special builder without output type. The builder will do type inference. In this example, the type is obtained directly from like. Namely: odsstate addTypes(like.getType()); This line of code.

If you do not modify this, an error will be reported that the type cannot be matched. It is about as follows:

python3: /home/xxx/oneflow/build/oneflow/ir/llvm_monorepo-src/mlir/lib/IR/PatternMatch.cpp:328: void mlir::RewriterBase::replaceOpWithResultsOfAnotherOp(mlir::Operation*, mlir::Operation*): Assertion `op->getNumResults() == newOp->getNumResults() && "replacement op doesn't match results of original op"' failed

0x4. test

Having finished all the implementation details above, we can construct a OneFlow program to verify whether the IR Fusion works normally. The test code is as follows:

import unittest

import numpy as np

import os

os.environ["ONEFLOW_MLIR_ENABLE_ROUND_TRIP"] = "1"

import oneflow as flow

import oneflow.unittest

def do_bias_add_dropout_graph(test_case, with_cuda, prob):

x = flow.randn(2, 3, 4, 5)

bias = flow.randn(5)

dropout = flow.nn.Dropout(p=prob)

if with_cuda:

x = x.cuda()

bias = bias.to("cuda")

dropout.to("cuda")

eager_res = dropout(flow._C.bias_add(x, bias, axis=3))

class GraphToRun(flow.nn.Graph):

def __init__(self):

super().__init__()

self.dropout = dropout

def build(self, x, bias):

return self.dropout(flow._C.bias_add(x, bias, axis=3))

graph_to_run = GraphToRun()

lazy_res = graph_to_run(x, bias)

test_case.assertTrue(np.array_equal(eager_res.numpy(), lazy_res.numpy()))

@flow.unittest.skip_unless_1n1d()

class TestBiasAddDropout(oneflow.unittest.TestCase):

def test_bias_add_dropout_graph(test_case):

do_bias_add_dropout_graph(test_case, True, 1.0)

if __name__ == "__main__":

unittest.main()

NN is used here Graph wraps the calculation process, that is, running the whole program in the mode of static graph. nn. After the graph is constructed, a Job (the original calculation diagram representation of OneFlow) will be generated, and then the Job will be converted into an MLIR expression (OneFlow Dialect) for the above Fuse Pass, and then transferred back to the Job (the optimized OneFlow calculation diagram representation) for training or reasoning.

We can take a look at the IR representation before and after using MLIR FuseBiasAddDropout Pass. First, the MLIR expression without this Pass:

module {

oneflow.job @GraphToRun_0(%arg0: tensor<2x3x4x5xf32>, %arg1: tensor<5xf32>) -> tensor<2x3x4x5xf32> {

%output = "oneflow.input"(%arg0) {data_type = 2 : i32, device_name = ["@0:0"], device_tag = "gpu", hierarchy = [1], is_dynamic = false, nd_sbp = ["B"], op_name = "_GraphToRun_0_input.0.0_2", output_lbns = ["_GraphToRun_0_input.0.0_2/out"], scope_symbol_id = 4611686018427420671 : i64, shape = [2 : si64, 3 : si64, 4 : si64, 5 : si64]} : (tensor<2x3x4x5xf32>) -> tensor<2x3x4x5xf32>

%output_0 = "oneflow.input"(%arg1) {data_type = 2 : i32, device_name = ["@0:0"], device_tag = "gpu", hierarchy = [1], is_dynamic = false, nd_sbp = ["B"], op_name = "_GraphToRun_0_input.0.1_3", output_lbns = ["_GraphToRun_0_input.0.1_3/out"], scope_symbol_id = 4611686018427420671 : i64, shape = [5 : si64]} : (tensor<5xf32>) -> tensor<5xf32>

%0 = "oneflow.bias_add"(%output, %output_0) {axis = 3 : si32, device_name = ["@0:0"], device_tag = "gpu", hierarchy = [1], op_name = "bias_add-0", output_lbns = ["bias_add-0/out_0"], scope_symbol_id = 4611686018427420671 : i64} : (tensor<2x3x4x5xf32>, tensor<5xf32>) -> tensor<2x3x4x5xf32>

%out, %mask = "oneflow.dropout"(%0) {device_name = ["@0:0"], device_tag = "gpu", hierarchy = [1], op_name = "dropout-dropout-1", output_lbns = ["dropout-dropout-1/out_0", "dropout-dropout-1/mask_0"], rate = 1.000000e+00 : f32, scope_symbol_id = 4611686018427428863 : i64} : (tensor<2x3x4x5xf32>) -> (tensor<2x3x4x5xf32>, tensor<2x3x4x5xi8>)

%output_1 = "oneflow.output"(%out) {data_type = 2 : i32, device_name = ["@0:0"], device_tag = "gpu", hierarchy = [1], is_dynamic = false, nd_sbp = ["B"], op_name = "_GraphToRun_0_output.0.0_2", output_lbns = ["_GraphToRun_0_output.0.0_2/out"], scope_symbol_id = 4611686018427420671 : i64, shape = [2 : si64, 3 : si64, 4 : si64, 5 : si64]} : (tensor<2x3x4x5xf32>) -> tensor<2x3x4x5xf32>

oneflow.return %output_1 : tensor<2x3x4x5xf32>

}

}

Then the MLIR expression obtained after starting this Pass:

module {

oneflow.job @GraphToRun_0(%arg0: tensor<2x3x4x5xf32>, %arg1: tensor<5xf32>) -> tensor<2x3x4x5xf32> {

%output = "oneflow.input"(%arg0) {data_type = 2 : i32, device_name = ["@0:0"], device_tag = "gpu", hierarchy = [1], is_dynamic = false, nd_sbp = ["B"], op_name = "_GraphToRun_0_input.0.0_2", output_lbns = ["_GraphToRun_0_input.0.0_2/out"], scope_symbol_id = 4611686018427420671 : i64, shape = [2 : si64, 3 : si64, 4 : si64, 5 : si64]} : (tensor<2x3x4x5xf32>) -> tensor<2x3x4x5xf32>

%output_0 = "oneflow.input"(%arg1) {data_type = 2 : i32, device_name = ["@0:0"], device_tag = "gpu", hierarchy = [1], is_dynamic = false, nd_sbp = ["B"], op_name = "_GraphToRun_0_input.0.1_3", output_lbns = ["_GraphToRun_0_input.0.1_3/out"], scope_symbol_id = 4611686018427420671 : i64, shape = [5 : si64]} : (tensor<5xf32>) -> tensor<5xf32>

%0 = "oneflow.random_mask_like"(%output) {device_name = ["@0:0"], device_tag = "gpu", hierarchy = [1], op_name = "bias_add-0", rate = 1.000000e+00 : f32, scope_symbol_id = 4611686018427428863 : i64, seed = 4920936260932536 : si64} : (tensor<2x3x4x5xf32>) -> tensor<2x3x4x5xf32>

%1 = "oneflow.fused_bias_add_mask_scale"(%output, %output_0, %0) {axis = 3 : si32, device_name = ["@0:0"], device_tag = "gpu", hierarchy = [1], op_name = "dropout-dropout-1", output_lbns = ["dropout-dropout-1/out_0", "dropout-dropout-1/mask_0"], scale = 1.000000e+00 : f32, scope_symbol_id = 4611686018427428863 : i64} : (tensor<2x3x4x5xf32>, tensor<5xf32>, tensor<2x3x4x5xf32>) -> tensor<2x3x4x5xf32>

%output_1 = "oneflow.output"(%1) {data_type = 2 : i32, device_name = ["@0:0"], device_tag = "gpu", hierarchy = [1], is_dynamic = false, nd_sbp = ["B"], op_name = "_GraphToRun_0_output.0.0_2", output_lbns = ["_GraphToRun_0_output.0.0_2/out"], scope_symbol_id = 4611686018427420671 : i64, shape = [2 : si64, 3 : si64, 4 : si64, 5 : si64]} : (tensor<2x3x4x5xf32>) -> tensor<2x3x4x5xf32>

oneflow.return %output_1 : tensor<2x3x4x5xf32>

}

}

You can see that the FuseBiasAddDropout Pass implemented above has successfully completed the integration of BiasAdd and Dropout Op.

0x5. summary

This article introduces the practice of the Pass mechanism of MLIR. Many commonly used fuse OPS have been implemented in OneFlow dialog, and MLIR is used to do Pattern Match and Rewrite, so as to speed up the calculation diagram and save video memory without requiring users to modify any code. If you are interested in this part, you can check it in our OneFlow warehouse.

0x6. data

- https://github.com/Oneflow-Inc/oneflow

- https://mlir.llvm.org/docs/DeclarativeRewrites/