Although I wrote a fair amount of Python and Node code a few years ago, when I actually need it again I often can't remember any of it and end up spending time copying from the web all over again.

PS: after all, I'm not the kind of person who can write this off the top of my head.

So, since I need it again this time, I'm writing it down so that next time I can simply copy or clone it.

The content may be dry, but it has been tested repeatedly. In theory, you can copy and paste it straight through.

The text begins here:

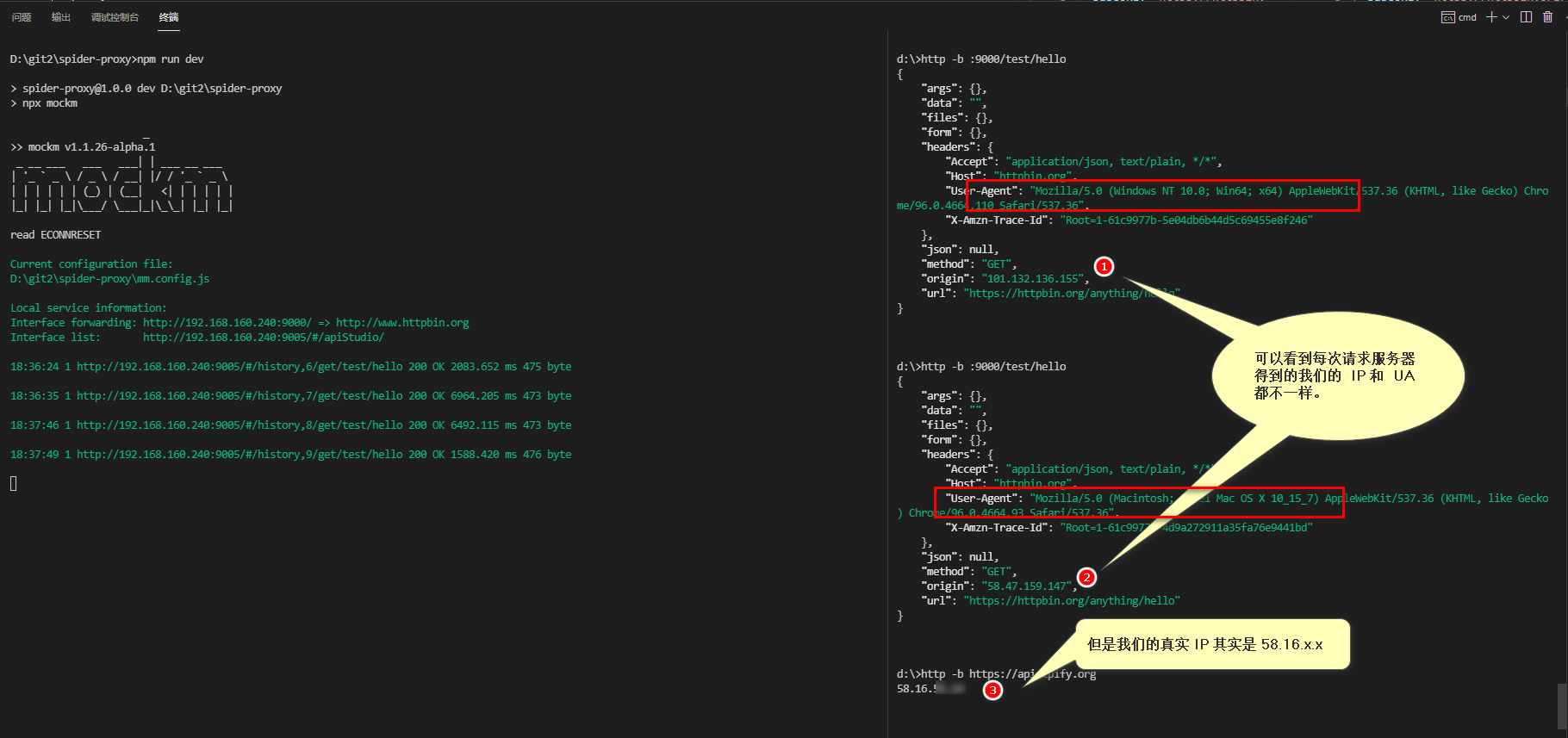

spider-proxy

This project is a Node demo that calls an API through a randomly chosen proxy with a random User-Agent (UA), and it also shows how to build a proxy pool and related features.

    npm i
    npm run dev

- Visit https://api.ipify.org to view your IP

- Visit http://127.0.0.1:9000/test/xxx to test the proxy

How to implement proxy

axios

At present, there is a bug in axios that makes its built-in proxy option ineffective. Fortunately, this can be worked around with a third-party library such as https-proxy-agent or node-tunnel.

You can also fetch a random proxy from a public demo pool: http://demo.spiderpy.cn/get/?type=https
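To use the pool from code, its JSON answer has to be turned into a proxy URL. The following is a minimal sketch, assuming the endpoint returns a JSON object whose `proxy` field has the form `host:port` (the demo server may answer differently) and assuming Node 18+ for the global `fetch`. `toProxyUrl` and `fetchRandomProxy` are hypothetical helper names, not part of any library.

```javascript
// Convert one pool entry into a URL that a proxy agent can consume.
// Assumption: the entry looks like { proxy: '1.2.3.4:8080', ... }.
function toProxyUrl(entry) {
  if (!entry || typeof entry.proxy !== 'string' || !entry.proxy.includes(':')) {
    throw new Error('Unexpected proxy pool response: ' + JSON.stringify(entry));
  }
  return `http://${entry.proxy}`;
}

// Ask the pool for one random HTTPS-capable proxy (needs Node 18+ for fetch).
async function fetchRandomProxy(poolUrl = 'http://demo.spiderpy.cn/get/?type=https') {
  const res = await fetch(poolUrl);
  if (!res.ok) throw new Error(`Proxy pool responded with HTTP ${res.status}`);
  return toProxyUrl(await res.json());
}
```

The resulting URL could take the place of a hard-coded proxy address such as `http://127.0.0.1:1080` when the proxy agent is created.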

    const axios = require('axios').default
    // Named export; available in recent versions of https-proxy-agent
    const { HttpsProxyAgent } = require('https-proxy-agent')

    const http = axios.create({
      baseURL: 'https://httpbin.org/',
      proxy: false, // turn off the built-in proxy option; the agent below handles it
    })

    // Many requests have to go through a proxy, so apply it in an interceptor
    http.interceptors.request.use(async (config) => {
      // Here you can asynchronously request the latest proxy server configuration through an API.
      // 127.0.0.1:1080 is the IP and port of your proxy server. I run one locally, so I test against that.
      config.httpsAgent = new HttpsProxyAgent('http://127.0.0.1:1080')
      return config
    }, (err) => Promise.reject(err))

    http.interceptors.response.use((res) => res.data, (err) => Promise.reject(err))

    ;(async () => {
      const data = await http.get('/ip').catch((err) => console.log(String(err)))
      // If the IP address of your proxy is returned here, the proxy has been applied successfully
      console.log('data', data)
    })()

How to implement random UA

The User-Agent header identifies the client browser making the request. We need to pick common ones so that our bots blend better into the stream of human visitors, hahaha!

Some commonly used UAs look like this:

    const userAgents = [
      'Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.8.0.12) Gecko/20070731 Ubuntu/dapper-security Firefox/1.5.0.12',
      'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0; Acoo Browser; SLCC1; .NET CLR 2.0.50727; Media Center PC 5.0; .NET CLR 3.0.04506)',
      'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11',
      'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_3) AppleWebKit/535.20 (KHTML, like Gecko) Chrome/19.0.1036.7 Safari/535.20',
      'Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.8) Gecko Fedora/1.9.0.8-1.fc10 Kazehakase/0.5.6',
      'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.71 Safari/537.1 LBBROWSER',
      'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 2.0.50727; Media Center PC 6.0) ,Lynx/2.8.5rel.1 libwww-FM/2.14 SSL-MM/1.4.1 GNUTLS/1.2.9',
      'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)',
      'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E; QQBrowser/7.0.3698.400)',
      'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 732; .NET4.0C; .NET4.0E)',
      'Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:2.0b13pre) Gecko/20110307 Firefox/4.0b13pre',
      'Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52',
      'Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.8.0.12) Gecko/20070731 Ubuntu/dapper-security Firefox/1.5.0.12',
      'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E; LBBROWSER)',
      'Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.8) Gecko Fedora/1.9.0.8-1.fc10 Kazehakase/0.5.6',
      'Mozilla/5.0 (X11; U; Linux; en-US) AppleWebKit/527+ (KHTML, like Gecko, Safari/419.3) Arora/0.6',
      'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E; QQBrowser/7.0.3698.400)',
      'Opera/9.25 (Windows NT 5.1; U; en), Lynx/2.8.5rel.1 libwww-FM/2.14 SSL-MM/1.4.1 GNUTLS/1.2.9',
      'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36'
    ];

    module.exports = userAgents;

Then select one at random:

    // The list is exported with module.exports, so load it with require
    const userAgents = require('../src/userAgent');
    const userAgent = userAgents[Math.floor(Math.random() * userAgents.length)];
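The random pick still has to end up in the request headers. Here is a small sketch of that step, assuming the request config is a plain object with an optional `headers` field; `pickRandom` and `withRandomUserAgent` are hypothetical helper names, and the `rng` parameter exists only so the choice can be made deterministic in tests.

```javascript
// Pick one element uniformly at random; rng is injectable for testing.
function pickRandom(list, rng = Math.random) {
  if (!Array.isArray(list) || list.length === 0) {
    throw new Error('pickRandom needs a non-empty array');
  }
  return list[Math.floor(rng() * list.length)];
}

// Return a copy of a request config with a random User-Agent header set.
// The original config object is left untouched.
function withRandomUserAgent(config, userAgents, rng = Math.random) {
  return {
    ...config,
    headers: { ...(config.headers || {}), 'User-Agent': pickRandom(userAgents, rng) },
  };
}
```

With axios, the equivalent step inside a request interceptor is to assign `config.headers['User-Agent']` directly before returning `config`.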

That feels perfect, but in fact copying and pasting a list and writing the random pick by hand is tedious!

We can collapse the UA list and the random pick into one line:

(new (require('user-agents'))).data.userAgent

That looks much nicer.

user-agents is a JavaScript package that generates random user agents based on how frequently they are used in the wild. A new version of the package is released automatically every day, so the data is always up to date. The generated data includes hard-to-find browser fingerprint attributes, and powerful filtering lets you restrict the generated user agents to exactly what you need.
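Picking user agents "based on how frequently they are used" amounts to weighted random selection. The sketch below illustrates only that idea; it is not how the user-agents package is implemented, `pickWeighted` is a hypothetical name, and the `weight` values are made-up numbers rather than market data.

```javascript
// Pick an item with probability proportional to its weight.
function pickWeighted(items, rng = Math.random) {
  const total = items.reduce((sum, item) => sum + item.weight, 0);
  if (!(total > 0)) throw new Error('Total weight must be positive');
  let threshold = rng() * total;
  for (const item of items) {
    threshold -= item.weight;
    if (threshold < 0) return item;
  }
  return items[items.length - 1]; // guard against floating-point drift
}

// Hypothetical weights, for illustration only.
const weightedAgents = [
  { userAgent: 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/61.0.3163.100 Safari/537.36', weight: 8 },
  { userAgent: 'Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52', weight: 2 },
];

console.log(pickWeighted(weightedAgents).userAgent);
```

With these weights the first entry is returned roughly four times as often as the second.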

Implement the proxy pool

The crawler proxy IP pool project periodically collects free proxies published on the Internet, verifies them on a schedule and stores the ones that work so that the pool stays usable, and exposes both an API and a CLI. You can also add your own proxy sources to improve the quality and quantity of the IPs in the pool.
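The collect, verify, and store cycle described above can be sketched in a few lines. This is an in-memory illustration, not the code of the proxy pool project: `ProxyPool`, `collect`, `verify`, `getRandom`, and the injected `check` function are hypothetical names, and a real pool would persist to a database such as Redis and run on a schedule.

```javascript
class ProxyPool {
  constructor() {
    this.proxies = new Set(); // stored as 'host:port' strings
  }

  // Collect: add candidate proxies from any source; the Set drops duplicates.
  collect(candidates) {
    for (const proxy of candidates) this.proxies.add(proxy);
  }

  // Verify: run the injected check on every proxy and drop those that fail.
  // A check that throws or rejects counts as a failure.
  async verify(check) {
    const entries = [...this.proxies];
    const results = await Promise.all(
      entries.map(async (proxy) => {
        try {
          return Boolean(await check(proxy));
        } catch {
          return false;
        }
      })
    );
    entries.forEach((proxy, i) => {
      if (!results[i]) this.proxies.delete(proxy);
    });
  }

  // Serve: return a random proxy, or null when the pool is empty.
  getRandom(rng = Math.random) {
    const entries = [...this.proxies];
    return entries.length ? entries[Math.floor(rng() * entries.length)] : null;
  }
}
```

In practice `check` would send a test request through the proxy and resolve to `true` only when it succeeds; here it is left to the caller so the sketch stays free of network code.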

This proxy pool uses https://github.com/jhao104/proxy_pool.

Install docker

    uname -r
    yum update
    yum remove docker docker-common docker-selinux docker-engine
    yum install -y yum-utils device-mapper-persistent-data lvm2
    yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
    yum -y install docker-ce-20.10.12-3.el7
    systemctl start docker
    systemctl enable docker
    docker version

Install redis

    yum -y install epel-release-7-14
    yum -y install redis-3.2.12-2.el7
    systemctl start redis

    # Configure redis
    # Change the password from foobared to jdbjdkojbk
    sed -i 's/# requirepass foobared/requirepass jdbjdkojbk/' /etc/redis.conf
    # Change the port number
    sed -i 's/^port 6379/port 6389/' /etc/redis.conf
    # Allow connections from other machines
    sed -i 's/^bind 127.0.0.1/# bind 127.0.0.1/' /etc/redis.conf

    # Restart redis
    systemctl restart redis
    # View the process
    ps -ef | grep redis
    # Test the connection
    redis-cli -h 127.0.0.1 -p 6389 -a jdbjdkojbk

Install the proxy pool

    docker pull jhao104/proxy_pool:2.4.0
    # Be careful: DB_CONN must match the Redis password, host, and port configured above
    docker run -itd --env DB_CONN=redis://:jdbjdkojbk@10.0.8.10:6389/0 -p 5010:5010 jhao104/proxy_pool:2.4.0
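DB_CONN packs the Redis password, host, port, and database index into a single URL, so a typo is easy to miss. A small sketch that splits the string back into its parts with Node's built-in `URL` class before the value is passed to the container; `describeDbConn` is a hypothetical helper name.

```javascript
// Split a redis://:password@host:port/db connection string into its parts.
function describeDbConn(dbConn) {
  const url = new URL(dbConn);
  return {
    scheme: url.protocol.replace(/:$/, ''),
    password: url.password, // percent-encoded if it contains special characters
    host: url.hostname,
    port: Number(url.port),
    db: Number(url.pathname.slice(1) || 0),
  };
}

console.log(describeDbConn('redis://:jdbjdkojbk@10.0.8.10:6389/0'));
```

Comparing the printed fields with the values in /etc/redis.conf is a quick way to catch a wrong port or password before starting the container.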

Other

I have uploaded the complete code to GitHub; feel free to drop by and leave a star~

Uninstall redis

    systemctl stop redis
    yum remove redis
    rm -rf /usr/local/bin/redis*
    rm -rf /etc/redis.conf