Generally speaking, when we crawl the whole source code of the web page, we need to analyze the web page.

There are three normal analytical methods

① : regular match resolution

② : BeatuifulSoup parsing

③ : lxml parsing

Regular match resolution:

In the previous study, we learned the basic usage of reptiles, such as / s,/d,/w, *, +,? However, when parsing the crawled web page, it is not enough to only use these basic usages. Therefore, we need to understand the classical functions of regular matching in Python.

re.match

runoob explanation: re Match attempts to match a pattern from the starting position of the string. If the matching is not successful, match will return none. If the matching is successful, re Match will return a matching object, otherwise it is null To view the objects returned by matching, use the method of the group function.

Syntax:

re.match(pattern,string,flags=0)

Explanation: pattern is the regular expression for matching, string is the character to be matched, and flags is the flag bit, which is used to match whether it is case sensitive, multi line matching, etc. The specific usage is shown in the following table:

| Modifier | describe |

|---|---|

| re.I | Make matching case insensitive |

| re.M | Multi line matching, affecting ^ and$ |

| re.S | Make Match all characters including line feed (under normal circumstances, if line feed is not matched, this behavior is very dangerous and easy to be bypassed by hackers) |

| re.U | This flag affects \ w,\W,\b,\B when characters are parsed according to the Unicode character set |

Code case:

#!/usr/bin/python

#coding:utf-8

import re

line="Anyone who dreams there gets"

match=re.match(r'(.*) dreams (.*?).*',line,re.M|re.I)

#match1=re.match(r'(.*) Dreams (.*?)',line,re.M)#Case sensitive, matching will fail

match2=re.match(r'(.*) Dreams (.*?).*',line,re.M|re.I)#Non greedy model

match3=re.match(r'(.*) Dreams (.*).*',line, re.M|re.I)#Greedy model

print(match.group())

#print(match1.group())

print(match2.group())

print("match 0:",match.group(0))#Anyone who dreams there gets

print("match 1:",match.group(1))#Anyone who

print("match 2:",match.group(2))#' '

print("match2 0:",match2.group(0))#Anyone who dreams there gets

print("match2 1:",match2.group(1))#Anyone who

print("match2 2:",match2.group(2))#' '

print("match3 0:",match3.group(0))#Anyone who dreams there gets

print("match3 1:",match3.group(1))#Anyone who

print("match3 2:",match3.group(2))#there gets

You can see (. *?) The non greedy pattern will match the space and put it into group(2). By default, group()==group(0) matches the whole sentence, and the groups() function will put the matching result into a list. For the results returned by matching, such as match, we can use start(),end(),span() to view the start and end positions.

The R of the regular matching string r '(. *)' represents raw string and pure matching string. If it is used, the backslash in the backquote will not be specially processed. For example, the newline \ n will not be escaped. Therefore, we can understand the escape in the following regular matching:

'\ \' will be escaped without adding r, because the string has undergone two transitions. One is string escape, which becomes' \ 'and then regular escape.

If you add r, it will become '\' because matching with a pure string cancels the string escape. This is the escape process in regular matching.

re.search

re.search and re Match differs in re Search scans the entire string and returns the first result. In addition, re Match doesn't make much difference. You can also use m.span(),m.start(),m.end() to view the starting position and so on

Goal: crawl the titles of all python videos in the first bilibili search bar

code:

#!/usr/bin/python

#coding=utf-8

import requests

import re

link="https://search.bilibili.com/all?keyword=python&from_source=nav_search&spm_id_from=333.851.b_696e7465726e6174696f6e616c486561646572.9"

headers={"User-Agent":'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:85.0) Gecko/20100101 Firefox/85.0'}

r=requests.get(link,headers=headers)

text=r.text

title_list=re.findall('<a title=(.*?) href=.*?>',text)

for i in title_list:

print(i)

Key content: title_list=re.findall(’ ',text)

the second.? Indicates the result of matching conditions. The first (.?) Indicates to extract the results that meet the conditions

In this way, we can extract the title

BeautifulSoup parsing

Beautiful soup can extract data from HTML or XML, which is similar to a toolbox. When using beautiful soup, you can use some parsers to make the speed faster. At present, the better Lxml HTML parser is used as follows:

soup=BeautifulSoup(r.text,"lxml")

The essence of beautiful soup is a complex tree structure. There are generally three methods to extract its objects:

① : traverse the document tree, which is similar to the file directory of Linux. Since the tree structure must be from top to bottom, the first node must be the header. The specific method is as follows:

#To get a label that starts with < H1 >, you can use soup.header.h1 #Content similar to < H1 id = "name" > Culin < / H1 > will be returned #If you want to get all the child nodes of the div, you can use soup.header.div.contents #If you want to get all the child nodes of the div, you can use soup.header.div.children #If you want to get the descendant nodes of div, you can use soup.header.div.descendants #If you want to get the parent node of node a, you can use soup.header.div.a.parents

② : search document tree:

Traversing the document tree has great limitations. Generally, it is not recommended. It is more to search the document tree. The use method is very convenient. The essence lies in find and find_all function, for example, we need to crawl the title of the blog post:

#!/usr/bin/python

#coding:utf-8

import requestss

from bs4 import BeautifulSoup

link="https://editor.csdn.net/md/?articleId=115454835/"

r=requests.get(link)

soup=BeautifulSoup(r.text,"lxml")

#Print the first title

first_title=soup.find("h1",class_="post-title").a.text.strip()

#Print all articles

all_title=soup.find_all("h1",class_="post-title")

for i in range(len(all_titile)):

print("No ",i," is ",all_title[i].a.text.strip())

③ : CSS selector:

CSS can be used as a document tree shrew to extract data, or it can be searched according to the method of searching the document tree. However, I personally think the CSS selector is not as easy to use as selenium, and it is very inconvenient to use, so I won't repeat it

In addition, the lxml parser focuses on xpath, which is also well reflected in selenium. For the choice of xpath, it is more convenient for us to directly check xpath in web page inspection.

Project practice

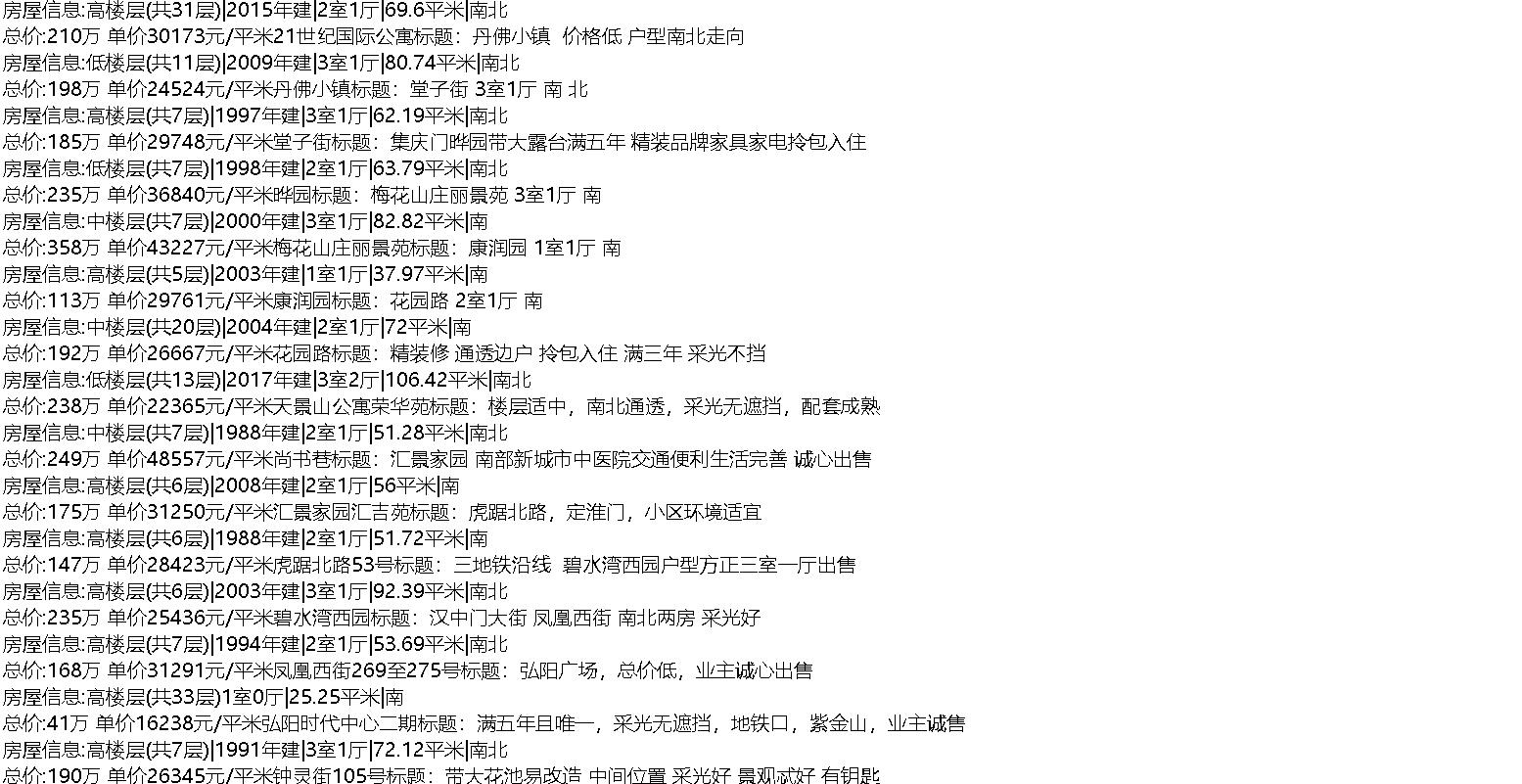

Crawling shell website, Nanjing housing information, total house price, average house price, location

#coding:utf-8

import requests

import re

from bs4 import BeautifulSoup

link="https://nj.ke.com/ershoufang/pg"

headers={"User-Agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:86.0) Gecko/20100101 Firefox/86.0","Host":

"nj.ke.com"}

for i in range(1,20):

link_=link+str(i)+'/'

r=requests.get(link_,headers=headers)

soup=BeautifulSoup(r.text,"html.parser")

houst_list=soup.find_all('li',class_='clear')

for house in houst_list:

name=house.find('div',class_='title').a.text.strip()+'\n'

houst_info=house.find('div',class_='houseInfo').text.strip()

houst_info=re.sub('\s+','',houst_info)+'\n'

total_money=str(house.find('div',class_='totalPrice').span.text.strip())+'ten thousand'

aver_money=str(house.find('div',class_='unitPrice').span.text.strip())

adderess=house.find('div',class_='positionInfo').a.text.strip()

text_="title:"+name+"House information:"+houst_info+"Total price:"+total_money+' '+aver_money+adderess

with open ('haha.txt',"a+") as f:

f.write(text_)

The result returns a haha Txt, as follows: