This note is excerpted from https://www.cnblogs.com/zhili/archive/2012/07/21/ThreadsSynchronous.html and records my learning process for future reference.

1. Overview of thread synchronization

Creating multiple threads lets an application respond better. However, with multiple threads, several of them may access a shared resource at the same time, and that is when thread synchronization is needed. Thread synchronization prevents data (shared resources) from being corrupted.

Generally speaking, an application should be designed to avoid thread synchronization as much as possible, because it brings several problems:

1.1. It is cumbersome to use. Data that multiple threads access at the same time must be surrounded by extra code that acquires and releases a synchronization lock. If any code block forgets to acquire the lock, the data may be corrupted.

1.2. Thread synchronization hurts performance.

1.2.1. Acquiring and releasing a lock takes time. Deciding which thread gets the lock first requires coordination between CPUs, and this extra work costs performance.

1.2.2. Thread synchronization lets only one thread access a resource at a time, so the other threads block. Blocked threads can cause more threads to be created (for example, by the thread pool), and the CPU then has to schedule more threads, which further hurts performance.

2. Using thread synchronization

2.1 Effect of using locks on performance

Section 1.2.1 described how locks affect performance. The following code illustrates this by timing the same number of increments with and without synchronization (the synchronized loop uses the atomic Interlocked.Increment):

```csharp
using System;
using System.Diagnostics;
using System.Threading;

class Program
{
    static void Main(string[] args)
    {
        #region Thread synchronization: time comparison with and without a lock
        int x = 0;
        // 5 million iterations
        const int iterationNumber = 5000000;

        // No lock
        Stopwatch sw = Stopwatch.StartNew();
        for (int i = 0; i < iterationNumber; i++)
        {
            x++;
        }
        Console.WriteLine("Without lock, total time: {0}ms.", sw.ElapsedMilliseconds);

        sw.Restart();
        // Atomic increment with Interlocked
        for (int i = 0; i < iterationNumber; i++)
        {
            Interlocked.Increment(ref x);
        }
        Console.WriteLine("With Interlocked, total time: {0}ms.", sw.ElapsedMilliseconds);
        Console.Read();
        #endregion
    }
}
```

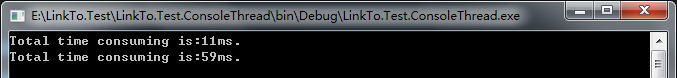

The operation results are as follows:
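The loop above runs on a single thread, so no thread ever waits for another. The sketch below extends the comparison to several threads incrementing one shared counter, once with the lock keyword and once with Interlocked.Increment. The class and method names are hypothetical, and timings vary widely by machine and runtime.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Hypothetical benchmark: several threads increment one shared counter.
public static class ContendedCounterBenchmark
{
    private static readonly object gate = new object();
    private static long counter;

    // Starts threadCount threads that each call `increment` perThread times,
    // waits for all of them, and returns the final counter value.
    private static long Run(int threadCount, int perThread, Action increment, out long elapsedMs)
    {
        counter = 0;
        var sw = Stopwatch.StartNew();
        var threads = new Thread[threadCount];
        for (int t = 0; t < threadCount; t++)
        {
            threads[t] = new Thread(() =>
            {
                for (int i = 0; i < perThread; i++) increment();
            });
            threads[t].Start();
        }
        foreach (Thread th in threads) th.Join();
        elapsedMs = sw.ElapsedMilliseconds;
        return Interlocked.Read(ref counter);
    }

    // Every increment takes and releases the gate lock.
    public static long RunWithLock(int threadCount, int perThread, out long elapsedMs) =>
        Run(threadCount, perThread, () => { lock (gate) { counter++; } }, out elapsedMs);

    // Every increment is a single atomic Interlocked operation.
    public static long RunWithInterlocked(int threadCount, int perThread, out long elapsedMs) =>
        Run(threadCount, perThread, () => Interlocked.Increment(ref counter), out elapsedMs);

    public static void Main()
    {
        const int threads = 4, perThread = 1_000_000;
        long n1 = RunWithLock(threads, perThread, out long ms1);
        Console.WriteLine($"lock:        {n1} in {ms1} ms");
        long n2 = RunWithInterlocked(threads, perThread, out long ms2);
        Console.WriteLine($"Interlocked: {n2} in {ms2} ms");
    }
}
```

Both versions should report the same total. Only the elapsed time differs, so treat the printed milliseconds as indicative rather than as a benchmark result.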

2.2 Interlocked for thread synchronization

Interlocked provides atomic operations on variables shared by multiple threads. When several threads increment the same integer, the increments must be synchronized.

The following code shows the difference between incrementing without and with a lock:

Without a lock:

```csharp
using System;
using System.Threading;

class Program
{
    // Shared resource
    public static int number = 0;

    static void Main(string[] args)
    {
        #region Thread synchronization: using Interlocked
        // Without a lock
        for (int i = 0; i < 10; i++)
        {
            Thread thread = new Thread(Add);
            thread.Start();
        }
        Console.Read();
        #endregion
    }

    /// <summary>
    /// Increments without a lock: ++number is a read-modify-write, so two threads
    /// can read the same old value and one update is lost.
    /// </summary>
    public static void Add()
    {
        Thread.Sleep(1000);
        Console.WriteLine("The current value of number is:{0}", ++number);
    }
}
```

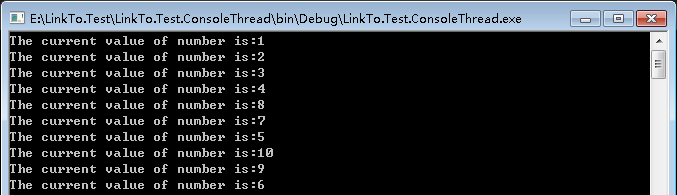

The operation results are as follows:

The results may not be as expected. ++number is not atomic, so two threads can read the same old value and the same number can be printed twice. To solve this, the increment must be made atomic, for example with Interlocked.Increment.
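Interlocked.Increment can also be applied directly to the shared counter, as in the minimal sketch below (AtomicCounterDemo and Run are hypothetical names). Because it returns the incremented value, every thread gets a distinct number and no increment is lost. The lines may still be printed out of order.

```csharp
using System;
using System.Threading;

// Minimal sketch: several threads atomically increment one shared counter.
public static class AtomicCounterDemo
{
    // Shared resource
    private static int number = 0;

    // Starts threadCount threads; each increments `number` exactly once and
    // prints the value it produced. Returns the final total.
    public static int Run(int threadCount)
    {
        number = 0;
        var threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++)
        {
            threads[i] = new Thread(() =>
            {
                // Increment returns the new value, so no two threads see the same one.
                int value = Interlocked.Increment(ref number);
                Console.WriteLine("The current value of number is:{0}", value);
            });
            threads[i].Start();
        }
        foreach (Thread t in threads) t.Join();
        return number;
    }

    public static void Main()
    {
        Console.WriteLine("Final value: {0}", Run(10));
    }
}
```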

The locked version below works like a bank's queue-number system. You may go to the counter only when the counter is free and your number is the one being called. Otherwise you keep waiting.

With a lock:

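```csharp
using System;
using System.Threading;

class Program
{
    // Shared resource
    public static int number = 0;
    // 0 = counter is free, non-zero = a thread is at the counter
    public static long signal = 0;

    static void Main(string[] args)
    {
        #region Thread synchronization: using Interlocked
        // With a lock: each thread receives its queue number i as a parameter
        for (int i = 0; i < 10; i++)
        {
            Thread thread = new Thread(new ParameterizedThreadStart(AddWithInterlocked));
            thread.Start(i);
        }
        Console.Read();
        #endregion
    }

    /// <summary>
    /// Increments under an Interlocked-based lock
    /// </summary>
    public static void AddWithInterlocked(object parameter)
    {
        // Wait until the counter is free and this thread's number is called.
        // Reading signal and then incrementing it is not one atomic step; it is safe
        // here only because at most one thread's parameter matches number at a time.
        while (Interlocked.Read(ref signal) != 0 || (int)parameter != number)
        {
            Thread.Sleep(100);
        }
        Interlocked.Increment(ref signal);
        Console.WriteLine("The current value of number is:{0}", ++number);
        Interlocked.Decrement(ref signal);
    }
}
```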

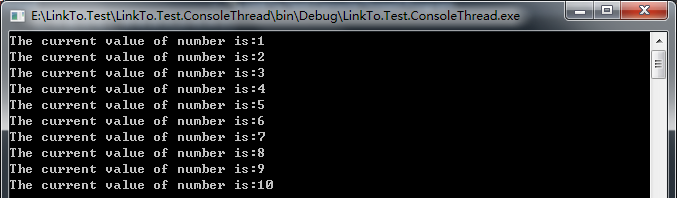

The operation results are as follows:
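The bank analogy corresponds to what is usually called a ticket lock. The sketch below is an illustration under assumptions, not a .NET type. A hypothetical TicketLock hands out numbers with Interlocked.Increment. It lets a thread in when a "now serving" counter reaches that thread's number. Unlike the example above, numbers are assigned in arrival order rather than passed in as thread parameters.

```csharp
using System;
using System.Threading;

// Hypothetical ticket lock: the "bank queue number" idea built on Interlocked.
public sealed class TicketLock
{
    private int nextTicket = 0;   // next number handed out at the door
    private int nowServing = 0;   // number currently allowed to the counter

    public void Enter()
    {
        // Take a number atomically; Increment returns the new value, so subtract 1.
        int myTicket = Interlocked.Increment(ref nextTicket) - 1;
        // Wait until our number is called.
        while (Volatile.Read(ref nowServing) != myTicket)
        {
            Thread.Yield();
        }
    }

    public void Exit()
    {
        // Only the holder calls Exit, so there is no competing writer; Interlocked
        // also acts as a full memory barrier so spinning threads see the update.
        Interlocked.Increment(ref nowServing);
    }
}

public static class TicketLockDemo
{
    private static readonly TicketLock ticketLock = new TicketLock();
    private static int number = 0;

    // Each of threadCount threads increments `number` once inside the ticket lock.
    public static int Run(int threadCount)
    {
        number = 0;
        var threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++)
        {
            threads[i] = new Thread(() =>
            {
                ticketLock.Enter();
                try
                {
                    Console.WriteLine("The current value of number is:{0}", ++number);
                }
                finally
                {
                    ticketLock.Exit();
                }
            });
            threads[i].Start();
        }
        foreach (Thread t in threads) t.Join();
        return number;
    }

    public static void Main() => Console.WriteLine("Final value: {0}", Run(10));
}
```

Spinning with Thread.Yield only makes sense for very short critical sections. For longer ones, lock or Monitor is usually the better choice.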

2.3 Monitor for thread synchronization

The same case can also be synchronized with the Monitor.Enter and Monitor.Exit methods.

C# provides a simpler syntax through the lock keyword (lock can be understood as syntactic sugar for Monitor.Enter and Monitor.Exit).

```csharp
using System;
using System.Threading;

class Program
{
    // Shared resource
    public static int number = 0;
    private static readonly object addLock = new object();

    static void Main(string[] args)
    {
        #region Thread synchronization: using Monitor
        // Without syntactic sugar
        for (int i = 0; i < 10; i++)
        {
            Thread thread = new Thread(AddWithMonitor);
            thread.Start();
        }
        Console.Read();

        // With syntactic sugar (lock keyword)
        //for (int i = 0; i < 10; i++)
        //{
        //    Thread thread = new Thread(AddWithLock);
        //    thread.Start();
        //}
        //Console.Read();
        #endregion
    }

    /// <summary>
    /// Increments under a Monitor lock
    /// </summary>
    public static void AddWithMonitor()
    {
        Thread.Sleep(100);
        Monitor.Enter(addLock);
        try
        {
            Console.WriteLine("The current value of number is:{0}", ++number);
        }
        finally
        {
            // Release the lock even if the body throws
            Monitor.Exit(addLock);
        }
    }

    /// <summary>
    /// Increments under the lock keyword
    /// </summary>
    public static void AddWithLock()
    {
        Thread.Sleep(100);
        lock (addLock)
        {
            Console.WriteLine("The current value of number is:{0}", ++number);
        }
    }
}
```

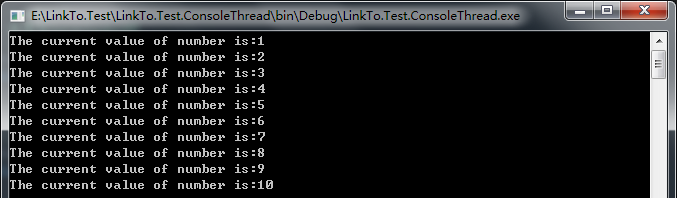

The operation results are as follows:
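The sketch below makes the syntactic-sugar remark concrete. It shows roughly what the C# compiler (C# 4 and later) generates for a lock block on an ordinary object. The class and method names are hypothetical. The key point is that Monitor.Exit runs in a finally block, so the lock is released even if the body throws.

```csharp
using System;
using System.Threading;

public static class LockExpansionDemo
{
    private static readonly object addLock = new object();
    private static int number = 0;

    // Written with the lock keyword.
    public static int AddWithLock()
    {
        lock (addLock)
        {
            return ++number;
        }
    }

    // Approximately what the compiler emits for the lock statement above:
    // Enter with a lockTaken flag, and Exit in a finally block.
    public static int AddWithExpandedLock()
    {
        bool lockTaken = false;
        try
        {
            Monitor.Enter(addLock, ref lockTaken);
            return ++number;
        }
        finally
        {
            if (lockTaken)
            {
                Monitor.Exit(addLock);
            }
        }
    }

    public static void Main()
    {
        Console.WriteLine(AddWithLock());          // 1
        Console.WriteLine(AddWithExpandedLock());  // 2
    }
}
```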

Using the addLock object above (called the obj lock below), let's also look at how the Monitor class works.

For the lock object obj, Monitor maintains two thread queues, R and W, and a reference T:

(1) T is a reference to the thread that currently holds the obj lock.

(2) R is the ready queue.

The threads on the R queue are ready to compete for the obj lock.

A thread enters the R queue directly by calling Monitor.Enter(obj) or Monitor.TryEnter(obj), and releases an obj lock it holds by calling Monitor.Exit(obj) or Monitor.Wait(obj).

When a thread releases the obj lock, the threads on the R queue compete for it, and the thread that obtains it becomes T.

(3) W is the waiting queue.

Threads on the W queue are not scheduled by the OS for execution; that is, a thread on the waiting queue cannot obtain the obj lock.

A thread enters the W queue by calling Monitor.Wait(obj). Calling Monitor.Pulse(obj) moves the first waiting thread in the W queue to the R queue, and Monitor.PulseAll(obj) moves all waiting threads there. The moved threads can then be scheduled by the OS again, that is, they can compete for the obj lock.

(4) Member methods of Monitor:

Monitor.Enter(obj)/Monitor.TryEnter(obj): the thread enters the R queue to wait for the obj lock.

Monitor.Exit(obj): the thread releases the obj lock (only the thread holding the obj lock may call Monitor.Exit(obj); otherwise a SynchronizationLockException is thrown).

Monitor.Wait(obj): the thread releases the obj lock it holds, enters the W queue, and blocks there until it is pulsed and reacquires the lock.

Monitor.Pulse(obj): moves the first waiting thread in the W queue to the R queue, giving it the opportunity to acquire the obj lock.

Monitor.PulseAll(obj): moves all waiting threads in the W queue to the R queue, giving them the opportunity to acquire the obj lock.
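Unlike Monitor.Enter, Monitor.TryEnter can give up. The hedged sketch below uses the TryEnter(object, int, ref bool) overload. In it, a helper thread holds the obj lock for a while. TryEnterDemo and its methods are hypothetical names.

```csharp
using System;
using System.Threading;

public static class TryEnterDemo
{
    private static readonly object obj = new object();

    // Tries to take the obj lock within timeoutMs; returns true if the work ran.
    public static bool TryDoWork(int timeoutMs)
    {
        bool lockTaken = false;
        try
        {
            // Gives up after the timeout instead of waiting forever like Enter.
            Monitor.TryEnter(obj, timeoutMs, ref lockTaken);
            if (!lockTaken)
            {
                return false;
            }
            Console.WriteLine("Thread {0} got the obj lock", Thread.CurrentThread.ManagedThreadId);
            return true;
        }
        finally
        {
            if (lockTaken)
            {
                Monitor.Exit(obj);
            }
        }
    }

    // A helper thread holds the obj lock for holdMs; meanwhile the calling
    // thread tries to acquire it within timeoutMs.
    public static bool TryWhileHeld(int holdMs, int timeoutMs)
    {
        using (var held = new ManualResetEventSlim(false))
        {
            var holder = new Thread(() =>
            {
                lock (obj)
                {
                    held.Set();
                    Thread.Sleep(holdMs);
                }
            });
            holder.Start();
            held.Wait();   // make sure the helper owns the lock first
            bool result = TryDoWork(timeoutMs);
            holder.Join();
            return result;
        }
    }

    public static void Main()
    {
        // The helper keeps the lock much longer than the timeout.
        Console.WriteLine(TryWhileHeld(holdMs: 1000, timeoutMs: 50));
        // The helper releases the lock well before the timeout.
        Console.WriteLine(TryWhileHeld(holdMs: 50, timeoutMs: 5000));
    }
}
```

When TryEnter returns with lockTaken still false, the thread does not hold the lock and must not call Monitor.Exit.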

The following code demonstrates the use of Monitor.Wait and Monitor.Pulse:

```csharp
using System;
using System.Threading;

class Program
{
    // Shared resource
    private static readonly object addLock = new object();

    static void Main(string[] args)
    {
        #region Thread synchronization: using Monitor.Wait and Monitor.Pulse
        for (int i = 0; i < 10; i++)
        {
            Thread thread = new Thread(MonitorWaitAndPulse);
            thread.Start();
        }
        Console.Read();
        #endregion
    }

    /// <summary>
    /// Wait and Pulse methods of Monitor
    /// </summary>
    public static void MonitorWaitAndPulse()
    {
        // Enter the ready queue and wait for the lock
        Monitor.Enter(addLock);
        // Come in and say hello
        Console.WriteLine("{0}: I'm here. I'm going out to do something for a while.", Thread.CurrentThread.ManagedThreadId);
        // Move the first thread in the waiting queue to the ready queue
        Monitor.Pulse(addLock);
        // Temporarily release the lock and enter the waiting queue.
        // The last thread to get here is never pulsed, so it never prints "I'm back!".
        Monitor.Wait(addLock);
        // Go out and do the business
        Thread.Sleep(1000);
        // Come back and say hello
        Console.WriteLine("{0}: I'm back!", Thread.CurrentThread.ManagedThreadId);
        // Release the lock
        Monitor.Exit(addLock);
    }
}
```

The output is as follows:
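In practice, Wait and Pulse are used around a condition that is re-checked in a loop, because being pulsed only means "try again". The hedged sketch below builds a bounded producer/consumer queue this way. BlockingBuffer is a hypothetical class, not part of .NET.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical bounded queue built on Monitor.Wait / Monitor.PulseAll.
public sealed class BlockingBuffer<T>
{
    private readonly Queue<T> items = new Queue<T>();
    private readonly object obj = new object();
    private readonly int capacity;

    public BlockingBuffer(int capacity)
    {
        if (capacity <= 0) throw new ArgumentOutOfRangeException(nameof(capacity));
        this.capacity = capacity;
    }

    public void Put(T item)
    {
        lock (obj)
        {
            // Re-check in a loop: after waking, the buffer may be full again.
            while (items.Count == capacity)
            {
                Monitor.Wait(obj);   // release obj and move to the W queue
            }
            items.Enqueue(item);
            Monitor.PulseAll(obj);   // move waiting consumers to the R queue
        }
    }

    public T Take()
    {
        lock (obj)
        {
            while (items.Count == 0)
            {
                Monitor.Wait(obj);
            }
            T item = items.Dequeue();
            Monitor.PulseAll(obj);   // wake producers waiting for free space
            return item;
        }
    }
}

public static class BlockingBufferDemo
{
    // One producer puts 1..count, one consumer takes them all and sums them.
    public static int Run(int count, int capacity)
    {
        var buffer = new BlockingBuffer<int>(capacity);
        int sum = 0;
        var producer = new Thread(() =>
        {
            for (int i = 1; i <= count; i++) buffer.Put(i);
        });
        var consumer = new Thread(() =>
        {
            for (int i = 0; i < count; i++) sum += buffer.Take();
        });
        producer.Start();
        consumer.Start();
        producer.Join();
        consumer.Join();
        return sum;
    }

    public static void Main() => Console.WriteLine(Run(100, 5));   // 5050
}
```

The sketch uses PulseAll because producers and consumers wait on the same object. With several of each waiting, Pulse could wake a thread of the wrong kind.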

2.4 ReaderWriterLock for thread synchronization

If a shared resource is read many times, the locks implemented with the classes above still let only one thread access it at a time and block all the others. Since these threads only read, blocking them is unnecessary; they should be allowed to run concurrently.

In this case, the ReaderWriterLock class allows concurrent reads while keeping writes exclusive.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

class Program
{
    // Shared resource
    public static List<int> lists = new List<int>();
    public static ReaderWriterLock readerWriteLock = new ReaderWriterLock();

    static void Main(string[] args)
    {
        #region Thread synchronization: using ReaderWriterLock
        // Create one thread to write data
        Thread threadWrite = new Thread(Write);
        threadWrite.Start();
        // Create 10 threads to read data
        for (int i = 0; i < 10; i++)
        {
            Thread threadRead = new Thread(Read);
            threadRead.Start();
        }
        Console.Read();
        #endregion
    }

    /// <summary>
    /// Writing method
    /// </summary>
    public static void Write()
    {
        // Acquire the write lock; throws ApplicationException if not granted within 10 ms
        readerWriteLock.AcquireWriterLock(10);
        try
        {
            Random ran = new Random();
            int count = ran.Next(1, 10);
            lists.Add(count);
            Console.WriteLine("The data written is: " + count);
        }
        finally
        {
            // Release the write lock
            readerWriteLock.ReleaseWriterLock();
        }
    }

    /// <summary>
    /// Reading method
    /// </summary>
    public static void Read()
    {
        Thread.Sleep(100);
        // Acquire the read lock (same 10 ms timeout); many readers can hold it at once
        readerWriteLock.AcquireReaderLock(10);
        try
        {
            foreach (int item in lists)
            {
                // Output the data read
                Console.WriteLine(item);
            }
        }
        finally
        {
            // Release the read lock
            readerWriteLock.ReleaseReaderLock();
        }
    }
}
```
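Microsoft's documentation recommends ReaderWriterLockSlim rather than ReaderWriterLock for new development. The hedged sketch below applies the same read/write idea with it. SharedList and SlimReaderWriterDemo are hypothetical names. Each Enter call is paired with an Exit call in a finally block.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical wrapper: a list guarded by ReaderWriterLockSlim.
public sealed class SharedList : IDisposable
{
    private readonly List<int> items = new List<int>();
    private readonly ReaderWriterLockSlim rwLock = new ReaderWriterLockSlim();

    public void Add(int value)
    {
        rwLock.EnterWriteLock();      // exclusive: waits until no reader or writer holds it
        try { items.Add(value); }
        finally { rwLock.ExitWriteLock(); }
    }

    public int Sum()
    {
        rwLock.EnterReadLock();       // shared: several readers may hold it at once
        try
        {
            int sum = 0;
            foreach (int item in items) sum += item;
            return sum;
        }
        finally { rwLock.ExitReadLock(); }
    }

    public void Dispose() => rwLock.Dispose();
}

public static class SlimReaderWriterDemo
{
    // One writer adds 1..writes while readerCount readers read concurrently;
    // returns the sum once every thread has finished.
    public static int Run(int writes, int readerCount)
    {
        using (var shared = new SharedList())
        {
            var writer = new Thread(() =>
            {
                for (int i = 1; i <= writes; i++) shared.Add(i);
            });
            var readers = new Thread[readerCount];
            for (int r = 0; r < readerCount; r++)
            {
                readers[r] = new Thread(() => Console.WriteLine("Reader saw sum {0}", shared.Sum()));
            }
            writer.Start();
            foreach (Thread t in readers) t.Start();
            writer.Join();
            foreach (Thread t in readers) t.Join();
            return shared.Sum();
        }
    }

    // The last line printed is "Final sum: 55".
    public static void Main() => Console.WriteLine("Final sum: {0}", Run(10, 5));
}
```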