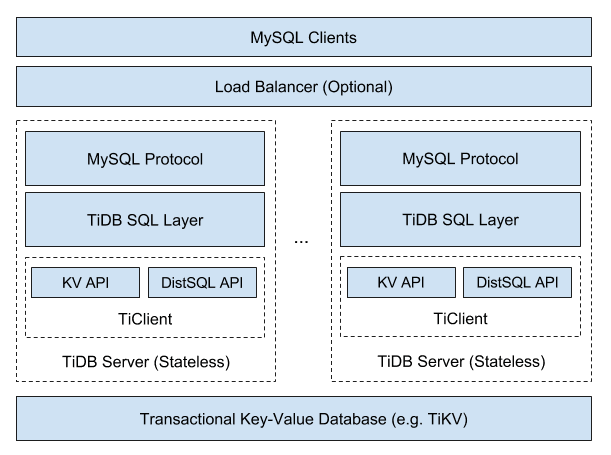

framework

The framework is described on the official website, so it is not analyzed here. This article mainly records how the source code processes SQL.

Entry point

In the main function, shown below, everything before the last few lines is parameter and environment setup. The server actually starts at terror.MustNil(svr.Run()).

From there the call chain passes through a series of checks and finally reaches the Run function in server/conn.go, which is where SQL processing really starts.

    help := flag.Bool("help", false, "show the usage")
    flag.Parse()
    if *help {
        flag.Usage()
        os.Exit(0)
    }
    if *version {
        fmt.Println(printer.GetTiDBInfo())
        os.Exit(0)
    }
    registerStores()
    registerMetrics()
    config.InitializeConfig(*configPath, *configCheck, *configStrict, overrideConfig)
    if config.GetGlobalConfig().OOMUseTmpStorage {
        config.GetGlobalConfig().UpdateTempStoragePath()
        err := disk.InitializeTempDir()
        terror.MustNil(err)
        checkTempStorageQuota()
    }
    // Enable failpoints in tikv/client-go if the test API is enabled.
    // It appears in the main function to be set before any use of client-go to prevent data race.
    if _, err := failpoint.Status("github.com/pingcap/tidb/server/enableTestAPI"); err == nil {
        warnMsg := "tikv/client-go failpoint is enabled, this should NOT happen in the production environment"
        logutil.BgLogger().Warn(warnMsg)
        tikv.EnableFailpoints()
    }
    setGlobalVars()
    setCPUAffinity()
    setupLog()
    setupTracing() // Should before createServer and after setup config.
    printInfo()
    setupBinlogClient()
    setupMetrics()
    storage, dom := createStoreAndDomain()
    svr := createServer(storage, dom)
    // Register error API is not thread-safe, the caller MUST NOT register errors after initialization.
    // To prevent misuse, set a flag to indicate that register new error will panic immediately.
    // For regression of issue like https://github.com/pingcap/tidb/issues/28190
    terror.RegisterFinish()
    exited := make(chan struct{})
    signal.SetupSignalHandler(func(graceful bool) {
        svr.Close()
        cleanup(svr, storage, dom, graceful)
        close(exited)
    })
    topsql.SetupTopSQL()
    terror.MustNil(svr.Run())
    <-exited
    syncLog()

The key lines of the Run function in server/conn.go are:

    data, err := cc.readPacket() // line 1063

    if err = cc.dispatch(ctx, data); err != nil { // line 1090

Here network packets are read in a loop while the connection waits for SQL input. Each packet that arrives is handed to dispatch for further processing. The official documentation still places this code at around line four or five hundred, so the source has clearly gone through many revisions since that document was written.
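The read-then-dispatch loop can be illustrated with a small, self-contained sketch. This is not TiDB's code: `serve`, `frame`, and `collect` are hypothetical helpers, and `readPacket` here only handles the basic MySQL packet framing (a 3-byte little-endian payload length plus a 1-byte sequence number), ignoring payloads split across several packets, compression, and timeouts.

```go
package main

import (
	"bytes"
	"errors"
	"fmt"
	"io"
)

// readPacket reads one MySQL-style packet: a 3-byte little-endian payload
// length, a 1-byte sequence number, then the payload itself.
func readPacket(r io.Reader) ([]byte, error) {
	var header [4]byte
	if _, err := io.ReadFull(r, header[:]); err != nil {
		return nil, err
	}
	length := int(header[0]) | int(header[1])<<8 | int(header[2])<<16
	payload := make([]byte, length)
	if _, err := io.ReadFull(r, payload); err != nil {
		return nil, err
	}
	return payload, nil
}

// serve loops until the input ends, handing every payload to dispatch.
func serve(r io.Reader, dispatch func([]byte) error) error {
	for {
		data, err := readPacket(r)
		if errors.Is(err, io.EOF) {
			return nil // the peer closed the stream between packets
		}
		if err != nil {
			return err
		}
		if err := dispatch(data); err != nil {
			return err
		}
	}
}

// frame wraps a payload in the 4-byte header described above.
func frame(seq byte, payload []byte) []byte {
	n := len(payload)
	return append([]byte{byte(n), byte(n >> 8), byte(n >> 16), seq}, payload...)
}

// collect runs serve over an in-memory byte stream and gathers the payloads.
func collect(raw []byte) ([][]byte, error) {
	var got [][]byte
	err := serve(bytes.NewReader(raw), func(data []byte) error {
		got = append(got, data)
		return nil
	})
	return got, err
}

func main() {
	raw := append(frame(0, []byte("\x03SELECT 1")), frame(0, []byte("\x03SELECT 2"))...)
	payloads, err := collect(raw)
	for _, p := range payloads {
		fmt.Printf("cmd=%d sql=%q\n", p[0], p[1:])
	}
	fmt.Println("loop ended, err =", err)
}
```

The loop body has the same shape as the two lines quoted from Run: read one packet, then pass its payload to a dispatcher and stop on the first error.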

SQL preprocessing

dispatch function

    cmd := data[0] // Call the corresponding processing function according to cmd
    data = data[1:]
    ...
    switch cmd {
    ...
    case mysql.ComQuery:
        // For issue 1989
        // Input payload may end with byte '\0', we didn't find related mysql document about it, but mysql
        // implementation accept that case. So trim the last '\0' here as if the payload an EOF string.
        // See http://dev.mysql.com/doc/internals/en/com-query.html
        if len(data) > 0 && data[len(data)-1] == 0 {
            data = data[:len(data)-1]
            dataStr = string(hack.String(data))
        }
        return cc.handleQuery(ctx, dataStr)

data is the packet payload as a byte slice. Its first byte identifies the command type, and the remaining bytes are the command's arguments. The most commonly used command is COM_QUERY: most SQL statements that are not prepared statements are sent as COM_QUERY, and this article covers only that command. For the other commands, refer to the MySQL protocol documentation and the code. For COM_QUERY, the client mainly sends the SQL text, and the handler is handleQuery().
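As a rough illustration of command dispatch, the sketch below routes a payload by its first byte and trims a trailing NUL from the query text, mirroring the excerpt above. The command byte values (0x01, 0x03, 0x0e) come from the MySQL protocol; the `dispatch` and `handleQuery` functions and their return values are hypothetical stand-ins, not TiDB's signatures.

```go
package main

import "fmt"

// Command bytes defined by the MySQL client/server protocol.
const (
	comQuit  byte = 0x01
	comQuery byte = 0x03
	comPing  byte = 0x0e
)

// dispatch inspects the first byte of the payload and routes the rest.
func dispatch(data []byte) (string, error) {
	if len(data) == 0 {
		return "", fmt.Errorf("empty packet")
	}
	cmd, body := data[0], data[1:]
	switch cmd {
	case comQuery:
		// Some clients terminate the SQL text with a NUL byte; trim it.
		if len(body) > 0 && body[len(body)-1] == 0 {
			body = body[:len(body)-1]
		}
		return handleQuery(string(body)), nil
	case comPing:
		return "OK", nil
	case comQuit:
		return "BYE", nil
	default:
		return "", fmt.Errorf("unsupported command 0x%02x", cmd)
	}
}

// handleQuery is a hypothetical stand-in for the real query handler.
func handleQuery(sql string) string {
	return "query: " + sql
}

func main() {
	packets := [][]byte{
		[]byte("\x03SELECT 1\x00"), // COM_QUERY with a trailing NUL
		{comPing},
		{0x7f}, // not a command this sketch knows
	}
	for _, pkt := range packets {
		out, err := dispatch(pkt)
		fmt.Printf("%q -> %q, err=%v\n", pkt, out, err)
	}
}
```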

Inside handleQuery, the SQL text is parsed first:

    stmts, err := cc.ctx.Parse(ctx, sql)

The comment on the Parse function reads:

Parse parses a query string to raw ast.StmtNode.

In other words, it converts the SQL text of the request into an abstract syntax tree (AST), turning plain text into structured data. Parse is implemented in session/session.go. This part is quite complex and takes careful reading to understand. For background, see study notes on Lex and Yacc.
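To make "text into structured data" concrete, here is a toy sketch. It is a hand-written parser for one statement shape, `SELECT <columns> FROM <table>`, and has nothing to do with the Yacc-generated grammar that the real parser uses. `SelectStmt` and `parseSelect` are hypothetical names chosen for illustration.

```go
package main

import (
	"fmt"
	"strings"
)

// SelectStmt is a toy AST node for "SELECT <cols> FROM <table>".
type SelectStmt struct {
	Columns []string
	Table   string
}

// parseSelect turns SQL text into a SelectStmt, or reports a syntax error.
func parseSelect(sql string) (*SelectStmt, error) {
	// Tokenize: make commas standalone tokens, then split on whitespace.
	tokens := strings.Fields(strings.ReplaceAll(sql, ",", " , "))
	if len(tokens) == 0 || !strings.EqualFold(tokens[0], "SELECT") {
		return nil, fmt.Errorf("expected SELECT")
	}
	stmt := &SelectStmt{}
	i := 1
	// Column list: name ("," name)*
	for {
		if i >= len(tokens) || tokens[i] == "," || strings.EqualFold(tokens[i], "FROM") {
			return nil, fmt.Errorf("expected column name at token %d", i)
		}
		stmt.Columns = append(stmt.Columns, tokens[i])
		i++
		if i < len(tokens) && tokens[i] == "," {
			i++
			continue
		}
		break
	}
	if i >= len(tokens) || !strings.EqualFold(tokens[i], "FROM") {
		return nil, fmt.Errorf("expected FROM at token %d", i)
	}
	i++
	if i != len(tokens)-1 {
		return nil, fmt.Errorf("expected exactly one table name after FROM")
	}
	stmt.Table = tokens[i]
	return stmt, nil
}

func main() {
	stmt, err := parseSelect("select id, name from users")
	fmt.Printf("%+v, err=%v\n", stmt, err)
}
```

Later stages can work with the `Columns` and `Table` fields directly instead of re-reading the string, which is the point of building an AST before planning.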

Then we go back to the handleQuery function

    retryable, err = cc.handleStmt(ctx, stmt, parserWarns, i == len(stmts)-1)

In the handleStmt function:

    rs, err := cc.ctx.ExecuteStmt(ctx, stmt)

Finally, execution jumps to the ExecuteStmt function in session.go:

    stmt, err := compiler.Compile(ctx, stmtNode)

Inside the Compile function there are three important steps:

- plan.Preprocess: performs validity checks and name binding;

- plan.Optimize: builds and optimizes the query plan. This is one of the most central steps and will be covered in detail in later articles;

- Constructing the executor.ExecStmt structure: ExecStmt holds the query plan and is the basis for subsequent execution. It is very important, especially its Exec method.
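The three steps can be pictured as a small pipeline. The sketch below is only an analogy under simplifying assumptions: `stmtNode`, `plan`, `execStmt`, `preprocess`, `optimize`, and `compile` are hypothetical toy types and functions, the schema is a plain map, and the "plan" is just a description string.

```go
package main

import (
	"errors"
	"fmt"
)

// stmtNode is a toy AST: a read from a single table.
type stmtNode struct{ Table string }

// plan is a toy query plan.
type plan struct{ Description string }

// execStmt holds the plan and knows how to run it.
type execStmt struct{ Plan plan }

// Exec stands in for real execution; it only reports what would run.
func (e *execStmt) Exec() string { return "running " + e.Plan.Description }

// preprocess performs a validity check: the table must exist in the schema.
func preprocess(node stmtNode, schema map[string]bool) error {
	if !schema[node.Table] {
		return errors.New("table " + node.Table + " does not exist")
	}
	return nil
}

// optimize builds a plan for a statement that already passed preprocess.
func optimize(node stmtNode) plan {
	return plan{Description: "TableScan(" + node.Table + ")"}
}

// compile chains the three steps: check, plan, wrap the plan for execution.
func compile(node stmtNode, schema map[string]bool) (*execStmt, error) {
	if err := preprocess(node, schema); err != nil {
		return nil, err
	}
	return &execStmt{Plan: optimize(node)}, nil
}

func main() {
	schema := map[string]bool{"users": true}
	for _, table := range []string{"users", "missing"} {
		stmt, err := compile(stmtNode{Table: table}, schema)
		if err != nil {
			fmt.Println("compile error:", err)
			continue
		}
		fmt.Println(stmt.Exec())
	}
}
```

Rejecting invalid statements before planning keeps the optimizer free of error handling for unknown names, which is one plausible reason to order the steps this way.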

Execution after this point is not covered here; the focus is on SQL processing.

SQL processing

SQL processing mainly happens in the Optimize function. After a series of checks, it calls DoOptimize:

    finalPlan, cost, err := plannercore.DoOptimize(ctx, sctx, builder.GetOptFlag(), logic)

DoOptimize converts the logical plan into an executable physical plan, which is the core of SQL conversion:

    logic, err := logicalOptimize(ctx, flag, logic)
    ...
    physical, cost, err := physicalOptimize(logic, &planCounter)
    ...
    finalPlan := postOptimize(sctx, physical)

The transformation is divided into three steps: logical optimization, physical optimization, and post-optimization. To be continued.
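Until the follow-up article, the shape of the three steps can be sketched with a toy optimizer. Everything here is an assumption made for illustration: the plan types, the duplicate-filter rule, the made-up cost numbers (a full scan costs one unit per row, an index lookup a tenth of that), and the tagging done in `postOptimize` are hypothetical and much simpler than what the real planner does.

```go
package main

import "fmt"

// logicalPlan describes what to compute, not how.
type logicalPlan struct {
	Table   string
	Filters []string // columns that carry an equality predicate
}

// physicalPlan describes a concrete operator and its estimated cost.
type physicalPlan struct {
	Operator string
	Cost     float64
}

// logicalOptimize applies a rule-based rewrite: drop duplicate filters.
func logicalOptimize(p logicalPlan) logicalPlan {
	seen := make(map[string]bool)
	out := logicalPlan{Table: p.Table}
	for _, f := range p.Filters {
		if !seen[f] {
			seen[f] = true
			out.Filters = append(out.Filters, f)
		}
	}
	return out
}

// physicalOptimize is cost-based: it compares a full scan with an index
// lookup on each indexed filter column and keeps the cheapest candidate.
func physicalOptimize(p logicalPlan, rows float64, indexed map[string]bool) physicalPlan {
	best := physicalPlan{Operator: "TableFullScan(" + p.Table + ")", Cost: rows}
	for _, col := range p.Filters {
		if !indexed[col] {
			continue
		}
		candidate := physicalPlan{Operator: "IndexLookUp(" + p.Table + "." + col + ")", Cost: rows / 10}
		if candidate.Cost < best.Cost {
			best = candidate
		}
	}
	return best
}

// postOptimize does final clean-up; in this sketch it only tags the plan.
func postOptimize(p physicalPlan) physicalPlan {
	p.Operator = "Final[" + p.Operator + "]"
	return p
}

// doOptimize chains the three steps and returns the final plan and its cost.
func doOptimize(p logicalPlan, rows float64, indexed map[string]bool) (physicalPlan, float64) {
	logic := logicalOptimize(p)
	physical := physicalOptimize(logic, rows, indexed)
	final := postOptimize(physical)
	return final, final.Cost
}

func main() {
	query := logicalPlan{Table: "t", Filters: []string{"id", "id", "name"}}
	final, cost := doOptimize(query, 1000, map[string]bool{"id": true})
	fmt.Println(final.Operator, cost)
}
```

The split mirrors the quoted calls: the first step rewrites the plan without looking at costs, the second picks among concrete operators by estimated cost, and the third makes final adjustments to the chosen plan.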