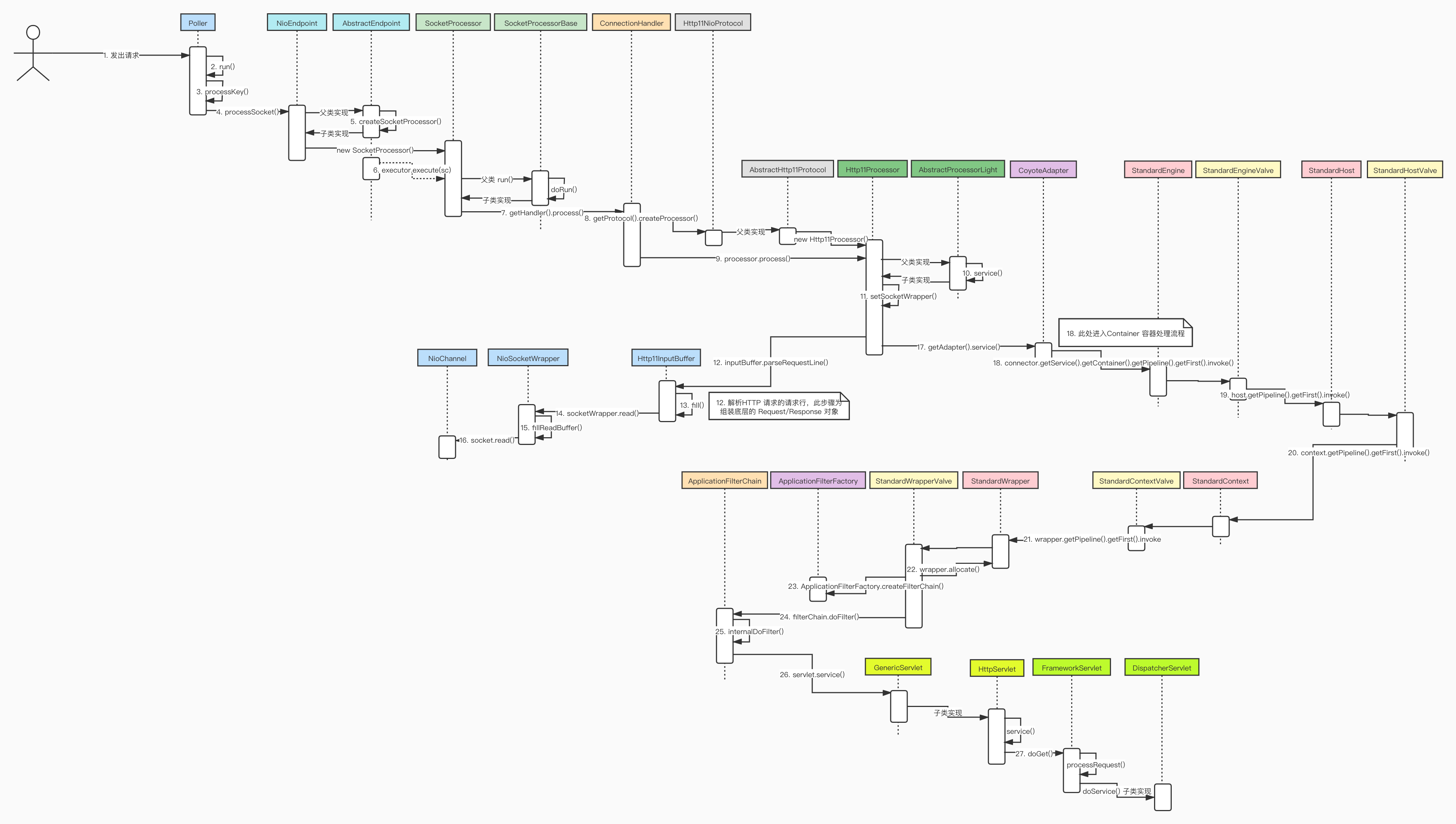

1. Tomcat network request processing flow

In Tomcat source code analysis (1) - structure composition and core components, the author analyzed the core components of Tomcat. Based on that structure, Tomcat's network request processing can be divided into the following steps:

- The Poller dispatches read/write events on socket connections

- The Processor reads the socket data and builds the underlying Request/Response objects according to the protocol type

- The Adapter converts them into the upper-level ServletRequest/ServletResponse objects and passes the request into the Container

- The Container completes the business processing for the request

2. Source code analysis

2.1 Connection handling by the Poller


Taking NioEndpoint as an example, this article analyzes how Tomcat handles connections at the lowest level. As mentioned in Tomcat source code analysis (2) - startup of Connector, the Poller acts as a polling device: Poller#run() keeps polling for ready connections in a while loop on its own thread. The key logic is to obtain readable or writable connections through the Selector and then call Poller#processKey() to process each of them.

    public void run() {
        // Loop until destroy() is called
        while (true) {

            boolean hasEvents = false;

            try {
                if (!close) {
                    hasEvents = events();
                    if (wakeupCounter.getAndSet(-1) > 0) {
                        // If we are here, means we have other stuff to do
                        // Do a non blocking select
                        keyCount = selector.selectNow();
                    } else {
                        keyCount = selector.select(selectorTimeout);
                    }
                    wakeupCounter.set(0);
                }
                if (close) {
                    events();
                    timeout(0, false);
                    try {
                        selector.close();
                    } catch (IOException ioe) {
                        log.error(sm.getString("endpoint.nio.selectorCloseFail"), ioe);
                    }
                    break;
                }
            } catch (Throwable x) {
                ExceptionUtils.handleThrowable(x);
                log.error(sm.getString("endpoint.nio.selectorLoopError"), x);
                continue;
            }
            // Either we timed out or we woke up, process events first
            if (keyCount == 0) {
                hasEvents = (hasEvents | events());
            }

            Iterator<SelectionKey> iterator =
                    keyCount > 0 ? selector.selectedKeys().iterator() : null;
            // Walk through the collection of ready keys and dispatch
            // any active event.
            while (iterator != null && iterator.hasNext()) {
                SelectionKey sk = iterator.next();
                NioSocketWrapper socketWrapper = (NioSocketWrapper) sk.attachment();
                // Attachment may be null if another thread has called
                // cancelledKey()
                if (socketWrapper == null) {
                    iterator.remove();
                } else {
                    iterator.remove();
                    processKey(sk, socketWrapper);
                }
            }

            // Process timeouts
            timeout(keyCount,hasEvents);
        }

        getStopLatch().countDown();
    }
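
To make the select-then-dispatch pattern easier to try out in isolation, here is a minimal sketch that is not Tomcat code. It assumes a `java.nio.channels.Pipe` stands in for a client socket, and the class name `SelectorLoopSketch` and method `roundTrip` are hypothetical. It only shows the same basic steps: register a channel with an attachment, select with a timeout, iterate the ready keys, remove each key, and handle it.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;

class SelectorLoopSketch {

    /** Writes a short message into a pipe and reads it back through a selector loop. */
    static String roundTrip(String message) {
        byte[] payload = message.getBytes(StandardCharsets.UTF_8);
        try (Selector selector = Selector.open()) {
            Pipe pipe = Pipe.open();
            try (Pipe.SinkChannel sink = pipe.sink();
                 Pipe.SourceChannel source = pipe.source()) {
                // Only non-blocking channels can be registered with a selector.
                source.configureBlocking(false);
                ByteArrayOutputStream received = new ByteArrayOutputStream();
                // The attachment plays the role of Tomcat's NioSocketWrapper.
                source.register(selector, SelectionKey.OP_READ, received);

                // Simulate a client sending data (kept small so the blocking write returns).
                ByteBuffer out = ByteBuffer.wrap(payload);
                while (out.hasRemaining()) {
                    sink.write(out);
                }

                ByteBuffer in = ByteBuffer.allocate(64);
                // Bounded loop so the sketch cannot spin forever.
                for (int round = 0; round < 100 && received.size() < payload.length; round++) {
                    int keyCount = selector.select(100);
                    if (keyCount == 0) {
                        continue; // timed out, poll again
                    }
                    Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                    while (it.hasNext()) {
                        SelectionKey key = it.next();
                        it.remove(); // ready keys must be removed by hand
                        if (key.isValid() && key.isReadable()) {
                            ByteArrayOutputStream target = (ByteArrayOutputStream) key.attachment();
                            in.clear();
                            int n = ((Pipe.SourceChannel) key.channel()).read(in);
                            if (n > 0) {
                                target.write(in.array(), 0, n);
                            }
                        }
                    }
                }
                return new String(received.toByteArray(), StandardCharsets.UTF_8);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Calling `SelectorLoopSketch.roundTrip("GET / HTTP/1.1")` returns the same string after it has travelled through the selector loop. Unlike the Poller, this sketch reads the data in the polling thread itself instead of handing the key to a worker.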

The core logic of Poller#processKey() is divided into the following steps:

- First, check whether a file-send operation is pending. If so, call Poller#processSendfile(), which sends the data with zero copy by means of FileChannel#transferTo()

- Ordinary read and write operations are handled uniformly by the AbstractEndpoint#processSocket() method. The two cases differ only in the SocketEvent passed in (OPEN_READ or OPEN_WRITE)

    protected void processKey(SelectionKey sk, NioSocketWrapper socketWrapper) {
        try {
            if (close) {
                cancelledKey(sk, socketWrapper);
            } else if (sk.isValid() && socketWrapper != null) {
                if (sk.isReadable() || sk.isWritable()) {
                    if (socketWrapper.getSendfileData() != null) {
                        processSendfile(sk, socketWrapper, false);
                    } else {
                        unreg(sk, socketWrapper, sk.readyOps());
                        boolean closeSocket = false;
                        // Read goes before write
                        if (sk.isReadable()) {
                            if (socketWrapper.readOperation != null) {
                                if (!socketWrapper.readOperation.process()) {
                                    closeSocket = true;
                                }
                            } else if (!processSocket(socketWrapper, SocketEvent.OPEN_READ, true)) {
                                closeSocket = true;
                            }
                        }
                        if (!closeSocket && sk.isWritable()) {
                            if (socketWrapper.writeOperation != null) {
                                if (!socketWrapper.writeOperation.process()) {
                                    closeSocket = true;
                                }
                            } else if (!processSocket(socketWrapper, SocketEvent.OPEN_WRITE, true)) {
                                closeSocket = true;
                            }
                        }
                        if (closeSocket) {
                            cancelledKey(sk, socketWrapper);
                        }
                    }
                }
            } else {
                // Invalid key
                cancelledKey(sk, socketWrapper);
            }
        } catch (CancelledKeyException ckx) {
            cancelledKey(sk, socketWrapper);
        } catch (Throwable t) {
            ExceptionUtils.handleThrowable(t);
            log.error(sm.getString("endpoint.nio.keyProcessingError"), t);
        }
    }
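
The zero-copy transfer mentioned in the first step relies on the standard `FileChannel#transferTo()` call. The following is a minimal sketch of that call only, under the assumption that a second temporary file stands in for the socket channel that Tomcat would write to. The class `TransferToSketch` and its method are hypothetical names for illustration.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

class TransferToSketch {

    /** Copies the content through FileChannel#transferTo() and returns what arrived. */
    static String copyViaTransferTo(String content) {
        Path src = null;
        Path dst = null;
        try {
            src = Files.createTempFile("sendfile-src", ".txt");
            dst = Files.createTempFile("sendfile-dst", ".txt");
            Files.write(src, content.getBytes(StandardCharsets.UTF_8));

            try (FileChannel in = FileChannel.open(src, StandardOpenOption.READ);
                 // Assumption: a file channel replaces the socket channel here.
                 FileChannel out = FileChannel.open(dst, StandardOpenOption.WRITE)) {
                long position = 0;
                long size = in.size();
                // transferTo() may move fewer bytes than requested, so loop until done.
                while (position < size) {
                    position += in.transferTo(position, size - position, out);
                }
            }
            return new String(Files.readAllBytes(dst), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            deleteQuietly(src);
            deleteQuietly(dst);
        }
    }

    private static void deleteQuietly(Path path) {
        if (path == null) {
            return;
        }
        try {
            Files.deleteIfExists(path);
        } catch (IOException ignored) {
            // Best-effort cleanup of the temporary file.
        }
    }
}
```

The loop around `transferTo()` matters in practice: with a non-blocking socket as the target, a single call can transfer only part of the requested range, which is why a sendfile operation may need several write events to complete.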

The core logic of the AbstractEndpoint#processSocket() method is to call the subclass implementation NioEndpoint#createSocketProcessor() to create a SocketProcessorBase asynchronous task object (or to reuse one taken from processorCache), and then submit the task to the thread pool for execution. In this example, the task object created is an instance of SocketProcessor, an inner class of NioEndpoint

    public boolean processSocket(SocketWrapperBase<S> socketWrapper,
            SocketEvent event, boolean dispatch) {
        try {
            if (socketWrapper == null) {
                return false;
            }
            SocketProcessorBase<S> sc = null;
            if (processorCache != null) {
                sc = processorCache.pop();
            }
            if (sc == null) {
                sc = createSocketProcessor(socketWrapper, event);
            } else {
                sc.reset(socketWrapper, event);
            }
            Executor executor = getExecutor();
            if (dispatch && executor != null) {
                executor.execute(sc);
            } else {
                sc.run();
            }
        } catch (RejectedExecutionException ree) {
            getLog().warn(sm.getString("endpoint.executor.fail", socketWrapper) , ree);
            return false;
        } catch (Throwable t) {
            ExceptionUtils.handleThrowable(t);
            // This means we got an OOM or similar creating a thread, or that
            // the pool and its queue are full
            getLog().error(sm.getString("endpoint.process.fail"), t);
            return false;
        }
        return true;
    }
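
The pattern in this method (take a task object from a cache, reset it, hand it to an executor, and let the task return itself to the cache when done) can be sketched on its own. The example below is a simplified illustration, not Tomcat's implementation: the class `DispatchSketch`, the `Task` type, and the use of plain strings for the socket and the event are all assumptions made for the sketch, and a `ConcurrentLinkedDeque` stands in for Tomcat's processor cache.

```java
import java.util.concurrent.ConcurrentLinkedDeque;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class DispatchSketch {
    private final ConcurrentLinkedDeque<Task> cache = new ConcurrentLinkedDeque<>();
    private final AtomicInteger handled = new AtomicInteger();
    private final AtomicInteger created = new AtomicInteger();
    private final ExecutorService executor;

    DispatchSketch(ExecutorService executor) {
        this.executor = executor;
    }

    /** Reusable task object, loosely modelled on the role of SocketProcessorBase. */
    final class Task implements Runnable {
        private String socket;
        private String event;

        void reset(String socket, String event) {
            this.socket = socket;
            this.event = event;
        }

        @Override
        public void run() {
            try {
                handled.incrementAndGet(); // stand-in for the real protocol handling
            } finally {
                socket = null;
                event = null;
                cache.push(this); // return to cache for the next event
            }
        }
    }

    boolean processSocket(String socket, String event, boolean dispatch) {
        if (socket == null) {
            return false;
        }
        Task task = cache.poll(); // null when the cache is empty
        if (task == null) {
            task = new Task();
            created.incrementAndGet();
        }
        task.reset(socket, event);
        try {
            if (dispatch && executor != null) {
                executor.execute(task); // run on a worker thread
            } else {
                task.run();             // run on the caller's thread
            }
        } catch (RejectedExecutionException e) {
            return false;
        }
        return true;
    }

    /** Dispatches n events and returns {handled count, task objects created}. */
    static int[] run(int n) {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        DispatchSketch endpoint = new DispatchSketch(pool);
        for (int i = 0; i < n; i++) {
            endpoint.processSocket("socket-" + i, "OPEN_READ", true);
        }
        pool.shutdown();
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new int[] {endpoint.handled.get(), endpoint.created.get()};
    }
}
```

`DispatchSketch.run(20)` handles all 20 events. The number of `Task` objects created depends on thread timing, but it can never exceed the number of events, because finished tasks are reused whenever one is available in the cache.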

The NioEndpoint.SocketProcessor#doRun() method is triggered when the task executes. The important logic here is that getHandler().process() calls ConnectionHandler#process(), which hands the socket connection over to the Processor for data processing

    protected void doRun() {
        NioChannel socket = socketWrapper.getSocket();
        SelectionKey key = socket.getIOChannel().keyFor(socket.getSocketWrapper().getPoller().getSelector());
        Poller poller = NioEndpoint.this.poller;
        if (poller == null) {
            socketWrapper.close();
            return;
        }

        try {
            int handshake = -1;

            try {
                if (key != null) {
                    if (socket.isHandshakeComplete()) {
                        // No TLS handshaking required. Let the handler
                        // process this socket / event combination.
                        handshake = 0;
                    } else if (event == SocketEvent.STOP || event == SocketEvent.DISCONNECT ||
                            event == SocketEvent.ERROR) {
                        // Unable to complete the TLS handshake. Treat it as
                        // if the handshake failed.
                        handshake = -1;
                    } else {
                        handshake = socket.handshake(key.isReadable(), key.isWritable());
                        // The handshake process reads/writes from/to the
                        // socket. status may therefore be OPEN_WRITE once
                        // the handshake completes. However, the handshake
                        // happens when the socket is opened so the status
                        // must always be OPEN_READ after it completes. It
                        // is OK to always set this as it is only used if
                        // the handshake completes.
                        event = SocketEvent.OPEN_READ;
                    }
                }
            } catch (IOException x) {
                handshake = -1;
                if (log.isDebugEnabled()) log.debug("Error during SSL handshake",x);
            } catch (CancelledKeyException ckx) {
                handshake = -1;
            }
            if (handshake == 0) {
                SocketState state = SocketState.OPEN;
                // Process the request from this socket
                if (event == null) {
                    state = getHandler().process(socketWrapper, SocketEvent.OPEN_READ);
                } else {
                    state = getHandler().process(socketWrapper, event);
                }
                if (state == SocketState.CLOSED) {
                    poller.cancelledKey(key, socketWrapper);
                }
            } else if (handshake == -1 ) {
                getHandler().process(socketWrapper, SocketEvent.CONNECT_FAIL);
                poller.cancelledKey(key, socketWrapper);
            } else if (handshake == SelectionKey.OP_READ){
                socketWrapper.registerReadInterest();
            } else if (handshake == SelectionKey.OP_WRITE){
                socketWrapper.registerWriteInterest();
            }
        } catch (CancelledKeyException cx) {
            poller.cancelledKey(key, socketWrapper);
        } catch (VirtualMachineError vme) {
            ExceptionUtils.handleThrowable(vme);
        } catch (Throwable t) {
            log.error(sm.getString("endpoint.processing.fail"), t);
            poller.cancelledKey(key, socketWrapper);
        } finally {
            socketWrapper = null;
            event = null;
            //return to cache
            if (running && !paused && processorCache != null) {
                processorCache.push(this);
            }
        }
    }

The key processing of the ConnectionHandler#process() method is as follows:

- If no Processor object is obtained from the caches at each level, getProtocol().createProcessor() creates a new Processor. In this example, AbstractHttp11Protocol#createProcessor() is called to create an Http11Processor object

- processor.process() calls the AbstractProcessorLight#process() method to process the data in the socket. This enters the Processor stage, which is analyzed in the next section

- Further processing then depends on the resulting socket state and is not analyzed in depth here. Note, however, that if the state is SocketState.LONG and the Processor is in asynchronous mode, it is added to the waiting queue through getProtocol().addWaitingProcessor(). This queue is the data source for the asynchronous request timeout detection mentioned in the article on Connector startup

    public SocketState process(SocketWrapperBase<S> wrapper, SocketEvent status) {
        if (getLog().isDebugEnabled()) {
            getLog().debug(sm.getString("abstractConnectionHandler.process",
                    wrapper.getSocket(), status));
        }
        if (wrapper == null) {
            // Nothing to do. Socket has been closed.
            return SocketState.CLOSED;
        }

        S socket = wrapper.getSocket();

        Processor processor = (Processor) wrapper.getCurrentProcessor();
        if (getLog().isDebugEnabled()) {
            getLog().debug(sm.getString("abstractConnectionHandler.connectionsGet",
                    processor, socket));
        }

        // Timeouts are calculated on a dedicated thread and then
        // dispatched. Because of delays in the dispatch process, the
        // timeout may no longer be required. Check here and avoid
        // unnecessary processing.
        if (SocketEvent.TIMEOUT == status &&
                (processor == null ||
                !processor.isAsync() && !processor.isUpgrade() ||
                processor.isAsync() && !processor.checkAsyncTimeoutGeneration())) {
            // This is effectively a NO-OP
            return SocketState.OPEN;
        }

        if (processor != null) {
            // Make sure an async timeout doesn't fire
            getProtocol().removeWaitingProcessor(processor);
        } else if (status == SocketEvent.DISCONNECT || status == SocketEvent.ERROR) {
            // Nothing to do. Endpoint requested a close and there is no
            // longer a processor associated with this socket.
            return SocketState.CLOSED;
        }

        ContainerThreadMarker.set();

        try {
            ......
            if (processor == null) {
                processor = getProtocol().createProcessor();
                register(processor);
                if (getLog().isDebugEnabled()) {
                    getLog().debug(sm.getString("abstractConnectionHandler.processorCreate", processor));
                }
            }

            processor.setSslSupport(
                    wrapper.getSslSupport(getProtocol().getClientCertProvider()));

            // Associate the processor with the connection
            wrapper.setCurrentProcessor(processor);

            SocketState state = SocketState.CLOSED;
            do {
                state = processor.process(wrapper, status);
                ......
            } while ( state == SocketState.UPGRADING);

            if (state == SocketState.LONG) {
                // In the middle of processing a request/response. Keep the
                // socket associated with the processor. Exact requirements
                // depend on type of long poll
                longPoll(wrapper, processor);
                if (processor.isAsync()) {
                    getProtocol().addWaitingProcessor(processor);
                }
            }
            ......
        // Make sure socket/processor is removed from the list of current
        // connections
        wrapper.setCurrentProcessor(null);
        release(processor);
        return SocketState.CLOSED;
    }

2.2 The protocol parser Processor reads the socket


The AbstractProcessorLight#process() method handles each SocketEvent differently. For SocketEvent.OPEN_READ, it calls the Http11Processor#service() method

    public SocketState process(SocketWrapperBase<?> socketWrapper, SocketEvent status)
            throws IOException {

        SocketState state = SocketState.CLOSED;
        Iterator<DispatchType> dispatches = null;
        do {
            if (dispatches != null) {
                DispatchType nextDispatch = dispatches.next();
                if (getLog().isDebugEnabled()) {
                    getLog().debug("Processing dispatch type: [" + nextDispatch + "]");
                }
                state = dispatch(nextDispatch.getSocketStatus());
                if (!dispatches.hasNext()) {
                    state = checkForPipelinedData(state, socketWrapper);
                }
            } else if (status == SocketEvent.DISCONNECT) {
                // Do nothing here, just wait for it to get recycled
            } else if (isAsync() || isUpgrade() || state == SocketState.ASYNC_END) {
                state = dispatch(status);
                state = checkForPipelinedData(state, socketWrapper);
            } else if (status == SocketEvent.OPEN_WRITE) {
                // Extra write event likely after async, ignore
                state = SocketState.LONG;
            } else if (status == SocketEvent.OPEN_READ) {
                state = service(socketWrapper);
            } else if (status == SocketEvent.CONNECT_FAIL) {
                logAccess(socketWrapper);
            } else {
                // Default to closing the socket if the SocketEvent passed in
                // is not consistent with the current state of the Processor
                state = SocketState.CLOSED;
            }

            if (getLog().isDebugEnabled()) {
                getLog().debug("Socket: [" + socketWrapper +
                        "], Status in: [" + status +
                        "], State out: [" + state + "]");
            }

            if (isAsync()) {
                state = asyncPostProcess();
                if (getLog().isDebugEnabled()) {
                    getLog().debug("Socket: [" + socketWrapper +
                            "], State after async post processing: [" + state + "]");
                }
            }

            if (dispatches == null || !dispatches.hasNext()) {
                // Only returns non-null iterator if there are
                // dispatches to process.
                dispatches = getIteratorAndClearDispatches();
            }
        } while (state == SocketState.ASYNC_END ||
                dispatches != null && state != SocketState.CLOSED);

        return state;
    }

The key logic of the Http11Processor#service() method is divided into the following steps:

- Call the Http11Processor#setSocketWrapper() method to initialize the internal Http11InputBuffer/Http11OutputBuffer object representing socket input and output

- Read the socket data, parse the request line and request headers of the HTTP message, and populate the underlying Request/Response objects that are later passed to the Adapter. Parsing of the request body is deferred until business processing actually needs the request parameters. Readers who are unfamiliar with the structure of an HTTP request message can refer to Structure of HTTP request message. In this example, the Http11InputBuffer#parseRequestLine() method parses the HTTP request line, and the Http11InputBuffer#parseHeaders() method parses the request headers

- getAdapter().service(request, response) passes the request/response objects to the Adapter for further conversion. Processing then enters the CoyoteAdapter#service() method, which is analyzed in the next section

    public SocketState service(SocketWrapperBase<?> socketWrapper)
            throws IOException {
        RequestInfo rp = request.getRequestProcessor();
        rp.setStage(org.apache.coyote.Constants.STAGE_PARSE);

        // Setting up the I/O
        setSocketWrapper(socketWrapper);

        // Flags
        keepAlive = true;
        openSocket = false;
        readComplete = true;
        boolean keptAlive = false;
        SendfileState sendfileState = SendfileState.DONE;

        while (!getErrorState().isError() && keepAlive && !isAsync() && upgradeToken == null &&
                sendfileState == SendfileState.DONE && !protocol.isPaused()) {

            // Parsing the request header
            try {
                if (!inputBuffer.parseRequestLine(keptAlive, protocol.getConnectionTimeout(),
                        protocol.getKeepAliveTimeout())) {
                    if (inputBuffer.getParsingRequestLinePhase() == -1) {
                        return SocketState.UPGRADING;
                    } else if (handleIncompleteRequestLineRead()) {
                        break;
                    }
                }

                // Process the Protocol component of the request line
                // Need to know if this is an HTTP 0.9 request before trying to
                // parse headers.
                prepareRequestProtocol();

                if (protocol.isPaused()) {
                    // 503 - Service unavailable
                    response.setStatus(503);
                    setErrorState(ErrorState.CLOSE_CLEAN, null);
                } else {
                    keptAlive = true;
                    // Set this every time in case limit has been changed via JMX
                    request.getMimeHeaders().setLimit(protocol.getMaxHeaderCount());
                    // Don't parse headers for HTTP/0.9
                    if (!http09 && !inputBuffer.parseHeaders()) {
                        // We've read part of the request, don't recycle it
                        // instead associate it with the socket
                        openSocket = true;
                        readComplete = false;
                        break;
                    }
                    if (!protocol.getDisableUploadTimeout()) {
                        socketWrapper.setReadTimeout(protocol.getConnectionUploadTimeout());
                    }
                }
            } catch (IOException e) {
                if (log.isDebugEnabled()) {
                    log.debug(sm.getString("http11processor.header.parse"), e);
                }
                setErrorState(ErrorState.CLOSE_CONNECTION_NOW, e);
                break;
            } catch (Throwable t) {
                ExceptionUtils.handleThrowable(t);
                UserDataHelper.Mode logMode = userDataHelper.getNextMode();
                if (logMode != null) {
                    String message = sm.getString("http11processor.header.parse");
                    switch (logMode) {
                        case INFO_THEN_DEBUG:
                            message += sm.getString("http11processor.fallToDebug");
                            //$FALL-THROUGH$
                        case INFO:
                            log.info(message, t);
                            break;
                        case DEBUG:
                            log.debug(message, t);
                    }
                }
                // 400 - Bad Request
                response.setStatus(400);
                setErrorState(ErrorState.CLOSE_CLEAN, t);
            }

            ......

            // Process the request in the adapter
            if (getErrorState().isIoAllowed()) {
                try {
                    rp.setStage(org.apache.coyote.Constants.STAGE_SERVICE);
                    getAdapter().service(request, response);
                    // Handle when the response was committed before a serious
                    // error occurred. Throwing a ServletException should both
                    // set the status to 500 and set the errorException.
                    // If we fail here, then the response is likely already
                    // committed, so we can't try and set headers.
                    if(keepAlive && !getErrorState().isError() && !isAsync() &&
                            statusDropsConnection(response.getStatus())) {
                        setErrorState(ErrorState.CLOSE_CLEAN, null);
                    }
                } catch (InterruptedIOException e) {
                    setErrorState(ErrorState.CLOSE_CONNECTION_NOW, e);
                } catch (HeadersTooLargeException e) {
                    log.error(sm.getString("http11processor.request.process"), e);
                    // The response should not have been committed but check it
                    // anyway to be safe
                    if (response.isCommitted()) {
                        setErrorState(ErrorState.CLOSE_NOW, e);
                    } else {
                        response.reset();
                        response.setStatus(500);
                        setErrorState(ErrorState.CLOSE_CLEAN, e);
                        response.setHeader("Connection", "close"); // TODO: Remove
                    }
                } catch (Throwable t) {
                    ExceptionUtils.handleThrowable(t);
                    log.error(sm.getString("http11processor.request.process"), t);
                    // 500 - Internal Server Error
                    response.setStatus(500);
                    setErrorState(ErrorState.CLOSE_CLEAN, t);
                    getAdapter().log(request, response, 0);
                }
            }

            ......
    }

The implementation of the Http11InputBuffer#parseRequestLine() method is long. The key point is that it calls Http11InputBuffer#fill() to read data from the socket into the byte buffer. All subsequent processing parses data taken from that byte buffer

    boolean parseRequestLine(boolean keptAlive, int connectionTimeout, int keepAliveTimeout)
            throws IOException {

        // check state
        if (!parsingRequestLine) {
            return true;
        }
        //
        // Skipping blank lines
        //
        if (parsingRequestLinePhase < 2) {
            do {
                // Read new bytes if needed
                if (byteBuffer.position() >= byteBuffer.limit()) {
                    if (keptAlive) {
                        // Haven't read any request data yet so use the keep-alive
                        // timeout.
                        wrapper.setReadTimeout(keepAliveTimeout);
                    }
                    if (!fill(false)) {
                        // A read is pending, so no longer in initial state
                        parsingRequestLinePhase = 1;
                        return false;
                    }
                    // At least one byte of the request has been received.
                    // Switch to the socket timeout.
                    wrapper.setReadTimeout(connectionTimeout);
                }
                if (!keptAlive && byteBuffer.position() == 0 &&
                        byteBuffer.limit() >= CLIENT_PREFACE_START.length - 1) {
                    boolean prefaceMatch = true;
                    for (int i = 0; i < CLIENT_PREFACE_START.length && prefaceMatch; i++) {
                        if (CLIENT_PREFACE_START[i] != byteBuffer.get(i)) {
                            prefaceMatch = false;
                        }
                    }
                    if (prefaceMatch) {
                        // HTTP/2 preface matched
                        parsingRequestLinePhase = -1;
                        return false;
                    }
                }
                // Set the start time once we start reading data (even if it is
                // just skipping blank lines)
                if (request.getStartTime() < 0) {
                    request.setStartTime(System.currentTimeMillis());
                }
                chr = byteBuffer.get();
            } while ((chr == Constants.CR) || (chr == Constants.LF));
            byteBuffer.position(byteBuffer.position() - 1);

            parsingRequestLineStart = byteBuffer.position();
            parsingRequestLinePhase = 2;
            if (log.isDebugEnabled()) {
                log.debug("Received ["
                        + new String(byteBuffer.array(), byteBuffer.position(), byteBuffer.remaining(), StandardCharsets.ISO_8859_1) + "]");
            }
        }
        ......
    }

The key step of the Http11InputBuffer#fill() method is to read data from the socket through socketWrapper.read(). In this example, the NioSocketWrapper#read() method is called. From there on, the data is read through the standard NIO interfaces provided by Java, which is not described further here

    private boolean fill(boolean block) throws IOException {

        if (parsingHeader) {
            if (byteBuffer.limit() >= headerBufferSize) {
                if (parsingRequestLine) {
                    // Avoid unknown protocol triggering an additional error
                    request.protocol().setString(Constants.HTTP_11);
                }
                throw new IllegalArgumentException(sm.getString("iib.requestheadertoolarge.error"));
            }
        } else {
            byteBuffer.limit(end).position(end);
        }

        byteBuffer.mark();
        if (byteBuffer.position() < byteBuffer.limit()) {
            byteBuffer.position(byteBuffer.limit());
        }
        byteBuffer.limit(byteBuffer.capacity());
        SocketWrapperBase<?> socketWrapper = this.wrapper;
        int nRead = -1;
        if (socketWrapper != null) {
            nRead = socketWrapper.read(block, byteBuffer);
        } else {
            throw new CloseNowException(sm.getString("iib.eof.error"));
        }
        byteBuffer.limit(byteBuffer.position()).reset();
        if (nRead > 0) {
            return true;
        } else if (nRead == -1) {
            throw new EOFException(sm.getString("iib.eof.error"));
        } else {
            return false;
        }
    }
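
The `mark()` / `limit()` / `reset()` sequence in fill() is easy to misread, so here is a small standalone sketch of the same buffer bookkeeping. It is an illustration under assumptions, not Tomcat code: a `ReadableByteChannel` over a byte array replaces the socket wrapper, and the class `FillSketch` with its `readLine()` and `firstLine()` helpers is hypothetical. The buffer is kept in "read mode" (unparsed bytes lie between position and limit), and fill() appends new bytes after the limit without losing the parse position.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Channels;
import java.nio.channels.ReadableByteChannel;
import java.nio.charset.StandardCharsets;

class FillSketch {
    private final ByteBuffer byteBuffer = ByteBuffer.allocate(8192);
    private final ReadableByteChannel channel;

    FillSketch(ReadableByteChannel channel) {
        this.channel = channel;
        byteBuffer.limit(0); // start empty: position == limit == 0
    }

    /** Appends bytes from the channel after the current limit; returns true if any arrived. */
    boolean fill() throws IOException {
        byteBuffer.mark();                        // remember the parse position
        byteBuffer.position(byteBuffer.limit());  // jump to the end of the valid data
        byteBuffer.limit(byteBuffer.capacity());  // open the free space for writing
        int nRead = channel.read(byteBuffer);
        // The write position becomes the new limit, then the parse position is restored.
        byteBuffer.limit(byteBuffer.position()).reset();
        return nRead > 0;
    }

    /** Reads bytes up to the next LF, refilling the buffer whenever it runs dry. */
    String readLine() throws IOException {
        StringBuilder line = new StringBuilder();
        while (true) {
            if (byteBuffer.position() >= byteBuffer.limit() && !fill()) {
                return line.toString(); // no more data
            }
            byte b = byteBuffer.get();
            if (b == '\n') {
                return line.toString();
            }
            if (b != '\r') {
                line.append((char) (b & 0xFF));
            }
        }
    }

    /** Convenience entry point: returns the first line of a raw request. */
    static String firstLine(String raw) {
        byte[] bytes = raw.getBytes(StandardCharsets.ISO_8859_1);
        FillSketch sketch = new FillSketch(Channels.newChannel(new ByteArrayInputStream(bytes)));
        try {
            return sketch.readLine();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

For the input `"GET /index.html HTTP/1.1\r\nHost: a\r\n\r\n"`, `FillSketch.firstLine(...)` returns `GET /index.html HTTP/1.1`. The sketch leaves out the header size limit and the distinction between "no data yet" and end of stream that the real method handles.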

The core of the Http11InputBuffer#parseHeaders() method is to call the private Http11InputBuffer#parseHeader() method in a loop, once per header line. The important processing there is divided into two steps:

- Call the Http11InputBuffer#fill() method to read socket data into the byte buffer

- headers.addValue() calls the MimeHeaders#addValue() method to insert the request header data into the headers property of the underlying Request object. It is used later in the Http11Processor#prepareRequest() method, which is not covered again here

    boolean parseHeaders() throws IOException {
        if (!parsingHeader) {
            throw new IllegalStateException(sm.getString("iib.parseheaders.ise.error"));
        }

        HeaderParseStatus status = HeaderParseStatus.HAVE_MORE_HEADERS;

        do {
            status = parseHeader();
            // Checking that
            // (1) Headers plus request line size does not exceed its limit
            // (2) There are enough bytes to avoid expanding the buffer when
            // reading body
            // Technically, (2) is technical limitation, (1) is logical
            // limitation to enforce the meaning of headerBufferSize
            // From the way how buf is allocated and how blank lines are being
            // read, it should be enough to check (1) only.
            if (byteBuffer.position() > headerBufferSize ||
                    byteBuffer.capacity() - byteBuffer.position() < socketReadBufferSize) {
                throw new IllegalArgumentException(sm.getString("iib.requestheadertoolarge.error"));
            }
        } while (status == HeaderParseStatus.HAVE_MORE_HEADERS);
        if (status == HeaderParseStatus.DONE) {
            parsingHeader = false;
            end = byteBuffer.position();
            return true;
        } else {
            return false;
        }
    }

    private HeaderParseStatus parseHeader() throws IOException {

        while (headerParsePos == HeaderParsePosition.HEADER_START) {

            // Read new bytes if needed
            if (byteBuffer.position() >= byteBuffer.limit()) {
                if (!fill(false)) {// parse header
                    headerParsePos = HeaderParsePosition.HEADER_START;
                    return HeaderParseStatus.NEED_MORE_DATA;
                }
            }

            prevChr = chr;
            chr = byteBuffer.get();

            if (chr == Constants.CR && prevChr != Constants.CR) {
                // Possible start of CRLF - process the next byte.
            } else if (prevChr == Constants.CR && chr == Constants.LF) {
                return HeaderParseStatus.DONE;
            } else {
                if (prevChr == Constants.CR) {
                    // Must have read two bytes (first was CR, second was not LF)
                    byteBuffer.position(byteBuffer.position() - 2);
                } else {
                    // Must have only read one byte
                    byteBuffer.position(byteBuffer.position() - 1);
                }
                break;
            }
        }

        if (headerParsePos == HeaderParsePosition.HEADER_START) {
            // Mark the current buffer position
            headerData.start = byteBuffer.position();
            headerData.lineStart = headerData.start;
            headerParsePos = HeaderParsePosition.HEADER_NAME;
        }

        //
        // Reading the header name
        // Header name is always US-ASCII
        //
        while (headerParsePos == HeaderParsePosition.HEADER_NAME) {

            // Read new bytes if needed
            if (byteBuffer.position() >= byteBuffer.limit()) {
                if (!fill(false)) { // parse header
                    return HeaderParseStatus.NEED_MORE_DATA;
                }
            }

            int pos = byteBuffer.position();
            chr = byteBuffer.get();
            if (chr == Constants.COLON) {
                headerParsePos = HeaderParsePosition.HEADER_VALUE_START;
                headerData.headerValue = headers.addValue(byteBuffer.array(), headerData.start,
                        pos - headerData.start);
                pos = byteBuffer.position();
                // Mark the current buffer position
                headerData.start = pos;
                headerData.realPos = pos;
                headerData.lastSignificantChar = pos;
                break;
            }
            ......
        return HeaderParseStatus.HAVE_MORE_HEADERS;
    }
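
As a rough illustration of what the header parser does with the bytes in the buffer, the following sketch scans a byte array for header lines, splits each one at the first colon, and stops at the empty line that ends the header section. It is deliberately much simpler than Tomcat's resumable state machine: it assumes the whole header section is already in memory, keeps only the last value of a repeated header, and uses a plain `Map` where Tomcat uses MimeHeaders. The class `HeaderParseSketch` and its methods are hypothetical names.

```java
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

class HeaderParseSketch {

    /** Parses header lines starting at offset until the empty line (CRLF) is reached. */
    static Map<String, String> parseHeaders(byte[] buf, int offset) {
        Map<String, String> headers = new LinkedHashMap<>();
        int pos = offset;
        while (pos < buf.length) {
            // A bare CRLF marks the end of the header section.
            if (buf[pos] == '\r' && pos + 1 < buf.length && buf[pos + 1] == '\n') {
                break;
            }
            // Header name: everything up to the first colon.
            int nameStart = pos;
            while (pos < buf.length && buf[pos] != ':') {
                if (buf[pos] == '\r' || buf[pos] == '\n') {
                    throw new IllegalArgumentException("Header line without a colon");
                }
                pos++;
            }
            if (pos == buf.length) {
                throw new IllegalArgumentException("Truncated header name");
            }
            String name = new String(buf, nameStart, pos - nameStart, StandardCharsets.US_ASCII)
                    .toLowerCase(Locale.ROOT);
            pos++; // skip the colon

            // Header value: everything up to the next CR, with surrounding blanks trimmed.
            int valueStart = pos;
            while (pos < buf.length && buf[pos] != '\r') {
                pos++;
            }
            String value = new String(buf, valueStart, pos - valueStart,
                    StandardCharsets.ISO_8859_1).trim();
            headers.put(name, value); // simplification: a repeated header overwrites
            pos += 2; // skip CRLF
        }
        return headers;
    }

    /** Looks up one header (case-insensitively) in a raw request that starts with a request line. */
    static String header(String rawRequest, String name) {
        int requestLineEnd = rawRequest.indexOf("\r\n");
        if (requestLineEnd < 0) {
            throw new IllegalArgumentException("No request line");
        }
        byte[] buf = rawRequest.getBytes(StandardCharsets.ISO_8859_1);
        return parseHeaders(buf, requestLineEnd + 2).get(name.toLowerCase(Locale.ROOT));
    }
}
```

For example, `HeaderParseSketch.header("GET / HTTP/1.1\r\nHost: example.org\r\nAccept: */*\r\n\r\n", "Host")` returns `example.org`. The real parser has to be able to stop in the middle of a name or value and resume after the next fill(), which is why it tracks its position in fields such as headerParsePos instead of using local variables.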

2.3 Request object conversion by the Adapter

The source code of the CoyoteAdapter#service() method is shown below. Its key processing can be summarized as follows:

- connector.createRequest() calls the Connector#createRequest() method to create the HttpServletRequest object provided to the upper-level container

- The chained call connector.getService().getContainer().getPipeline().getFirst().invoke(request, response) passes the HttpServletRequest object into the container for processing. The composition of a Service in Tomcat is fully reflected here: the component path is Connector -> Service -> Engine -> Pipeline -> Valve. Readers who do not remember it can refer again to Tomcat source code analysis (1) - structure composition and core components

    public void service(org.apache.coyote.Request req, org.apache.coyote.Response res)
            throws Exception {

        Request request = (Request) req.getNote(ADAPTER_NOTES);
        Response response = (Response) res.getNote(ADAPTER_NOTES);

        if (request == null) {
            // Create objects
            request = connector.createRequest();
            request.setCoyoteRequest(req);
            response = connector.createResponse();
            response.setCoyoteResponse(res);

            // Link objects
            request.setResponse(response);
            response.setRequest(request);

            // Set as notes
            req.setNote(ADAPTER_NOTES, request);
            res.setNote(ADAPTER_NOTES, response);

            // Set query string encoding
            req.getParameters().setQueryStringCharset(connector.getURICharset());
        }

        if (connector.getXpoweredBy()) {
            response.addHeader("X-Powered-By", POWERED_BY);
        }

        boolean async = false;
        boolean postParseSuccess = false;

        req.getRequestProcessor().setWorkerThreadName(THREAD_NAME.get());

        try {
            // Parse and set Catalina and configuration specific
            // request parameters
            postParseSuccess = postParseRequest(req, request, res, response);
            if (postParseSuccess) {
                //check valves if we support async
                request.setAsyncSupported(
                        connector.getService().getContainer().getPipeline().isAsyncSupported());
                // Calling the container
                connector.getService().getContainer().getPipeline().getFirst().invoke(
                        request, response);
            }
            if (request.isAsync()) {
                async = true;
                ReadListener readListener = req.getReadListener();
                if (readListener != null && request.isFinished()) {
                    // Possible the all data may have been read during service()
                    // method so this needs to be checked here
                    ClassLoader oldCL = null;
                    try {
                        oldCL = request.getContext().bind(false, null);
                        if (req.sendAllDataReadEvent()) {
                            req.getReadListener().onAllDataRead();
                        }
                    } finally {
                        request.getContext().unbind(false, oldCL);
                    }
                }

                Throwable throwable =
                        (Throwable) request.getAttribute(RequestDispatcher.ERROR_EXCEPTION);

                // If an async request was started, is not going to end once
                // this container thread finishes and an error occurred, trigger
                // the async error process
                if (!request.isAsyncCompleting() && throwable != null) {
                    request.getAsyncContextInternal().setErrorState(throwable, true);
                }
            } else {
                request.finishRequest();
                response.finishResponse();
            }

        } catch (IOException e) {
            // Ignore
        } finally {
            AtomicBoolean error = new AtomicBoolean(false);
            res.action(ActionCode.IS_ERROR, error);

            if (request.isAsyncCompleting() && error.get()) {
                // Connection will be forcibly closed which will prevent
                // completion happening at the usual point. Need to trigger
                // call to onComplete() here.
                res.action(ActionCode.ASYNC_POST_PROCESS, null);
                async = false;
            }

            // Access log
            if (!async && postParseSuccess) {
                // Log only if processing was invoked.
                // If postParseRequest() failed, it has already logged it.
                Context context = request.getContext();
                Host host = request.getHost();
                // If the context is null, it is likely that the endpoint was
                // shutdown, this connection closed and the request recycled in
                // a different thread. That thread will have updated the access
                // log so it is OK not to update the access log here in that
                // case.
                // The other possibility is that an error occurred early in
                // processing and the request could not be mapped to a Context.
                // Log via the host or engine in that case.
                long time = System.currentTimeMillis() - req.getStartTime();
                if (context != null) {
                    context.logAccess(request, response, time, false);
                } else if (response.isError()) {
                    if (host != null) {
                        host.logAccess(request, response, time, false);
                    } else {
                        connector.getService().getContainer().logAccess(
                                request, response, time, false);
                    }
                }
            }

            req.getRequestProcessor().setWorkerThreadName(null);

            // Recycle the wrapper request and response
            if (!async) {
                updateWrapperErrorCount(request, response);
                request.recycle();
                response.recycle();
            }
        }
    }
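
The first few lines of this method follow a small but useful pattern: the container-level request is created once per underlying request and then stored on it as a "note", so later calls on the same connection reuse the same wrapper. The sketch below illustrates only that idea. The types `CoyoteLikeRequest` and `ServletLevelRequest`, the note array size, and the class `AdapterNoteSketch` are simplified stand-ins invented for this example, not Tomcat's classes.

```java
class AdapterNoteSketch {
    static final int ADAPTER_NOTES = 1;

    /** Stand-in for the low-level request produced by the Processor. */
    static final class CoyoteLikeRequest {
        private final Object[] notes = new Object[8];
        private final String uri;

        CoyoteLikeRequest(String uri) {
            this.uri = uri;
        }

        Object getNote(int index) {
            return notes[index];
        }

        void setNote(int index, Object value) {
            notes[index] = value;
        }

        String uri() {
            return uri;
        }
    }

    /** Stand-in for the servlet-level request handed to the container. */
    static final class ServletLevelRequest {
        private final CoyoteLikeRequest coyoteRequest;

        ServletLevelRequest(CoyoteLikeRequest coyoteRequest) {
            this.coyoteRequest = coyoteRequest;
        }

        String getRequestURI() {
            return coyoteRequest.uri(); // delegate to the wrapped low-level object
        }
    }

    /** Creates the wrapper on first use and caches it as a note on the low-level request. */
    static ServletLevelRequest adapt(CoyoteLikeRequest req) {
        ServletLevelRequest request = (ServletLevelRequest) req.getNote(ADAPTER_NOTES);
        if (request == null) {
            request = new ServletLevelRequest(req);
            req.setNote(ADAPTER_NOTES, request);
        }
        return request;
    }

    /** Returns true if adapting the same low-level request twice yields the same wrapper. */
    static boolean reusesWrapper() {
        CoyoteLikeRequest req = new CoyoteLikeRequest("/index.html");
        return adapt(req) == adapt(req);
    }
}
```

`AdapterNoteSketch.reusesWrapper()` returns true, and `adapt(new CoyoteLikeRequest("/a")).getRequestURI()` returns `/a`. In the method above, this reuse is paired with the recycle() calls in the finally block, which clear the wrapper's state for the next request instead of discarding the object.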

2.4 Processing in the Container


After the processing in Section 2.3, the HttpServletRequest used by the upper layer enters the container processing phase. The first Valve it passes through is StandardEngineValve#invoke(). As the code shows, the main logic of this method is to pass the request to the next-level container, the Host, which eventually calls StandardHostValve#invoke()

    public final void invoke(Request request, Response response)
            throws IOException, ServletException {

        // Select the Host to be used for this Request
        Host host = request.getHost();
        if (host == null) {
            // HTTP 0.9 or HTTP 1.0 request without a host when no default host
            // is defined. This is handled by the CoyoteAdapter.
            return;
        }
        if (request.isAsyncSupported()) {
            request.setAsyncSupported(host.getPipeline().isAsyncSupported());
        }

        // Ask this Host to process this request
        host.getPipeline().getFirst().invoke(request, response);
    }

The core logic of StandardHostValve#invoke() is to pass the request to the next-level container, the Context, which eventually calls the StandardContextValve#invoke() method

    public final void invoke(Request request, Response response)
            throws IOException, ServletException {

        // Select the Context to be used for this Request
        Context context = request.getContext();
        if (context == null) {
            return;
        }

        if (request.isAsyncSupported()) {
            request.setAsyncSupported(context.getPipeline().isAsyncSupported());
        }

        boolean asyncAtStart = request.isAsync();

        try {
            context.bind(Globals.IS_SECURITY_ENABLED, MY_CLASSLOADER);

            if (!asyncAtStart && !context.fireRequestInitEvent(request.getRequest())) {
                // Don't fire listeners during async processing (the listener
                // fired for the request that called startAsync()).
                // If a request init listener throws an exception, the request
                // is aborted.
                return;
            }

            // Ask this Context to process this request. Requests that are
            // already in error must have been routed here to check for
            // application defined error pages so DO NOT forward them to the
            // application for processing.
            try {
                if (!response.isErrorReportRequired()) {
                    context.getPipeline().getFirst().invoke(request, response);
                }
            } catch (Throwable t) {
                ExceptionUtils.handleThrowable(t);
                container.getLogger().error("Exception Processing " + request.getRequestURI(), t);
                // If a new error occurred while trying to report a previous
                // error allow the original error to be reported.
                if (!response.isErrorReportRequired()) {
                    request.setAttribute(RequestDispatcher.ERROR_EXCEPTION, t);
                    throwable(request, response, t);
                }
            }

            // Now that the request/response pair is back under container
            // control lift the suspension so that the error handling can
            // complete and/or the container can flush any remaining data
            response.setSuspended(false);

            Throwable t = (Throwable) request.getAttribute(RequestDispatcher.ERROR_EXCEPTION);

            // Protect against NPEs if the context was destroyed during a
            // long running request.
            if (!context.getState().isAvailable()) {
                return;
            }

            // Look for (and render if found) an application level error page
            if (response.isErrorReportRequired()) {
                // If an error has occurred that prevents further I/O, don't waste time
                // producing an error report that will never be read
                AtomicBoolean result = new AtomicBoolean(false);
                response.getCoyoteResponse().action(ActionCode.IS_IO_ALLOWED, result);
                if (result.get()) {
                    if (t != null) {
                        throwable(request, response, t);
                    } else {
                        status(request, response);
                    }
                }
            }

            if (!request.isAsync() && !asyncAtStart) {
                context.fireRequestDestroyEvent(request.getRequest());
            }
        } finally {
            // Access a session (if present) to update last accessed time, based
            // on a strict interpretation of the specification
            if (ACCESS_SESSION) {
                request.getSession(false);
            }

            context.unbind(Globals.IS_SECURITY_ENABLED, MY_CLASSLOADER);
        }
    }
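
The hand-off from one container level to the next can be illustrated with a small standalone sketch. It is not Tomcat code: SketchValve, SketchContainer and PipelineSketch are hypothetical names, and each container here has a fixed child, whereas Tomcat reads the target Host, Context and Wrapper from the request.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical, simplified stand-in for a Tomcat valve. */
interface SketchValve {
    void invoke(List<String> trace);
}

/** Hypothetical container (Engine/Host/Context/Wrapper) with a single valve. */
final class SketchContainer {
    private final String name;
    private final SketchContainer child; // null for the lowest level

    SketchContainer(String name, SketchContainer child) {
        this.name = name;
        this.child = child;
    }

    /** Loosely corresponds to getPipeline().getFirst() in the code above. */
    SketchValve firstValve() {
        return trace -> {
            trace.add(name);                      // this level's own processing
            if (child != null) {
                child.firstValve().invoke(trace); // hand over to the next level
            }
        };
    }
}

final class PipelineSketch {
    static List<String> run() {
        SketchContainer wrapper = new SketchContainer("Wrapper", null);
        SketchContainer context = new SketchContainer("Context", wrapper);
        SketchContainer host = new SketchContainer("Host", context);
        SketchContainer engine = new SketchContainer("Engine", host);

        List<String> trace = new ArrayList<>();
        engine.firstValve().invoke(trace);
        return trace;
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints [Engine, Host, Context, Wrapper]
    }
}
```

Each level only knows how to reach the first valve of the level below it, which is why the real valves all end with the same getPipeline().getFirst().invoke(request, response) call.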

The StandardContextValve#invoke() method in turn passes the request to the lowest-level container, the Wrapper, so StandardWrapperValve#invoke() is called next

    public final void invoke(Request request, Response response)
            throws IOException, ServletException {

        // Disallow any direct access to resources under WEB-INF or META-INF
        MessageBytes requestPathMB = request.getRequestPathMB();
        if ((requestPathMB.startsWithIgnoreCase("/META-INF/", 0))
                || (requestPathMB.equalsIgnoreCase("/META-INF"))
                || (requestPathMB.startsWithIgnoreCase("/WEB-INF/", 0))
                || (requestPathMB.equalsIgnoreCase("/WEB-INF"))) {
            response.sendError(HttpServletResponse.SC_NOT_FOUND);
            return;
        }

        // Select the Wrapper to be used for this Request
        Wrapper wrapper = request.getWrapper();
        if (wrapper == null || wrapper.isUnavailable()) {
            response.sendError(HttpServletResponse.SC_NOT_FOUND);
            return;
        }

        // Acknowledge the request
        try {
            response.sendAcknowledgement();
        } catch (IOException ioe) {
            container.getLogger().error(sm.getString(
                    "standardContextValve.acknowledgeException"), ioe);
            request.setAttribute(RequestDispatcher.ERROR_EXCEPTION, ioe);
            response.sendError(HttpServletResponse.SC_INTERNAL_SERVER_ERROR);
            return;
        }

        if (request.isAsyncSupported()) {
            request.setAsyncSupported(wrapper.getPipeline().isAsyncSupported());
        }
        wrapper.getPipeline().getFirst().invoke(request, response);
    }
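
The first check in this valve, which hides WEB-INF and META-INF from direct access, can be reproduced on plain strings. The following is only a sketch of the same comparison; ProtectedPathSketch and isProtected() are hypothetical names, and Tomcat itself performs the test on a MessageBytes object rather than a String.

```java
import java.util.Locale;

final class ProtectedPathSketch {

    /** Returns true when the path points at or below WEB-INF or META-INF, ignoring case. */
    static boolean isProtected(String requestPath) {
        String p = requestPath.toUpperCase(Locale.ROOT);
        return p.equals("/WEB-INF") || p.startsWith("/WEB-INF/")
                || p.equals("/META-INF") || p.startsWith("/META-INF/");
    }

    public static void main(String[] args) {
        System.out.println(isProtected("/web-inf/web.xml")); // prints true
        System.out.println(isProtected("/WEB-INFO/page"));   // prints false
        System.out.println(isProtected("/index.jsp"));       // prints false
    }
}
```

Matching both the bare directory name and the name followed by a slash avoids rejecting unrelated paths that merely share the prefix, such as /WEB-INFO/page.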

The key processing in StandardWrapperValve#invoke() consists of the following steps:

- First, wrapper.allocate() calls the StandardWrapper#allocate() method to obtain the Servlet instance, which is created using reflection

- Then ApplicationFilterFactory.createFilterChain() wraps the Servlet and its matching filters in an ApplicationFilterChain execution chain object

- Call the ApplicationFilterChain#doFilter() method to execute the execution chain

    public final void invoke(Request request, Response response)
            throws IOException, ServletException {

        // Initialize local variables we may need
        boolean unavailable = false;
        Throwable throwable = null;
        // This should be a Request attribute...
        long t1 = System.currentTimeMillis();
        requestCount.incrementAndGet();
        StandardWrapper wrapper = (StandardWrapper) getContainer();
        Servlet servlet = null;
        Context context = (Context) wrapper.getParent();

        // Check for the application being marked unavailable
        if (!context.getState().isAvailable()) {
            response.sendError(HttpServletResponse.SC_SERVICE_UNAVAILABLE,
                    sm.getString("standardContext.isUnavailable"));
            unavailable = true;
        }

        // Check for the servlet being marked unavailable
        if (!unavailable && wrapper.isUnavailable()) {
            container.getLogger().info(sm.getString("standardWrapper.isUnavailable",
                    wrapper.getName()));
            long available = wrapper.getAvailable();
            if ((available > 0L) && (available < Long.MAX_VALUE)) {
                response.setDateHeader("Retry-After", available);
                response.sendError(HttpServletResponse.SC_SERVICE_UNAVAILABLE,
                        sm.getString("standardWrapper.isUnavailable", wrapper.getName()));
            } else if (available == Long.MAX_VALUE) {
                response.sendError(HttpServletResponse.SC_NOT_FOUND,
                        sm.getString("standardWrapper.notFound", wrapper.getName()));
            }
            unavailable = true;
        }

        // Allocate a servlet instance to process this request
        try {
            if (!unavailable) {
                servlet = wrapper.allocate();
            }
        } catch (UnavailableException e) {
            container.getLogger().error(
                    sm.getString("standardWrapper.allocateException", wrapper.getName()), e);
            long available = wrapper.getAvailable();
            if ((available > 0L) && (available < Long.MAX_VALUE)) {
                response.setDateHeader("Retry-After", available);
                response.sendError(HttpServletResponse.SC_SERVICE_UNAVAILABLE,
                        sm.getString("standardWrapper.isUnavailable", wrapper.getName()));
            } else if (available == Long.MAX_VALUE) {
                response.sendError(HttpServletResponse.SC_NOT_FOUND,
                        sm.getString("standardWrapper.notFound", wrapper.getName()));
            }
        } catch (ServletException e) {
            container.getLogger().error(sm.getString("standardWrapper.allocateException",
                    wrapper.getName()), StandardWrapper.getRootCause(e));
            throwable = e;
            exception(request, response, e);
        } catch (Throwable e) {
            ExceptionUtils.handleThrowable(e);
            container.getLogger().error(sm.getString("standardWrapper.allocateException",
                    wrapper.getName()), e);
            throwable = e;
            exception(request, response, e);
            servlet = null;
        }

        MessageBytes requestPathMB = request.getRequestPathMB();
        DispatcherType dispatcherType = DispatcherType.REQUEST;
        if (request.getDispatcherType() == DispatcherType.ASYNC) {
            dispatcherType = DispatcherType.ASYNC;
        }
        request.setAttribute(Globals.DISPATCHER_TYPE_ATTR, dispatcherType);
        request.setAttribute(Globals.DISPATCHER_REQUEST_PATH_ATTR, requestPathMB);

        // Create the filter chain for this request
        ApplicationFilterChain filterChain =
                ApplicationFilterFactory.createFilterChain(request, wrapper, servlet);

        // Call the filter chain for this request
        // NOTE: This also calls the servlet's service() method
        Container container = this.container;
        try {
            if ((servlet != null) && (filterChain != null)) {
                // Swallow output if needed
                if (context.getSwallowOutput()) {
                    try {
                        SystemLogHandler.startCapture();
                        if (request.isAsyncDispatching()) {
                            request.getAsyncContextInternal().doInternalDispatch();
                        } else {
                            filterChain.doFilter(request.getRequest(), response.getResponse());
                        }
                    } finally {
                        String log = SystemLogHandler.stopCapture();
                        if (log != null && log.length() > 0) {
                            context.getLogger().info(log);
                        }
                    }
                } else {
                    if (request.isAsyncDispatching()) {
                        request.getAsyncContextInternal().doInternalDispatch();
                    } else {
                        filterChain.doFilter(request.getRequest(), response.getResponse());
                    }
                }
            }
        } catch (ClientAbortException | CloseNowException e) {
            ......
        }
    }
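
To make the "create a Servlet instance using reflection" step concrete, here is a minimal sketch of lazy, reflective allocation. MiniServlet, HelloMiniServlet, MiniWrapper and AllocateSketch are hypothetical names, and the sketch assumes the common case in which one servlet instance is created on first use and then shared by all requests; the real StandardWrapper#allocate() additionally handles initialization, unavailability and other details.

```java
/** Hypothetical stand-in for the Servlet interface. */
interface MiniServlet {
    String service(String request);
}

/** A servlet the container only knows by class name, as if read from web.xml. */
final class HelloMiniServlet implements MiniServlet {
    @Override
    public String service(String request) {
        return "hello " + request;
    }
}

/** Hypothetical wrapper that creates its servlet reflectively on first use. */
final class MiniWrapper {
    private final String servletClassName;
    private MiniServlet instance; // created by the first allocate() call

    MiniWrapper(String servletClassName) {
        this.servletClassName = servletClassName;
    }

    synchronized MiniServlet allocate() {
        if (instance == null) {
            try {
                Class<?> clazz = Class.forName(servletClassName);
                instance = (MiniServlet) clazz.getDeclaredConstructor().newInstance();
            } catch (ReflectiveOperationException e) {
                throw new IllegalStateException("Cannot allocate " + servletClassName, e);
            }
        }
        return instance;
    }
}

final class AllocateSketch {
    static String run() {
        MiniWrapper wrapper = new MiniWrapper(HelloMiniServlet.class.getName());
        MiniServlet first = wrapper.allocate();
        MiniServlet second = wrapper.allocate();
        return first.service("tomcat") + " / same instance: " + (first == second);
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints hello tomcat / same instance: true
    }
}
```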

The ApplicationFilterChain#doFilter() method is implemented as follows. Its core logic is to call the ApplicationFilterChain#internalDoFilter() method, which executes the filters first and then calls servlet.service(request, response), that is, the GenericServlet#service() method

    public void doFilter(ServletRequest request, ServletResponse response)
            throws IOException, ServletException {

        if (Globals.IS_SECURITY_ENABLED) {
            final ServletRequest req = request;
            final ServletResponse res = response;
            try {
                java.security.AccessController.doPrivileged(
                        new java.security.PrivilegedExceptionAction<Void>() {
                            @Override
                            public Void run() throws ServletException, IOException {
                                internalDoFilter(req, res);
                                return null;
                            }
                        }
                );
            } catch (PrivilegedActionException pe) {
                Exception e = pe.getException();
                if (e instanceof ServletException)
                    throw (ServletException) e;
                else if (e instanceof IOException)
                    throw (IOException) e;
                else if (e instanceof RuntimeException)
                    throw (RuntimeException) e;
                else
                    throw new ServletException(e.getMessage(), e);
            }
        } else {
            internalDoFilter(request, response);
        }
    }

    private void internalDoFilter(ServletRequest request, ServletResponse response)
            throws IOException, ServletException {

        // Call the next filter if there is one
        if (pos < n) {
            ApplicationFilterConfig filterConfig = filters[pos++];
            try {
                Filter filter = filterConfig.getFilter();

                if (request.isAsyncSupported() && "false".equalsIgnoreCase(
                        filterConfig.getFilterDef().getAsyncSupported())) {
                    request.setAttribute(Globals.ASYNC_SUPPORTED_ATTR, Boolean.FALSE);
                }
                if (Globals.IS_SECURITY_ENABLED) {
                    final ServletRequest req = request;
                    final ServletResponse res = response;
                    Principal principal = ((HttpServletRequest) req).getUserPrincipal();

                    Object[] args = new Object[]{req, res, this};
                    SecurityUtil.doAsPrivilege("doFilter", filter, classType, args, principal);
                } else {
                    filter.doFilter(request, response, this);
                }
            } catch (IOException | ServletException | RuntimeException e) {
                throw e;
            } catch (Throwable e) {
                e = ExceptionUtils.unwrapInvocationTargetException(e);
                ExceptionUtils.handleThrowable(e);
                throw new ServletException(sm.getString("filterChain.filter"), e);
            }
            return;
        }

        // We fell off the end of the chain -- call the servlet instance
        try {
            if (ApplicationDispatcher.WRAP_SAME_OBJECT) {
                lastServicedRequest.set(request);
                lastServicedResponse.set(response);
            }

            if (request.isAsyncSupported() && !servletSupportsAsync) {
                request.setAttribute(Globals.ASYNC_SUPPORTED_ATTR, Boolean.FALSE);
            }
            // Use potentially wrapped request from this point
            if ((request instanceof HttpServletRequest)
                    && (response instanceof HttpServletResponse)
                    && Globals.IS_SECURITY_ENABLED) {
                final ServletRequest req = request;
                final ServletResponse res = response;
                Principal principal = ((HttpServletRequest) req).getUserPrincipal();
                Object[] args = new Object[]{req, res};
                SecurityUtil.doAsPrivilege("service", servlet, classTypeUsedInService,
                        args, principal);
            } else {
                servlet.service(request, response);
            }
        } catch (IOException | ServletException | RuntimeException e) {
            throw e;
        } catch (Throwable e) {
            e = ExceptionUtils.unwrapInvocationTargetException(e);
            ExceptionUtils.handleThrowable(e);
            throw new ServletException(sm.getString("filterChain.servlet"), e);
        } finally {
            if (ApplicationDispatcher.WRAP_SAME_OBJECT) {
                lastServicedRequest.set(null);
                lastServicedResponse.set(null);
            }
        }
    }
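
The pos < n bookkeeping in internalDoFilter() is easier to follow in isolation. The sketch below keeps only that mechanism: a chain that hands itself to each filter and calls the servlet once the filters are exhausted. StepFilter, StepChain and FilterChainSketch are hypothetical names, and a StringBuilder stands in for the request/response pair.

```java
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical filter: may act before and after passing control down the chain. */
interface StepFilter {
    void doFilter(StringBuilder log, StepChain chain);
}

/** Hypothetical chain that tracks its position the way internalDoFilter() does. */
final class StepChain {
    private final List<StepFilter> filters;
    private final Consumer<StringBuilder> servlet;
    private int pos = 0; // index of the next filter to run

    StepChain(List<StepFilter> filters, Consumer<StringBuilder> servlet) {
        this.filters = filters;
        this.servlet = servlet;
    }

    void doFilter(StringBuilder log) {
        // Call the next filter if there is one
        if (pos < filters.size()) {
            StepFilter next = filters.get(pos++);
            next.doFilter(log, this);
            return;
        }
        // Past the last filter: call the servlet
        servlet.accept(log);
    }
}

final class FilterChainSketch {
    static String run() {
        StepFilter auth = (log, chain) -> {
            log.append("auth> ");
            chain.doFilter(log);
            log.append(" <auth");
        };
        StepFilter gzip = (log, chain) -> {
            log.append("gzip> ");
            chain.doFilter(log);
            log.append(" <gzip");
        };
        StringBuilder log = new StringBuilder();
        new StepChain(List.of(auth, gzip), l -> l.append("service")).doFilter(log);
        return log.toString();
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints auth> gzip> service <gzip <auth
    }
}
```

Because every filter receives the chain itself, a filter that never calls chain.doFilter() stops the request before it reaches the servlet.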

GenericServlet#service() is an abstract method implemented by HttpServlet#service(ServletRequest, ServletResponse), shown below. That implementation casts its arguments and calls the overloaded HttpServlet#service(HttpServletRequest, HttpServletResponse) method, which subclasses may override. The FrameworkServlet in the Spring WebMVC framework overrides this method, so at this point the request enters the Spring WebMVC framework layer

    public void service(ServletRequest req, ServletResponse res)
            throws ServletException, IOException {

        HttpServletRequest request;
        HttpServletResponse response;

        try {
            request = (HttpServletRequest) req;
            response = (HttpServletResponse) res;
        } catch (ClassCastException e) {
            throw new ServletException(lStrings.getString("http.non_http"));
        }
        service(request, response);
    }
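
The cast-then-delegate pattern above relies on two Java mechanisms: overload resolution picks the HTTP-specific service() method at compile time, and virtual dispatch then picks the subclass override at run time. A minimal sketch with hypothetical types (BaseRequest, HttpLikeRequest, GenericLikeServlet, HttpLikeServlet, FrameworkLikeServlet), none of which belong to the Servlet API:

```java
class BaseRequest { }

class HttpLikeRequest extends BaseRequest {
    final String method;

    HttpLikeRequest(String method) {
        this.method = method;
    }
}

/** Hypothetical counterpart of GenericServlet: only the protocol-neutral entry point. */
abstract class GenericLikeServlet {
    abstract String service(BaseRequest req);
}

/** Hypothetical counterpart of HttpServlet: casts, then calls the HTTP overload. */
class HttpLikeServlet extends GenericLikeServlet {
    @Override
    String service(BaseRequest req) {
        if (!(req instanceof HttpLikeRequest)) {
            throw new IllegalArgumentException("non-HTTP request");
        }
        return service((HttpLikeRequest) req); // overload chosen by the static type
    }

    protected String service(HttpLikeRequest req) {
        return "default handling of " + req.method;
    }
}

/** Hypothetical counterpart of FrameworkServlet: overrides the HTTP overload. */
class FrameworkLikeServlet extends HttpLikeServlet {
    @Override
    protected String service(HttpLikeRequest req) {
        return "framework handling of " + req.method;
    }
}

final class ServiceDispatchSketch {
    static String run() {
        // The caller only sees the generic type, as the filter chain does
        GenericLikeServlet servlet = new FrameworkLikeServlet();
        return servlet.service(new HttpLikeRequest("GET"));
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints framework handling of GET
    }
}
```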

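The core logic of FrameworkServlet#service() is to reach the FrameworkServlet#processRequest() method. PATCH requests (and requests whose method cannot be resolved) call it directly, while other requests go through super.service(), that is, HttpServlet#service(), which dispatches to doGet(), doPost() and so on, and FrameworkServlet overrides those methods to delegate to processRequest() as well. processRequest() then calls the doService() method implemented by the subclass, namely DispatcherServlet#doService(). Here we finally meet the DispatcherServlet of the Spring WebMVC framework, and from this point on the request is dispatched to the handler methods we write ourselves. Interested readers can refer to Spring WebMVC source code analysis (1) - request processing main process. With that, the Tomcat request processing flow is essentially complete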

```java
protected void service(HttpServletRequest request, HttpServletResponse response)
        throws ServletException, IOException {

    HttpMethod httpMethod = HttpMethod.resolve(request.getMethod());
    if (httpMethod == HttpMethod.PATCH || httpMethod == null) {
        processRequest(request, response);
    } else {
        super.service(request, response);
    }
}

protected final void processRequest(HttpServletRequest request, HttpServletResponse response)
        throws ServletException, IOException {

    long startTime = System.currentTimeMillis();
    Throwable failureCause = null;

    LocaleContext previousLocaleContext = LocaleContextHolder.getLocaleContext();
    LocaleContext localeContext = buildLocaleContext(request);

    RequestAttributes previousAttributes = RequestContextHolder.getRequestAttributes();
    ServletRequestAttributes requestAttributes =
            buildRequestAttributes(request, response, previousAttributes);

    WebAsyncManager asyncManager = WebAsyncUtils.getAsyncManager(request);
    asyncManager.registerCallableInterceptor(FrameworkServlet.class.getName(),
            new RequestBindingInterceptor());

    initContextHolders(request, localeContext, requestAttributes);

    try {
        doService(request, response);
    } catch (ServletException | IOException ex) {
        failureCause = ex;
        throw ex;
    } catch (Throwable ex) {
        failureCause = ex;
        throw new NestedServletException("Request processing failed", ex);
    } finally {
        resetContextHolders(request, previousLocaleContext, previousAttributes);
        if (requestAttributes != null) {
            requestAttributes.requestCompleted();
        }
        logResult(request, response, failureCause, asyncManager);
        publishRequestHandledEvent(request, response, startTime, failureCause);
    }
}
```
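
The shape of processRequest() is a template method wrapped around thread-bound context: remember the previous value, bind the current one, call the subclass hook, and restore in finally. The following is a reduced sketch of that shape only. ContextBindingServlet, DispatcherLikeServlet and ProcessRequestSketch are hypothetical names, and a single ThreadLocal of String stands in for Spring's LocaleContextHolder and RequestContextHolder.

```java
/** Hypothetical base class showing the bind / call hook / restore sequence. */
abstract class ContextBindingServlet {
    static final ThreadLocal<String> LOCALE = new ThreadLocal<>();

    final String processRequest(String requestLocale) {
        String previous = LOCALE.get();   // remember what was bound before
        LOCALE.set(requestLocale);        // bind the context of this request
        try {
            return doService();           // hook implemented by the subclass
        } finally {
            // Restore the earlier state even if doService() threw
            if (previous == null) {
                LOCALE.remove();
            } else {
                LOCALE.set(previous);
            }
        }
    }

    protected abstract String doService();
}

/** Hypothetical subclass playing the role of DispatcherServlet. */
final class DispatcherLikeServlet extends ContextBindingServlet {
    @Override
    protected String doService() {
        return "handled with locale " + LOCALE.get();
    }
}

final class ProcessRequestSketch {
    static String run() {
        ContextBindingServlet servlet = new DispatcherLikeServlet();
        String result = servlet.processRequest("en-US");
        return result + "; after request: " + ContextBindingServlet.LOCALE.get();
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints handled with locale en-US; after request: null
    }
}
```

Restoring in finally matters because container threads are pooled: a value left bound after one request would otherwise be visible to the next request served by the same thread.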