NameNode Fault Handling

1. Scenario: the NameNode process has died and its stored data is lost. How can the NameNode be recovered?

2. Fault simulation

(1) Kill the NameNode process with kill -9 (here 19886 is the NameNode's PID)

    [lqs@bdc112 current]$ kill -9 19886

(2) Delete the data stored by the NameNode (/home/lqs/module/hadoop-3.1.3/data/dfs/name)

    [lqs@bdc112 hadoop-3.1.3]$ rm -rf /home/lqs/module/hadoop-3.1.3/data/dfs/name/*

3. Solution

(1) Copy the data from the SecondaryNameNode into the original NameNode data directory

    [lqs@bdc112 dfs]$ scp -r lqs@bdc114:/home/lqs/module/hadoop-3.1.3/data/dfs/namesecondary/* ./name/

(2) Restart the NameNode

    [lqs@bdc112 hadoop-3.1.3]$ hdfs --daemon start namenode

(3) Upload a file to the cluster to verify the recovery
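The recovery steps can be collected into a small script. The following is only a sketch under the assumptions of this walkthrough: the host `lqs@bdc114` and the paths come from the commands shown here, while `run`, `recover_namenode` and the `DRY_RUN` switch are hypothetical helper names, not part of Hadoop. By default it only prints the commands it would run.

```shell
#!/bin/sh
# Sketch: restore NameNode metadata from the SecondaryNameNode, then restart.
# HADOOP_HOME and SNN_HOST default to the values used in this walkthrough.
# DRY_RUN, run and recover_namenode are illustrative names, not Hadoop features.
HADOOP_HOME="${HADOOP_HOME:-/home/lqs/module/hadoop-3.1.3}"
SNN_HOST="${SNN_HOST:-lqs@bdc114}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

recover_namenode() {
    name_dir="$HADOOP_HOME/data/dfs/name"
    snn_dir="$HADOOP_HOME/data/dfs/namesecondary"
    # Copy the checkpointed metadata back into the NameNode directory.
    run scp -r "$SNN_HOST:$snn_dir/*" "$name_dir/"
    # Start the NameNode daemon on this host.
    run hdfs --daemon start namenode
}
```

Calling `recover_namenode` prints the two commands; `DRY_RUN=0 recover_namenode` executes them. Because the SecondaryNameNode only holds the state of its last checkpoint, edits made after that checkpoint are not restored by this copy.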

Cluster safe mode & disk repair

Brief introduction

In safe mode, the file system accepts only read requests and rejects change requests such as deletion and modification.

Which scenarios will enter safe mode

1. The NameNode is in safe mode while it loads the image file and the edit log.

2. The NameNode is also in safe mode while it receives DataNode registrations.

Conditions for exiting safe mode

1. dfs.namenode.safemode.min.datanodes: the minimum number of available DataNodes. The default value is 0.

2. dfs.namenode.safemode.threshold-pct: the fraction of all blocks in the system that must have reached their minimum replica count. The default value is 0.999f, so up to 0.1% of blocks may still be missing.

3. dfs.namenode.safemode.extension: the stabilization time. The default value is 30000 milliseconds, i.e. 30 seconds.
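To see which values a cluster actually uses for these three settings, they can be read back with `hdfs getconf -confKey`. The helper below is a minimal sketch; the function name `show_safemode_conf` is made up for illustration, and it assumes `hdfs` is on the PATH.

```shell
#!/bin/sh
# Print the effective value of each safe mode exit setting.
# show_safemode_conf is an illustrative helper name, not a Hadoop command.
show_safemode_conf() {
    for key in \
        dfs.namenode.safemode.min.datanodes \
        dfs.namenode.safemode.threshold-pct \
        dfs.namenode.safemode.extension
    do
        # hdfs getconf -confKey reads the value from the loaded configuration.
        printf '%s = %s\n' "$key" "$(hdfs getconf -confKey "$key")"
    done
}
```

Running `show_safemode_conf` on a node with the cluster configuration prints one `key = value` line per setting.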

Basic syntax

While the cluster is in safe mode, it cannot perform important operations (write operations). After the cluster has finished starting up, it exits safe mode automatically.

    bin/hdfs dfsadmin -safemode get      (Function: view the safe mode status)
    bin/hdfs dfsadmin -safemode enter    (Function: enter safe mode)
    bin/hdfs dfsadmin -safemode leave    (Function: leave safe mode)
    bin/hdfs dfsadmin -safemode wait     (Function: wait until safe mode is off)
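In scripts it is often handy to turn the status line of `-safemode get` into a simple value. The sketch below assumes the output contains `Safe mode is ON` or `Safe mode is OFF`, as in the sessions in this article; `safemode_state` is a hypothetical helper name.

```shell
#!/bin/sh
# Map the status line printed by `hdfs dfsadmin -safemode get` to ON/OFF.
# safemode_state is an illustrative helper, not part of Hadoop.
safemode_state() {
    case "$1" in
        *"Safe mode is ON"*)  echo "ON" ;;
        *"Safe mode is OFF"*) echo "OFF" ;;
        *)                    echo "UNKNOWN" ;;
    esac
}

# Usage against a live cluster (requires hdfs on the PATH):
#   state="$(safemode_state "$(hdfs dfsadmin -safemode get)")"
```

Anything that does not match either message is reported as `UNKNOWN`, so a caller can treat an unreachable NameNode differently from an active safe mode.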

Practice 01, start the cluster and enter the safe mode

1. Restart the cluster

    [lqs@bdc112 subdir0]$ myhadoop.sh stop
    [lqs@bdc112 subdir0]$ myhadoop.sh start

2. Immediately after the cluster starts, try to delete data from the cluster; a prompt reports that the cluster is in safe mode

Practice 02, disk repair

Scenario requirements: data blocks are damaged and the cluster enters safe mode. How can this be resolved?

1. On bdc112, bdc113 and bdc114 respectively, enter the /home/lqs/module/hadoop-3.1.3/data/dfs/data/current/BP-1015489500-192.168.10.102-1611909480872/current/finalized/subdir0/subdir0 directory and delete the same two blocks on each node

    [lqs@bdc112 subdir0]$ pwd
    /home/lqs/module/hadoop-3.1.3/data/dfs/data/current/BP-1015489500-192.168.10.102-1611909480872/current/finalized/subdir0/subdir0
    [lqs@bdc112 subdir0]$ rm -rf blk_1073741847 blk_1073741847_1023.meta
    [lqs@bdc112 subdir0]$ rm -rf blk_1073741865 blk_1073741865_1042.meta

Note: repeat the above commands on bdc113 and bdc114.

2. Restart the cluster

    [lqs@bdc112 subdir0]$ myhadoop.sh stop
    [lqs@bdc112 subdir0]$ myhadoop.sh start

3. Observe http://bdc112:9870/dfshealth.html#tab-overview

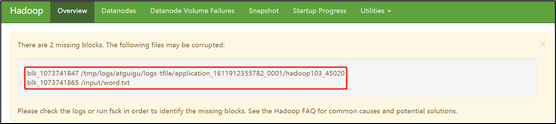

If the page looks like the figure above, safe mode is on and the number of reported blocks does not meet the threshold.

4. Leave safe mode

    [lqs@bdc112 subdir0]$ hdfs dfsadmin -safemode get
    Safe mode is ON
    [lqs@bdc112 subdir0]$ hdfs dfsadmin -safemode leave
    Safe mode is OFF

5. Observe http://bdc112:9870/dfshealth.html#tab-overview

6. In the web UI, delete the metadata of the files whose blocks were removed

7. Observe http://bdc112:9870/dfshealth.html#tab-overview; the cluster is back to normal
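The walkthrough removes the damaged files through the web UI. As a command-line alternative that is not part of the original steps, `hdfs fsck` can list files with corrupt or missing blocks and, with `-delete`, remove them. The sketch below wraps both calls; `clean_corrupt_files` and `DRY_RUN` are hypothetical names, and it assumes safe mode has already been left as in step 4.

```shell
#!/bin/sh
# Sketch: list files with corrupt or missing blocks and optionally delete them.
# clean_corrupt_files and DRY_RUN are illustrative names, not Hadoop features.
clean_corrupt_files() {
    path="${1:-/}"
    # List the files under $path that have corrupt or missing blocks.
    hdfs fsck "$path" -list-corruptfileblocks
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "dry run: would execute 'hdfs fsck $path -delete'"
    else
        # -delete removes the corrupted files from the namespace.
        hdfs fsck "$path" -delete
    fi
}
```

Run `clean_corrupt_files /` first to review the list, then `DRY_RUN=0 clean_corrupt_files /` once it is clear that the listed files can be discarded. The deletion is permanent, so keeping the dry run as the default is a deliberate choice.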

Practice 03

Scenario requirements: simulate waiting for safe mode to end

1. View the current mode

    [lqs@bdc112 hadoop-3.1.3]$ hdfs dfsadmin -safemode get
    Safe mode is OFF

2. First, enter safe mode

    [lqs@bdc112 hadoop-3.1.3]$ bin/hdfs dfsadmin -safemode enter

3. Create and execute the following script

In the /home/lqs/module/hadoop-3.1.3 directory, edit a script named safemode.sh

    [lqs@bdc112 hadoop-3.1.3]$ vim safemode.sh

    #!/bin/bash
    # Block until the NameNode leaves safe mode, then upload a file.
    hdfs dfsadmin -safemode wait
    hdfs dfs -put /home/lqs/module/hadoop-3.1.3/README.txt /

    [lqs@bdc112 hadoop-3.1.3]$ chmod 777 safemode.sh
    [lqs@bdc112 hadoop-3.1.3]$ ./safemode.sh

4. Open another window and execute

    [lqs@bdc112 hadoop-3.1.3]$ bin/hdfs dfsadmin -safemode leave

5. Look at the previous window; the waiting script has continued

    Safe mode is OFF

6. The uploaded data is now on the HDFS cluster
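`hdfs dfsadmin -safemode wait` blocks for as long as safe mode stays on. Where a script should give up after a while, a polling loop around `-safemode get` is one option. This is only a sketch: `wait_for_safemode_off` and its two parameters (number of attempts, delay in seconds) are hypothetical, and it relies on the `Safe mode is OFF` message shown in this article.

```shell
#!/bin/sh
# Poll the safe mode status and give up after a fixed number of attempts.
# wait_for_safemode_off is an illustrative helper, not part of Hadoop.
# $1 = maximum number of attempts (default 30), $2 = delay in seconds (default 2)
wait_for_safemode_off() {
    max_tries="${1:-30}"
    delay="${2:-2}"
    i=0
    while [ "$i" -lt "$max_tries" ]; do
        if hdfs dfsadmin -safemode get | grep -q "Safe mode is OFF"; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    # Safe mode was still on after the last attempt.
    return 1
}
```

For example, `wait_for_safemode_off 60 5 && hdfs dfs -put /home/lqs/module/hadoop-3.1.3/README.txt /` uploads the file only if safe mode ends within roughly five minutes.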