WeChat official account: operation and development story, author: Mr. Dong Zi

1. Fault phenomenon

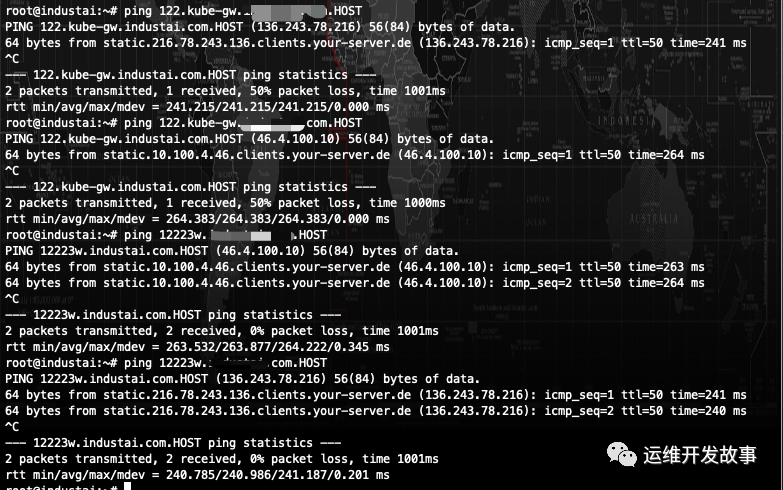

We have an agent service. After it was deployed to the k8s cluster, the pod status was Running, but the server never received its heartbeat. Checking the service log in the cluster, we found a large number of TCP timeouts when connecting to one particular IP address.


2. Troubleshooting process

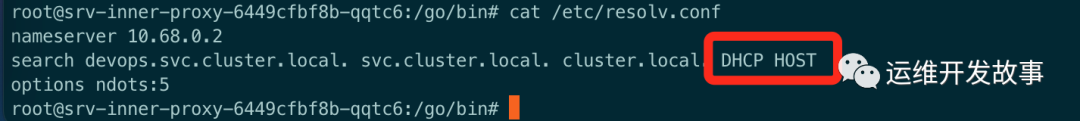

The logs contained a large number of errors: connections to one IP address failed with i/o timeout. So we checked the service's business logic. The service connects only to the server side, whose domain name is configured in an environment variable of the service. Suspecting that the server side might be down, we called the server address from a local host and from a cluster host. Both calls succeeded, so a problem with the server itself was ruled out.

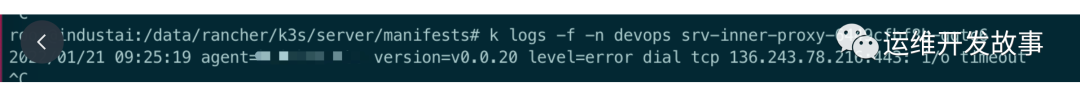

Because the server address could be reached from our local host and from the cluster host, we suspected that the path from the service pod to the server was broken. Testing from inside the pod confirmed that the call failed. Pinging the domain name did work, but the IP address that ping resolved was not our server's external IP. That pointed to a DNS resolution problem, so we used nslookup (most service images don't include it; install it with apt-get install dnsutils or yum install bind-utils, or use kubectl debug to attach a debugging container that shares the pod). The resolved name was very odd: our domain name with HOST appended to it.


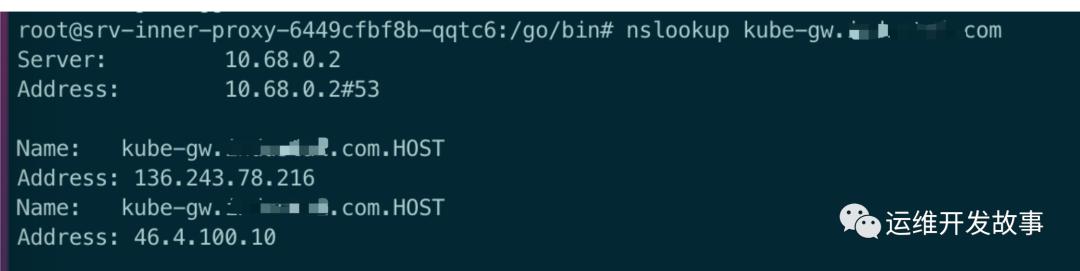

Looking closely at /etc/resolv.conf, we found that the search domains included HOST, so the domain name was being resolved with HOST appended.
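A quick way to spot this class of problem is to look for search domains that consist of a single bare label, i.e. a top-level domain. The Python sketch below is our own heuristic, not a Kubernetes tool: it assumes every legitimate search domain in the cluster has at least two labels (such as `svc.cluster.local`). The search list in the example is hypothetical; the real file contents are only visible in the screenshot.

```python
from typing import List


def single_label_domains(search: List[str]) -> List[str]:
    """Flag search domains made of a single label, such as a bare TLD like 'host'."""
    flagged: List[str] = []
    for domain in search:
        label = domain.rstrip(".").lower()  # ignore a trailing root dot; DNS is case-insensitive
        if label and "." not in label:
            flagged.append(label)
    return flagged


# Hypothetical search list with an extra single-label domain at the end.
search = ["devops.svc.cluster.local", "svc.cluster.local", "cluster.local", "host"]
print(single_label_domains(search))  # ['host']
```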


I also tested several other domain names: any name with HOST appended resolves to an IP address. Searching online, I learned that HOST is a top-level domain with wildcard resolution, so any name under it resolves to an IP address.


At this point the cause of the failure was located: the pod's search domains include the top-level domain HOST, and wildcard resolution under it returns an address that is not our server.
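To make the mechanism concrete, here is a toy Python model of a stub resolver walking the search list. Everything in it is hypothetical: `server.example.com` stands in for our real server domain, the IPs are documentation addresses, and placing `host` last in the search list is an assumption (with dnsPolicy ClusterFirst, kubelet appends the node's search domains after the cluster ones). The wildcard is modelled as "any name ending in `.host.` resolves".

```python
from typing import Optional, Tuple

# All names and addresses below are hypothetical placeholders.
SEARCH = ["devops.svc.cluster.local", "svc.cluster.local", "cluster.local", "host"]
REAL_RECORDS = {"server.example.com.": "203.0.113.10"}  # our (hypothetical) server
WILDCARD_SUFFIX = ".host."                              # wildcard under the TLD
WILDCARD_IP = "198.51.100.7"                            # whatever *.host answers


def lookup(fqdn: str) -> Optional[str]:
    """Toy DNS server: exact records first, then the wildcard TLD, else NXDOMAIN."""
    if fqdn in REAL_RECORDS:
        return REAL_RECORDS[fqdn]
    if fqdn.endswith(WILDCARD_SUFFIX):
        return WILDCARD_IP
    return None


def resolve(name: str, ndots: int = 5) -> Tuple[str, Optional[str]]:
    """Search list first unless the name already has at least `ndots` dots."""
    absolute = name + "."
    expanded = [f"{name}.{domain}." for domain in SEARCH]
    if name.count(".") >= ndots:
        candidates = [absolute] + expanded
    else:
        candidates = expanded + [absolute]
    for fqdn in candidates:
        ip = lookup(fqdn)
        if ip is not None:
            return fqdn, ip  # the first answer wins, even if it is the wrong one
    return absolute, None


print(resolve("server.example.com"))           # ('server.example.com.host.', '198.51.100.7')
print(resolve("server.example.com", ndots=2))  # ('server.example.com.', '203.0.113.10')
```

The second call previews the fix discussed at the end of the article: with a lower ndots, the two-dot name is tried as an absolute name before any search domain.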

3. Failure cause analysis

First, we need to understand how pods in k8s resolve the domain names used to call services.

Analysis of domain name resolution in Kubernetes

- Cluster internal domain name resolution

In Kubernetes, suppose service a accesses service b. If both are in the same Namespace, a pod can reach b directly with curl b. Across Namespaces, append the Namespace to the service name, for example curl b.devops. This raises several questions:

① What is the service name? ② Why can the bare service name be used within the same Namespace, while a different Namespace requires the Namespace suffix? ③ Why can internal domain names be resolved at all, and how does it work?

How DNS resolution works depends on the resolv.conf file in the container:

cat /etc/resolv.conf
nameserver 10.68.0.2
search devops.svc.cluster.local. svc.cluster.local. cluster.local.
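The three kinds of lines that matter here (nameserver, search, options) can be read with a few lines of Python. This is a minimal sketch of our own for inspecting the file, not the parser glibc uses; it keeps only the last search line, as glibc does, and strips trailing root dots for readability.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Union


@dataclass
class ResolvConf:
    nameservers: List[str] = field(default_factory=list)
    search: List[str] = field(default_factory=list)
    options: Dict[str, Union[str, bool]] = field(default_factory=dict)


def parse_resolv_conf(text: str) -> ResolvConf:
    """Parse the nameserver, search and options lines of resolv.conf text."""
    conf = ResolvConf()
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#;":  # blank line or comment
            continue
        keyword, *values = line.split()
        if keyword == "nameserver" and values:
            conf.nameservers.append(values[0])
        elif keyword == "search":
            conf.search = [d.rstrip(".") for d in values]  # the last search line wins
        elif keyword == "options":
            for opt in values:
                name, sep, value = opt.partition(":")
                conf.options[name] = value if sep else True  # e.g. ndots:5 or a bare flag
    return conf


pod_resolv = """nameserver 10.68.0.2
search devops.svc.cluster.local. svc.cluster.local. cluster.local.
"""
conf = parse_resolv_conf(pod_resolv)
print(conf.nameservers)  # ['10.68.0.2']
print(conf.search)       # ['devops.svc.cluster.local', 'svc.cluster.local', 'cluster.local']
```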

The DNS server configured in this file is generally the ClusterIP of the kube-dns Service in k8s. This is a virtual IP: it cannot be pinged, but it can be accessed.

root@other-8-67:~# kubectl get svc -n kube-system |grep dns
kube-dns   ClusterIP   10.68.0.2   <none>   53/UDP,53/TCP,9153/TCP   106d

Therefore, all domain names, whether internal or external to Kubernetes, are resolved through the kube-dns virtual IP 10.68.0.2. In Kubernetes, the full domain name of a Service has the form <service name>.<namespace>.svc.cluster.local, where the service name is the name of the Service object in Kubernetes. So when we run the following command:

curl b

a Service named b must exist; that is the prerequisite.

Inside the container, resolution follows /etc/resolv.conf: the query is sent to nameserver 10.68.0.2, and the string "b" is combined with each search domain in /etc/resolv.conf in turn for DNS lookup. The search domains are:

// The search line looks like the following (the first field differs depending on the pod's namespace)
search devops.svc.cluster.local svc.cluster.local cluster.local

b.devops.svc.cluster.local -> b.svc.cluster.local -> b.cluster.local, until a match is found.

Therefore, both curl b and curl b.devops complete the DNS request, but they perform different sequences of DNS lookups:

// curl b: found on the first attempt (b + devops.svc.cluster.local)
b.devops.svc.cluster.local
// curl b.devops: the first attempt (b.devops + devops.svc.cluster.local) finds nothing
b.devops.devops.svc.cluster.local
// the second attempt (b.devops + svc.cluster.local) succeeds
b.devops.svc.cluster.local

Therefore curl b is more efficient than curl b.devops, because curl b.devops needs one extra DNS query.
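The two lookup sequences above can be reproduced with a short Python sketch. It assumes the pod runs in namespace `devops`, that a Service `b` exists there (the `EXISTING` set is hypothetical), and that both names have few enough dots for the search list to be tried before the name itself; that dot threshold is the ndots option discussed later in this article.

```python
from typing import List, Set

SEARCH = ["devops.svc.cluster.local", "svc.cluster.local", "cluster.local"]
EXISTING: Set[str] = {"b.devops.svc.cluster.local"}  # hypothetical: Service b in devops


def queried_names(name: str) -> List[str]:
    """Names queried in order until one exists: search domains first, then the name itself."""
    queried: List[str] = []
    for candidate in [f"{name}.{d}" for d in SEARCH] + [name]:
        queried.append(candidate)
        if candidate in EXISTING:
            break
    return queried


print(queried_names("b"))         # ['b.devops.svc.cluster.local']
print(queried_names("b.devops"))  # ['b.devops.devops.svc.cluster.local', 'b.devops.svc.cluster.local']
```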

- Cluster external domain name resolution

Are search domains also used for external domain names? It depends, but in most cases they are. Taking baidu.com as an example, let's capture packets to see which DNS queries are generated when a container accesses baidu.com. Note: to capture these DNS packets, we must first enter the network namespace of the DNS server container (not the container that initiates the DNS request).

Because DNS containers often have no bash, we cannot enter them with docker exec to capture packets. Instead:

// 1. Using the DNS container's ID, print the PID of its process

docker inspect --format "{{.State.Pid}}" 16938de418ac

// 2. Enter the network Namespace of this container

nsenter -n -t 54438

// 3. Grab DNS packet

tcpdump -i eth0 udp dst port 53|grep baidu.com

In another container, look up the baidu.com domain name:

nslookup baidu.com <IP of the DNS pod being captured>

Note: the IP address of the DNS server container is given at the end of the nslookup command because, without it, when there are multiple DNS server containers the DNS requests may be spread across all of them, so packets captured on a single DNS container could be incomplete. With the IP specified, the requests hit only that one DNS container, and the captured data is complete.

The output looks similar to the following:

11:46:26.843118 IP srv-device-manager-7595d6795c-8rq6n.60857 > kube-dns.kube-system.svc.cluster.local.domain: 19198+ A? baidu.com.devops.svc.cluster.local. (49)
11:46:26.843714 IP srv-device-manager-7595d6795c-8rq6n.35998 > kube-dns.kube-system.svc.cluster.local.domain: 53768+ AAAA? baidu.com.devops.svc.cluster.local. (49)
11:46:26.844260 IP srv-device-manager-7595d6795c-8rq6n.57939 > kube-dns.kube-system.svc.cluster.local.domain: 48864+ A? baidu.com.svc.cluster.local. (45)
11:46:26.844666 IP srv-device-manager-7595d6795c-8rq6n.35990 > kube-dns.kube-system.svc.cluster.local.domain: 43238+ AAAA? baidu.com.svc.cluster.local. (45)
11:46:26.845153 IP srv-device-manager-7595d6795c-8rq6n.58745 > kube-dns.kube-system.svc.cluster.local.domain: 59086+ A? baidu.com.cluster.local. (41)
11:46:26.845543 IP srv-device-manager-7595d6795c-8rq6n.32910 > kube-dns.kube-system.svc.cluster.local.domain: 30930+ AAAA? baidu.com.cluster.local. (41)
11:46:26.845907 IP srv-device-manager-7595d6795c-8rq6n.55367 > kube-dns.kube-system.svc.cluster.local.domain: 58903+ A? baidu.com. (27)
11:46:26.861714 IP srv-device-manager-7595d6795c-8rq6n.32900 > kube-dns.kube-system.svc.cluster.local.domain: 58394+ AAAA? baidu.com. (27)
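Reading long tcpdump output by eye is error-prone, so here is a small Python sketch that extracts the query type and queried name from lines in the format shown above. The regular expression is our own and assumes tcpdump's default one-line DNS output; the sample is an excerpt of the capture above.

```python
import re
from typing import List, Tuple

# Matches the "A? name. (len)" / "AAAA? name. (len)" part of a tcpdump DNS query line.
QUERY_RE = re.compile(r"\b(A|AAAA)\? (\S+) \(\d+\)")

CAPTURE = """\
11:46:26.843118 IP srv-device-manager-7595d6795c-8rq6n.60857 > kube-dns.kube-system.svc.cluster.local.domain: 19198+ A? baidu.com.devops.svc.cluster.local. (49)
11:46:26.844260 IP srv-device-manager-7595d6795c-8rq6n.57939 > kube-dns.kube-system.svc.cluster.local.domain: 48864+ A? baidu.com.svc.cluster.local. (45)
11:46:26.845153 IP srv-device-manager-7595d6795c-8rq6n.58745 > kube-dns.kube-system.svc.cluster.local.domain: 59086+ A? baidu.com.cluster.local. (41)
11:46:26.845907 IP srv-device-manager-7595d6795c-8rq6n.55367 > kube-dns.kube-system.svc.cluster.local.domain: 58903+ A? baidu.com. (27)
11:46:26.861714 IP srv-device-manager-7595d6795c-8rq6n.32900 > kube-dns.kube-system.svc.cluster.local.domain: 58394+ AAAA? baidu.com. (27)
"""


def dns_queries(capture: str) -> List[Tuple[str, str]]:
    """(record type, queried name) pairs in the order they appear."""
    return QUERY_RE.findall(capture)


def lookup_order(capture: str) -> List[str]:
    """Distinct queried names in first-seen order, ignoring the A/AAAA split."""
    order: List[str] = []
    for _, name in dns_queries(capture):
        if name not in order:
            order.append(name)
    return order


print(lookup_order(CAPTURE))
```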

We can see that resolving baidu.com actually went through baidu.com.devops.svc.cluster.local. -> baidu.com.svc.cluster.local. -> baidu.com.cluster.local. -> baidu.com.

This means three wasted, meaningless lookups (each sent as both an A and an AAAA query). The reason is that in Kubernetes, /etc/resolv.conf contains not only the nameserver and search domains but also a very important option: ndots.

/prometheus $ cat /etc/resolv.conf
nameserver 10.66.0.2
search monitor.svc.cluster.local. svc.cluster.local. cluster.local.
options ndots:5

ndots:5 means: if the queried domain name contains fewer than 5 dots ("."), it is treated as a non-fully-qualified (relative) name and the search domains are tried first; if it contains 5 or more dots, it is treated as an absolute domain name and queried as-is first. For example:

If the requested domain name is a.b.c.d.e, it contains four dots, so the container treats it as a non-absolute name and tries it with each search domain from /etc/resolv.conf in turn:

a.b.c.d.e.devops.svc.cluster.local. -> a.b.c.d.e.svc.cluster.local. -> a.b.c.d.e.cluster.local.

until a match is found. If none of the search domains match, a.b.c.d.e. is finally queried as an absolute domain name.
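The ndots rule can be written as a small Python function that only lists the order in which names are tried, without sending any queries. It is a simplified model of a glibc-style stub resolver (other resolver libraries, such as musl, may differ in details), and the name `candidate_order` is our own.

```python
from typing import List


def candidate_order(name: str, search: List[str], ndots: int = 5) -> List[str]:
    """Order in which a glibc-style stub resolver tries names (simplified model)."""
    if name.endswith("."):
        return [name]  # already absolute: the search list is never used
    absolute = name + "."
    expanded = [f"{name}.{d.rstrip('.')}." for d in search]
    if name.count(".") >= ndots:
        return [absolute] + expanded  # enough dots: try the name as-is first
    return expanded + [absolute]      # too few dots: search domains first


SEARCH = ["devops.svc.cluster.local", "svc.cluster.local", "cluster.local"]
for n in ("a.b.c.d.e", "a.b.c.d.e.com", "a.b.c.com."):
    print(n, "->", candidate_order(n, SEARCH))
```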

Let's verify this with packet captures. First, a domain name with fewer than 5 dots:

// DNS resolution request for the domain name a.b.c.d.com
root@srv-xxx-7595d6795c-8rq6n:/go/bin# nslookup a.b.c.d.com
Server:    10.68.0.2
Address:   10.68.0.2#53

** server can't find a.b.c.d.com: NXDOMAIN

// The captured packets are as follows:
root@srv-device-manager-7595d6795c-8rq6n:/go/bin# tcpdump -i eth0 udp dst port 53 -c 20 |grep a.b.c.d.com
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes
20 packets captured
16:14:40.053575 IP srv-device-manager-7595d6795c-8rq6n.37359 > kube-dns.kube-system.svc.cluster.local.domain: 29842+ A? a.b.c.d.com.cluster.local. (43)
16:14:40.054083 IP srv-device-manager-7595d6795c-8rq6n.34813 > kube-dns.kube-system.svc.cluster.local.domain: 19104+ AAAA? a.b.c.d.com.cluster.local. (43)
25 packets received by filter
16:14:40.054983 IP srv-device-manager-7595d6795c-8rq6n.37303 > kube-dns.kube-system.svc.cluster.local.domain: 53902+ A? a.b.c.d.com.devops.svc.cluster.local. (51)
16:14:40.055465 IP srv-device-manager-7595d6795c-8rq6n.40766 > kube-dns.kube-system.svc.cluster.local.domain: 34453+ AAAA? a.b.c.d.com.devops.svc.cluster.local. (51)
0 packets dropped by kernel
16:14:40.055946 IP srv-device-manager-7595d6795c-8rq6n.35443 > kube-dns.kube-system.svc.cluster.local.domain: 24829+ A? a.b.c.d.com.svc.cluster.local. (47)
16:14:40.057698 IP srv-device-manager-7595d6795c-8rq6n.44180 > kube-dns.kube-system.svc.cluster.local.domain: 23046+ AAAA? a.b.c.d.com.svc.cluster.local. (47)
16:14:40.058062 IP srv-device-manager-7595d6795c-8rq6n.56986 > kube-dns.kube-system.svc.cluster.local.domain: 42008+ A? a.b.c.d.com. (29)
16:14:40.075579 IP srv-device-manager-7595d6795c-8rq6n.55738 > kube-dns.kube-system.svc.cluster.local.domain: 32284+ AAAA? a.b.c.d.com. (29)
// Conclusion:
// With fewer than 5 dots, the search domains are tried first, and the name is finally queried as an absolute domain name

Next, a domain name with 5 or more dots:

// DNS resolution request for the domain name a.b.c.d.e.com
root@srv-xxx-7595d6795c-8rq6n:/go/bin# nslookup a.b.c.d.e.com
Server:    10.68.0.2
Address:   10.68.0.2#53

** server can't find a.b.c.d.e.com: NXDOMAIN

// The captured packets are as follows:
root@srv-device-manager-7595d6795c-8rq6n:/go/bin# tcpdump -i eth0 udp dst port 53 -c 20 |grep a.b.c.d.e.com
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes
16:32:39.624305 IP srv-device-manager-7595d6795c-8rq6n.56274 > kube-dns.kube-system.svc.cluster.local.domain: 43582+ A? a.b.c.d.e.com. (31)
20 packets captured
16:32:39.805470 IP srv-device-manager-7595d6795c-8rq6n.56909 > kube-dns.kube-system.svc.cluster.local.domain: 27206+ AAAA? a.b.c.d.e.com. (31)
16:32:39.833203 IP srv-device-manager-7595d6795c-8rq6n.33370 > kube-dns.kube-system.svc.cluster.local.domain: 14881+ A? a.b.c.d.e.com.cluster.local. (45)
21 packets received by filter
16:32:39.833779 IP srv-device-manager-7595d6795c-8rq6n.40814 > kube-dns.kube-system.svc.cluster.local.domain: 43047+ AAAA? a.b.c.d.e.com.cluster.local. (45)
16:32:39.834363 IP srv-device-manager-7595d6795c-8rq6n.53053 > kube-dns.kube-system.svc.cluster.local.domain: 17994+ A? a.b.c.d.e.com.iot.svc.cluster.local. (53)
0 packets dropped by kernel
16:32:39.834740 IP srv-device-manager-7595d6795c-8rq6n.47803 > kube-dns.kube-system.svc.cluster.local.domain: 15951+ AAAA? a.b.c.d.e.com.iot.svc.cluster.local. (53)
16:32:39.835177 IP srv-device-manager-7595d6795c-8rq6n.60845 > kube-dns.kube-system.svc.cluster.local.domain: 38541+ A? a.b.c.d.e.com.svc.cluster.local. (49)
16:32:39.835611 IP srv-device-manager-7595d6795c-8rq6n.36086 > kube-dns.kube-system.svc.cluster.local.domain: 49809+ AAAA? a.b.c.d.e.com.svc.cluster.local. (49)
// Conclusion:
// With 5 or more dots, the name is first queried directly as an absolute domain name; only if that fails are the search domains tried

Optimization method 1: use fully qualified domain name

In fact, the most direct and effective optimization is to use a fully qualified domain name (also called an absolute domain name): end the domain name you access with a trailing ".", which prevents matching through the search domains. Let's capture packets again:

nslookup a.b.c.com.

The captured packets are as follows:

root@srv-device-manager-7595d6795c-8rq6n:/go/bin# tcpdump -i eth0 udp dst port 53 -c 20 |grep a.b.c.com.
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes
16:39:31.771615 IP srv-device-manager-7595d6795c-8rq6n.50332 > kube-dns.kube-system.svc.cluster.local.domain: 50829+ A? a.b.c.com. (27)
20 packets captured
16:39:31.793579 IP srv-device-manager-7595d6795c-8rq6n.51946 > kube-dns.kube-system.svc.cluster.local.domain: 25235+ AAAA? a.b.c.com. (27)

There are no redundant DNS requests.
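The savings can be put in numbers. Assuming every name is queried once for A and once for AAAA (as in all the captures above) and that only the absolute name has a record, a name with fewer than ndots dots costs 2 × (number of search domains + 1) packets, while a name with a trailing dot costs 2. A minimal Python sketch of that arithmetic, with a function name of our own:

```python
def query_packets(name: str, search_domains: int, ndots: int = 5) -> int:
    """Packets sent until the absolute name is answered, assuming only the
    absolute name exists and each name is queried for both A and AAAA."""
    if name.endswith(".") or name.count(".") >= ndots:
        names_tried = 1                   # the absolute name is tried first
    else:
        names_tried = search_domains + 1  # every search domain, then the absolute name
    return 2 * names_tried


print(query_packets("baidu.com", search_domains=3))   # 8, like the baidu.com capture
print(query_packets("a.b.c.com.", search_domains=3))  # 2, like the FQDN capture
```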

Optimization method 2: configure specific ndots for specific applications

In practice, though, absolute domain names are awkward to use. Who writes baidu.com. instead of baidu.com when making a request?

In Kubernetes, ndots defaults to 5 because Kubernetes assumes internal domain names are at most five labels long (for example b.devops.svc.cluster.local, which has four dots). With ndots set to 5, any such internal name is tried against the search domains first and resolved by the cluster DNS, instead of having a chance to be sent out to external DNS. Given that goal, 5 is a reasonable default.

If you need a different value, it is best to configure ndots separately for your own workload (a Deployment is used as the example):

...
spec:
  containers:
  - env:
    - name: GOENV
      value: DEV
    image: xxx/devops/srv-inner-proxy
    imagePullPolicy: IfNotPresent
    lifecycle: {}
    livenessProbe:
      failureThreshold: 3
      httpGet:
        path: /health
        port: 8000
        scheme: HTTP
      initialDelaySeconds: 5
      periodSeconds: 5
      successThreshold: 1
      timeoutSeconds: 1
    name: srv-inner-proxy
    ports:
    - containerPort: 80
      protocol: TCP
    - containerPort: 8000
      protocol: TCP
    readinessProbe:
      failureThreshold: 3
      httpGet:
        path: /health
        port: 8000
        scheme: HTTP
      initialDelaySeconds: 5
      periodSeconds: 5
      successThreshold: 1
      timeoutSeconds: 1
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
  dnsConfig:
    options:
    - name: timeout
      value: "2"
    - name: ndots
      value: "2"
    - name: single-request-reopen
  dnsPolicy: ClusterFirst
...
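To see what the dnsConfig above does to the pod's resolver options, here is a Python sketch of merging `dnsConfig.options` into the base options line. Our understanding is that kubelet merges these options with the ones produced by the DNS policy and that an entry from dnsConfig overrides a base option with the same name; treat that rule as an assumption of this sketch rather than a description of kubelet's code. The base `ndots:5` is taken from the resolv.conf shown earlier.

```python
from typing import Dict, List, Optional


def merge_options(base: List[str], dns_config_options: List[Dict[str, str]]) -> str:
    """Build an 'options' line where dnsConfig entries override base options by name."""
    merged: Dict[str, Optional[str]] = {}
    for opt in base:                  # e.g. "ndots:5" or a bare flag such as "rotate"
        name, sep, value = opt.partition(":")
        merged[name] = value if sep else None
    for opt in dns_config_options:    # e.g. {"name": "ndots", "value": "2"}
        merged[opt["name"]] = opt.get("value")
    parts = [k if v is None else f"{k}:{v}" for k, v in merged.items()]
    return "options " + " ".join(parts)


dns_config = [
    {"name": "timeout", "value": "2"},
    {"name": "ndots", "value": "2"},
    {"name": "single-request-reopen"},
]
print(merge_options(["ndots:5"], dns_config))
# options ndots:2 timeout:2 single-request-reopen
```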

Kubernetes supports four DNS policies:

- None

The Pod gets an empty DNS setting from Kubernetes.

This is typically used when you want to fully customize the DNS configuration, and it must be combined with dnsConfig, which then supplies the DNS settings.

- Default

Some people say that Default is the way to use the host, which is not accurate.

This way, in fact, is to let kubelet decide what DNS policy to use. By default, kubelet uses / etc / resolv Conf (maybe this is how some people say to use the DNS policy of the host), but kubelet can flexibly configure what files to use for DNS policy. We can use kubelet's parameters: – resolv conf = / etc / resolv Conf to determine your DNS resolution file address.

- ClusterFirst

With this policy, DNS in the Pod uses the DNS service configured in the cluster, i.e. kube-dns or CoreDNS in Kubernetes. Queries that do not match the cluster domain suffix are forwarded by the cluster DNS server to its upstream nameservers, which by default come from the node's DNS configuration. ClusterFirst is also the policy used when dnsPolicy is not set.

- ClusterFirstWithHostNet

In some scenarios a Pod runs in host network mode (hostNetwork, sharing the node's network). In that mode, all containers in the Pod use the node's /etc/resolv.conf for DNS queries; if you want host network mode but still want to use the Kubernetes DNS service, set dnsPolicy to ClusterFirstWithHostNet.

These DNS policies are set through the dnsPolicy field of the Pod spec, including the pod templates of Deployments, ReplicationControllers and similar resources.
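The four policies can be summarized as a small Python lookup. It is a rough model of the behaviour described above plus one detail from the Kubernetes documentation: a hostNetwork pod left on ClusterFirst falls back to the Default behaviour. The function name and return strings are our own, and for every policy other than None, dnsConfig is applied on top of the result.

```python
def resolv_conf_source(dns_policy: str = "ClusterFirst", host_network: bool = False) -> str:
    """Rough summary of where a pod's resolver configuration comes from."""
    if dns_policy == "None":
        return "dnsConfig only"
    if dns_policy == "Default":
        return "node (kubelet --resolv-conf)"
    if dns_policy == "ClusterFirstWithHostNet":
        return "cluster DNS"
    if dns_policy == "ClusterFirst":
        # A hostNetwork pod with ClusterFirst falls back to the Default behaviour.
        return "node (kubelet --resolv-conf)" if host_network else "cluster DNS"
    raise ValueError(f"unknown dnsPolicy: {dns_policy}")


print(resolv_conf_source())                                   # cluster DNS
print(resolv_conf_source("ClusterFirst", host_network=True))  # node (kubelet --resolv-conf)
```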

4. Conclusion

From the analysis above, a good fix for this fault is to set dnsConfig in the Deployment and, without affecting direct service-to-service calls inside the cluster, lower ndots from the default 5 to 2. As long as the server domain name contains at least two dots, the proxy service pod then resolves it as an absolute domain name first instead of going through the search domains, and it resolves to the correct IP address. This fault also reminded me that troubleshooting requires understanding the underlying concepts and the root cause, not just the fix.
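Applying the fix to an existing Deployment does not require editing the whole manifest. The Python sketch below only builds the JSON body for `kubectl patch deployment <deployment-name> -p '...'`; the deployment name is a placeholder, and we have not verified whether an existing dnsConfig.options list is merged or replaced by such a patch, so check the resulting pod spec afterwards. Changing the pod template rolls out new pods.

```python
import json


def ndots_patch(ndots: int = 2) -> str:
    """Patch body that sets ndots in a Deployment's pod template."""
    patch = {
        "spec": {
            "template": {
                "spec": {
                    # PodDNSConfigOption.value is a string in the API, hence str().
                    "dnsConfig": {"options": [{"name": "ndots", "value": str(ndots)}]}
                }
            }
        }
    }
    return json.dumps(patch, separators=(",", ":"))


print(f"kubectl patch deployment <deployment-name> -p '{ndots_patch()}'")
```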

Reference article:

https://cloud.tencent.com/developer/article/1804653


github: https://github.com/orgs/sunsharing-note/dashboard

Blog: https://www.devopstory.cn
