The only problem caused by fragmentation

When the data of a table is divided into multiple tables, the unique primary key of a single table cannot be controlled through the database, because it is necessary to ensure the uniqueness of the primary key id of the same table in multiple real tables.

Note: 5 X version and 4 The X version is slightly different in configuration

Built in solution

Sharding JDBC has two built-in primary key algorithms to choose from;

uuid

uuid uses the uuid string generated by jdk to ensure that the primary key id is not repeated

The built-in algorithm class is UUIDKeyGenerateAlgorithm

The way of use is also very simple

keyGenerateStrategy: column: order_id keyGeneratorName: uuid

keyGenerators:

uuid:

type: UUID

Disadvantages: 1 The primary key must be of string type;

2. If the primary key is discontinuous, when inserting data into the b-tree index, the performance of establishing the index is worse than that of continuous data;

Advantages: relatively simple

Not a very recommended way;

Snowflake algorithm

Snowflake algorithm is a distributed sequence algorithm of Twitter open source. The generated is a 64bit integer. And the generated is continuous according to time;

The overall structure is the serialization of timestamp + workid + machine id + milliseconds

The specific details are not repeated here. Please Baidu or google by yourself

Usage:

Configure the primary key id column, configuration type and configuration attribute. You can configure a work id, that is, the middle machine id, which can effectively avoid duplication;

keyGenerateStrategy: column: order_id keyGeneratorName: snowflak

Define snowflow on the last face

Advantages: the generated sequence is ordered according to time;

Disadvantages: it depends on the clock. If the clock is dialed back, it may cause repetition;

Note that the generated id is a 64 bit integer. Remember to do some processing schemes when returning it to the front end. For example, handle json serialization and serialize long according to string

Custom algorithm

Of course, if the two built-in algorithms cannot meet the requirements, sharding JDBC supports spi extended distributed sequence algorithm;

1. First, define a sequence algorithm class. Here, only AtomicLong # is used to do a global self increment operation. Of course, it can be extended by itself in actual business;

Implement the getType method and return the type name of this algorithm

package com.example.shardingjdbcdemo.spi;

import org.apache.shardingsphere.sharding.spi.KeyGenerateAlgorithm;

import org.springframework.context.annotation.Scope;

import org.springframework.stereotype.Component;

import java.util.Properties;

import java.util.concurrent.atomic.AtomicLong;

@Component

public final class IncrementKeyGenerate implements KeyGenerateAlgorithm {

private AtomicLong atomicLong;

@Override

public Properties getProps() {

return KeyGenerateAlgorithm.super.getProps();

}

@Override

public void setProps(Properties props) {

KeyGenerateAlgorithm.super.setProps(props);

}

@Override

public String getType() {

return "INCREMENT";

}

@Override

public Comparable<?> generateKey() {

return atomicLong.getAndIncrement();

}

@Override

public void init() {

atomicLong = new AtomicLong(1);

}

@Override

public boolean isDefault() {

return true;

}

}

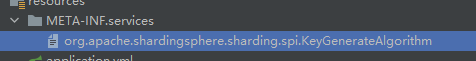

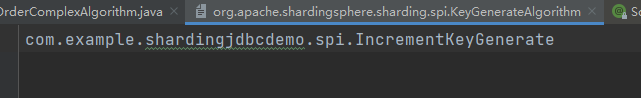

2. Define spi so that customized classes can be scanned;

Create META-INF folder under restore, and then create services folder. Strictly install java spi standard to define.

The name of the file is the name of the interface to be implemented. Org apache. shardingsphere. sharding. spi. KeyGenerateAlgorithm

Write concrete implementation class internally

3. Next, when defining the type of primary key algorithm, you can use the type defined by yourself;

The complete configuration is as follows

spring:

application:

name: shardingjdbcDemo

main:

allow-bean-definition-overriding: true

shardingsphere:

#Data source information

datasource:

#Data source named dbsource-0

dbsource-0:

type: com.zaxxer.hikari.HikariDataSource

driver-class-name: com.mysql.cj.jdbc.Driver

jdbc-url: jdbc:mysql://127.0.0.1:3306/db1?serverTimezone=Asia/Shanghai&useUnicode=true&characterEncoding=utf8&useSSL=false

username: root

password: 123456

names: dbsource-0

#Configuration of rules

rules:

sharding:

tables:

#Rules for order table

t_order:

actual-data-nodes: dbsource-0.t_order_$->{0..1}

#Table splitting strategy

table-strategy:

#Standard strategy

standard:

sharding-column: user_id

sharding-algorithm-name: order-alg

keyGenerateStrategy:

column: order_id

keyGeneratorName: order-incr

#Configuration of user table

t_user:

actual-data-nodes: dbsource-0.t_user_$->{0..1}

table-strategy:

#Standard strategy

standard:

sharding-column: user_id

sharding-algorithm-name: user-alg

keyGenerateStrategy:

column: user_id

keyGeneratorName: user-incr

#Partition algorithm

sharding-algorithms:

order-alg:

type: INLINE

props:

algorithm-expression: t_order_$->{user_id % 2}

user-alg:

type: INLINE

props:

algorithm-expression: t_user_$->{user_id % 2}

binding-tables:

- t_order,t_user

keyGenerators:

user-incr:

type: INCREMENT

order-incr:

type: INCREMENT

props:

sql-show: true

sql-comment-parse-enabled: true

Brief description: define algorithm name, algorithm type and some attribute information in keyGenerators;

Then, write the name of the definition in the # keyGenerateStrategy of the specific table by using # keyGeneratorName to complete the association definition of a primary key generation algorithm;

After the data is inserted, the id will be written to each table in an incremental installation order;

Note: two algorithms are defined here to use different instance classes for different tables and avoid using a self increasing id for different tables

Note: if you use mybatis plus, you should specify id type = idtype Auto can take effect;

@TableId(type = IdType.AUTO)

Some other distributed sequence schemes

- Redis global auto increment: use the incr command of redis to maintain the global auto increment order

- mysql maintains auto increment, and uses a separate data table to record the auto increment location and other information of each business table;

- leaf algorithm, meituan's self increasing algorithm