The difference between the two methods: the Sequential method builds a network in which the output of each layer is the input of the next layer, so it cannot express non-sequential structures that contain skip connections. In that case we can build the network with a class instead, since a class can encapsulate an arbitrary network structure.
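To make the distinction concrete, here is a plain-Python analogy (not Keras code). The "layers" `double` and `add_one`, the helper `run_sequential`, and the class `SkipNet` are all hypothetical names chosen for illustration; the point is only that a layer list runs strictly in order, while a hand-written forward pass can reuse the input later on.

```python
# Plain-Python analogy (not Keras): each "layer" is an ordinary function on a number.
def double(x):
    return 2 * x

def add_one(x):
    return x + 1

def run_sequential(layers, x):
    """Feed each layer's output straight into the next layer."""
    for layer in layers:
        x = layer(x)
    return x

class SkipNet:
    """A hand-written forward pass that a plain layer list cannot express."""
    def call(self, x):
        h = add_one(double(x))   # the ordinary sequential path
        return h + x             # skip connection: the input is added back in

sequential_out = run_sequential([double, add_one], 3)  # (2 * 3) + 1
skip_out = SkipNet().call(3)                            # (2 * 3) + 1 + 3
```

In a real model the same idea applies: a class with its own `call` method decides how intermediate results are combined, which a strictly ordered container has no way to describe.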

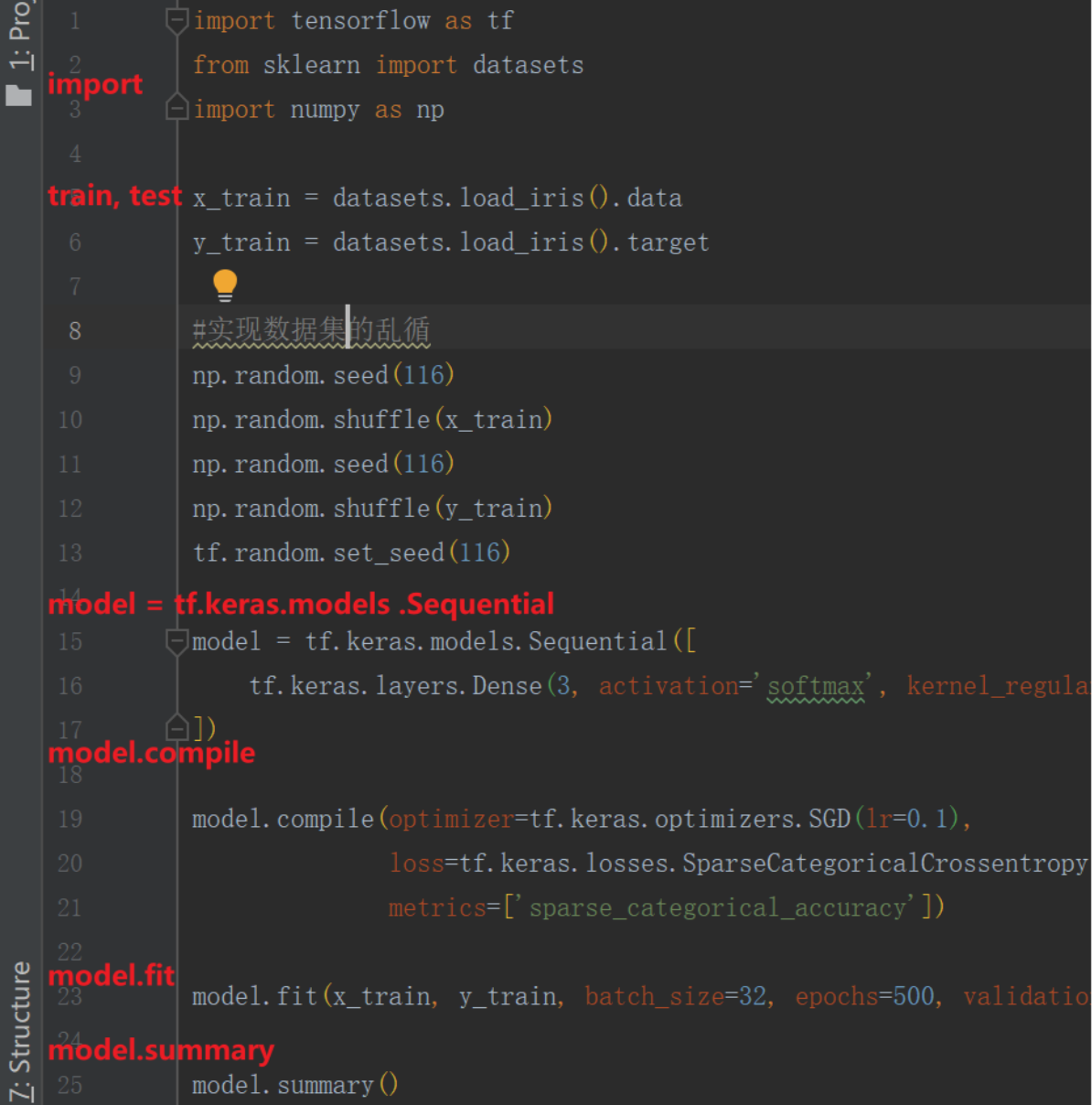

**I. Building a network with Sequential in six steps:**

1. **import**

2. **train, test** --- *specify the training set and test set to feed to the network*

3. **model = tf.keras.models.Sequential** --- *build the network structure in Sequential, describing each layer in order; this is equivalent to one forward pass*

4. **model.compile** --- *configure the training method in compile(): which optimizer, which loss function, and which evaluation metric to use during training*

5. **model.fit** --- *run the training process in fit(): specify the input features and labels of the training and test sets, the batch size, and the number of passes over the dataset (epochs)*

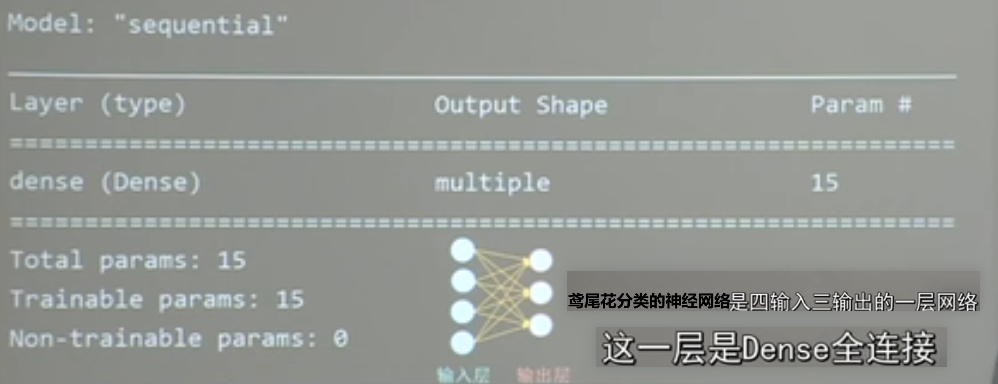

6. **model.summary** --- *print the network structure and parameter statistics with summary() (step 6 serves as an outline of the model)*
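The six steps above can be sketched in a few lines. This is a minimal sketch, not the code from the screenshots: the random toy data (60 samples, 4 features, 3 classes) is an assumption standing in for a real dataset, and the small epoch count is chosen only so the example finishes quickly.

```python
# Step 1: import
import numpy as np
import tensorflow as tf

# Step 2: train / test data (assumption: random toy data, 4 features, 3 classes)
rng = np.random.default_rng(0)
x_train = rng.normal(size=(60, 4)).astype("float32")
y_train = rng.integers(0, 3, size=60)

# Step 3: describe the network layer by layer inside Sequential
model = tf.keras.models.Sequential([
    tf.keras.layers.Dense(3, activation="softmax"),
])

# Step 4: choose optimizer, loss function and evaluation metric
model.compile(
    optimizer=tf.keras.optimizers.SGD(learning_rate=0.1),
    # The last layer already applies softmax, so the output is a probability distribution
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
    metrics=["sparse_categorical_accuracy"],
)

# Step 5: train; the last 20% of the samples are held out for validation
history = model.fit(
    x_train, y_train,
    batch_size=32, epochs=5,
    validation_split=0.2, validation_freq=1,
    verbose=0,
)

# Step 6: print the structure and parameter statistics
model.summary()
```

With real data, only step 2 changes; the remaining steps keep the same shape.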

Functions used:

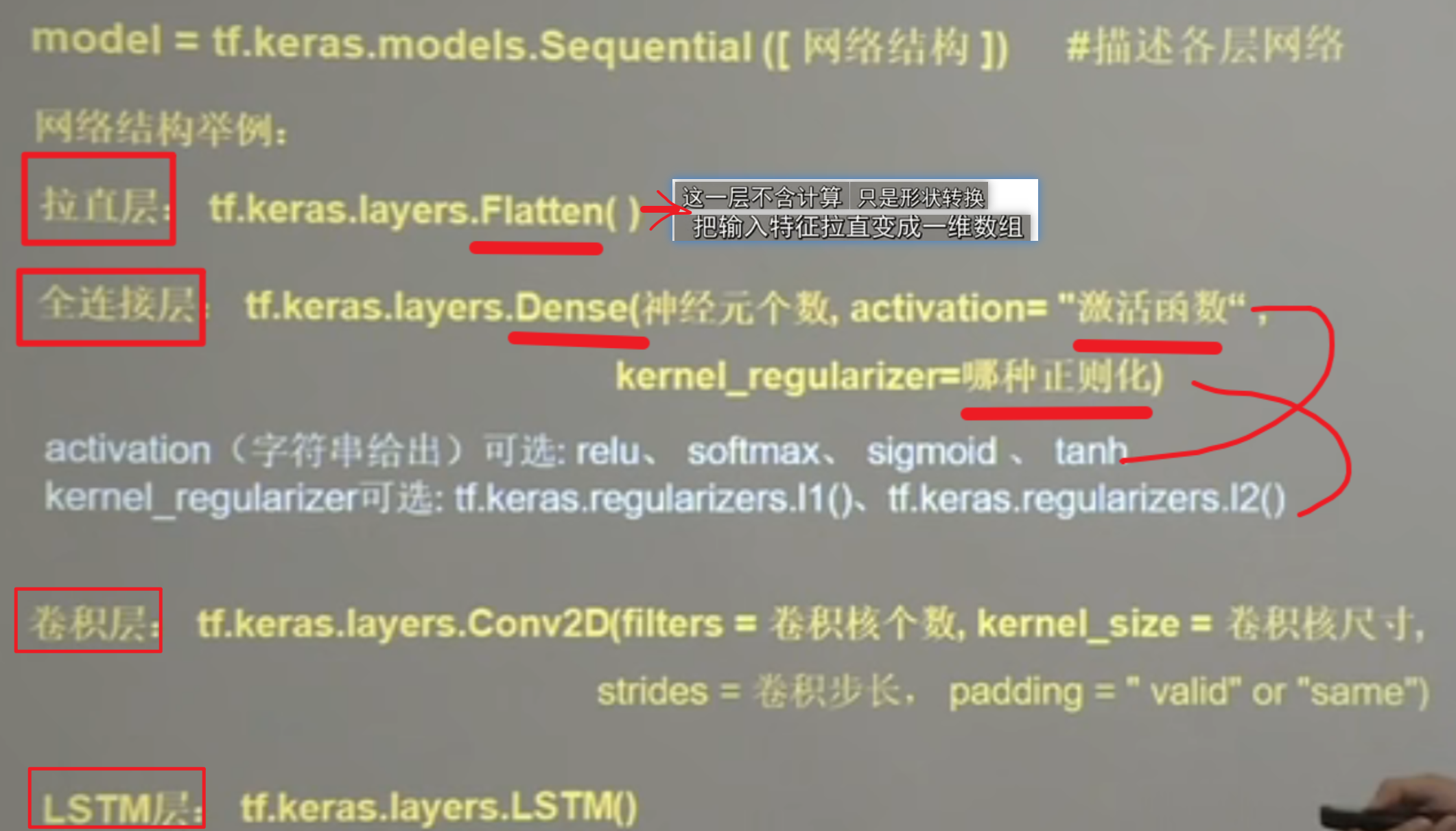

* Sequential() --- can be thought of as a container that encapsulates a neural network

Flatten layer: Flatten() transforms the input features into a one-dimensional array
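As a rough picture of what flattening means for a single sample, the sketch below unrolls a nested list row by row. The helper `flatten` is a hypothetical plain-Python function, not the Keras layer, which additionally keeps the batch dimension and flattens each sample separately.

```python
def flatten(nested):
    """Recursively unroll nested lists into one flat list, row by row."""
    flat = []
    for item in nested:
        if isinstance(item, list):
            flat.extend(flatten(item))
        else:
            flat.append(item)
    return flat

# A tiny 2 x 3 "image" standing in for one input sample
image = [[1, 2, 3],
         [4, 5, 6]]
features = flatten(image)
```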

Fully connected layer: Dense()

Dense implementation:

output = activation(dot(input, kernel) + bias), where activation is the element-wise activation function passed as the activation argument, kernel is the weight matrix created by the layer, and bias is the bias vector created by the layer (only applicable if use_bias is True). In short: y = wx + b
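The formula can be traced by hand for one sample. The following is a minimal pure-Python sketch of that computation, not the Keras implementation; the helper names `softmax` and `dense` and all numeric values are assumptions made for illustration.

```python
import math

def softmax(z):
    """Turn raw scores into probabilities that sum to 1."""
    shift = max(z)                      # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, kernel, bias, activation):
    """output = activation(dot(input, kernel) + bias) for a single sample."""
    units = len(bias)
    pre_activation = [
        sum(inputs[i] * kernel[i][j] for i in range(len(inputs))) + bias[j]
        for j in range(units)
    ]
    return activation(pre_activation)

# Hypothetical values: 2 input features feeding 3 units
x = [1.0, 2.0]
kernel = [[0.1, 0.2, 0.3],
          [0.4, 0.5, 0.6]]   # shape (input_dim, units)
bias = [0.0, 0.0, 0.0]
y = dense(x, kernel, bias, softmax)
```

Here the pre-activation values are 0.9, 1.2 and 1.5, so after softmax the third unit receives the largest probability.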

keras.layers.Dense(units,
    activation=None,
    use_bias=True,
    kernel_initializer='glorot_uniform',
    bias_initializer='zeros',
    kernel_regularizer=None,
    bias_regularizer=None,
    activity_regularizer=None,
    kernel_constraint=None,
    bias_constraint=None)

units: number of neurons (the dimensionality of the output)

activation: activation function

use_bias: whether to use a bias vector

kernel_initializer: weight initialization function

bias_initializer: bias initialization function

kernel_regularizer: weight regularization function

bias_regularizer: bias regularization function

activity_regularizer: output regularization function

kernel_constraint: weight constraint function

bias_constraint: bias constraint function
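To illustrate what a regularizer argument contributes, an L2 regularizer adds a penalty proportional to the sum of the squared weights to the loss. The sketch below computes that penalty by hand; `l2_penalty` is a hypothetical helper, the weights are made up, and the factor 0.01 matches the default of `tf.keras.regularizers.l2()`.

```python
def l2_penalty(kernel, factor=0.01):
    """L2 penalty: factor * (sum of all squared weights)."""
    return factor * sum(w * w for row in kernel for w in row)

# Hypothetical 2 x 2 weight matrix
kernel = [[1.0, -2.0],
          [0.5, 0.0]]
penalty = l2_penalty(kernel)  # 0.01 * (1 + 4 + 0.25 + 0)
```

Larger weights produce a larger penalty, which is how the regularizer discourages them during training.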

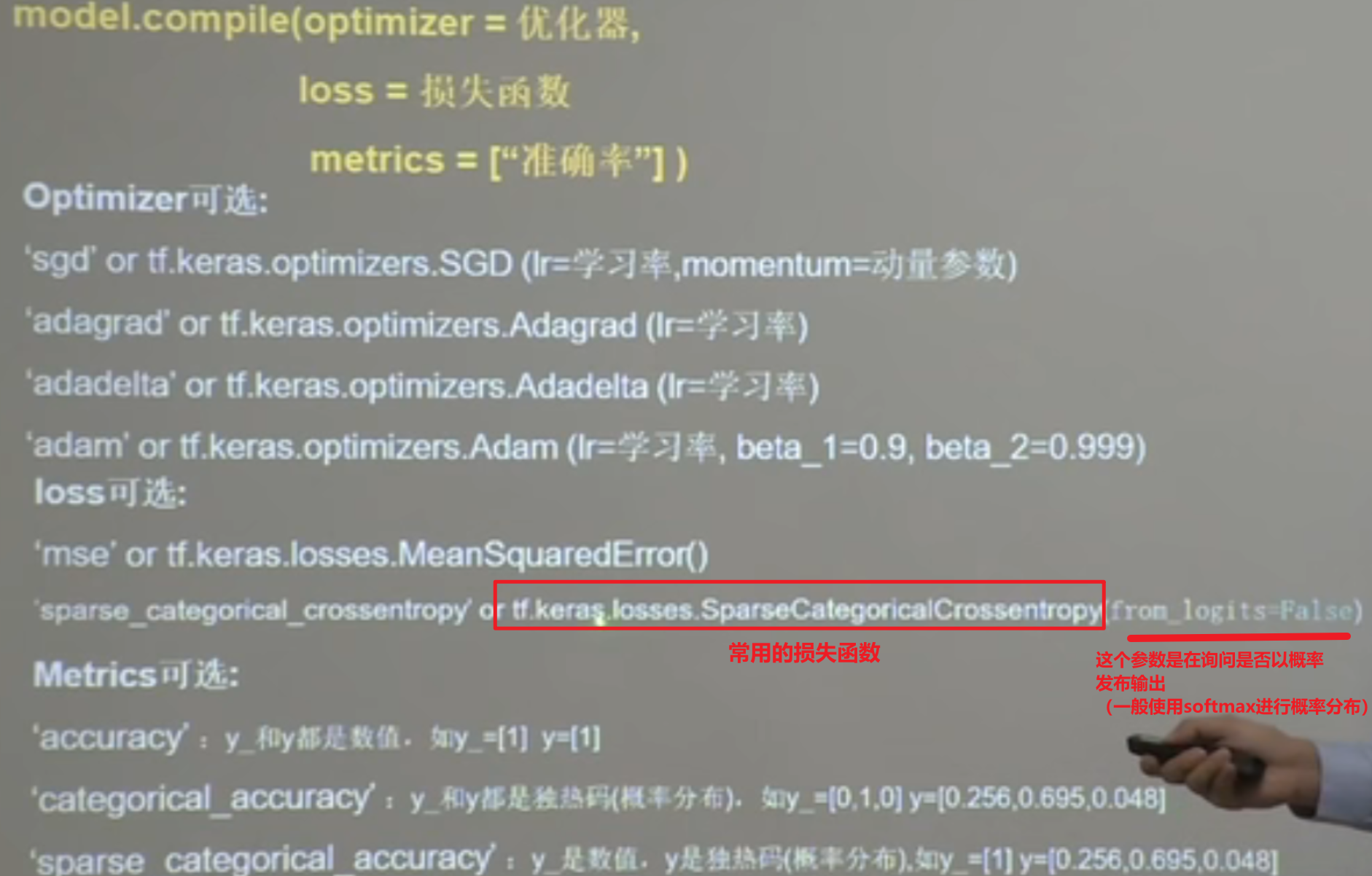

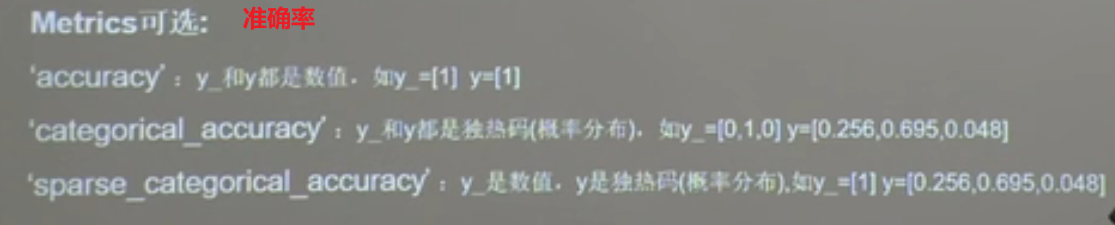

* compile() configures the training method

Value of from_logits (accuracy problems can sometimes be traced to this setting):

1. If the network's prediction is output directly, without passing through a probability distribution such as softmax, set from_logits=True

2. If the network's prediction passes through a probability distribution before it is output, set from_logits=False
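Why the setting matters can be seen with a small hand computation. This is a simplified pure-Python sketch, not the Keras loss (which also clips probabilities); `softmax` and `sparse_cce` are hypothetical helpers and the scores are made up.

```python
import math

def softmax(z):
    """Turn raw scores (logits) into probabilities that sum to 1."""
    shift = max(z)
    exps = [math.exp(v - shift) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_cce(label, outputs, from_logits):
    """Cross-entropy for one sample with an integer class label."""
    probs = softmax(outputs) if from_logits else outputs
    return -math.log(probs[label])

logits = [2.0, 1.0, 0.1]     # raw network output, no softmax applied
probs = softmax(logits)      # the same output after a softmax layer

loss_from_logits = sparse_cce(0, logits, from_logits=True)
loss_from_probs = sparse_cce(0, probs, from_logits=False)
# Wrong flag: the output is already a distribution, so softmax is applied twice
loss_mismatched = sparse_cce(0, probs, from_logits=True)
```

The first two losses agree, while the mismatched one is noticeably larger because the second softmax flattens the distribution. A mismatch of this kind distorts the training signal without raising any error.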

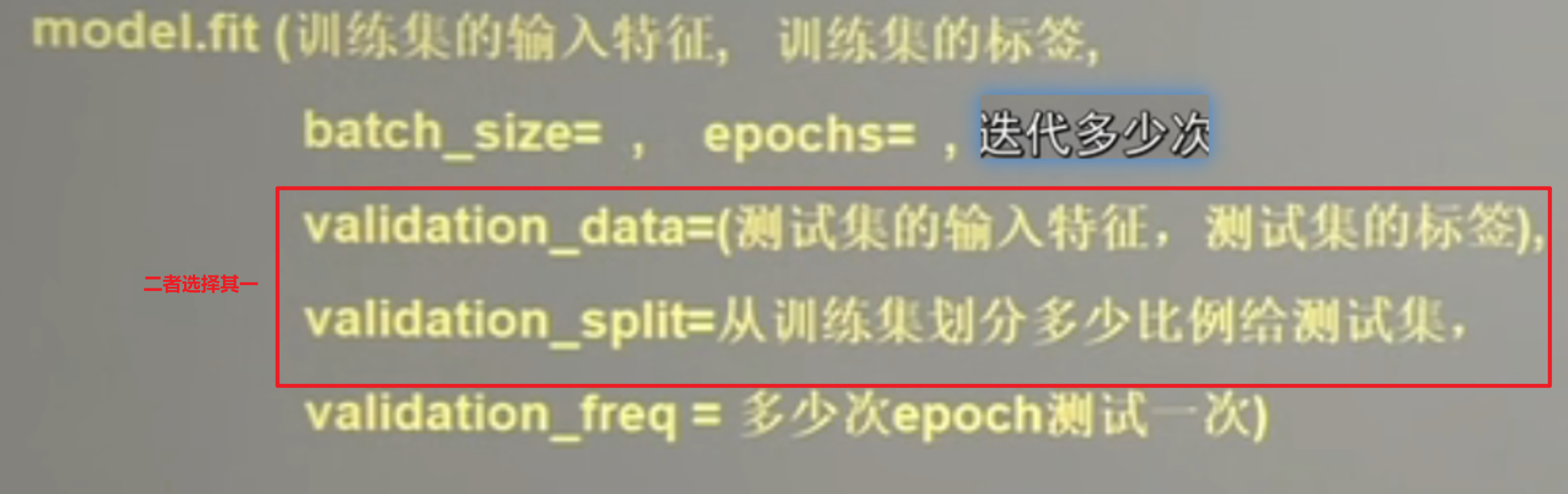

* fit()

* summary() --- prints the structure and parameter statistics of the network
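The parameter count that summary() reports for a Dense layer can be checked by hand: one weight per input-unit pair plus one bias per unit. The helper `dense_param_count` is hypothetical, and the sizes (4 input features, 3 units) are assumed to match the iris model used later in this post.

```python
def dense_param_count(input_dim, units, use_bias=True):
    """Weights (input_dim * units) plus one bias per unit if use_bias is set."""
    return input_dim * units + (units if use_bias else 0)

iris_params = dense_param_count(input_dim=4, units=3)             # 4 * 3 + 3
no_bias_params = dense_param_count(input_dim=4, units=3, use_bias=False)
```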

Code screenshot

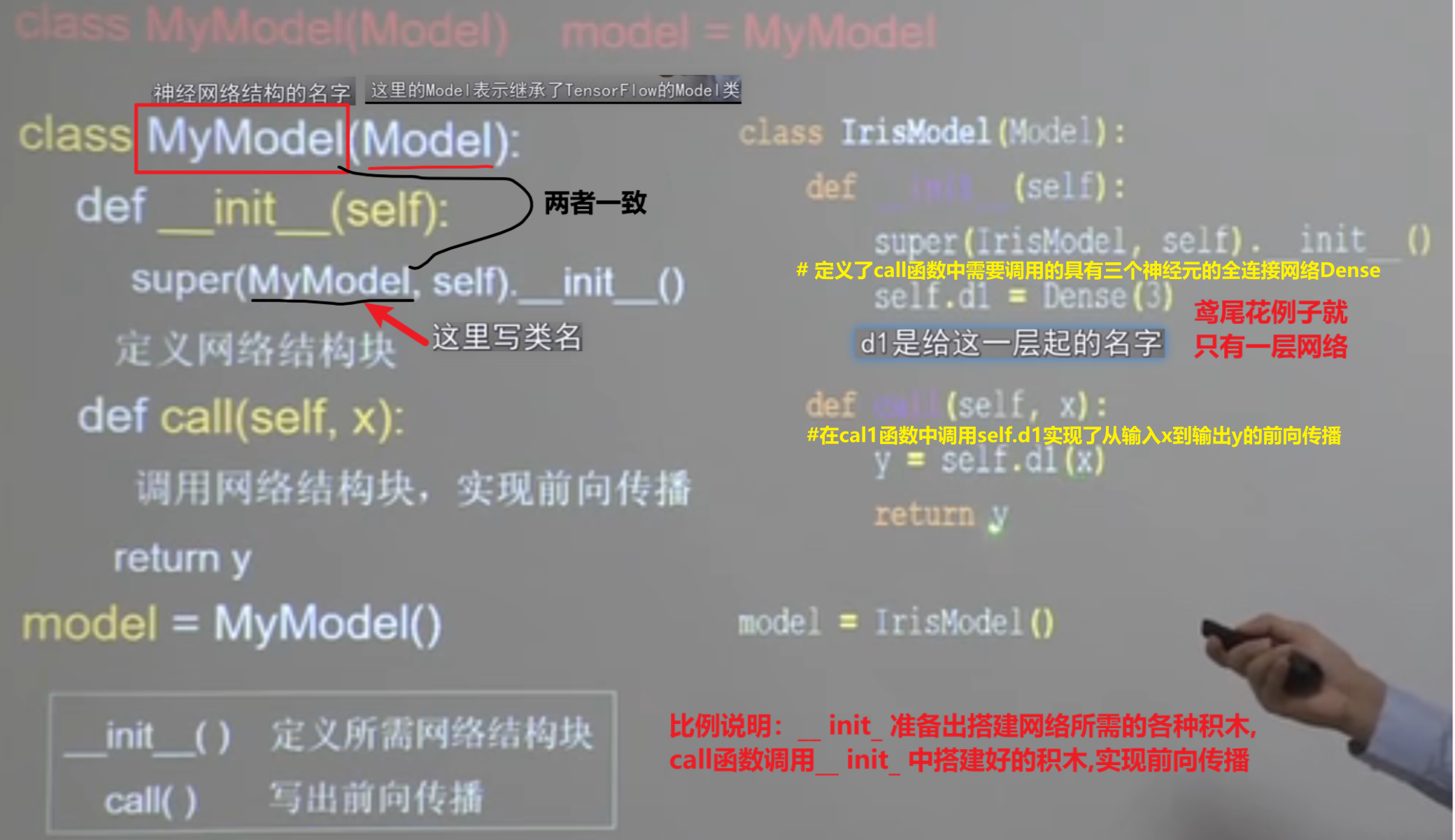

**II. Building a network with a class in six steps:**

1. import

2. train, test

3. class MyModel(Model), then model = MyModel()

4. model.compile

5. model.fit

6. model.summary

import tensorflow as tf
# These two imports are added for the class-based approach
from tensorflow.keras.layers import Dense
from tensorflow.keras import Model
from sklearn import datasets
import numpy as np

x_train = datasets.load_iris().data
y_train = datasets.load_iris().target

# Shuffle features and labels with the same seed so each sample keeps its label
np.random.seed(116)
np.random.shuffle(x_train)
np.random.seed(116)
np.random.shuffle(y_train)
tf.random.set_seed(116)

# Define the IrisModel class
class IrisModel(Model):
    def __init__(self):
        super(IrisModel, self).__init__()
        # Define the fully connected Dense layer with three neurons that call() will use
        self.d1 = Dense(3, activation='softmax', kernel_regularizer=tf.keras.regularizers.l2())

    def call(self, x):
        # Calling self.d1 inside call() implements the forward pass from input x to output y
        y = self.d1(x)
        return y

# Instantiate the model
model = IrisModel()

# learning_rate replaces the deprecated lr argument
model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=0.1),
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

model.fit(x_train, y_train, batch_size=32, epochs=500, validation_split=0.2, validation_freq=20)

model.summary()
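The numbers passed to fit() above translate into concrete counts. The arithmetic below is a small sketch assuming the standard 150-sample iris dataset; the variable names are illustrative. Keras takes the validation portion from the end of the supplied arrays, before any per-epoch shuffling.

```python
import math

n_samples = 150          # size of the iris dataset
validation_split = 0.2
batch_size = 32
epochs = 500
validation_freq = 20

# Samples kept for training, and samples held out from the end for validation
n_train = math.floor(n_samples * (1.0 - validation_split))
n_val = n_samples - n_train

# Batches per epoch: the last batch is smaller than batch_size
steps_per_epoch = math.ceil(n_train / batch_size)

# Validation runs once every validation_freq epochs
validation_runs = epochs // validation_freq
```

So each epoch trains on 120 samples in 4 batches, and the 30 held-out samples are evaluated 25 times over the whole run.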