Two Methods of Saving and Loading TensorFlow Models

1. saver.save / saver.restore:

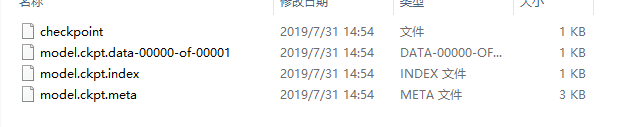

Saving a model this way produces four files, where `model.ckpt` is the name (path prefix) given to the model:

1. `checkpoint`: a text file that records the paths of the saved model files.
2. `model.ckpt.data-00000-of-00001`: stores the values of the model's variables (the network weights).
3. `model.ckpt.index`: a binary file that indexes the variables, recording where each variable's value is stored in the data file.
4. `model.ckpt.meta`: a binary protobuf file that stores the model's computational graph (the network structure).

For a detailed explanation of protobuf, see https://www.jianshu.com/p/419efe983cb2. Knowing roughly what it is is enough here. (That is just a beginner's view; going through it in depth takes too much time, so experts please go easy on me.)
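As a rough illustration of the naming scheme, the sketch below builds the four file names for a given checkpoint prefix. It is plain Python, not TensorFlow code; it assumes TensorFlow's default naming with a single data shard (`data-00000-of-00001`), and the helper `checkpoint_file_names` is hypothetical.

```python
def checkpoint_file_names(prefix, num_shards=1):
    """Return the file names a tf.train.Saver checkpoint is expected to produce.
    prefix is the checkpoint name relative to the save directory.
    Hypothetical helper; assumes TensorFlow's default file naming."""
    # Text file shared by all checkpoints saved in the same directory
    names = ["checkpoint"]
    # Variable values, split into one or more numbered shards
    names += ["%s.data-%05d-of-%05d" % (prefix, i, num_shards) for i in range(num_shards)]
    # Index mapping each variable to its location in the data shards
    names.append(prefix + ".index")
    # Serialized MetaGraphDef (the graph structure)
    names.append(prefix + ".meta")
    return names


print(checkpoint_file_names("model.ckpt"))
# ['checkpoint', 'model.ckpt.data-00000-of-00001', 'model.ckpt.index', 'model.ckpt.meta']
```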

Saving the model with Saver:

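```python
import os
from os.path import join as pjoin

# Written for the TensorFlow 1.x API. Under TF 2.x, replace the import with:
# import tensorflow.compat.v1 as tf; tf.disable_v2_behavior()
import tensorflow as tf

graph = tf.Graph()

with graph.as_default():
    # Two scalar inputs, fed at run time
    X_1 = tf.placeholder(tf.float32, name='input_x1')
    X_2 = tf.placeholder(tf.float32, name='input_x2')
    # A variable, so the checkpoint has a value to save
    b = tf.Variable(1.3, dtype=tf.float32, name='input_b')
    x1_add_x2 = tf.add(X_1, X_2)
    add_x_b = tf.add(x1_add_x2, b, name='add_op')

    saver = tf.train.Saver()
    init = tf.global_variables_initializer()

with tf.Session(graph=graph) as sess:
    sess.run(init)
    feed_dict = {X_1: 1.2, X_2: 3.1}
    y = sess.run(add_x_b, feed_dict=feed_dict)  # 1.2 + 3.1 + 1.3
    print(y)
    # Make sure the target directory exists before saving
    os.makedirs('model_save1', exist_ok=True)
    # Writes checkpoint, model.ckpt.data-..., model.ckpt.index and model.ckpt.meta
    saver.save(sess, 'model_save1/model.ckpt')
```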

The program generates the four files mentioned above.
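The `checkpoint` file is what `tf.train.latest_checkpoint` reads in the restore step that follows. It is a text-format protobuf with lines such as `model_checkpoint_path: "model.ckpt"`. The sketch below is a simplified, stdlib-only reading of that file, not TensorFlow's implementation: the real function parses the protobuf properly and also checks that the checkpoint files exist. The helper name `read_latest_checkpoint` is hypothetical.

```python
import os
import re
import tempfile


def read_latest_checkpoint(checkpoint_dir):
    """Return the path recorded as model_checkpoint_path in
    <checkpoint_dir>/checkpoint, or None if it cannot be found.
    Simplified sketch, not TensorFlow's own parser (hypothetical helper)."""
    state_file = os.path.join(checkpoint_dir, "checkpoint")
    if not os.path.isfile(state_file):
        return None
    with open(state_file) as f:
        for line in f:
            # Matches lines such as: model_checkpoint_path: "model.ckpt"
            match = re.match(r'\s*model_checkpoint_path:\s*"(.*)"\s*$', line)
            if match:
                path = match.group(1)
                # A relative path is taken relative to the checkpoint directory
                if os.path.isabs(path):
                    return path
                return os.path.join(checkpoint_dir, path)
    return None


# Usage: write a checkpoint file of the form described above, then read it back
with tempfile.TemporaryDirectory() as d:
    with open(os.path.join(d, "checkpoint"), "w") as f:
        f.write('model_checkpoint_path: "model.ckpt"\n'
                'all_model_checkpoint_paths: "model.ckpt"\n')
    print(read_latest_checkpoint(d))  # the temporary directory joined with model.ckpt
```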

Restoring the model:

```python
import os
from os.path import join as pjoin

import tensorflow as tf  # TensorFlow 1.x API, as above

graph = tf.Graph()

# Entering the session also makes `graph` the default graph
with tf.Session(graph=graph) as sess:
    # Load the model structure (graph) from the .meta file
    saver = tf.train.import_meta_graph(pjoin(os.getcwd(), 'model_save1/model.ckpt.meta'))
    # Restore the variable values from the latest checkpoint in the save directory
    saver.restore(sess, tf.train.latest_checkpoint(pjoin(os.getcwd(), 'model_save1')))

    # Get the saved variable b
    print(sess.run('input_b:0'))

    # Get the placeholder tensors
    input_x1 = sess.graph.get_tensor_by_name('input_x1:0')
    input_x2 = sess.graph.get_tensor_by_name('input_x2:0')

    # Get the output tensor of the operation to compute
    add_op = sess.graph.get_tensor_by_name('add_op:0')

    # Add a new operation on top of the restored graph
    add_new_op = tf.multiply(add_op, 2)

    out = sess.run(add_new_op, feed_dict={input_x1: 3, input_x2: 4})  # (3 + 4 + 1.3) * 2
    print(out)
```

2. builder/load:

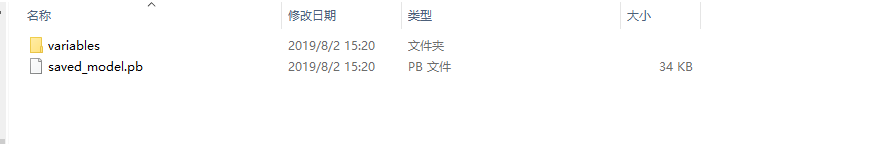

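This method saves the model in the SavedModel format: a `saved_model.pb` file (the graph and its signatures as a protobuf) plus a `variables/` directory holding the variable values.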
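For reference, a SavedModel export directory normally contains `saved_model.pb` (or `saved_model.pbtxt` when saved as text) and a `variables/` subdirectory with `variables.index` and `variables.data-*` shards. The stdlib sketch below checks for that layout. TensorFlow 1.x has a related check, `tf.saved_model.loader.maybe_saved_model_directory`, which only looks for the graph file; the helper `looks_like_saved_model` here is hypothetical.

```python
import os
import tempfile


def looks_like_saved_model(export_dir):
    """Heuristic check for the usual SavedModel layout (hypothetical helper).
    Note: a graph without any variables may not produce variables.index."""
    has_graph = any(
        os.path.isfile(os.path.join(export_dir, name))
        for name in ("saved_model.pb", "saved_model.pbtxt"))
    has_variables = os.path.isfile(
        os.path.join(export_dir, "variables", "variables.index"))
    return has_graph and has_variables


# Usage: build an empty mock of the layout in a temporary directory
with tempfile.TemporaryDirectory() as d:
    print(looks_like_saved_model(d))  # False: nothing there yet
    open(os.path.join(d, "saved_model.pb"), "wb").close()
    os.makedirs(os.path.join(d, "variables"))
    open(os.path.join(d, "variables", "variables.index"), "wb").close()
    print(looks_like_saved_model(d))  # True
```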

Saving a model with the builder (the example comes from a small demo I built earlier; the part to focus on is the saving process):

```python
# Excerpt from a larger demo (TensorFlow 1.x, imported as tf): X, Y and keep_prob
# (placeholders), pred, loss, train_op, train_x, train_y, model_save_path and
# self.max_epochs are defined elsewhere in the original code.
init = tf.global_variables_initializer()
# model_save_path must not be an existing, non-empty directory
builder = tf.saved_model.builder.SavedModelBuilder(model_save_path)

with tf.Session() as sess:
    writer = tf.summary.FileWriter("logs/", sess.graph)  # graph for TensorBoard
    sess.run(init)
    for epoch in range(self.max_epochs):
        _, pred_train, loss_ = sess.run([train_op, pred, loss], feed_dict={
            X: train_x,
            Y: train_y,
            keep_prob: 1,
        })
        if epoch % 100 == 0:
            print('Number of iterations:', epoch, 'loss:', loss_)

    # Wrap the tensors in TensorInfo protobufs for the signature
    X_TensorInfo = tf.saved_model.utils.build_tensor_info(X)
    Y_TensorInfo = tf.saved_model.utils.build_tensor_info(Y)
    keep_prob_TensorInfo = tf.saved_model.utils.build_tensor_info(keep_prob)

    # Note: Y is the label placeholder, not the prediction (see the notes on
    # restoring below); build_tensor_info(pred) would export the actual model output
    prediction_signature = tf.saved_model.signature_def_utils.build_signature_def(
        inputs={'input_X': X_TensorInfo,
                'keep_prob': keep_prob_TensorInfo},
        outputs={'output': Y_TensorInfo},
        method_name=tf.saved_model.signature_constants.PREDICT_METHOD_NAME)

    legacy_init_op = tf.group(tf.tables_initializer(), name='legacy_init_op')

    # Save the graph, the variables and the signature under the SERVING tag
    builder.add_meta_graph_and_variables(
        sess,
        [tf.saved_model.tag_constants.SERVING],
        signature_def_map={
            tf.saved_model.signature_constants.DEFAULT_SERVING_SIGNATURE_DEF_KEY: prediction_signature
        },
        legacy_init_op=legacy_init_op)

    builder.save()  # writes saved_model.pb to model_save_path
    writer.close()
```

The following post explains the functions and parameters used here in detail:

https://blog.csdn.net/thriving_fcl/article/details/75213361
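One practical detail when re-running the saving code: in TF 1.x, `SavedModelBuilder` raises an `AssertionError` when the export directory already exists and is not empty. A common workaround, and the layout TensorFlow Serving expects, is to export each run into a new numbered version subdirectory such as `model_save_path/1`, `model_save_path/2`. The helper `next_export_dir` below is a hypothetical stdlib sketch that picks the next free version number.

```python
import os
import tempfile


def next_export_dir(base_dir):
    """Return base_dir/<N>, where N is one more than the largest existing
    numeric subdirectory, or base_dir/1 if there is none (hypothetical helper)."""
    versions = []
    if os.path.isdir(base_dir):
        for name in os.listdir(base_dir):
            # Only purely numeric subdirectories count as model versions
            if name.isdecimal() and os.path.isdir(os.path.join(base_dir, name)):
                versions.append(int(name))
    return os.path.join(base_dir, str(max(versions, default=0) + 1))


# Usage with a temporary base directory
with tempfile.TemporaryDirectory() as base:
    print(next_export_dir(base))  # <base>/1
    os.makedirs(os.path.join(base, "1"))
    os.makedirs(os.path.join(base, "3"))
    os.makedirs(os.path.join(base, "logs"))  # ignored: not numeric
    print(next_export_dir(base))  # <base>/4
```

The returned path would be passed to `SavedModelBuilder` in place of `model_save_path`, so earlier exports are kept rather than overwritten.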

Then the model is restored:

```python
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API

# model_save_path, pre_data (the samples to predict) and length (the number of
# samples) come from the original demo and are defined elsewhere.

# Create a session and restore the model
with tf.Session() as sess:
    MetaGraphDef = tf.saved_model.loader.load(
        sess, [tf.saved_model.tag_constants.SERVING], model_save_path)

    # Parse the SignatureDef protobuf
    SignatureDef_d = MetaGraphDef.signature_def
    SignatureDef = SignatureDef_d[tf.saved_model.signature_constants.DEFAULT_SERVING_SIGNATURE_DEF_KEY]

    # Get the TensorInfo protobufs of the inputs
    X_TensorInfo = SignatureDef.inputs['input_X']
    keep_prob_TensorInfo = SignatureDef.inputs['keep_prob']
    # Y_TensorInfo = SignatureDef.outputs['output']

    # Resolve the TensorInfo names to concrete tensors
    input_X = sess.graph.get_tensor_by_name(X_TensorInfo.name)
    keep_prob = sess.graph.get_tensor_by_name(keep_prob_TensorInfo.name)
    pred = sess.graph.get_tensor_by_name('dnn_model/Add_1:0')
    # with tf.variable_scope('dnn_model', reuse=tf.AUTO_REUSE):
    #     pred = DNN(input_X)

    fre_predict = []
    for step in range(length):
        # Feed one 2-feature sample at a time, with dropout disabled (keep_prob = 1)
        prediction = sess.run(pred, feed_dict={
            input_X: np.array(pre_data[step]).reshape((1, 2)),
            keep_prob: 1.})
        prediction = prediction.reshape((-1))
        fre_predict.extend(prediction)
```

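For the prediction tensor, the code above uses `pred = sess.graph.get_tensor_by_name('dnn_model/Add_1:0')` instead of resolving it from the signature's `'output'` entry (the commented-out `Y_TensorInfo` line). The reason is that, in the saving code, `'output'` was built from `Y`, the label placeholder, not from `pred`. Fetching that entry after restoring therefore asks for the placeholder itself, and TensorFlow fails with an error saying a value must be fed for the placeholder. Building the output TensorInfo from `pred` when saving (`tf.saved_model.utils.build_tensor_info(pred)`) would let the loader read the prediction tensor from the signature instead of hard-coding its name.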
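The names passed to `get_tensor_by_name` follow TensorFlow's `<operation name>:<output index>` convention: `'dnn_model/Add_1:0'` is output 0 of the operation `Add_1` inside the `dnn_model` name scope, and `'input_b:0'` in the first example is output 0 of the `input_b` variable op. The stdlib sketch below just splits such a name (the helper `split_tensor_name` is hypothetical); inside a session, `sess.graph.get_operation_by_name` takes the operation part, while `get_tensor_by_name` takes the full name.

```python
def split_tensor_name(tensor_name):
    """Split a TensorFlow tensor name '<op_name>:<output_index>' into its parts
    (hypothetical helper)."""
    op_name, sep, index = tensor_name.rpartition(":")
    if not sep or not op_name or not index.isdecimal():
        raise ValueError("not a tensor name: %r" % (tensor_name,))
    return op_name, int(index)


print(split_tensor_name("dnn_model/Add_1:0"))  # ('dnn_model/Add_1', 0)
print(split_tensor_name("input_b:0"))          # ('input_b', 0)
```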

Due to time constraints, I haven't covered deploying the model here; once the follow-up project is finished, I will write up that process in more detail.