Since many frameworks and toolkits have stopped supporting CUDA 10.0, I reconfigured a server that originally had CUDA 10.0 installed with CUDA 10.2 and a new driver.

Uninstalling the original driver

1. Stop X Server

sudo service lightdm stop

2. Uninstall the previous Driver

sudo /usr/bin/nvidia-uninstall

After the uninstallation completes, running nvidia-smi reports that the command cannot be found

nvidia-smi: command not found
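The same check can be scripted. Below is a minimal sketch; `cmd_missing` is a hypothetical helper name of mine, and it relies only on the shell builtin `command -v`.

```shell
# Succeeds (exit status 0) when the given command is NOT found on PATH.
cmd_missing() {
    ! command -v "$1" >/dev/null 2>&1
}

# Example: confirm the driver tools are gone before installing the new driver.
if cmd_missing nvidia-smi; then
    echo "nvidia-smi not found: the old driver has been removed"
else
    echo "nvidia-smi is still present at: $(command -v nvidia-smi)"
fi
```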

CUDA installation

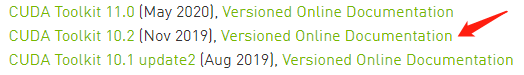

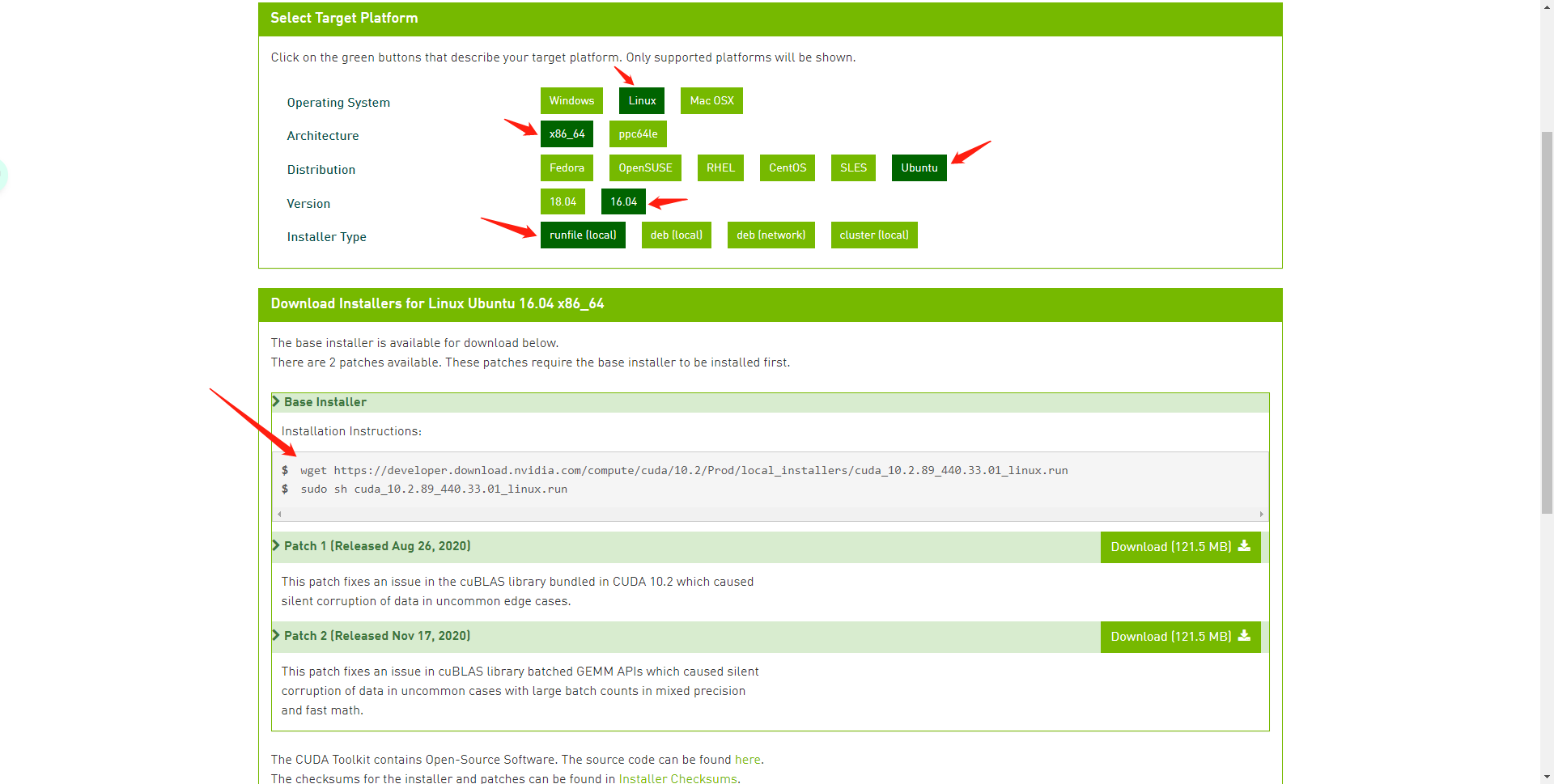

Go to the CUDA download page

Select the version you want

Following the installation instructions shown on the download page, run the following commands

wget https://developer.download.nvidia.com/compute/cuda/10.2/Prod/local_installers/cuda_10.2.89_440.33.01_linux.run

sudo sh cuda_10.2.89_440.33.01_linux.run

Type accept at the EULA prompt

┌──────────────────────────────────────────────────────────────────────────────┐
│ End User License Agreement                                                   │
│ --------------------------                                                   │
│                                                                              │
│                                                                              │
│ Preface                                                                      │
│ -------                                                                      │
│                                                                              │
│ The Software License Agreement in Chapter 1 and the Supplement               │
│ in Chapter 2 contain license terms and conditions that govern                │
│ the use of NVIDIA software. By accepting this agreement, you                 │
│ agree to comply with all the terms and conditions applicable                 │
│ to the product(s) included herein.                                           │
│                                                                              │
│                                                                              │
│ NVIDIA Driver                                                                │
│                                                                              │
│                                                                              │
│ Description                                                                  │
│                                                                              │
│ This package contains the operating system driver and                        │
│──────────────────────────────────────────────────────────────────────────────│
│ Do you accept the above EULA? (accept/decline/quit):                         │
│ accept                                                                       │
└──────────────────────────────────────────────────────────────────────────────┘

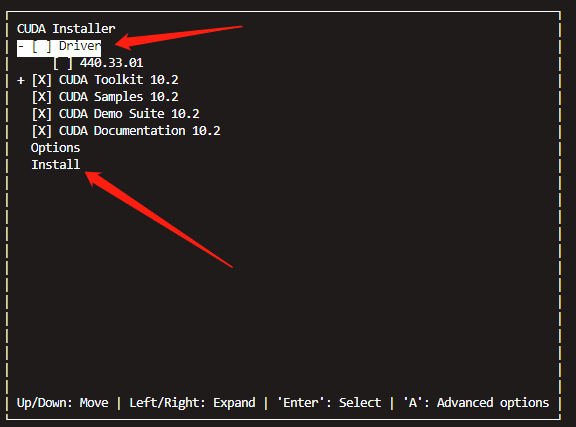

We install the driver and CUDA separately, so during installation move to the Driver entry in the installer menu and press Enter to uncheck it.
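If you prefer to skip the interactive menu, the runfile can, as far as I know, also be driven by flags. The sketch below only prints a command instead of running it. The `--silent` and `--toolkit` flags are my assumption from NVIDIA's runfile documentation (where `--silent` also implies accepting the EULA), and both function names are hypothetical. The second helper assumes the `cuda_<toolkit>_<driver>_linux.run` naming pattern and shows which driver version is bundled in the file we are choosing not to install.

```shell
# Print (not run) a toolkit-only install command for a CUDA runfile.
# Assumption: the runfile accepts --silent and --toolkit.
toolkit_only_cmd() {
    printf 'sudo sh %s --silent --toolkit\n' "$1"
}

# Extract the bundled driver version from a name shaped like
# cuda_<toolkit>_<driver>_linux.run (assumed naming pattern).
bundled_driver() {
    name=${1##*/}              # drop any leading directory
    name=${name%_linux.run}    # drop the fixed suffix
    printf '%s\n' "${name##*_}"
}

toolkit_only_cmd cuda_10.2.89_440.33.01_linux.run
bundled_driver cuda_10.2.89_440.33.01_linux.run
```

Check the flags against `sh cuda_10.2.89_440.33.01_linux.run --help` before relying on them.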

After installation, modify the environment variables and activate them as follows

vim ~/.bashrc

export PATH="/usr/local/cuda-10.2/bin:$PATH"

export LD_LIBRARY_PATH="/usr/local/cuda-10.2/lib64:$LD_LIBRARY_PATH"

export CUDA_HOME="/usr/local/cuda-10.2"

source ~/.bashrc
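One side effect of plain exports is that every time ~/.bashrc is sourced again the same directory is prepended once more, and an empty LD_LIBRARY_PATH ends up with a trailing colon (an empty entry, which the loader treats as the current directory). A small sketch of an idempotent variant follows; `prepend_once` is a hypothetical helper name, not something the CUDA installer provides.

```shell
# Prepend a directory to a colon-separated list unless it is already present.
# Usage: new_value=$(prepend_once DIR "$CURRENT_VALUE")
prepend_once() {
    case ":$2:" in
        *":$1:"*) printf '%s\n' "$2" ;;            # already listed: unchanged
        *)        printf '%s\n' "$1${2:+:$2}" ;;   # add in front, no trailing colon
    esac
}

export CUDA_HOME=/usr/local/cuda-10.2
PATH=$(prepend_once "$CUDA_HOME/bin" "$PATH")
LD_LIBRARY_PATH=$(prepend_once "$CUDA_HOME/lib64" "${LD_LIBRARY_PATH:-}")
export PATH LD_LIBRARY_PATH
```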

Run nvcc -V to verify

nvcc -V

nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2019 NVIDIA Corporation
Built on Wed_Oct_23_19:24:38_PDT_2019
Cuda compilation tools, release 10.2, V10.2.89

"release 10.2" in the output indicates a successful installation.
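If you want the check in a script rather than by eye, the release number can be pulled out of the nvcc -V output. This is a minimal sketch; `nvcc_release` is a hypothetical helper name, and it only assumes the "release X.Y" wording shown in the output above.

```shell
# Extract the "release X.Y" number from `nvcc -V` output read on stdin.
nvcc_release() {
    sed -n 's/.*release \([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p'
}

expected="10.2"
if command -v nvcc >/dev/null 2>&1; then
    found=$(nvcc -V | nvcc_release)
    if [ "$found" = "$expected" ]; then
        echo "CUDA toolkit $found is active"
    else
        echo "expected CUDA $expected but nvcc reports '$found'"
    fi
else
    echo "nvcc is not on PATH; check the exports in ~/.bashrc"
fi
```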

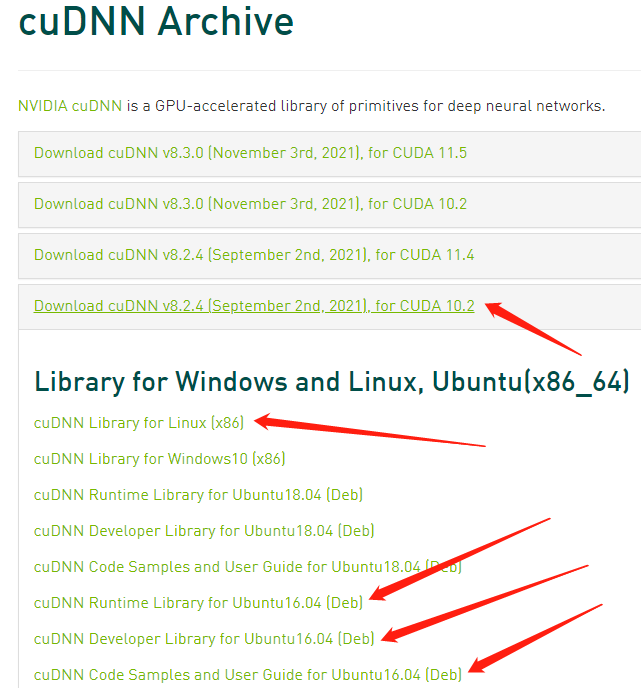

cuDNN configuration

Download the cuDNN build that matches your CUDA version. I downloaded version 8.2 here so that it matches TensorRT later. Go to the official download page and select the Library package

Right-click the package and copy the link

After downloading, use the mv command to rename the file (remove the query string that follows .tgz)

mv cudnn-10.2-linux-x64-v8.2.4.15.tgz\?QCogpoDS_Z1q53hHFgWmiQWSZqnkTJQ7rwBVj-b9kZc0nkobdCw11ri1t9znzIPSTKUiijoxTwJBGOCxYUj_3QHjlTERLhG8lOFu63haEzcKbGjnUAQDxG6SiJJvoySYHG-8Su7EpOGKoo5iJySeHSnvdJrXp2BhnIKmYKqokryTSw9WVWL5lkZ9AeoSuRr4nPltqp_jV cudnn-10.2-linux-x64-v8.2.4.15.tgz
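Typing that long token by hand is error-prone, so here is a small sketch that strips everything from the first "?" onward using shell parameter expansion. `strip_query` is a hypothetical helper name, and the loop only touches files in the current directory whose names still contain ".tgz?".

```shell
# Drop everything from the first "?" onward (the query string the link adds).
strip_query() {
    printf '%s\n' "${1%%\?*}"
}

# Rename every downloaded archive here that still carries a query string.
for f in ./*.tgz\?*; do
    [ -e "$f" ] || continue          # the glob matched nothing
    mv -- "$f" "$(strip_query "$f")"
done
```

Alternatively, wget's -O option sets the output file name at download time, which avoids the rename altogether.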

You should now have the following files

Extract the archive. A cuda folder appears after extraction

tar zxvf cudnn-10.2-linux-x64-v8.2.4.15.tgz

Copy the corresponding files to the CUDA directory of the previous installation

sudo cp cuda/include/* /usr/local/cuda-10.2/include

sudo cp cuda/lib64/* /usr/local/cuda-10.2/lib64

sudo chmod a+r /usr/local/cuda-10.2/include/cudnn.h

sudo chmod a+r /usr/local/cuda-10.2/lib64/libcudnn*
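To confirm which cuDNN headers actually landed in the CUDA directory, the version macros can be read back. This sketch assumes the cuDNN 8 layout, where CUDNN_MAJOR, CUDNN_MINOR and CUDNN_PATCHLEVEL are defined in cudnn_version.h rather than cudnn.h; `cudnn_header_version` is a hypothetical helper name.

```shell
# Print MAJOR.MINOR.PATCH from a cuDNN version header.
cudnn_header_version() {
    awk '$1 == "#define" && $2 == "CUDNN_MAJOR"      { major = $3 }
         $1 == "#define" && $2 == "CUDNN_MINOR"      { minor = $3 }
         $1 == "#define" && $2 == "CUDNN_PATCHLEVEL" { patch = $3 }
         END { print major "." minor "." patch }' "$1"
}

header=/usr/local/cuda-10.2/include/cudnn_version.h
if [ -r "$header" ]; then
    cudnn_header_version "$header"
else
    echo "$header not found or not readable"
fi
```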

In addition, install the three remaining .deb packages

sudo dpkg -i libcudnn8_8.2.4.15-1+cuda10.2_amd64.deb

sudo dpkg -i libcudnn8-dev_8.2.4.15-1+cuda10.2_amd64.deb

sudo dpkg -i libcudnn8-samples_8.2.4.15-1+cuda10.2_amd64.deb

Use the following command to list the installed versions. If other versions are present, remove them in turn with sudo apt-get remove libcudnn7, sudo apt-get remove libcudnn7-dev, and sudo apt-get remove libcudnn7-samples

dpkg -l | grep cudnn

ii  libcudnn8          8.2.4.15-1+cuda10.2  amd64  cuDNN runtime libraries
ii  libcudnn8-dev      8.2.4.15-1+cuda10.2  amd64  cuDNN development libraries and headers
ii  libcudnn8-samples  8.2.4.15-1+cuda10.2  amd64  cuDNN documents and samples
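When several cuDNN generations are installed, it can help to list only the leftovers. A minimal sketch, assuming the usual dpkg -l column layout (status, name, version, ...); `stale_cudnn_packages` is a hypothetical helper name.

```shell
# From `dpkg -l` output on stdin, print installed cuDNN packages whose
# name does not start with libcudnn8 (candidates for removal).
stale_cudnn_packages() {
    awk '$1 == "ii" && $2 ~ /^libcudnn/ && $2 !~ /^libcudnn8/ { print $2 }'
}

if command -v dpkg >/dev/null 2>&1; then
    dpkg -l | stale_cudnn_packages
fi
```

Each name it prints can then be passed to sudo apt-get remove.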

Driver installation

Go to the official NVIDIA driver download page, select your own configuration, search, and download the latest version

Check whether an X server is running

ps aux | grep X

If the output looks like the following, an X server is running

root 18714 0.0 0.0 415608 25916 tty7 Ssl+ Dec28 0:04 /usr/lib/xorg/Xorg -core :0 -seat seat0 -auth /var/run/lightdm/root/:0 -nolisten tcp vt7 -novtswitch
ubuntu 28400 0.0 0.0 12940 1012 pts/16 S+ 03:57 0:00 grep --color=auto X

X may be started by different display managers. If you are not sure which one is in use, just try stopping all of them:

sudo service lightdm stop

sudo service gdm stop

sudo service kdm stop

Query again

ps aux | grep X

Only the grep process itself should now appear

ubuntu 5217 0.0 0.0 12940 928 pts/16 S+ 04:05 0:00 grep --color=auto X

X has been stopped successfully
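Because ps aux | grep X always lists the grep itself (and any other line containing a capital X), a scripted check is easier to read. The sketch below counts only processes whose command is an X or Xorg binary; `count_xorg` is a hypothetical helper name, and it assumes the standard ps aux layout in which the command is the 11th field.

```shell
# Count lines of `ps aux` output (stdin) whose command is an X/Xorg binary.
count_xorg() {
    awk '{
        n = split($11, parts, "/")          # field 11 is the command path
        if (parts[n] == "Xorg" || parts[n] == "X") count++
    } END { print count + 0 }'
}

if command -v ps >/dev/null 2>&1; then
    running=$(ps aux | count_xorg)
    if [ "$running" -eq 0 ]; then
        echo "no X server running; safe to install the driver"
    else
        echo "$running X server process(es) still running"
    fi
fi
```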

Install the driver

sudo sh NVIDIA-Linux-x86_64-495.46.run

Follow the prompts and accept the defaults

After installation, run nvidia-smi and output like the following will appear

Fri Dec 31 04:09:03 2021
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 495.46       Driver Version: 495.46       CUDA Version: 11.5     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA GeForce ...  Off  | 00000000:02:00.0 Off |                  N/A |
| 19%   30C    P0    58W / 250W |      0MiB / 11178MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   1  NVIDIA GeForce ...  Off  | 00000000:03:00.0 Off |                  N/A |
| 21%   37C    P0    58W / 250W |      0MiB / 11178MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   2  NVIDIA GeForce ...  Off  | 00000000:82:00.0 Off |                  N/A |
| 17%   32C    P0    57W / 250W |      0MiB / 11178MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   3  NVIDIA GeForce ...  Off  | 00000000:83:00.0 Off |                  N/A |
| 18%   34C    P0    56W / 250W |      0MiB / 11178MiB |      1%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
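Note that the "CUDA Version: 11.5" in the header is the highest CUDA runtime this driver supports, not the toolkit installed above, so it does not conflict with nvcc reporting 10.2. A small sketch that pulls both numbers out of the header line follows; `smi_versions` is a hypothetical helper name and it assumes the header wording shown in the output above.

```shell
# Read `nvidia-smi` output on stdin and print "<driver> <max CUDA runtime>".
smi_versions() {
    sed -n 's/.*Driver Version: *\([0-9.]*\).*CUDA Version: *\([0-9.]*\).*/\1 \2/p'
}

if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi | smi_versions
else
    echo "nvidia-smi not found; the driver is not installed"
fi
```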

PyTorch installation

Select the build that matches CUDA 10.2

conda create -n pytorch python=3.7

conda activate pytorch

pip install torch torchvision torchaudio

Python 3.7.11 (default, Jul 27 2021, 14:32:16)
[GCC 7.5.0] :: Anaconda, Inc. on linux
Type "help", "copyright", "credits" or "license" for more information.
>>> import torch
>>> torch.cuda.is_available()
True
>>>

PaddlePaddle installation

Since NCCL was already installed in the previous environment, multi-GPU use is supported directly. For other installation options, see the PaddlePaddle official website

conda create -n paddle python=3.7

conda activate paddle

pip install paddlepaddle-gpu==2.2.1 -i https://mirror.baidu.com/pypi/simple

Python 3.7.11 (default, Jul 27 2021, 14:32:16)
[GCC 7.5.0] :: Anaconda, Inc. on linux
Type "help", "copyright", "credits" or "license" for more information.
>>> import paddle
>>> paddle.utils.run_check()
Running verify PaddlePaddle program ...
W1231 04:38:06.920902 20825 device_context.cc:447] Please NOTE: device: 0, GPU Compute Capability: 6.1, Driver API Version: 11.5, Runtime API Version: 10.2
W1231 04:38:06.922672 20825 device_context.cc:465] device: 0, cuDNN Version: 8.3.
PaddlePaddle works well on 1 GPU.
W1231 04:38:08.923141 20825 parallel_executor.cc:617] Cannot enable P2P access from 0 to 2
W1231 04:38:08.923198 20825 parallel_executor.cc:617] Cannot enable P2P access from 0 to 3
W1231 04:38:09.725281 20825 parallel_executor.cc:617] Cannot enable P2P access from 1 to 2
W1231 04:38:09.725327 20825 parallel_executor.cc:617] Cannot enable P2P access from 1 to 3
W1231 04:38:09.725330 20825 parallel_executor.cc:617] Cannot enable P2P access from 2 to 0
W1231 04:38:09.725334 20825 parallel_executor.cc:617] Cannot enable P2P access from 2 to 1
W1231 04:38:10.782135 20825 parallel_executor.cc:617] Cannot enable P2P access from 3 to 0
W1231 04:38:10.782181 20825 parallel_executor.cc:617] Cannot enable P2P access from 3 to 1
W1231 04:38:14.150104 20825 fuse_all_reduce_op_pass.cc:76] Find all_reduce operators: 2. To make the speed faster, some all_reduce ops are fused during training, after fusion, the number of all_reduce ops is 2.
PaddlePaddle works well on 4 GPUs.
PaddlePaddle is installed successfully! Let's start deep learning with PaddlePaddle now.
>>>

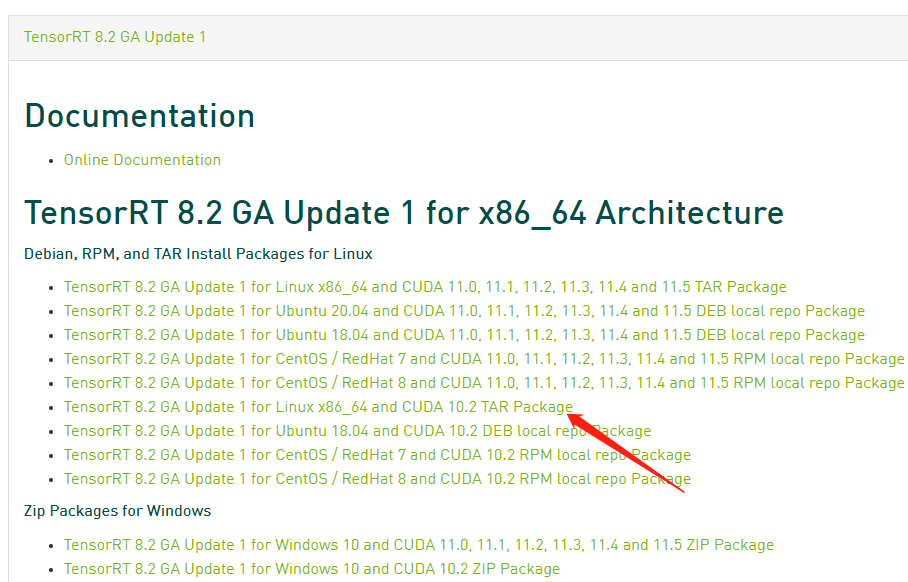

TensorRT installation

Go to the official website to download the appropriate version. Try to choose a TensorRT 8 release and install it from the tar package

Following the official installation guide, set the variables for your own system and version, then run the following commands in turn

version="8.2.2.1"

arch="x86_64"

cuda="cuda-10.2"

cudnn="cudnn8.2"

tar xzvf TensorRT-${version}.Linux.${arch}-gnu.${cuda}.${cudnn}.tar.gz
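A typo in any of the four variables makes tar fail with a "no such file" error, so it can be worth checking the assembled name first. A minimal sketch; `trt_tarball` is a hypothetical helper name that simply follows the file name pattern used in the tar command above.

```shell
# Build the archive name from the four variables used in this section.
trt_tarball() {
    printf 'TensorRT-%s.Linux.%s-gnu.%s.%s.tar.gz\n' "$1" "$2" "$3" "$4"
}

version="8.2.2.1"; arch="x86_64"; cuda="cuda-10.2"; cudnn="cudnn8.2"
archive=$(trt_tarball "$version" "$arch" "$cuda" "$cudnn")
if [ -f "$archive" ]; then
    tar xzvf "$archive"
else
    echo "missing $archive: check the variables against the downloaded file name"
fi
```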

View extracted files

ls TensorRT-${version}

bin data doc graphsurgeon include lib onnx_graphsurgeon python samples targets TensorRT-Release-Notes.pdf uff

Copy the libraries to /usr/local/cuda-10.2/lib64. Alternatively, you can leave them in place and add the TensorRT lib directory to the library path instead: export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:<TensorRT-${version}/lib>

sudo cp -r TensorRT-${version}/lib/* /usr/local/cuda-10.2/lib64

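Install the TensorRT Python wheel (the cd paths in this and the following steps are relative to the directory where the archive was extracted)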

cd TensorRT-${version}/python

pip install tensorrt-*-cp37-none-linux_x86_64.whl
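The cp37 in the wheel name has to match the Python version of the active conda environment (3.7 here). If you use a different Python, the tag changes accordingly. A small sketch of the mapping; `wheel_tag` is a hypothetical helper name.

```shell
# Turn a Python version such as 3.7.11 into the wheel tag cp37.
wheel_tag() {
    major=${1%%.*}          # text before the first dot
    rest=${1#*.}            # text after the first dot
    minor=${rest%%.*}       # text before the next dot
    printf 'cp%s%s\n' "$major" "$minor"
}

wheel_tag 3.7.11    # prints cp37
```

The version string of the active interpreter can be obtained with python -c 'import platform; print(platform.python_version())'.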

Install the UFF wheel (only needed if you plan to use TensorRT with TensorFlow)

cd TensorRT-${version}/uff

pip install uff-0.6.9-py2.py3-none-any.whl

Verify the installation using the following command

which convert-to-uff

/home/ubuntu/anaconda3/envs/pytorch/bin/convert-to-uff

Install graphsurgeon

cd TensorRT-${version}/graphsurgeon

pip install graphsurgeon-0.4.5-py2.py3-none-any.whl

Install onnx-graphsurgeon

cd TensorRT-${version}/onnx_graphsurgeon

pip install onnx_graphsurgeon-0.3.12-py2.py3-none-any.whl

Verification

cd TensorRT-${version}/samples/sampleMNIST

make

cd ../../bin

./sample_mnist

Finally, the following results show that the installation is successful

&&&& RUNNING TensorRT.sample_mnist [TensorRT v8202] # ./sample_mnist
[12/31/2021-07:28:34] [I] Building and running a GPU inference engine for MNIST
[12/31/2021-07:28:36] [I] [TRT] [MemUsageChange] Init CUDA: CPU +160, GPU +0, now: CPU 162, GPU 215 (MiB)
[12/31/2021-07:28:36] [I] [TRT] [MemUsageSnapshot] Begin constructing builder kernel library: CPU 162 MiB, GPU 215 MiB
[12/31/2021-07:28:36] [I] [TRT] [MemUsageSnapshot] End constructing builder kernel library: CPU 183 MiB, GPU 215 MiB
[12/31/2021-07:28:37] [W] [TRT] TensorRT was linked against cuBLAS/cuBLAS LT 10.2.3 but loaded cuBLAS/cuBLAS LT 10.2.2
[12/31/2021-07:28:37] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +115, GPU +46, now: CPU 300, GPU 261 (MiB)
[12/31/2021-07:28:37] [I] [TRT] [MemUsageChange] Init cuDNN: CPU +119, GPU +62, now: CPU 419, GPU 323 (MiB)
[12/31/2021-07:28:37] [I] [TRT] Local timing cache in use. Profiling results in this builder pass will not be stored.
[12/31/2021-07:28:42] [I] [TRT] Some tactics do not have sufficient workspace memory to run. Increasing workspace size may increase performance, please check verbose output.
[12/31/2021-07:28:42] [I] [TRT] Detected 1 inputs and 1 output network tensors.
[12/31/2021-07:28:42] [I] [TRT] Total Host Persistent Memory: 5440
[12/31/2021-07:28:42] [I] [TRT] Total Device Persistent Memory: 0
[12/31/2021-07:28:42] [I] [TRT] Total Scratch Memory: 0
[12/31/2021-07:28:42] [I] [TRT] [MemUsageStats] Peak memory usage of TRT CPU/GPU memory allocators: CPU 1 MiB, GPU 16 MiB
[12/31/2021-07:28:42] [I] [TRT] [BlockAssignment] Algorithm ShiftNTopDown took 0.084057ms to assign 3 blocks to 11 nodes requiring 57857 bytes.
[12/31/2021-07:28:42] [I] [TRT] Total Activation Memory: 57857
[12/31/2021-07:28:42] [W] [TRT] TensorRT was linked against cuBLAS/cuBLAS LT 10.2.3 but loaded cuBLAS/cuBLAS LT 10.2.2
[12/31/2021-07:28:42] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +0, GPU +8, now: CPU 591, GPU 397 (MiB)
[12/31/2021-07:28:42] [I] [TRT] [MemUsageChange] Init cuDNN: CPU +0, GPU +10, now: CPU 591, GPU 407 (MiB)
[12/31/2021-07:28:42] [I] [TRT] [MemUsageChange] TensorRT-managed allocation in building engine: CPU +0, GPU +4, now: CPU 0, GPU 4 (MiB)
[12/31/2021-07:28:42] [I] [TRT] [MemUsageChange] Init CUDA: CPU +0, GPU +0, now: CPU 592, GPU 369 (MiB)
[12/31/2021-07:28:42] [I] [TRT] Loaded engine size: 1 MiB
[12/31/2021-07:28:42] [W] [TRT] TensorRT was linked against cuBLAS/cuBLAS LT 10.2.3 but loaded cuBLAS/cuBLAS LT 10.2.2
[12/31/2021-07:28:42] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +0, GPU +8, now: CPU 592, GPU 379 (MiB)
[12/31/2021-07:28:42] [I] [TRT] [MemUsageChange] Init cuDNN: CPU +0, GPU +8, now: CPU 592, GPU 387 (MiB)
[12/31/2021-07:28:42] [I] [TRT] [MemUsageChange] TensorRT-managed allocation in engine deserialization: CPU +0, GPU +1, now: CPU 0, GPU 1 (MiB)
[12/31/2021-07:28:42] [W] [TRT] TensorRT was linked against cuBLAS/cuBLAS LT 10.2.3 but loaded cuBLAS/cuBLAS LT 10.2.2
[12/31/2021-07:28:42] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +0, GPU +8, now: CPU 568, GPU 379 (MiB)
[12/31/2021-07:28:42] [I] [TRT] [MemUsageChange] Init cuDNN: CPU +0, GPU +8, now: CPU 568, GPU 387 (MiB)
[12/31/2021-07:28:42] [I] [TRT] [MemUsageChange] TensorRT-managed allocation in IExecutionContext creation: CPU +0, GPU +0, now: CPU 0, GPU 1 (MiB)
[12/31/2021-07:28:42] [I] Input:
@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@@@@@@@@@@@@@@@@.*@@@@@@@@@@
@@@@@@@@@@@@@@@@.=@@@@@@@@@@
@@@@@@@@@@@@+@@@.=@@@@@@@@@@
@@@@@@@@@@@% #@@.=@@@@@@@@@@
@@@@@@@@@@@% #@@.=@@@@@@@@@@
@@@@@@@@@@@+ *@@:-@@@@@@@@@@
@@@@@@@@@@@= *@@= @@@@@@@@@@
@@@@@@@@@@@. #@@= @@@@@@@@@@
@@@@@@@@@@= =++.-@@@@@@@@@@
@@@@@@@@@@ =@@@@@@@@@@
@@@@@@@@@@ :*## =@@@@@@@@@@
@@@@@@@@@@:*@@@% =@@@@@@@@@@
@@@@@@@@@@@@@@@% =@@@@@@@@@@
@@@@@@@@@@@@@@@# =@@@@@@@@@@
@@@@@@@@@@@@@@@# =@@@@@@@@@@
@@@@@@@@@@@@@@@* *@@@@@@@@@@
@@@@@@@@@@@@@@@= #@@@@@@@@@@
@@@@@@@@@@@@@@@= #@@@@@@@@@@
@@@@@@@@@@@@@@@=.@@@@@@@@@@@
@@@@@@@@@@@@@@@++@@@@@@@@@@@
@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@@@@@@@@@@@@@@@@@@@@@@@@@@@@
[12/31/2021-07:28:42] [I] Output:
0:
1:
2:
3:
4: **********
5:
6:
7:
8:
9:
&&&& PASSED TensorRT.sample_mnist [TensorRT v8202] # ./sample_mnist
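The log is long, but the line that matters is the final "&&&& PASSED" marker. A minimal sketch for checking it automatically follows; `sample_passed` is a hypothetical helper name and it assumes the marker starts a line, as in the output above.

```shell
# Succeeds when sample output (stdin) has a line starting with "&&&& PASSED".
sample_passed() {
    grep -q '^&&&& PASSED'
}

# Run from the TensorRT bin directory; skipped if the sample is not built.
if [ -x ./sample_mnist ]; then
    if ./sample_mnist 2>&1 | sample_passed; then
        echo "TensorRT sample passed"
    else
        echo "TensorRT sample did not report PASSED"
    fi
fi
```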

Remarks

Note: if wget cannot reach the NVIDIA download server, modify the DNS configuration as follows

sudo chmod 777 /etc/resolv.conf

vim /etc/resolv.conf

nameserver 8.8.8.8 #google domain name server

nameserver 8.8.4.4 #google domain name server

Remember to comment these lines out again after downloading, to avoid the error "sudo: unable to resolve host XXXX" when using sudo
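Commenting the two lines out can also be done with sed instead of by hand. The sketch below only previews the result on standard output and does not write to /etc/resolv.conf; `comment_google_dns` is a hypothetical helper name.

```shell
# Comment out the two Google nameserver lines; other lines pass through unchanged.
comment_google_dns() {
    sed -E 's/^(nameserver[[:space:]]+8\.8\.(8\.8|4\.4))/# \1/'
}

if [ -r /etc/resolv.conf ]; then
    comment_google_dns < /etc/resolv.conf   # preview only; nothing is written
fi
```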