GCD

In iOS development we often deal with multithreading, asynchronous drawing, network requests and so on. The tools for this include NSThread, NSOperationQueue and GCD. GCD plays an especially important role among them, so this article is about GCD. Let's start with the first question:

What is GCD?

GCD, short for Grand Central Dispatch, is a technology for executing tasks asynchronously, implemented as a set of C-language APIs. Its syntax is concise: you define a task and add it to a suitable queue, and GCD executes it as scheduled. Here is a simple example.

```objc
dispatch_async(dispatch_get_global_queue(0, 0), ^{
    // Network request
    // Draw an image asynchronously
    // Database access, etc.
    dispatch_async(dispatch_get_main_queue(), ^{
        // Refresh the UI
    });
});
```

Here a task is submitted asynchronously to a global queue. Because it runs asynchronously, the task can perform time-consuming work without blocking the main thread. When the work is finished, another task is submitted asynchronously to the main queue, and that task is executed on the main thread.

Synchronous and asynchronous

The word "asynchronous" came up in the previous section, so let's look at what "asynchronous" means, together with its counterpart, "synchronous".

An asynchronous call does not wait for the task to finish: the current thread moves on to the next statement immediately, and the result arrives later through a callback or signal. A synchronous call blocks the current thread until the task has finished.

Asynchrony is the goal; multithreading is the means.

When we start a new thread, we can hand a task to it for asynchronous execution and switch back to the original thread when needed.

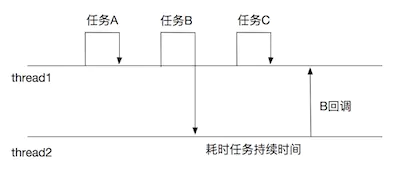

Diagram: synchronous versus asynchronous execution

Here thread1 represents the main thread. Task A is quick, so it runs directly on the main thread. Task B takes longer, so a new thread is started and Task B is handed to it, while the main thread moves on to Task C. When the time-consuming work finishes, its result is passed back to the main thread. That is asynchronous execution.

There are a few issues to watch for when using multiple threads: 1. data races; 2. deadlocks; 3. starting too many threads, which consumes a lot of memory. Used properly, though, multithreading is a huge benefit to our development.

Serial and concurrent queues

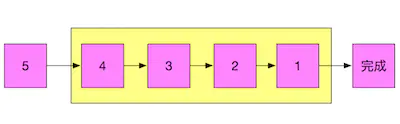

First, a word on queues. As the name suggests, a dispatch queue is a queue of tasks waiting to be processed. Tasks are scheduled in FIFO (First In, First Out) order: tasks added first are started first. Other scheduling policies exist, but they are beyond the scope of this article; look them up if you are interested.

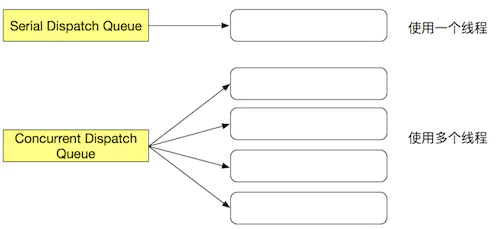

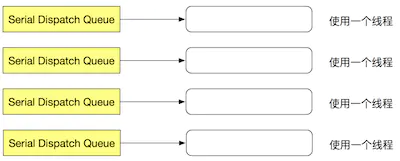

There are two kinds of dispatch queue: the Serial Dispatch Queue and the Concurrent Dispatch Queue. A serial queue executes its tasks one at a time, in order; a concurrent queue can execute several tasks at once on multiple threads.

The following code shows the difference. First, add several asynchronous tasks to a serial queue:

```objc
dispatch_queue_t queue = dispatch_queue_create("com.example.gcdDemo", DISPATCH_QUEUE_SERIAL);

dispatch_async(queue, ^{
    NSLog(@"%@---0", [NSThread currentThread]);
});
dispatch_async(queue, ^{
    NSLog(@"%@---1", [NSThread currentThread]);
});
dispatch_async(queue, ^{
    NSLog(@"%@---2", [NSThread currentThread]);
});
dispatch_async(queue, ^{
    NSLog(@"%@---3", [NSThread currentThread]);
});
```

Here is the output:

```
<NSThread: 0x6000002777c0>{number = 3, name = (null)}---0
<NSThread: 0x6000002777c0>{number = 3, name = (null)}---1
<NSThread: 0x6000002777c0>{number = 3, name = (null)}---2
<NSThread: 0x6000002777c0>{number = 3, name = (null)}---3
```

Clearly, all tasks run on the same thread, in order. Now try a concurrent queue:

```objc
dispatch_queue_t queue = dispatch_queue_create("com.example.gcdDemo", DISPATCH_QUEUE_CONCURRENT);

dispatch_async(queue, ^{
    NSLog(@"%@---0", [NSThread currentThread]);
});
dispatch_async(queue, ^{
    NSLog(@"%@---1", [NSThread currentThread]);
});
dispatch_async(queue, ^{
    NSLog(@"%@---2", [NSThread currentThread]);
});
dispatch_async(queue, ^{
    NSLog(@"%@---3", [NSThread currentThread]);
});
```

And the output this time:

```
<NSThread: 0x60400046adc0>{number = 5, name = (null)}---2
<NSThread: 0x60400046b000>{number = 3, name = (null)}---0
<NSThread: 0x6000004655c0>{number = 4, name = (null)}---1
<NSThread: 0x6000004653c0>{number = 6, name = (null)}---3
```

This time the tasks do not run in order, nor on the same thread, which confirms the difference between serial and concurrent queues described above.

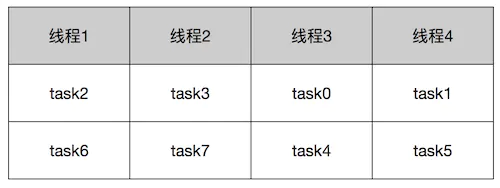

However, creating N concurrent tasks does not mean that N threads are started; the number of threads is decided by the XNU kernel. If you increase the example above to 8 tasks, you will see some threads being reused. That could not happen if 8 threads had been started. Instead, when a task finishes, the next task can run on the thread that has just become free. This is still different from a serial queue, because several threads are running at the same time.

For example, suppose four threads are started and task0 to task3 are dispatched to them asynchronously. If task0 finishes first, task4 is taken from the concurrent queue and run on the thread that executed task0, and so on, so some threads end up running more than one task.
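To make the thread-reuse idea concrete, here is a minimal sketch in C. It is a model built on POSIX threads, not libdispatch: a hypothetical pool of four worker threads (run_pool, worker and ran_on are illustrative names) takes eight tasks from a shared queue in FIFO order, and whichever worker is free picks up the next task.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>

/* Hypothetical model (plain POSIX threads, not libdispatch): a fixed pool
   of WORKER_COUNT threads drains TASK_COUNT tasks from a shared FIFO,
   the way a concurrent queue reuses a limited number of threads. */
#define TASK_COUNT 8
#define WORKER_COUNT 4

static pthread_mutex_t pool_lock = PTHREAD_MUTEX_INITIALIZER;
static int next_task = 0;            /* head of the FIFO: next task to hand out */
static int ran_on[TASK_COUNT];       /* which worker executed each task */

static void *worker(void *arg) {
    int worker_id = *(int *)arg;
    for (;;) {
        pthread_mutex_lock(&pool_lock);
        int task = next_task < TASK_COUNT ? next_task++ : -1;  /* dequeue */
        pthread_mutex_unlock(&pool_lock);
        if (task < 0) break;         /* queue drained: worker exits */
        ran_on[task] = worker_id;    /* "execute" the task on this thread */
    }
    return NULL;
}

/* Runs all tasks on the pool and returns how many were dequeued. */
int run_pool(void) {
    pthread_t threads[WORKER_COUNT];
    int ids[WORKER_COUNT];
    next_task = 0;
    for (int i = 0; i < WORKER_COUNT; i++) {
        ids[i] = i;
        pthread_create(&threads[i], NULL, worker, &ids[i]);
    }
    for (int i = 0; i < WORKER_COUNT; i++)
        pthread_join(threads[i], NULL);
    return next_task;
}
```

Because there are more tasks than workers, at least one worker necessarily runs two or more tasks; which worker gets which task varies from run to run. In GCD the real pool size is decided by the system, not fixed at four.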

DISPATCH MAIN QUEUE/DISPATCH GLOBAL QUEUE

These are the two queues we use most often. The main queue executes its tasks on the main thread; since there is only one main thread, it is naturally a serial queue. The global queue is a concurrent queue with four priorities: high (DISPATCH_QUEUE_PRIORITY_HIGH), default (DISPATCH_QUEUE_PRIORITY_DEFAULT), low (DISPATCH_QUEUE_PRIORITY_LOW) and background (DISPATCH_QUEUE_PRIORITY_BACKGROUND). For example:

```objc
// The second parameter is reserved for future use; pass 0
dispatch_queue_t queue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_LOW, 0);
```

GCD APIs

Some GCD APIs have already appeared above; this section looks at each of them in more detail.

dispatch_queue_create

```objc
dispatch_queue_t serialDispatchQueue = dispatch_queue_create("com.example.gcdDemo", NULL);
```

This creates a queue; the return type is dispatch_queue_t. The function takes two parameters. The first is the queue's label, for which a reverse-DNS name based on the app's identifier is recommended. The second specifies the type of queue: DISPATCH_QUEUE_SERIAL creates a serial queue (NULL works too, since that is the value the macro is defined as), and DISPATCH_QUEUE_CONCURRENT creates a concurrent queue. Note that although the tasks inside one serial queue run one after another, if you create several serial queues, those queues run in parallel with each other.

Because a serial queue executes only one task at a time, use a serial queue for important operations that must not suffer from data races.
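A serial queue avoids data races because only one task touches the shared data at a time. The sketch below shows the same mutual exclusion with a POSIX mutex instead of a queue; it is an analogy, not GCD code, and shared_counter, add_many and count_with_two_threads are hypothetical names.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>

/* Analogy (not GCD): the mutex plays the role a serial queue plays,
   letting only one thread update shared_counter at a time. */
static long shared_counter = 0;
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;

static void *add_many(void *arg) {
    long times = *(long *)arg;
    for (long i = 0; i < times; i++) {
        pthread_mutex_lock(&counter_lock);    /* one "task" at a time */
        shared_counter++;
        pthread_mutex_unlock(&counter_lock);
    }
    return NULL;
}

/* Two threads each add `times`; the lock keeps the total exact. */
long count_with_two_threads(long times) {
    pthread_t a, b;
    shared_counter = 0;
    pthread_create(&a, NULL, add_many, &times);
    pthread_create(&b, NULL, add_many, &times);
    pthread_join(a, NULL);
    pthread_join(b, NULL);
    return shared_counter;
}
```

Without the lock, the two threads could interleave their read-modify-write steps and lose increments. In GCD the equivalent is to dispatch every update to the same serial queue.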

dispatch_(a)sync

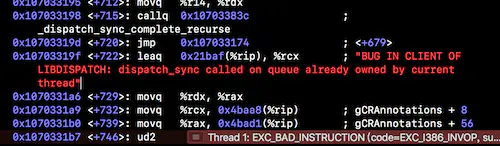

The two most common functions are dispatch_sync, which executes a task synchronously, and dispatch_async, which executes it asynchronously. The first parameter is the queue to use, and the block is the task to execute. There are pitfalls to watch for, though:

```objc
// This code runs on the main thread
NSLog(@"1");
dispatch_sync(dispatch_get_main_queue(), ^{
    NSLog(@"2");
});
NSLog(@"3");
```

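Only "1" is printed; after that, the program stops with an error.

The cause is that dispatch_sync submits a task to the main queue and then waits for it on the main thread. The task cannot start until the main thread is free, but the main thread is blocked waiting for the task, so each waits for the other forever: a deadlock.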

dispatch_after

When a task should run after a delay, use dispatch_after. Sample code:

```objc
NSLog(@"Before delay");
// 3 seconds from now; the delta is expressed in nanoseconds
dispatch_time_t time = dispatch_time(DISPATCH_TIME_NOW, (int64_t)(3 * NSEC_PER_SEC));
dispatch_after(time, dispatch_get_main_queue(), ^{
    NSLog(@"Delay execution for 3 seconds");
});
```

The output:

```
2018-09-04 14:52:59.437518+0800 GCDDemo[75192:21501573] Before delay
2018-09-04 14:53:02.438042+0800 GCDDemo[75192:21501573] Delay execution for 3 seconds
```

The task did run about 3 seconds later. Strictly speaking, though, dispatch_after does not execute the task after 3 seconds; it adds the task to the main queue after 3 seconds. Because the Main Dispatch Queue is processed in the main thread's RunLoop, the task runs at the earliest 3 seconds later and at the latest after 3 seconds plus one RunLoop iteration, and even later if many tasks have piled up in the RunLoop. So the timing is not exact, but for a rough delay, dispatch_after is perfectly fine.

The time argument has type dispatch_time_t. dispatch_time() returns the time obtained by adding its second parameter, a delta in nanoseconds, to its first parameter, the starting time. NSEC_PER_SEC is the number of nanoseconds in one second and NSEC_PER_MSEC the number in one millisecond; other units are not covered here.
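Because the delta is in nanoseconds, the arithmetic is just a multiplication. A small C sketch, assuming hypothetical local stand-in constants MY_NSEC_PER_SEC and MY_NSEC_PER_MSEC with the same values as NSEC_PER_SEC (1,000,000,000) and NSEC_PER_MSEC (1,000,000):

```c
#include <stdint.h>

/* Hypothetical local constants with the same values as GCD's NSEC_PER_SEC
   and NSEC_PER_MSEC: nanoseconds per second and per millisecond. */
#define MY_NSEC_PER_SEC  1000000000ULL
#define MY_NSEC_PER_MSEC 1000000ULL

/* The delta passed to dispatch_time() is expressed in nanoseconds. */
int64_t seconds_to_delta(double seconds) {
    return (int64_t)(seconds * MY_NSEC_PER_SEC);
}

int64_t milliseconds_to_delta(int64_t ms) {
    return ms * (int64_t)MY_NSEC_PER_MSEC;
}
```

So (int64_t)(3 * NSEC_PER_SEC) in the example above is a delta of 3,000,000,000 nanoseconds.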

dispatch_group

In a project we often need to act only after several asynchronous tasks have all finished. With synchronous execution, the end of the last task marks the end of all of them, but with asynchronous tasks that is not the case, so we use dispatch_group:

```objc
NSLog(@"Start All-----%@", [NSThread currentThread]);

dispatch_group_t group = dispatch_group_create();
dispatch_queue_t queue = dispatch_get_global_queue(0, 0);

dispatch_group_async(group, queue, ^{
    sleep(4);
    NSLog(@"Subthread 1-----%@", [NSThread currentThread]);
});
dispatch_group_async(group, queue, ^{
    sleep(3);
    NSLog(@"Subthread 2-----%@", [NSThread currentThread]);
});

dispatch_group_notify(group, dispatch_get_main_queue(), ^{
    NSLog(@"All over-----%@", [NSThread currentThread]);
});
```

Here sleep simulates two time-consuming operations, and the current thread is printed at several points. dispatch_group_create creates a group, and a global queue is used. dispatch_group_async works like dispatch_async, except that the task also belongs to the group passed as the first parameter. When all tasks in the group have finished, the block passed to dispatch_group_notify is called. The output is:

```
2018-09-04 16:22:03.449143+0800 GCDDemo[76086:21787405] Start All-----<NSThread: 0x6040002601c0>{number = 1, name = main}
2018-09-04 16:22:06.451174+0800 GCDDemo[76086:21787469] Subthread 2-----<NSThread: 0x60400027ed40>{number = 3, name = (null)}
2018-09-04 16:22:07.452564+0800 GCDDemo[76086:21787470] Subthread 1-----<NSThread: 0x600000470100>{number = 4, name = (null)}
2018-09-04 16:22:07.452926+0800 GCDDemo[76086:21787405] All over-----<NSThread: 0x6040002601c0>{number = 1, name = main}
```

As you can see, the callback on the main thread ran only after both subthreads had finished. Another approach is dispatch_group_wait, which blocks until all tasks in the group have completed:

```objc
dispatch_group_wait(group, DISPATCH_TIME_FOREVER);
NSLog(@"All over-----%@", [NSThread currentThread]);
```

The first parameter is the group to wait on, of type dispatch_group_t; the second is the timeout. DISPATCH_TIME_FOREVER waits indefinitely, so the call returns only when every task in the group has finished. It returns 0 if the group finished within the timeout and a non-zero value if the timeout expired first. dispatch_group_notify is still the recommended approach, because dispatch_group_wait blocks the calling thread and therefore should not be used on the main thread.

dispatch_group_enter/dispatch_group_leave

In practice, the tasks in a group often start network requests, which are themselves asynchronous. The approach above then breaks down, because the tasks above only call NSLog directly. Let's simulate several requests issued at the same time:

```objc
NSLog(@"Start All-----%@", [NSThread currentThread]);

dispatch_group_t group = dispatch_group_create();
dispatch_queue_t queue = dispatch_queue_create("com.example.gcdDemo", DISPATCH_QUEUE_CONCURRENT);

dispatch_group_async(group, queue, ^{
    // Simulated asynchronous request 1
    dispatch_async(dispatch_get_global_queue(0, 0), ^{
        sleep(4);
        NSLog(@"Simulated request 1-----%@", [NSThread currentThread]);
    });
});
dispatch_group_async(group, queue, ^{
    // Simulated asynchronous request 2
    dispatch_async(dispatch_get_global_queue(0, 0), ^{
        sleep(3);
        NSLog(@"Simulated Request 2-----%@", [NSThread currentThread]);
    });
});

dispatch_group_notify(group, dispatch_get_main_queue(), ^{
    NSLog(@"All over-----%@", [NSThread currentThread]);
});
```

Here each dispatch_group_async task starts another asynchronous task that simulates the request. The result:

```
2018-09-04 16:50:50.221045+0800 GCDDemo[76421:21817204] Start All-----<NSThread: 0x60000007ecc0>{number = 1, name = main}
2018-09-04 16:50:50.249321+0800 GCDDemo[76421:21817204] All over-----<NSThread: 0x60000007ecc0>{number = 1, name = main}
2018-09-04 16:50:53.225810+0800 GCDDemo[76421:21817394] Simulated Request 2-----<NSThread: 0x604000663000>{number = 5, name = (null)}
2018-09-04 16:50:54.225917+0800 GCDDemo[76421:21817393] Simulated request 1-----<NSThread: 0x6040004649c0>{number = 3, name = (null)}
```

notify fired too early: as far as the group knows, its two tasks were finished as soon as they had started their requests, even though the requests themselves had not completed yet. The fix is to use dispatch_group_enter and dispatch_group_leave:

```objc
NSLog(@"Start All-----%@", [NSThread currentThread]);

dispatch_group_t group = dispatch_group_create();
dispatch_queue_t queue = dispatch_queue_create("com.example.gcdDemo", DISPATCH_QUEUE_CONCURRENT);

dispatch_group_async(group, queue, ^{
    dispatch_group_enter(group);       // request 1 is now outstanding
    dispatch_async(dispatch_get_global_queue(0, 0), ^{
        sleep(4);
        NSLog(@"Simulated request 1-----%@", [NSThread currentThread]);
        dispatch_group_leave(group);   // request 1 has really finished
    });
});
dispatch_group_async(group, queue, ^{
    dispatch_group_enter(group);       // request 2 is now outstanding
    dispatch_async(dispatch_get_global_queue(0, 0), ^{
        sleep(3);
        NSLog(@"Simulated Request 2-----%@", [NSThread currentThread]);
        dispatch_group_leave(group);   // request 2 has really finished
    });
});

dispatch_group_notify(group, dispatch_get_main_queue(), ^{
    NSLog(@"All over-----%@", [NSThread currentThread]);
});
```

Now it works. You can think of the pair as retain and release: call dispatch_group_enter before starting an inner asynchronous task to tell the group that work is still outstanding, and call dispatch_group_leave when the request completes to say that this piece of work is done. Only when every enter has been matched by a leave does the group finish, so notify really waits for the requests. The result:

```
2018-09-04 16:54:22.832901+0800 GCDDemo[76462:21821447] Start All-----<NSThread: 0x60000007e980>{number = 1, name = main}
2018-09-04 16:54:25.837875+0800 GCDDemo[76462:21821502] Simulated Request 2-----<NSThread: 0x604000460840>{number = 3, name = (null)}
2018-09-04 16:54:26.833634+0800 GCDDemo[76462:21821503] Simulated request 1-----<NSThread: 0x6000004676c0>{number = 4, name = (null)}
2018-09-04 16:54:26.833992+0800 GCDDemo[76462:21821447] All over-----<NSThread: 0x60000007e980>{number = 1, name = main}
```

There are other solutions for this case; I will cover them in the next article rather than here.
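The retain/release analogy for dispatch_group_enter and dispatch_group_leave can be modelled with a counter and a condition variable. This is a minimal C sketch with POSIX threads, assuming hypothetical names (group_model, group_enter, group_leave, group_wait, run_two_requests); it is not how libdispatch implements groups. The key point matches the code above: enter is called before the asynchronous work starts, and leave only once that work has really finished.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>

/* Hypothetical model of dispatch_group_enter/leave/wait: a count of
   unfinished work plus a condition variable. Not libdispatch's code. */
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t  done;
    int pending;                     /* enters not yet matched by a leave */
} group_model;

void group_init(group_model *g) {
    pthread_mutex_init(&g->lock, NULL);
    pthread_cond_init(&g->done, NULL);
    g->pending = 0;
}

void group_destroy(group_model *g) {
    pthread_cond_destroy(&g->done);
    pthread_mutex_destroy(&g->lock);
}

void group_enter(group_model *g) {   /* like "retain": more work pending */
    pthread_mutex_lock(&g->lock);
    g->pending++;
    pthread_mutex_unlock(&g->lock);
}

void group_leave(group_model *g) {   /* like "release": one piece done */
    pthread_mutex_lock(&g->lock);
    if (--g->pending == 0)
        pthread_cond_broadcast(&g->done);
    pthread_mutex_unlock(&g->lock);
}

void group_wait(group_model *g) {    /* blocks until pending reaches 0 */
    pthread_mutex_lock(&g->lock);
    while (g->pending > 0)
        pthread_cond_wait(&g->done, &g->lock);
    pthread_mutex_unlock(&g->lock);
}

static group_model demo_group;
static int request_done[2];

/* A simulated request that completes on its own thread. */
static void *fake_request(void *arg) {
    int index = *(int *)arg;
    request_done[index] = 1;         /* the "response" has arrived */
    group_leave(&demo_group);        /* only now is this request done */
    return NULL;
}

/* Returns 1 if both requests had completed when group_wait returned. */
int run_two_requests(void) {
    static int indexes[2] = {0, 1};
    pthread_t threads[2];
    group_init(&demo_group);
    request_done[0] = request_done[1] = 0;
    for (int i = 0; i < 2; i++) {
        group_enter(&demo_group);    /* enter before the work starts */
        pthread_create(&threads[i], NULL, fake_request, &indexes[i]);
    }
    group_wait(&demo_group);
    int both = request_done[0] && request_done[1];
    for (int i = 0; i < 2; i++)
        pthread_join(threads[i], NULL);
    group_destroy(&demo_group);
    return both;
}
```

If group_enter were called inside fake_request instead, group_wait could see pending == 0 before any thread had started, which is exactly the early-notify problem shown in the log above.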

dispatch_barrier_(a)sync

Consider this case: there are four asynchronous tasks, A, B, C and D, and a new task must run after A and B have finished but before C and D start. This could be solved in other ways, such as synchronous execution, but dispatch_barrier_async does it better. As the name suggests, it acts like a fence that separates the tasks before it from those after it:

```objc
dispatch_queue_t queue = dispatch_queue_create(NULL, DISPATCH_QUEUE_CONCURRENT);

dispatch_async(queue, ^{
    sleep(3);
    NSLog(@"task - A");
});
dispatch_async(queue, ^{
    sleep(2);
    NSLog(@"task - B");
});

NSLog(@"before barrier");
dispatch_barrier_sync(queue, ^{
    NSLog(@"task - new");
});
NSLog(@"after barrier");

dispatch_async(queue, ^{
    sleep(2);
    NSLog(@"task - C");
});
dispatch_async(queue, ^{
    sleep(1);
    NSLog(@"task - D");
});
```

Here four asynchronous tasks are simulated, with a new task inserted in the middle. The result:

```
2018-09-04 17:55:08.783989+0800 GCDDemo[76920:21886183] before barrier
2018-09-04 17:55:10.787647+0800 GCDDemo[76920:21886310] task - B
2018-09-04 17:55:11.785806+0800 GCDDemo[76920:21886309] task - A
2018-09-04 17:55:11.786003+0800 GCDDemo[76920:21886183] task - new
2018-09-04 17:55:11.786164+0800 GCDDemo[76920:21886183] after barrier
2018-09-04 17:55:12.790452+0800 GCDDemo[76920:21886312] task - D
2018-09-04 17:55:13.790432+0800 GCDDemo[76920:21886309] task - C
```

"before barrier" is printed first. Tasks A and B then run with their simulated delays, and the barrier task, which has no delay, runs only after both have finished, so the barrier works. Because it was submitted with dispatch_barrier_sync, "after barrier" is printed only once the barrier task has finished. What happens if sync is replaced with async?

```
2018-09-04 17:59:29.114406+0800 BlockDemo[76948:21890748] before barrier
2018-09-04 17:59:29.114600+0800 BlockDemo[76948:21890748] after barrier
```

Now "before barrier" and "after barrier" are printed back to back: dispatch_barrier_sync waits like dispatch_sync, while dispatch_barrier_async returns immediately like dispatch_async. Note that a barrier only has this effect on a concurrent queue you create with dispatch_queue_create; on a serial queue or a global queue, dispatch_barrier_async behaves like dispatch_async.
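A typical use of a barrier on a concurrent queue is reader-writer access: ordinary tasks (reads) may run together, while the barrier task (a write) runs alone. The sketch below shows that pattern with a different tool, POSIX pthread_rwlock_t, so it is an analogy rather than GCD code; read_value, write_value and write_then_read are hypothetical names.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>

/* Analogy (not GCD): pthread_rwlock_t stands in for a concurrent queue
   plus a barrier. Readers share the lock; the writer, like a barrier
   task, runs only when no reader is active. */
static pthread_rwlock_t store_lock = PTHREAD_RWLOCK_INITIALIZER;
static int stored_value = 0;

int read_value(void) {
    pthread_rwlock_rdlock(&store_lock);   /* many readers at once */
    int v = stored_value;
    pthread_rwlock_unlock(&store_lock);
    return v;
}

void write_value(int v) {
    pthread_rwlock_wrlock(&store_lock);   /* exclusive, like a barrier */
    stored_value = v;
    pthread_rwlock_unlock(&store_lock);
}

static void *reader(void *arg) {
    int *out = arg;
    *out = read_value();
    return NULL;
}

/* Writes v, then runs four concurrent readers; returns the sum they saw. */
int write_then_read(int v) {
    pthread_t threads[4];
    int seen[4];
    write_value(v);
    for (int i = 0; i < 4; i++)
        pthread_create(&threads[i], NULL, reader, &seen[i]);
    int sum = 0;
    for (int i = 0; i < 4; i++) {
        pthread_join(threads[i], NULL);
        sum += seen[i];
    }
    return sum;
}
```

In GCD the same design would submit reads with dispatch_sync or dispatch_async and writes with dispatch_barrier_async on one custom concurrent queue.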

dispatch_apply

When a task needs to run a specified number of times, use dispatch_apply. The first parameter is the number of iterations, the second is the queue, and the third is a block that receives the current iteration index. dispatch_apply returns only after all iterations have finished.

```objc
NSArray *array = @[@"1", @"2", @"3", @"4", @"5"];
dispatch_apply([array count], dispatch_get_global_queue(0, 0), ^(size_t index) {
    // index is a size_t, so use %zu
    NSLog(@"Element: %@----index %zu", array[index], index);
});
```

```
2018-09-04 18:44:56.970194+0800 GCDDemo[77406:21942336] Element: 4----index 3
2018-09-04 18:44:56.970194+0800 GCDDemo[77406:21942205] Element: 1----index 0
2018-09-04 18:44:56.970194+0800 GCDDemo[77406:21942337] Element: 3----index 2
2018-09-04 18:44:56.970194+0800 GCDDemo[77406:21942335] Element: 2----index 1
2018-09-04 18:44:56.970380+0800 GCDDemo[77406:21942205] Element: 5----index 4
```
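The log shows the iterations running on several threads in no fixed order, while dispatch_apply itself does not return until all of them are done. A minimal C model of that behaviour with POSIX threads, assuming a hypothetical apply_model function with four worker threads (libdispatch chooses the thread count itself):

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <stddef.h>

/* Hypothetical model of dispatch_apply (not libdispatch): run
   work(ctx, i) for every i in [0, n) on a few threads and return
   only after every iteration has finished. */
#define APPLY_THREADS 4

typedef void (*apply_fn)(void *ctx, size_t index);

typedef struct {
    size_t n;                        /* total iterations */
    size_t next;                     /* next index to hand out */
    apply_fn work;
    void *ctx;
    pthread_mutex_t lock;
} apply_state;

static void *apply_worker(void *arg) {
    apply_state *s = arg;
    for (;;) {
        pthread_mutex_lock(&s->lock);
        size_t i = s->next;
        if (i < s->n) s->next++;     /* claim index i */
        pthread_mutex_unlock(&s->lock);
        if (i >= s->n) break;        /* every index has been claimed */
        s->work(s->ctx, i);
    }
    return NULL;
}

void apply_model(size_t n, apply_fn work, void *ctx) {
    apply_state s;
    pthread_t threads[APPLY_THREADS];
    s.n = n;
    s.next = 0;
    s.work = work;
    s.ctx = ctx;
    pthread_mutex_init(&s.lock, NULL);
    for (int k = 0; k < APPLY_THREADS; k++)
        pthread_create(&threads[k], NULL, apply_worker, &s);
    for (int k = 0; k < APPLY_THREADS; k++)
        pthread_join(threads[k], NULL);   /* synchronous, like dispatch_apply */
    pthread_mutex_destroy(&s.lock);
}

/* Example work: square one element of a long array in place. */
static void square_at(void *ctx, size_t i) {
    long *values = ctx;
    values[i] *= values[i];
}

/* Squares 1..5 in parallel and returns the sum: 1 + 4 + 9 + 16 + 25. */
long sum_of_squares_1_to_5(void) {
    long values[5] = {1, 2, 3, 4, 5};
    apply_model(5, square_at, values);
    long sum = 0;
    for (int i = 0; i < 5; i++)
        sum += values[i];
    return sum;
}
```

Each index is handed out exactly once under the lock, so every element is squared once even though the order in which threads process them varies.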

dispatch_semaphore

The earlier sections covered ways to avoid conflicts when processing data, but sometimes finer-grained exclusive control is needed. Think of a room with a chair in it: if the chair is free, you can sit down; if someone brings another chair, one more person can sit; but if the chairs are taken away, you have to stand and wait. A dispatch semaphore does the same thing with a count. Start with the first function:

```objc
dispatch_semaphore_t semaphore = dispatch_semaphore_create(0);
```

This creates a semaphore of type dispatch_semaphore_t. The parameter is the initial value, here 0, which means that a wait will block until someone signals.

```objc
dispatch_semaphore_wait(semaphore, DISPATCH_TIME_FOREVER);
dispatch_semaphore_signal(semaphore);
```

dispatch_semaphore_wait blocks while the semaphore's value is 0; once the value is greater than 0, it subtracts 1 and returns. Its second parameter is the timeout, which you can choose as needed. DISPATCH_TIME_FOREVER is used here because we don't know when the value will be incremented.

dispatch_semaphore_signal adds 1 to the value. The two functions are therefore used in pairs: if the semaphore starts at 0 and a wait is never balanced by a signal, everything after the wait is stuck forever. Here's a small example:

```objc
dispatch_group_t group = dispatch_group_create();
dispatch_queue_t queue = dispatch_queue_create(NULL, DISPATCH_QUEUE_CONCURRENT);
dispatch_semaphore_t semaphore = dispatch_semaphore_create(0);

dispatch_group_async(group, queue, ^{
    dispatch_async(dispatch_get_global_queue(0, 0), ^{
        sleep(3);
        NSLog(@"Complete 1");
        dispatch_semaphore_signal(semaphore);   // +1 when request 1 is done
    });
});
dispatch_group_async(group, queue, ^{
    dispatch_async(dispatch_get_global_queue(0, 0), ^{
        sleep(2);
        NSLog(@"Complete 2");
        dispatch_semaphore_signal(semaphore);   // +1 when request 2 is done
    });
});

dispatch_group_notify(group, queue, ^{
    // One wait per request
    dispatch_semaphore_wait(semaphore, DISPATCH_TIME_FOREVER);
    dispatch_semaphore_wait(semaphore, DISPATCH_TIME_FOREVER);
    NSLog(@"Complete All");
});
```

This again simulates several requests whose results are needed together, this time solved with a semaphore. The notify block waits on the semaphore twice; each finished request signals once, so both waits return only after both requests have completed, and then the whole task is done.
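The wait/signal pairing can be modelled with a mutex and a condition variable. This C sketch is a model, not dispatch_semaphore itself: sema_model, sema_wait, sema_signal and wait_for_two are hypothetical names. As in the example above, the semaphore starts at 0, each simulated request signals once, and the waiter waits once per request.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>

/* Hypothetical counting semaphore (mutex + condition variable) with the
   wait/signal semantics described above. Not dispatch_semaphore itself. */
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t  positive;
    long value;
} sema_model;

void sema_init(sema_model *s, long initial) {
    pthread_mutex_init(&s->lock, NULL);
    pthread_cond_init(&s->positive, NULL);
    s->value = initial;
}

void sema_destroy(sema_model *s) {
    pthread_cond_destroy(&s->positive);
    pthread_mutex_destroy(&s->lock);
}

void sema_wait(sema_model *s) {      /* wait while 0, then subtract 1 */
    pthread_mutex_lock(&s->lock);
    while (s->value == 0)
        pthread_cond_wait(&s->positive, &s->lock);
    s->value--;
    pthread_mutex_unlock(&s->lock);
}

void sema_signal(sema_model *s) {    /* add 1 and wake one waiter */
    pthread_mutex_lock(&s->lock);
    s->value++;
    pthread_cond_signal(&s->positive);
    pthread_mutex_unlock(&s->lock);
}

static sema_model finished;
static int completed[2];

static void *request(void *arg) {
    int index = *(int *)arg;
    completed[index] = 1;            /* simulated request finishes */
    sema_signal(&finished);          /* +1 per finished request */
    return NULL;
}

/* Starts two requests, waits twice, returns how many had completed. */
int wait_for_two(void) {
    static int indexes[2] = {0, 1};
    pthread_t threads[2];
    sema_init(&finished, 0);
    completed[0] = completed[1] = 0;
    for (int i = 0; i < 2; i++)
        pthread_create(&threads[i], NULL, request, &indexes[i]);
    sema_wait(&finished);            /* one wait per request, as in notify */
    sema_wait(&finished);
    int count = completed[0] + completed[1];
    for (int i = 0; i < 2; i++)
        pthread_join(threads[i], NULL);
    sema_destroy(&finished);
    return count;
}
```

Removing one of the two sema_wait calls would let wait_for_two return after only the first request; removing a sema_signal would leave the second wait blocked forever, which is the unpaired-wait hazard described above.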

Now change the requirement: the requests must run one after another. The tasks are started asynchronously, and the requests themselves are asynchronous too, so the setup above cannot force them to run in sequence. We need a dependency between them, which in GCD can be built with semaphores. Modify the code as follows:

```objc
dispatch_group_t group = dispatch_group_create();
dispatch_queue_t queue = dispatch_queue_create(NULL, DISPATCH_QUEUE_CONCURRENT);
dispatch_semaphore_t semaphore0 = dispatch_semaphore_create(0);
dispatch_semaphore_t semaphore1 = dispatch_semaphore_create(0);

dispatch_group_async(group, queue, ^{
    dispatch_async(dispatch_get_global_queue(0, 0), ^{
        [NSThread sleepForTimeInterval:3];
        NSLog(@"Complete 1");
        dispatch_semaphore_signal(semaphore0);   // Task 1 finished
    });
});
dispatch_group_async(group, queue, ^{
    dispatch_semaphore_wait(semaphore0, DISPATCH_TIME_FOREVER);   // wait for Task 1
    dispatch_async(dispatch_get_global_queue(0, 0), ^{
        [NSThread sleepForTimeInterval:2];
        NSLog(@"Complete 2");
        dispatch_semaphore_signal(semaphore1);   // Task 2 finished
    });
});

dispatch_group_notify(group, queue, ^{
    dispatch_semaphore_wait(semaphore1, DISPATCH_TIME_FOREVER);   // wait for Task 2
    NSLog(@"Complete All");
});
```

Two semaphores are created. Task 1 takes longer in the simulation, yet it must finish first. Task 2 waits on Task 1's semaphore and starts only after Task 1 has finished and signalled it; notify in turn waits on Task 2's semaphore. The result:

```
2018-09-05 10:27:02.725024+0800 GCDDemo[81615:22654482] Complete 1
2018-09-05 10:27:04.727478+0800 GCDDemo[81615:22654482] Complete 2
2018-09-05 10:27:04.727852+0800 GCDDemo[81615:22654485] Complete All
```

The goal is achieved, but with many requests you would need one semaphore per dependency, wired up in the required order, which easily becomes messy. A cleaner approach is to add dependencies between operations with NSOperationQueue. That is not covered here; a later article will analyze this case in detail and solve it in other ways.
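The dependency trick above (Task 2 waits on a semaphore that Task 1 signals) can be sketched in C with POSIX threads. The gate type is a hypothetical stand-in for a semaphore created with value 0, and task1, task2 and run_in_order are illustrative names; task 2 is deliberately started first to show that the wait still enforces the order.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>

/* Hypothetical stand-in for a semaphore created with value 0,
   modelled with a mutex + condition variable (not dispatch_semaphore). */
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t  cond;
    int value;
} gate;

static void gate_init(gate *g) {
    pthread_mutex_init(&g->lock, NULL);
    pthread_cond_init(&g->cond, NULL);
    g->value = 0;
}

static void gate_destroy(gate *g) {
    pthread_cond_destroy(&g->cond);
    pthread_mutex_destroy(&g->lock);
}

static void gate_wait(gate *g) {
    pthread_mutex_lock(&g->lock);
    while (g->value == 0)
        pthread_cond_wait(&g->cond, &g->lock);
    g->value--;
    pthread_mutex_unlock(&g->lock);
}

static void gate_signal(gate *g) {
    pthread_mutex_lock(&g->lock);
    g->value++;
    pthread_cond_signal(&g->cond);
    pthread_mutex_unlock(&g->lock);
}

static gate task1_done;
static int order[2];
static int order_len;
static pthread_mutex_t order_lock = PTHREAD_MUTEX_INITIALIZER;

static void record(int task) {
    pthread_mutex_lock(&order_lock);
    order[order_len++] = task;
    pthread_mutex_unlock(&order_lock);
}

static void *task1(void *arg) {
    (void)arg;
    record(1);
    gate_signal(&task1_done);        /* like signalling semaphore0 */
    return NULL;
}

static void *task2(void *arg) {
    (void)arg;
    gate_wait(&task1_done);          /* like waiting on semaphore0 */
    record(2);
    return NULL;
}

/* Starts task 2 first on purpose; returns 12 when the order was 1 then 2. */
int run_in_order(void) {
    pthread_t t1, t2;
    gate_init(&task1_done);
    order_len = 0;
    pthread_create(&t2, NULL, task2, NULL);
    pthread_create(&t1, NULL, task1, NULL);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    gate_destroy(&task1_done);
    return order[0] * 10 + order[1];
}
```

Each extra step in a chain like this needs its own gate, which is the bookkeeping burden mentioned above.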

dispatch_once

This function should be familiar: dispatch_once ensures that a block is executed only once, which is why it is used to create singletons.

```objc
+ (instancetype)shareInstance {
    static Manager *manager = nil;
    static dispatch_once_t onceToken;
    dispatch_once(&onceToken, ^{
        // Runs only once, even if several threads call this at the same time
        manager = [Manager new];
    });
    return manager;
}
```

A singleton created this way needs no extra thread-safety handling: even when several threads call it at the same time, onceToken guarantees that the block runs only once while the app is running.
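Plain C has a direct counterpart to dispatch_once: POSIX pthread_once. The sketch below uses it to build the same kind of lazily created singleton. It is a swapped-in technique for illustration, and manager, manager_shared_instance and race_for_instance are hypothetical names.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>

/* Swapped-in technique: pthread_once gives the same "run exactly once,
   even under contention" guarantee that dispatch_once gives. */
typedef struct { int id; } manager;          /* hypothetical singleton type */

static pthread_once_t once_control = PTHREAD_ONCE_INIT;
static manager shared_manager;
static int init_calls = 0;                   /* counts initializer runs */

static void create_manager(void) {
    init_calls++;                            /* runs exactly once */
    shared_manager.id = 42;
}

manager *manager_shared_instance(void) {
    pthread_once(&once_control, create_manager);
    return &shared_manager;
}

static void *grab(void *arg) {
    *(manager **)arg = manager_shared_instance();
    return NULL;
}

/* Eight threads race for the instance; returns how many got the shared one. */
int race_for_instance(void) {
    pthread_t threads[8];
    manager *seen[8];
    for (int i = 0; i < 8; i++)
        pthread_create(&threads[i], NULL, grab, &seen[i]);
    int same = 0;
    for (int i = 0; i < 8; i++) {
        pthread_join(threads[i], NULL);
        if (seen[i] == &shared_manager)
            same++;
    }
    return same;
}
```

However many threads call manager_shared_instance, create_manager runs once, just as onceToken guarantees for the block in the Objective-C version.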

Implementation of GCD

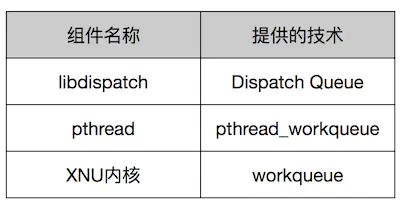

GCD is very convenient to use; here we look at how it is implemented. Conceptually, it involves:

A FIFO queue, implemented in C, that manages the appended blocks

A lightweight semaphore of atomic type for exclusive control

A container, implemented in C, that manages threads

Let's start by identifying the software components used to implement Dispatch Queue.

All the APIs we use live in the libdispatch library. Dispatch Queue implements its FIFO queue with structs and linked lists, and that FIFO queue manages the blocks added by functions such as dispatch_async.

However, a block is not added to the FIFO queue directly. It is first wrapped in a Dispatch Continuation, a structure of type dispatch_continuation_t, and that structure is added to the queue. The Dispatch Continuation holds information such as the group the block belongs to, that is, the block's execution context.
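To picture the FIFO queue of continuations described above, here is a heavily simplified C sketch. The continuation and fifo_queue types, and fifo_push, fifo_drain and demo_fifo, are hypothetical and carry far less than libdispatch's real dispatch_continuation_t; draining the list on a single thread behaves like a serial queue.

```c
#include <stdlib.h>

/* Hypothetical, heavily simplified continuation: the work to run plus its
   context. libdispatch's real dispatch_continuation_t carries more state. */
typedef struct continuation {
    void (*work)(void *ctx);
    void *ctx;
    struct continuation *next;
} continuation;

typedef struct {                     /* singly linked FIFO */
    continuation *head;
    continuation *tail;
} fifo_queue;

/* Wraps work + ctx in a continuation and appends it; 0 on success. */
int fifo_push(fifo_queue *q, void (*work)(void *), void *ctx) {
    continuation *c = malloc(sizeof *c);
    if (c == NULL) return -1;
    c->work = work;
    c->ctx = ctx;
    c->next = NULL;
    if (q->tail) q->tail->next = c; else q->head = c;
    q->tail = c;
    return 0;
}

/* Serial drain: pop from the head, run, release; returns items run. */
int fifo_drain(fifo_queue *q) {
    int ran = 0;
    while (q->head) {
        continuation *c = q->head;
        q->head = c->next;
        if (q->head == NULL) q->tail = NULL;
        c->work(c->ctx);
        free(c);                     /* like releasing the continuation */
        ran++;
    }
    return ran;
}

/* Example work: append a digit so the execution order is visible. */
static int trace = 0;
static void append_digit(void *ctx) { trace = trace * 10 + *(int *)ctx; }

int demo_fifo(void) {
    static int digits[3] = {1, 2, 3};
    fifo_queue q = { NULL, NULL };
    trace = 0;
    for (int i = 0; i < 3; i++)
        if (fifo_push(&q, append_digit, &digits[i]) != 0) break;
    fifo_drain(&q);
    return trace;
}
```

Items come out in the order they went in, which is the FIFO property; in GCD the draining is done by worker threads rather than by the caller.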

The XNU kernel has four workqueues, with the same priorities as the Global Dispatch Queues. When a block on a Global Dispatch Queue is to be executed, libdispatch takes the Dispatch Continuation out of the FIFO queue and calls the pthread_workqueue_additem_np function, passing it the queue itself, information about the workqueue that matches its priority, and the callback function to execute.

pthread_workqueue_additem_np uses the workq_kernreturn system call to notify the workqueue of the item that should be executed. Based on this notification, the XNU kernel decides whether to create a thread; for the overcommit attribute, it always creates one.

The workqueue's thread executes the pthread_workqueue function, which calls libdispatch's callback function to execute the block held in the Dispatch Continuation.

After the block has executed, the Dispatch Group is notified that it has ended, the Dispatch Continuation is released, and so on; then the next block in the Global Dispatch Queue is prepared for execution.

This is the general execution flow of a Dispatch Queue.

Dispatch Source

Besides Dispatch Queue, GCD also provides Dispatch Source, which can handle many kinds of events. Its most common use is as a timer.

```objc
// Create a dispatch_source_t of type timer that delivers events on the main queue
dispatch_source_t timer = dispatch_source_create(DISPATCH_SOURCE_TYPE_TIMER, 0, 0, dispatch_get_main_queue());

// Start now, then fire once per second, with no leeway
dispatch_source_set_timer(timer, DISPATCH_TIME_NOW, 1 * NSEC_PER_SEC, 0);

dispatch_source_set_event_handler(timer, ^{
    // Content executed every second
    NSLog(@"Timing Content");
});

dispatch_source_set_cancel_handler(timer, ^{
    // Called when the timer is cancelled
    NSLog(@"Timed Cancellation");
});

// Start the timer
dispatch_resume(timer);

// Cancel the timer
// dispatch_source_cancel(timer);
```

The problem with this code is that the event handler never runs: the source is a local variable, so it may be released as soon as the enclosing scope ends. Store the timer in a strong property to extend its lifetime so that it keeps firing:

```objc
@property (nonatomic, strong) dispatch_source_t timer;
```

If the property used assign as its memory-management semantics instead, the timer would be released right after it was created, and the compiler warns about exactly that.

Dispatch Queue itself has no cancellation: you either give up on cancelling or use something like NSOperationQueue instead. Dispatch Source, by contrast, can be cancelled, and the work to perform after cancellation can be supplied as a block, which shows how powerful Dispatch Source is.