
When you see an image, your brain can easily tell what it shows, but can a computer do the same? Computer vision researchers worked on this problem for a long time, and until recently it was considered impossible. With advances in deep learning, the availability of huge datasets, and growing computing power, we can now build models that generate captions for images.

That is what we will implement in this project, using a convolutional neural network (CNN) together with a long short-term memory network (LSTM), a type of recurrent neural network.

What is an image caption generator?

Image caption generation is a task that combines computer vision and natural language processing to recognize the context of an image and describe it in natural language.

The objective of our project is to learn the concepts of the CNN and LSTM models and to build a working image caption generator by implementing a CNN together with an LSTM.

In this project, we will use a CNN (convolutional neural network) and an LSTM (long short-term memory) to implement the caption generator. Image features will be extracted with Xception, a CNN model trained on the ImageNet dataset. We then feed the features into the LSTM model, which is responsible for generating the image captions.

Organize datasets

For the image caption generator, we will use the Flickr_8K dataset. There are other, larger datasets, such as Flickr_30K and MSCOCO, but training the network on them can take weeks, so we will use the small Flickr8k dataset. The advantage of a larger dataset is that we can build better models.

Prerequisites

We will need the following libraries:

- tensorflow

- keras

- pillow

- numpy

- tqdm

- jupyterlab

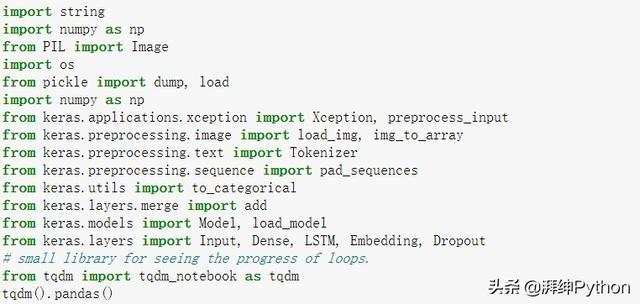

1. First, we import all the necessary libraries

import string
import os
from pickle import dump, load

import numpy as np
from PIL import Image
from keras.applications.xception import Xception, preprocess_input
from keras.preprocessing.image import load_img, img_to_array
from keras.preprocessing.text import Tokenizer
from keras.preprocessing.sequence import pad_sequences
from keras.utils import to_categorical
from keras.models import Model, load_model
from keras.layers import Input, Dense, LSTM, Embedding, Dropout, add

# small library for seeing the progress of loops
from tqdm import tqdm_notebook as tqdm
tqdm().pandas()

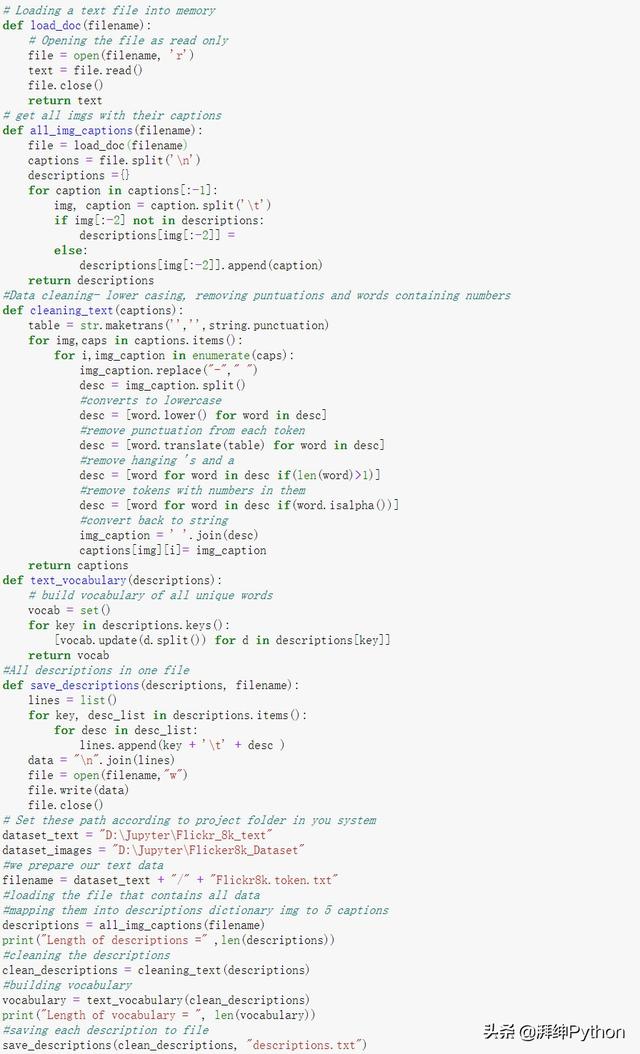

2. Load the data and perform data cleaning

Our caption file stores one image name and one caption per line, with lines separated by a newline ("\n").

Each image has 5 captions, and each caption is tagged with a number from 0 to 4 (#0 to #4) after the image name.
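As a small illustration of this format, the sketch below parses two made-up lines laid out the way the code in this step expects: an image name, a `#` plus the caption number, a tab, and then the caption. The file name and captions are invented for the example and do not come from the dataset.

```python
# Two hypothetical lines in the "<image>#<n>\t<caption>" layout.
sample = (
    "example_image.jpg#0\tA dog runs across the grass .\n"
    "example_image.jpg#1\tA brown dog is running outside .\n"
)

captions_by_image = {}
for line in sample.split("\n"):
    if not line:
        continue  # skip the empty string after the final newline
    tagged_name, caption = line.split("\t")
    image_name = tagged_name[:-2]  # drop the trailing "#0", "#1", ...
    captions_by_image.setdefault(image_name, []).append(caption)

print(captions_by_image)
```

Both captions end up in one list under the same image name, which is the shape of the descriptions dictionary used in the rest of this step.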

We will define five functions:

- load_doc(filename) - loads a document file and reads its contents into a string.

- all_img_captions(filename) - creates a descriptions dictionary that maps each image to a list of its five captions.

- cleaning_text(descriptions) - takes all descriptions and performs data cleaning. This is an important step when working with text data, and the type of cleaning depends on the goal. In our case, we remove punctuation, convert all text to lowercase, and remove words that contain numbers.

- text_vocabulary(descriptions) - a simple function that collects all unique words and builds the vocabulary from all descriptions.

- save_descriptions(descriptions, filename) - creates a list of all preprocessed descriptions and stores them in a file. We will create a descriptions.txt file to store all the captions.
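To make the cleaning rules concrete, here is a minimal sketch that applies them to a single caption. The helper name `clean_caption` and the sample sentence are made up for this illustration; the steps mirror the ones listed for cleaning_text (lowercasing, removing punctuation, dropping one-letter tokens and tokens containing digits).

```python
import string

def clean_caption(caption):
    """Lowercase, strip punctuation, and drop short or non-alphabetic tokens."""
    table = str.maketrans("", "", string.punctuation)
    words = caption.replace("-", " ").split()
    words = [w.lower().translate(table) for w in words]
    # keep only alphabetic tokens longer than one character
    words = [w for w in words if len(w) > 1 and w.isalpha()]
    return " ".join(words)

print(clean_caption("A little girl in a T-shirt rides bike no. 7 !"))
# prints: little girl in shirt rides bike no
```

Note that one-letter words such as "a" disappear as a side effect of the length filter, so cleaned captions are not always grammatical.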

# Loading a text file into memory
def load_doc(filename):
    # Open the file as read-only; the with-block closes it automatically
    with open(filename, 'r') as file:
        text = file.read()
    return text

# get all imgs with their captions
def all_img_captions(filename):
    file = load_doc(filename)
    captions = file.split('\n')
    descriptions = {}
    for caption in captions[:-1]:
        img, caption = caption.split('\t')
        # img ends with "#0" ... "#4"; strip that suffix to get the image name
        if img[:-2] not in descriptions:
            descriptions[img[:-2]] = [caption]
        else:
            descriptions[img[:-2]].append(caption)
    return descriptions

# Data cleaning - lowercasing, removing punctuation and words containing numbers
def cleaning_text(captions):
    table = str.maketrans('', '', string.punctuation)
    for img, caps in captions.items():
        for i, img_caption in enumerate(caps):
            # str.replace returns a new string, so keep the result
            img_caption = img_caption.replace("-", " ")
            desc = img_caption.split()
            # convert to lowercase
            desc = [word.lower() for word in desc]
            # remove punctuation from each token
            desc = [word.translate(table) for word in desc]
            # remove hanging 's and a
            desc = [word for word in desc if len(word) > 1]
            # remove tokens with numbers in them
            desc = [word for word in desc if word.isalpha()]
            # convert back to string
            img_caption = ' '.join(desc)
            captions[img][i] = img_caption
    return captions

def text_vocabulary(descriptions):
    # build vocabulary of all unique words
    vocab = set()
    for key in descriptions.keys():
        for d in descriptions[key]:
            vocab.update(d.split())
    return vocab

# All descriptions in one file
def save_descriptions(descriptions, filename):
    lines = list()
    for key, desc_list in descriptions.items():
        for desc in desc_list:
            lines.append(key + '\t' + desc)
    data = "\n".join(lines)
    with open(filename, "w") as file:
        file.write(data)

# Set these paths according to the project folder on your system
# (raw strings keep the Windows backslashes from being read as escapes)
dataset_text = r"D:\dataflair projects\Project - Image Caption Generator\Flickr_8k_text"
dataset_images = r"D:\dataflair projects\Project - Image Caption Generator\Flicker8k_Dataset"

# we prepare our text data
filename = dataset_text + "/" + "Flickr8k.token.txt"

# loading the file that contains all data
# mapping them into descriptions dictionary img to 5 captions
descriptions = all_img_captions(filename)
print("Length of descriptions =", len(descriptions))

# cleaning the descriptions
clean_descriptions = cleaning_text(descriptions)

# building vocabulary
vocabulary = text_vocabulary(clean_descriptions)
print("Length of vocabulary = ", len(vocabulary))

# saving each description to file
save_descriptions(clean_descriptions, "descriptions.txt")

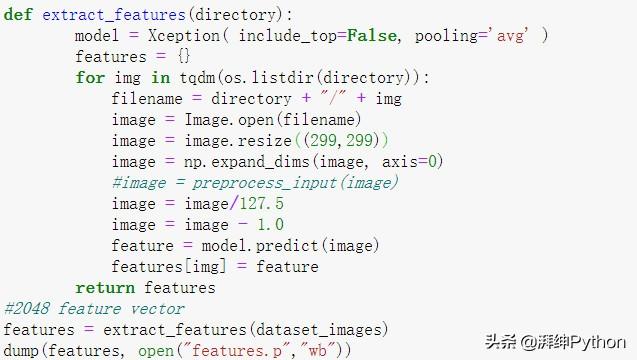

3. Extract feature vectors from all images

This technique is also called transfer learning. We do not have to train everything ourselves: we use a pre-trained model that has already been trained on a large dataset and extract features from it for our own task. We are using the Xception model, which was trained on the ImageNet dataset with 1000 different classes. We can import this model directly from keras.applications. Because the Xception model was originally built for ImageNet, we make a few changes to integrate it with our model. One thing to note is that the Xception model takes images of size 299×299×3 as input. We will remove the last classification layer and get a 2048-dimensional feature vector.

model = Xception(include_top=False, pooling='avg')

The extract_features() function extracts the features of all images and maps each image name to its feature array. Then we dump the features dictionary into the "features.p" pickle file.
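The extraction code that follows scales pixel values by hand, dividing by 127.5 and subtracting 1.0, in place of the commented-out preprocess_input call. As a rough illustration of that arithmetic only, the sketch below applies the same formula to a few 8-bit pixel values; the helper name `scale_pixel` is made up for this example.

```python
def scale_pixel(value):
    """Map an 8-bit pixel value from [0, 255] to [-1.0, 1.0]."""
    return value / 127.5 - 1.0

for v in (0, 127.5, 255):
    print(v, "->", scale_pixel(v))
```

Black (0) maps to -1.0 and white (255) maps to 1.0, so every input to the network lies in the same symmetric range.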

def extract_features(directory):
    model = Xception(include_top=False, pooling='avg')
    features = {}
    for img in tqdm(os.listdir(directory)):
        filename = directory + "/" + img
        # force 3 channels so grayscale or RGBA files match the model input
        image = Image.open(filename).convert('RGB')
        image = image.resize((299, 299))
        image = np.expand_dims(np.array(image), axis=0)
        # image = preprocess_input(image)
        # scale pixel values from [0, 255] to [-1, 1]
        image = image / 127.5
        image = image - 1.0
        feature = model.predict(image)
        features[img] = feature
    return features

# 2048 feature vector
features = extract_features(dataset_images)
with open("features.p", "wb") as f:
    dump(features, f)

Depending on your system, this process can take a lot of time.

features = load(open("features.p","rb"))

4. Load the dataset for training the model

In the Flickr_8k_text folder, we have the Flickr_8k.trainImages.txt file, which contains a list of 6000 image names that we will use for training.

To load the training dataset, we need a few more functions:

- load_photos(filename) - loads the text file into a string and returns the list of image names.

- load_clean_descriptions(filename, photos) - creates a dictionary that contains the captions for each photo in the list of photos. We also add the <start> and <end> identifiers to each caption so that our LSTM model can recognize where a caption begins and ends.

- load_features(photos) - returns a dictionary of image names and their feature vectors, which we previously extracted with the Xception model.
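The two ideas in this list, keeping only the training images and wrapping each caption in start and end markers, can be sketched on a tiny in-memory example. The image names, captions, and the one-image training split below are all made up for illustration.

```python
# Hypothetical cleaned captions, keyed by image name.
clean = {
    "img_a.jpg": ["dog runs on grass", "brown dog outside"],
    "img_b.jpg": ["child climbs stairs"],
}
train_images = ["img_a.jpg"]  # made-up training split

train_captions = {
    name: ["<start> " + text + " <end>" for text in caps]
    for name, caps in clean.items()
    if name in train_images
}
print(train_captions)
```

Only img_a.jpg survives the filter, and each of its captions now carries the two markers.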

# load the data
def load_photos(filename):
    file = load_doc(filename)
    photos = file.split("\n")[:-1]
    return photos

def load_clean_descriptions(filename, photos):
    # loading clean_descriptions
    file = load_doc(filename)
    descriptions = {}
    for line in file.split("\n"):
        words = line.split()
        if len(words) < 1:
            continue
        image, image_caption = words[0], words[1:]
        if image in photos:
            if image not in descriptions:
                descriptions[image] = []
            # wrap each caption in start and end markers
            desc = '<start> ' + " ".join(image_caption) + ' <end>'
            descriptions[image].append(desc)
    return descriptions

def load_features(photos):
    # loading all features
    with open("features.p", "rb") as f:
        all_features = load(f)
    # selecting only needed features
    features = {k: all_features[k] for k in photos}
    return features

filename = dataset_text + "/" + "Flickr_8k.trainImages.txt"

# train = loading_data(filename)
train_imgs = load_photos(filename)
train_descriptions = load_clean_descriptions("descriptions.txt", train_imgs)
train_features = load_features(train_imgs)

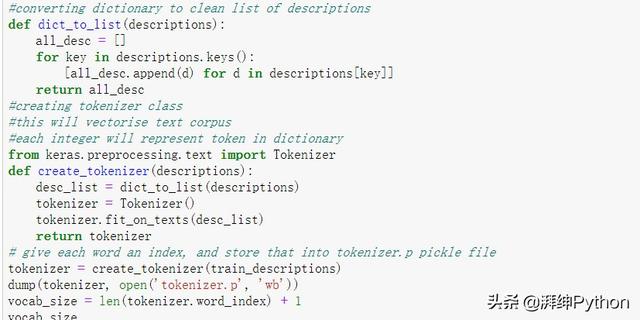

5. Tokenizing the vocabulary

We will map each word of the vocabulary to a unique index value. The Keras library provides the Tokenizer class, which we will use to create tokens from our vocabulary and save them to the "tokenizer.p" pickle file.

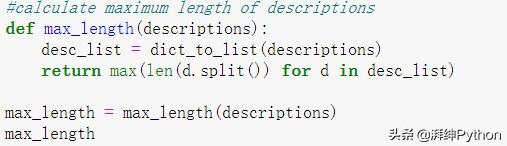
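The code in the following steps uses a helper `dict_to_list` along with the values `tokenizer` and `vocab_size`. As a rough sketch of the idea only, the pure-Python code below flattens a descriptions dictionary and assigns each word an integer index by descending frequency, leaving 0 free for padding. In the real pipeline this role would be played by the Keras `Tokenizer` (`fit_on_texts`, then `word_index`); `build_word_index`, the alphabetical tie-breaking, and the sample captions are assumptions made for this illustration.

```python
from collections import Counter

def dict_to_list(descriptions):
    """Flatten {image: [captions]} into a single list of captions."""
    return [cap for caps in descriptions.values() for cap in caps]

def build_word_index(captions):
    """Assign indices 1..N by descending word frequency (0 is left for padding)."""
    counts = Counter(word for cap in captions for word in cap.split())
    ranked = sorted(counts, key=lambda w: (-counts[w], w))
    return {word: i for i, word in enumerate(ranked, start=1)}

descriptions = {
    "img_a.jpg": ["start dog runs end", "start brown dog end"],
    "img_b.jpg": ["start child runs end"],
}
word_index = build_word_index(dict_to_list(descriptions))
vocab_size = len(word_index) + 1  # +1 for the padding index 0
print(word_index, vocab_size)
```

The sample captions use plain start and end because, with its default filters, the Keras Tokenizer strips characters such as < and >; this matches the plain 'start' and 'end' strings used by the test script later on.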

# calculate maximum length of descriptions
def get_max_length(descriptions):
    desc_list = dict_to_list(descriptions)
    return max(len(d.split()) for d in desc_list)

# the function has its own name so that the max_length value does not shadow it
max_length = get_max_length(descriptions)
max_length

Our vocabulary contains 7577 words.

We also calculate the maximum length of the descriptions. This value is needed to decide the structural parameters of the model. The maximum description length is 32.

6. Create data generator

Let us first see what the input and output of our model look like. To turn this into a supervised learning task, we have to provide the model with inputs and outputs for training. We have to train the model on 6000 images; each image is represented by a feature vector of length 2048, and each caption is also represented as numbers. This amount of data for 6000 images cannot be held in memory, so we will use a generator that yields batches.

The generator will yield the input and output sequences.
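To see how one caption becomes several training samples, consider the sketch below. It is a pure-Python illustration under stated assumptions: the word indices are made up, and `left_pad` stands in for the default left-padding behaviour of Keras `pad_sequences` for sequences no longer than the target length.

```python
def left_pad(seq, length):
    """Pad with zeros on the left (assumes len(seq) <= length)."""
    return [0] * (length - len(seq)) + seq

def expand(seq, max_length):
    """Turn one encoded caption into (padded prefix, next word) training pairs."""
    pairs = []
    for i in range(1, len(seq)):
        pairs.append((left_pad(seq[:i], max_length), seq[i]))
    return pairs

# Hypothetical encoding of "start dog runs end" with made-up word indices.
encoded = [2, 3, 4, 1]
for prefix, target in expand(encoded, max_length=5):
    print(prefix, "->", target)
```

A caption of n tokens yields n - 1 pairs, each paired with the same image feature vector. Summed over an image's five captions, this is how a single image can produce dozens of samples, such as the 47 rows in the shape check in this section.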

# create input-output sequence pairs from the image description.

# data generator, used by model.fit_generator()
def data_generator(descriptions, features, tokenizer, max_length):
    while True:
        for key, description_list in descriptions.items():
            # retrieve photo features
            feature = features[key][0]
            input_image, input_sequence, output_word = create_sequences(tokenizer, max_length, description_list, feature)
            # yield an (inputs, targets) tuple holding one image's samples
            yield ([input_image, input_sequence], output_word)

def create_sequences(tokenizer, max_length, desc_list, feature):
    X1, X2, y = list(), list(), list()
    # walk through each description for the image
    for desc in desc_list:
        # encode the sequence
        seq = tokenizer.texts_to_sequences([desc])[0]
        # split one sequence into multiple X,y pairs
        for i in range(1, len(seq)):
            # split into input and output pair
            in_seq, out_seq = seq[:i], seq[i]
            # pad input sequence
            in_seq = pad_sequences([in_seq], maxlen=max_length)[0]
            # encode output sequence (vocab_size is read from the global scope)
            out_seq = to_categorical([out_seq], num_classes=vocab_size)[0]
            # store
            X1.append(feature)
            X2.append(in_seq)
            y.append(out_seq)
    return np.array(X1), np.array(X2), np.array(y)

# You can check the shape of the input and output for your model
[a, b], c = next(data_generator(train_descriptions, train_features, tokenizer, max_length))
a.shape, b.shape, c.shape
# ((47, 2048), (47, 32), (47, 7577))

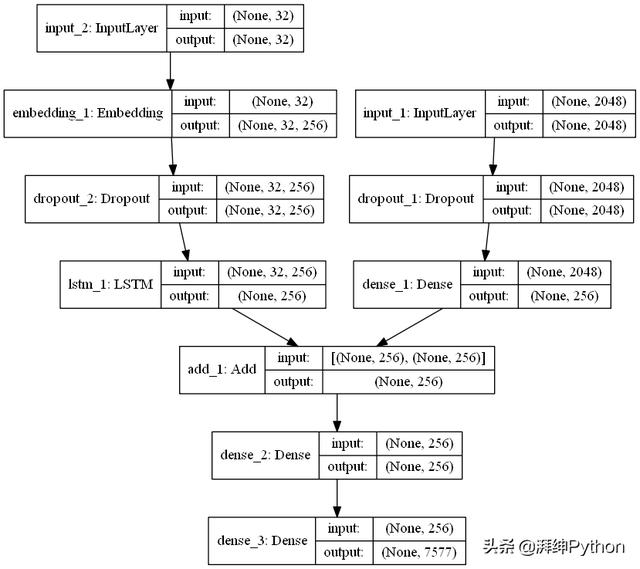

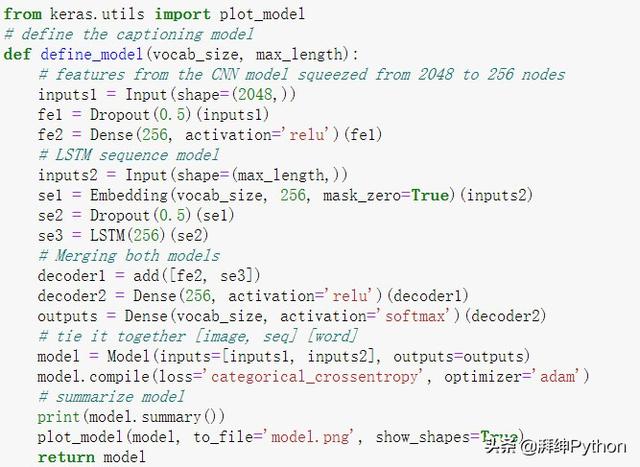

7. Define the CNN-RNN model

To define the structure of the model, we will use the Model class from the Keras Functional API. It consists of three major parts:

- Feature Extractor - the feature extracted from the image has a size of 2048; with a dense layer, we reduce it to 256 nodes.

- Sequence Processor - an embedding layer handles the text input, followed by the LSTM layer.

- Decoder - we merge the outputs of the two layers above and process them with a dense layer to make the final prediction. The final layer contains as many nodes as our vocabulary size.
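As a back-of-the-envelope check on the size of these three parts, the sketch below counts trainable parameters per layer. It assumes the standard formulas for dense, embedding, and LSTM layers (with biases), the layer sizes described above, and the vocabulary size of 7577 reported earlier; the helper names are made up for this illustration.

```python
def dense_params(n_in, n_out):
    return n_in * n_out + n_out  # weights + biases

def lstm_params(n_in, units):
    # four gates, each with input weights, recurrent weights, and biases
    return 4 * (n_in * units + units * units + units)

vocab_size, embed_dim, units = 7577, 256, 256

layers = {
    "image dense (2048 -> 256)": dense_params(2048, units),
    "embedding": vocab_size * embed_dim,
    "lstm": lstm_params(embed_dim, units),
    "decoder dense (256 -> 256)": dense_params(units, units),
    "output dense (256 -> vocab)": dense_params(units, vocab_size),
}
for name, count in layers.items():
    print(f"{name}: {count:,}")
print(f"total: {sum(layers.values()):,}")
```

Under these assumptions, the embedding and the output layer account for most of the parameters, because both scale with the vocabulary size.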

The visual representation of the final model is as follows:

from keras.utils import plot_model

# define the captioning model
def define_model(vocab_size, max_length):
    # features from the CNN model squeezed from 2048 to 256 nodes
    inputs1 = Input(shape=(2048,))
    fe1 = Dropout(0.5)(inputs1)
    fe2 = Dense(256, activation='relu')(fe1)

    # LSTM sequence model
    inputs2 = Input(shape=(max_length,))
    se1 = Embedding(vocab_size, 256, mask_zero=True)(inputs2)
    se2 = Dropout(0.5)(se1)
    se3 = LSTM(256)(se2)

    # Merging both models
    decoder1 = add([fe2, se3])
    decoder2 = Dense(256, activation='relu')(decoder1)
    outputs = Dense(vocab_size, activation='softmax')(decoder2)

    # tie it together [image, seq] [word]
    model = Model(inputs=[inputs1, inputs2], outputs=outputs)
    model.compile(loss='categorical_crossentropy', optimizer='adam')

    # summarize model (summary() prints the table itself)
    model.summary()
    plot_model(model, to_file='model.png', show_shapes=True)
    return model

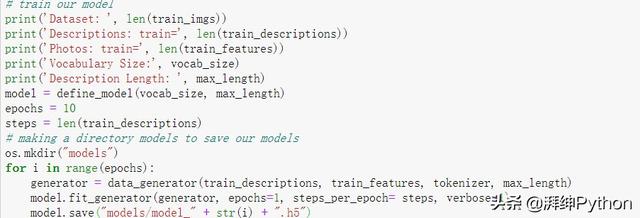

8. Train the model

To train the model, we will use the 6000 training images by generating the input and output sequences in batches and fitting them to the model with the model.fit_generator() method. We also save the model to our models folder.
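Because the training generator loops forever, the number of steps per epoch is what decides where one epoch ends. The sketch below illustrates this with a plain Python generator and `itertools.islice`; the generator name and the three stand-in items are assumptions for the example, not part of the training code.

```python
from itertools import islice

def endless_batches(items):
    """Cycle over the items forever, like the training generator's while-loop."""
    while True:
        for item in items:
            yield item

images = ["img_a", "img_b", "img_c"]  # stand-ins for per-image batches
steps_per_epoch = len(images)

generator = endless_batches(images)
for epoch in range(2):
    # take exactly steps_per_epoch batches, then the epoch is over
    seen = list(islice(generator, steps_per_epoch))
    print("epoch", epoch, "saw", seen)
```

With one image per step, setting the step count to the number of training images gives exactly one pass over the data per epoch.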

# train our model
print('Dataset: ', len(train_imgs))
print('Descriptions: train=', len(train_descriptions))
print('Photos: train=', len(train_features))
print('Vocabulary Size:', vocab_size)
print('Description Length: ', max_length)

model = define_model(vocab_size, max_length)
epochs = 10
steps = len(train_descriptions)

# making a directory models to save our models (no error if it already exists)
os.makedirs("models", exist_ok=True)

for i in range(epochs):
    generator = data_generator(train_descriptions, train_features, tokenizer, max_length)
    # newer TensorFlow/Keras releases accept the generator in model.fit() instead
    model.fit_generator(generator, epochs=1, steps_per_epoch=steps, verbose=1)
    model.save("models/model_" + str(i) + ".h5")

9. Test the model

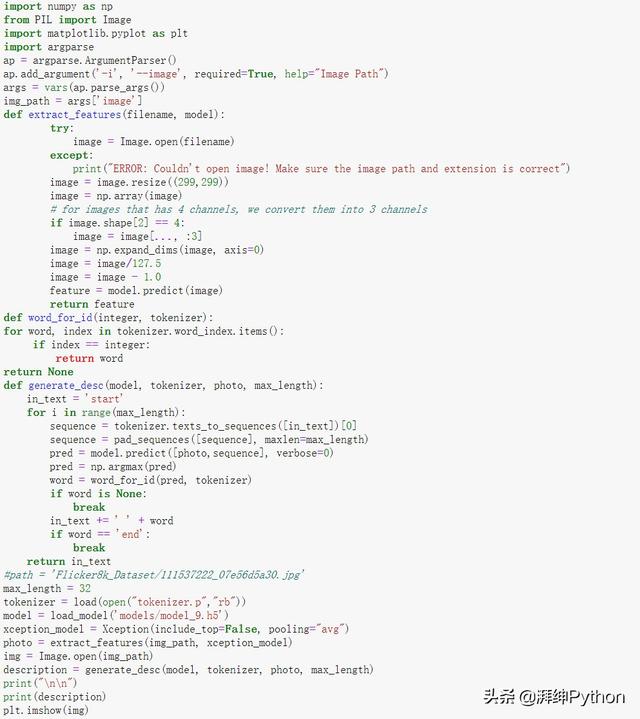

Now that the model has been trained, we will make a separate file, testing_caption_generator.py, which loads the model and generates predictions. The predictions contain index values, up to the maximum length, so we will use the same tokenizer.p pickle file to get the words back from their index values.
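The caption is built one word at a time: start from the start marker, ask the model for the most likely next word, append it, and stop at the end marker or at the maximum length. The sketch below shows that greedy loop in isolation. The lookup table is a made-up stand-in for the trained model, and `greedy_caption` is a hypothetical helper name.

```python
def greedy_caption(next_word, max_length):
    """Build a caption word by word, always taking the model's top choice."""
    words = ["start"]
    for _ in range(max_length):
        word = next_word(words)
        if word is None:
            break
        words.append(word)
        if word == "end":
            break
    return " ".join(words)

# Hypothetical stand-in for the trained model: a fixed next-word table.
table = {"start": "two", "two": "girls", "girls": "play", "play": "end"}

def fake_model(words):
    return table.get(words[-1])

caption = greedy_caption(fake_model, max_length=32)
print(caption)
# strip the start/end markers for display
print(" ".join(w for w in caption.split() if w not in ("start", "end")))
```

The raw result keeps the two markers, so they are stripped before the caption is shown to a reader.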

import argparse
from pickle import load

import numpy as np
from PIL import Image
import matplotlib.pyplot as plt
from keras.applications.xception import Xception
from keras.models import load_model
from keras.preprocessing.sequence import pad_sequences

ap = argparse.ArgumentParser()
ap.add_argument('-i', '--image', required=True, help="Image Path")
args = vars(ap.parse_args())
img_path = args['image']

def extract_features(filename, model):
    try:
        # convert to RGB so 4-channel and grayscale images become 3 channels
        image = Image.open(filename).convert('RGB')
    except OSError:
        print("ERROR: Couldn't open image! Make sure the image path and extension is correct")
        raise
    image = image.resize((299, 299))
    image = np.array(image)
    image = np.expand_dims(image, axis=0)
    # scale pixel values to [-1, 1], as in training
    image = image / 127.5
    image = image - 1.0
    feature = model.predict(image)
    return feature

def word_for_id(integer, tokenizer):
    for word, index in tokenizer.word_index.items():
        if index == integer:
            return word
    return None

def generate_desc(model, tokenizer, photo, max_length):
    in_text = 'start'
    for i in range(max_length):
        sequence = tokenizer.texts_to_sequences([in_text])[0]
        sequence = pad_sequences([sequence], maxlen=max_length)
        pred = model.predict([photo, sequence], verbose=0)
        pred = np.argmax(pred)
        word = word_for_id(pred, tokenizer)
        if word is None:
            break
        in_text += ' ' + word
        if word == 'end':
            break
    return in_text

# path = 'Flicker8k_Dataset/111537222_07e56d5a30.jpg'
max_length = 32
with open("tokenizer.p", "rb") as f:
    tokenizer = load(f)
model = load_model('models/model_9.h5')
xception_model = Xception(include_top=False, pooling="avg")

photo = extract_features(img_path, xception_model)
img = Image.open(img_path)

description = generate_desc(model, tokenizer, photo, max_length)
print("\n\n")
print(description)
plt.imshow(img)
plt.show()

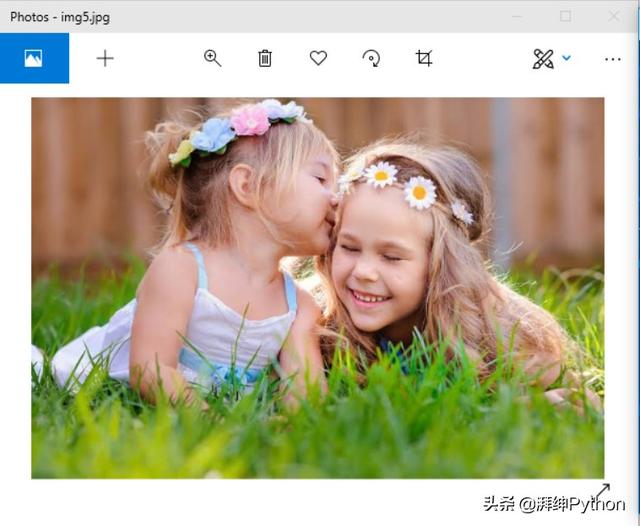

Two girls are playing in the grass

Conclusion

In this project, we built an image caption generator by implementing a CNN-RNN model. A key point to note is that our model depends on its training data, so it cannot predict words that are outside its vocabulary. We used a small dataset of 8000 images. For production-level models, we need to train on datasets of more than 100,000 images to produce more accurate models.