Video player based on FFmpeg and SDL (3) - SDL video display

SDL introduction

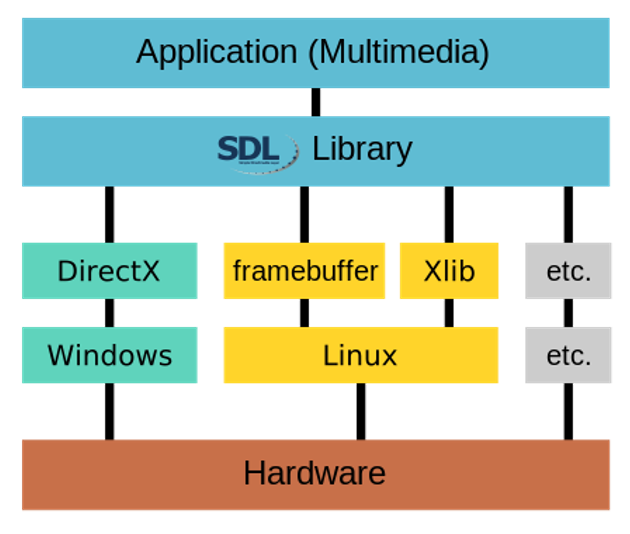

- SDL (Simple DirectMedia Layer) is an open-source, cross-platform multimedia development library written in C. It provides functions for controlling graphics, sound, and input and output devices, so developers can build applications for multiple platforms (Linux, Windows, Mac OS X, etc.) from the same or nearly the same code. Today SDL is mostly used for games, emulators, media players, and other multimedia applications.

- It wraps the complex low-level audio and video operations, which makes audio and video processing easier and greatly reduces the code needed to control graphics, sound, input, and output.

- SDL calls underlying APIs such as DirectX to interact with the hardware. Structurally, it wraps each operating system's native libraries behind one common set of functions, which is what makes it cross-platform.

Setting up the FFmpeg + SDL environment in Qt

The FFmpeg environment was set up in the previous article, so it is not repeated here.


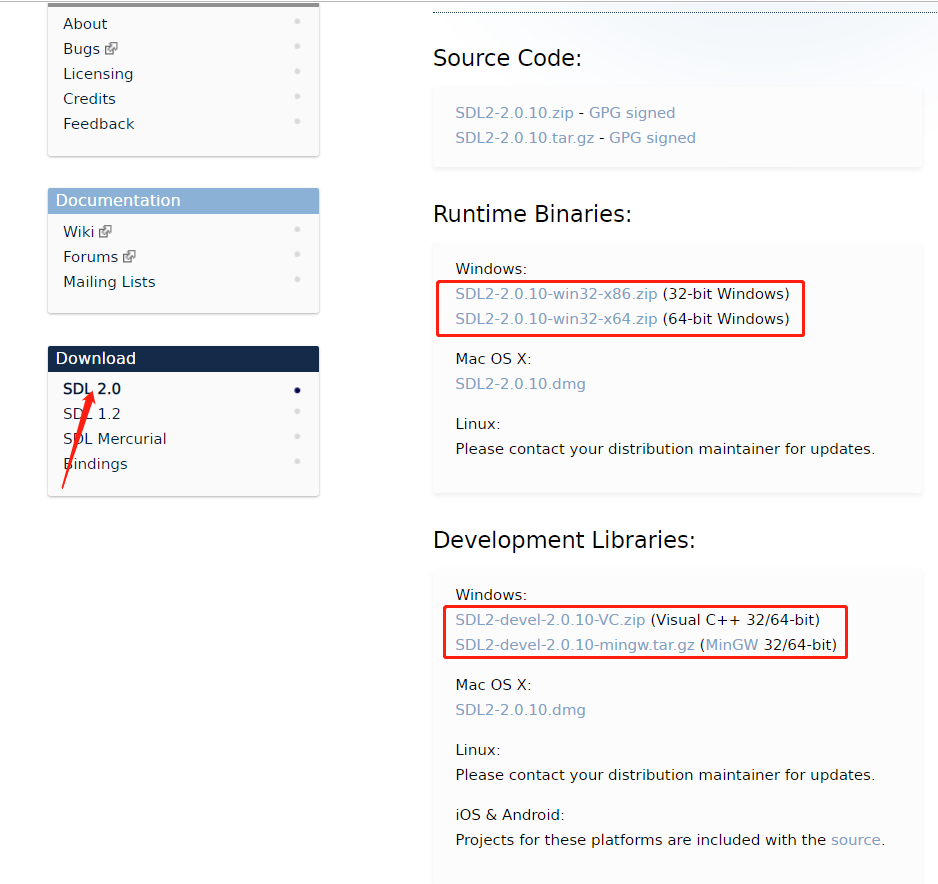

Get SDL

SDL download address: http://www.libsdl.org/

Select the version that matches your system.


Reference SDL in the project

How to create the project is not covered here; only adding SDL is explained. First, extract the runtime (dynamic library) archive and the development (Dev) archive you just downloaded. The runtime archive contains a single .dll file, which the program needs at run time. From the extracted development archive we only need the include and lib folders; copy them directly into the project directory.

Then we add them to the .pro file (together with the FFmpeg entries added earlier):

    INCLUDEPATH += $$PWD/lib/ffmpeg/include \
                   $$PWD/lib/SDL2/include

    LIBS += $$PWD/lib/ffmpeg/lib/avcodec.lib \
            $$PWD/lib/ffmpeg/lib/avdevice.lib \
            $$PWD/lib/ffmpeg/lib/avfilter.lib \
            $$PWD/lib/ffmpeg/lib/avformat.lib \
            $$PWD/lib/ffmpeg/lib/avutil.lib \
            $$PWD/lib/ffmpeg/lib/postproc.lib \
            $$PWD/lib/ffmpeg/lib/swresample.lib \
            $$PWD/lib/ffmpeg/lib/swscale.lib \
            $$PWD/lib/SDL2/lib/SDL2.lib

Since this is a C++ project compiled with a C++ compiler, and FFmpeg is a C library, the FFmpeg headers must be wrapped in extern "C".

    extern "C" {
    #include <libavcodec/avcodec.h>
    #include <libavformat/avformat.h>
    #include <libswscale/swscale.h>
    #include <libswresample/swresample.h>
    #include "SDL.h"
    }

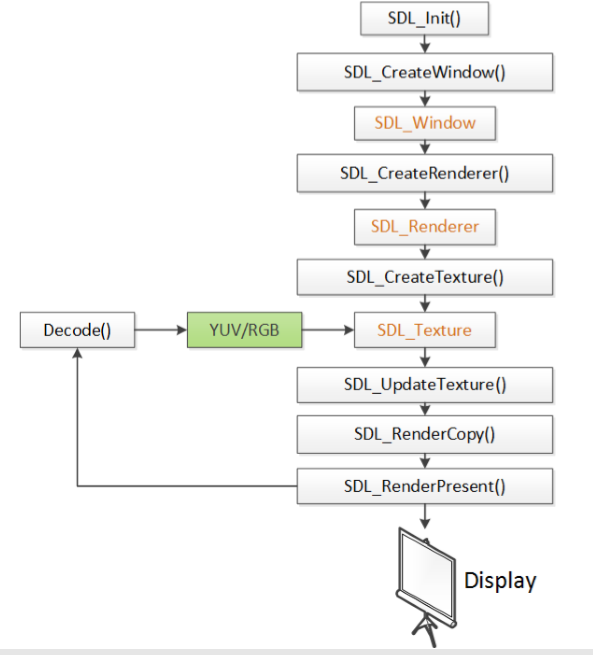

SDL display functions, data structures, events, and multithreading

Functions

- SDL_Init(): initializes the SDL system

- SDL_CreateWindow(): creates a window (SDL_Window)

- SDL_CreateRenderer(): creates a renderer (SDL_Renderer)

- SDL_CreateTexture(): creates a texture (SDL_Texture)

- SDL_UpdateTexture(): sets the texture's pixel data

- SDL_RenderCopy(): copies the texture to the renderer

- SDL_RenderPresent(): displays the rendered result

- SDL_Delay(): utility function that waits for a given number of milliseconds

- SDL_Quit(): shuts down the SDL system

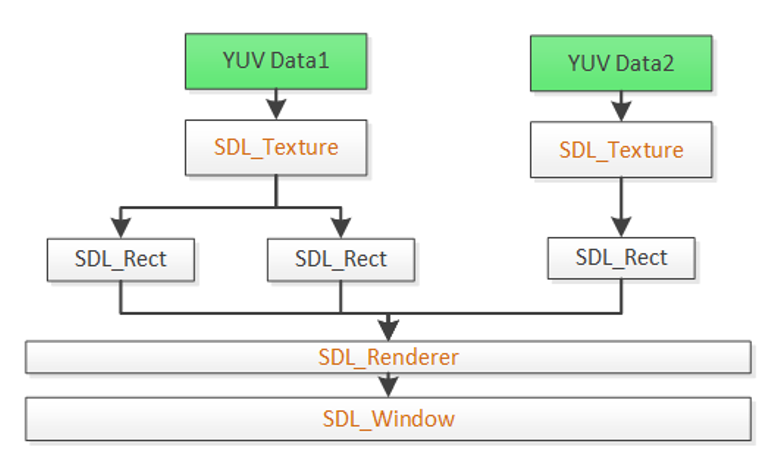

Data structures

- SDL_Window represents a "window"

- SDL_Renderer represents a "renderer"

- SDL_Texture represents a "texture"

- SDL_Rect is a simple rectangle structure

SDL events

The key principle behind SDL event handling is that SDL stores all events in a single queue, so every operation on events is really an operation on that queue.

- SDL_PollEvent: removes the event at the head of the queue and returns it; it returns immediately if the queue is empty.

- SDL_WaitEvent: returns an event as soon as one is in the queue; otherwise it blocks and yields the CPU.

- SDL_WaitEventTimeout: like SDL_WaitEvent, except that it stops blocking once the timeout expires.

- SDL_PeepEvents (with the SDL_PEEKEVENT action): reads events from the queue without removing them.

- SDL_PushEvent: inserts an event into the queue.

SDL provides only these few simple APIs. Some common event types are:

- SDL_WindowEvent: window-related events.

- SDL_KeyboardEvent: keyboard-related events.

- SDL_MouseMotionEvent: mouse movement events.

- SDL_QuitEvent: quit event.

- SDL_UserEvent: user-defined event.
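To make the "everything is a queue operation" idea concrete, here is a minimal sketch of a FIFO event queue in plain C++. It is a toy model, not SDL's implementation: the `Event` struct and the `EventQueue` class are hypothetical names that only mimic the push, poll, and peek behaviour described above.

```cpp
#include <cstddef>
#include <cstdint>
#include <deque>

// Hypothetical stand-in for SDL_Event: only the type tag is modelled.
struct Event {
    std::uint32_t type;
};

// Toy FIFO queue; the real SDL event queue is internal to the library.
class EventQueue {
public:
    // Analogous to SDL_PushEvent: append an event to the tail.
    void push(const Event& e) { events_.push_back(e); }

    // Analogous to SDL_PollEvent: move the head event into `out`.
    // Returns false immediately when the queue is empty (no blocking).
    bool poll(Event& out) {
        if (events_.empty()) return false;
        out = events_.front();
        events_.pop_front();
        return true;
    }

    // Analogous to peeking: copy the head into `out` but leave it queued.
    bool peek(Event& out) const {
        if (events_.empty()) return false;
        out = events_.front();
        return true;
    }

    std::size_t size() const { return events_.size(); }

private:
    std::deque<Event> events_;
};
```

After pushing two events, `peek` reports the first one without changing `size()`, while two calls to `poll` return them in insertion order and leave the queue empty.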

SDL multithreading

Why introduce multithreading? Running time-consuming operations on the main thread makes the interface stutter. In practice, such operations are therefore moved to worker threads, which keeps the interface from freezing and makes better use of the hardware.

The main functions to understand are:

- Thread creation: SDL_CreateThread

- Waiting for a thread to finish: SDL_WaitThread

- Mutex creation and destruction: SDL_CreateMutex / SDL_DestroyMutex

- Mutex locking and unlocking: SDL_LockMutex / SDL_UnlockMutex

- Condition variable creation and destruction: SDL_CreateCond / SDL_DestroyCond

- Condition variable wait / notify: SDL_CondWait / SDL_CondSignal
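The way these primitives fit together can be sketched with the C++ standard library, which offers the same building blocks. This is an illustration under assumptions, not SDL code: `SharedResult`, `worker`, and `run_in_background` are hypothetical names, and the comments note which SDL function plays the corresponding role.

```cpp
#include <condition_variable>
#include <functional>
#include <mutex>
#include <thread>

// Shared state: a mutex protects the data, a condition variable signals changes.
struct SharedResult {
    std::mutex mutex;              // role of the mutex from SDL_CreateMutex
    std::condition_variable cond;  // role of the condition variable from SDL_CreateCond
    bool ready = false;
    int value = 0;
};

// Worker body: the "time-consuming" work runs here, off the main thread.
void worker(SharedResult& shared, int input) {
    int result = input * 2;  // placeholder for expensive work
    {
        // Role of SDL_LockMutex / SDL_UnlockMutex (unlocks at end of scope).
        std::lock_guard<std::mutex> lock(shared.mutex);
        shared.value = result;
        shared.ready = true;
    }
    shared.cond.notify_one();  // role of SDL_CondSignal
}

// Starts a worker, waits for its result, then joins the thread.
int run_in_background(int input) {
    SharedResult shared;
    std::thread t(worker, std::ref(shared), input);  // role of SDL_CreateThread
    int value = 0;
    {
        std::unique_lock<std::mutex> lock(shared.mutex);
        // Role of SDL_CondWait: releases the mutex while sleeping, and the
        // predicate guards against waking up before the result is ready.
        shared.cond.wait(lock, [&shared] { return shared.ready; });
        value = shared.value;
    }
    t.join();  // role of SDL_WaitThread
    return value;
}
```

The waiting side always re-checks `ready` under the mutex, so the result is seen correctly whether the worker finishes before or after the wait begins.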

SDL video display logic

SDL video display complete code

The following code is based on Lei Xiaohua's example code. It reads a raw YUV file directly and displays it; there is no demuxing or video decoding here, since those operations were described in detail in the previous article.

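    #include <stdio.h>
    #include <atomic>

    extern "C" {
    #include "SDL.h"
    }

    // Bits per pixel for YUV 4:2:0: 8 bits of Y plus 4 bits of shared chroma
    const int bpp = 12;

    // Initial window size
    int screen_w = 500, screen_h = 500;
    // Size of the frames stored in the input file
    const int pixel_w = 320, pixel_h = 180;

    // Holds exactly one YUV420P frame
    unsigned char buffer[pixel_w * pixel_h * bpp / 8];

    // Refresh event: asks the main loop to draw the next frame
    #define REFRESH_EVENT (SDL_USEREVENT + 1)
    // Break event: tells the main loop that the refresh thread has finished
    #define BREAK_EVENT (SDL_USEREVENT + 2)

    // Set by the main thread to stop the refresh thread
    std::atomic<int> thread_exit(0);

    // Timer thread: pushes a refresh event every 40 ms (about 25 fps)
    int refresh_video(void *opaque) {
        (void)opaque;
        while (thread_exit == 0) {
            SDL_Event event;
            SDL_zero(event);
            event.type = REFRESH_EVENT;
            SDL_PushEvent(&event);
            SDL_Delay(40);
        }
        // Tell the main loop that this thread is done
        SDL_Event event;
        SDL_zero(event);
        event.type = BREAK_EVENT;
        SDL_PushEvent(&event);
        return 0;
    }

    int main(int argc, char *argv[]) {
        if (SDL_Init(SDL_INIT_VIDEO)) {
            printf("Could not initialize SDL - %s\n", SDL_GetError());
            return -1;
        }

        // SDL 2.0 supports multiple windows
        SDL_Window *screen = SDL_CreateWindow("Simplest Video Play SDL2",
            SDL_WINDOWPOS_UNDEFINED, SDL_WINDOWPOS_UNDEFINED,
            screen_w, screen_h, SDL_WINDOW_OPENGL | SDL_WINDOW_RESIZABLE);
        if (!screen) {
            printf("SDL: could not create window - exiting: %s\n", SDL_GetError());
            SDL_Quit();
            return -1;
        }

        SDL_Renderer *sdlRenderer = SDL_CreateRenderer(screen, -1, 0);
        if (!sdlRenderer) {
            printf("SDL: could not create renderer - exiting: %s\n", SDL_GetError());
            SDL_DestroyWindow(screen);
            SDL_Quit();
            return -1;
        }

        // IYUV: Y + U + V (3 planes)
        // YV12: Y + V + U (3 planes)
        Uint32 pixformat = SDL_PIXELFORMAT_IYUV;
        SDL_Texture *sdlTexture = SDL_CreateTexture(sdlRenderer, pixformat,
            SDL_TEXTUREACCESS_STREAMING, pixel_w, pixel_h);

        // The file is only read, so open it read-only in binary mode
        FILE *fp = fopen("test_yuv420p_320x180.yuv", "rb");
        if (fp == NULL) {
            printf("cannot open this file\n");
            SDL_DestroyTexture(sdlTexture);
            SDL_DestroyRenderer(sdlRenderer);
            SDL_DestroyWindow(screen);
            SDL_Quit();
            return -1;
        }

        const size_t frame_size = sizeof(buffer);
        SDL_Rect sdlRect;
        SDL_Thread *refresh_thread = SDL_CreateThread(refresh_video, "refresh_video", NULL);
        SDL_Event event;

        while (1) {
            // Block until the next event arrives
            SDL_WaitEvent(&event);
            if (event.type == REFRESH_EVENT) {
                if (fread(buffer, 1, frame_size, fp) != frame_size) {
                    // End of file: rewind and loop the video
                    fseek(fp, 0, SEEK_SET);
                    if (fread(buffer, 1, frame_size, fp) != frame_size) {
                        continue;  // file holds less than one frame; skip drawing
                    }
                }

                // The pitch is the byte width of one row of the Y plane
                SDL_UpdateTexture(sdlTexture, NULL, buffer, pixel_w);

                // Scale the frame to the current window size
                sdlRect.x = 0;
                sdlRect.y = 0;
                sdlRect.w = screen_w;
                sdlRect.h = screen_h;

                SDL_RenderClear(sdlRenderer);
                SDL_RenderCopy(sdlRenderer, sdlTexture, NULL, &sdlRect);
                SDL_RenderPresent(sdlRenderer);
            } else if (event.type == SDL_WINDOWEVENT) {
                // The window may have been resized: fetch the new size
                SDL_GetWindowSize(screen, &screen_w, &screen_h);
            } else if (event.type == SDL_QUIT) {
                // Ask the refresh thread to stop; it answers with BREAK_EVENT
                thread_exit = 1;
            } else if (event.type == BREAK_EVENT) {
                break;
            }
        }

        // Release resources in reverse order of creation
        SDL_WaitThread(refresh_thread, NULL);
        fclose(fp);
        SDL_DestroyTexture(sdlTexture);
        SDL_DestroyRenderer(sdlRenderer);
        SDL_DestroyWindow(screen);
        SDL_Quit();
        return 0;
    }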
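The buffer size `pixel_w*pixel_h*bpp/8` and the pitch `pixel_w` passed to SDL_UpdateTexture both follow from the planar YUV 4:2:0 layout: a full-resolution Y plane followed by U and V planes at half the width and half the height. The sketch below works through that arithmetic; `Yuv420Layout` and `yuv420_layout` are hypothetical helper names introduced only for illustration, and even frame dimensions are assumed.

```cpp
#include <cassert>
#include <cstddef>

// Byte layout of one planar YUV 4:2:0 frame in IYUV order (Y, then U, then V).
struct Yuv420Layout {
    std::size_t y_size;       // bytes in the Y plane
    std::size_t chroma_size;  // bytes in each of the U and V planes
    std::size_t u_offset;     // where the U plane starts in the frame buffer
    std::size_t v_offset;     // where the V plane starts in the frame buffer
    std::size_t frame_size;   // total bytes per frame
    int y_pitch;              // bytes per row of the Y plane
};

// Assumes even width and height, so the chroma planes are exactly w/2 x h/2.
Yuv420Layout yuv420_layout(int width, int height) {
    assert(width > 0 && height > 0);
    assert(width % 2 == 0 && height % 2 == 0);

    Yuv420Layout layout;
    layout.y_size = static_cast<std::size_t>(width) * static_cast<std::size_t>(height);
    layout.chroma_size = layout.y_size / 4;  // (w/2) * (h/2)
    layout.u_offset = layout.y_size;
    layout.v_offset = layout.y_size + layout.chroma_size;
    layout.frame_size = layout.y_size + 2 * layout.chroma_size;  // w * h * 12 / 8
    layout.y_pitch = width;  // one byte per luma sample
    return layout;
}
```

For the 320x180 test file, this gives a 57600-byte Y plane, two 14400-byte chroma planes, and 86400 bytes per frame, which matches the size of `buffer` in the program above.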

Summary: the previous article explained how to demux and decode a container-format video with FFmpeg into raw YUV or RGB data. This article showed how to play a raw YUV video file with SDL. How can the two be connected to play, for example, an .avi file? A simple approach is to store the decoded pixel data in a queue and have the SDL side read frames from that queue for display.
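The queue mentioned in the summary could look roughly like the bounded, thread-safe frame queue below. This is a sketch under assumptions rather than the article's implementation: `Frame` and `FrameQueue` are hypothetical names, and the C++ standard library's mutex and condition variable stand in for the SDL equivalents. A decoding thread would call `push` and the display loop would call `pop`.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <mutex>
#include <utility>
#include <vector>

// One decoded frame as raw bytes (for example, a YUV420P buffer).
using Frame = std::vector<std::uint8_t>;

// Bounded FIFO shared between a decoding thread and the display thread.
class FrameQueue {
public:
    explicit FrameQueue(std::size_t capacity) : capacity_(capacity) {
        assert(capacity > 0);
    }

    // Called by the decoder: blocks while the queue is full, so decoding
    // cannot run arbitrarily far ahead of playback.
    void push(Frame frame) {
        std::unique_lock<std::mutex> lock(mutex_);
        not_full_.wait(lock, [this] { return frames_.size() < capacity_; });
        frames_.push_back(std::move(frame));
        not_empty_.notify_one();
    }

    // Called by the display side: blocks while the queue is empty.
    Frame pop() {
        std::unique_lock<std::mutex> lock(mutex_);
        not_empty_.wait(lock, [this] { return !frames_.empty(); });
        Frame frame = std::move(frames_.front());
        frames_.pop_front();
        not_full_.notify_one();
        return frame;
    }

    std::size_t size() {
        std::lock_guard<std::mutex> lock(mutex_);
        return frames_.size();
    }

private:
    std::size_t capacity_;
    std::deque<Frame> frames_;
    std::mutex mutex_;
    std::condition_variable not_empty_;
    std::condition_variable not_full_;
};
```

Bounding the queue is a design choice: raw frames are large, so a small capacity keeps memory use predictable while still absorbing short variations in decoding time.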