Conversion between VOC dataset (xml format) and COCO dataset (json format)

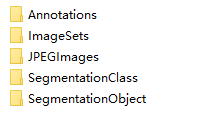

Let's first look at the directory structure of the VOC and COCO datasets.

Taking the VOC2012 dataset as an example, it contains five folders:

The Annotations folder holds one XML file per image. For example, "2007_000027.xml" stores the annotation of the image 2007_000027.jpg. Opening it in a text editor such as Notepad shows that the data is in XML format.

The ImageSets folder stores the txt files that define the official training/validation split. We mainly use train.txt and val.txt under "ImageSets/Main/": train.txt lists the names of the images in the official training set, and val.txt lists the names of the validation set images.

The other folder that needs attention is JPEGImages, which stores the original images under the names listed above. The remaining two folders (SegmentationClass and SegmentationObject) hold segmentation labels and need no special attention here.

Next, let's look at the information stored in a VOC XML file.

```xml
<annotation>
    <folder>Folder directory</folder>
    <filename>Picture name.jpg</filename>
    <path>path_to\at002eg001.jpg</path>
    <source>
        <database>Unknown</database>
    </source>
    <size>
        <width>550</width>
        <height>518</height>
        <depth>3</depth>
    </size>
    <segmented>0</segmented>
    <object>
        <name>Apple</name>
        <pose>Unspecified</pose>
        <truncated>0</truncated>
        <difficult>0</difficult>
        <bndbox>
            <xmin>292</xmin>
            <ymin>218</ymin>
            <xmax>410</xmax>
            <ymax>331</ymax>
        </bndbox>
    </object>
    <object>
        ...
    </object>
</annotation>
```

An XML file therefore contains the following information:

- folder: the folder containing the image
- filename: the image file name
- path: the path of the image
- source: where the data comes from
- size: the image width, height and depth (number of channels)
- segmented: whether the image has segmentation labels, used for image segmentation. This article covers only object detection, using bounding boxes.
- object: one XML file can contain multiple objects. Each object is one box and consists of:
  - name: the category of the object in the box, such as Apple
  - bndbox: the coordinates of the top-left corner (xmin, ymin) and the bottom-right corner (xmax, ymax)
  - truncated: whether the object is truncated, i.e. the box does not cover its full extent
  - difficult: whether the object is difficult to detect
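Python's standard-library `xml.etree.ElementTree` is enough to read these fields programmatically. Below is a minimal sketch. The function name `parse_voc_xml` and the inline sample are illustrative and do not come from any library. The function returns the image file name, the size, and one dict per object. Coordinates are read with `float()` so that files storing decimal values also parse.

```python
import xml.etree.ElementTree as ET

def parse_voc_xml(xml_text):
    """Return (filename, (width, height, depth), objects) for one VOC annotation."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    dims = tuple(int(size.findtext(tag)) for tag in ("width", "height", "depth"))
    objects = []
    for obj in root.findall("object"):  # one <object> per box
        box = obj.find("bndbox")
        objects.append({
            "name": obj.findtext("name"),
            "difficult": int(obj.findtext("difficult", default="0")),
            "truncated": int(obj.findtext("truncated", default="0")),
            "bbox": [float(box.findtext(k)) for k in ("xmin", "ymin", "xmax", "ymax")],
        })
    return root.findtext("filename"), dims, objects

# Hypothetical sample based on the example above
sample = """<annotation>
  <filename>at002eg001.jpg</filename>
  <size><width>550</width><height>518</height><depth>3</depth></size>
  <object>
    <name>Apple</name><pose>Unspecified</pose>
    <truncated>0</truncated><difficult>0</difficult>
    <bndbox><xmin>292</xmin><ymin>218</ymin><xmax>410</xmax><ymax>331</ymax></bndbox>
  </object>
</annotation>"""

print(parse_voc_xml(sample))
```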

Unlike VOC, where each image has its own XML file, COCO writes all images and their box annotations into a single JSON file per split. A typical COCO directory looks like this:

```
coco
|______annotations   # Store label information
|      |__train.json
|      |__val.json
|      |__test.json
|______trainset      # Store training set images
|______valset        # Store validation set images
|______testset       # Store test set images
```

A standard COCO JSON file contains the following information:

```
{
    "info": info,
    "licenses": [license],
    "images": [image],
    "annotations": [annotation],
    "categories": [category]
}
```

The overall structure shows that the value of the info key is a dictionary. The values of the four keys licenses, images, annotations and categories are lists, and each element of these lists is again a dictionary.

Taking len() of the images, annotations and categories lists gives:

(1) number of elements in images = number of images in the training set (or test set);

(2) number of elements in annotations = number of bounding boxes in the training set (or test set);

(3) number of elements in categories = number of categories.
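Once the JSON is loaded, plain Python can check these counts. Below is a minimal sketch. `summarize_coco` is a hypothetical helper, and the tiny dict stands in for a real annotation file. The sketch also uses `collections.Counter` to count boxes per image. An image without any boxes does not appear in that per-image count.

```python
from collections import Counter

def summarize_coco(coco):
    """Return the list lengths and the number of boxes per image of a loaded COCO dict."""
    counts = {key: len(coco.get(key, [])) for key in ("images", "annotations", "categories")}
    boxes_per_image = Counter(ann["image_id"] for ann in coco.get("annotations", []))
    return counts, dict(boxes_per_image)

# For a real file (hypothetical path):
#   import json
#   with open("annotations/train.json", encoding="utf-8") as f:
#       coco = json.load(f)
coco = {
    "images": [{"id": 0, "file_name": "0.jpg", "width": 512, "height": 512},
               {"id": 1, "file_name": "1.jpg", "width": 512, "height": 512}],
    "annotations": [
        {"id": 0, "image_id": 0, "category_id": 2, "bbox": [200.0, 416.0, 64.0, 64.0]},
        {"id": 1, "image_id": 0, "category_id": 1, "bbox": [10.0, 20.0, 30.0, 40.0]},
        {"id": 2, "image_id": 1, "category_id": 1, "bbox": [5.0, 5.0, 50.0, 50.0]},
    ],
    "categories": [{"id": 1, "name": "rectangle"}, {"id": 2, "name": "circle"}],
}
counts, per_image = summarize_coco(coco)
print(counts)     # {'images': 2, 'annotations': 3, 'categories': 2}
print(per_image)  # {0: 2, 1: 1}
```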

Next, let's look at the corresponding contents of each key:

(1) info

```
info{
    "year": int,               # year
    "version": str,            # dataset version
    "description": str,        # detailed description
    "contributor": str,        # author
    "url": str,                # dataset URL
    "date_created": datetime,  # creation date
}
```

(2) images

```
"images": [
    {
        "id": 0,                                        # int: image id (starting from 0 in this example)
        "file_name": "0.jpg",                           # str: file name
        "width": 512,                                   # int: image width
        "height": 512,                                  # int: image height
        "date_captured": "2020-04-14 01:45:07.508146",  # datetime: capture date
        "license": 1,                                   # int: id of the license this image follows
        "coco_url": "",                                 # str: COCO image URL
        "flickr_url": ""                                # str: Flickr image URL
    }
]
```

(3) licenses

```
"licenses": [
    {
        "id": 1,       # int: license id; an image with "license": 1 follows this license
        "name": null,  # str: license name
        "url": null    # str: license URL
    }
]
```

(4) annotations

```
"annotations": [
    {
        "id": 0,                              # int: id of this annotated object, unique in the file
        "image_id": 0,                        # int: id of the image containing the object
        "category_id": 2,                     # int: category id of the object
        "iscrowd": 0,                         # 0 or 1: 1 marks a crowd region (several objects labeled as one); default 0
        "area": 4095.9999999999986,           # float: area of the object (here about 64 * 64 = 4096)
        "bbox": [200.0, 416.0, 64.0, 64.0],   # [x, y, width, height] of the bounding box
        "segmentation": [[200.0, 416.0, 264.0, 416.0, 264.0, 480.0, 200.0, 480.0]]  # polygon outline
    }
]
```

In "bbox" ([x, y, width, height]), x and y are the coordinates of the top-left corner of the object's box, and width and height are the size of the box.

In "segmentation", this rectangular object is described by [x1, y1, x2, y2, x3, y3, x4, y4]: the top-left corner followed by the other three corners in clockwise order, i.e. [top-left x, top-left y, top-right x, top-right y, bottom-right x, bottom-right y, bottom-left x, bottom-left y].
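Converting between COCO's `[x, y, width, height]` and VOC's corner form `[xmin, ymin, xmax, ymax]` is simple arithmetic. Below is a sketch with hypothetical helper names, checked against the example annotation above. It ignores the 1-pixel offset between VOC's 1-based and COCO's 0-based pixel coordinates.

```python
def xywh_to_xyxy(bbox):
    """COCO [x, y, width, height] -> VOC-style [xmin, ymin, xmax, ymax]."""
    x, y, w, h = bbox
    return [x, y, x + w, y + h]

def xyxy_to_xywh(box):
    """VOC-style [xmin, ymin, xmax, ymax] -> COCO [x, y, width, height]."""
    xmin, ymin, xmax, ymax = box
    return [xmin, ymin, xmax - xmin, ymax - ymin]

def bbox_to_polygon(bbox):
    """Rectangle as a COCO polygon, clockwise from the top-left (image y axis points down)."""
    xmin, ymin, xmax, ymax = xywh_to_xyxy(bbox)
    return [[xmin, ymin, xmax, ymin, xmax, ymax, xmin, ymax]]

bbox = [200.0, 416.0, 64.0, 64.0]
print(xywh_to_xyxy(bbox))     # [200.0, 416.0, 264.0, 480.0]
print(bbox_to_polygon(bbox))  # [[200.0, 416.0, 264.0, 416.0, 264.0, 480.0, 200.0, 480.0]]
print(bbox[2] * bbox[3])      # 4096.0
```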

(5) categories

```
"categories": [
    {
        "id": 1,                 # int: category id
        "name": "rectangle",     # str: category name
        "supercategory": "None"  # str: parent category, e.g. trucks and cars both belong to motor vehicles
    },
    {
        "id": 2,
        "name": "circle",
        "supercategory": "None"
    }
]
```
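Category ids are not guaranteed to be contiguous, sorted, or to start at 0. In the official COCO 2017 files, for example, 80 categories use ids between 1 and 90. Mapping ids to names with a dictionary is therefore safer than indexing a list by position. Below is a minimal sketch that uses a made-up two-entry list with a gap in the ids:

```python
categories = [
    {"id": 1, "name": "rectangle", "supercategory": "None"},
    {"id": 3, "name": "circle", "supercategory": "None"},  # hypothetical gap: no id 2
]

id_to_name = {c["id"]: c["name"] for c in categories}
name_to_id = {c["name"]: c["id"] for c in categories}

print(id_to_name[3])            # circle
print(name_to_id["rectangle"])  # 1
# Indexing by position would go wrong here: categories[3] raises IndexError,
# and categories[1]["name"] is 'circle', not the name of category id 1.
```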

1. Convert VOC XML annotations into COCO JSON format

GitHub open source project address

Before converting, save the names of all the XML files you want to convert into a list file, xml_list.txt. If you labeled the VOC dataset yourself, make sure no class name was mistyped during labeling, because every distinct spelling becomes a separate category.

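```python
# create_xml_list.py
# Write the names of all XML files in a folder into xml_list.txt, one per line.
import os

xml_dir = 'C:/Users/user/Desktop/train'           # folder containing the XML files
list_file = 'C:/Users/user/Desktop/xml_list.txt'  # output list file

# 'w' overwrites an old list; 'a' would append duplicates on every re-run
with open(list_file, 'w') as f:
    for name in sorted(os.listdir(xml_dir)):
        if name.lower().endswith('.xml'):
            f.write(name + '\n')
```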
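You may want to convert only the official training split rather than every XML file in a folder. In that case, build the list from ImageSets/Main/train.txt. The sketch below assumes that each line of the split file starts with an image name without extension, as in train.txt. `write_xml_list` and the paths in the comment are hypothetical.

```python
from pathlib import Path

def write_xml_list(split_file, xml_dir, out_file):
    """Write '<name>.xml' for each image listed in a VOC split file.

    Names whose XML file is missing from xml_dir are skipped.
    Returns the number of names written.
    """
    xml_dir = Path(xml_dir)
    names = []
    for line in Path(split_file).read_text(encoding="utf-8").splitlines():
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        xml_name = fields[0] + ".xml"  # first field: image name without extension
        if (xml_dir / xml_name).is_file():
            names.append(xml_name)
    # The list file is rewritten (not appended to) on every run
    Path(out_file).write_text("".join(n + "\n" for n in names), encoding="utf-8")
    return len(names)

# Hypothetical VOC2012 paths:
# write_xml_list("VOC2012/ImageSets/Main/train.txt", "VOC2012/Annotations", "xml_list.txt")
```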

Run `python voc2coco.py <path of xml_list.txt> <directory containing the XML files> <path of the output JSON file>` to convert the XML files into a single JSON file.

```python
# voc2coco.py
# Uses only the Python standard library (xml.etree.ElementTree); no extra install needed.
import sys
import os
import json
import xml.etree.ElementTree as ET

START_BOUNDING_BOX_ID = 1
PRE_DEFINE_CATEGORIES = {}
# If necessary, pre-define category and its id
# PRE_DEFINE_CATEGORIES = {"aeroplane": 1, "bicycle": 2, "bird": 3, "boat": 4,
#                          "bottle": 5, "bus": 6, "car": 7, "cat": 8, "chair": 9,
#                          "cow": 10, "diningtable": 11, "dog": 12, "horse": 13,
#                          "motorbike": 14, "person": 15, "pottedplant": 16,
#                          "sheep": 17, "sofa": 18, "train": 19, "tvmonitor": 20}


def get(root, name):
    """Return all child elements of root with the given tag."""
    return root.findall(name)


def get_and_check(root, name, length):
    """Like get(), but raise if the tag is missing or occurs an unexpected number of times.
    With length == 1 the single element itself is returned."""
    vars = root.findall(name)
    if len(vars) == 0:
        raise NotImplementedError('Can not find %s in %s.' % (name, root.tag))
    if length > 0 and len(vars) != length:
        raise NotImplementedError('The size of %s is supposed to be %d, but is %d.'
                                  % (name, length, len(vars)))
    if length == 1:
        vars = vars[0]
    return vars


def get_filename_as_int(filename):
    """Use the numeric file stem as the COCO image id, e.g. '100.jpg' -> 100."""
    try:
        filename = os.path.splitext(filename)[0]
        return int(filename)
    except ValueError:
        raise NotImplementedError('Filename %s is supposed to be an integer.' % (filename))


def convert(xml_list, xml_dir, json_file):
    json_dict = {"images": [], "type": "instances", "annotations": [], "categories": []}
    categories = dict(PRE_DEFINE_CATEGORIES)  # copy, so the module-level dict is not modified
    bnd_id = START_BOUNDING_BOX_ID
    with open(xml_list, 'r') as list_fp:
        for line in list_fp:
            line = line.strip()
            if not line:
                continue  # skip blank lines
            print("Processing %s" % (line))
            xml_f = os.path.join(xml_dir, line)
            root = ET.parse(xml_f).getroot()
            # Take the image name from <path> if present, otherwise from <filename>
            path = get(root, 'path')
            if len(path) == 1:
                filename = os.path.basename(path[0].text)
            elif len(path) == 0:
                filename = get_and_check(root, 'filename', 1).text
            else:
                raise NotImplementedError('%d paths found in %s' % (len(path), line))
            ## The file stem must be an integer (e.g. 100.jpg); names like 2007_000027.jpg raise an error
            image_id = get_filename_as_int(filename)
            size = get_and_check(root, 'size', 1)
            width = int(get_and_check(size, 'width', 1).text)
            height = int(get_and_check(size, 'height', 1).text)
            json_dict['images'].append({'file_name': filename, 'height': height,
                                        'width': width, 'id': image_id})
            ## Currently we do not support segmentation
            # segmented = get_and_check(root, 'segmented', 1).text
            # assert segmented == '0'
            for obj in get(root, 'object'):
                category = get_and_check(obj, 'name', 1).text
                if category not in categories:
                    # New ids continue after the largest existing id (so they start at 1)
                    categories[category] = max(categories.values(), default=0) + 1
                category_id = categories[category]
                bndbox = get_and_check(obj, 'bndbox', 1)
                # VOC pixel coordinates are 1-based, COCO's are 0-based: shift xmin/ymin by 1
                xmin = int(float(get_and_check(bndbox, 'xmin', 1).text)) - 1
                ymin = int(float(get_and_check(bndbox, 'ymin', 1).text)) - 1
                xmax = int(float(get_and_check(bndbox, 'xmax', 1).text))
                ymax = int(float(get_and_check(bndbox, 'ymax', 1).text))
                assert xmax > xmin
                assert ymax > ymin
                o_width = abs(xmax - xmin)
                o_height = abs(ymax - ymin)
                ann = {'area': o_width * o_height, 'iscrowd': 0, 'image_id': image_id,
                       'bbox': [xmin, ymin, o_width, o_height],
                       'category_id': category_id, 'id': bnd_id, 'ignore': 0,
                       'segmentation': []}
                json_dict['annotations'].append(ann)
                bnd_id = bnd_id + 1
    for cate, cid in categories.items():
        json_dict['categories'].append({'supercategory': 'none', 'id': cid, 'name': cate})
    with open(json_file, 'w') as json_fp:
        json.dump(json_dict, json_fp)


if __name__ == '__main__':
    if len(sys.argv) != 4:
        print('3 arguments are needed.')
        print('Usage: %s XML_LIST.txt XML_DIR OUTPUT_JSON.json' % (sys.argv[0]))
        sys.exit(1)
    convert(sys.argv[1], sys.argv[2], sys.argv[3])
```
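`get_filename_as_int` requires numeric file names such as 100.jpg. VOC's own names, like 2007_000027, are not integers and make it raise an error. The sketch below shows one workaround. It assumes the image ids do not need to match the file names and numbers the files in the order they appear in xml_list.txt. `sequential_image_ids` is a hypothetical helper, not part of the original script.

```python
def sequential_image_ids(xml_list_path, start=1):
    """Give every file listed in xml_list.txt a sequential integer image id.

    Works for non-numeric names such as '2007_000027.xml'.
    A name listed twice keeps the id of its first occurrence.
    """
    ids = {}
    with open(xml_list_path, encoding="utf-8") as f:
        for line in f:
            name = line.strip()
            if name and name not in ids:
                ids[name] = start + len(ids)
    return ids
```

Inside `convert()`, you would build the mapping once with `ids = sequential_image_ids(xml_list)`. Then replace `image_id = get_filename_as_int(filename)` with `image_id = ids[line]`, so that images and annotations share the same id.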

2. Convert COCO JSON into VOC XML format

To convert a COCO-format JSON file into VOC-format XML files, set `anno` to the path of the JSON file and `xml_dir` to the directory where the converted XML files should be saved. Then run the following code.

```python
# coco2voc.py
# pip install pycocotools tqdm
import os
from xml.sax.saxutils import escape

from tqdm import tqdm
from pycocotools.coco import COCO

# json file path and directory for storing the xml files
anno = 'C:/Users/user/Desktop/val/instances_val2017.json'
xml_dir = 'C:/Users/user/Desktop/val/xml/'


def convert(anno, xml_dir):
    os.makedirs(xml_dir, exist_ok=True)  # create the output directory if needed
    coco = COCO(anno)  # read and index the json file
    # Look category names up by id: ids need not be contiguous or in sorted order
    cat_names = {cat['id']: cat['name'] for cat in coco.loadCats(coco.getCatIds())}
    print('categories = ', list(cat_names.values()))

    # tqdm shows a progress bar for this long loop over all images
    for img in tqdm(coco.loadImgs(coco.getImgIds())):
        file_name = img['file_name']
        xml_content = ['<?xml version="1.0" encoding="utf-8"?>',
                       '<annotation>',
                       '    <filename>' + escape(file_name) + '</filename>',
                       '    <size>',
                       '        <width>' + str(img['width']) + '</width>',
                       '        <height>' + str(img['height']) + '</height>',
                       '        <depth>3</depth>',
                       '    </size>']
        # All annotations (boxes) that belong to this image
        for ann in coco.loadAnns(coco.getAnnIds(imgIds=img['id'])):
            x, y, w, h = ann['bbox']  # COCO: [x, y, width, height]
            xml_content += ['    <object>',
                            '        <name>' + escape(cat_names[ann['category_id']]) + '</name>',
                            '        <truncated>0</truncated>',
                            '        <difficult>0</difficult>',
                            '        <bndbox>',
                            # Written as-is (0-based); add 1 to xmin/ymin for VOC's 1-based convention
                            '            <xmin>' + str(int(x)) + '</xmin>',
                            '            <ymin>' + str(int(y)) + '</ymin>',
                            '            <xmax>' + str(int(x + w)) + '</xmax>',
                            '            <ymax>' + str(int(y + h)) + '</ymax>',
                            '        </bndbox>',
                            '    </object>']
        xml_content.append('</annotation>')

        # Same base name as the image, e.g. 0.jpg -> 0.xml
        stem = os.path.splitext(os.path.basename(file_name))[0]
        xml_path = os.path.join(xml_dir, stem + '.xml')
        with open(xml_path, 'w', encoding='utf-8') as f:
            f.write('\n'.join(xml_content))


if __name__ == '__main__':
    convert(anno, xml_dir)
```
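Building XML by string concatenation means characters such as `&` and `<` in file or category names must be escaped by hand. As an alternative, the standard library's `xml.etree.ElementTree` can build the same structure and escapes text automatically. This is not the method used above. Below is a sketch. `build_voc_xml` is a hypothetical helper, the class name "R&D sign" is made up, and `ET.indent` needs Python 3.9 or newer.

```python
import xml.etree.ElementTree as ET

def build_voc_xml(file_name, width, height, objects, depth=3):
    """Build a VOC <annotation> element.

    objects: iterable of (class_name, [x, y, w, h]) with COCO-style boxes.
    """
    root = ET.Element("annotation")
    ET.SubElement(root, "filename").text = file_name
    size = ET.SubElement(root, "size")
    for tag, value in (("width", width), ("height", height), ("depth", depth)):
        ET.SubElement(size, tag).text = str(value)
    for name, (x, y, w, h) in objects:
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = name  # escaped automatically on output
        ET.SubElement(obj, "truncated").text = "0"
        ET.SubElement(obj, "difficult").text = "0"
        box = ET.SubElement(obj, "bndbox")
        corners = (("xmin", x), ("ymin", y), ("xmax", x + w), ("ymax", y + h))
        for tag, value in corners:
            ET.SubElement(box, tag).text = str(int(value))
    ET.indent(root)  # pretty-print in place (Python 3.9+)
    return root

root = build_voc_xml("0.jpg", 512, 512, [("R&D sign", [200.0, 416.0, 64.0, 64.0])])
print(ET.tostring(root, encoding="unicode"))
```

To save the result with an XML declaration, `ET.ElementTree(root).write("0.xml", encoding="utf-8", xml_declaration=True)` writes the tree straight to a file.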

3. Convert txt label files into Pascal VOC XML format

For example, the Billboard dataset downloaded from Open Images V5 has the following directory structure:

```
Billboard
|______images        # Store images
|      |__train
|      |     |__train.jpg
|      |__val
|            |__val.jpg
|______labels        # Store label information
       |__train
       |     |__train.txt
       |__val
             |__val.txt
```

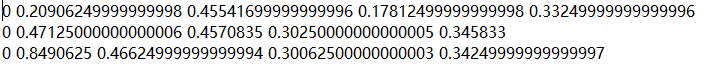

Each image has a txt file holding the coordinates of its targets, one object per line, as shown in the figure above. The code below reads each line as a class index followed by xmin, ymin, xmax and ymax. The leading 0 is the class index: this dataset has only one category, billboard.
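A small parser can turn each label line into a named box. The sketch below assumes the layout that the conversion code relies on: a class index, then xmin, ymin, xmax and ymax in pixels. It accepts either spaces or commas as separators. It does not apply if your txt files use a different layout, such as normalized center coordinates. `parse_label_line` and the sample lines are hypothetical.

```python
def parse_label_line(line, class_names):
    """Parse 'class_index xmin ymin xmax ymax' (space- or comma-separated).

    Returns (class_name, xmin, ymin, xmax, ymax), or None for a blank line.
    """
    parts = line.replace(",", " ").split()
    if not parts:
        return None
    class_index = int(parts[0])
    xmin, ymin, xmax, ymax = (float(v) for v in parts[1:5])
    return class_names[class_index], xmin, ymin, xmax, ymax

names = ["billboard"]
print(parse_label_line("0 12 30 250 180", names))  # ('billboard', 12.0, 30.0, 250.0, 180.0)
print(parse_label_line("0,12,30,250,180", names))  # ('billboard', 12.0, 30.0, 250.0, 180.0)
print(parse_label_line("   ", names))              # None
```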

The code for converting txt file into XML format of Pascal VOC is as follows:

```python
#! /usr/bin/python
# -*- coding:UTF-8 -*-
# Convert txt labels (one "class xmin ymin xmax ymax" line per object) into Pascal VOC xml files.
import os
import glob
from PIL import Image

# Image directory
src_img_dir = "F:/Billboard/dataset/images/val"
# Directory of the ground-truth txt files (one per image, same base name)
src_txt_dir = "F:/Billboard/dataset/labels/val"
# Output directory for the xml files
src_xml_dir = "F:/Billboard/dataset/xml/val"
os.makedirs(src_xml_dir, exist_ok=True)

name = ['billboard']  # class index -> class name

img_Lists = glob.glob(src_img_dir + '/*.jpg')
# e.g. .../100.jpg -> 100
img_names = [os.path.splitext(os.path.basename(item))[0] for item in img_Lists]

for img in img_names:
    with Image.open(src_img_dir + '/' + img + '.jpg') as im:
        width, height = im.size

    # open the corresponding txt file
    with open(src_txt_dir + '/' + img + '.txt') as f:
        gt = f.read().splitlines()
    # gt = open(src_txt_dir + '/gt_' + img + '.txt').read().splitlines()

    # write the xml file
    with open(src_xml_dir + '/' + img + '.xml', 'w') as xml_file:
        xml_file.write('<annotation>\n')
        xml_file.write('    <folder>VOC2007</folder>\n')
        xml_file.write('    <filename>' + str(img) + '.jpg' + '</filename>\n')
        xml_file.write('    <size>\n')
        xml_file.write('        <width>' + str(width) + '</width>\n')
        xml_file.write('        <height>' + str(height) + '</height>\n')
        xml_file.write('        <depth>3</depth>\n')
        xml_file.write('    </size>\n')

        # write one <object> per label line
        for img_each_label in gt:
            # Split on whitespace; if the txt is comma-separated, use img_each_label.split(',')
            spt = img_each_label.split()
            if not spt:
                continue  # skip blank lines
            xml_file.write('    <object>\n')
            xml_file.write('        <name>' + str(name[int(spt[0])]) + '</name>\n')
            xml_file.write('        <pose>Unspecified</pose>\n')
            xml_file.write('        <truncated>0</truncated>\n')
            xml_file.write('        <difficult>0</difficult>\n')
            xml_file.write('        <bndbox>\n')
            xml_file.write('            <xmin>' + str(spt[1]) + '</xmin>\n')
            xml_file.write('            <ymin>' + str(spt[2]) + '</ymin>\n')
            xml_file.write('            <xmax>' + str(spt[3]) + '</xmax>\n')
            xml_file.write('            <ymax>' + str(spt[4]) + '</ymax>\n')
            xml_file.write('        </bndbox>\n')
            xml_file.write('    </object>\n')

        xml_file.write('</annotation>')
```
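Labels from other sources may contain boxes that extend past the image border or have zero width, and `voc2coco.py` above asserts `xmax > xmin` and `ymax > ymin`. Below is a hedged sketch of a check you could apply to each box before writing it. `clip_box` is hypothetical. It rounds to integer pixels, clips the box to [0, width] x [0, height], and returns None for boxes that become empty.

```python
def clip_box(xmin, ymin, xmax, ymax, width, height):
    """Round a corner-form box to integers and clip it to the image.

    Returns (xmin, ymin, xmax, ymax), or None if the clipped box is empty.
    """
    xmin, xmax = (min(max(round(v), 0), width) for v in (xmin, xmax))
    ymin, ymax = (min(max(round(v), 0), height) for v in (ymin, ymax))
    if xmax <= xmin or ymax <= ymin:
        return None  # degenerate box: skip it instead of writing it
    return xmin, ymin, xmax, ymax

print(clip_box(-5, 10, 600, 200, 550, 518))  # (0, 10, 550, 200)
print(clip_box(100, 100, 100, 150, 550, 518))  # None
```

In the loop above, for example, you could compute `box = clip_box(*map(float, spt[1:5]), width, height)` and skip the object when `box is None`.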

With the conversions above, we can handle most of the data formats commonly used in object detection. Whether a dataset comes as VOC, COCO or a txt format, we can convert it into the format we need. Making your own dataset is also quite simple; space is limited, so I'll cover it in the next article.