Container Redis services are frequently AOF rewritten

Today, let's look at an online Redis case.

01 background introduction

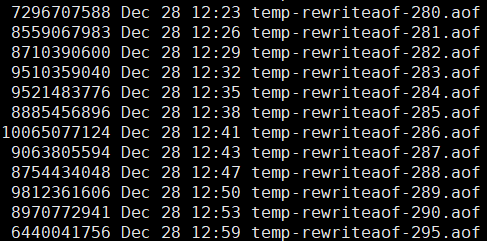

An online redis instance frequently performs aof rewriting. After entering the redis data directory, you can see the following scenario:

We can see:

1. In the data directory of redis, many temp XXX are generated Aof file.

2. The start time is about 12:23.

3. Every time a new aof file is written to about 8 ~ 10 G, switching will occur.

In general, if there are multiple temporary files of aof, it must be that the aof is not completed this time, and then Redis re initiates the aof rewrite operation.

02 troubleshooting process

1. Server level

View the monitoring indicators at the server level of the current Redis.

At about 12:23, it was found that the CPU utilization, load and disk Util values were normal.

The only exception is the memory utilization, which seems to be shaking all the time, but it seems that OOM will not happen. After all, the memory utilization is less than 70%.

2. View the read / write volume and memory usage of the current Redis instance

Viewing the business read / write volume during the problem period, you can find:

The read request is about 1500 and the write request is about 5000

It seems that the amount of reading and writing is also relatively small. It should have nothing to do with reading and writing.

Let's look at the current memory usage:

About 17G.

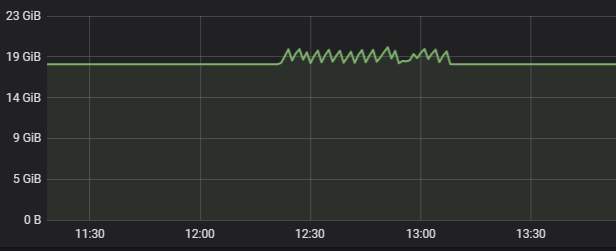

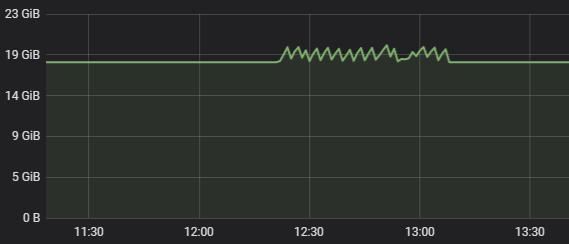

3. View the memory monitoring used by Redis itself

Through the memory usage monitoring of Redis, it can be found that the memory used by Redis has been maintained at about 17G, but there is a pile of sawteeth behind it. The memory increases and then decreases, and the process has been repeated.

4. View Redis server logs

The purpose is to see what Redis is doing during this period. Open the log and intercept the following parts:

24:S 28 Dec 12:23:46.598 * Starting automatic rewriting of AOF on 400% growth 24:S 28 Dec 12:23:47.027 * Background append only file rewriting started by pid 281 24:S 28 Dec 12:26:42.848 # Background AOF rewrite terminated by signal 9 24:S 28 Dec 12:26:42.955 * Starting automatic rewriting of AOF on 401% growth 24:S 28 Dec 12:26:43.361 * Background append only file rewriting started by pid 282 24:S 28 Dec 12:29:13.027 # Background AOF rewrite terminated by signal 9 24:S 28 Dec 12:29:13.133 * Starting automatic rewriting of AOF on 401% growth 24:S 28 Dec 12:29:13.583 * Background append only file rewriting started by pid 283 24:S 28 Dec 12:32:03.068 # Background AOF rewrite terminated by signal 9 24:S 28 Dec 12:32:03.175 * Starting automatic rewriting of AOF on 402% growth 24:S 28 Dec 12:32:03.630 * Background append only file rewriting started by pid 284 24:S 28 Dec 12:35:01.723 # Background AOF rewrite terminated by signal 9 24:S 28 Dec 12:35:01.828 * Starting automatic rewriting of AOF on 403% growth 24:S 28 Dec 12:35:02.256 * Background append only file rewriting started by pid 285 24:S 28 Dec 12:38:11.077 # Background AOF rewrite terminated by signal 9 24:S 28 Dec 12:38:11.185 * Starting automatic rewriting of AOF on 403% growth 24:S 28 Dec 12:38:11.636 * Background append only file rewriting started by pid 286 24:S 28 Dec 12:41:01.469 # Background AOF rewrite terminated by signal 9 24:S 28 Dec 12:41:01.576 * Starting automatic rewriting of AOF on 404% growth 24:S 28 Dec 12:41:02.036 * Background append only file rewriting started by pid 287 24:S 28 Dec 12:43:51.044 # Background AOF rewrite terminated by signal 9 24:S 28 Dec 12:43:51.152 * Starting automatic rewriting of AOF on 404% growth 24:S 28 Dec 12:43:51.584 * Background append only file rewriting started by pid 288 24:S 28 Dec 12:47:04.495 # Background AOF rewrite terminated by signal 9 24:S 28 Dec 12:47:04.599 * Starting automatic rewriting of AOF on 405% growth 24:S 28 Dec 12:47:05.072 * Background append only file rewriting started by pid 289 24:S 28 Dec 12:50:18.393 # Background AOF rewrite terminated by signal 9 24:S 28 Dec 12:50:18.499 * Starting automatic rewriting of AOF on 405% growth 24:S 28 Dec 12:50:19.004 * Background append only file rewriting started by pid 290 24:S 28 Dec 12:53:11.886 # Background AOF rewrite terminated by signal 9

It is not difficult to guess from the log that Redis has reached the threshold of aof rewriting, that is, 400%,

redis > config get auto* 1) "auto-aof-rewrite-percentage" 2) "400"

Then start rewriting, but the rewritten subprocess is killed by the system signal 9. It can be understood that the subprocess is killed - 9.

Here, confirm the conjecture through the system log / var/log/message:

Dec 28 12:23:45 kernel: memory+swap: usage 20740096kB, limit 9007199254740988kB, failcnt 0 Dec 28 12:23:45 kernel: kmem: usage 0kB, limit 9007199254740988kB, failcnt 0 Dec 28 12:23:45 kernel: Memory cgroup stats for /kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod3ae01d1b_0d39_11eb_adc7_1c98ec1b7874.slice/do cker-9c9cd155c58b6f28b9d5771a230ea711d48fdb5fb50c99caa14a1afb474d6b54.scope: cache:28KB rss:20740068KB rss_huge:0KB mapped_file:0KB swap:0KB inactive_anon:0KB active_anon:2 0739924KB inactive_file:12KB active_file:8KB unevictable:0KB Dec 28 12:23:45 kernel: [ pid ] uid tgid total_vm rss nr_ptes swapents oom_score_adj name Dec 28 12:23:45 kernel: [22253] 0 22253 2932 154 10 0 999 startredis.sh Dec 28 12:23:45 kernel: [22302] 0 22302 27565 290 58 0 999 sudo Dec 28 12:23:45 kernel: [22313] 1000 22313 4672591 4659513 9119 0 999 redis-server Dec 28 12:23:45 kernel: [26944] 1000 26944 4719696 4688593 9214 0 999 redis-server Dec 28 12:23:45 kernel: Memory cgroup out of memory: Kill process 26944 (redis-server) score 1905 or sacrifice child Dec 28 12:23:45 kernel: Killed process 26944 (redis-server) total-vm:18878784kB, anon-rss:18753972kB, file-rss:400kB, shmem-rss:0kB

Confirm that the child process of Redis is kill ed due to OOM.

5. Analysis

Then, where exactly is the memory limit set, resulting in the child process being kill ed? maxmemory?

Generally, when the memory occupied by Redis reaches maxmemory, the elimination policy will be triggered. This policy is controlled by maxmemory policy. After viewing, the current Redis configured policies are as follows:

redis > config get maxmemory-policy 1) "maxmemory-policy" 2) "allkeys-lru"

This strategy means that all key s are eliminated according to lru algorithm. Logically, it will not cause the Redis sub process to hang up.

In fact, the answer is in the system log:

Memory cgroup out of memory

Because online Redis runs in K8S cluster in the form of pod, pod itself is a container. The internal of pod is conducive to Cgroup technology, which limits memory.

Query the corresponding limit value through K8S api, as follows:

apiVersion: v1

kind: Pod

metadata:

annotations:

resources/cpu-limit: "1.2076"

resources/cpu-request: "0.6038"

resources/egress-bandwidth-limit: "209715200"

resources/egress-bandwidth-request: "1048576"

resources/memory-limit: "21237858304"

resources/memory-request: "10618929152"

scheduler/policy: normal

scheduler/service: redis

creationTimestamp: "2020-10-13T09:48:21Z"

labels:

port: "22392"

service_type: redis

What is the specific value of 21237858304? Use MySQL to calculate:

select 21237858304/1024/1024/1024; +----------------------------+ | 21237858304/1024/1024/1024 | +----------------------------+ | 19.779296875000 | +----------------------------+ 1 row in set (0.00 sec)

That's about 19.77GB.

Now that everything is clear, let's look at the memory usage diagram to understand:

Originally, Redis used about 17G of memory, but then it kept rising, then falling and cycling. The essential reason is that aof rewriting requires additional memory space. In the process of Redis aof rewriting, the total memory usage reaches the memory limit of Cgroup, resulting in the child process being kill ed, and then the memory decreases. This process is repeated all the time, so a lot of saw teeth are generated, that is, a lot of temp XXX aof file.

03

Summary & & divergence

In case of Redis related problems, the troubleshooting idea can start from the following steps:

1. Server level

First, make an overall observation. The angle here may be relatively high. Mainly observe the common indicators of the server: CPU, load, memory, disk, network bandwidth, TCP connections, etc

2. Redis instance level

After confirming that the server is correct, check the running status of the Redis instance itself

Read / write QPS, memory usage, Maxmemory, aof rewrite strategy, etc

3. Redis log analysis

View some log information of the current Redis at the point of failure through the Redis log

4. System log, monitoring and auxiliary troubleshooting

Here, there will probably be a simple conclusion, and then guess according to this conclusion and find other elements to support the conclusion.

5. Source code analysis

If you can't solve it here, you may need to view it from the source code level.

Divergence:

1. cgroup technology is mentioned in this article. If you don't understand it, you can see the previous article:

docker learning notes of cloud native technology (5)

2. If the memory occupied by Redis reaches the maxmemory configuration of Redis, and rewriting occurs, what happens to Redis? If you are interested, you can test it yourself.