Without further ado, here is the result first.

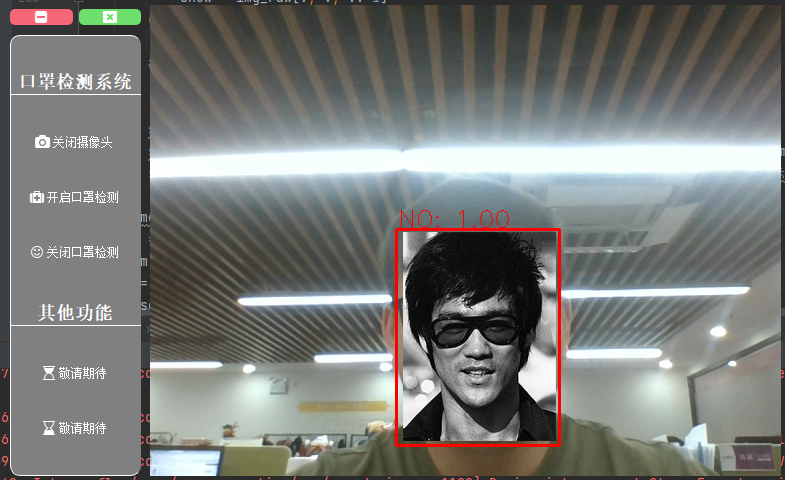

Below is the result without a mask (my face is covered with an idol's picture for now)

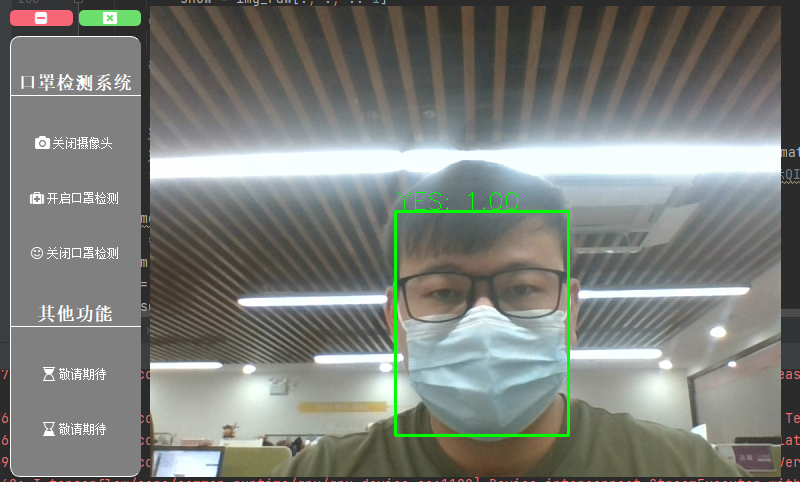

Here is the result with a mask

To describe the result again: this is not a still picture; the frames are read from the camera in real time. First of all, thanks to AIZOOTech's open source project, FaceMaskDetection. I also referred to the following blogs, and I thank each of their authors:

(https://blog.csdn.net/qq_41204464/article/details/104596777)

(https://blog.csdn.net/qq_41204464/article/details/106026650)

(https://www.freesion.com/article/8339842034/)

(https://zmister.com/archives/477.html)

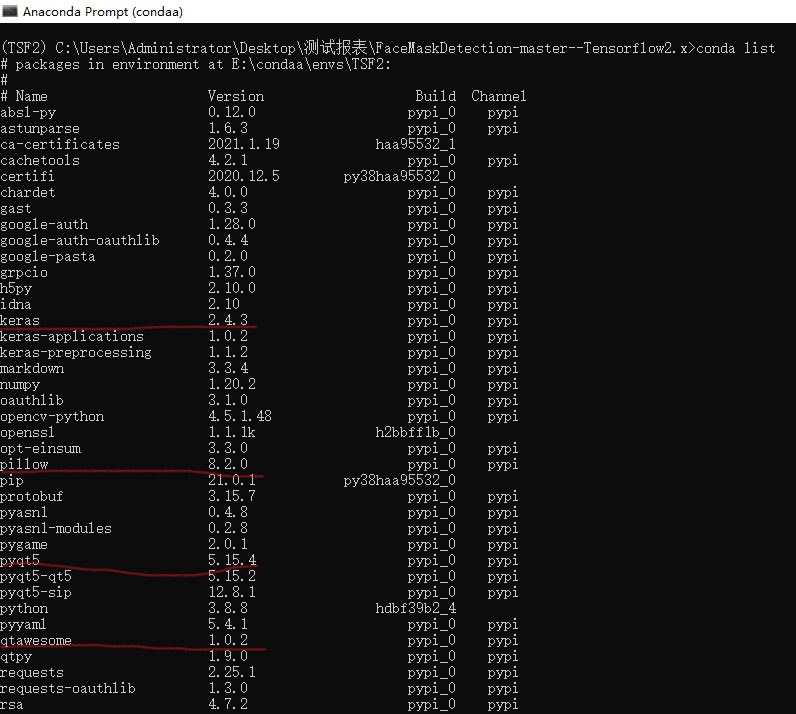

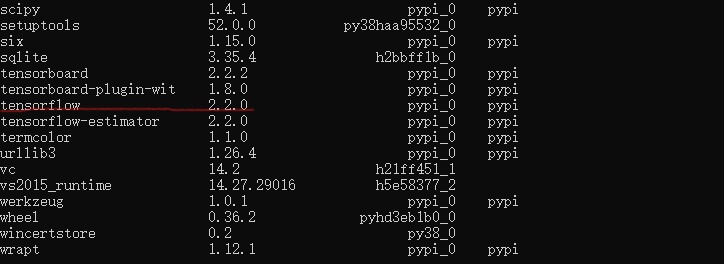

The environment is PyCharm (free version) plus Anaconda. I won't cover installing the editor and the environment; the blog authors mentioned above already explain it, so please read their posts. The screenshot shows the packages installed with pip in my conda environment (the program runs normally with these versions on my own machine, and I have also tried it on a friend's machine). Install the packages shown in the screenshot with pip yourself. Finally, don't forget `conda install tensorflow`. My understanding is that this makes the libraries installed by pip available in the conda environment (please correct me if I am wrong). When you are done, run `conda list` and check whether the versions match my screenshot. If a version does not match, uninstall that package and reinstall it with the version pinned. For example, run `pip uninstall keras` first, and then `pip install keras==2.4.3`.

Note that I installed tensorflow 2.2.0, which pairs with keras 2.4.3. I had keras 2.2 installed earlier, and it raised an error. The other major third-party libraries are underlined in red in the screenshot.
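If reading through the `conda list` output by eye is tedious, a small script can do the comparison. This is only a sketch and not part of the original project: the names `installed_version`, `check_environment` and `EXPECTED` are my own, only the tensorflow and keras versions come from this post, and it assumes Python 3.8 or newer (for `importlib.metadata`).

```python
from importlib.metadata import PackageNotFoundError, version

# Versions named in this post; extend the table with the others from the screenshot.
EXPECTED = {"tensorflow": "2.2.0", "keras": "2.4.3"}


def installed_version(package):
    """Return the installed version string, or None if the package is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


def check_environment(expected=EXPECTED):
    """Map each package name to (installed, wanted, matches)."""
    report = {}
    for package, wanted in expected.items():
        found = installed_version(package)
        report[package] = (found, wanted, found == wanted)
    return report


if __name__ == "__main__":
    for package, (found, wanted, ok) in check_environment().items():
        status = "OK" if ok else "MISMATCH"
        print(f"{package}: installed={found} expected={wanted} [{status}]")
```

Run it inside the activated conda environment; any line marked MISMATCH points at a package to uninstall and reinstall with a pinned version.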

The following is the main program code. For convenience, I wrote the UI design code and the main program code together.

```python
import sys

from PyQt5 import QtCore, QtGui, QtWidgets
import qtawesome
from PyQt5.QtCore import Qt, QPoint, QCoreApplication
from PyQt5.QtGui import QCloseEvent, QMouseEvent
from PyQt5.QtWidgets import QMessageBox
import cv2

import tensorflow_infer as tsf


class Ui_Form(object):
    def setupUi(self, Form):
        Form.setObjectName("Form")
        Form.resize(800, 511)

        # Left-hand menu column
        self.verticalLayoutWidget = QtWidgets.QWidget(Form)
        self.verticalLayoutWidget.setGeometry(QtCore.QRect(10, 60, 131, 441))
        self.verticalLayoutWidget.setObjectName("verticalLayoutWidget")
        self.verticalLayout = QtWidgets.QVBoxLayout(self.verticalLayoutWidget)
        self.verticalLayout.setContentsMargins(0, 0, 0, 0)
        self.verticalLayout.setObjectName("verticalLayout")
        self.label1 = QtWidgets.QPushButton(self.verticalLayoutWidget)
        self.label1.setObjectName("labeltitle")
        self.verticalLayout.addWidget(self.label1)
        self.pushButton1 = QtWidgets.QPushButton(qtawesome.icon('fa.camera', color='white'), "")
        self.pushButton1.setObjectName("pushButton")
        self.verticalLayout.addWidget(self.pushButton1)
        self.pushButton2 = QtWidgets.QPushButton(qtawesome.icon('fa.medkit', color='white'), "")
        self.pushButton2.setObjectName("pushButton")
        self.verticalLayout.addWidget(self.pushButton2)
        self.pushButton3 = QtWidgets.QPushButton(qtawesome.icon('fa.smile-o', color='white'), "")
        self.pushButton3.setObjectName("pushButton")
        self.verticalLayout.addWidget(self.pushButton3)
        self.label2 = QtWidgets.QPushButton(self.verticalLayoutWidget)
        self.label2.setObjectName("labeltitle")
        self.verticalLayout.addWidget(self.label2)
        self.pushButton4 = QtWidgets.QPushButton(qtawesome.icon('fa.hourglass-start', color='white'), "")
        self.pushButton4.setObjectName("pushButton")
        self.verticalLayout.addWidget(self.pushButton4)
        self.pushButton5 = QtWidgets.QPushButton(qtawesome.icon('fa.hourglass-end', color='white'), "")
        self.pushButton5.setObjectName("pushButton")
        self.verticalLayout.addWidget(self.pushButton5)

        # Small row above the menu that holds the minimize and close buttons
        self.horizontalLayoutWidget = QtWidgets.QWidget(Form)
        self.horizontalLayoutWidget.setGeometry(QtCore.QRect(10, 30, 131, 25))
        self.horizontalLayoutWidget.setObjectName("horizontalLayoutWidget")
        self.horizontalLayout = QtWidgets.QHBoxLayout(self.horizontalLayoutWidget)
        self.horizontalLayout.setContentsMargins(0, 0, 0, 0)
        self.horizontalLayout.setObjectName("horizontalLayout")
        self.verticalLayoutWidget.setStyleSheet('''
            QPushButton{border:none;color:white;}
            QPushButton#labeltitle{
                border:none;
                border-bottom:1px solid white;
                font-size:18px;
                font-weight:700;
                font-family: "Helvetica Neue", Helvetica, Arial, sans-serif; }
            QPushButton#pushButton:hover{border-left:4px solid red;font-weight:700;}
            QWidget#verticalLayoutWidget{
                background:gray;
                border-top:1px solid white;
                border-bottom:1px solid white;
                border-left:1px solid white;
                border-top-left-radius:10px;
                border-top-right-radius:10px;
                border-bottom-right-radius:10px;
                border-bottom-left-radius:10px; }
        ''')
        self.pushButton8 = QtWidgets.QPushButton(qtawesome.icon('fa.minus-square', color='white'), "")  # Minimize button
        self.pushButton8.setObjectName("pushButton8")
        self.horizontalLayout.addWidget(self.pushButton8)
        self.pushButton9 = QtWidgets.QPushButton(qtawesome.icon('fa.window-close', color='white'), "")  # Close button
        self.pushButton9.setObjectName("pushButton9")
        self.horizontalLayout.addWidget(self.pushButton9)
        self.pushButton8.setStyleSheet('''QPushButton{background:#F76677;border-radius:5px;}QPushButton:hover{background:red;}''')
        self.pushButton9.setStyleSheet('''QPushButton{background:#6DDF6D;border-radius:5px;}QPushButton:hover{background:green;}''')
        self.pushButton8.clicked.connect(Form.showMinimized)
        self.pushButton9.clicked.connect(Form.closeEvent)

        # Right-hand area that displays the video
        self.verticalLayoutWidget_2 = QtWidgets.QWidget(Form)
        self.verticalLayoutWidget_2.setGeometry(QtCore.QRect(150, 30, 631, 471))
        self.verticalLayoutWidget_2.setObjectName("verticalLayoutWidget_2")
        self.verticalLayout_2 = QtWidgets.QVBoxLayout(self.verticalLayoutWidget_2)
        self.verticalLayout_2.setContentsMargins(0, 0, 0, 0)
        self.verticalLayout_2.setObjectName("verticalLayout_2")
        self.label = QtWidgets.QLabel(self.verticalLayoutWidget_2)
        self.label.setObjectName("label")
        self.label.setFixedSize(641, 481)  # Set the size of the label that displays the video to 641x481
        self.verticalLayout_2.addWidget(self.label)
        self.verticalLayoutWidget_2.setStyleSheet('''
            QWidget#verticalLayoutWidget_2{
                background:gray;
                border-top:1px solid white;
                border-bottom:1px solid white;
                border-left:1px solid white;
                border-top-left-radius:10px;
                border-top-right-radius:10px;
                border-bottom-right-radius:10px;
                border-bottom-left-radius:10px; }
        ''')

        self.retranslateUi(Form)
        QtCore.QMetaObject.connectSlotsByName(Form)
        self.label1.setText("Mask detection system")
        self.pushButton1.setText("Turn on the camera")
        self.pushButton2.setText("Open mask detection")
        self.pushButton3.setText("Close mask detection")
        self.label2.setText("Other functions")
        self.pushButton4.setText("Coming soon")
        self.pushButton5.setText("Coming soon")
        self.label.setText("The camera is slow to open. Please wait a moment and avoid clicking around, or the program may freeze.")

    def retranslateUi(self, Form):
        _translate = QtCore.QCoreApplication.translate
        Form.setWindowTitle(_translate("Form", "Form"))

    def setNoTittle(self, Form):
        Form.setWindowFlags(Qt.FramelessWindowHint)  # Remove the native title bar
        Form.setWindowOpacity(1)  # Set window opacity (1 is fully opaque)
        Form.setAttribute(Qt.WA_TranslucentBackground)  # Make the window background transparent


class MainUi(QtWidgets.QMainWindow):
    _tracking = False  # No drag in progress until the left button is pressed

    def mouseMoveEvent(self, e: QMouseEvent):  # Override the move event so the frameless window can be dragged
        if self._tracking:
            self._endPos = e.pos() - self._startPos
            self.move(self.pos() + self._endPos)

    def mousePressEvent(self, e: QMouseEvent):
        if e.button() == Qt.LeftButton:
            self._startPos = QPoint(e.x(), e.y())
            self._tracking = True

    def mouseReleaseEvent(self, e: QMouseEvent):
        if e.button() == Qt.LeftButton:
            self._tracking = False
            self._startPos = None
            self._endPos = None

    def closeEvent(self, event=None):
        # Called both by the custom close button (event is then the button's
        # "checked" flag) and by Qt itself with a real QCloseEvent.
        reply = QMessageBox.question(self, 'Prompt information', 'Are you sure to exit?',
                                     QMessageBox.Yes | QMessageBox.No, QMessageBox.No)
        if reply == QMessageBox.Yes:
            QCoreApplication.instance().quit()
        elif isinstance(event, QCloseEvent):
            event.ignore()  # Keep the window open when the user answers No


class MyMainForm(QtWidgets.QWidget, Ui_Form):
    def __init__(self, parent=None):
        super().__init__(parent)  # Constructor of the parent class
        self.timer_camera = QtCore.QTimer()  # Timer that controls the frame rate of the displayed video
        self.cap = cv2.VideoCapture()  # Video stream
        self.CAM_NUM = 0  # 0 means the video stream comes from the laptop's built-in camera
        self.openkz = False  # Whether mask detection is switched on

    def setupUi(self, Form):
        Ui_Form.setupUi(self, Form)

    def slot_init(self):
        self.pushButton1.clicked.connect(self.open_camera_clicked)  # Clicking the button calls open_camera_clicked()
        self.timer_camera.timeout.connect(self.show_camera)  # Each timer timeout calls show_camera()
        self.pushButton2.clicked.connect(self.openkouzhao)
        self.pushButton3.clicked.connect(self.closekouzhao)

    def openkouzhao(self):
        self.openkz = True

    def closekouzhao(self):
        self.openkz = False

    def open_camera_clicked(self):
        self.label.setText("")
        if not self.timer_camera.isActive():  # The timer is not running yet
            # 0 opens the laptop's built-in camera; a video file path opens that video instead
            flag = self.cap.open(self.CAM_NUM)
            if not flag:  # flag indicates whether open() succeeded
                msg = QtWidgets.QMessageBox.warning(self, 'warning', "Please check whether the camera is connected to the computer correctly", buttons=QtWidgets.QMessageBox.Ok)
            else:
                self.timer_camera.start(30)  # Fire every 30 ms, so one frame is taken from the camera and displayed every 30 ms
                self.pushButton1.setText('Turn off the camera')
        else:
            self.timer_camera.stop()  # Stop the timer
            self.cap.release()  # Release the video stream
            self.label.clear()  # Clear the video display area
            self.pushButton1.setText('Turn on the camera')
            self.label.setText("The camera is slow to open. Please wait a moment and avoid clicking around, or the program may freeze.")

    def show_camera(self):
        flag, self.image = self.cap.read()  # Read a frame from the video stream
        if not flag:
            return  # Skip this tick if no frame could be read
        if self.openkz:
            img_raw = cv2.cvtColor(self.image, cv2.COLOR_BGR2RGB)
            tsf.inference(img_raw,
                          conf_thresh=0.5,
                          iou_thresh=0.5,
                          target_shape=(260, 260),
                          draw_result=True,
                          show_result=False)
            show = img_raw[:, :, ::-1]  # Back to BGR; the boxes were drawn on img_raw in place
        else:
            show = cv2.resize(self.image, (640, 480))  # Resize the frame to 640x480
        show = cv2.cvtColor(show, cv2.COLOR_BGR2RGB)  # Convert to RGB so the colors display correctly
        # Wrap the frame in a QImage; passing the row stride avoids skew for widths that are not 4-byte aligned
        showImage = QtGui.QImage(show.data, show.shape[1], show.shape[0], show.strides[0], QtGui.QImage.Format_RGB888)
        self.label.setPixmap(QtGui.QPixmap.fromImage(showImage))  # Show the QImage in the label that displays the video


if __name__ == '__main__':
    app = QtWidgets.QApplication(sys.argv)
    Form = MainUi()
    ui = MyMainForm()
    ui.setupUi(Form)
    ui.setNoTittle(Form)
    ui.slot_init()
    Form.show()
    sys.exit(app.exec_())
```

Some readers will notice that my Qt UI was built with Designer and then converted to source code. Yes, it could be written more simply, but I was lazy, so I just kept stacking inheritance. The Qt part relies on a few key techniques: overriding the mouse event handlers so the frameless window can be dragged, providing my own minimize and close buttons with a confirmation prompt on close, and styling the widgets with style sheets. The video stream is then rendered to a label, so the video can be turned on and off.

The open source tensorflow_infer.py has been modified slightly. In fact, I only commented out a few lines. Each frame of the video stream is passed as a sample to the tsf.inference method, which runs the detection, draws and returns the result, and the frame is finally assigned to the label.
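The drag handling comes down to simple arithmetic: the window moves by the difference between the current mouse position and the position where the button was pressed, both in window-local coordinates. The sketch below shows that calculation with plain tuples instead of `QPoint`; the function name `dragged_window_pos` is made up for illustration and is not part of the program.

```python
def dragged_window_pos(window_pos, press_pos, mouse_pos):
    """Return the new top-left corner of a window that is being dragged.

    window_pos: current top-left corner of the window on the screen
    press_pos:  where the left button was pressed, relative to the window
    mouse_pos:  where the mouse is now, relative to the window
    """
    dx = mouse_pos[0] - press_pos[0]
    dy = mouse_pos[1] - press_pos[1]
    return (window_pos[0] + dx, window_pos[1] + dy)


if __name__ == "__main__":
    # Pressed at (10, 10) inside the window, mouse is now at (25, 5):
    # the window shifts 15 px right and 5 px up.
    print(dragged_window_pos((100, 100), (10, 10), (25, 5)))
```

Because the offset is measured from the press position rather than from the previous mouse position, the point under the cursor stays under the cursor while the window follows.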
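The GUI above ignores the return value of `tsf.inference`, but it is useful if you want to do more than draw boxes. Each entry of the returned list has the form `[class_id, conf, xmin, ymin, xmax, ymax]`. The following is a small sketch of how that list could be read; the helper names `summarize_detections` and `anyone_unmasked` are hypothetical, and I am assuming that class 1 ("NO") means no mask, which matches the red box drawn for it.

```python
# Same mapping as id2class in tensorflow_infer.py
ID2CLASS = {0: "YES", 1: "NO"}


def summarize_detections(output_info, id2class=ID2CLASS):
    """Turn [class_id, conf, xmin, ymin, xmax, ymax] entries into readable lines."""
    lines = []
    for class_id, conf, xmin, ymin, xmax, ymax in output_info:
        label = id2class[int(class_id)]
        lines.append("%s %.2f at (%d, %d)-(%d, %d)" % (label, conf, xmin, ymin, xmax, ymax))
    return lines


def anyone_unmasked(output_info):
    """Return True if at least one detection has class 1 (assumed: no mask)."""
    return any(int(entry[0]) == 1 for entry in output_info)


if __name__ == "__main__":
    # Hand-written sample in the same layout; not real model output.
    sample = [[0, 0.974, 10, 20, 110, 140], [1, 0.61, 200, 30, 280, 150]]
    for line in summarize_detections(sample):
        print(line)
    print("warning needed:", anyone_unmasked(sample))
```

In the GUI this could, for example, drive one of the "Coming soon" buttons or a status label.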

Here is the tensorflow_infer.py code (if you want to download the model and source code, go to the address below)

```python
# -*- coding:utf-8 -*-
import cv2
import time
import argparse

import numpy as np
from PIL import Image
from keras.models import model_from_json
from utils.anchor_generator import generate_anchors
from utils.anchor_decode import decode_bbox
from utils.nms import single_class_non_max_suppression
from load_model.tensorflow_loader import load_tf_model, tf_inference

sess, graph = load_tf_model('models/face_mask_detection.pb')

# anchor configuration
feature_map_sizes = [[33, 33], [17, 17], [9, 9], [5, 5], [3, 3]]
anchor_sizes = [[0.04, 0.056], [0.08, 0.11], [0.16, 0.22], [0.32, 0.45], [0.64, 0.72]]
anchor_ratios = [[1, 0.62, 0.42]] * 5

# generate anchors
anchors = generate_anchors(feature_map_sizes, anchor_sizes, anchor_ratios)

# for inference, the batch size is 1 and the model output shape is [1, N, 4],
# so we expand the anchors to [1, anchor_num, 4]
anchors_exp = np.expand_dims(anchors, axis=0)

id2class = {0: 'YES', 1: 'NO'}


def inference(image,
              conf_thresh=0.5,
              iou_thresh=0.4,
              target_shape=(160, 160),
              draw_result=True,
              show_result=True
              ):
    '''
    Main function of detection inference
    :param image: 3D numpy array of image
    :param conf_thresh: the min threshold of classification probability.
    :param iou_thresh: the IOU threshold of NMS
    :param target_shape: the model input size.
    :param draw_result: whether to draw bounding boxes on the image.
    :param show_result: whether to display the image.
    :return: list of [class_id, conf, xmin, ymin, xmax, ymax], one per detection
    '''
    # image = np.copy(image)
    output_info = []
    height, width, _ = image.shape
    image_resized = cv2.resize(image, target_shape)
    image_np = image_resized / 255.0  # Normalize to 0 ~ 1
    image_exp = np.expand_dims(image_np, axis=0)
    y_bboxes_output, y_cls_output = tf_inference(sess, graph, image_exp)

    # remove the batch dimension, since the batch is always 1 for inference.
    y_bboxes = decode_bbox(anchors_exp, y_bboxes_output)[0]
    y_cls = y_cls_output[0]
    # To speed up, do single class NMS, not multiple classes NMS.
    bbox_max_scores = np.max(y_cls, axis=1)
    bbox_max_score_classes = np.argmax(y_cls, axis=1)

    # keep_idxs holds the indices of the bounding boxes that survive NMS.
    keep_idxs = single_class_non_max_suppression(y_bboxes,
                                                 bbox_max_scores,
                                                 conf_thresh=conf_thresh,
                                                 iou_thresh=iou_thresh,
                                                 )

    for idx in keep_idxs:
        conf = float(bbox_max_scores[idx])
        class_id = bbox_max_score_classes[idx]
        bbox = y_bboxes[idx]
        # clip the coordinates so they do not exceed the image boundary.
        xmin = max(0, int(bbox[0] * width))
        ymin = max(0, int(bbox[1] * height))
        xmax = min(int(bbox[2] * width), width)
        ymax = min(int(bbox[3] * height), height)

        if draw_result:
            if class_id == 0:
                color = (0, 255, 0)
            else:
                color = (255, 0, 0)
            cv2.rectangle(image, (xmin, ymin), (xmax, ymax), color, 2)
            cv2.putText(image, "%s: %.2f" % (id2class[class_id], conf), (xmin + 2, ymin - 2),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.8, color)
        output_info.append([class_id, conf, xmin, ymin, xmax, ymax])

    if show_result:
        Image.fromarray(image).show()
    return output_info


def run_on_video(video_path, output_video_name, conf_thresh):
    cap = cv2.VideoCapture(video_path)
    height = cap.get(cv2.CAP_PROP_FRAME_HEIGHT)
    width = cap.get(cv2.CAP_PROP_FRAME_WIDTH)
    fps = cap.get(cv2.CAP_PROP_FPS)
    fourcc = cv2.VideoWriter_fourcc(*'XVID')
    # writer = cv2.VideoWriter(output_video_name, fourcc, int(fps), (int(width), int(height)))
    total_frames = cap.get(cv2.CAP_PROP_FRAME_COUNT)
    if not cap.isOpened():
        raise ValueError("Video open failed.")
    status = True
    idx = 0
    while status:
        start_stamp = time.time()
        status, img_raw = cap.read()
        read_frame_stamp = time.time()
        if status:
            # Convert only after a successful read; img_raw is None when the stream ends.
            img_raw = cv2.cvtColor(img_raw, cv2.COLOR_BGR2RGB)
            inference(img_raw,
                      conf_thresh,
                      iou_thresh=0.5,
                      target_shape=(260, 260),
                      draw_result=True,
                      show_result=False)
            cv2.imshow('image', img_raw[:, :, ::-1])
            cv2.waitKey(1)
            inference_stamp = time.time()
            # writer.write(img_raw)
            write_frame_stamp = time.time()
            idx += 1
            # print("%d of %d" % (idx, total_frames))
            # print("read_frame:%f, infer time:%f, write time:%f" % (read_frame_stamp - start_stamp,
            #                                                        inference_stamp - read_frame_stamp,
            #                                                        write_frame_stamp - inference_stamp))
    # writer.release()


if __name__ == "__main__":
    parser = argparse.ArgumentParser(description="Face Mask Detection")
    parser.add_argument('--img-mode', type=int, default=0, help='set 1 to run on image, 0 to run on video.')
    parser.add_argument('--img-path', type=str, help='path to your image.')
    parser.add_argument('--video-path', type=str, default='0', help='path to your video, `0` means to use camera.')
    # parser.add_argument('--hdf5', type=str, help='keras hdf5 file')
    args = parser.parse_args()
    if args.img_mode:
        imgPath = args.img_path
        img = cv2.imread(imgPath)
        img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
        inference(img, show_result=True, target_shape=(260, 260))
    else:
        video_path = args.video_path
        if args.video_path == '0':
            video_path = 0
        run_on_video(video_path, '', conf_thresh=0.5)
```
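One detail of `inference` worth trying on its own is how the boxes get from normalized coordinates back to pixels. The decoded boxes are fractions of the image size and can fall slightly outside the 0 to 1 range, so they are scaled by the original width and height and then clipped to the image. Here is a standalone sketch of that step, using the same expressions as the listing; the wrapper function `to_pixel_box` is my own name and does not exist in the project.

```python
def to_pixel_box(bbox, width, height):
    """Scale a normalized (xmin, ymin, xmax, ymax) box to pixels and clip it to the image."""
    xmin = max(0, int(bbox[0] * width))
    ymin = max(0, int(bbox[1] * height))
    xmax = min(int(bbox[2] * width), width)
    ymax = min(int(bbox[3] * height), height)
    return xmin, ymin, xmax, ymax


if __name__ == "__main__":
    # A box that sticks out past the left and bottom edges of a 640x480 frame
    print(to_pixel_box((-0.05, 0.25, 0.5, 1.2), 640, 480))
```

Note that the width and height used here are those of the original frame, not the 260x260 model input, which is why the boxes line up with the full-size camera image.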

If you need the TensorFlow 2 version of the project, you can get it from the network drive:

Link:

https://pan.baidu.com/s/10IQ1uscONZOYkgdtjcx2pQ

Extraction code: g8g9

--------

After all, I built this on other people's work, so I am sharing the download link from [a small tree x] for everyone. Feedback and discussion are welcome.