1. Introduction to data enhancement

When there are few pictures in our training set, it is easy to cause over fitting of the network. In order to avoid this situation, we generally need to increase some picture data artificially through image processing, which will increase the number of available pictures and reduce the possibility of over fitting.

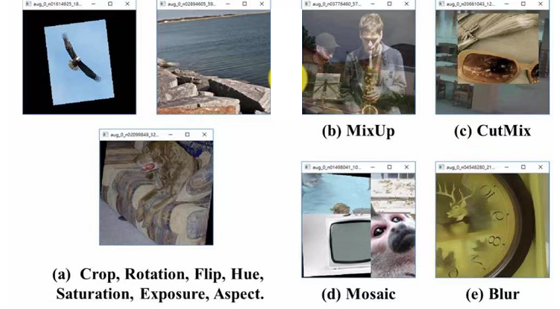

- Data enhancement can be done through pixel level clipping, rotation, flip, hue, saturation, exposure and aspect.

- In addition, image level data can be enhanced, such as MixUp, CurMix, Mosaic and Blur

2. Picture level pixel enhancement

- Mixup: as shown in the figure, a picture of a cat is superimposed on a picture of a dog. In this way, after the weighting operation of the two pictures, you can see that there are both dogs and cats in this new picture.

- Cutout: as shown in the figure, fill a certain area in the picture with a certain color, such as black in the figure

- CutMix: as shown in the figure, clip out a certain area of the picture, and then fill the clipped area with another image

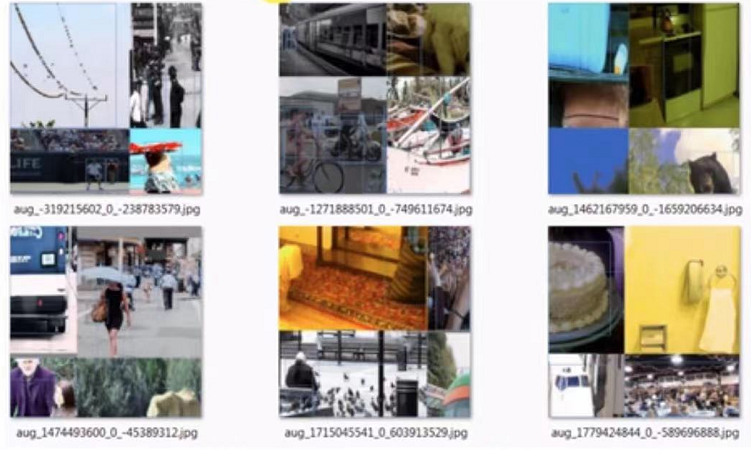

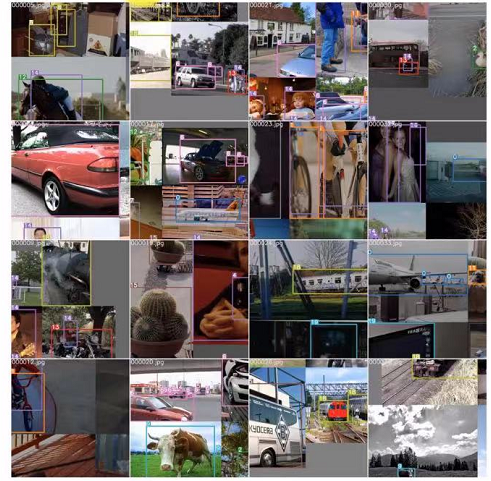

- Mosaic data enhancement: it combines four pictures into a big picture. In YOLOv5, mosaic method is used for data enhancement, which is proposed by the author of YOLOv5. As shown in the figure below

During the training process of YOLOv5, four small pictures are assembled into a large picture, and the four small pictures are randomly processed during splicing, so the size and shape of the four small pictures are different. - We can omit mosaic through train.py --rect

- --rect, sort the aspect ratio of the whole data set, and then combine the similar aspect ratio pictures together.

- The advantage of sorting by aspect ratio is that it can reduce FLOPS operation and speed up data processing

3. Code explanation

3.1 mosaic code

Code location yolov5-3.1 > utils > datasets.py

def load_mosaic(self, index):

# loads images in a mosaic

labels4 = []

s = self.img_size

#Take the center point of mosaic s randomly

yc, xc = [int(random.uniform(-x, 2 * s + x)) for x in self.mosaic_border] # mosaic center x, y

#Randomly take the index of the other three pictures

indices = [index] + [random.randint(0, len(self.labels) - 1) for _ in range(3)] # 3 additional image indices

for i, index in enumerate(indices):

# Load image

# load_image loads the picture and resize s it according to the ratio of the set input size to the original size of the picture

img, _, (h, w) = load_image(self, index)

# Initialize large img4

if i == 0: # top left

img4 = np.full((s * 2, s * 2, img.shape[2]), 114, dtype=np.uint8) # base image with 4 tiles

# Set the position on the large drawing (upper left corner)

x1a, y1a, x2a, y2a = max(xc - w, 0), max(yc - h, 0), xc, yc # xmin, ymin, xmax, ymax (large image)

# Select the position on the thumbnail

x1b, y1b, x2b, y2b = w - (x2a - x1a), h - (y2a - y1a), w, h # xmin, ymin, xmax, ymax (small image)

elif i == 1: # top right

x1a, y1a, x2a, y2a = xc, max(yc - h, 0), min(xc + w, s * 2), yc

x1b, y1b, x2b, y2b = 0, h - (y2a - y1a), min(w, x2a - x1a), h

elif i == 2: # bottom left

x1a, y1a, x2a, y2a = max(xc - w, 0), yc, xc, min(s * 2, yc + h)

x1b, y1b, x2b, y2b = w - (x2a - x1a), 0, w, min(y2a - y1a, h)

elif i == 3: # bottom right

x1a, y1a, x2a, y2a = xc, yc, min(xc + w, s * 2), min(s * 2, yc + h)

x1b, y1b, x2b, y2b = 0, 0, min(w, x2a - x1a), min(y2a - y1a, h)

img4[y1a:y2a, x1a:x2a] = img[y1b:y2b, x1b:x2b] # img4[ymin:ymax, xmin:xmax]

#Calculate the offset from small image to large image to calculate the position of mosaic enhanced label

padw = x1a - x1b

padh = y1a - y1b

# Labels

x = self.labels[index]

labels = x.copy()

# Update target box position based on offset

if x.size > 0: # Normalized xywh to pixel xyxy format

labels[:, 1] = w * (x[:, 1] - x[:, 3] / 2) + padw

labels[:, 2] = h * (x[:, 2] - x[:, 4] / 2) + padh

labels[:, 3] = w * (x[:, 1] + x[:, 3] / 2) + padw

labels[:, 4] = h * (x[:, 2] + x[:, 4] / 2) + padh

labels4.append(labels)

# Concat/clip labels

if len(labels4):

labels4 = np.concatenate(labels4, 0)

np.clip(labels4[:, 1:], 0, 2 * s, out=labels4[:, 1:]) # use with random_perspective

# img4, labels4 = replicate(img4, labels4) # replicate

# Augment

# When mosaic is performed, the shape of the four pictures is [2*img_size,2*img_size]

# The mosaic integrated pictures are randomly rotated, translated, scaled and cropped, and resize d to the input size img_size

img4, labels4 = random_perspective(img4, labels4,

degrees=self.hyp['degrees'],

translate=self.hyp['translate'],

scale=self.hyp['scale'],

shear=self.hyp['shear'],

perspective=self.hyp['perspective'],

border=self.mosaic_border) # border to remove

return img4, labels4

3.2 load_img code

# load_image loads the picture and resize s it according to the ratio of the set input size to the original size of the picture

def load_image(self, index):

# loads 1 image from dataset, returns img, original hw, resized hw

img = self.imgs[index]

if img is None: # not cached

path = self.img_files[index]

img = cv2.imread(path) # BGR

assert img is not None, 'Image Not Found ' + path

h0, w0 = img.shape[:2] # orig hw

r = self.img_size / max(h0, w0) # resize image to img_size

if r != 1: # always resize down, only resize up if training with augmentation

interp = cv2.INTER_AREA if r < 1 and not self.augment else cv2.INTER_LINEAR

img = cv2.resize(img, (int(w0 * r), int(h0 * r)), interpolation=interp)

return img, (h0, w0), img.shape[:2] # img, hw_original, hw_resized

else:

return self.imgs[index], self.img_hw0[index], self.img_hw[index] # img, hw_original, hw_resized

3.3 random_perspective

#Random perspective transformation

#The calculation method is the product of coordinate vector and transformation matrix

def random_perspective(img, targets=(), degrees=10, translate=.1, scale=.1, shear=10, perspective=0.0, border=(0, 0)):

# torchvision.transforms.RandomAffine(degrees=(-10, 10), translate=(.1, .1), scale=(.9, 1.1), shear=(-10, 10))

# targets = [cls, xyxy]

height = img.shape[0] + border[0] * 2 # shape(h,w,c)

width = img.shape[1] + border[1] * 2

# Center

C = np.eye(3)

C[0, 2] = -img.shape[1] / 2 # x translation (pixels)

C[1, 2] = -img.shape[0] / 2 # y translation (pixels)

# Perspective

P = np.eye(3)

P[2, 0] = random.uniform(-perspective, perspective) # x perspective (about y)

P[2, 1] = random.uniform(-perspective, perspective) # y perspective (about x)

# Rotation and Scale

R = np.eye(3)

a = random.uniform(-degrees, degrees)

# a += random.choice([-180, -90, 0, 90]) # add 90deg rotations to small rotations

s = random.uniform(1 - scale, 1 + scale)

# s = 2 ** random.uniform(-scale, scale)

R[:2] = cv2.getRotationMatrix2D(angle=a, center=(0, 0), scale=s)

# Shear

S = np.eye(3)

S[0, 1] = math.tan(random.uniform(-shear, shear) * math.pi / 180) # x shear (deg)

S[1, 0] = math.tan(random.uniform(-shear, shear) * math.pi / 180) # y shear (deg)

# Translation

T = np.eye(3)

T[0, 2] = random.uniform(0.5 - translate, 0.5 + translate) * width # x translation (pixels)

T[1, 2] = random.uniform(0.5 - translate, 0.5 + translate) * height # y translation (pixels)

# @Representation matrix multiplication

# Combined rotation matrix

M = T @ S @ R @ P @ C # order of operations (right to left) is IMPORTANT

if (border[0] != 0) or (border[1] != 0) or (M != np.eye(3)).any(): # image changed

if perspective:

#Perspective transformation function can keep the straight line from deformation, but the parallel lines may no longer be parallel

img = cv2.warpPerspective(img, M, dsize=(width, height), borderValue=(114, 114, 114))

else: # affine

# Affine transformation function, which can realize rotation, translation and scaling; The transformed parallel lines are still parallel

img = cv2.warpAffine(img, M[:2], dsize=(width, height), borderValue=(114, 114, 114))

# Visualize

# import matplotlib.pyplot as plt

# ax = plt.subplots(1, 2, figsize=(12, 6))[1].ravel()

# ax[0].imshow(img[:, :, ::-1]) # base

# ax[1].imshow(img2[:, :, ::-1]) # warped

# Transform label coordinates

n = len(targets)

if n:

# warp points

xy = np.ones((n * 4, 3))

xy[:, :2] = targets[:, [1, 2, 3, 4, 1, 4, 3, 2]].reshape(n * 4, 2) # x1y1, x2y2, x1y2, x2y1

xy = xy @ M.T # transform

if perspective:

xy = (xy[:, :2] / xy[:, 2:3]).reshape(n, 8) # rescale

else: # affine

xy = xy[:, :2].reshape(n, 8)

# create new boxes

x = xy[:, [0, 2, 4, 6]]

y = xy[:, [1, 3, 5, 7]]

xy = np.concatenate((x.min(1), y.min(1), x.max(1), y.max(1))).reshape(4, n).T

# # apply angle-based reduction of bounding boxes

# radians = a * math.pi / 180

# reduction = max(abs(math.sin(radians)), abs(math.cos(radians))) ** 0.5

# x = (xy[:, 2] + xy[:, 0]) / 2

# y = (xy[:, 3] + xy[:, 1]) / 2

# w = (xy[:, 2] - xy[:, 0]) * reduction

# h = (xy[:, 3] - xy[:, 1]) * reduction

# xy = np.concatenate((x - w / 2, y - h / 2, x + w / 2, y + h / 2)).reshape(4, n).T

# clip boxes

# Remove the frame cut too small after the above series of operations; reject warped points outside of image

xy[:, [0, 2]] = xy[:, [0, 2]].clip(0, width)

xy[:, [1, 3]] = xy[:, [1, 3]].clip(0, height)

# filter candidates

i = box_candidates(box1=targets[:, 1:5].T * s, box2=xy.T)

targets = targets[i]

targets[:, 1:5] = xy[i]

return img, targets