1, Introduction to TiDB

1. What is TiDB

TiDB is an open-source distributed relational database designed and developed by PingCAP. It combines online transaction processing and online analytical processing in a single product (Hybrid Transactional and Analytical Processing, HTAP). Its key features are horizontal scale-out and scale-in, financial-grade high availability, real-time HTAP, a cloud-native distributed architecture, and compatibility with the MySQL 5.7 protocol and the MySQL ecosystem. The goal is to give users a one-stop solution for OLTP (Online Transactional Processing), OLAP (Online Analytical Processing), and HTAP. TiDB suits scenarios that demand high availability, strong consistency, and large data scale.

Compared with a traditional standalone database, TiDB has the following advantages:

1) A purely distributed architecture with good scalability, supporting elastic scale-out and scale-in

2) It supports SQL, exposes the MySQL network protocol, and is compatible with most MySQL syntax. In most scenarios, it can directly replace MySQL

3) High availability is supported by default. When a minority of replicas fail, the database can automatically repair data and fail over, transparently to the application

4) It supports ACID transactions, which suits scenarios with strong consistency requirements, such as bank transfers

5) It has a rich ecosystem of tools covering data migration, synchronization, backup, and other scenarios

2. Overall structure of tidb

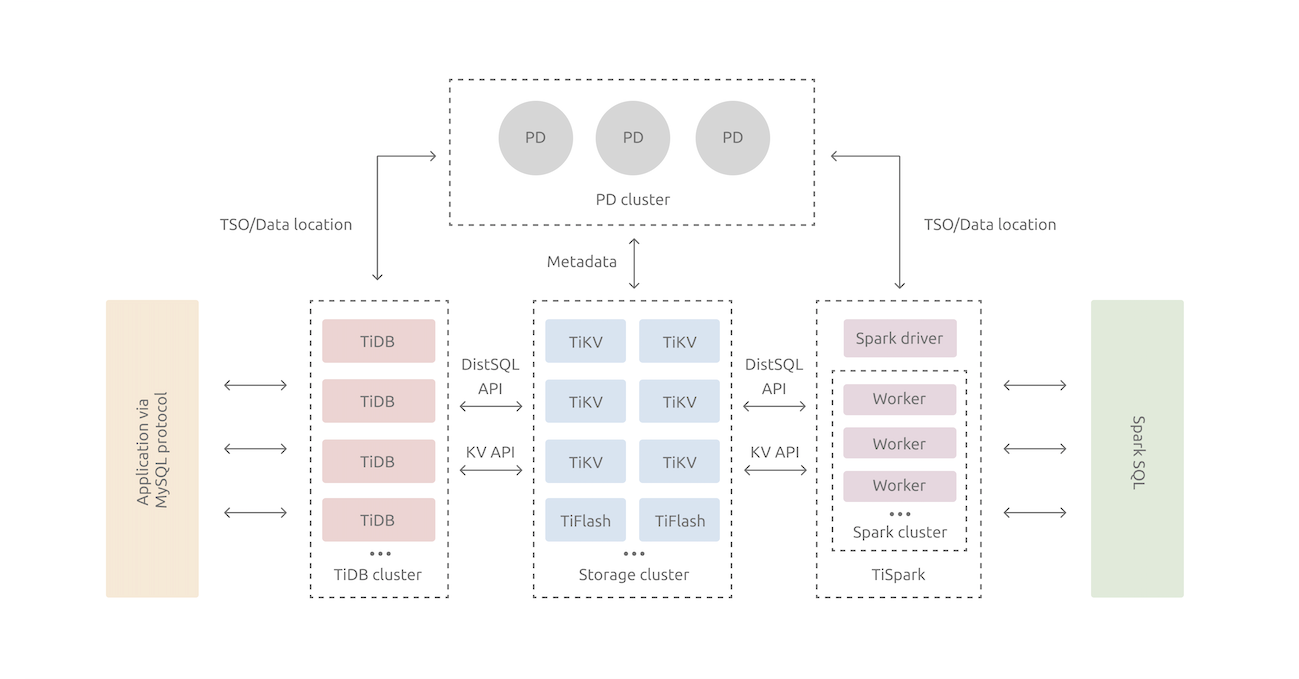

In terms of kernel design, TiDB distributed database divides the overall architecture into multiple modules, and each module communicates with each other to form a complete TiDB system. The corresponding architecture diagram is as follows:

2. Introduction to main modules

1) TiDB Server

TiDB Server is the SQL layer. It exposes the MySQL protocol connection endpoint, accepts client connections, parses and optimizes SQL, and finally generates a distributed execution plan. The TiDB layer itself is stateless. In practice, you can start multiple TiDB instances and expose a unified access address through a load-balancing component (such as LVS, HAProxy, or F5), so that client connections are spread evenly across the instances. TiDB Server does not store data itself; it only parses SQL and forwards the actual data read requests to the underlying storage nodes, TiKV (or TiFlash).
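
Because the TiDB layer is stateless, any instance can serve any client connection. The following is a minimal Python sketch of that idea using a round-robin picker. It is only an illustration: in a real deployment this job belongs to LVS, HAProxy, or F5, and the second and third instance addresses and the helper names are hypothetical.

```python
from itertools import cycle

# Hypothetical TiDB instance addresses (only the first one appears in this article).
TIDB_INSTANCES = ["172.25.254.21:4000", "172.25.254.31:4000", "172.25.254.32:4000"]


def make_round_robin(instances):
    """Return a function that hands out instances in rotation."""
    pool = cycle(instances)
    return lambda: next(pool)


def distribute(connection_count, instances):
    """Assign connection_count client connections to instances, round robin."""
    pick = make_round_robin(instances)
    counts = {addr: 0 for addr in instances}
    for _ in range(connection_count):
        counts[pick()] += 1
    return counts


if __name__ == "__main__":
    # Show how many of 9 connections each instance receives.
    for addr, count in distribute(9, TIDB_INSTANCES).items():
        print(addr, count)
```

Since no instance holds session-independent state, adding or removing an entry from the list is all it takes to change the pool in this sketch.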

2) PD Server

PD (Placement Driver) Server is the metadata management module of the whole TiDB cluster. It stores the real-time data distribution of every TiKV node and the overall cluster topology, provides the TiDB Dashboard management interface, and allocates transaction IDs for distributed transactions. Besides storing metadata, PD issues data scheduling commands to specific TiKV nodes based on the data distribution that the TiKV nodes report in real time, which is why it is called the "brain" of the cluster. PD itself is also made up of at least three nodes so that it is highly available. Deploying an odd number of PD nodes is recommended.
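
The recommendation to deploy an odd number of PD nodes can be illustrated with a little arithmetic. The sketch below assumes the usual majority-quorum rule of Raft-style consensus (a cluster stays available while more than half of its members are alive); the function names are illustrative only.

```python
def quorum(nodes: int) -> int:
    """Smallest number of nodes that forms a majority."""
    return nodes // 2 + 1


def tolerated_failures(nodes: int) -> int:
    """How many nodes can fail while a majority is still alive."""
    return nodes - quorum(nodes)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} PD nodes: quorum={quorum(n)}, "
              f"tolerated failures={tolerated_failures(n)}")
```

Under this rule, three nodes and four nodes both tolerate a single failure, so an even-sized cluster adds a machine without adding fault tolerance.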

3) Storage nodes

TiKV Server

TiKV Server is responsible for storing data. From the outside, TiKV is a distributed, transactional key-value storage engine. The basic unit of storage is the Region: each Region stores the data of one key range (the left-closed, right-open interval from StartKey to EndKey), and each TiKV node serves multiple Regions. The TiKV API natively supports distributed transactions at the key-value level and provides Snapshot Isolation (SI) by default, which is the core of TiDB's support for distributed transactions at the SQL level. After the TiDB SQL layer parses a statement, it converts the execution plan into actual calls to the TiKV API, so the data itself is stored in TiKV. In addition, TiKV automatically maintains multiple replicas of the data (three by default), which provides high availability and automatic failover.
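
The left-closed, right-open key range of a Region can be sketched as a sorted lookup. The code below is a simplified model, not TiKV's implementation: the `Region` class, the sample keys, and the convention that an empty end key means "no upper bound" are assumptions made for illustration.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    region_id: int
    start_key: bytes  # inclusive
    end_key: bytes    # exclusive; b"" is used here to mean "no upper bound"


# Hypothetical Regions covering the whole key space, sorted by start_key.
REGIONS = [
    Region(1, b"", b"g"),
    Region(2, b"g", b"p"),
    Region(3, b"p", b""),
]


def find_region(regions, key: bytes) -> Region:
    """Return the Region whose [start_key, end_key) interval contains key."""
    starts = [r.start_key for r in regions]
    # The last Region whose start_key <= key is the only candidate.
    region = regions[bisect_right(starts, key) - 1]
    assert region.end_key == b"" or key < region.end_key
    return region


if __name__ == "__main__":
    for key in (b"apple", b"g", b"p", b"zebra"):
        print(key, "->", find_region(REGIONS, key).region_id)
```

Because the interval is closed on the left and open on the right, the key `b"g"` belongs to the second Region rather than the first, so every key maps to exactly one Region.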

TiFlash

TiFlash is a special kind of storage node. Unlike ordinary TiKV nodes, TiFlash stores data in columnar form, and its main purpose is to accelerate analytical workloads.
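
To see why a columnar layout helps analytical queries, consider the toy comparison below. It is a conceptual sketch only and says nothing about how TiFlash is implemented; the sample table and function names are hypothetical.

```python
# Hypothetical rows of a small "orders" table: (order_id, customer, amount).
ROWS = [
    (1, "alice", 30),
    (2, "bob", 45),
    (3, "alice", 25),
]


def to_columns(rows, names):
    """Pivot row tuples into one list per column (a column-oriented layout)."""
    return {name: [row[i] for row in rows] for i, name in enumerate(names)}


def sum_amount_row_store(rows):
    """Row layout: every full row is visited even though one field is needed."""
    return sum(row[2] for row in rows)


def sum_amount_column_store(columns):
    """Column layout: only the 'amount' column is read."""
    return sum(columns["amount"])


if __name__ == "__main__":
    columns = to_columns(ROWS, ["order_id", "customer", "amount"])
    print(sum_amount_row_store(ROWS), sum_amount_column_store(columns))
```

Both functions return the same total, but the columnar version touches only the values it aggregates, which is the property analytical scans benefit from.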

3. Experimental environment

Four virtual machines are required

| Node | Installed services |
|---|---|
| server11 (172.25.254.21) | zabbix-server, mariadb, PD1, TiDB |
| server12 (172.25.254.22) | TiKV cluster |
| server13 (172.25.254.23) | TiKV cluster |

2, server11 environment setup

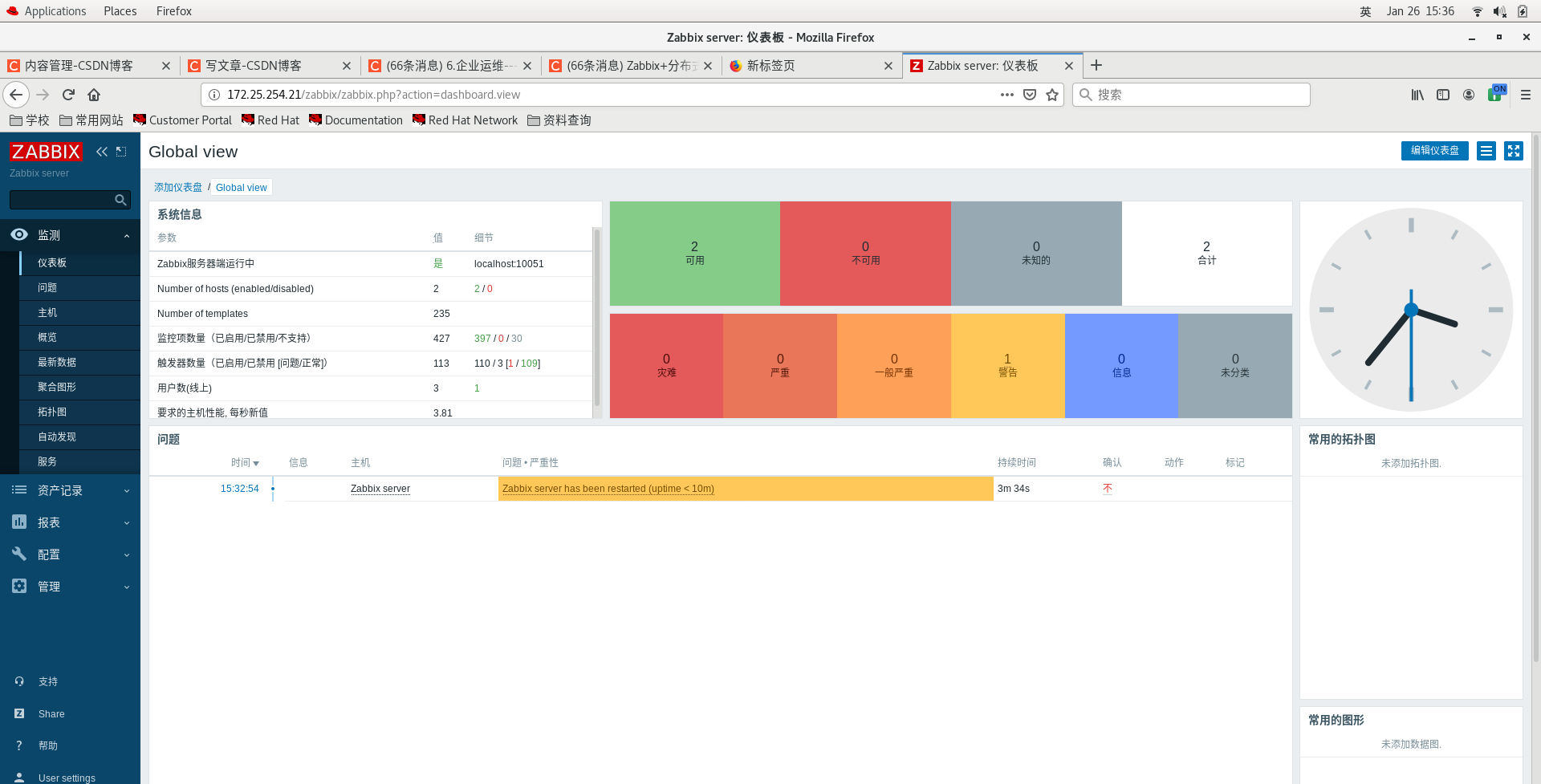

1. The Zabbix server service is set up in advance

Successfully started

2. MariaDB is deployed in advance

3. Install and configure TiDB on server11

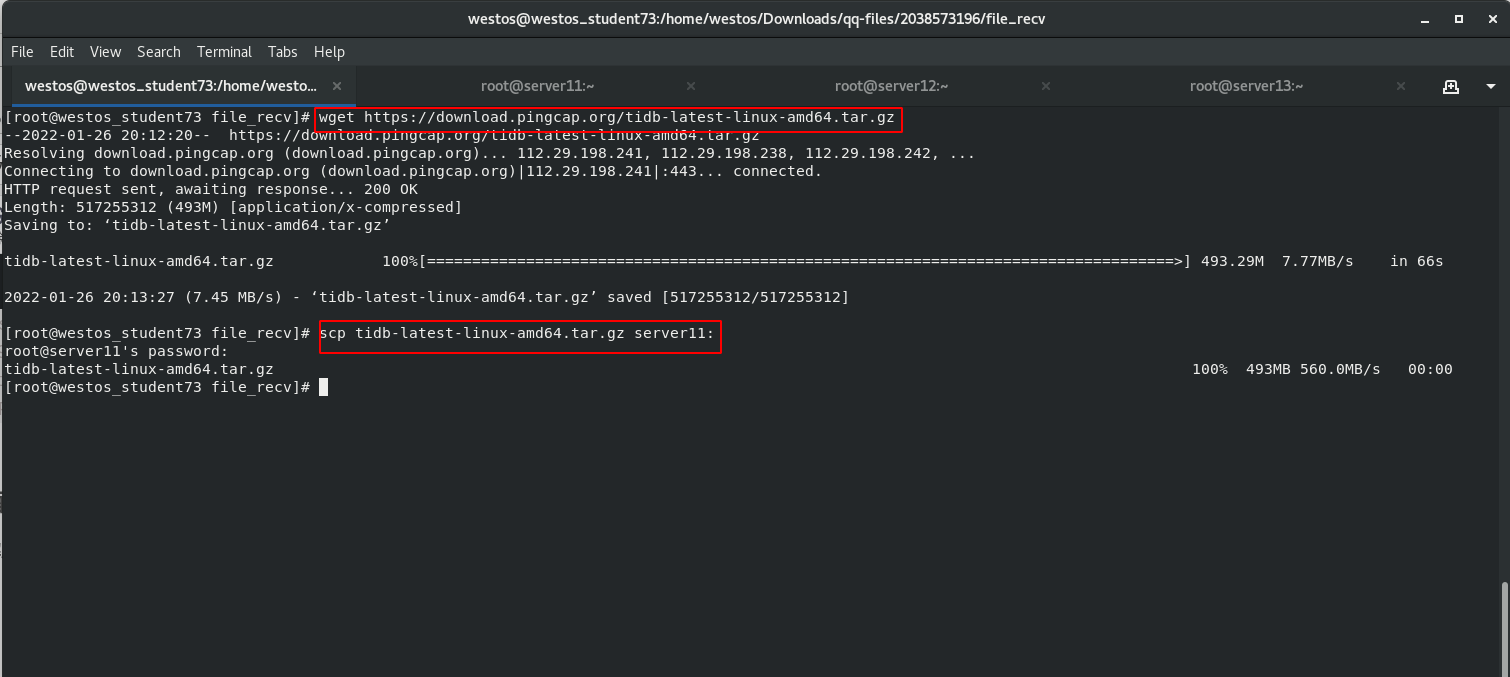

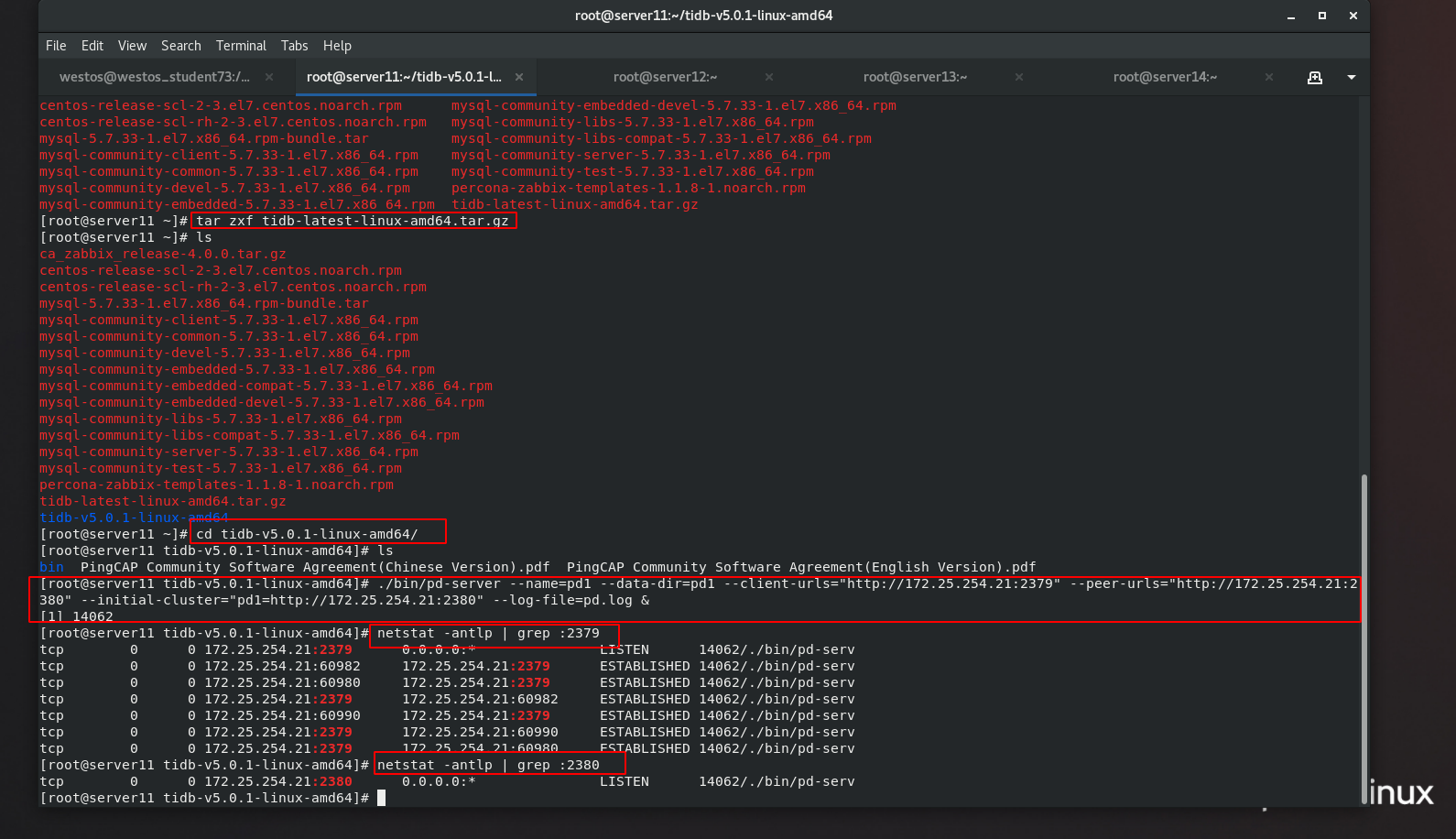

On server11, download the installation package, extract it, and start PD in the background.

Download the TiDB binary package and copy it to server11:

    [root@westos_student73 file_recv]# wget https://download.pingcap.org/tidb-latest-linux-amd64.tar.gz
    [root@westos_student73 file_recv]# scp tidb-latest-linux-amd64.tar.gz server11:

Start PD on server11 and run it in the background:

    [root@server11 ~]# tar zxf tidb-latest-linux-amd64.tar.gz
    [root@server11 ~]# cd tidb-v5.0.1-linux-amd64/
    [root@server11 tidb-v5.0.1-linux-amd64]# ls
    bin  PingCAP Community Software Agreement(Chinese Version).pdf  PingCAP Community Software Agreement(English Version).pdf
    # Start PD and run it in the background
    [root@server11 tidb-v5.0.1-linux-amd64]# ./bin/pd-server --name=pd1 --data-dir=pd1 --client-urls="http://172.25.254.21:2379" --peer-urls="http://172.25.254.21:2380" --initial-cluster="pd1=http://172.25.254.21:2380" --log-file=pd.log &
    # Check whether ports 2379 and 2380 are open
    [root@server11 tidb-v5.0.1-linux-amd64]# netstat -antlp | grep :2379
    tcp        0      0 172.25.254.21:2379      0.0.0.0:*               LISTEN      14062/./bin/pd-serv
    tcp        0      0 172.25.254.21:60982     172.25.254.21:2379      ESTABLISHED 14062/./bin/pd-serv
    tcp        0      0 172.25.254.21:60980     172.25.254.21:2379      ESTABLISHED 14062/./bin/pd-serv
    tcp        0      0 172.25.254.21:2379      172.25.254.21:60982     ESTABLISHED 14062/./bin/pd-serv
    tcp        0      0 172.25.254.21:60990     172.25.254.21:2379      ESTABLISHED 14062/./bin/pd-serv
    tcp        0      0 172.25.254.21:2379      172.25.254.21:60990     ESTABLISHED 14062/./bin/pd-serv
    tcp        0      0 172.25.254.21:2379      172.25.254.21:60980     ESTABLISHED 14062/./bin/pd-serv
    [root@server11 tidb-v5.0.1-linux-amd64]# netstat -antlp | grep :2380
    tcp        0      0 172.25.254.21:2380      0.0.0.0:*               LISTEN      14062/./bin/pd-serv
    [root@server11 tidb-v5.0.1-linux-amd64]#
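
The port check can also be scripted, for example when the same check has to run from another machine. The following is a minimal sketch using only Python's standard `socket` module; the helper names `port_is_open` and `check_ports` are hypothetical, and a successful TCP connection only shows that something is listening, not that it is PD.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def check_ports(host: str, ports, timeout: float = 2.0) -> dict:
    """Map each port to whether it accepts TCP connections on host."""
    return {port: port_is_open(host, port, timeout) for port in ports}
```

For the PD instance started above, the call would be `check_ports("172.25.254.21", [2379, 2380])`, where 2379 is the client port and 2380 is the peer port.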

3, Extract and start TiKV (server12, server13, server14)

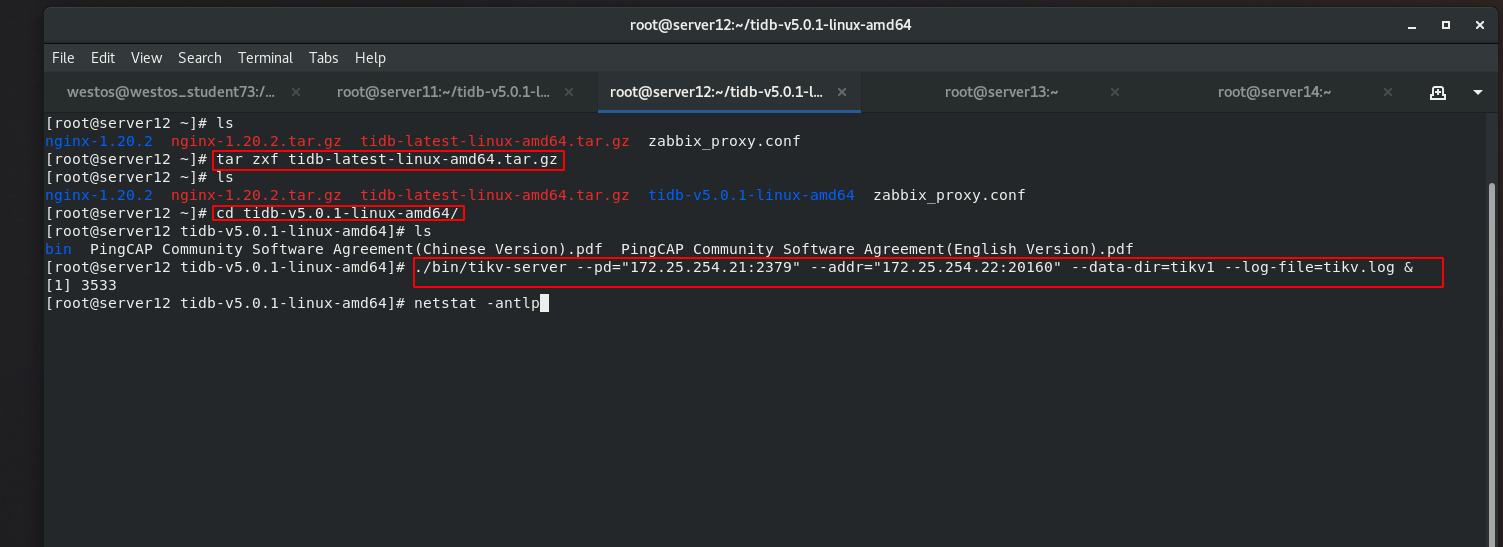

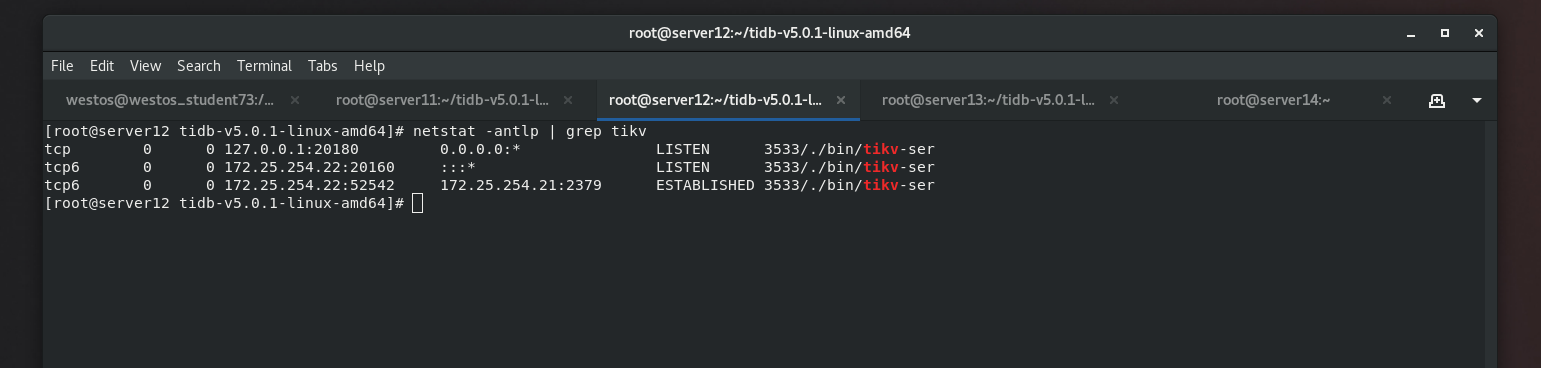

server12 configuration:

    [root@server12 ~]# ls
    nginx-1.20.2  nginx-1.20.2.tar.gz  tidb-latest-linux-amd64.tar.gz  zabbix_proxy.conf
    [root@server12 ~]# tar zxf tidb-latest-linux-amd64.tar.gz
    [root@server12 ~]# ls
    nginx-1.20.2  nginx-1.20.2.tar.gz  tidb-latest-linux-amd64.tar.gz  tidb-v5.0.1-linux-amd64  zabbix_proxy.conf
    [root@server12 ~]# cd tidb-v5.0.1-linux-amd64/
    [root@server12 tidb-v5.0.1-linux-amd64]# ls
    bin  PingCAP Community Software Agreement(Chinese Version).pdf  PingCAP Community Software Agreement(English Version).pdf
    [root@server12 tidb-v5.0.1-linux-amd64]# ./bin/tikv-server --pd="172.25.254.21:2379" --addr="172.25.254.22:20160" --data-dir=tikv1 --log-file=tikv.log &
    [1] 3533
    [root@server12 tidb-v5.0.1-linux-amd64]# netstat -antlp

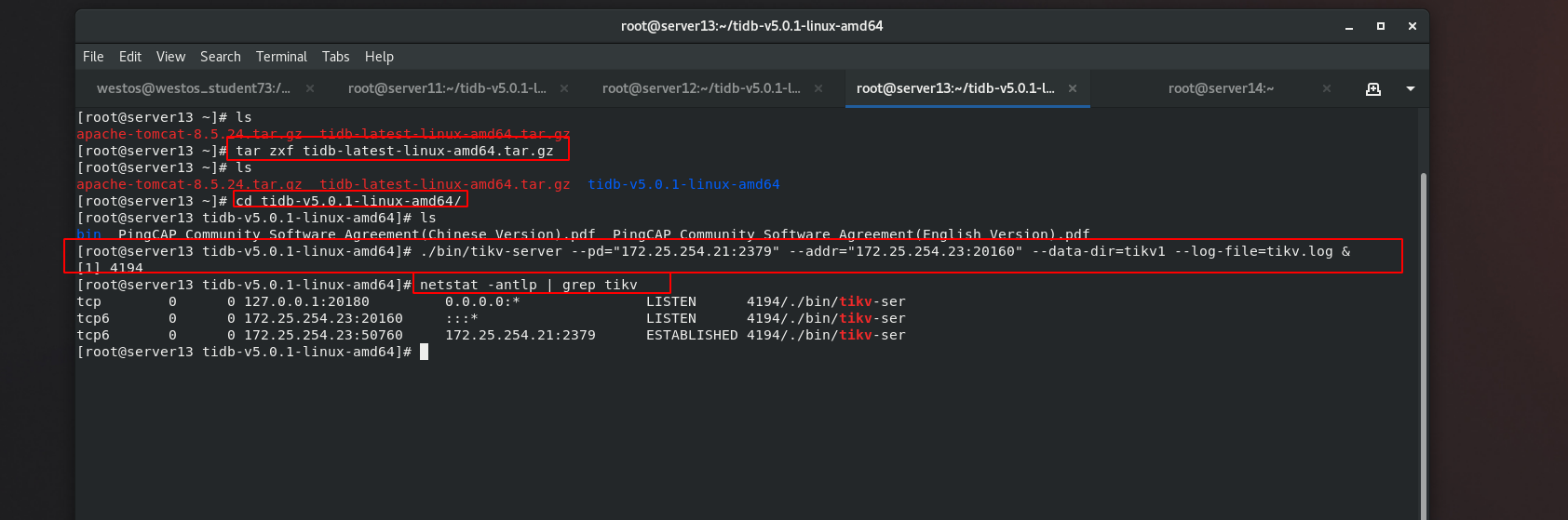

server13 configuration:

    [root@server13 ~]# ls
    apache-tomcat-8.5.24.tar.gz  tidb-latest-linux-amd64.tar.gz
    [root@server13 ~]# tar zxf tidb-latest-linux-amd64.tar.gz
    [root@server13 ~]# ls
    apache-tomcat-8.5.24.tar.gz  tidb-latest-linux-amd64.tar.gz  tidb-v5.0.1-linux-amd64
    [root@server13 ~]# cd tidb-v5.0.1-linux-amd64/
    [root@server13 tidb-v5.0.1-linux-amd64]# ls
    bin  PingCAP Community Software Agreement(Chinese Version).pdf  PingCAP Community Software Agreement(English Version).pdf
    [root@server13 tidb-v5.0.1-linux-amd64]# ./bin/tikv-server --pd="172.25.254.21:2379" --addr="172.25.254.23:20160" --data-dir=tikv1 --log-file=tikv.log &
    [1] 4194
    [root@server13 tidb-v5.0.1-linux-amd64]# netstat -antlp | grep tikv
    tcp        0      0 127.0.0.1:20180         0.0.0.0:*               LISTEN      4194/./bin/tikv-ser
    tcp6       0      0 172.25.254.23:20160     :::*                    LISTEN      4194/./bin/tikv-ser
    tcp6       0      0 172.25.254.23:50760     172.25.254.21:2379      ESTABLISHED 4194/./bin/tikv-ser
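
The TiKV start command is identical on every node except for the `--addr` value. As a rough sketch, the per-node command line could be generated as shown below. The flags and values are the ones used in the transcripts above; the helper name `tikv_command` and the constants are hypothetical, and the script only builds the command, it does not run it.

```python
import shlex

PD_ENDPOINT = "172.25.254.21:2379"                # PD client address from the PD step
TIKV_HOSTS = ["172.25.254.22", "172.25.254.23"]   # server12 and server13


def tikv_command(host, pd=PD_ENDPOINT, port=20160):
    """Build the tikv-server argument list for one node."""
    return [
        "./bin/tikv-server",
        f"--pd={pd}",
        f"--addr={host}:{port}",
        "--data-dir=tikv1",
        "--log-file=tikv.log",
    ]


if __name__ == "__main__":
    for host in TIKV_HOSTS:
        # Print a shell-ready command line for each TiKV node.
        print(shlex.join(tikv_command(host)))
```

Keeping the PD address in one place makes it harder for nodes to end up registered with different PD endpoints.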

4, Start Zabbix and TiDB on server11

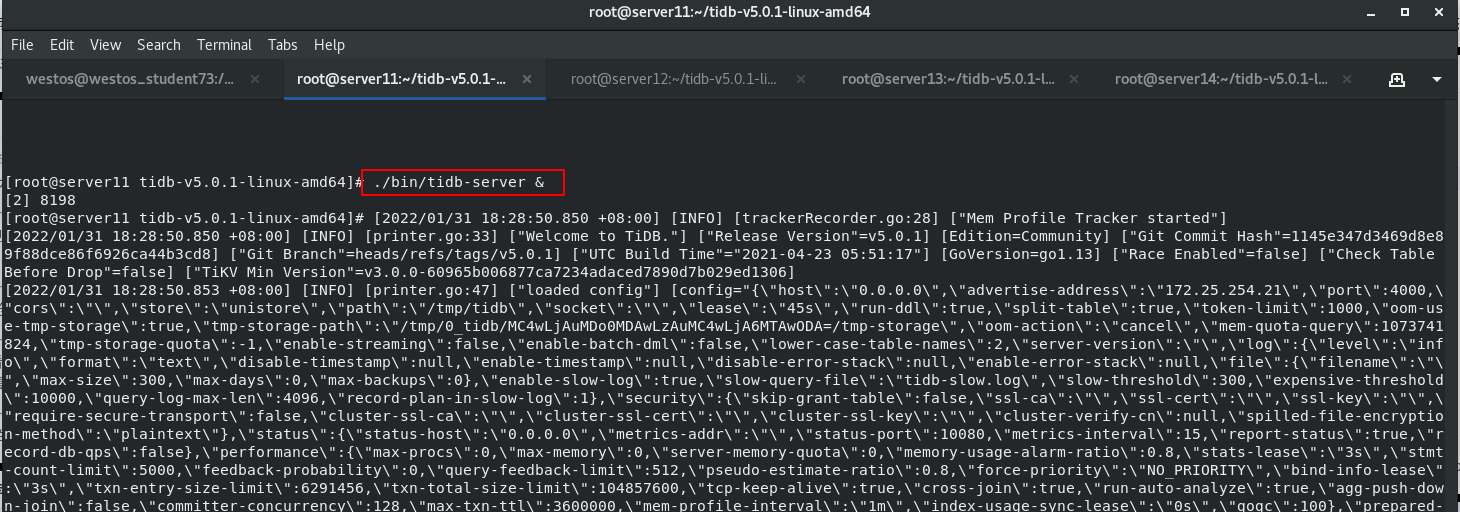

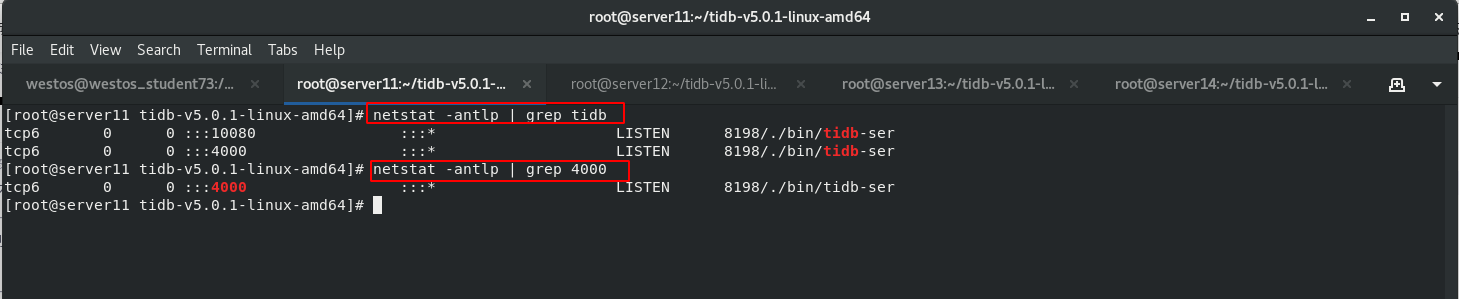

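Start the tidb-server service and run it in the background. The command below is shown as recorded, without flags. Note that the `--store` option of tidb-server defaults to the local `unistore` engine, so connecting to the PD and TiKV nodes started above normally requires passing `--store=tikv --path="172.25.254.21:2379"` as well.

    [root@server11 tidb-v5.0.1-linux-amd64]# ./bin/tidb-server &
    [root@server11 tidb-v5.0.1-linux-amd64]# netstat -antlp | grep tidb
    tcp6       0      0 :::10080                :::*                    LISTEN      8198/./bin/tidb-ser
    tcp6       0      0 :::4000                 :::*                    LISTEN      8198/./bin/tidb-ser
    [root@server11 tidb-v5.0.1-linux-amd64]# netstat -antlp | grep 4000
    tcp6       0      0 :::4000                 :::*                    LISTEN      8198/./bin/tidb-ser
    [root@server11 tidb-v5.0.1-linux-amd64]#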

5, Data import (create the database in TiDB)

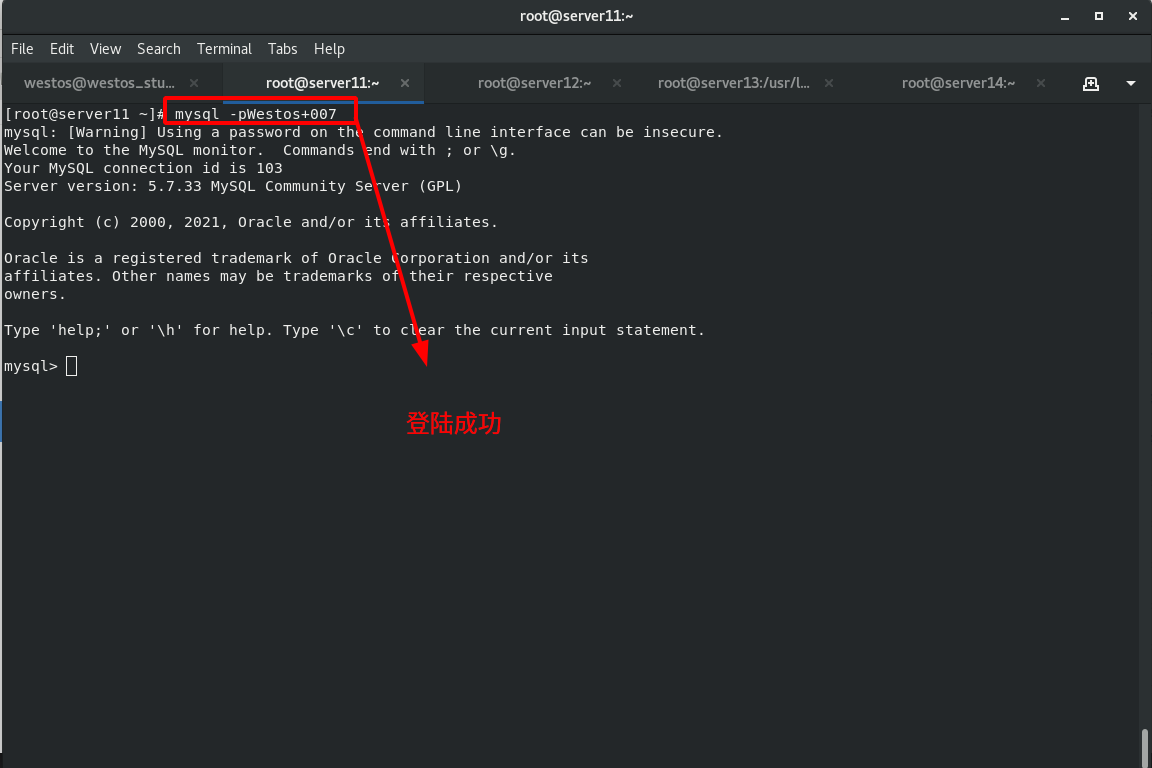

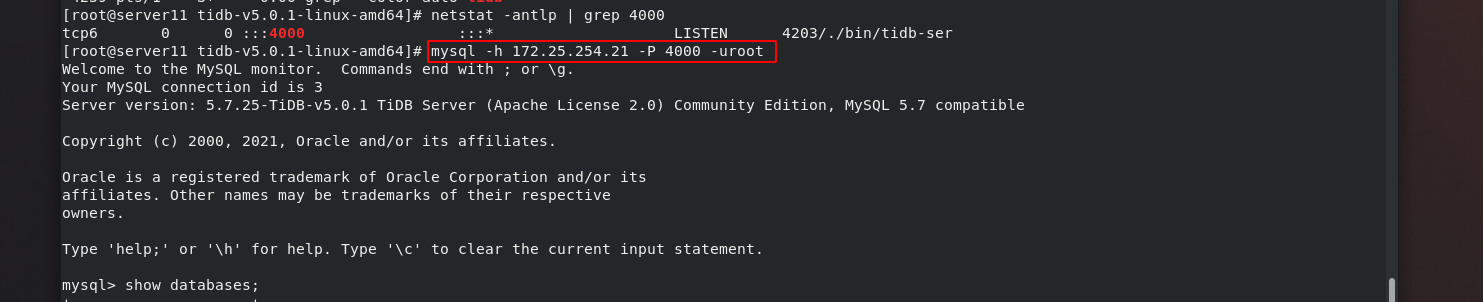

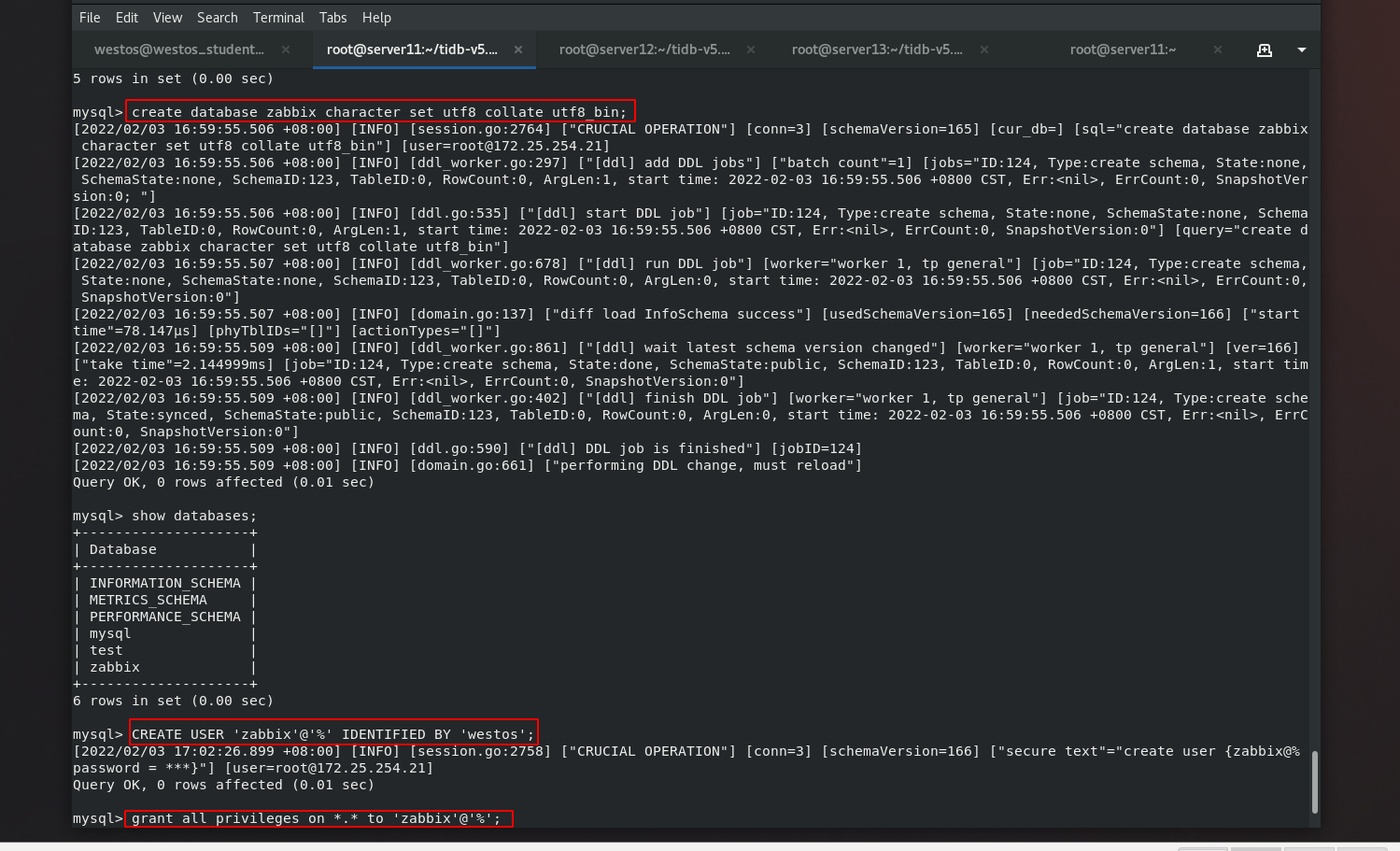

1. Connect to TiDB with the MySQL client

    [root@server11 tidb-v5.0.1-linux-amd64]# mysql -h 172.25.254.21 -P 4000 -uroot
    Welcome to the MySQL monitor.  Commands end with ; or \g.
    Your MySQL connection id is 3
    Server version: 5.7.25-TiDB-v5.0.1 TiDB Server (Apache License 2.0) Community Edition, MySQL 5.7 compatible
    Copyright (c) 2000, 2021, Oracle and/or its affiliates.
    Oracle is a registered trademark of Oracle Corporation and/or its
    affiliates. Other names may be trademarks of their respective
    owners.
    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
    mysql> create database zabbix character set utf8 collate utf8_bin;
    mysql> CREATE USER 'zabbix'@'%' IDENTIFIED BY 'westos';
    mysql> grant all privileges on *.* to 'zabbix'@'%';

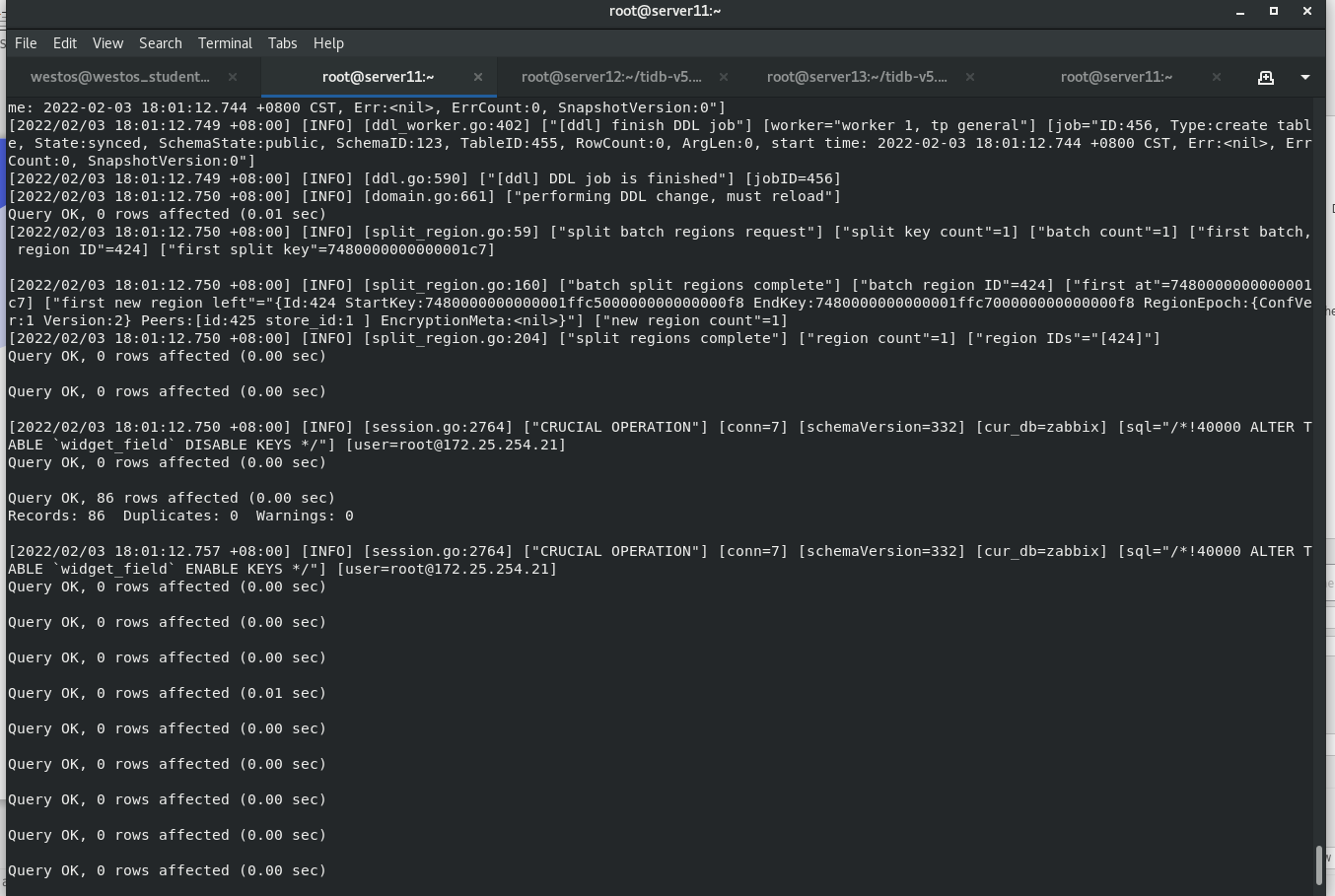

2. Import data into the database

First, dump the zabbix database that was previously imported into MySQL:

    [root@server1 zabbix-server-mysql-4.0.5]# mysqldump -uroot -pwestos zabbix >/mnt/zabbix.sql

Then import the dump into the zabbix database in TiDB:

    [root@server1 mnt]# mysql -h 172.25.4.111 -P 4000 -uroot
    MySQL [(none)]> use zabbix;
    Database changed
    MySQL [zabbix]> set tidb_batch_insert=1;
    MySQL [zabbix]> source /mnt/zabbix.sql;

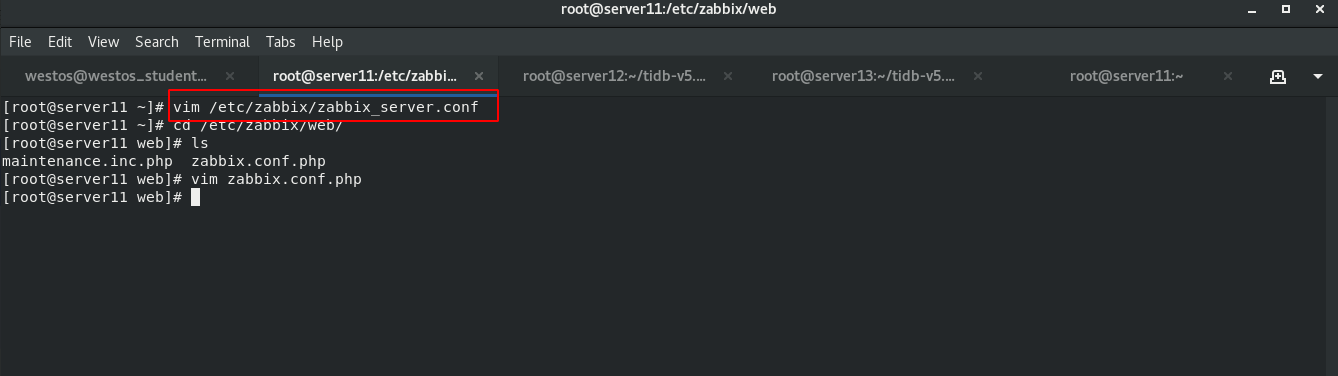

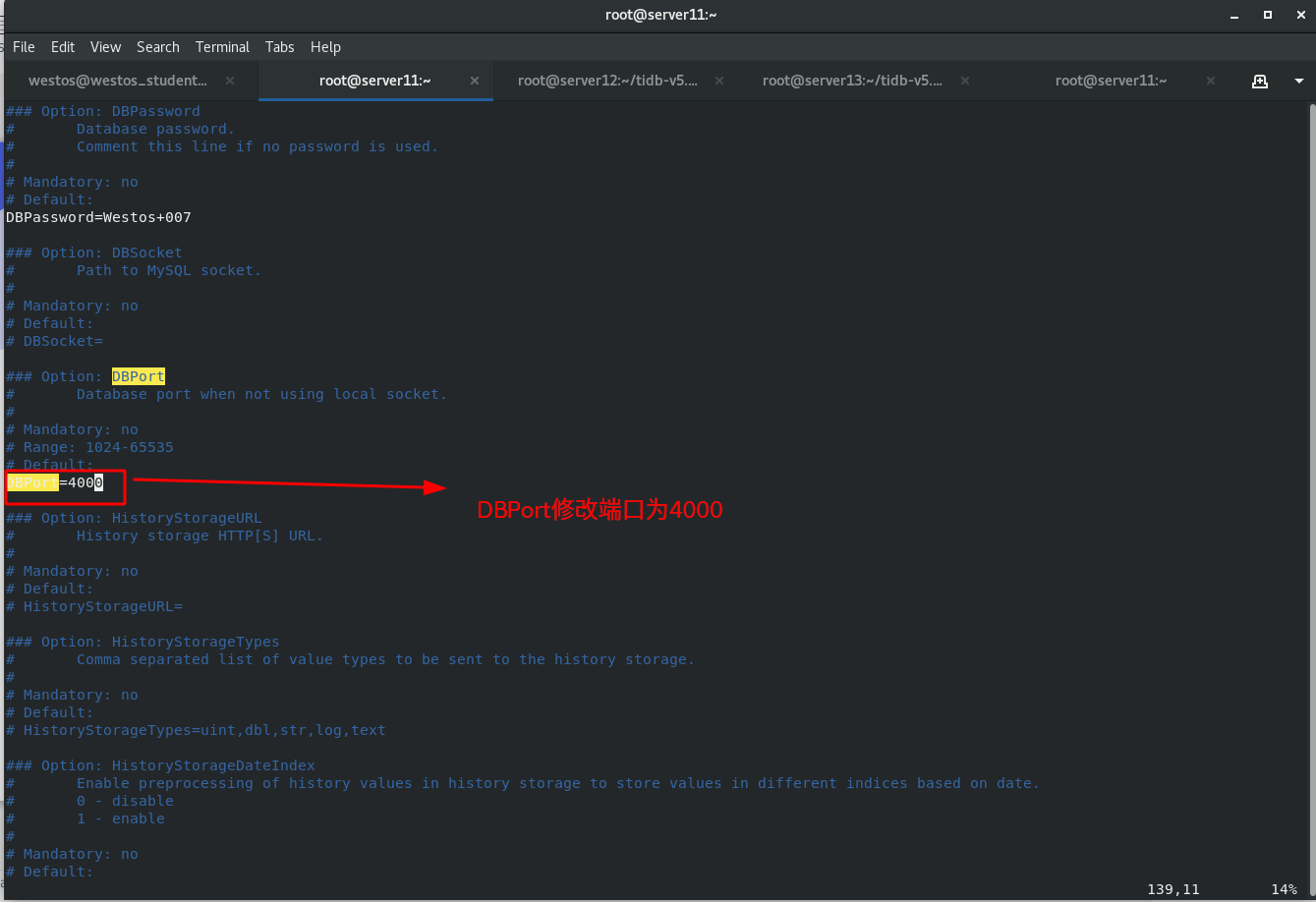

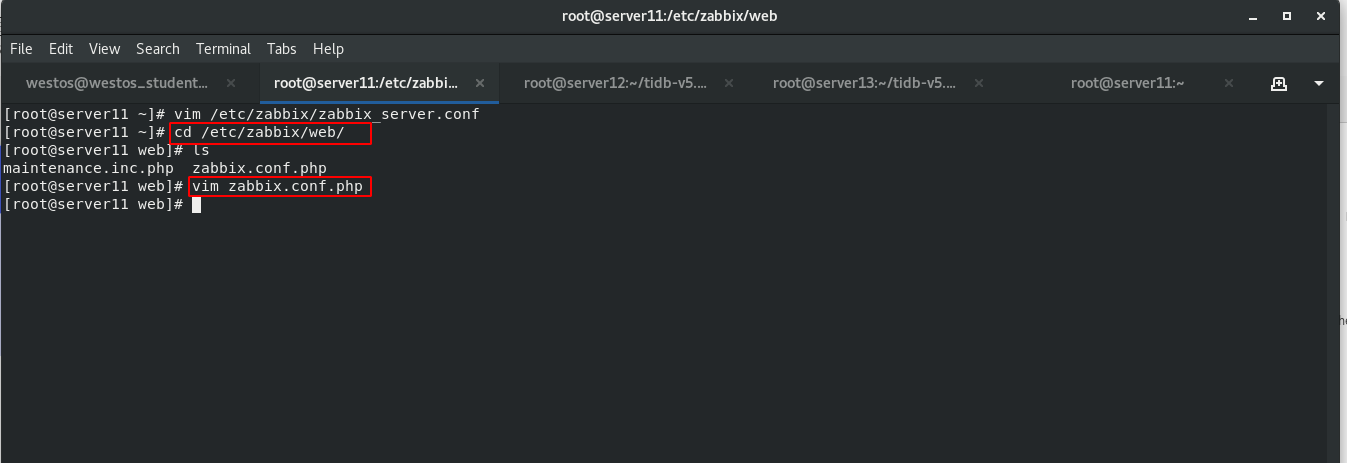

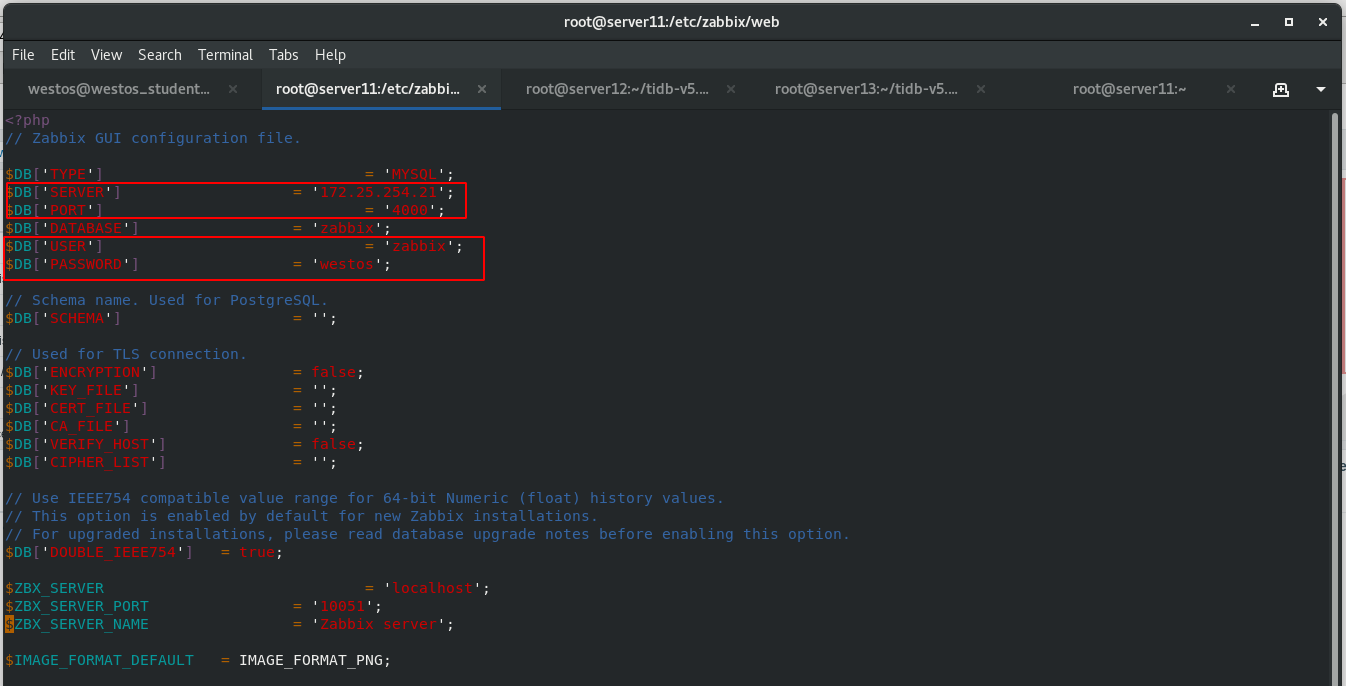

3. Modify the configuration files on server11

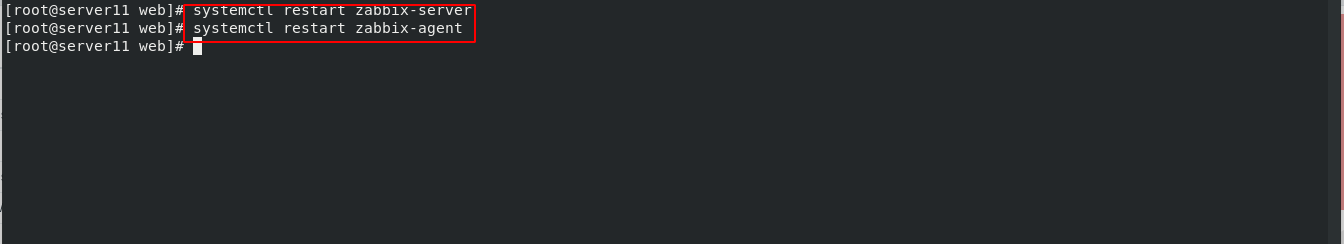

Point the Zabbix server and the web front end at TiDB on port 4000:

    [root@server11 ~]# vim /etc/zabbix/zabbix_server.conf
    DBPort=4000
    [root@server11 ~]# cd /etc/zabbix/web/
    [root@server11 web]# vim zabbix.conf.php
    $DB['TYPE']     = 'MYSQL';
    $DB['SERVER']   = '172.25.254.21';
    $DB['PORT']     = '4000';
    $DB['DATABASE'] = 'zabbix';
    $DB['USER']     = 'zabbix';
    $DB['PASSWORD'] = 'westos';

Restart the services:

    [root@server11 web]# systemctl restart zabbix-server
    [root@server11 web]# systemctl restart zabbix-agent
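
Before restarting the services, it can be worth confirming that the server configuration really points at the TiDB port. The sketch below parses `Key=Value` lines as they appear in `zabbix_server.conf`; the sample text is a hypothetical excerpt built from the values used in this article, and the function names are illustrative.

```python
def parse_zabbix_conf(text: str) -> dict:
    """Parse 'Key=Value' lines, skipping blank lines and '#' comments."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            settings[key.strip()] = value.strip()
    return settings


def points_at_tidb(settings: dict, tidb_port: int = 4000) -> bool:
    """True if DBPort is set to the TiDB SQL port."""
    return settings.get("DBPort") == str(tidb_port)


# Hypothetical excerpt of /etc/zabbix/zabbix_server.conf.
SAMPLE = """\
# Database settings
DBName=zabbix
DBUser=zabbix
DBPassword=westos
DBPort=4000
"""

if __name__ == "__main__":
    print(points_at_tidb(parse_zabbix_conf(SAMPLE)))
```

To check the real file, read `/etc/zabbix/zabbix_server.conf` and pass its contents to `parse_zabbix_conf` in place of the sample.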

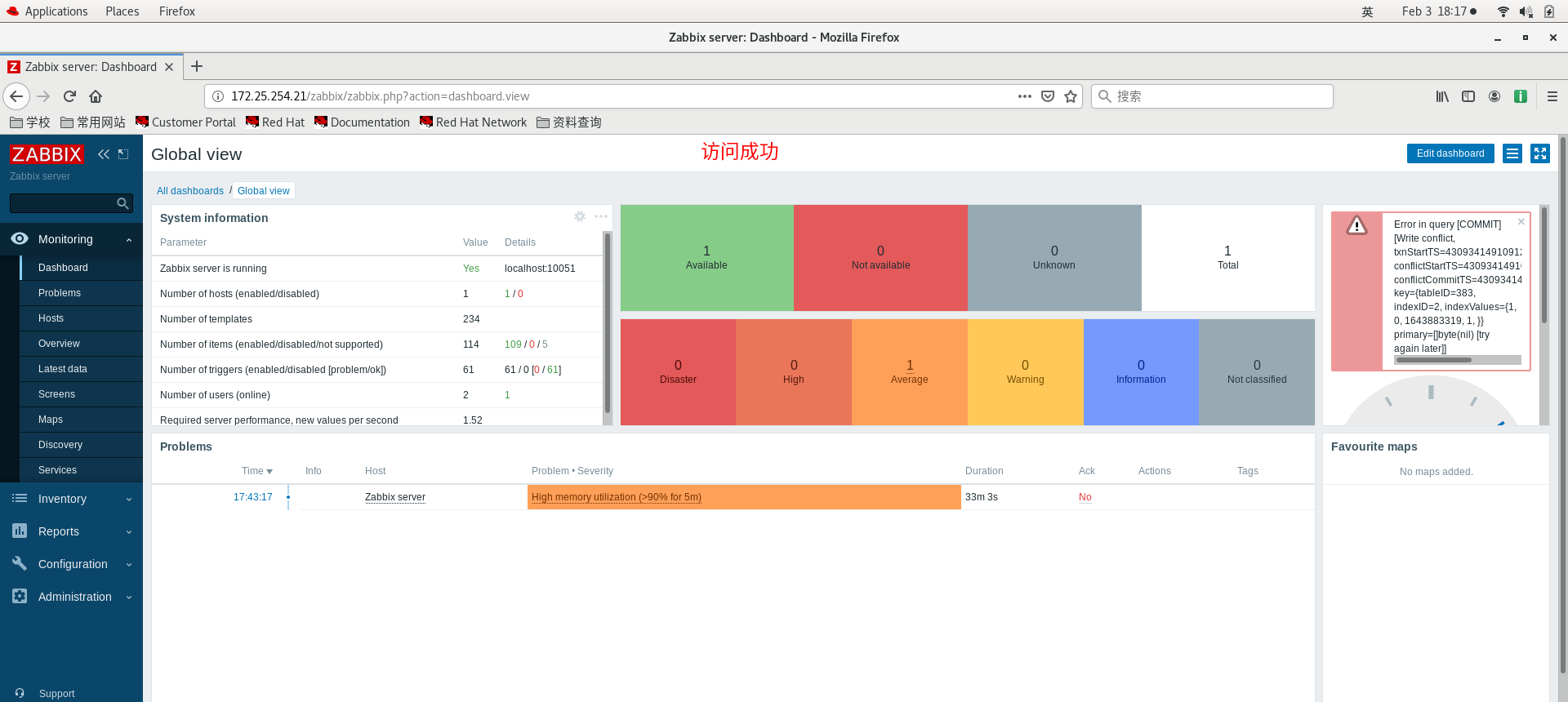

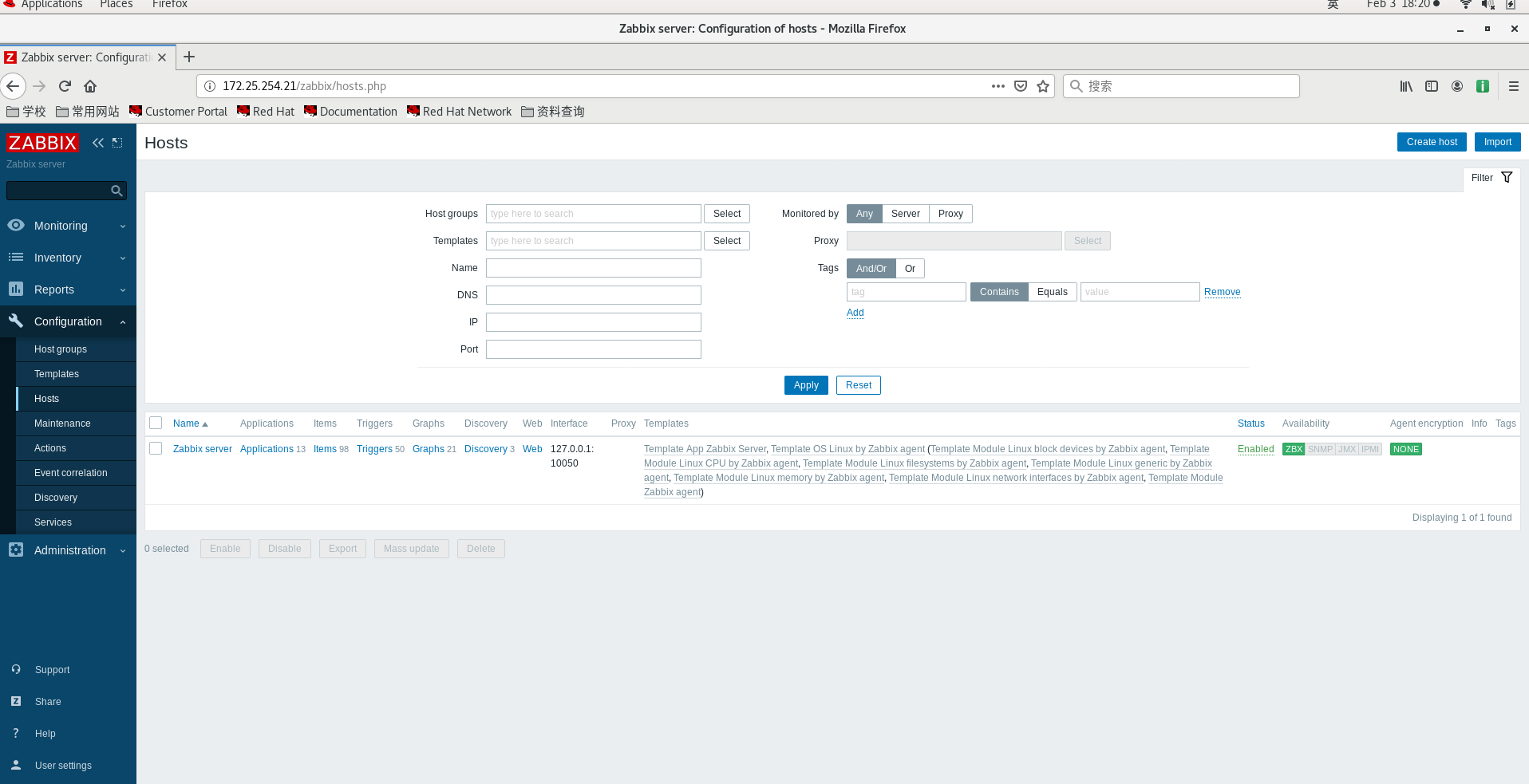

4. Browser access for testing

At this point, Zabbix is reading from the TiDB database rather than from MariaDB.
