The model-based testing system is a subproject of ZStack Woodpecker. Using a finite state machine and an action selection strategy, it generates random API operations and keeps running until it finds a defect or meets a predefined exit condition. ZStack relies on model-based testing to exercise corner cases that rarely occur in the real world, and it complements integration testing and system testing in terms of test coverage.

Overview

Test coverage is an important indicator of the quality of a testing system. Conventional testing methodologies, such as unit testing, integration testing, and system testing, are all built on human logical thinking, which makes it hard for them to cover corner cases in software. The problem is even more pronounced in IaaS software, because managing many different subsystems leads to extremely complex scenarios. ZStack addresses this problem by introducing model-based testing, which generates scenarios composed of random API combinations and keeps running until a predefined exit condition is met or a defect is found. As machine-driven testing, model-based testing overcomes the blind spots of human logical thinking: it performs tests that seem illogical to a human but are entirely valid at the API level, which helps uncover corner-case problems that human-led testing rarely finds.

An example helps illustrate the idea. The model-based testing system once exposed a bug after executing about 200 API calls. After debugging, we found the minimal sequence that reproduces the problem:

1. Create a VM

2. Stop the VM

3. Create a volume snapshot of the VM's root volume

4. Create a data volume template from the VM's root volume

5. Destroy the VM

6. Create a new data volume from the template created in step 4

7. Create a volume snapshot of the data volume created in step 6

This sequence of operations is clearly illogical; we believe no tester would write an integration or system test case that does this. This is where machine thinking shines: it has no human feelings and will do things that humans find unreasonable. After finding the bug, we created a regression test to guard against this problem in the future.

Model-based testing system

The model-based testing system is also called the robot test because it is driven by a machine. While running, the system moves from one model (also called a stage or phase in the following sections) to the next by performing actions (also called operations) chosen by the action selection strategy. After each model is completed, a checker verifies the test results and evaluates the exit conditions. If any failure is found or an exit condition is met, the system exits; otherwise it moves to the next model and repeats.
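
The loop described above can be sketched in a few lines. This is only an illustration, not Woodpecker's actual code: the function `run_robot` and all of its callback parameters (`candidates_for`, `select`, `execute`, `check`, `should_exit`) are hypothetical names introduced here.

```python
import random

def run_robot(initial_stage, candidates_for, select, execute, check, should_exit):
    """Move from stage to stage until a failure or an exit condition stops the run."""
    stage = initial_stage
    history = []
    while True:
        actions = candidates_for(stage)      # candidate operations for this stage
        if not actions:
            return "no_actions", history
        action = select(actions, history)    # the action selection strategy decides
        stage = execute(stage, action)       # perform the operation, reach the next stage
        history.append(action)
        if not check(stage):                 # the checker verifies the test results
            return "failure", history
        if should_exit(stage, history):      # a predefined exit condition is met
            return "exit_condition", history


# Toy usage: the "system" is a counter, and the run exits after five operations.
result, history = run_robot(
    initial_stage=0,
    candidates_for=lambda stage: ["increment"],
    select=lambda actions, history: random.choice(actions),
    execute=lambda stage, action: stage + 1,
    check=lambda stage: stage >= 0,
    should_exit=lambda stage, history: len(history) >= 5,
)
print(result, len(history))  # exit_condition 5
```

Keeping the strategy, the checker, and the exit condition as separate callbacks mirrors the description: each of them can be swapped without touching the loop.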

Finite state machine

In model-based testing theory, there are many ways to generate test operations, for example finite state machines, theorem proving, and model checking. We chose finite state machines because they are a natural fit for IaaS software, in which every resource is driven by states. For example, from the user's perspective, a VM moves between states such as running, stopped, and destroyed.

In the model-based testing system, every state of every resource is predefined in test_state.py, which looks like this:

    # VM lifecycle states
    vm_state_dict = {
        Any: 1,
        vm_header.RUNNING: 2,
        vm_header.STOPPED: 3,
        vm_header.DESTROYED: 4
    }

    # Volume attachment states of a VM
    vm_volume_state_dict = {
        Any: 10,
        vm_no_volume_att: 20,
        vm_volume_att_not_full: 30,
        vm_volume_att_full: 40
    }

    # Data volume states
    volume_state_dict = {
        Any: 100,
        free_volume: 200,
        no_free_volume: 300
    }

    # Image states
    image_state_dict = {
        Any: 1000,
        no_new_template_image: 2000,
        new_template_image: 3000
    }

The states of all resources in the system together constitute a stage (model). The system moves from one stage to the next by performing operations that are maintained in a transition table. A stage is defined something like this:

    class TestStage(object):
        '''
        Test states definition and Test state transition matrix.
        '''
        def __init__(self):
            self.vm_current_state = 0
            self.vm_volume_current_state = 0
            self.volume_current_state = 0
            self.image_current_state = 0
            self.sg_current_state = 0
            self.vip_current_state = 0
            self.sp_current_state = 0
            self.snapshot_live_cap = 0
            self.volume_vm_current_state = 0
            ...

A stage can be expressed as an integer: the sum of the values of all states in that stage. With this integer, we can look up the candidate next operations in the transition table. An example of a transition table is as follows:

    # State transition table for vm_state, volume_state and image_state
    normal_action_transition_table = {
        Any: [ta.create_vm, ta.create_volume, ta.idel],
        2: [ta.stop_vm, ta.reboot_vm, ta.destroy_vm, ta.migrate_vm],
        3: [ta.start_vm, ta.destroy_vm, ta.create_image_from_volume, ta.create_data_vol_template_from_volume],
        4: [],
        211: [ta.delete_volume],
        222: [ta.attach_volume, ta.delete_volume],
        223: [ta.attach_volume, ta.delete_volume],
        224: [ta.delete_volume],
        232: [ta.attach_volume, ta.detach_volume, ta.delete_volume],
        233: [ta.attach_volume, ta.detach_volume, ta.delete_volume],
        234: [ta.delete_volume],
        244: [ta.delete_volume],
        321: [],
        332: [ta.detach_volume, ta.delete_volume],
        333: [ta.detach_volume, ta.delete_volume],
        334: [],
        342: [ta.detach_volume, ta.delete_volume],
        343: [ta.detach_volume, ta.delete_volume],
        344: [],
        3000: [ta.delete_image, ta.create_data_volume_from_image]
    }

In this way, the model-based testing system keeps running, moving from one stage to the next, until it meets a predefined exit condition or finds a defect. It can run for days and call the API tens of thousands of times.
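
As a worked example of the lookup, the sketch below sums three state values and uses the result as a key into an excerpt of the transition table. The numeric values and the two table rows are copied from the listings above; the helper names `stage_value` and `candidate_actions` are hypothetical, and plain strings stand in for the `ta.*` actions so the snippet runs on its own.

```python
# State values copied from the state dictionaries shown earlier.
VM_RUNNING = 2                # vm_state_dict[vm_header.RUNNING]
VM_VOLUME_ATT_NOT_FULL = 30   # vm_volume_state_dict[vm_volume_att_not_full]
FREE_VOLUME = 200             # volume_state_dict[free_volume]

# Excerpt of the transition table; strings replace the ta.* action constants.
transition_table = {
    232: ["attach_volume", "detach_volume", "delete_volume"],
    332: ["detach_volume", "delete_volume"],
}

def stage_value(*state_values):
    """A stage is represented by the sum of its state values."""
    return sum(state_values)

def candidate_actions(table, value):
    """Return the operations listed for a stage, or an empty list if none are listed."""
    return table.get(value, [])

value = stage_value(VM_RUNNING, VM_VOLUME_ATT_NOT_FULL, FREE_VOLUME)
print(value)                                       # 232
print(candidate_actions(transition_table, value))  # ['attach_volume', 'detach_volume', 'delete_volume']
```

Because each dictionary in the listing uses a different order of magnitude (ones, tens, hundreds, thousands), every state keeps its own decimal digit in the sum, so different combinations do not collide.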

Action selection strategy

When moving between stages, the model-based testing system needs to decide which operation to perform next. The decision maker is called the action selection strategy. It is an extensible plug-in engine, so different selection algorithms can be implemented for different purposes. The system currently has three strategies:

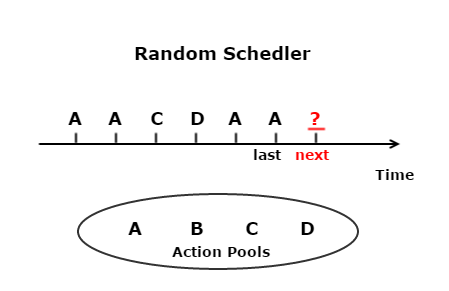

- Random scheduler: the simplest strategy, which randomly selects the next operation from the candidate operations of the current stage. Because the algorithm is so straightforward, the random scheduler may repeat one operation while starving others. To mitigate this, we added a weight to each operation, so that testers can give higher weights to the operations they want to test more.

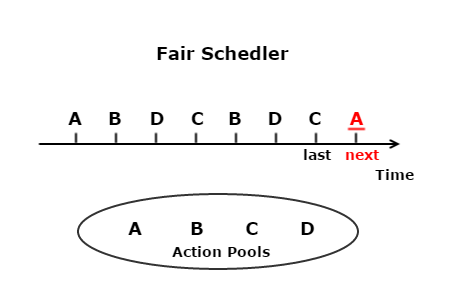

- Fair scheduler: a strategy that treats every operation equally. It complements the random scheduler by giving every operation an equal chance of being executed, which ensures that every operation is tested as long as the test runs long enough.

- Path coverage scheduler: a strategy that chooses the next operation based on historical data. It remembers every operation path that has been executed and tries to select an operation that forms a new path. For example, given candidate operations A, B, C, and D, if the previous operation is B and the paths BA, BB, and BC have already been executed, the strategy selects D as the next operation so that the path BD gets tested.
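
The fair and path coverage strategies can be sketched as two small functions. This is an illustration under assumptions, not the Woodpecker implementation: "fair" is interpreted here as "pick the operation that has run least often", a path is modeled as a pair of consecutive operations, and the names `fair_pick`, `path_coverage_pick`, `run_counts`, and `executed_paths` are hypothetical.

```python
import random

def fair_pick(candidates, run_counts):
    """Fair scheduler (assumed reading): pick the candidate executed least often so far."""
    return min(candidates, key=lambda action: run_counts.get(action, 0))

def path_coverage_pick(candidates, previous, executed_paths, rng=random):
    """Path coverage scheduler: prefer an operation that forms a path not executed yet."""
    fresh = [a for a in candidates if (previous, a) not in executed_paths]
    # Fall back to any candidate once every path from `previous` has been covered.
    return rng.choice(fresh or candidates)

# The example from the list above: previous operation B, paths BA, BB and BC already run.
candidates = ["A", "B", "C", "D"]
executed = {("B", "A"), ("B", "B"), ("B", "C")}
print(path_coverage_pick(candidates, "B", executed))            # D
print(fair_pick(candidates, {"A": 3, "B": 1, "C": 2, "D": 1}))  # B
```

In the second call B and D are tied, and `min` returns the first of the tied candidates; a real scheduler might break such ties randomly.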

As mentioned above, the action selection strategy is an extensible plug-in engine, and every strategy derives from the class ActionSelector, which looks like this:

    class ActionSelector(object):
        def __init__(self, action_list, history_actions, priority_actions):
            self.history_actions = history_actions
            self.action_list = action_list
            self.priority_actions = priority_actions

        def select(self):
            '''
            New Action Selector need to implement own select() function.
            '''
            pass

        def get_action_list(self):
            return self.action_list

        def get_priority_actions(self):
            return self.priority_actions

        def get_history_actions(self):
            return self.history_actions
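
To show how a strategy plugs into this base class, here is a minimal sketch of a weighted random selector. The subclass `WeightedRandomSelector` is hypothetical, and so is the way it interprets `priority_actions` (as a list of operations that are twice as likely to be picked); the real random scheduler may weight operations differently. The base class is repeated in condensed form only so that the snippet runs on its own.

```python
import random

class ActionSelector(object):
    # Condensed copy of the base class shown above.
    def __init__(self, action_list, history_actions, priority_actions):
        self.history_actions = history_actions
        self.action_list = action_list
        self.priority_actions = priority_actions

    def select(self):
        pass

    def get_action_list(self):
        return self.action_list

    def get_priority_actions(self):
        return self.priority_actions

    def get_history_actions(self):
        return self.history_actions


class WeightedRandomSelector(ActionSelector):
    """Hypothetical strategy: priority actions get weight 2, all others weight 1."""

    def select(self):
        actions = self.get_action_list()
        priority = self.get_priority_actions()
        weights = [2 if action in priority else 1 for action in actions]
        # random.choices returns a list of k picks; take the single pick.
        return random.choices(actions, weights=weights, k=1)[0]


selector = WeightedRandomSelector(
    action_list=["stop_vm", "reboot_vm", "destroy_vm"],
    history_actions=[],
    priority_actions=["reboot_vm"],
)
print(selector.select())  # one of the three actions, reboot_vm being the most likely
```

A new strategy only has to override `select()`; the candidate list, the history, and the priorities are already available through the getters.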

Exit condition

Exit conditions must be set before starting the model-based testing system; otherwise the system keeps running until it finds a defect or the log files fill up the test machine's disk. An exit condition can take any form, for example: exit after running for 24 hours, exit after 100 EIPs have been created in the system, or exit when there are 2 stopped VMs and 8 running VMs. It is entirely up to testers to define conditions that maximize the chance of finding defects.
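
The three example conditions can be expressed as small predicates. This is a sketch under assumptions: the `stats` dictionary and its keys (`eips_created`, `stopped_vms`, `running_vms`) are hypothetical, as are the helper names; how Woodpecker actually defines exit conditions is not shown in this article.

```python
import time

def after_hours(hours, started_at):
    """Exit once the run has lasted at least the given number of hours."""
    return lambda stats: time.time() - started_at >= hours * 3600

def eip_count_reached(limit):
    """Exit once at least `limit` EIPs have been created."""
    return lambda stats: stats.get("eips_created", 0) >= limit

def vm_mix(stopped, running):
    """Exit when the system holds exactly this mix of stopped and running VMs."""
    return lambda stats: (stats.get("stopped_vms") == stopped
                          and stats.get("running_vms") == running)

def should_exit(conditions, stats):
    """The run stops as soon as any configured condition holds."""
    return any(condition(stats) for condition in conditions)

conditions = [
    after_hours(24, started_at=time.time()),
    eip_count_reached(100),
    vm_mix(stopped=2, running=8),
]
print(should_exit(conditions, {"eips_created": 3, "stopped_vms": 2, "running_vms": 8}))  # True
print(should_exit(conditions, {"eips_created": 3, "stopped_vms": 0, "running_vms": 1}))  # False
```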

Failure replay

Debugging a failure found by the model-based testing system is difficult and frustrating. Most failures are exposed by a long sequence of operations that usually follows no logic and produces a huge amount of logs. We used to reproduce failures manually. After painfully calling the API 200 times with zstack-cli while following about 500,000 lines of logs, we realized that this miserable task is not meant for humans. So we built a tool that reproduces a failure by replaying the action log, which records only the API-related test information. An action log looks like this:

    Robot Action: create_vm
    Robot Action Result: create_vm; new VM: fc2c0221be72423ea303a522fd6570e9
    Robot Action: stop_vm; on VM: fc2c0221be72423ea303a522fd6570e9
    Robot Action: create_volume_snapshot; on Root Volume: fe839dcb305f471a852a1f5e21d4feda; on VM: fc2c0221be72423ea303a522fd6570e9
    Robot Action Result: create_volume_snapshot; new SP: 497ac6abaf984f5a825ae4fb2c585a88
    Robot Action: create_data_volume_template_from_volume; on Volume: fe839dcb305f471a852a1f5e21d4feda; on VM: fc2c0221be72423ea303a522fd6570e9
    Robot Action Result: create_data_volume_template_from_volume; new DataVolume Image: fb23cdfce4b54072847a3cfe8ae45d35
    Robot Action: destroy_vm; on VM: fc2c0221be72423ea303a522fd6570e9
    Robot Action: create_data_volume_from_image; on Image: fb23cdfce4b54072847a3cfe8ae45d35
    Robot Action Result: create_data_volume_from_image; new Volume: 20dee895d68b428a88e5ec3d3ef634d8
    Robot Action: create_volume_snapshot; on Volume: 20dee895d68b428a88e5ec3d3ef634d8

Testers can rebuild the failing environment by invoking the replay tool:

    robot_replay.py -f path_to_action_log
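
To give a feel for what a replay tool has to do first, the sketch below extracts the ordered list of operations from an action log. It is not the code of robot_replay.py: the function `parse_action_log` and the regular expression are hypothetical, and the parser assumes one log entry per line in the format of the sample log above.

```python
import re

# Matches "Robot Action: <name>..." but not "Robot Action Result: ..." lines.
ACTION_RE = re.compile(r"^Robot Action:\s*(?P<name>\w+)(?P<rest>.*)$")

def parse_action_log(lines):
    """Return (action_name, targets) pairs in the order they were executed."""
    actions = []
    for line in lines:
        match = ACTION_RE.match(line.strip())
        if not match:
            continue  # result lines and unrelated log lines are skipped
        targets = {}
        for part in match.group("rest").split(";"):
            part = part.strip()
            # Target fields look like "on VM: <uuid>" or "on Root Volume: <uuid>".
            if part.startswith("on ") and ":" in part:
                kind, uuid = part[3:].split(":", 1)
                targets[kind.strip()] = uuid.strip()
        actions.append((match.group("name"), targets))
    return actions

log = [
    "Robot Action: create_vm",
    "Robot Action Result: create_vm; new VM: fc2c0221be72423ea303a522fd6570e9",
    "Robot Action: stop_vm; on VM: fc2c0221be72423ea303a522fd6570e9",
]
for name, targets in parse_action_log(log):
    print(name, targets)
```

A real replay would presumably also read the `Robot Action Result` lines, because the resources created during a replay get new UUIDs that have to be mapped to the recorded ones.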

Summary

In this article, we introduced the model-based testing system. Because it is good at exposing problems in corner cases, the model-based testing system, together with the integration testing system and the system testing system, forms the foundation that safeguards the quality of ZStack, so that we can release products with pride and confidence.


Author: dingyu002

Source: dinyu002

The copyright belongs to the author. For commercial reprint, please contact the author for authorization. For non-commercial reprint, please indicate the source.