3D-SLAM on a self-built platform: active Ackermann chassis + RS16 + LPMS_IMU, LeGO-LOAM mapping

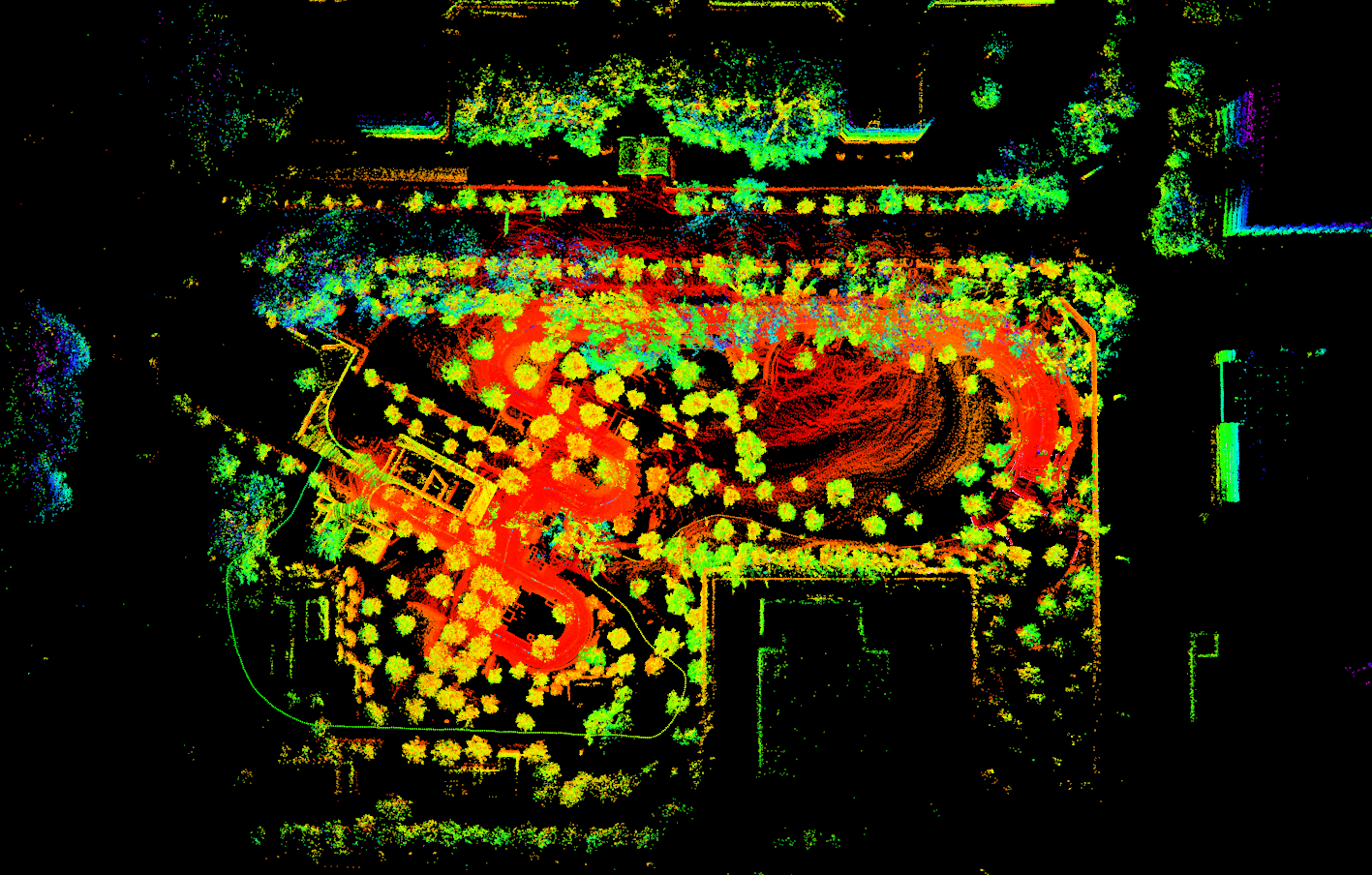

Results:

Video of the detailed mapping runs (indoor + outdoor):

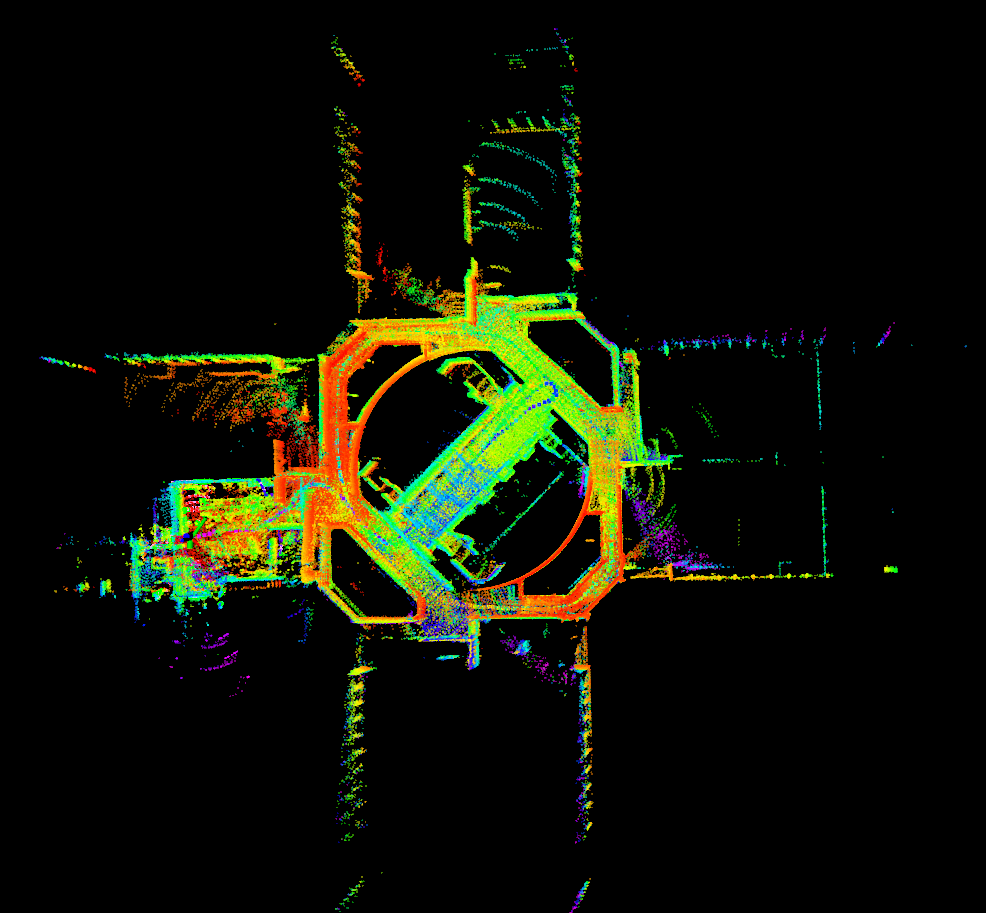

3D-SLAM self built platform active Ackerman + RS16 + LPMS_IMU LEGO_LOAM indoor construction drawing

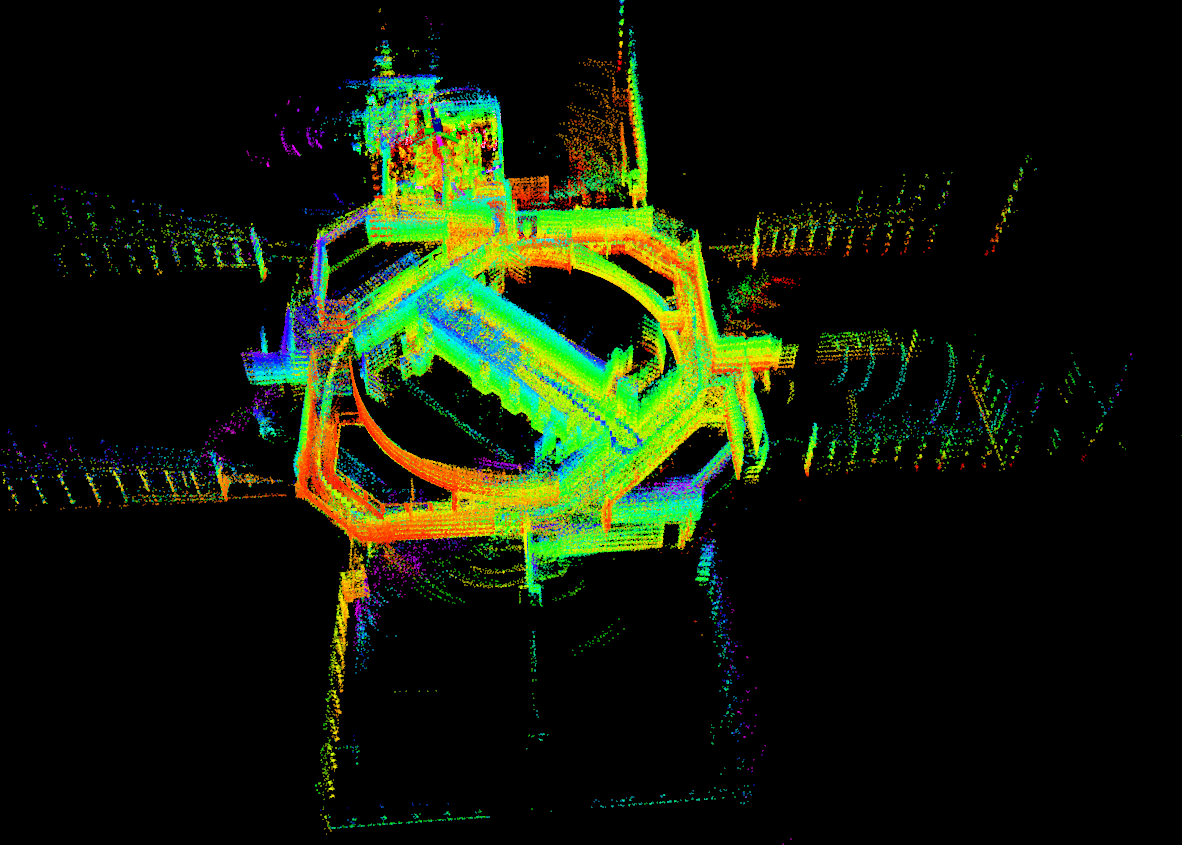

3D-SLAM self built platform active Ackerman + RS16 + LPMS_IMU LEGO_LOAM outdoor construction drawing

Complete code download link of the experiment: https://github.com/kahowang/3D-SLAM-Multiple-robot-platforms/tree/main/Active-Ackerman-Slam/%E4%B8%BB%E5%8A%A8%E9%98%BF%E5%85%8B%E6%9B%BC%2BRS16%2BLPMS_IMU%20%20LEGO_LOAM%20%E5%BB%BA%E5%9B%BE

Recorded dataset download link: https://pan.baidu.com/s/1IIGquusHYk_UmGeClXG9tA Password: fwo3

Self-built wheeled mobile platform for 3D SLAM data acquisition

LeGO-LOAM indoor mapping

LeGO-LOAM outdoor mapping, site: Shenzhen Cloud Park

Preface:

This experiment uses a mobile chassis and sensors we built ourselves to carry out LeGO-LOAM 3D-SLAM mapping. It is divided into three parts:

a. A summary of the LeGO-LOAM paper. (With thanks to the authors, Tixiao Shan and Brendan Englot, and to the bloggers who have published open-source walkthroughs online.)

b. Building the platform, collecting datasets, and running the mapping process. (For reference.)

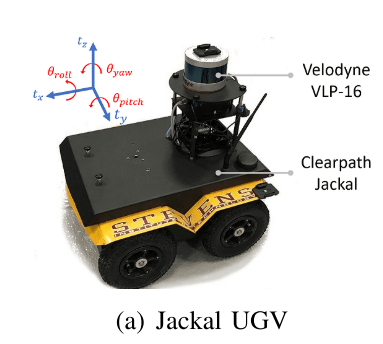

Existing equipment: RoboSense 16-line lidar, LPMS IMU, self-built active differential Ackermann chassis

c. Downloading and using the datasets. With the platform built, indoor and outdoor datasets were collected for everyone to try (they include the lidar point cloud topic, the IMU topic, and the camera topic).

1. LeGO-LOAM overview

1.1 paper abstract

We propose LeGO-LOAM, a lightweight and ground-optimized lidar odometry and mapping method for real-time six-degree-of-freedom pose estimation of ground vehicles. LeGO-LOAM is lightweight because it achieves real-time pose estimation on a low-power embedded system. LeGO-LOAM is ground-optimized because it makes use of the ground constraint in its segmentation and optimization steps. We first apply point cloud segmentation to filter out noise, then extract features to obtain distinctive planar and edge features. A two-step Levenberg-Marquardt optimization then uses the planar and edge features to solve for different components of the six-degree-of-freedom transformation between consecutive scans. We compare the performance of LeGO-LOAM with the state-of-the-art LOAM method using datasets collected by ground vehicles in variable-terrain environments. The results show that LeGO-LOAM achieves similar or better accuracy with reduced computational overhead. To eliminate the pose estimation error caused by drift, we also integrate LeGO-LOAM into a SLAM framework and test it on the KITTI dataset.


1.2 system performance

The paper mainly compares its method with LOAM. Relative to LOAM, it has the following five characteristics:

a. Lightweight and can run in real time on embedded devices

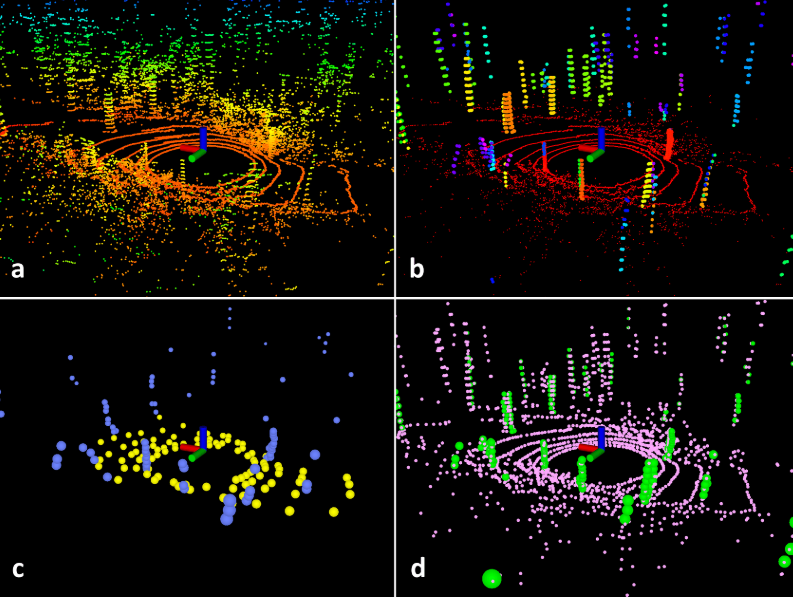

b. Ground-optimized: a segmentation module is added to the point cloud processing stage, which removes the interference of ground points so that features are extracted only from clustered targets. It consists mainly of ground extraction (the ground is not assumed to be a plane) and point cloud segmentation. Extracting feature points from the filtered point cloud greatly improves efficiency. (As shown in the following figure, ground segmentation is performed before feature extraction.)

c. When extracting feature points, the point cloud is divided into small blocks and feature points are extracted from each block separately, which keeps the feature points evenly distributed. When matching feature points, the segmentation labels produced during preprocessing are used as a filter (the point cloud is divided into an edge-point class and a plane-point class), which improves efficiency again.

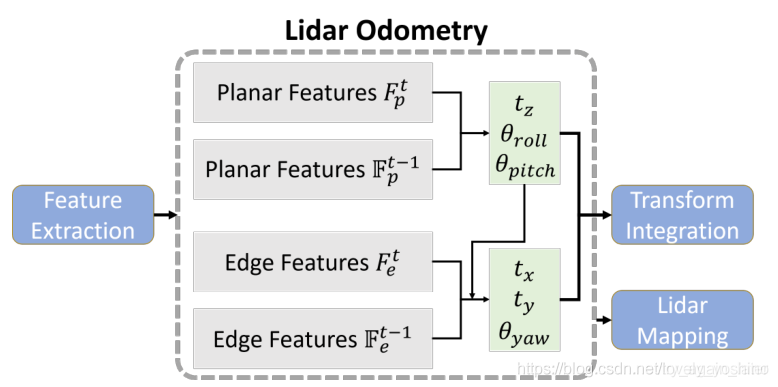

d. A two-step L-M optimization estimates the 6-DOF pose of the odometry. In the first step, the plane features extracted from the ground are used to obtain the height and the two ground-related angles: t_z, theta_roll, theta_pitch. In the second step, the remaining three variables t_x, t_y, theta_yaw are obtained by matching the edge features extracted from the segmented point cloud. The matching method is scan-to-scan. Optimizing the two groups separately in this way improves efficiency by 40% with no loss of accuracy.

e. Integrated loop closure detection, which corrects motion-estimation drift. (gtsam is used for loop closure and graph optimization, but the loop detection itself is still essentially based on Euclidean distance; there is no global descriptor.)
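To make item e more concrete, here is a minimal sketch of loop detection based purely on Euclidean distance between keyframe positions. It is not the LeGO-LOAM implementation: the function name `find_loop_candidate`, the 7 m search radius, and the 30 s minimum time gap are all assumptions chosen for illustration.

```python
import math


def find_loop_candidate(keyframes, search_radius=7.0, min_time_gap=30.0):
    """Return the index of the earlier keyframe closest to the latest one.

    keyframes: list of (x, y, z, timestamp) tuples, oldest first.
    search_radius and min_time_gap are illustrative values (assumptions).
    Returns None when no earlier keyframe qualifies.
    """
    if len(keyframes) < 2:
        return None
    cx, cy, cz, ct = keyframes[-1]
    best_index, best_dist = None, search_radius
    for i, (x, y, z, t) in enumerate(keyframes[:-1]):
        if ct - t < min_time_gap:
            continue  # too recent: a neighbouring pose, not a revisit
        dist = math.sqrt((x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2)
        if dist <= best_dist:
            best_index, best_dist = i, dist
    return best_index


# A square-shaped trajectory that comes back near its start after 40 s.
trajectory = [
    (0.0, 0.0, 0.0, 0.0),
    (10.0, 0.0, 0.0, 10.0),
    (10.0, 10.0, 0.0, 20.0),
    (0.0, 10.0, 0.0, 30.0),
    (0.5, 0.2, 0.0, 40.0),
]
candidate = find_loop_candidate(trajectory)
print(candidate)
```

Because the test uses position only, two places that look alike but lie far apart in the estimated trajectory are never matched, and a revisit is missed once drift exceeds the search radius. That is the practical meaning of "no global descriptor". In a full pipeline a candidate like this would normally be verified by scan matching before being added to the pose graph as a constraint.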

2. Reproducing LeGO-LOAM mapping on the self-built platform

2.1 environment configuration

2.1.1 hardware configuration

2.1.1.1 RoboSense (Suteng) 16-line lidar

The lidar is configured over Ethernet, and a master-slave network must be set up. The local machine (a computer or the embedded device on the robot) must be on the same network segment as the lidar.

Lidar factory-default IP (master_address): 192.168.1.200. Local machine IP (slaver_address): 192.168.1.102.

The following figure shows the hardware driver box of the RoboSense 16-line lidar. Plug one end of the network cable into the driver box and the other end into the computer's network port.

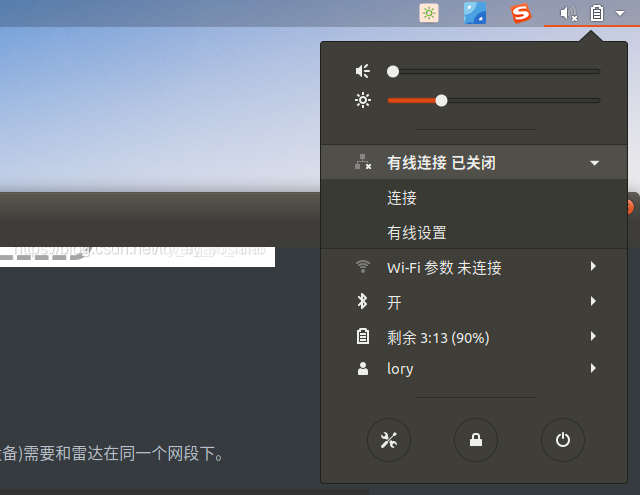

Then click the wired connection icon in the upper-right corner of Ubuntu and choose Wired Settings.

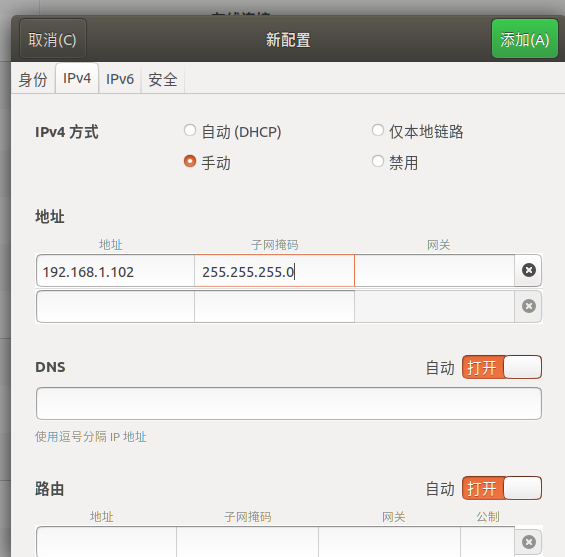

Click "+" in the upper-right corner of the wired connection panel.

Change the name to anything you like (here it is changed to "robosense 16").

Click IPv4 and fill in the address 192.168.1.102 with subnet mask 255.255.255.0.

Click Add in the upper-right corner; the wired connection to the lidar should now show as connected.

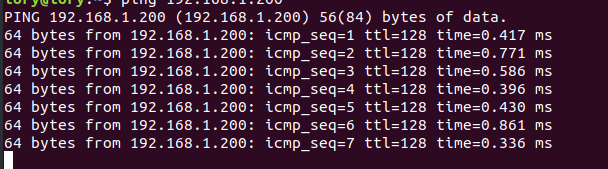

# Open a new terminal and ping the lidar. If replies like those shown below are received, the lidar network interface is configured successfully.

ping 192.168.1.200
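If the ping fails, one thing worth checking is whether the local address and the lidar address really fall in the same network segment. The following is a small sketch using Python's standard `ipaddress` module; the helper name `same_subnet` is made up for this example, and the addresses are the ones used above.

```python
import ipaddress


def same_subnet(host_ip, lidar_ip, netmask="255.255.255.0"):
    """Return True if lidar_ip lies in the network defined by host_ip/netmask."""
    # strict=False lets us pass a host address instead of a network address.
    host_net = ipaddress.ip_network(f"{host_ip}/{netmask}", strict=False)
    return ipaddress.ip_address(lidar_ip) in host_net


# Local machine 192.168.1.102 and lidar 192.168.1.200 share 192.168.1.0/24.
print(same_subnet("192.168.1.102", "192.168.1.200"))
# A host left on 192.168.2.x would not be able to reach the lidar directly.
print(same_subnet("192.168.2.102", "192.168.1.200"))
```

With the 255.255.255.0 mask, only the first three octets have to match, which is why 192.168.1.102 works for a lidar at 192.168.1.200.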

2.1.1.2 IMU

The IMU used is the 9-axis LPMS_URS2.

2.1.1.3 active differential Ackermann chassis

2.1.2 software configuration

2.1.2.1 download the ROS driver for the RoboSense 16-line lidar

cd ~/catkin_ws/src
git clone https://github.com/RoboSense-LiDAR/rslidar_sdk.git
cd rslidar_sdk
git submodule init
git submodule update

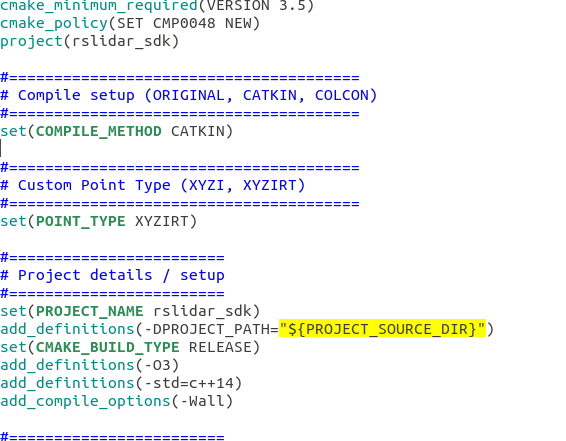

Modify CMakeLists.txt in rslidar_sdk:

cd rslidar_sdk
sudo gedit CMakeLists.txt
# Set the compile method to catkin: set(COMPILE_METHOD CATKIN)
# Change set(POINT_TYPE XYZI) to set(POINT_TYPE XYZIRT)

Rename package_ros1.xml in rslidar_sdk to package.xml.

In rslidar_sdk/config/config.yaml, set lidar_type on line 16 to RS16:

lidar_type: RS16 #LiDAR type - RS16, RS32, RSBP, RS128, RS80, RSM1, RSHELIOS

Compile:

cd ~/catkin_ws
catkin_make

2.1.2.2 LPMS IMU configuration

catkin_make -DCMAKE_C_COMPILER=gcc-7 -DCMAKE_CXX_COMPILER=g++-7 # Build with gcc-7 / g++-7
sudo apt install ros-melodic-openzen-sensor # Install the openzen sensor package
cd lego_loam_ws/src
git clone --recurse-submodules https://bitbucket.org/lpresearch/openzenros.git
cd ..
catkin_make

2.1.2.3 lego_loam configuration

Dependencies:

a. ROS (tested with indigo, kinetic, and melodic)

b. gtsam (Georgia Tech Smoothing and Mapping library, 4.0.0-alpha2)

Install VTK

sudo apt install python-vtk

To install gtsam:

# Download and install gtsam
wget -O ~/Downloads/gtsam.zip https://github.com/borglab/gtsam/archive/4.0.0-alpha2.zip
cd ~/Downloads/ && unzip gtsam.zip -d ~/Downloads/
cd ~/Downloads/gtsam-4.0.0-alpha2/
mkdir build && cd build
cmake ..
sudo make install

Compile:

cd ~/catkin_ws/src # Enter your workspace
git clone https://github.com/RobustFieldAutonomyLab/LeGO-LOAM.git # Clone the LeGO-LOAM source code
cd ..
catkin_make -j1 # The first build needs "-j1" after "catkin_make" so that some message types are generated first. "-j1" is not required for later builds.

2.1.2.4 RS16 to VLP16

By default LeGO-LOAM subscribes to the lidar topic /velodyne_points and expects point clouds in XYZIR format, while the RoboSense lidar driver only supports the XYZI and XYZIRT data types, so the data needs to be converted. The rs_to_velodyne node can be used to convert the format of the lidar point cloud messages.

cd ~/catkin_ws/src
git clone https://github.com/HViktorTsoi/rs_to_velodyne.git
cd ~/catkin_ws
catkin_make
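As a rough illustration of what an XYZIRT to XYZIR conversion involves, the sketch below copies each point's x, y, z, intensity, and ring fields and drops the per-point timestamp. It is a plain-Python model, not the rs_to_velodyne code: the tuple types, the function name `xyzirt_to_xyzir`, and the decision to skip NaN points are assumptions made for this example.

```python
import math
from collections import namedtuple

# Hypothetical stand-ins for the two point layouts.
PointXYZIRT = namedtuple("PointXYZIRT", "x y z intensity ring timestamp")
PointXYZIR = namedtuple("PointXYZIR", "x y z intensity ring")


def xyzirt_to_xyzir(points):
    """Drop the timestamp field; skip points with NaN coordinates (assumption)."""
    converted = []
    for p in points:
        if math.isnan(p.x) or math.isnan(p.y) or math.isnan(p.z):
            continue  # invalid return, nothing useful to forward
        converted.append(PointXYZIR(p.x, p.y, p.z, p.intensity, p.ring))
    return converted


cloud = [
    PointXYZIRT(1.0, 2.0, 0.5, 30.0, 3, 0.001),
    PointXYZIRT(float("nan"), 0.0, 0.0, 0.0, 4, 0.002),
    PointXYZIRT(-4.0, 1.5, -0.2, 12.0, 15, 0.003),
]
converted_cloud = xyzirt_to_xyzir(cloud)
print(len(converted_cloud), converted_cloud[0])
```

The ring index is the field that matters most here, since it tells the consumer which of the 16 laser channels produced each point.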

2.2 operation

2.2.1 offline mapping

2.2.1.1 offline data collection

# Start the robot chassis
ssh coo@10.42.0.1 # password: cooneo_coo
cd catkin_ws
roslaunch turn_on_wheeltec_robot base_camera.launch

# Collect data
cd catkin_ws
source devel/setup.bash
roslaunch rslidar_sdk start.launch # Start the RS16 lidar

rosrun rs_to_velodyne rs_to_velodyne XYZIRT XYZIR # Start the lidar data type conversion node

rosrun openzen_sensor openzen_sensor_node # Run the LPMS IMU

mkdir catkin_ws/rosbag
cd catkin_ws/rosbag
rosbag record -a -O lego_loam.bag # Record all topics

2.2.1.2 offline mapping

roslaunch lego_loam run.launch # Run lego_loam
rosbag play *.bag --clock --topic /velodyne_points /imu/data # Play the lidar point cloud topic and the IMU topic (if the IMU is only of average quality, play the point cloud topic alone)

2.2.2 online mapping

Modify use_sim_time in the run.launch file:

<param name="/use_sim_time" value="false" /> # Set "false" for online mapping and "true" for offline mapping

# Start the robot chassis
ssh coo@10.42.0.1 # password: cooneo_coo
cd catkin_ws
roslaunch turn_on_wheeltec_robot base_camera.launch

# Turn on the lidar
cd catkin_ws
source devel/setup.bash
roslaunch rslidar_sdk start.launch # Start the RS16 lidar

roslaunch lego_loam run.launch # Run lego_loam

2.3 final effect

Indoor and outdoor data sets have been uploaded to the network disk for everyone to download

link: https://pan.baidu.com/s/1IIGquusHYk_UmGeClXG9tA password: fwo3

2.3.1 indoor offline mapping

cd lego_loam_ws # Enter the workspace where LeGO-LOAM was downloaded
roslaunch lego_loam run.launch # Run lego_loam mapping
cd rosbag # Enter the directory where the dataset is stored
rosbag play cooneo_indoor_urs2.bag --clock --topic /velodyne_points /imu/data /usb_cam/image_raw/compressed # Play the indoor dataset

LeGO-LOAM indoor 3D map

2D gmapping map

2.3.2 outdoor offline mapping

cd lego_loam_ws # Enter the workspace where LeGO-LOAM was downloaded
roslaunch lego_loam run.launch # Run lego_loam mapping
cd rosbag # Enter the directory where the dataset is stored
rosbag play cooneo_outdoor_urs2.bag --clock --topic /velodyne_points /imu/data /usb_cam/image_raw/compressed # Play the outdoor dataset

2.3.3 save map

After launching the lego_loam mapping program, tick Map Cloud in rviz. When mapping is finished, press Ctrl + C to end the process; the map is saved automatically.

3. Data download and use

Data set download address: link: https://pan.baidu.com/s/1IIGquusHYk_UmGeClXG9tA Password: fwo3

Scene: indoor and outdoor

Equipment: two-wheel Ackermann chassis, RoboSense 16-line lidar, LPMS IMU

3.1 environment configuration

See "2.1.2.3 lego_loam configuration" above.

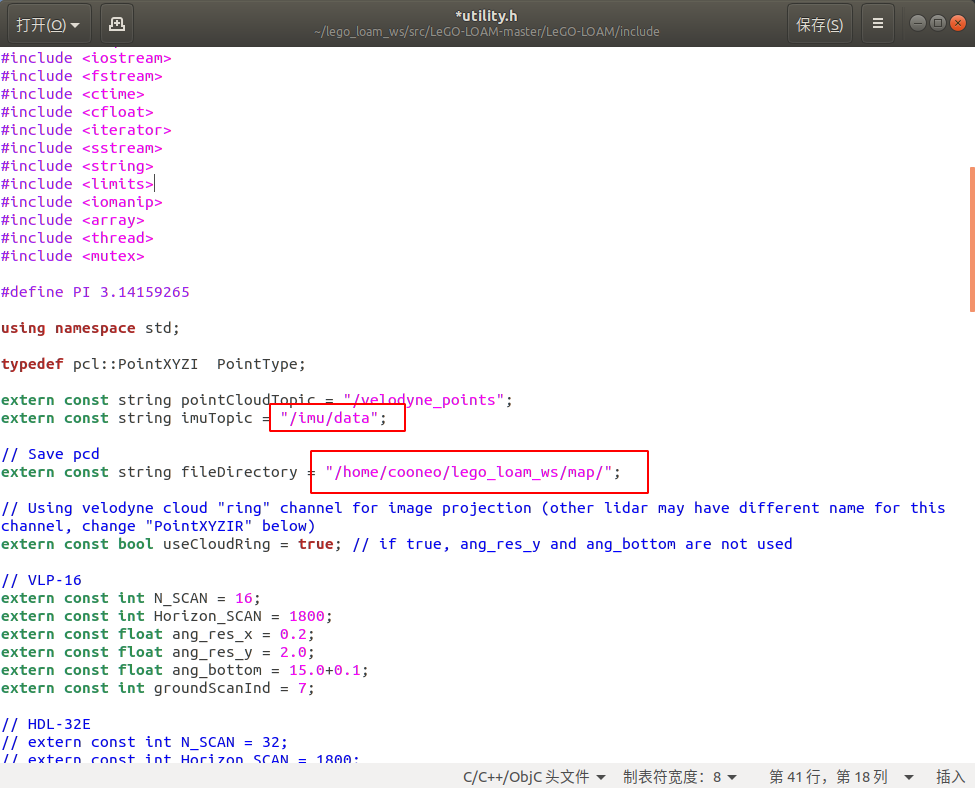

3.2 modify relevant configuration and save map

3.2.1 modify configuration file

sudo gedit lego_loam_ws/src/LeGO-LOAM-master/LeGO-LOAM/include/utility.h

Change imuTopic to your own IMU topic; the IMU topic of this dataset is "/imu/data".

Set fileDirectory to the absolute path where you want the map to be saved.

3.2.2 save map

After launching the lego_loam mapping program, tick Map Cloud in rviz. When mapping is finished, press Ctrl + C to end the process; the map is then saved to the path set in fileDirectory.

3.3 building maps

roslaunch lego_loam run.launch # Run lego_loam

# Indoor dataset
rosbag play cooneo_indoor_urs2.bag --clock --topic /velodyne_points /imu/data # Play the lidar point cloud topic and the IMU topic (if the IMU is only of average quality, play the point cloud topic alone)

# Outdoor dataset
rosbag play cooneo_outdoor_urs2.bag --clock --topic /velodyne_points /imu/data # Play the lidar point cloud topic and the IMU topic (if the IMU is only of average quality, play the point cloud topic alone)


edited by kaho 2022.1.10