0. Preparation

First of all, make sure the ST-GCN environment is fully configured and that behavior recognition already runs normally.

For configuring the environment, refer to:

1. Reproducing the old ST-GCN GPU version (Win10 + OpenPose 1.5.0)

2. Reproducing ST-GCN (Win10 + OpenPose 1.5.1 + VS2017 + CUDA 10 + cuDNN 7.6.4)

For preparing your own dataset, the ST-GCN authors describe the procedure as follows:

We first resized all videos to the resolution of 340x256 and converted the frame rate to 30 fps. We extracted skeletons from each frame in Kinetics by OpenPose. Rebuild the database with this command: `python tools/kinetics_gendata.py --data_path <path to kinetics-skeleton>`. To train a new ST-GCN model, run `python main.py recognition -c config/st_gcn/<dataset>/train.yaml [--work_dir <work folder>]`.
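As far as I can tell from `tools/kinetics_gendata.py`, the `--data_path` folder must contain `kinetics_train/` and `kinetics_val/` (one skeleton JSON file per video) plus `kinetics_train_label.json` and `kinetics_val_label.json` next to them. The minimal sketch below checks that layout before the command is run; `check_kinetics_layout` is a hypothetical helper, and the expected names should be verified against your copy of the script.

```python
import os


def check_kinetics_layout(data_path):
    """Return a list of the entries missing from data_path (empty list = OK).

    Assumed layout (hypothetical check, based on the names used in this post):
        <data_path>/kinetics_train/            per-video skeleton JSON files
        <data_path>/kinetics_val/
        <data_path>/kinetics_train_label.json  file name -> label info
        <data_path>/kinetics_val_label.json
    """
    missing = []
    for part in ('train', 'val'):
        folder = os.path.join(data_path, 'kinetics_{}'.format(part))
        label_file = os.path.join(data_path, 'kinetics_{}_label.json'.format(part))
        if not os.path.isdir(folder):
            missing.append(folder)
        if not os.path.isfile(label_file):
            missing.append(label_file)
    return missing
```

For example, `check_kinetics_layout('./mydata/kinetics-skeleton')` returns an empty list once everything is in place.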

1. Download / clip video

Cutting the prepared videos into 5-8 s clips keeps things simple and convenient.
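If a long recording has to be cut into 5-8 s pieces, the cut points can be computed first and then handed to ffmpeg. A minimal sketch, assuming ffmpeg is on the PATH and that a trailing piece shorter than `min_len` seconds is simply dropped; `clip_segments` and `ffmpeg_cut_command` are hypothetical helpers, and the clip lengths are up to you.

```python
def clip_segments(duration, clip_len=8.0, min_len=5.0):
    """Split [0, duration) seconds into (start, length) pieces of at most clip_len.

    A trailing piece shorter than min_len seconds is dropped.
    """
    segments = []
    start = 0.0
    while start < duration:
        length = min(clip_len, duration - start)
        if length >= min_len:
            segments.append((start, length))
        start += clip_len
    return segments


def ffmpeg_cut_command(src, dst, start, length):
    """Build an ffmpeg command that re-encodes one piece of src into dst."""
    # -ss before -i seeks in the input; -t sets the clip length;
    # -an drops the audio track, which skeleton extraction does not need
    return ['ffmpeg', '-y', '-ss', '{:.2f}'.format(start), '-i', src,
            '-t', '{:.2f}'.format(length), '-c:v', 'libx264', '-an', dst]


if __name__ == '__main__':
    # a 21 s recording gives clips starting at 0 s, 8 s and 16 s
    for i, (start, length) in enumerate(clip_segments(21.0)):
        print(' '.join(ffmpeg_cut_command('input.mp4', 'clip_{}.mp4'.format(i), start, length)))
```

Each command list can be passed to `subprocess.run(cmd, check=True)` to actually cut the clips.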

Then expand the dataset by flipping each clipped video left-right (horizontal mirroring) with the following script:

```python
import os

import cv2
import skvideo.io

if __name__ == '__main__':
    ########################### Modify here ###########################
    # One sub-folder per action class under ./mydata/Cutting/
    typename_list = ['1', '2', '3', '4', '5', '6', '7', '8', '9', '10', '11', '12']
    ###################################################################

    for type_filename in typename_list:
        # Folder holding the clipped videos of this class
        originvideo_file = './mydata/Cutting/{}/'.format(type_filename)
        videos_file_names = os.listdir(originvideo_file)

        # 1. Mirror every video left-right
        for file_name in videos_file_names:
            name_without_suffix = os.path.splitext(file_name)[0]
            if name_without_suffix.endswith('_mirror'):
                continue  # skip clips produced by an earlier run
            video_path = '{}{}'.format(originvideo_file, file_name)
            outvideo_path = '{}{}_mirror.mp4'.format(originvideo_file, name_without_suffix)

            writer = skvideo.io.FFmpegWriter(
                outvideo_path,
                outputdict={'-f': 'mp4', '-vcodec': 'libx264', '-r': '30'})
            reader = skvideo.io.FFmpegReader(video_path)
            for frame in reader.nextFrame():
                # flipCode=1 flips around the vertical axis (left <-> right)
                frame_mirror = cv2.flip(frame, 1)
                writer.writeFrame(frame_mirror)
            reader.close()
            writer.close()
            print('{} mirror success'.format(file_name))

        print('the videos in {} are all mirrored'.format(type_filename))
        print('-------------------------------------------------------')
```
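`cv2.flip(frame, 1)` mirrors each frame around its vertical axis, i.e. it reverses the order of the pixel columns. For an `H x W x C` frame as returned by scikit-video, the same result can be obtained with plain NumPy slicing. A small sketch; `mirror_frame` is a hypothetical name and the random frame only stands in for real video data.

```python
import numpy as np


def mirror_frame(frame):
    """Left-right mirror of an H x W x C image: reverse the width axis."""
    # frame[:, ::-1] is a negative-stride view; ascontiguousarray copies it
    # into a regular C-ordered array for downstream code
    return np.ascontiguousarray(frame[:, ::-1])


if __name__ == '__main__':
    rng = np.random.default_rng(0)
    frame = rng.integers(0, 256, size=(256, 340, 3), dtype=np.uint8)
    mirrored = mirror_frame(frame)
    # the first pixel column of the mirror is the last column of the original
    print(np.array_equal(mirrored[:, 0], frame[:, -1]))
```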

2. Use OpenPose to extract skeleton keypoints and build a dataset in Kinetics-skeleton format

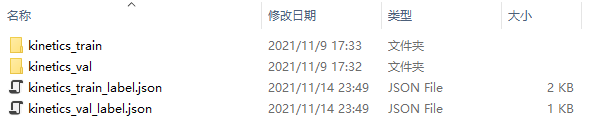

The main purpose of this step is to convert your own video dataset into the same format as the Kinetics-skeleton dataset. The layout is roughly as shown in the figure above.
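In the Kinetics-skeleton format each video becomes one JSON file with a `data` list (one entry per frame, holding `frame_index` and a `skeleton` list with one `pose`/`score` pair per detected person) plus `label` and `label_index`. As far as I can tell from `tools/utils/openpose.py` in the ST-GCN source, `json_pack` builds each frame entry by dividing OpenPose's pixel x/y by the frame width/height and moving the confidences into `score`. The sketch below reproduces that conversion for a single frame; `pack_frame` is a hypothetical name, and its input is the dict parsed from one OpenPose `--write_json` output file.

```python
def pack_frame(openpose_frame, frame_index, width, height):
    """Convert one OpenPose per-frame JSON dict into a Kinetics-skeleton frame entry."""
    skeletons = []
    for person in openpose_frame['people']:
        keypoints = person['pose_keypoints_2d']  # flat list: x0, y0, c0, x1, y1, c1, ...
        pose, score = [], []
        for i in range(0, len(keypoints), 3):
            # normalize pixel coordinates to [0, 1] by the frame size
            pose += [keypoints[i] / width, keypoints[i + 1] / height]
            score.append(keypoints[i + 2])
        skeletons.append({'pose': pose, 'score': score})
    return {'frame_index': frame_index, 'skeleton': skeletons}


if __name__ == '__main__':
    frame = {'people': [{'pose_keypoints_2d': [170, 128, 0.9, 85, 64, 0.5]}]}
    print(pack_frame(frame, 0, 340, 256))
    # {'frame_index': 0, 'skeleton': [{'pose': [0.5, 0.5, 0.25, 0.25], 'score': [0.9, 0.5]}]}
```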

First, sort your videos by category into separate folders; the data is then extracted mainly with two scripts.
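The extraction script below takes each class's `label_index` from its position in a hard-coded folder list. If you build that list from the directory instead, note that `os.listdir` returns names in arbitrary order and a plain string sort puts `'10'` before `'2'`. A small sketch that maps numbered class folders to 0-based label indices in numeric order, assuming the folders are named `1`, `2`, ... as in this post; `folder_label_map` is a hypothetical helper.

```python
import os


def folder_label_map(root):
    """Map each numbered class sub-folder of root to a 0-based label index.

    Non-numeric entries (e.g. a 'resized' output folder) are ignored, and the
    folder names are sorted as integers, so '10' comes after '9', not after '1'.
    """
    names = [n for n in os.listdir(root)
             if os.path.isdir(os.path.join(root, n)) and n.isdigit()]
    return {name: index for index, name in enumerate(sorted(names, key=int))}
```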

The core of the first script, which I wrote myself, is shown below. It is essentially a modified version of `./processor/demo_old.py` from the ST-GCN source code: it first resizes each video to 340x256 at 30 fps, then runs OpenPose to detect the skeletons and write out the keypoint data. I added code to batch-process the videos in every class folder.

```python
#!/usr/bin/env python
# Intended to sit in ./processor/ next to demo_old.py, so that the relative
# import of IO below works.
import os
import argparse
import json
import shutil

import skvideo.io

from .io import IO
import tools.utils as utils


class PreProcess(IO):
    """
    Use OpenPose to extract skeleton data from a self-built dataset.
    """

    # get_parser() is omitted here; copy it from processor/demo_old.py so that
    # the --openpose argument used below (self.arg.openpose) is defined.

    def start(self):
        work_dir = './st-gcn-master'

        ########################### Modify here ###########################
        gongfu_filename_list = ['1', '2', '3', '4', '5', '6', '7', '8', '9', '10', '11', '12']
        ###################################################################

        # Class names, one per line (the same file the demo reads later)
        label_name_path = '{}/resource/kinetics_skeleton/label_name_gongfu.txt'.format(work_dir)
        with open(label_name_path) as f:
            label_name = [line.rstrip() for line in f.readlines()]

        for process_index, gongfu_filename in enumerate(gongfu_filename_list):
            # Label information: replace 'xxx' with your own class name
            labelgongfu_name = 'xxx_{}'.format(process_index)
            label_no = process_index

            # Video folder
            originvideo_file = './mydata/Cutting/{}/'.format(gongfu_filename)
            # Output folder for resized videos (ffmpeg does not create it)
            resizedvideo_file = './mydata/Cutting/resized/{}/'.format(gongfu_filename)
            os.makedirs(resizedvideo_file, exist_ok=True)
            videos_file_names = os.listdir(originvideo_file)

            # 1. Resize every video in the folder to 340x256 at 30 fps
            for file_name in videos_file_names:
                video_path = '{}{}'.format(originvideo_file, file_name)
                outvideo_path = '{}{}'.format(resizedvideo_file, file_name)
                writer = skvideo.io.FFmpegWriter(
                    outvideo_path,
                    outputdict={'-f': 'mp4', '-vcodec': 'libx264', '-s': '340x256', '-r': '30'})
                reader = skvideo.io.FFmpegReader(video_path)
                for frame in reader.nextFrame():
                    writer.writeFrame(frame)
                reader.close()
                writer.close()
                print('{} resize success'.format(file_name))

            # 2. Extract the skeleton data of each video with OpenPose
            resizedvideos_file_names = os.listdir(resizedvideo_file)
            for file_name in resizedvideos_file_names:
                outvideo_path = '{}{}'.format(resizedvideo_file, file_name)
                # Linux build: openpose = '{}/examples/openpose/openpose.bin'.format(self.arg.openpose)
                openpose = '{}/OpenPoseDemo.exe'.format(self.arg.openpose)
                video_name = os.path.splitext(file_name)[0]
                output_snippets_dir = './mydata/Cutting/resized/snippets/{}'.format(video_name)
                output_sequence_dir = './mydata/Cutting/resized/data'
                output_sequence_path = '{}/{}.json'.format(output_sequence_dir, video_name)

                # Pose estimation: OpenPose writes one JSON file per frame
                openpose_args = dict(
                    video=outvideo_path,
                    write_json=output_snippets_dir,
                    display=0,
                    render_pose=0,
                    model_pose='COCO')
                command_line = openpose + ' '
                command_line += ' '.join(['--{} {}'.format(k, v) for k, v in openpose_args.items()])
                shutil.rmtree(output_snippets_dir, ignore_errors=True)
                os.makedirs(output_snippets_dir)
                os.system(command_line)

                # Pack the per-frame OpenPose outputs into one JSON file per video
                video = utils.video.get_video_frames(outvideo_path)
                height, width, _ = video[0].shape
                # The label name and label index can be changed here
                video_info = utils.openpose.json_pack(
                    output_snippets_dir, video_name, width, height, labelgongfu_name, label_no)
                os.makedirs(output_sequence_dir, exist_ok=True)
                with open(output_sequence_path, 'w') as outfile:
                    json.dump(video_info, outfile)

                if len(video_info['data']) == 0:
                    print('{} Can not find pose estimation results.'.format(file_name))
                    continue  # skip this video and go on with the next one
                print('{} pose estimation complete.'.format(file_name))
```
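The per-video JSON files end up in `./mydata/Cutting/resized/data` and then have to be divided between `kinetics_train` and `kinetics_val`. The post does not say how that split was made; one possible approach, sketched here under my own assumptions, is a seeded random split that keeps each clip and its `_mirror` copy in the same part, so a mirrored version of a validation clip never appears in training. The helper name `split_train_val` and the 80/20 default ratio are hypothetical.

```python
import random


def split_train_val(file_names, val_ratio=0.2, seed=0):
    """Split JSON file names into (train, val), keeping x.json and x_mirror.json together."""
    groups = {}
    for name in sorted(file_names):
        stem = name[:-len('.json')] if name.endswith('.json') else name
        base = stem[:-len('_mirror')] if stem.endswith('_mirror') else stem
        groups.setdefault(base, []).append(name)

    bases = sorted(groups)
    random.Random(seed).shuffle(bases)  # fixed seed -> reproducible split
    n_val = int(round(len(bases) * val_ratio))
    val = [n for b in bases[:n_val] for n in groups[b]]
    train = [n for b in bases[n_val:] for n in groups[b]]
    return sorted(train), sorted(val)
```

The two returned lists can then be moved with `shutil.move` into `./mydata/kinetics-skeleton/kinetics_train` and `kinetics_val`.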

Next, organize the extracted skeleton JSON files in the Kinetics-skeleton layout shown in the figure above: the `kinetics_train` folder holds the training data and the `kinetics_val` folder holds the validation data. The two JSON files next to these folders (`kinetics_train_label.json` and `kinetics_val_label.json`) list every file name in the corresponding folder together with its behavior label name and label index. They can be generated with a script like the following:

```python
import json
import os


def build_label_json(json_dir, output_path):
    """Write has_skeleton / label / label_index for every skeleton JSON in json_dir."""
    label_json = dict()
    for file_name in os.listdir(json_dir):
        name = file_name.split('.')[0]
        json_file_path = '{}/{}'.format(json_dir, file_name)
        with open(json_file_path) as f:
            json_file = json.load(f)

        file_label = dict()
        # Videos without any detected skeleton are flagged, so that empty
        # samples can be ignored when the dataset is generated
        file_label['has_skeleton'] = len(json_file['data']) > 0
        file_label['label'] = json_file['label']
        file_label['label_index'] = json_file['label_index']
        label_json[name] = file_label
        print('{} success'.format(file_name))

    with open(output_path, 'w') as outfile:
        json.dump(label_json, outfile)


if __name__ == '__main__':
    train_json_path = './mydata/kinetics-skeleton/kinetics_train'
    val_json_path = './mydata/kinetics-skeleton/kinetics_val'
    output_train_json_path = './mydata/kinetics-skeleton/kinetics_train_label.json'
    output_val_json_path = './mydata/kinetics-skeleton/kinetics_val_label.json'

    build_label_json(train_json_path, output_train_json_path)
    build_label_json(val_json_path, output_val_json_path)
```
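Before running `kinetics_gendata.py` it can help to check the label files just written: every `label_index` should lie in `[0, num_class)`, and every class should have at least one sample with a skeleton. A minimal sketch, where `num_class` is whatever you set in the YAML config (12 classes in the scripts above) and `summarize_labels` is a hypothetical helper.

```python
from collections import Counter


def summarize_labels(label_json, num_class):
    """Count usable samples per label_index; raise on out-of-range indices.

    Returns (counts, missing): a Counter of samples that have a skeleton, and
    the list of class indices that have no usable sample at all.
    """
    counts = Counter()
    for name, info in label_json.items():
        index = info['label_index']
        if not 0 <= index < num_class:
            raise ValueError('{}: label_index {} outside [0, {})'.format(name, index, num_class))
        if info['has_skeleton']:
            counts[index] += 1
    missing = [i for i in range(num_class) if counts[i] == 0]
    return counts, missing
```

Usage: load `kinetics_train_label.json` with `json.load` and call `summarize_labels(data, 12)`; a non-empty `missing` list means some class has no usable training sample.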

3. Train the ST-GCN network

For this part, refer to sections 3 to 6 of the following blog post:

ST-GCN: training on a self-built behavior recognition dataset

4. Run the demo with your own ST-GCN model and visualize the results

This part can be done by adapting the `./processor/demo_old.py` script in the ST-GCN source code. The main points are to change the name of the label file from which the behavior class names are read, and to update parameters such as the model name and the number of classes in the corresponding YAML configuration file.
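These YAML changes can also be scripted. In the ST-GCN configs I have looked at, the class count sits under `model_args: num_class` (400 for Kinetics) and the trained model is given by `weights`, while the demo reads class names from a plain-text file with one name per line, so the name count and `num_class` must agree. The sketch below works on a config already loaded into a dict (e.g. with PyYAML's `yaml.safe_load`); the helper names and the example weights path are hypothetical, and the key names should be checked against your own YAML file.

```python
def update_demo_config(cfg, num_class, weights):
    """Return a copy of a loaded ST-GCN config dict with a new class count and weights file."""
    new_cfg = dict(cfg)
    model_args = dict(new_cfg.get('model_args', {}))  # copy, so cfg stays unchanged
    model_args['num_class'] = num_class
    new_cfg['model_args'] = model_args
    new_cfg['weights'] = weights
    return new_cfg


def check_label_names(lines, num_class):
    """The label file must contain exactly one non-empty class name per class."""
    names = [line.strip() for line in lines if line.strip()]
    if len(names) != num_class:
        raise ValueError('label file has {} names but num_class is {}'.format(len(names), num_class))
    return names


if __name__ == '__main__':
    example = {'weights': './models/st_gcn.kinetics.pt',
               'model_args': {'in_channels': 3, 'num_class': 400}}
    # './work_dir/your_model.pt' is a placeholder for your trained weights
    print(update_demo_config(example, 12, './work_dir/your_model.pt'))
```

Write the updated dict back with `yaml.safe_dump`, and run `check_label_names` on the lines of `label_name_gongfu.txt` before starting the demo.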